The belief that Crypto can change AI is a form of confidence, and also a textbook hallucination.

By: Zuo Ye

Emergence: when many small entities interact to form a larger whole, and that whole exhibits new properties that none of its individual parts possess, as with the emergent phenomena studied in biology.

Hallucination: the tendency of AI models to produce false information, where the output appears correct but is actually wrong.

Interest in combining AI and Crypto has come in clear waves. After AlphaGo defeated human professional Go players in 2016, attempts to combine the two spontaneously appeared in the crypto world, such as Fetch.AI. Since GPT-4 arrived in 2023, the AI + Crypto trend has resurged, exemplified by the launch of Worldcoin. It seems humanity is entering a utopian era in which AI handles productivity and Crypto handles distribution.

This sentiment peaked after OpenAI launched its text-to-video model Sora. Because it is an emotional reaction, some of it is inevitably irrational; Li Yizhou, at least, is among those caught in the crossfire. For example:

Specific AI applications are often confused with algorithm development. The Transformer architecture behind Sora and GPT-4 is publicly available, but using these models requires paying OpenAI.

The combination of AI and Crypto is still driven mainly by the Crypto side, while the AI giants have shown no clear interest. At this stage, AI can do more for Crypto than Crypto can do for AI.

Using AI technology inside Crypto applications, such as digital humans in blockchain games, the metaverse, Web3 games, and autonomous worlds (AW), is not the same as fusing AI and Crypto.

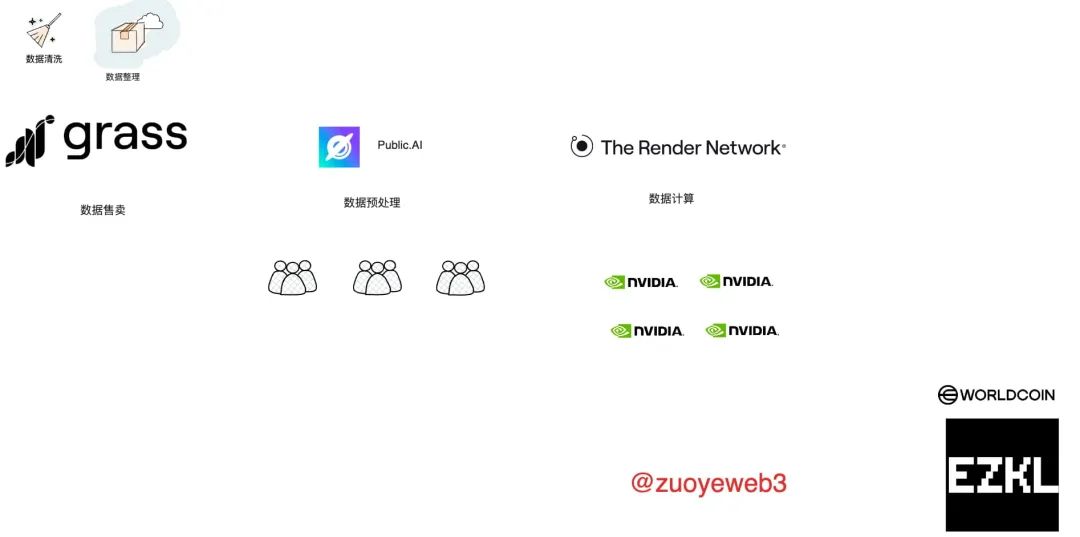

What Crypto can do for AI mainly lies in bringing decentralization and token incentives to the three essential components of AI: computing power, data, and models.

Worldcoin is a successful example of combining the two: zkML sits at the intersection of AI and Crypto technology, and the theory of Universal Basic Income (UBI) is getting its first large-scale implementation.

In this article, I focus on what Crypto can do for AI. Current Crypto projects built around AI applications are mostly gimmicks and will not be discussed.

From Linear Regression to Transformer

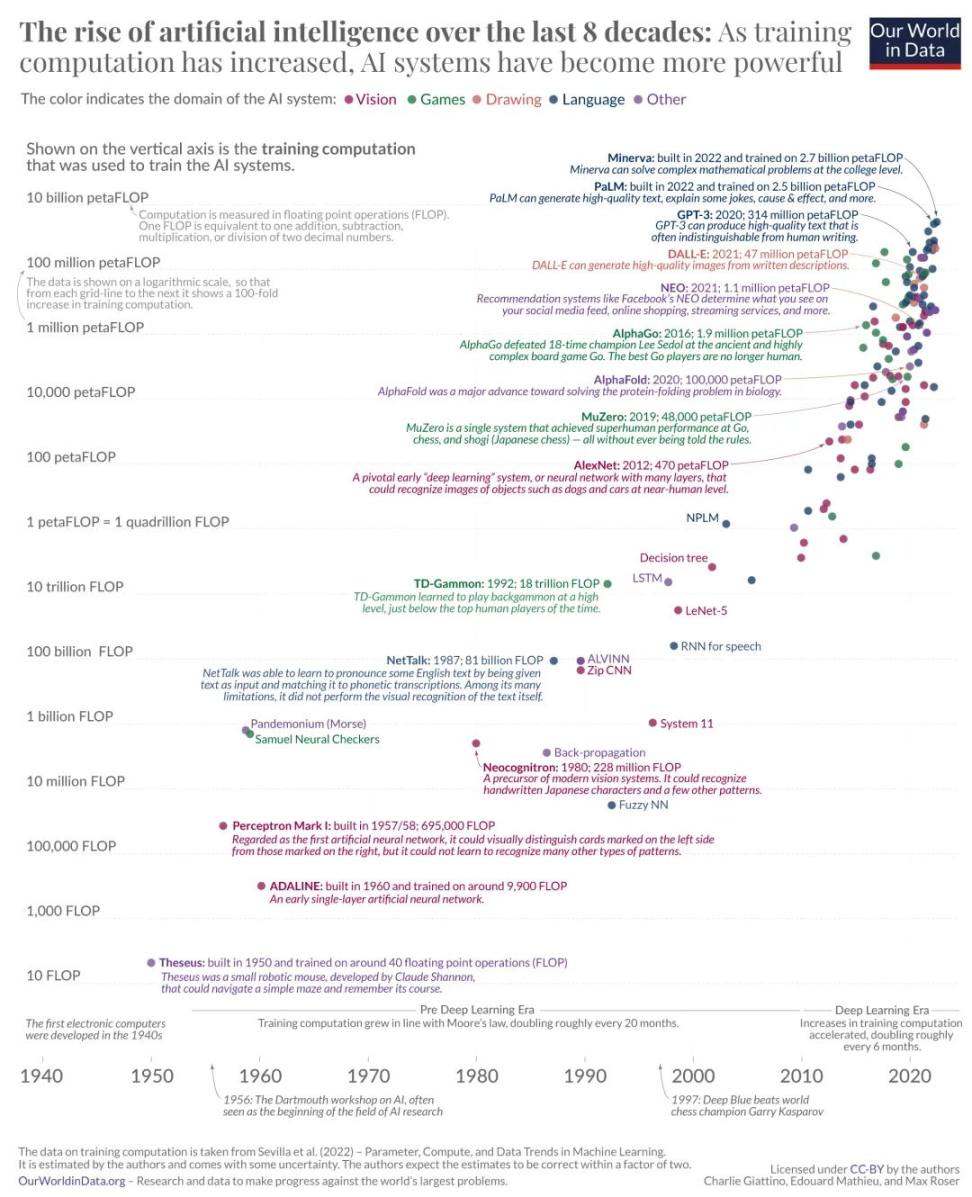

For a long time, AI discussions have centered on whether the "emergence" of artificial intelligence will produce a "Matrix"-style machine intelligence or a silicon-based civilization. Concerns about how humans and AI will coexist have always been present: most recently with the launch of Sora, and earlier with GPT-4 (2023), AlphaGo (2016), and IBM's Deep Blue defeating world chess champion Garry Kasparov in 1997.

None of these fears has materialized, so let's set them aside and briefly summarize how AI actually works.

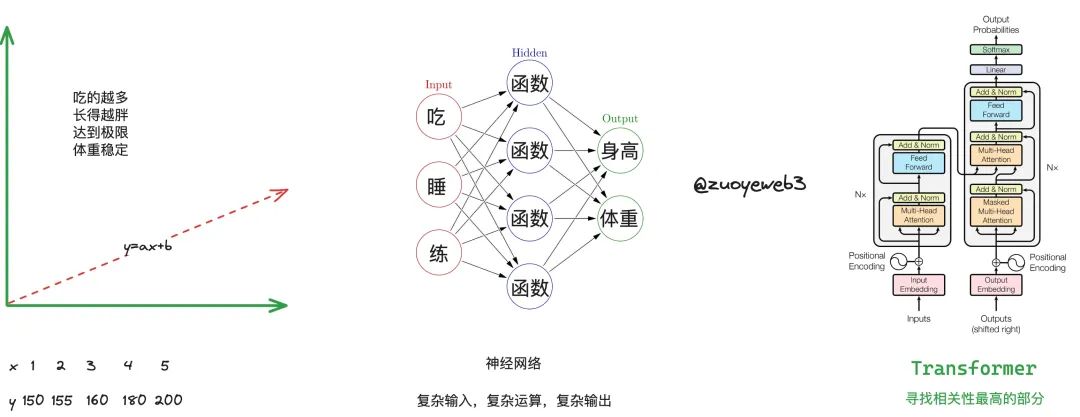

Start with linear regression, which is essentially a simple linear equation. Jia Ling's weight loss, for example, can be summarized this way: x and y represent energy intake and body weight, so the more you eat, the heavier you get, and to lose weight you must eat less.
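The linear-regression idea can be sketched in a few lines. This is a minimal illustration, assuming ordinary least squares with a single input; the intake and weight figures are invented for the example, not measurements from the article. It also exposes the first problem discussed next: the fitted line extrapolates to physically impossible weights.

```python
def fit_linear(xs, ys):
    """Ordinary least squares for one feature; returns (slope w, intercept b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the intercept makes the line pass through the means
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


def predict(w, b, x):
    return w * x + b


# Hypothetical data: daily energy intake (kcal) vs. body weight (kg)
intake = [1500, 1800, 2100, 2400, 2700]
weight = [55.0, 60.0, 65.0, 70.0, 75.0]

w, b = fit_linear(intake, weight)
print(f"weight ~ {w:.4f} * intake + {b:.1f}")
# The fitted line keeps extrapolating far past any physiological limit
print(f"predicted weight at 20000 kcal/day: {predict(w, b, 20000):.0f} kg")
```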

This approach has problems, however. First, human height and weight have physiological limits: there are no 3-meter giants or 1,000-kilogram people, so extending the line beyond those limits is meaningless. Second, simply eating less and exercising more does not follow the science of weight loss and can even harm the body.

So we introduce the Body Mass Index (BMI), which measures whether weight is reasonable for a given height by dividing weight by the square of height. We then use three factors (eating, sleeping, and exercising) to model height and weight. That means three inputs and two outputs, and a simple linear equation is clearly no longer enough; this is where neural networks come in. As the name suggests, neural networks mimic the structure of the human brain. The more one thinks, the more reasonable the conclusions may be, so thinking more deeply and more often is deep learning (a far-fetched analogy, but it conveys the idea).
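A rough sketch of both ideas follows: the BMI formula, and the forward pass of a tiny feed-forward network with three inputs and two outputs. The layer sizes, the random untrained weights, and the input scores are all hypothetical; a real model would learn its weights from data.

```python
import random


def bmi(weight_kg, height_m):
    """Body Mass Index: weight divided by height squared (kg/m^2)."""
    return weight_kg / height_m ** 2


def relu(v):
    return [max(0.0, x) for x in v]


def dense(inputs, weights, biases):
    """Fully connected layer: out[j] = sum_i inputs[i] * weights[j][i] + biases[j]."""
    return [sum(x * w for x, w in zip(inputs, row)) + b for row, b in zip(weights, biases)]


def init_layer(n_in, n_out, rng):
    """Random (untrained) weights and zero biases for one layer."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


rng = random.Random(0)
# Hypothetical architecture: 3 inputs -> 4 hidden units -> 2 outputs
hidden = init_layer(3, 4, rng)
output = init_layer(4, 2, rng)


def forward(features):
    h = relu(dense(features, *hidden))
    return dense(h, *output)


# Made-up normalized scores for eating, sleeping, exercising
print(forward([0.8, 0.5, 0.2]))
print(round(bmi(70, 1.75), 1))
```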

Brief history of AI algorithm development

However, a network cannot be made arbitrarily deep; there is still a ceiling, and past a certain critical depth performance may actually get worse. It therefore becomes important to model the relationships within existing information more intelligently: for example, understanding the finer relationship between height and weight, or discovering factors that were previously overlooked. Or picture Jia Ling hiring a top coach but being unable to state her wish to lose weight directly, so the coach has to work out what she really means.

Meaning of weight loss

In this scenario, Jia Ling and the coach act as the encoder and decoder, and the meanings passed back and forth carry both parties' true intentions. Unlike a blunt "I want to lose weight, here is a gift for the coach," however, those intentions are hidden within the "meanings."

Notice that the more often these exchanges happen, the easier it becomes to guess each party's meaning, and the clearer both the meanings and the relationship between Jia Ling and the coach become.

Scale this model up and you get what is colloquially called a large model (LLM, large language model), which examines the contextual relationships between words and sentences. Today's large models have also been extended to images, video, and other scenarios.
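The "back-and-forth exchange of meanings" above is an analogy; in a Transformer the corresponding mechanism is attention, where each token weighs every other token by relevance. Below is a minimal sketch of scaled dot-product attention, softmax(QK^T / sqrt(d))V, in plain Python. The toy embeddings are made up, and real models also apply learned projection matrices and use many attention heads, both of which this sketch omits.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V, one query row at a time."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


# Three hypothetical token embeddings (think "I", "want", "lose-weight")
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Self-attention: queries, keys, and values all come from the same tokens here
print(attention(tokens, tokens, tokens))
```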

Across the AI spectrum, from simple linear regression to highly complex Transformers, everything is a type of algorithm or model. Besides models, there are two other elements: computing power and data.

Note: Brief history of AI development, image source: https://ourworldindata.org/brief-history-of-ai

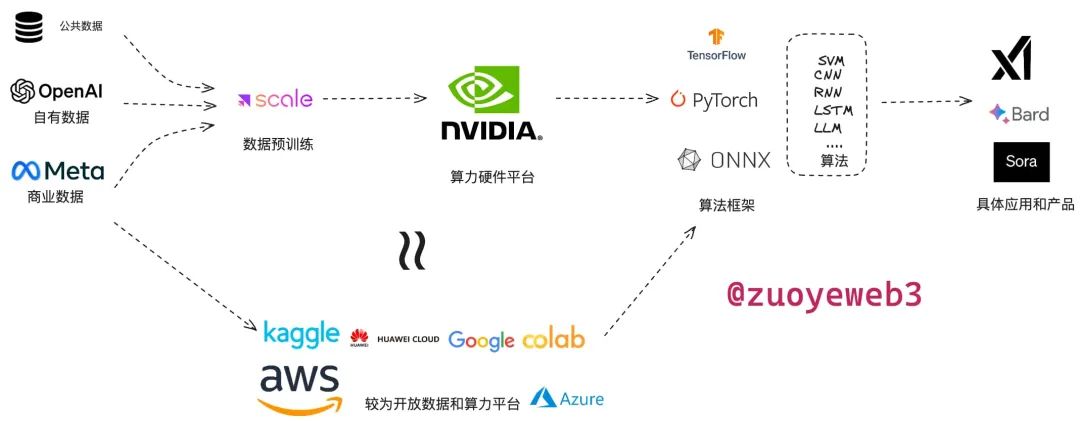

Put simply, AI is a machine that takes in data, performs calculations, and produces results. Compared with physical entities such as robots, however, AI is more virtual. Across the three components of computing power, data, and models, Web2 businesses currently operate as follows:

Data is divided into public data, company-owned data, and commercial data, which require professional annotation and preprocessing to be used. For example, Scale AI provides data preprocessing for mainstream AI companies.

Computing power comes either from self-built infrastructure or from cloud leasing. NVIDIA currently dominates GPU hardware, and its CUDA library has been years in the making, so the software and hardware ecosystem is controlled by a single company. The alternative is leasing compute from cloud providers such as Microsoft Azure, Google Cloud, and AWS, which offer one-stop computing and model deployment.

Models can be divided into frameworks and algorithms. The framework war is over, with Google's TensorFlow in the lead and Meta's PyTorch second. Yet both Google, which introduced the Transformer, and Meta, which created PyTorch, are gradually falling behind OpenAI in commercialization, though they remain formidable. The Transformer algorithm currently dominates, and large models compete mainly on data sources and implementation details.

AI operational process

As mentioned earlier, AI has many applications; Vitalik, for example, has noted that AI-assisted code correction has long been in use. Seen from the other direction, Crypto's contribution to AI lies mainly in non-technical areas, such as decentralized data markets and decentralized computing platforms. There have been some practical experiments with decentralized LLMs, but note that using AI to analyze Crypto code and running AI models at scale on a blockchain are fundamentally different things. Likewise, adding a few Crypto elements to an AI model hardly counts as true integration.

For now, Crypto is better at organizing production and incentives. There is no need to force Crypto onto AI's production paradigm; that would be inventing a problem to fit the tool, like a hammer looking for nails. The rational choice is for Crypto to fit into AI's workflow and for AI to empower Crypto. Below are some potential integration points I have identified:

Decentralized data production, such as DePIN data collection, plus the openness of on-chain data, whose wealth of transaction records can be used for financial analysis, security analysis, and model training.

Decentralized preprocessing platforms. Traditional data preprocessing has no insurmountable technical barriers, but behind the large models of Europe and the United States lies the grueling labor of manual annotators in the third world.

Decentralized computing power platforms, incentivizing and utilizing personal bandwidth, GPU computing power, and other hardware resources in a decentralized manner.

zkML. Traditional privacy techniques such as data masking cannot fully solve the problem. zkML can keep the underlying data hidden while still allowing the authenticity and effectiveness of both open-source and closed-source models to be verified.

These four points are the scenarios I can think of where Crypto empowers AI. AI-for-Crypto projects are beyond the scope of this article, and readers can research them on their own.

As the list shows, Crypto's role today lies mainly in encryption, privacy protection, and economic design, and zkML is the only area with some attempts at genuine technical integration. To brainstorm a little: if Solana's TPS really reaches 100,000+ and Filecoin and Solana can be combined seamlessly, could a blockchain-based LLM environment be built? That could create truly on-chain AI and change the current unequal relationship in which Crypto merely attaches itself to AI.

Web3 Integration into AI Workflow

It goes without saying that the NVIDIA RTX 4090 is a prized asset that is currently hard to obtain in a certain Eastern country. More importantly, individuals, small companies, and academic institutions also face a GPU shortage, since large commercial companies are the dominant buyers. If a third path can be opened up beyond buying your own hardware or renting from cloud providers, it clearly has real business value rather than being pure speculation. The right test is "without Web3, the project cannot be sustained." That is the correct approach for Web3 for AI.

AI workflow from a Web3 perspective

Data Source: Grass and DePIN Automotive Suite

Grass, launched by Wynd Network, is a marketplace for idle bandwidth and an open channel for acquiring and distributing web data. Unlike simple data collection and resale, Grass adds data cleaning and verification to cope with an increasingly closed web. Grass also hopes to connect directly with AI models and supply them with ready-to-use datasets, since AI datasets require professional processing, including substantial manual fine-tuning, to meet each model's specific needs.

Going further, Grass addresses the selling of data, while Web3's DePIN sector can produce the data AI needs, mainly for autonomous driving. Traditionally, autonomous-driving data is accumulated by the companies that need it, but projects like DIMO and Hivemapper run directly on cars, collecting ever more driving and road data.

In traditional autonomous driving, vehicle recognition and high-precision maps are two key components. High-precision map data has long been accumulated by companies like HERE Technologies, forming a de facto barrier to entry. If newcomers can leverage Web3 data, they may have a chance to overtake the incumbents.

Data Preprocessing: Freeing Humans Enslaved by AI

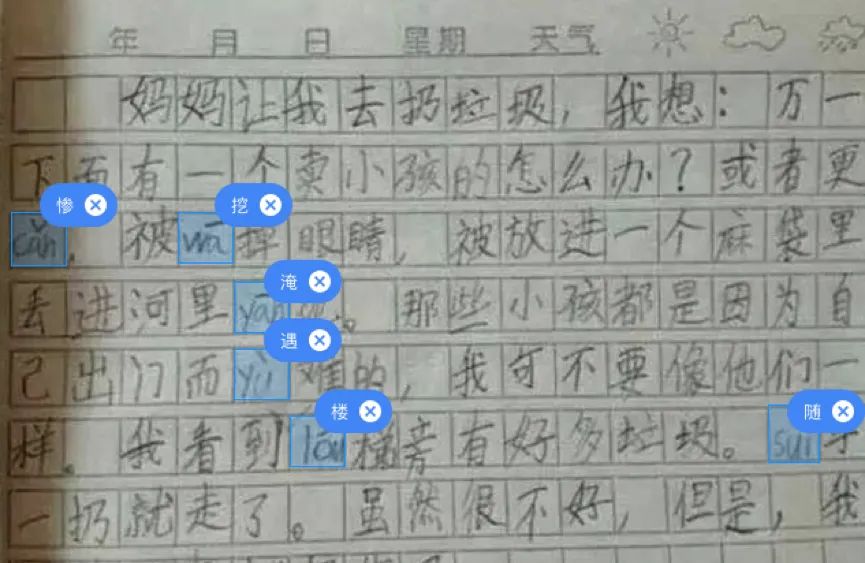

AI data preprocessing can be divided into manual annotation and algorithmic processing. Workers in third-world countries such as Kenya and the Philippines handle manual annotation, the lowest-value part of the chain, while AI preprocessing companies in Europe and the United States capture most of the revenue by selling the processed data to AI developers.

As AI has developed, more companies have entered this business, and competition has driven down the unit price of data annotation. The work mainly consists of labeling data, much like solving CAPTCHAs; it has no technical barrier and pays extremely little, sometimes as low as 0.01 RMB.

Image source: https://aim.baidu.com/product/0793f1f1-f1cb-4f9f-b3a7-ef31335bd7f0

Against this backdrop, Web3 data annotation platforms such as Public AI have a genuine market: they connect AI companies with annotation workers and use incentive systems in place of pure low-price competition. Note, however, that mature companies like Scale AI guarantee reliable annotation quality, so decentralized annotation platforms absolutely must control quality and prevent cheating. At its core this is a C2B2B enterprise service, and sheer data volume alone will not convince enterprise customers.
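One common way to control quality in crowdsourced labeling is to mix in "gold" items with known answers, drop workers who fail them, and take a majority vote among the rest. The sketch below assumes that scheme; it is not Public AI's or Scale AI's actual mechanism, and the function names, the 0.8 threshold, and the sample labels are hypothetical.

```python
from collections import Counter


def worker_accuracy(answers, gold):
    """Share of gold-standard items (known answers) each worker labeled correctly."""
    acc = {}
    for worker, labels in answers.items():
        checked = [item for item in gold if item in labels]
        if checked:
            acc[worker] = sum(labels[i] == gold[i] for i in checked) / len(checked)
        else:
            acc[worker] = 0.0  # workers who skip every gold item are not trusted
    return acc


def aggregate(answers, gold, min_accuracy=0.8):
    """Majority vote per unknown item, counting only workers who pass the gold check."""
    acc = worker_accuracy(answers, gold)
    trusted = {w for w, a in acc.items() if a >= min_accuracy}
    votes = {}
    for worker in trusted:
        for item, label in answers[worker].items():
            if item not in gold:
                votes.setdefault(item, Counter())[label] += 1
    return {item: c.most_common(1)[0][0] for item, c in votes.items()}, acc


# Hypothetical batch: g1/g2 are gold items, x1/x2 are the items we actually want labeled
gold = {"g1": "cat", "g2": "dog"}
answers = {
    "alice": {"g1": "cat", "g2": "dog", "x1": "cat", "x2": "dog"},
    "bob": {"g1": "cat", "g2": "dog", "x1": "cat", "x2": "cat"},
    "carol": {"g1": "cat", "g2": "dog", "x1": "dog", "x2": "dog"},
    "spammer": {"g1": "dog", "g2": "cat", "x1": "dog", "x2": "cat"},
}
labels, acc = aggregate(answers, gold)
print(labels)
print(acc)
```

Gold items let the platform score workers without trusting anyone's self-reported quality, which is the kind of anti-cheating guarantee enterprise buyers would expect.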

Hardware Freedom: Render Network and Bittensor

Note that, unlike Bitcoin mining rigs, there is currently no dedicated Web3 AI hardware. Existing computing power and platforms are built on mature hardware with added Crypto incentives and essentially belong to the DePIN sector. They differ from the data-source projects, however, so they are covered here according to their place in the AI workflow.

For a definition of DePIN, see my earlier article: The Past of DePIN before Helium, Bitcoin, Arweave, and STEPN

Render Network is an "old project" that was not originally built for AI. As the name suggests, it focused on rendering and began operating in 2017, before the GPU frenzy. Market opportunities gradually emerged, however: the GPU market, especially for high-end GPUs, is monopolized by NVIDIA, and high prices keep users out of rendering, AI, and the metaverse. If a channel can be built between demand and supply, a sharing-economy model similar to bike sharing has a chance to take hold.

Moreover, GPU resources do not need to be physically handed over; only the compute needs to be allocated through software. It is also worth noting that Render Network moved to the Solana ecosystem in 2023, leaving Polygon. Moving to Solana before it became popular has proven to be the right decision, since high-speed networks are essential for GPU usage and allocation.
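To illustrate what a "channel between demand and supply" might look like, here is a hypothetical greedy matcher that assigns each rendering or AI job to the cheapest idle GPU meeting its memory and budget requirements. This is not Render Network's actual scheduler; the classes, fields, providers, and prices are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class GpuOffer:
    provider: str
    vram_gb: int
    price_per_hour: float
    available: bool = True


@dataclass
class Job:
    name: str
    min_vram_gb: int
    max_price: float


def match(jobs, offers):
    """Greedy matching: each job takes the cheapest free GPU that meets its VRAM and budget."""
    assignments = {}
    for job in jobs:
        candidates = [
            o for o in offers
            if o.available and o.vram_gb >= job.min_vram_gb and o.price_per_hour <= job.max_price
        ]
        if not candidates:
            assignments[job.name] = None  # no suitable idle supply right now
            continue
        best = min(candidates, key=lambda o: o.price_per_hour)
        best.available = False  # the GPU is leased out, not physically handed over
        assignments[job.name] = best.provider
    return assignments


offers = [
    GpuOffer("home-4090", 24, 0.5),
    GpuOffer("studio-3090", 24, 0.4),
    GpuOffer("laptop-3060", 6, 0.1),
]
jobs = [
    Job("render-scene", 8, 1.0),
    Job("fine-tune", 24, 1.0),
    Job("big-train", 80, 5.0),
]
result = match(jobs, offers)
print(result)
```

A greedy rule keeps the sketch short; a real marketplace would also need pricing discovery, verification that the work was actually done, and payment settlement.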

If Render Network is an old project, then Bittensor is currently in the spotlight.

Bittensor is built on Polkadot's Substrate framework, and its goal is to train AI models through economic incentives: nodes compete to train models with the lowest error or highest efficiency. It is also the Crypto project closest to the classic idea of putting AI on-chain. The actual training, however, still relies on NVIDIA GPUs and conventional platforms, so in practice it resembles a competition platform like Kaggle.
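The incentive idea can be reduced to a toy rule: split each round's token emission among nodes in proportion to how well their models perform. The sketch below assumes a reward share proportional to 1 / loss; Bittensor's real consensus and scoring are considerably more involved, and the node names and numbers here are hypothetical.

```python
def distribute_rewards(losses, emission):
    """Split a fixed token emission in proportion to 1 / loss: lower error earns a bigger share."""
    for node, loss in losses.items():
        if loss <= 0:
            raise ValueError(f"loss for {node} must be positive, got {loss}")
    scores = {node: 1.0 / loss for node, loss in losses.items()}
    total = sum(scores.values())
    return {node: emission * s / total for node, s in scores.items()}


# Hypothetical validation losses reported for three competing nodes
rewards = distribute_rewards({"node-a": 0.5, "node-b": 1.0, "node-c": 2.0}, emission=7.0)
print(rewards)
```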

zkML and UBI: Worldcoin's Two Sides

Zero-knowledge machine learning (zkML) tackles data leakage, privacy failures, and model verification by bringing zk technology into the AI model training process. The first two are easy to understand: with zk techniques, data can still be used for training without exposing personal or private information.

Model verification refers to evaluating closed-source models. With zk technology, a target value can be set, and a closed-source model can prove its capabilities through verifiable results without revealing its computation.
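Production zkML systems compile a model's arithmetic into large proof circuits, which is far beyond a short example. The core zero-knowledge idea, proving you know a secret without revealing it, can still be shown with a Schnorr proof of knowledge made non-interactive via the Fiat-Shamir heuristic. This is a swapped-in textbook protocol, not Worldcoin's or any zkML project's code, and the toy group parameters are deliberately tiny and insecure.

```python
import hashlib
import secrets

# Toy group: P = 2Q + 1 is a safe prime and G = 4 generates the subgroup of prime order Q.
# Far too small to be secure; these numbers only illustrate the protocol.
P = 1019
Q = 509
G = 4


def challenge(y, t):
    """Fiat-Shamir: derive the verifier's challenge by hashing the public values."""
    digest = hashlib.sha256(f"{G}|{y}|{t}".encode()).digest()
    return int.from_bytes(digest, "big") % Q


def keygen():
    x = secrets.randbelow(Q - 1) + 1  # secret in [1, Q-1]
    y = pow(G, x, P)                  # public value y = G^x mod P
    return x, y


def prove(x, y):
    """Prove knowledge of x such that y = G^x mod P, without revealing x."""
    r = secrets.randbelow(Q - 1) + 1
    t = pow(G, r, P)        # commitment
    c = challenge(y, t)
    s = (r + c * x) % Q     # response; the random r masks x
    return t, s


def verify(y, proof):
    """Accept iff G^s == t * y^c (mod P), which holds when s = r + c*x mod Q."""
    t, s = proof
    c = challenge(y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P


x, y = keygen()
proof = prove(x, y)
print("valid proof accepted:", verify(y, proof))
```

The verifier learns only that the prover knows x; the transcript (t, s) reveals nothing useful about x because r is random.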

Worldcoin is both an early mainstream project envisioning zkML and a proponent of Universal Basic Income (UBI). In its vision, AI's future productivity will far exceed the ceiling of human demand, so the real problem is distributing AI's benefits fairly. Worldcoin plans to share UBI with users worldwide through the $WLD token, and requires biometric verification that each user is a real human to keep distribution fair.

Of course, zkML and UBI are still in the early experimental stage, but they are interesting enough for me to continue to follow.

Conclusion

The development of AI represented by Transformers and LLMs will gradually hit bottlenecks, just as linear regression and neural networks did. Model parameters and data volume cannot grow indefinitely, and the marginal returns of further increases will diminish.
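Empirical scaling-law studies have found that loss tends to fall roughly as a power law in model size, which is one way to see the diminishing returns described above. The sketch below assumes loss = a * N^(-alpha) with made-up constants a = 10 and alpha = 0.1; each tenfold increase in parameters buys a smaller absolute improvement than the last.

```python
def loss(n_params, a=10.0, alpha=0.1):
    """Hypothetical power-law scaling curve: loss = a * N^(-alpha)."""
    return a * n_params ** (-alpha)


def marginal_gains(sizes, a=10.0, alpha=0.1):
    """Loss reduction obtained from each step up in model size."""
    losses = [loss(n, a, alpha) for n in sizes]
    return [prev - cur for prev, cur in zip(losses, losses[1:])]


sizes = [10**9, 10**10, 10**11, 10**12]  # each step is 10x more parameters
for n, gain in zip(sizes[1:], marginal_gains(sizes)):
    print(f"scaling up to {n:.0e} parameters lowers loss by {gain:.3f}")
```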

AI may be the emerging champion of intelligence, but its hallucination problem is currently severe. In the same way, today's belief that Crypto can change AI is a form of confidence and a textbook hallucination. Adding Crypto can hardly solve hallucination at the technical level, but from the standpoint of fairness and transparency it can at least change some aspects of the status quo.
