Author: Guo Xiaojing, Tencent Technology

Editor: Xu Qingyang

The world's top AI models can pass medical licensing exams, write complex code, and even defeat human experts in math competitions, yet they repeatedly struggle in a children's game, "Pokémon."

This high-profile experiment began in February 2025, when a researcher from Anthropic launched a Twitch livestream titled "Claude Plays Pokémon Red" to coincide with the release of Claude Sonnet 3.7.

Two thousand viewers flocked to the livestream. In the public chat, they offered strategies and encouragement, turning the stream into a public observation of AI capabilities.

Sonnet 3.7 could "play" Pokémon, but playing is not the same as winning. It would get stuck at critical points for dozens of hours and make basic mistakes that even a child would not make.

This was not Claude's first attempt.

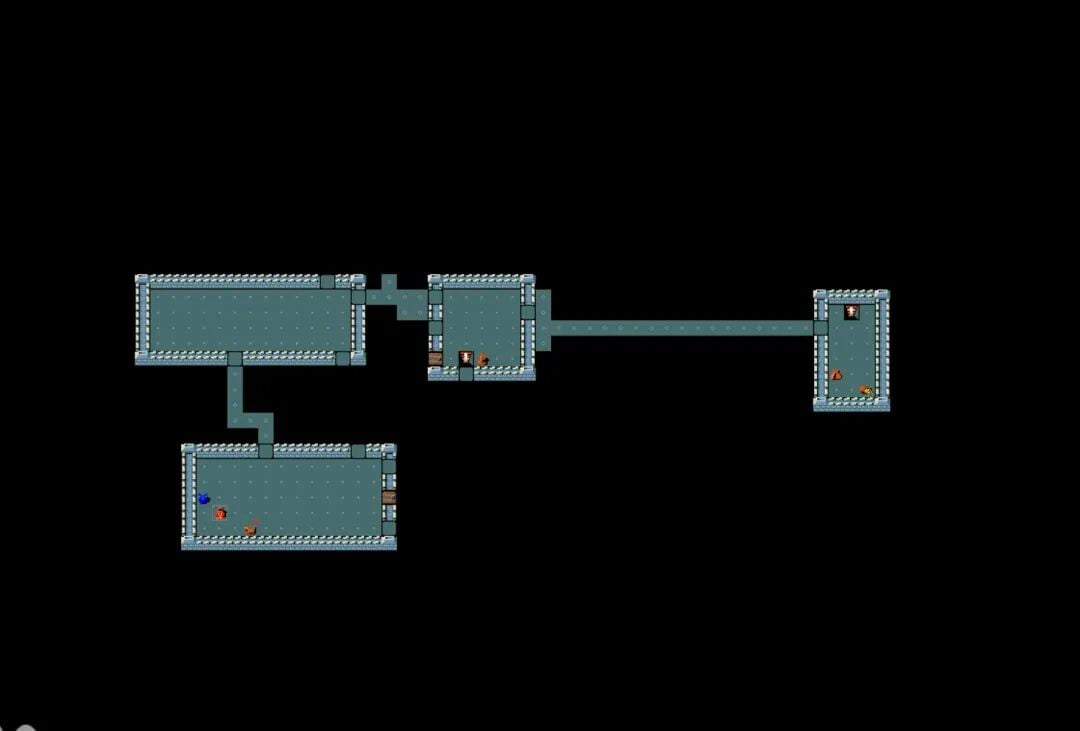

The performance of earlier versions was even more disastrous: some wandered aimlessly on the map, some fell into infinite loops, and many could not even leave the beginner village.

Even Claude Opus 4.5, which showed significant improvements, still made perplexing errors. Once, it circled outside a gym for four days without entering, simply because it did not realize it needed to cut down a tree blocking the entrance.

Why has a children's game become an AI's Waterloo?

Because what Pokémon requires is precisely the capability that today's AI lacks the most: the ability to continuously reason in an open world without explicit instructions, remember decisions made hours ago, understand implicit causal relationships, and make long-term plans among hundreds of possible actions.

These tasks are easy for an 8-year-old child but represent an insurmountable gap for AI models that claim to "surpass humans."

01 Does the toolkit gap determine success or failure?

In contrast, Google's Gemini 2.5 Pro successfully completed a similarly difficult Pokémon game in May 2025. Google CEO Sundar Pichai even joked publicly that the company had taken a step toward creating "artificial Pokémon intelligence."

However, this result cannot simply be attributed to the Gemini model being inherently "smarter."

The key difference lies in the toolkit used by the model. Joel Zhang, an independent developer responsible for operating the Gemini Pokémon livestream, likened the toolkit to a set of "Iron Man armor": the AI did not enter the game empty-handed but was placed in a system that could call upon various external capabilities.

The Gemini toolkit provided more support, such as transcribing game visuals into text, thus compensating for the model's weaknesses in visual understanding, and offering customized puzzle-solving and path-planning tools. In contrast, the toolkit used by Claude was more minimalist, and its attempts more directly reflected the model's actual capabilities in perception, reasoning, and execution.
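The article does not describe how the Gemini toolkit's path-planning tool is built. As a rough illustration of what such a tool could look like, the sketch below runs a generic breadth-first search over a text transcription of the screen. The grid format (`#` for walls), the function name `plan_path`, and the button names are all assumptions made for this example, not details from either toolkit.

```python
from collections import deque

# Hypothetical button names mapped to (row, column) offsets on the grid.
MOVES = {"UP": (-1, 0), "DOWN": (1, 0), "LEFT": (0, -1), "RIGHT": (0, 1)}

def plan_path(grid, start, goal):
    """Breadth-first search over a text grid where '#' marks a wall.

    Returns the shortest list of button presses from start to goal,
    or None if the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    queue = deque([start])
    came_from = {start: None}  # cell -> (previous cell, button pressed)
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back to the start, collecting buttons in reverse order.
            buttons = []
            while came_from[cell] is not None:
                cell, button = came_from[cell]
                buttons.append(button)
            return buttons[::-1]
        for button, (dr, dc) in MOVES.items():
            r, c = cell[0] + dr, cell[1] + dc
            inside = 0 <= r < rows and 0 <= c < cols
            if inside and grid[r][c] != "#" and (r, c) not in came_from:
                came_from[(r, c)] = (cell, button)
                queue.append((r, c))
    return None

# Assumed transcription of a small screen: S = player, G = target tile.
screen = ["S.#",
          "..#",
          "#.G"]
print(plan_path(screen, (0, 0), (2, 2)))
```

With a helper like this, the model only has to name a destination. The step-by-step movement, where a minimalist setup leaves the model on its own, is handled by ordinary code.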

In everyday tasks, these differences are not obvious.

When a user asks a chatbot something that requires an online search, the model likewise calls a search tool automatically. But in long-horizon tasks like Pokémon, differences in toolkits are magnified to the point that they can determine success or failure.

02 Turn-based gameplay exposes AI's "long-term memory" shortcomings

Because Pokémon is strictly turn-based and does not demand immediate reactions, it has become an excellent "training ground" for testing AI. At each step, the AI only needs to reason from the current screen, the goal prompt, and the available actions, then output a clear instruction such as "press A."

This seems to be the interaction form that large language models excel at.

The crux lies in the "discontinuity" of the time dimension. Although Claude Opus 4.5 has run for over 500 hours and executed about 170,000 steps, the model is reinitialized after each operation and can only search for clues within an extremely narrow context window. This mechanism makes it resemble an amnesiac who relies on sticky notes to keep track of things, cycling through fragmented information and never achieving the qualitative leap in experience that a real human player can.
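The "sticky notes" mechanism can be sketched as a toy loop. This is a minimal illustration, not the harness actually used in the livestream: `toy_policy` is a hypothetical stand-in for a model call, and the note limit, prompt layout, and button names are assumptions. Each step builds a fresh prompt from the current screen, the goal, and a small bounded set of notes, and nothing else carries over.

```python
from collections import deque

BUTTONS = ("A", "B", "UP", "DOWN", "LEFT", "RIGHT")  # assumed action set

def build_prompt(screen_text, goal, notes):
    """Everything the model sees for one step; earlier steps are not included."""
    lines = [f"Goal: {goal}", f"Screen: {screen_text}", "Notes:"]
    lines += [f"- {note}" for note in notes]
    lines.append("Buttons: " + ", ".join(BUTTONS))
    return "\n".join(lines)

def toy_policy(screen_text):
    """Hypothetical stand-in for a model call; returns (button, note_to_self)."""
    if "tree" in screen_text:
        return "A", "A tree blocks the gym; cut it down"
    return "UP", "Walked up; nothing new"

def run(screens, goal, max_notes=3):
    """Play through a list of screen descriptions, keeping at most max_notes notes."""
    notes = deque(maxlen=max_notes)  # the oldest note is dropped automatically
    actions, prompts = [], []
    for screen in screens:
        prompts.append(build_prompt(screen, goal, notes))  # fresh context each step
        button, note = toy_policy(screen)
        actions.append(button)
        notes.append(note)
    return actions, prompts

actions, prompts = run(
    ["tree in front of gym", "path", "path", "path", "path"], "Enter the gym"
)
print(prompts[-1])
```

By the fifth step, the note about the tree has been pushed out by newer, less useful notes, so the final prompt carries no trace of it. A real setup would be far more elaborate, but the same pressure applies: whatever is not written down and kept is gone.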

In fields like chess and Go, AI systems have long surpassed humans, but these systems are highly customized for specific tasks. In contrast, Gemini, Claude, and GPT, as general models, frequently defeat humans in exams and programming competitions but repeatedly struggle in a children's game.

This contrast itself is highly revealing.

According to Joel Zhang, the core challenge facing AI is its inability to continuously pursue a single clear goal over a long time span. "If you want an agent to do real work, it cannot forget what it did five minutes ago," he pointed out.

And this ability is an indispensable prerequisite for achieving the automation of cognitive labor.

Independent researcher Peter Whidden provided a more intuitive description. He once open-sourced a Pokémon algorithm based on traditional AI. "AI knows almost everything about Pokémon," he stated, "it has been trained on vast amounts of human data and knows the correct answers. But when it comes to execution, it appears clumsy."

In the game, this "knowing but unable to do" gap is continually magnified: the model may know it needs to find a certain item but cannot reliably locate it on a two-dimensional map; it knows it should talk to an NPC but repeatedly fails in pixel-level movements.

03 Behind the evolution of capabilities: the unbridged gap of "instinct"

Despite this, AI's progress is still clearly visible. Claude Opus 4.5 is significantly better than its predecessors in self-recording and visual understanding, allowing it to advance further in the game. Gemini 3 Pro completed the more challenging Pokémon Crystal after finishing Pokémon Blue, and did so without losing a single battle. This was something Gemini 2.5 Pro had never achieved.

Meanwhile, the Claude Code toolkit released by Anthropic allows the model to write and run its own code, which has been used in retro games like RollerCoaster Tycoon, reportedly managing virtual theme parks successfully.

These cases reveal a counterintuitive reality: AI equipped with the right toolkit may be highly efficient at knowledge work such as software development, accounting, and legal analysis, even if it still struggles with tasks that require real-time responses.

The Pokémon experiment also reveals another intriguing phenomenon: models trained on human data exhibit behavior characteristics similar to humans.

In the technical report for Gemini 2.5 Pro, Google noted that when the system simulates a "panic state," such as when a Pokémon is about to faint, the quality of the model's reasoning significantly declines.

And when Gemini 3 Pro finally completed Pokémon Blue, it left itself a non-essential note: "To end poetically, I want to return to my original home and have one last conversation with my mother, allowing the character to retire."

In Joel Zhang's view, this behavior was unexpected and carried a certain human-like emotional projection.

04 The "digital long march" that AI finds hard to cross goes far beyond Pokémon

Pokémon is not an isolated case. In the pursuit of artificial general intelligence (AGI), developers have found that even an AI that excels at bar exams still meets its "Waterloo" when confronted with the following types of complex games.

NetHack: The Abyss of Rules

This 1980s dungeon game is a "nightmare" for AI researchers. Its randomness is extreme, and it has a "permanent death" mechanism. Facebook AI Research found that even if a model can write code, it performs far worse than human beginners when faced with the common-sense logic and long-term planning required in NetHack.

Minecraft: The Disappearing Sense of Purpose

Although AI can now create wooden pickaxes and even mine diamonds, independently "defeating the Ender Dragon" remains a fantasy. In the open world, AI often "forgets" its original intention during resource collection that can last for dozens of hours or gets completely lost in complex navigation.

StarCraft II: The Gap Between Generality and Specialization

Although customized models have defeated professional players, if Claude or Gemini were to take over directly through visual commands, they would collapse instantly. In handling the uncertainty of "fog of war" and balancing micro-management with macro-building, general models still struggle.

RollerCoaster Tycoon: The Imbalance Between Micro and Macro

Managing a theme park requires tracking the status of thousands of visitors. Even Claude Code, which has basic management capabilities, can easily be overwhelmed when dealing with large-scale financial collapses or emergencies. Any lapse in reasoning can lead to the park's bankruptcy.

Elden Ring and Sekiro: The Gap of Physical Feedback

These types of games with strong action feedback are extremely unfriendly to AI. Current visual processing delays mean that while the AI is still "thinking" about a boss's actions, the character has often already perished. Millisecond-level reaction requirements create a natural limit to the model's interactive logic.

05 Why has Pokémon become a litmus test for AI?

Today, Pokémon is gradually becoming an informal yet highly persuasive benchmark in the field of AI evaluation.

Livestreams related to models from Anthropic, OpenAI, and Google on Twitch have collectively attracted hundreds of thousands of comments. Google has detailed the progress of Gemini in its technical reports, and Pichai publicly mentioned this achievement at the I/O developer conference. Anthropic even set up a "Claude Plays Pokémon" display area at industry conferences.

"We are a group of super tech enthusiasts," admitted David Hershey, head of AI applications at Anthropic. But he emphasized that this is not just entertainment.

Unlike traditional benchmarks that rely on one-off question-and-answer formats, Pokémon can continuously track the model's reasoning, decision-making, and goal progression over an extended period, making it closer to the complex tasks humans hope AI will perform in the real world.

As of now, the challenges for AI in Pokémon continue. But it is precisely these recurring dilemmas that clearly outline the boundaries of capabilities that general artificial intelligence has yet to cross.

Special contributor Wu Ji also contributed to this article.
