Author: Advait (Leo) Jayant

Translator: LlamaC

"Editor's note: Fully Homomorphic Encryption (FHE) is often hailed as the holy grail of cryptography. This article explores the prospects of FHE in artificial intelligence and points out its current limitations. It also lists several projects applying FHE to AI in the crypto space. For cryptocurrency enthusiasts, this article offers an in-depth introduction to fully homomorphic encryption. Enjoy!"


A wants highly personalized recommendations on Netflix and Amazon. B does not want Netflix or Amazon to understand their preferences.


In today's digital age, we enjoy the convenience of personalized recommendations from services like Amazon and Netflix, which cater precisely to our interests. However, the deep intrusion of these platforms into our private lives is raising growing concerns. We want customized services without sacrificing privacy. In the past, this seemed like a paradox: how can we achieve personalization without sharing large amounts of personal data with cloud-based AI systems? Fully Homomorphic Encryption (FHE) offers a solution that gives us the best of both worlds.

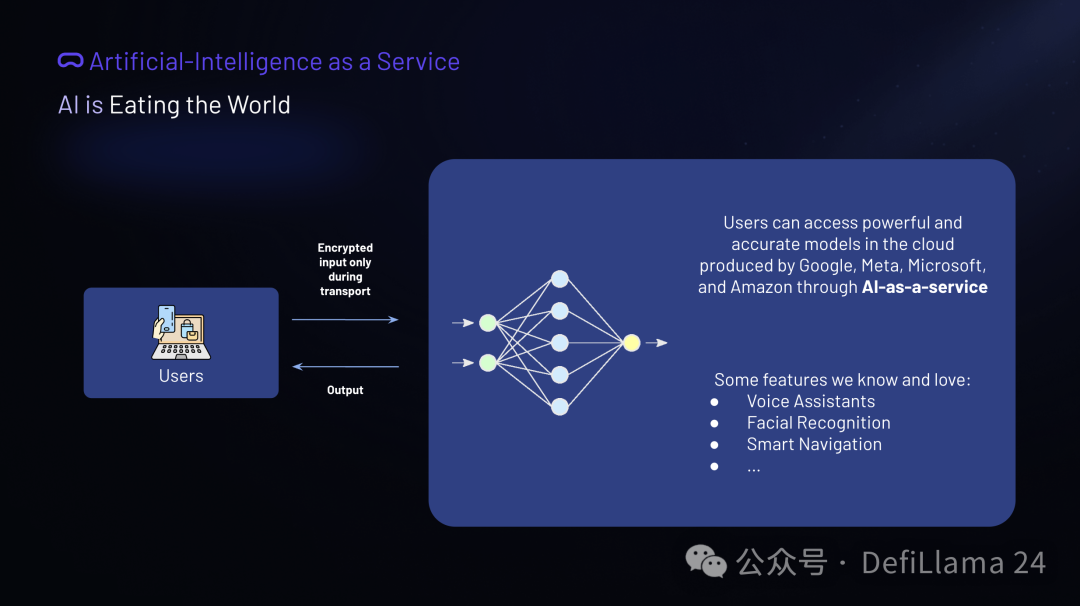

Artificial Intelligence as a Service (AIaaS)

Artificial intelligence (AI) now plays a crucial role in addressing complex challenges in various fields, including computer vision, natural language processing (NLP), and recommendation systems. However, the development of these AI models presents significant challenges for ordinary users:

Data Volume: Building accurate models often requires massive datasets, sometimes reaching terabytes.

Computing Power: Complex models such as transformers require the computing power of dozens of GPUs, often running continuously for weeks.

Domain Expertise: Fine-tuning these models requires deep domain knowledge.

These obstacles make it difficult for most users to independently develop powerful machine learning models.

AI as a Service (AIaaS) overcomes these barriers by offering cloud access to state-of-the-art neural network models managed by tech giants (including FAANG members). Users simply upload raw data to these platforms, which process it and return insightful inference results. AIaaS thus democratizes access to high-quality machine learning models, putting advanced AI tools within reach of a wider audience. Unfortunately, today's AIaaS delivers these conveniences at the expense of our privacy.

Data Privacy in Artificial Intelligence as a Service

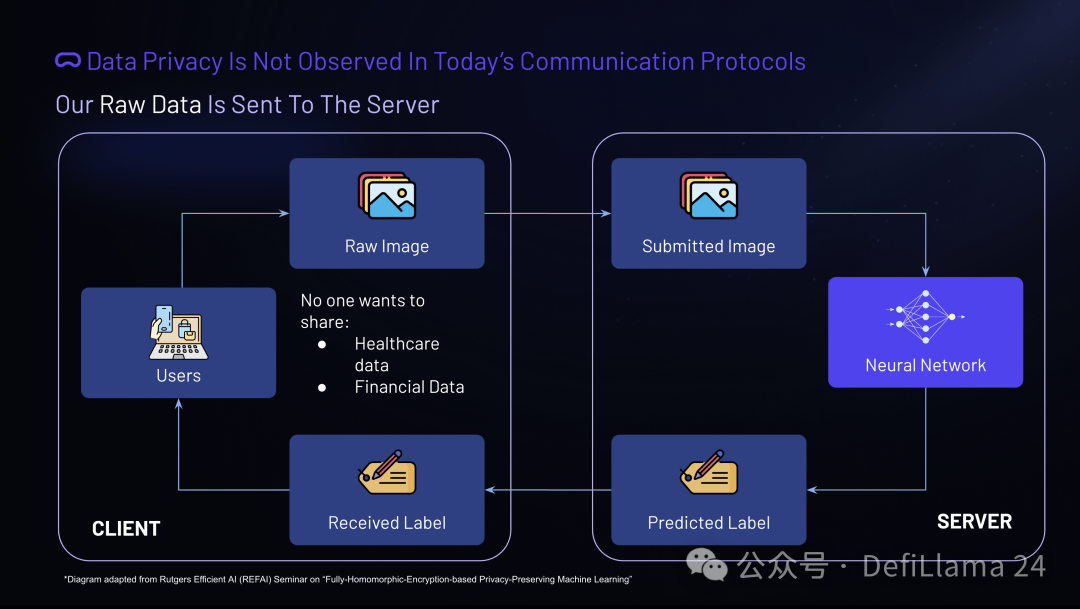

Currently, data is encrypted only during transmission from the client to the server. The server can access input data and predictions based on this data.

In the AI as a Service process, the server can access both the input and the output data. This makes it risky for ordinary users to share sensitive information such as medical and financial data. Regulations like GDPR and CCPA heighten these concerns: they require users' explicit consent to data sharing and guarantee users the right to know how their data is used. GDPR further mandates that data be encrypted and protected during transmission. These regulations set strict standards for user privacy and rights, calling for clear transparency and control over personal information. Given these requirements, strong privacy mechanisms are needed in the AI as a Service (AIaaS) process to maintain trust and compliance.

FHE Solves the Problem

By encrypting a and b, we can ensure the privacy of input data.

Fully Homomorphic Encryption (FHE) provides a solution to the data privacy issues associated with cloud computing. FHE schemes support operations such as addition and multiplication on ciphertexts. The concept is simple: adding two encrypted values produces a ciphertext that decrypts to the sum of the two original values, and the same holds for multiplication.
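Stated as equations, the property reads as follows. The notation is ours, not the source's: Enc and Dec are encryption and decryption under the user's key, and ⊕ and ⊗ denote the server's homomorphic addition and multiplication on ciphertexts.

```latex
\mathrm{Dec}\big(\mathrm{Enc}(a) \oplus \mathrm{Enc}(b)\big) = a + b,
\qquad
\mathrm{Dec}\big(\mathrm{Enc}(a) \otimes \mathrm{Enc}(b)\big) = a \cdot b
```

In practical schemes these identities hold modulo a plaintext modulus, and only while the noise accumulated inside the ciphertexts stays below a scheme-specific threshold.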

In practice, it works as follows: the user first adds the plaintext values a and b locally. The user then encrypts a and b and sends the ciphertexts to the cloud server, which adds the encrypted values homomorphically and returns the encrypted result. Decrypting the server's result yields the same value as the local plaintext addition of a and b. This process keeps the data private while still allowing computation in the cloud.
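The round trip can be sketched with a toy, symmetric-key LWE-style scheme that supports homomorphic addition. This is a minimal illustration under stated assumptions: the parameters `N`, `Q`, `T` and all function names are hypothetical, the sizes are far too small to be secure, and real FHE libraries use very different parameters and encodings.

```python
import random

# Toy parameters (illustrative assumptions; far too small to be secure).
N = 16           # LWE dimension
Q = 2**16        # ciphertext modulus
T = 16           # plaintext modulus: messages live in Z_T
DELTA = Q // T   # scale that places the message above the noise

rng = random.Random(42)


def inner(u, v):
    return sum(x * y for x, y in zip(u, v))


def keygen():
    """Client: secret key s, a random vector in Z_Q^N."""
    return [rng.randrange(Q) for _ in range(N)]


def encrypt(s, m):
    """Client: ciphertext (a, b) with b = <a, s> + DELTA*m + e (mod Q)."""
    a = [rng.randrange(Q) for _ in range(N)]
    e = rng.randint(-4, 4)  # small random noise
    return a, (inner(a, s) + DELTA * m + e) % Q


def add(c1, c2):
    """Server: component-wise addition of two ciphertexts; no key needed."""
    (a1, b1), (a2, b2) = c1, c2
    return [(x + y) % Q for x, y in zip(a1, a2)], (b1 + b2) % Q


def decrypt(s, c):
    """Client: remove <a, s>, then round the remaining DELTA*m + e to m."""
    a, b = c
    phase = (b - inner(a, s)) % Q
    return ((phase + DELTA // 2) // DELTA) % T


# Round trip: local plaintext sum vs. the sum computed on ciphertexts.
s = keygen()
x, y = 5, 9
local = (x + y) % T
ct_sum = add(encrypt(s, x), encrypt(s, y))   # all the server ever sees
remote = decrypt(s, ct_sum)
print(local, remote)  # 14 14
```

The server-side `add` never touches the secret key `s`; it only adds ciphertext components. The two small noise terms add up as well, which is why decryption rounds to the nearest multiple of `DELTA`.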

Deep Neural Networks (DNN) Based on Fully Homomorphic Encryption

In addition to basic addition and multiplication operations, significant progress has been made in using Fully Homomorphic Encryption (FHE) for neural network processing in the AI as a Service process. In this context, users can encrypt raw input data into ciphertext and only transmit these encrypted data to the cloud server. The server then performs homomorphic calculations on these ciphertexts, generates encrypted outputs, and returns them to the user. Crucially, only the user holds the private key, enabling them to decrypt and access the results. This establishes an end-to-end FHE encrypted data flow, ensuring the privacy and security of user data throughout the process.
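To illustrate how a server can evaluate part of a neuron without holding the key, the sketch below applies the same toy LWE idea (again with hypothetical, insecure parameters; `encrypted_neuron` is an illustrative name) to compute an encrypted weighted sum plus bias, with plaintext integer weights held by the server. It covers only the linear part of a neuron; the nonlinear activation is not handled here.

```python
import random

# Toy LWE parameters (illustrative assumptions; insecure).
N, Q, T = 16, 2**20, 64
DELTA = Q // T
rng = random.Random(7)


def inner(u, v):
    return sum(x * y for x, y in zip(u, v))


def encrypt(s, m):
    """Client: Enc(m) = (a, <a, s> + DELTA*m + e mod Q)."""
    a = [rng.randrange(Q) for _ in range(N)]
    return a, (inner(a, s) + DELTA * (m % T) + rng.randint(-2, 2)) % Q


def decrypt(s, ct):
    """Client: strip <a, s> and round away the noise."""
    a, b = ct
    phase = (b - inner(a, s)) % Q
    return ((phase + DELTA // 2) // DELTA) % T


def encrypted_neuron(cts, weights, bias):
    """Server: Enc(sum_i w_i * x_i + bias) from Enc(x_i) and plaintext integer
    weights. Scaling by w_i also scales the noise, so weights must stay small."""
    acc_a = [0] * N
    acc_b = (DELTA * (bias % T)) % Q  # bias enters as a noiseless ciphertext (0, DELTA*bias)
    for (a, b), w in zip(cts, weights):
        acc_a = [(p + w * q) % Q for p, q in zip(acc_a, a)]
        acc_b = (acc_b + w * b) % Q
    return acc_a, acc_b


s = [rng.randrange(Q) for _ in range(N)]   # client's secret key
x = [3, 1, 4]                              # client's private input
cts = [encrypt(s, xi) for xi in x]         # only ciphertexts go to the server
out = encrypted_neuron(cts, weights=[2, -1, 1], bias=5)
print(decrypt(s, out))  # 2*3 - 1*1 + 1*4 + 5 = 14
```

Results are computed modulo `T`, so a negative weighted sum decodes as `T` minus its magnitude (for example, -8 decodes as 56).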

Fully Homomorphic Encryption-based neural networks provide significant flexibility for users in AI as a Service. Once the ciphertext is sent to the server, users can go offline, as frequent communication between the client and server is not necessary. This feature is particularly advantageous for Internet of Things (IoT) devices, which often operate under constrained conditions where frequent communication is impractical.

However, it is worth noting the limitations of Fully Homomorphic Encryption (FHE). Its computational overhead is enormous; FHE schemes are inherently time-consuming, complex, and resource-intensive. Additionally, FHE currently struggles to effectively support nonlinear operations, posing a challenge to the implementation of neural networks. This limitation may affect the accuracy of neural networks built on FHE, as nonlinear operations are crucial for the performance of these models.
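A common workaround for the nonlinearity problem is to replace an activation function with a low-degree polynomial, because polynomials need only additions and multiplications. The sketch below (plain Python, no encryption; function names are illustrative) compares the sigmoid with its degree-3 Taylor polynomial around 0 and shows how the approximation error grows away from the origin, one source of the accuracy loss mentioned above.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_poly(x):
    """Degree-3 Taylor polynomial of the sigmoid around 0. It uses only
    additions and multiplications (dividing by a constant is multiplying by
    a plaintext constant), so an FHE scheme can evaluate it."""
    return 0.5 + x / 4 - x**3 / 48


for x in (0.5, 1.0, 2.0, 4.0):
    err = abs(sigmoid(x) - sigmoid_poly(x))
    print(f"x={x}: sigmoid={sigmoid(x):.4f}  poly={sigmoid_poly(x):.4f}  error={err:.4f}")
```

Higher-degree polynomials track the sigmoid over a wider range, but each extra degree costs more homomorphic multiplications, which is the trade-off between accuracy and overhead.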

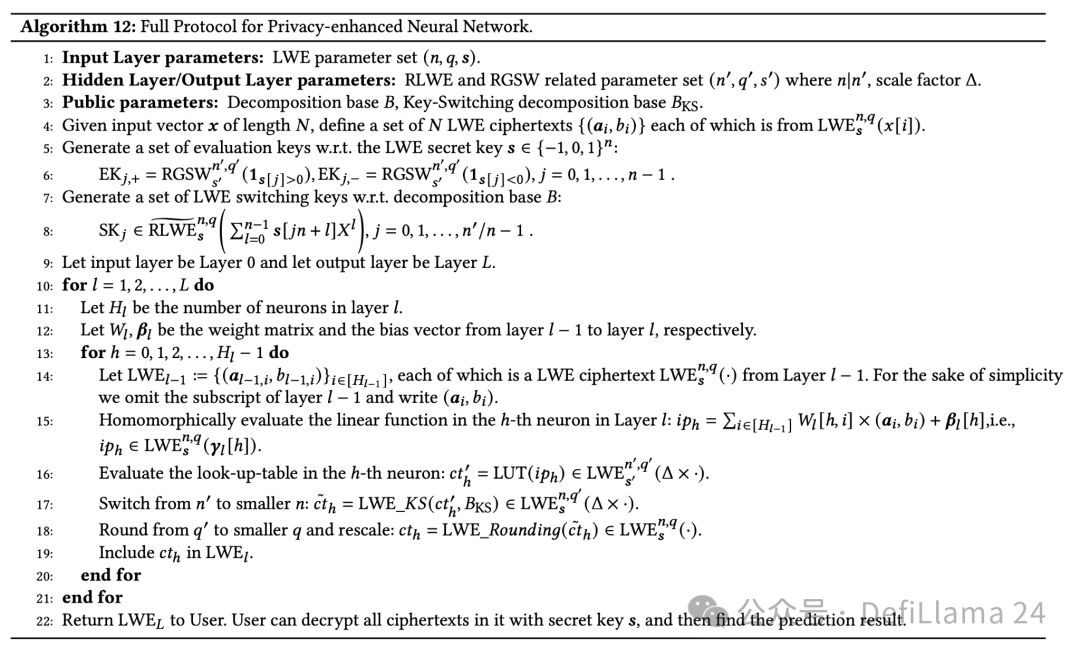

The paper "Privacy-Enhanced Neural Network Protocols for AI as a Service Based on Efficient Fully Homomorphic Encryption" by K.-Y. Lam, X. Lu, L. Zhang, X. Wang, H. Wang, and S. Q. Goh, researchers at Nanyang Technological University (Singapore) and the Chinese Academy of Sciences (China), published in 2024, describes a privacy-enhanced neural network protocol for AI as a Service. The protocol first defines the parameters of the input layer using Learning With Errors (LWE). LWE is a lattice-based hardness assumption; encryption schemes built on it protect data while still allowing calculations on the encrypted data without first decrypting it. For the hidden and output layers, parameters are defined using ring LWE (RLWE) and ring GSW (RGSW), the ring-based variants of LWE and of the GSW scheme, which enable more efficient homomorphic operations.

The public parameters include the decomposition base, among others. Given an input vector x of length n, a set of n LWE ciphertexts (a_i, b_i) is generated, one for each element x[i], using the LWE private key s, and evaluation keys for s are generated for the indices where s[i] > 0 and where s[i] < 0. Additionally, a set of LWE key-switching keys is set up for s to efficiently switch ciphertexts between different keys.

The input layer is designated as layer 0, and the output layer as layer L. For each layer l from 1 to L, the number of neurons n_l is fixed; the number of neurons in layer 0 is already determined by the input. Each layer has its own weight matrix and bias vector connecting it to the preceding layer, starting from layer 0. For each neuron h from 0 to n_l − 1, the LWE ciphertexts from layer l − 1 are evaluated homomorphically; that is, the linear function for neuron h (its weighted sum plus bias) is computed directly on encrypted data. The result for the h-th neuron in layer l is then passed through a look-up table (LUT) that applies the activation function. Afterward, the ciphertext is switched from the larger key used during the LUT evaluation back to the smaller LWE key, and rounding and rescaling are performed on the result. The resulting ciphertext is added to the set of LWE ciphertexts for layer l.

Finally, the protocol returns the LWE ciphertexts to the user, who decrypts them with their LWE private key to obtain the inference results.
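The per-layer loop described above can be mimicked in plaintext to show what the server computes. This is a simplified model, not the paper's protocol: there is no encryption, values live in a hypothetical plaintext modulus `T`, ReLU stands in for the activation function, and key switching and rounding/rescaling are omitted. In FHEW/TFHE-style schemes, the LUT step is typically carried out during bootstrapping on the ciphertext.

```python
# Plaintext model of the per-layer loop (no encryption). All names and the
# modulus T are hypothetical; key switching and rescaling are omitted.
T = 64  # values live in Z_T, as they would inside LWE plaintexts


def to_signed(v):
    """Interpret v in Z_T as a signed value in [-T/2, T/2)."""
    v %= T
    return v - T if v >= T // 2 else v


# Activation as a look-up table indexed by the (mod T) pre-activation: ReLU.
RELU_LUT = [max(0, to_signed(v)) for v in range(T)]


def layer(inputs, weights, bias):
    """For each neuron h: linear function mod T, then one LUT lookup."""
    outputs = []
    for h, row in enumerate(weights):
        pre = (sum(w * x for w, x in zip(row, inputs)) + bias[h]) % T
        outputs.append(RELU_LUT[pre])
    return outputs


def infer(x, layers):
    """Layer 0 is the input; each (weights, bias) pair produces the next layer."""
    for weights, bias in layers:
        x = layer(x, weights, bias)
    return x


# Two toy layers: 2 inputs -> 2 hidden neurons -> 1 output neuron.
layers = [([[1, -2], [3, 1]], [0, -1]), ([[1, 1]], [0])]
print(infer([2, 1], layers))  # [6]
```

Because the activation is just a table indexed by a value in Z_T, any function on that finite domain (not only ReLU) can be plugged in the same way, which is what makes the LUT approach attractive for nonlinear layers.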

This protocol efficiently achieves privacy-preserving neural network inference by leveraging Fully Homomorphic Encryption (FHE) technology. FHE allows computations on encrypted data without revealing the data itself to the processing server, ensuring data privacy while providing the advantages of AI as a Service.

Applications of Fully Homomorphic Encryption (FHE) in AI

FHE enables secure computations on encrypted data, opening up numerous new application scenarios while ensuring data privacy and security.

Consumer privacy in advertising: (Armknecht et al., 2013) proposed an innovative recommendation system based on Fully Homomorphic Encryption (FHE). The system provides personalized recommendations to users while keeping those recommendations completely secret from the system itself. This protects users' preference information and addresses significant privacy concerns in targeted advertising.

Medical applications: (Naehrig et al., 2011) proposed a notable scheme for the healthcare industry. They suggested continuously uploading patients' medical data in encrypted form to service providers using Fully Homomorphic Encryption (FHE). This approach ensures that sensitive medical information remains confidential throughout its lifecycle, enhancing patient privacy while enabling seamless data processing and analysis by healthcare institutions.

Data mining: Mining large datasets can yield significant insights, but often at the cost of user privacy. (Yang, Zhong, & Wright, 2006) addressed this issue by applying functional encryption in the context of Fully Homomorphic Encryption (FHE). This approach allows valuable information to be extracted from massive datasets without compromising the security of the individual data being mined.

Financial privacy: Consider a scenario where a company possesses sensitive data and proprietary algorithms that must be kept confidential. (Naehrig et al., 2011) suggested using homomorphic encryption to address this issue. By applying Fully Homomorphic Encryption (FHE), the company can perform necessary computations on encrypted data without exposing the data or algorithms, ensuring the protection of financial privacy and intellectual property.

Forensic image recognition: (Bosch et al., 2014) described a method for outsourcing forensic image recognition using Fully Homomorphic Encryption (FHE). This technology is particularly beneficial for law enforcement agencies. By applying FHE, the police and other agencies can detect illegal images on hard drives without exposing the content of the images, thereby protecting the integrity and confidentiality of data in investigations.

From advertising and healthcare to data mining, financial security, and law enforcement, Fully Homomorphic Encryption has the potential to fundamentally change the way we handle sensitive information in various fields. The importance of protecting privacy and security in an increasingly data-driven world cannot be overstated as we continue to develop and refine these technologies.

Limitations of Fully Homomorphic Encryption (FHE)

Despite its potential, there are some key limitations that need to be addressed:

- Multi-user support: Fully Homomorphic Encryption (FHE) allows computations on encrypted data, but the complexity increases exponentially in scenarios involving multiple users. Typically, each user's data is encrypted using a unique public key. Managing these different datasets, especially considering the computational demands of FHE in large-scale environments, becomes impractical. To address this, researchers such as Lopez-Alt et al. proposed a multi-key FHE framework in 2013, allowing simultaneous operations on datasets encrypted with different keys. While this approach holds promise, it introduces additional complexity and requires careful coordination in key management and system architecture to ensure privacy and efficiency.

- Large-scale computational overhead: The core of Fully Homomorphic Encryption (FHE) is its ability to compute on encrypted data, but this capability comes at a significant cost. FHE operations are far more expensive than the equivalent unencrypted computations. The overhead typically grows polynomially, but computations involving high-degree polynomials lengthen the running time further, making FHE unsuitable for real-time applications. Hardware acceleration for FHE, aimed at reducing computational complexity and improving execution speed, therefore represents a significant market opportunity.

- Limited operations: Recent advancements have indeed expanded the application scope of Fully Homomorphic Encryption to support a wider range of operations. However, it primarily still applies to linear and polynomial computations, which is a significant limitation for artificial intelligence applications involving complex nonlinear models such as deep neural networks. The operations required by these AI models present a significant challenge for efficient execution within the current framework of Fully Homomorphic Encryption. While progress is being made, the gap between the operational capabilities of Fully Homomorphic Encryption and the requirements of advanced AI algorithms remains a critical barrier that needs to be overcome.
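One reason high-degree polynomials are costly: each homomorphic multiplication enlarges the noise inside a ciphertext, and once the noise passes a threshold, decryption returns the wrong result. Keeping noise in check requires larger parameters or periodic bootstrapping, both of which add running time. The toy below illustrates this with a DGHV-style scheme over the integers (a swapped-in textbook construction, not one used by the projects discussed here), with a fixed, insecure key `P` and a fixed noise value so the outcome is reproducible; all names are illustrative.

```python
import random

P = 4294967291          # toy secret key: an odd ~32-bit integer (insecure size)
rng = random.Random(0)


def encrypt(m, r):
    """DGHV-style toy encryption of a bit m: c = P*q + 2r + m, noise 2r + m."""
    q = rng.randrange(1, 2**40)
    return P * q + 2 * r + m


def decrypt(c):
    """Centered residue of c mod P recovers the noise; its parity is the bit."""
    v = c % P
    if v > P // 2:
        v -= P
    return v % 2


def square_chain(bit, r, depth):
    """Square a fresh ciphertext `depth` times (degree 2**depth) and record,
    per step, the true noise size in bits and the decrypted bit."""
    c = encrypt(bit, r)
    rows = []
    for d in range(depth + 1):
        if d > 0:
            c = c * c  # one homomorphic multiplication: noise is squared too
        true_noise = (2 * r + bit) ** (2**d)
        rows.append((d, 2**d, abs(true_noise).bit_length(), decrypt(c)))
    return rows


# Noise budget is about log2(P/2), i.e. 31 bits; the correct answer is always 1.
for d, degree, bits, m in square_chain(bit=1, r=-8, depth=3):
    print(f"depth {d} (degree {degree}): noise {bits} bits -> decrypts to {m}")
```

With these fixed values, the noise grows from 4 to 8 to 16 bits and the first three rows decrypt correctly to 1, while at degree 8 the 32-bit noise exceeds the roughly 31-bit budget and the result comes out as 0.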

Fully Homomorphic Encryption (FHE) in the Context of Crypto and Artificial Intelligence

Here are some companies applying Fully Homomorphic Encryption (FHE) to AI in the crypto space:

- Zama offers Concrete ML, an open-source tool designed to make Fully Homomorphic Encryption (FHE) easy for data scientists to use. Concrete ML converts machine learning models into their homomorphic equivalents, enabling secure computation on encrypted data. Zama's approach lets data scientists use FHE without deep cryptographic knowledge, which is particularly useful in fields such as healthcare and finance where data privacy is crucial. The tool supports secure data analysis and machine learning while keeping sensitive information encrypted.

- Privasee focuses on building a secure AI computing network. Their platform utilizes Fully Homomorphic Encryption (FHE) technology, allowing multiple parties to collaborate without revealing sensitive information. By using FHE, Privasee ensures that user data remains encrypted throughout the AI computation process, thereby protecting privacy and complying with strict data protection regulations such as GDPR. Their system supports various AI models, providing a versatile solution for secure data processing.

- Octra combines cryptocurrency with artificial intelligence to enhance digital transaction security and data management efficiency. By integrating Fully Homomorphic Encryption (FHE) with machine learning technology, Octra aims to enhance the security and privacy of decentralized cloud storage. Their platform ensures that user data remains encrypted and secure through the application of blockchain, cryptography, and artificial intelligence technologies. This strategy builds a robust framework for digital transaction security and data privacy in the decentralized economy.

- Mind Network combines Fully Homomorphic Encryption (FHE) with artificial intelligence to achieve secure encrypted computations during AI processing without decryption. This facilitates a privacy-preserving, decentralized AI environment that seamlessly integrates encryption security with AI functionality. This approach not only protects the confidentiality of data but also achieves a trustless, decentralized environment where AI operations can be conducted without relying on central authority or exposing sensitive information, effectively combining the encryption strength of FHE with the operational requirements of AI systems.

The number of companies operating at the forefront of Fully Homomorphic Encryption (FHE), artificial intelligence (AI), and cryptocurrency remains limited. This is primarily due to the significant computational overhead required for effective implementation of FHE, demanding powerful processing capabilities for efficient encrypted computations.

Conclusion

Fully Homomorphic Encryption (FHE) provides a promising approach to enhancing privacy in AI by allowing computations on encrypted data without decryption. This capability is particularly valuable in sensitive fields such as healthcare and finance where data privacy is crucial. However, FHE faces significant challenges, including high computational overhead and limitations in handling the nonlinear operations necessary for deep learning. Despite these obstacles, advancements in FHE algorithms and hardware acceleration are paving the way for more practical applications in AI. The continued development in this field holds the potential to greatly enhance secure and privacy-preserving AI services, balancing computational efficiency with robust data protection.

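Disclaimer: This article represents only the author's personal views and does not represent the position or views of this platform. It is provided for information sharing only and does not constitute investment advice to anyone. Any dispute between users and the author is unrelated to this platform. If any article or image published on this page involves infringement, please send relevant proof of rights and proof of identity to support@aicoin.com, and the platform's staff will review it.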