Source: New Smart Element

Wow: Huawei HarmonyOS now has a large model built in. Can a smart assistant really do all this?

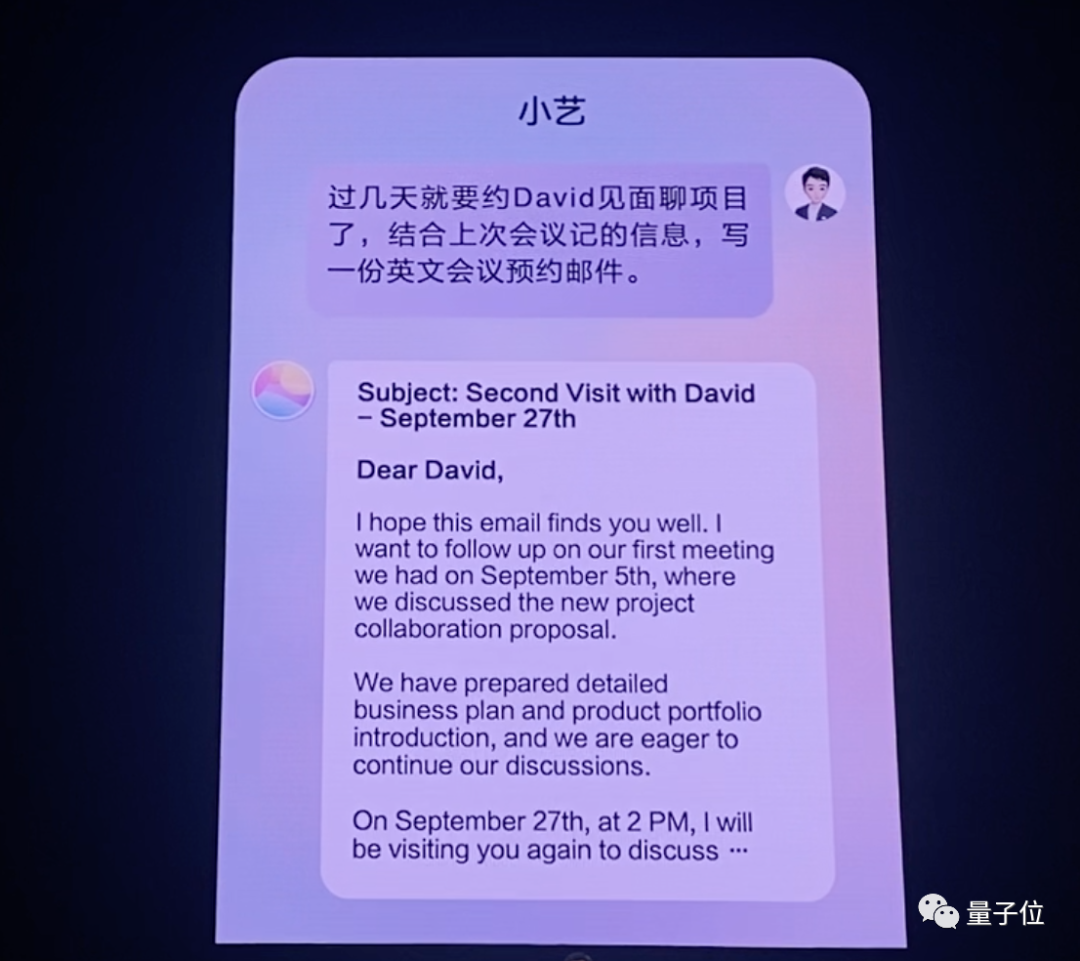

With just one Chinese command, Huawei Xiaoyi can write an English email:

You can use AI to turn your own photos into images in different styles:

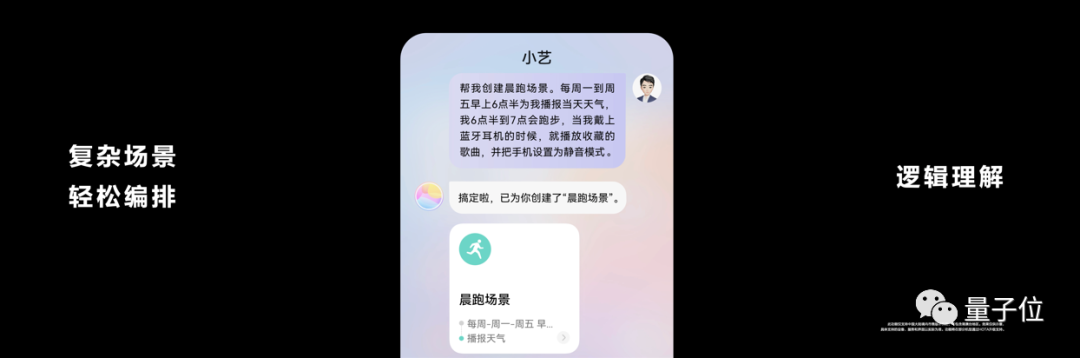

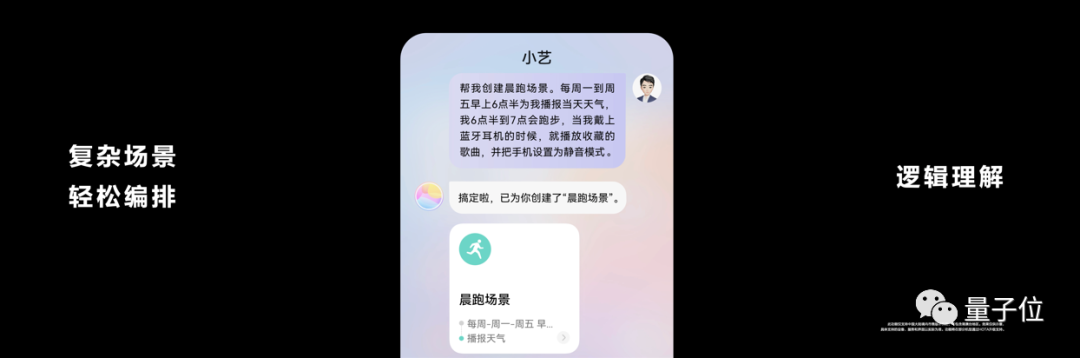

You can even give it a long string of instructions to set up a complex scene, and it understands them with ease:

This is the brand new Xiaoyi in Huawei HarmonyOS 4.

It is built on the Huawei Pangu L0 base model, which was fine-tuned on a large amount of scenario data and refined into an L1-level dialogue model.

It can handle tasks such as text generation, knowledge search, data summarization, intelligent scheduling, and understanding vague or complex intents.

It can also invoke various app services, delivering intelligence at the level of the whole system.

So, what exactly can the new Huawei Xiaoyi do?

Smarter, more capable, more considerate

Building on large models, Huawei Xiaoyi has been upgraded mainly in three areas:

- Smart interaction

- Efficient productivity

- Personalized services

Specific enhancements include more natural conversation, knowledge Q&A, searching for local lifestyle services, recognizing on-screen content through dialogue, and summarization plus text and image generation.

First, the smart-interaction upgrade makes conversations and interactions more natural and fluid.

Huawei Xiaoyi can understand colloquial speech, grasp vague intents, and carry out complex commands.

If you can't find the new wallpaper setting, or don't even know what the feature is called, you can simply ask:

How do I set a wallpaper that changes with the real-time weather?

Or give it a complex command containing several requests:

Find a highly rated seafood restaurant near Songshan Lake, preferably with a discount package suitable for four people.

Xiaoyi then calls the relevant services to find a restaurant that meets all of these requirements.
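One way to picture this step is a minimal sketch in which the request has already been turned into structured slots, and a list of candidate restaurants is then filtered and ranked. Everything here is hypothetical: the `Restaurant` and `Constraints` classes, the 4.0 rating threshold standing in for "highly rated", and the sample data are invented for illustration, and Huawei has not described how Xiaoyi actually queries its service partners. The point is the distinction between hard requirements ("seafood", "near Songshan Lake") and the soft preference signaled by "preferably".

```python
from dataclasses import dataclass


@dataclass
class Restaurant:
    name: str
    cuisine: str
    area: str
    rating: float
    deal_party_size: int  # party size covered by a discount package, 0 if none


@dataclass
class Constraints:
    """Slots a model might extract from the spoken request (hypothetical schema)."""
    cuisine: str
    area: str
    min_rating: float  # assumed numeric stand-in for "highly rated"
    party_size: int


def rank(candidates: list[Restaurant], c: Constraints) -> list[Restaurant]:
    # Hard constraints: cuisine, location and a minimum rating must all hold
    hard = [r for r in candidates
            if r.cuisine == c.cuisine and r.area == c.area and r.rating >= c.min_rating]
    # "Preferably" marks a soft constraint: matching deals sort first, then by rating
    return sorted(hard, key=lambda r: (r.deal_party_size != c.party_size, -r.rating))


# Invented sample data, not real restaurants
request = Constraints(cuisine="seafood", area="Songshan Lake", min_rating=4.0, party_size=4)
candidates = [
    Restaurant("A", "seafood", "Songshan Lake", 4.8, 0),
    Restaurant("B", "seafood", "Songshan Lake", 4.5, 4),
    Restaurant("C", "seafood", "Songshan Lake", 3.9, 4),
    Restaurant("D", "hot pot", "Songshan Lake", 4.9, 4),
]
print([r.name for r in rank(candidates, request)])  # ['B', 'A']
```

Treating the discount package as a sort key rather than a filter means the assistant still returns good seafood restaurants when none of them offers a suitable deal.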

Xiaoyi also has multimodal capabilities and can understand image content. This means that steps users would normally carry out by hand after looking at something can now be handled by Xiaoyi.

For example, show it an invitation and say:

Navigate to the address on the map.

It can extract the address information from the image and call the map service for navigation.

Or ask it to save the contact information on the invitation; it reads the text in the image accurately.

Furthermore, complex task arrangements can now be delegated to Xiaoyi instead of being configured by hand, step by step.

For example, you can ask it to set up a morning running scene:

Help me create a morning running scene. Report the weather every Monday to Friday at 6:30 a.m. When I put on my Bluetooth earphones, play my favorite songs and set the phone to silent mode.

Xiaoyi understands this long list of requirements, calls the relevant functions, and decides whether to run certain operations based on the phone's state (for example, whether Bluetooth earphones are connected).
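To make the idea concrete, here is a minimal sketch of how the morning-run request could be represented after parsing: one rule triggered by time, one triggered by device state. The `PhoneState` and `Rule` classes and the action names such as `report_weather` are hypothetical, since Huawei has not published Xiaoyi's scene format. For simplicity the sketch evaluates the rules against a single state snapshot instead of subscribing to real system events.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Callable


@dataclass
class PhoneState:
    """Hypothetical snapshot of the device state the assistant can read."""
    now: datetime
    bluetooth_earphones_connected: bool


@dataclass
class Rule:
    name: str
    condition: Callable[[PhoneState], bool]  # when the rule should fire
    actions: list[str]                       # hypothetical action identifiers


def weekday_at_630(state: PhoneState) -> bool:
    # weekday(): Monday == 0 ... Friday == 4
    return state.now.weekday() < 5 and (state.now.hour, state.now.minute) == (6, 30)


def earphones_connected(state: PhoneState) -> bool:
    return state.bluetooth_earphones_connected


MORNING_RUN = [
    Rule("weather_report", weekday_at_630, ["report_weather"]),
    Rule("run_music", earphones_connected, ["play_favorite_songs", "set_silent_mode"]),
]


def due_actions(rules: list[Rule], state: PhoneState) -> list[str]:
    """Collect the actions of every rule whose condition holds for this state."""
    actions: list[str] = []
    for rule in rules:
        if rule.condition(state):
            actions.extend(rule.actions)
    return actions


# 7 August 2023 was a Monday
monday_630 = PhoneState(datetime(2023, 8, 7, 6, 30), bluetooth_earphones_connected=False)
print(due_actions(MORNING_RUN, monday_630))  # ['report_weather']
```

Keeping each condition as a small function makes the "judge whether to execute" part explicit: the earphone rule simply does nothing until its condition becomes true.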

Second, thanks to large models, Xiaoyi now offers more efficient productivity tools.

It handles viewing, reading, and writing with ease.

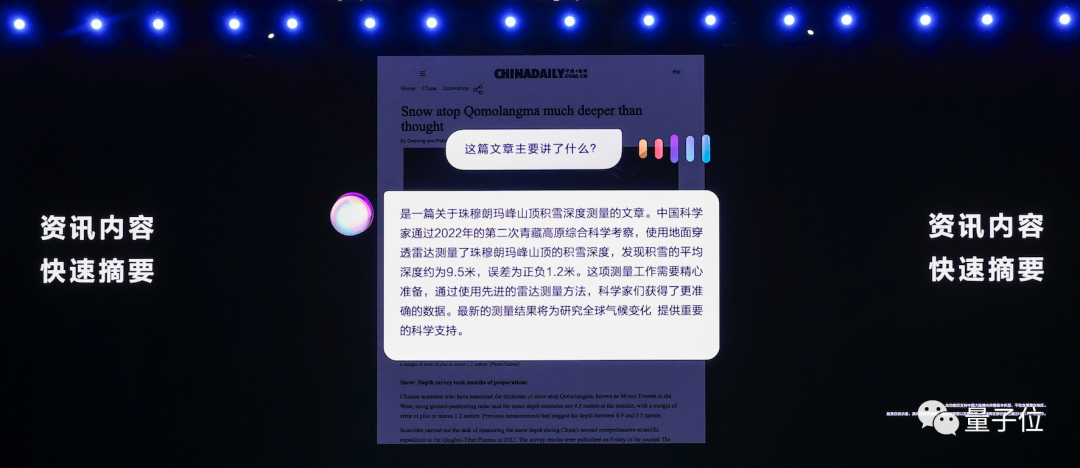

For example, show it an English article and ask what it is about.

Xiaoyi can provide a simple and concise explanation in Chinese.

If you have previously asked it to remember some information, it can draw on that memory to generate content:

I'm meeting David in a few days to discuss the project. Based on the information I remembered from the last meeting, write an English meeting appointment email.

As mentioned earlier, Xiaoyi can also use AI visual capabilities to create photos in various styles.

Finally, as a smart assistant, Xiaoyi now supports more personalized services and understands you better.

It can serve as a notebook or memo pad, and you can record small tasks simply by speaking.

Huawei stated that all memory content is stored only with the user's authorization, and that user privacy is fully protected.
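The authorization requirement can be illustrated with a small sketch: a memory store that refuses to save anything until the user grants consent, and that lets a later request pull remembered notes back out by keyword. The class and method names are invented, and so is the choice to wipe stored notes when consent is revoked. Huawei has not described how Xiaoyi stores or retrieves memories, and a real assistant would more likely use semantic retrieval than substring matching.

```python
class ConsentRequiredError(Exception):
    """Raised when a write is attempted without the user's authorization."""


class MemoryStore:
    """Hypothetical consent-gated memory for an assistant."""

    def __init__(self) -> None:
        self._consent = False
        self._notes: list[str] = []

    def grant_consent(self) -> None:
        self._consent = True

    def revoke_consent(self) -> None:
        # Design choice for this sketch: revoking consent also deletes stored notes
        self._consent = False
        self._notes.clear()

    def remember(self, note: str) -> None:
        if not self._consent:
            raise ConsentRequiredError("user has not authorized memory")
        self._notes.append(note)

    def recall(self, keyword: str) -> list[str]:
        # Naive case-insensitive substring match over stored notes
        kw = keyword.lower()
        return [n for n in self._notes if kw in n.lower()]


store = MemoryStore()
store.grant_consent()
store.remember("Meeting with David: project budget due Friday")
print(store.recall("david"))  # ['Meeting with David: project budget due Friday']
```

A request such as drafting the email to David could then feed whatever `recall` returns into the prompt for the model.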

In addition, Xiaoyi can now recognize more of the scenarios users encounter frequently and offer one-stop combined suggestions, saving many manual searches.

Take outbound travel. Before departure, Xiaoyi can remind users of the latest exchange rates and currency-exchange options and instantly pull up travel guides for the destination. After arrival, it can tell users which luggage carousel to go to, activate overseas data with one tap, and quickly bring up real-time translation.

According to reports, the new Xiaoyi's smart-scenario capabilities have tripled, and its number of POIs (points of interest) has grown sevenfold, covering major restaurants, shopping outlets, business districts, airports, and high-speed rail stations.

In summary, the new Xiaoyi has not only gained the latest AIGC capabilities but has also addressed some shortcomings for which mobile voice assistants have long been criticized, such as a lack of memory, stiff conversation, and an inability to understand colloquial speech.

All of this is of course thanks to the support of large models, but how exactly did Xiaoyi do it?

Xiaoyi embraces large models

Xiaoyi is built on the base models of Huawei's Pangu series.

In July of this year, Huawei officially released Pangu large model 3.0 and proposed a 3-layer model architecture.

- L0: foundation models, covering natural language, vision, multimodal, prediction, and scientific computing;

- L1: N industry models, for sectors such as government affairs, finance, manufacturing, mining, and meteorology;

- L2: more refined models for specific scenarios, offering "out-of-the-box" model services.

The largest version of the L0 foundation model has 100 billion parameters and was pretrained on more than 3 trillion tokens.

Starting from the Pangu L0 base model, the Xiaoyi team built a large amount of data for consumer scenarios, fine-tuned the model on it, and refined it into an L1-level dialogue model.

During fine-tuning, the team incorporated the main data types relevant to device consumers, such as dialogue, travel guides, device control, and everyday life.

This covers the range of knowledge that comes up in ordinary users' daily conversations and improves the factual accuracy, timeliness, and safety and compliance of the model's responses.

However, large models are notoriously hard to deploy and slow to respond because of their size.

For deployment, Huawei keeps strengthening device-cloud collaboration for large models. An on-device (edge-side) model first preprocesses the user's request and context, then sends the preprocessed request to the cloud.

This approach combines the fast response of the on-device model with the higher Q&A and response quality of the cloud model, while better protecting users' private data.
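The device-side half of that split can be sketched as a function that runs before anything leaves the phone. The article does not say what Huawei's on-device preprocessing actually does, so this minimal sketch assumes two plausible steps: trimming the conversation history to the last few turns and masking phone numbers with a regular expression. The function names, the regex, and the payload shape are all hypothetical.

```python
import re

# Assumed privacy rule: mask 11-digit mobile numbers such as 138-1234-5678 or 13812345678
PHONE_RE = re.compile(r"\b\d{3}[- ]?\d{4}[- ]?\d{4}\b")


def mask_private(text: str) -> str:
    """Replace phone numbers with a placeholder before the text leaves the device."""
    return PHONE_RE.sub("[PHONE]", text)


def preprocess_on_device(request: str, history: list[str], max_turns: int = 3) -> dict:
    """Hypothetical on-device step: keep recent context and mask private data.

    The returned dict is what would be sent to the cloud model.
    """
    # history[-0:] would return the whole list, so guard the zero case explicitly
    recent = history[-max_turns:] if max_turns > 0 else []
    return {
        "request": mask_private(request),
        "context": [mask_private(turn) for turn in recent],
    }


payload = preprocess_on_device(
    "Text 138-1234-5678 that dinner is at seven",
    ["hi", "book a table", "for four", "near the lake"],
)
print(payload["request"])  # Text [PHONE] that dinner is at seven
```

Doing the masking on the device means the cloud model never sees the raw number, which is one concrete reading of the privacy benefit the article describes.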

To reduce inference latency, the Huawei Xiaoyi team made systematic engineering optimizations across the entire chain, from the underlying chip to the inference framework, model operators, and input/output length.

By breaking down the latency of each module, the R&D team set clear optimization targets for each part and reduced latency through operator fusion, memory optimization, pipeline optimization, and similar techniques.
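Decomposing end-to-end latency by module is mostly careful bookkeeping. This minimal sketch times each stage of a pipeline and reports each stage's share of the total, the kind of breakdown that shows where optimization effort should go first. The stage names and sleep durations are placeholders standing in for real work, not measurements of Xiaoyi.

```python
import time
from typing import Callable


def profile_pipeline(stages: list[tuple[str, Callable[[], None]]]) -> dict[str, float]:
    """Run each stage once and return its wall-clock latency in milliseconds."""
    timings: dict[str, float] = {}
    for name, fn in stages:
        start = time.perf_counter()
        fn()
        timings[name] = (time.perf_counter() - start) * 1000.0
    return timings


def shares(timings: dict[str, float]) -> list[tuple[str, float]]:
    """Return (stage, fraction of total latency), slowest stage first."""
    total = sum(timings.values())
    return sorted(((n, t / total) for n, t in timings.items()),
                  key=lambda item: item[1], reverse=True)


# Hypothetical stages; sleeps simulate work of different durations
stages = [
    ("tokenize", lambda: time.sleep(0.002)),
    ("inference", lambda: time.sleep(0.060)),
    ("postprocess", lambda: time.sleep(0.005)),
]

for name, share in shares(profile_pipeline(stages)):
    print(f"{name:12s} {share:6.1%}")
```

In a real system each stage would be measured many times and summarized with percentiles, since single runs are noisy.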

The lengths of the prompt and the output also affect a large model's inference speed.

Huawei analyzed and compressed the prompts and output formats for each scenario in detail, ultimately cutting inference latency in half.
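A back-of-the-envelope model shows why prompt and output length matter. In this sketch, a request costs a small amount per prompt token (the prompt is processed in parallel during prefill) plus a larger amount per output token (tokens are generated one at a time during decoding). The per-token costs and token counts are invented for illustration and are not Huawei figures. The linear model also ignores effects such as attention cost growing with context length, batching, and caching.

```python
def estimate_latency_ms(prompt_tokens: int, output_tokens: int,
                        prefill_ms_per_token: float,
                        decode_ms_per_token: float) -> float:
    """Crude linear latency model for a single request.

    Prefill reads the whole prompt in parallel, so its per-token cost is small;
    decode emits output tokens one by one, so its per-token cost is large.
    """
    return prompt_tokens * prefill_ms_per_token + output_tokens * decode_ms_per_token


# Hypothetical per-token costs, chosen only for illustration
PREFILL_MS, DECODE_MS = 0.5, 20.0

before = estimate_latency_ms(1200, 200, PREFILL_MS, DECODE_MS)  # 600 + 4000
after = estimate_latency_ms(600, 100, PREFILL_MS, DECODE_MS)    # 300 + 2000
print(before, after, after / before)  # 4600.0 2300.0 0.5
```

With these made-up numbers, output decoding accounts for about 87% of the time (4,000 of 4,600 ms), so in this model trimming a verbose output format buys far more than trimming the prompt by the same number of tokens.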

Architecturally, integrating large models into Huawei Xiaoyi is not simply about enhancing chat, AIGC, or question answering; it is a systematic upgrade with the large model at its core.

In other words, it makes the large model the "brain" of the system.

The underlying logic is to route each user task to the appropriate system so that every system does its part, improving the experience in complex scenarios.

A typical Xiaoyi dialogue can be divided into three steps:

Step one: receive the user's question and decide how to handle it based on the context and Xiaoyi's memory.

Step two: call different capabilities depending on the request type, namely meta-service retrieval, creative generation, or knowledge retrieval.

If the request involves meta-services, such as asking for nearby restaurants suitable for a group meal, Xiaoyi has to call a food app's services by generating an API call, and then receives a response from the service provider based on its recommendation mechanism.

If the user asks a knowledge question, such as how many parameters the Pangu large model has, the system queries search engines, domain-specific knowledge bases, and a vector knowledge base, then integrates the results to generate an answer.

If the request is a generative task, the large model answers using its own capabilities.

In the final step, all generated answers undergo risk assessment before being returned to the user.
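The three steps can be sketched as a small router: classify the request, dispatch it to a matching handler, and run a risk check before replying. This is a minimal sketch under heavy assumptions. The keyword classifier stands in for the model's intent analysis (and ignores context and memory), the handlers return placeholder strings instead of calling real app services, search engines, or vector stores, and the one-word blocklist stands in for a real risk-assessment model. None of these names come from Huawei.

```python
from typing import Callable

BLOCKLIST = {"password"}  # hypothetical risk terms; a real system would use a classifier


def classify(query: str) -> str:
    """Step 1 stand-in: a keyword heuristic where Xiaoyi would use the model plus context."""
    q = query.lower()
    if any(word in q for word in ("restaurant", "book", "navigate")):
        return "meta_service"
    if q.startswith(("what", "how many", "who", "when")):
        return "knowledge"
    return "generative"


def call_meta_service(query: str) -> str:
    return f"[service results for: {query}]"  # placeholder for an app/API call


def retrieve_knowledge(query: str) -> str:
    # Placeholder: would merge search-engine, domain-KB and vector-store results
    sources = ["search", "domain_kb", "vector_kb"]
    return f"[answer to '{query}' from {', '.join(sources)}]"


def generate(query: str) -> str:
    return f"[generated text for: {query}]"  # placeholder for the model itself


HANDLERS: dict[str, Callable[[str], str]] = {
    "meta_service": call_meta_service,
    "knowledge": retrieve_knowledge,
    "generative": generate,
}


def passes_risk_check(text: str) -> bool:
    return not any(term in text.lower() for term in BLOCKLIST)


def answer(query: str) -> str:
    route = classify(query)              # step 1: decide how to handle the request
    result = HANDLERS[route](query)      # step 2: call the matching capability
    if not passes_risk_check(result):    # step 3: risk assessment before replying
        return "Sorry, I can't help with that."
    return result


print(answer("Find a restaurant near Songshan Lake"))
# [service results for: Find a restaurant near Songshan Lake]
```

Keeping the risk check in `answer` rather than in each handler mirrors the article's point that every generated answer passes through the same final gate, whatever path produced it.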

Beyond this, the team refined many details and did a series of low-level development work to ensure reliable Q&A and task execution.

On the data side, since its launch in 2017, Xiaoyi has built up considerable knowledge of users' conversational habits. On top of this, Huawei built a large corpus of different kinds of expressions, covering as many written and spoken forms as possible, so that the large model becomes proficient in all of them during pretraining.

To better evaluate and improve Xiaoyi's capabilities, Huawei built a comprehensive test dataset. It is used not only to evaluate the capabilities of existing open large models but also to guide the construction of Xiaoyi's data and capabilities based on the results.

Teaching Xiaoyi to use tools is also challenging. Device control requires the large model to generate complex structured text hundreds of tokens long, and any formatting error prevents the central control system from parsing it and connecting to the device.

To meet such formatting standards, Huawei used prompts to learn the large model's "temperament" and also strengthened the model's coding ability, which improved its format compliance to nearly 100%.
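The article attributes the near-100% format compliance to prompting and stronger coding ability in the model itself. A common complementary safeguard, shown here as an assumption rather than as Huawei's method, is to validate every generated command before it reaches the control system and to re-prompt when it fails. In this sketch, the three-key JSON schema and the `model` callable are hypothetical; the article does not publish the real command format.

```python
import json
from typing import Callable, Optional

# Hypothetical command schema; the real device-control format is not public
REQUIRED_KEYS = {"device", "action", "params"}


def parse_command(text: str) -> Optional[dict]:
    """Return the command if it is valid JSON with the expected shape, else None."""
    try:
        cmd = json.loads(text)
    except json.JSONDecodeError:
        return None
    if not isinstance(cmd, dict) or not REQUIRED_KEYS.issubset(cmd):
        return None
    if not isinstance(cmd["params"], dict):
        return None
    return cmd


def generate_command(model: Callable[[str], str], prompt: str, max_tries: int = 3) -> dict:
    """Ask the model for a command; on a malformed reply, append a correction note and retry."""
    for _ in range(max_tries):
        cmd = parse_command(model(prompt))
        if cmd is not None:
            return cmd
        prompt += ("\nYour previous reply was not valid JSON with the keys "
                   "device, action and params. Reply with JSON only.")
    raise ValueError("model never produced a well-formed command")


# A fake model whose first reply is truncated, then correct
replies = iter([
    '{"device": "lamp", "action": "on"',
    '{"device": "lamp", "action": "on", "params": {"brightness": 80}}',
])
print(generate_command(lambda p: next(replies), "Turn on the lamp at 80% brightness"))
```

A validation gate like this cannot make an unreliable model usable on its own; it only catches the residual errors, which is why improving the model's native format compliance matters first.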

For complex scenarios, Xiaoyi first has the large model fully learn and understand how each tool is used, and then reasons over the tools.

Reportedly, the team improved device control from completely unusable to a usability rate of over 80%.

In addition, because HarmonyOS is Huawei's own native system, existing APIs can also be optimized for the model. This reverse adaptation lets the large model's strengths be used more fully.

Targeting all scenarios, not just phones

So, why is Huawei able to rapidly deploy large model capabilities to smart assistants?

Long-term investment in fundamental research and development is indispensable, but one more point is worth noting:

Huawei started from real-world scenarios to decide how to integrate large models with its smart assistant, and even with the entire operating system.

In Huawei's own words:

Talk is cheap. Show me the Demo.

Many of the experiences shown above came from scenarios that Huawei R&D team members noticed in their own daily lives.

For example, some people like to catch up on news while commuting by car, and long news pieces are inconvenient to listen to, so a news summary feature was added to Huawei Xiaoyi.

Others have found themselves at a loss for words when writing shopping reviews or birthday wishes, so Huawei Xiaoyi provides a copywriting function.

This focus on the scenario experience is a natural advantage of HarmonyOS. From the start, HarmonyOS has never been limited to phones; it has targeted multiple kinds of devices and all scenarios.

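It has now built a "1+8+N" all-scenario ecosystem: the phone at the center ("1"), eight categories of Huawei devices such as tablets, PCs, watches, and earphones ("8"), and a wide range of ecosystem IoT devices ("N").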

Huawei Xiaoyi is already deployed on the "1+8" devices, and Huawei plans to gradually bring the large-model-powered Xiaoyi to consumer experiences across all of its all-scenario devices.

As an AI-driven smart assistant, Xiaoyi has kept adding AI capabilities since its debut, such as AI subtitles and Xiaoyi Reading. The R&D team behind it has always focused on exploring new possibilities for AI and smart assistants.

Reportedly, the team noticed last year that a billion-scale pretrained model combined with prompting techniques could already deliver very good text understanding and generation, suitable for casual conversation, Q&A, and task-oriented dialogue.

With the latest wave of AI, RLHF (reinforcement learning from human feedback) has significantly improved large models, and the door to industrial deployment has officially opened.

Since the emergence of the generative AI trend this year, many applications have chosen to integrate large model capabilities and embed smart assistants.

However, as one of the companies that best understands operating systems, Huawei chose to approach the problem at a more fundamental level, using large models to reshape the OS itself.

A more fundamental approach means a more thorough and comprehensive approach.

But for R&D, the challenge is also greater. It requires not only a solid base model but also systematic integration and optimization, along with a deep understanding of scenarios and user needs.

Consider the facts: Huawei was one of the first Chinese companies to develop large model capabilities; it has built full-stack AI development capabilities; HarmonyOS runs on more than 700 million devices…

It is therefore easy to see why Huawei Xiaoyi was able to integrate large model capabilities so quickly, making HarmonyOS 4 the first operating system to fully integrate large models.

As one of the world's most closely watched operating systems, HarmonyOS embracing large models may also usher in a new paradigm, letting anyone experience large model capabilities simply by picking up their phone, rather than only imagining them.

Currently, Huawei has announced the Xiaoyi test plan:

The new Xiaoyi will open for invitation-only testing at the end of August this year and will later roll out as an OTA update to some models running HarmonyOS 4.0 or later; specific upgrade plans will be announced separately.

Interested readers can stay tuned for more information.

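Disclaimer: This article represents only the author's personal views and does not represent the position or views of this platform. It is provided for information sharing only and does not constitute investment advice to anyone. Any dispute between users and the author has nothing to do with this platform. If any article or image on this page infringes your rights, please send proof of your rights and proof of identity to support@aicoin.com, and the platform's staff will review it.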