Author: a16z New Media

Compiled by: Block unicorn

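As investors, our job is to understand every corner of the tech industry so that we can see where it is headed. That is why every December we ask our investment teams to share one big idea they believe tech companies will tackle in the coming year.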

Today we are sharing ideas from our Infrastructure, Growth, Bio + Health, and Speedrun teams. Check back tomorrow for ideas from our other teams.

Infrastructure

Jennifer Li: How startups will tame the chaos of multimodal data

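Unstructured, multimodal data has long been enterprises' biggest bottleneck, and also their biggest untapped treasure. Every company is drowning in PDFs, screenshots, videos, logs, emails, and semi-structured data. Models keep getting smarter, but their inputs keep getting messier: RAG systems break down, agents fail in subtle and costly ways, and critical workflows still depend heavily on manual QA. The binding constraint for AI companies is now data entropy. In the unstructured world, freshness, structure, and truth decay continuously, and that world is where 80% of enterprise knowledge now lives.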

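That is why untangling unstructured data is a once-in-a-generation opportunity. Enterprises need a continuous way to clean, structure, validate, and govern their multimodal data so that downstream AI workloads actually work. The use cases are everywhere: contract analysis, onboarding, claims processing, compliance, customer service, procurement, engineering search, sales enablement, analytics pipelines, and every agent workflow that depends on reliable context. Startups that build platforms to extract structure from documents, images, and video, reconcile conflicts, repair pipelines, or keep data fresh and retrievable will hold the keys to the kingdom of enterprise knowledge and process.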

Joel de la Garza: AI breathes new life into cybersecurity hiring

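For most of the past decade, the biggest challenge facing chief information security officers (CISOs) has been hiring. From 2013 to 2021, unfilled cybersecurity positions grew from fewer than 1 million to 3 million. One reason is that security teams hired highly skilled engineers and then had them spend their days on tedious Level 1 security work, such as reviewing logs, that nobody wants to do. The root of the problem is that security teams created this drudgery themselves: they bought products that detect everything, which meant their people had to review everything, which in turn created an artificial labor shortage. It is a vicious cycle.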

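In 2026, AI will break this cycle and close the hiring gap by automating much of the repetitive work that security teams do. Anyone who has worked on a large security team knows that half the work could easily be automated, but when the work is piling up, it is hard to figure out what to automate first. AI-native tools that help security teams answer that question will finally free them to do what they actually want to do: hunt down bad actors, build new systems, and fix vulnerabilities.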

Malika Aubakirova: Agent-native infrastructure becomes table stakes

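In 2026, the biggest infrastructure shock will come not from outside the enterprise but from within it. We are moving from predictable, low-concurrency "human-speed" traffic to recursive, bursty, massive "agent-speed" workloads.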

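Today's enterprise backends were designed for a 1:1 ratio of human actions to system responses. They are not architected for recursive fan-out, in which a single agentic "goal" triggers 5,000 subtasks, database queries, and internal API calls within milliseconds. When an agent tries to refactor a codebase or remediate security logs, it does not look like a user. To a traditional database or rate limiter, it looks like a DDoS attack.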
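
To make the rate-limiter point concrete, here is a minimal Python sketch (an illustration, not something from the article) of a classic token-bucket limiter sized for human-speed traffic. The capacity of 10 requests, the refill rate of 2 requests per second, and the names `TokenBucket` and `count_allowed` are all illustrative assumptions. Steady traffic of one request per second always passes, while a burst of 5,000 calls arriving within one millisecond is almost entirely rejected, which is how such a limiter would treat an attack.

```python
class TokenBucket:
    """Classic token-bucket rate limiter driven by an explicit clock (seconds)."""

    def __init__(self, capacity: float, refill_per_sec: float) -> None:
        self.capacity = capacity              # largest burst allowed at once
        self.refill_per_sec = refill_per_sec  # sustained request rate
        self.tokens = capacity                # bucket starts full
        self.last = 0.0                       # timestamp of the previous check

    def allow(self, now: float) -> bool:
        # Add tokens for the time elapsed since the last check, capped at capacity.
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.last = now
        # Spend one token per request; reject when the bucket is empty.
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def count_allowed(timestamps: list[float]) -> int:
    # Hypothetical limits tuned for human-speed traffic.
    bucket = TokenBucket(capacity=10, refill_per_sec=2)
    return sum(bucket.allow(t) for t in timestamps)


# Human-speed traffic: one request per second for a minute.
human = [float(s) for s in range(60)]
# Agent-speed traffic: one goal fans out into 5,000 calls within 1 ms.
agent = [i * 0.001 / 5000 for i in range(5000)]

print(count_allowed(human))  # 60
print(count_allowed(agent))  # 10
```

Only the initial burst allowance of 10 gets through for the agent; the roughly 0.002 tokens refilled during that millisecond never add up to another request.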

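Building systems for the agents of 2026 means redesigning the control plane. We will see the rise of "agent-native" infrastructure. The next generation of infrastructure must treat the "thundering herd" as the default state. Cold-start times must shrink, latency variance must drop sharply, and concurrency limits must rise manyfold. The bottleneck is coordination: routing, locking, state management, and policy enforcement across massively parallel execution. Only platforms that can absorb the resulting flood of tool executions will win.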
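
One small piece of that coordination problem is capping how many calls a single goal may have in flight at once. The Python sketch below is an illustration, not a design from the article: it fans one goal out into 5,000 subtasks with `asyncio.gather` and bounds concurrency with a shared `asyncio.Semaphore`. `call_backend` and `run_goal` are hypothetical names, `asyncio.sleep(0)` stands in for a real database query or internal API call, and the limit of 50 is an arbitrary assumption.

```python
import asyncio


async def call_backend(task_id: int, sem: asyncio.Semaphore, stats: dict[str, int]) -> int:
    # Hypothetical subtask: wait for a free slot, then do the "work".
    async with sem:
        stats["in_flight"] += 1
        stats["peak"] = max(stats["peak"], stats["in_flight"])
        await asyncio.sleep(0)  # stand-in for real I/O; yields to other tasks
        stats["in_flight"] -= 1
        return task_id * 2


async def run_goal(n_subtasks: int, max_concurrency: int) -> tuple[list[int], int]:
    # One semaphore shared by every subtask spawned from the same goal.
    sem = asyncio.Semaphore(max_concurrency)
    stats = {"in_flight": 0, "peak": 0}
    # gather() schedules all subtasks at once and returns results in input order.
    results = await asyncio.gather(
        *(call_backend(i, sem, stats) for i in range(n_subtasks))
    )
    return list(results), stats["peak"]


results, peak = asyncio.run(run_goal(n_subtasks=5000, max_concurrency=50))
print(len(results), peak)
```

Unlike a limiter that rejects excess requests, a semaphore queues them, so the whole fan-out still completes while the backend never sees more than `max_concurrency` calls from this goal at the same time.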

Justine Moore: Creative tools go multimodal

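We now have the building blocks for telling stories with AI: generative voice, music, images, and video. But for anything beyond one-off clips, getting the output you want is often time-consuming and frustrating, and sometimes impossible, especially if you want anything close to the level of control a traditional director has.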

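Why can't we feed a model a 30-second video and have it continue the scene with new characters created from reference images and voices? Or reshoot a video so we can see the scene from a different angle, or make the motion match a reference video?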

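2026 is the year AI goes multimodal. You will be able to give a model reference content in any form and use it to create new content or edit existing scenes. We have already seen early products such as Kling O1 and Runway Aleph. But there is still a lot of work to do: we need innovation at both the model layer and the application layer.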

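Content creation is one of AI's killer use cases, and I expect many successful products to emerge across use cases and customer segments, from meme makers to Hollywood directors.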

Jason Cui: The AI-native data stack keeps evolving

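Over the past year, we have seen consolidation in the "modern data stack" as data companies moved from specializing in areas such as ingestion, transformation, and compute toward bundled, unified platforms. Examples include the Fivetran/dbt merger and the continued rise of unified platforms such as Databricks.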

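Although the ecosystem has clearly matured, we are still in the early days of truly AI-native data architecture. We are excited about the ways AI keeps transforming multiple parts of the data stack, and we are starting to see data infrastructure and AI infrastructure become inseparable.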

Here are some of the directions we are excited about:

How data will flow into high-performance vector databases alongside traditional structured data

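How AI agents will solve the "context problem": continuous access to the right business-data context and semantic layers, so they can power robust applications such as talking to your data, while ensuring those applications always use the correct business definitions across multiple systems of record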

How traditional business intelligence tools and spreadsheets will change as data workflows become more agentic and automated

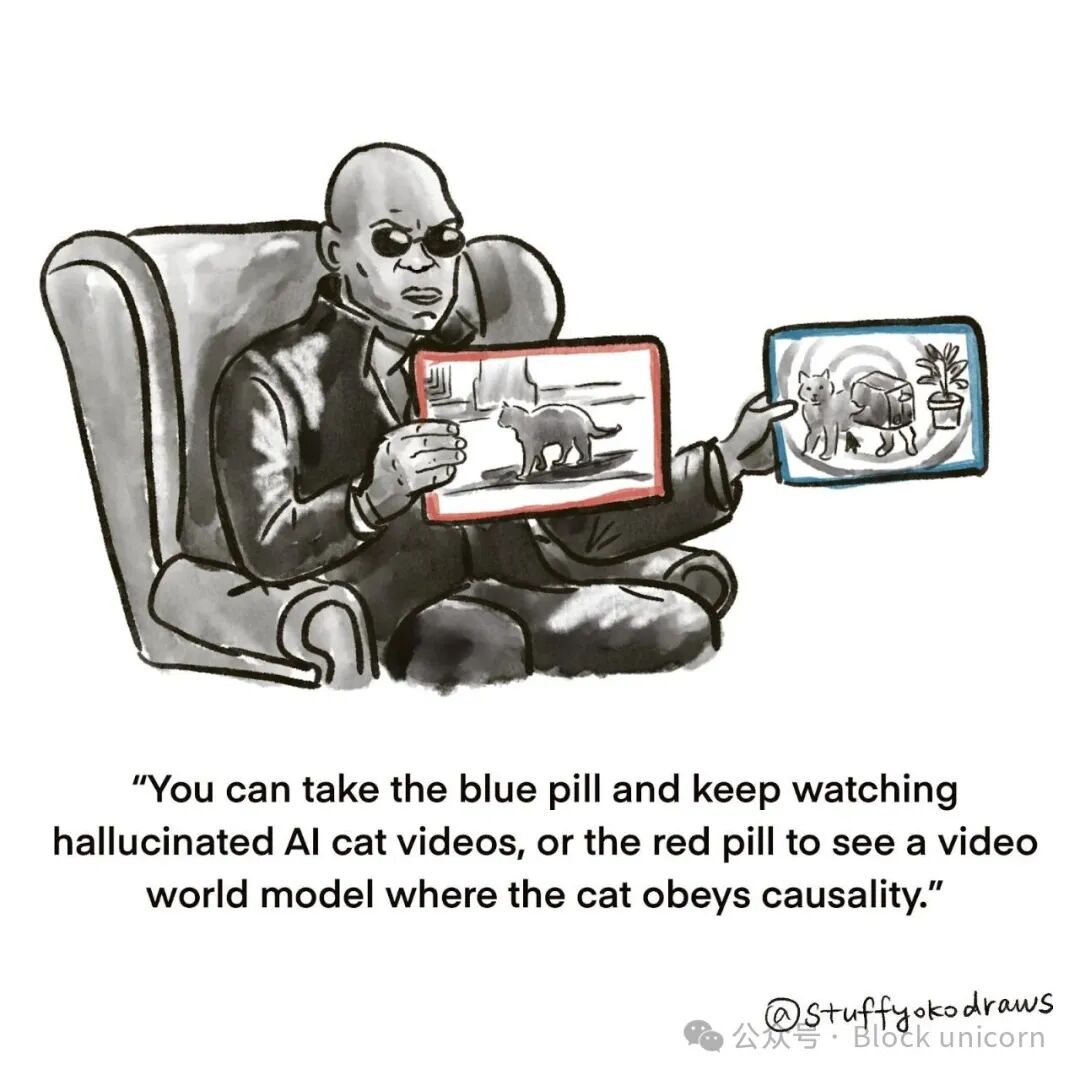

Yoko Li: The year we step inside video

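In 2026, video will no longer be something we passively watch but something closer to a space we can actually step into. Video models will finally understand time, remember what they have already shown, respond to our actions, and maintain the kind of reliable consistency the real world has. Instead of generating a few seconds of disjointed footage, these systems will sustain characters, objects, and physics long enough for actions to matter and for their consequences to play out. This shift turns video into a medium that can keep evolving: a space where robots can practice, games can evolve, designers can prototype, and agents can learn by doing. What emerges looks less like a video clip and more like a living environment, one that begins to close the gap between perception and action. For the first time, it will feel as if we can step inside the videos we generate.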

Growth

Sarah Wang: Systems of record lose their dominance

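In 2026, the real disruption in enterprise software is that systems of record will finally lose their dominance. AI is collapsing the distance between intent and execution: models can now read, write, and reason over operational data directly, turning IT service management (ITSM) and customer relationship management (CRM) systems from passive databases into autonomous workflow engines. As recent advances in reasoning models and agentic workflows compound, these systems will not only respond but also anticipate, coordinate, and execute end-to-end processes. The interface becomes a dynamic agent layer, while the traditional system of record recedes into the background as a commodity persistence layer, and its strategic advantage passes to whoever controls the agentic execution environment that employees actually use every day.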

Alex Immerman: Vertical AI evolves from information retrieval and reasoning to multiplayer

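AI has driven unprecedented growth in vertical software. Healthcare, legal, and real estate companies have reached more than $100 million in annual recurring revenue (ARR) within just a few years, with finance and accounting close behind. The evolution began with information retrieval: finding, extracting, and summarizing the right information. 2025 brought reasoning: Hebbia analyzes financial statements and builds models, Basis reconciles trial balances across systems, and EliseAI diagnoses maintenance issues and dispatches the right vendor.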

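2026 will unlock multiplayer mode. Vertical software benefits from domain-specific interfaces, data, and integrations, but vertical work is inherently multi-party. If agents are going to represent the workforce, they need to collaborate. From buyers and sellers to tenants, advisors, and vendors, each party has its own permissions, workflows, and compliance requirements, and only vertical software understands them.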

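Today, each party uses AI in isolation, which creates handoffs with no one holding authority. The AI analyzing a purchase agreement does not talk to the CFO to adjust the model. The maintenance AI does not know what field staff promised the tenant. The multiplayer shift is about coordination across stakeholders: routing tasks to functional specialists, maintaining context, and syncing changes. A counterparty's AI negotiates within set parameters and flags asymmetries for human review. A senior partner's markups train the whole firm's system. Tasks executed by AI will complete with higher success rates.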

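As the value of multiplayer and multi-agent collaboration grows, switching costs rise with it. We will see the network effects that AI applications have so far failed to achieve: the collaboration layer becomes the moat.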

Stephenie Zhang: Designing for agents, not humans

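In 2026, people will start interacting with the web through agents. What was optimized for human consumption will no longer matter as much when agents are the ones consuming it.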

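For years, we have optimized for predictable human behavior: ranking high in Google search results, landing near the top of Amazon search results, and leading with a short "TL;DR". In high school I took a journalism class where we were taught to write news stories around the "5W1H" and to open feature articles with an engaging hook. A human reader might miss the valuable, insightful argument buried on page five, but an AI will not.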

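The same shift is happening in software. Applications were designed for human eyes and clicks, and optimizing them meant good UIs and intuitive flows. As AI takes over retrieval and interpretation, visual design matters less for comprehension. Instead of engineers staring at Grafana dashboards, AI site reliability engineers (SREs) can interpret telemetry and post their analysis in Slack. Instead of sales teams slogging through the customer relationship management (CRM) system, AI can surface patterns and summaries automatically.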

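We are no longer designing content for humans but for AI. The new optimization target is not visual hierarchy but machine readability, and that will change both how we create and the tools we use.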

Santiago Rodriguez: The end of "screen time" as a KPI for AI apps

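For the past 15 years, screen time has been the best metric for how much value consumer and enterprise apps deliver. We have lived in a paradigm where the KPIs were hours streamed on Netflix, mouse clicks in healthcare EHR interfaces (as proof of effective use), and even time spent in ChatGPT. As we move toward outcome-based pricing, which aligns the incentives of vendors and users, screen-time reporting will be the first thing to go.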

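We are already seeing this in practice. When I run a DeepResearch query in ChatGPT, I get enormous value even though my screen time is close to zero. When Abridge captures the doctor-patient conversation and handles the follow-up automatically, the doctor barely looks at a screen. When Cursor builds a complete end-to-end application, engineers are already planning the next feature cycle. And when Hebbia drafts a pitch deck from hundreds of public filings, investment bankers can finally get a good night's sleep.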

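This creates a unique challenge: justifying per-user pricing requires more sophisticated ways of measuring return on investment (ROI). The spread of AI applications will improve physician satisfaction, developer productivity, the well-being of financial analysts, and consumer happiness. The companies that can make the simplest case for their ROI will keep outpacing their competitors.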

Bio + Health

Julie Yoo: Healthy monthly active users (MAUs)

In 2026, a new healthcare customer segment will take center stage: "healthy MAUs."

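The traditional healthcare system mainly serves three user groups: (a) "sick MAUs," people whose needs fluctuate and are expensive; (b) "sick DAUs," such as patients who need long-term intensive care; and (c) "healthy YAUs" (yearly active users), relatively healthy people who rarely see a doctor. Healthy YAUs are at risk of becoming sick MAUs or DAUs, and preventive care can slow that transition. But our treatment-centric reimbursement system rewards treatment rather than prevention, so proactive checkups and monitoring are not prioritized and are rarely covered by insurance.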

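Now a segment of healthy MAUs is emerging: people who are not sick but want to monitor and understand their health on a regular basis, and who may well be the largest segment of consumers. We expect a wave of companies, including AI-native startups and upgraded incumbents, to start offering recurring services to this segment.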

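With AI's potential to lower the cost of care delivery, the emergence of new prevention-focused health insurance products, and consumers' growing willingness to pay out of pocket for subscriptions, "healthy MAUs" represent the next high-potential customer segment in health tech: continuously engaged, data-driven, and focused on prevention.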

Speedrun (the name of an investment team within a16z)

Jon Lai: World models take center stage in storytelling

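In 2026, AI-driven world models will transform storytelling through interactive virtual worlds and digital economies. Technologies such as Marble (World Labs) and Genie 3 (DeepMind) can already generate complete 3D environments from text prompts and let users explore them as they would a game. As creators adopt these tools, entirely new forms of storytelling will emerge, possibly culminating in a "generative Minecraft" where players co-create vast, ever-evolving universes. These worlds could combine game mechanics with natural-language programming: a player might say, "Create a paintbrush that turns anything I touch pink."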

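Models like these will blur the line between players and creators, turning users into co-creators of a dynamic, shared reality. This evolution could give rise to interconnected generative multiverses in which genres such as fantasy, horror, and adventure coexist. Digital economies will flourish inside these worlds, with creators earning income by crafting assets, mentoring newcomers, or building new interactive tools. Beyond entertainment, these generative worlds will serve as rich simulation environments for training AI agents, robots, and even artificial general intelligence (AGI). The rise of world models therefore signals not just a new game genre but a new creative medium and a new economic frontier.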

Josh Lu: "The Year of Me"

2026 will be "the Year of Me": products will no longer be mass-produced but tailored to you.

We are already seeing this trend everywhere.

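In education, startups such as Alphaschool are building AI tutors that adapt to each student's pace and interests, giving every child an education matched to their own rhythm and preferences. Without tens of thousands of dollars in tutoring per student, this level of attention would be impossible.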

In health, AI is designing daily supplement stacks, workout plans, and meal plans tailored to your physiology. No coach or lab required.

Even in media, AI lets creators remix news, shows, and stories into personalized feeds that match your exact interests and tastes.

The biggest companies of the last century won by finding the average consumer.

The biggest companies of the next century will win by finding the individual inside the average consumer.

In 2026, the world will stop optimizing for everyone and start optimizing for you.

Emily Bennett: The first AI-native university

I expect that in 2026 we will see the birth of the first AI-native university, an institution built from the ground up around AI systems.

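Over the past few years, universities have experimented with applying AI to grading, tutoring, and scheduling. What is emerging now goes deeper: an adaptive academic system that learns and optimizes itself in real time.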

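Imagine an institution where courses, advising, research collaborations, and even building operations continuously adapt based on data feedback loops. Course schedules optimize themselves. Reading lists update every night, rewriting themselves as new research appears. Learning paths adjust in real time to each student's progress and circumstances.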

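We are already seeing early signs. Arizona State University (ASU)'s campus-wide partnership with OpenAI has produced hundreds of AI-driven projects spanning teaching and administration. The State University of New York (SUNY) now includes AI literacy in its general education requirements. These are the foundations for deeper deployment.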

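At an AI-native university, professors become architects of learning: they curate data, tune models, and teach students how to interrogate machine reasoning.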

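Assessment will change too. Detection tools and plagiarism bans will give way to AI-aware assessment, in which students are graded not on whether they used AI but on how they used it. Transparent, strategic use replaces prohibition.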

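As every industry scrambles to hire people who can design, manage, and work alongside AI systems, this new university will become a training ground, producing graduates fluent in orchestrating AI systems for a fast-changing labor market.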

The AI-native university will become the talent engine of the new economy.

That's all for today. Stay tuned for the next part.

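Disclaimer: This article represents only the author's personal views and does not represent the position or views of this platform. It is provided for information sharing only and does not constitute investment advice to anyone. Any dispute between users and the author has nothing to do with this platform. If any article or image published on this page infringes your rights, please email proof of rights and proof of identity to support@aicoin.com, and the platform's staff will investigate.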