I. Outlook

1. Macroeconomic Summary and Future Predictions

Last week, the U.S. macroeconomy continued to cool within a framework of "slowing employment + downward growth revision + inflation yet to be verified." The second estimate of third-quarter GDP was delayed by the government shutdown and has now been released. It showed a slight downward revision to growth, with weaker contributions from business investment and inventories, reflecting waning economic momentum. Meanwhile, initial jobless claims rebounded slightly, further confirming the cooling trend in employment. Overall, last week's data presented a typical picture of "weakening demand, a loosening labor market, and rising growth pressure."

Looking ahead, the October PCE data is still awaiting release, so the market remains cautious about the inflation trend and policy expectations are increasingly diverging. The U.S. economy is entering a critical phase in which "the pace of disinflation is uncertain while the growth slowdown is confirmed." The delayed data releases in the coming weeks will play a decisive role in market trends and policy direction.

2. Market Movements and Warnings in the Cryptocurrency Industry

Last week, the cryptocurrency market continued to weaken overall. After repeatedly failing to break above $95,000, Bitcoin fell again to just above $87,000, and market sentiment slipped back into panic. Funding remains tight: ETF inflows are insufficient to offset selling pressure, and institutions continue to wait and see. On-chain activity has declined while net inflows into stablecoins have increased, indicating that more funds are moving out of risk assets. Altcoins performed even worse, and several popular sectors retested their lows. The occasional short-term rebounds lacked sustainability and volume support, and the market showed clear structural weakness throughout the week.

On the macro front, key inflation and employment data are about to be released after their delay. If they show that the U.S. economy remains resilient or that inflation is sticky, the rebound potential of crypto assets will be further suppressed. Conversely, if the economy shows clear signs of weakness and the market again trades on rate-cut expectations, a short-term recovery window may open. Until liquidity clearly improves, however, the crypto market as a whole should remain wary of a retest of previous lows or even further declines.

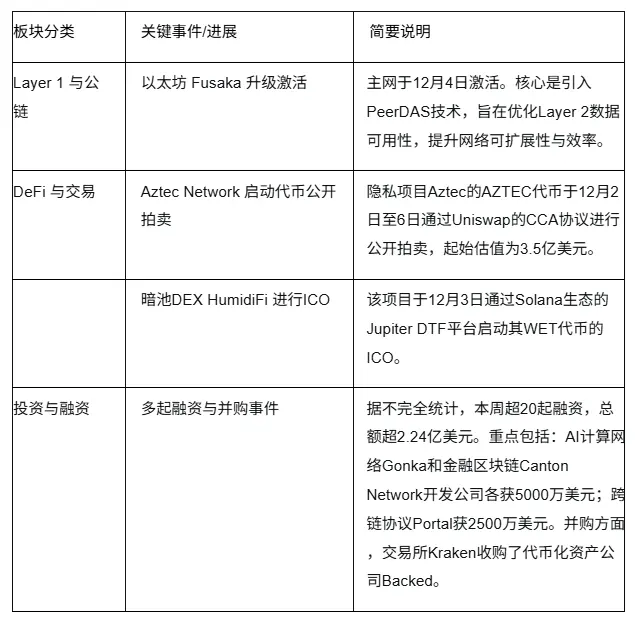

3. Industry and Sector Hotspots

Accountable, a data verification layer built for institutional-level DeFi, raised $9.8 million in total financing led by Pantera with participation from OKX, and has built a comprehensive platform centered on data verifiability. DGrid.AI, backed by Waterdrip and IoTeX, is building a decentralized AI inference routing network based on a quality assurance mechanism, with the aim of redefining the future of AI.

II. Market Hotspot Sectors and Potential Projects of the Week

1. Overview of Potential Projects

1.1. Brief Analysis of $9.8 Million in Total Financing, Led by Pantera with Participation from OKX: Accountable, a Data Verification Layer for Institutional-Level DeFi that Balances Privacy and Transparency

Introduction

Accountable is reshaping the financial landscape, bringing transparency and trust back to the financial system without sacrificing privacy.

Its integrated ecosystem combines cutting-edge privacy protection technology with a deep understanding of financial markets, creating a comprehensive platform centered on data verifiability, enabling users to obtain liquidity and build customized financial infrastructure based on trustworthy data.

The Accountable ecosystem consists of three mutually supporting layers, DVN → VaaS → YieldApp:

DVN provides a "privacy + trust" data verification foundation;

VaaS enables rapid and secure deployment of yield vaults;

YieldApp transforms verified data into real liquidity and investment opportunities.

Core Mechanism Overview

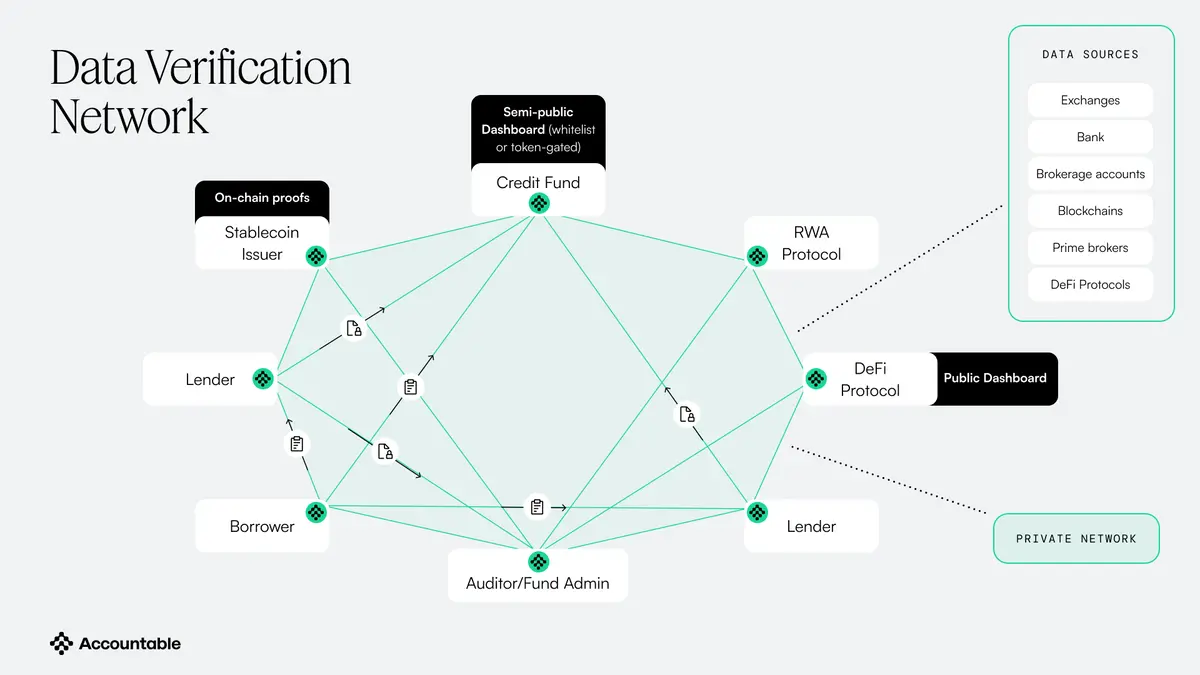

Data Verification Network (DVN)

DVN aims to resolve the apparent paradox that transparency and privacy cannot coexist. It uses cryptographic proof mechanisms to make financial data "verifiable but private."

Privacy-Preserving Data Verification Solutions

Technical solutions include:

On-Premise Processing

All sensitive data is processed in an environment controlled by the user; API keys and wallet addresses are stored locally.

Verifiable Computation

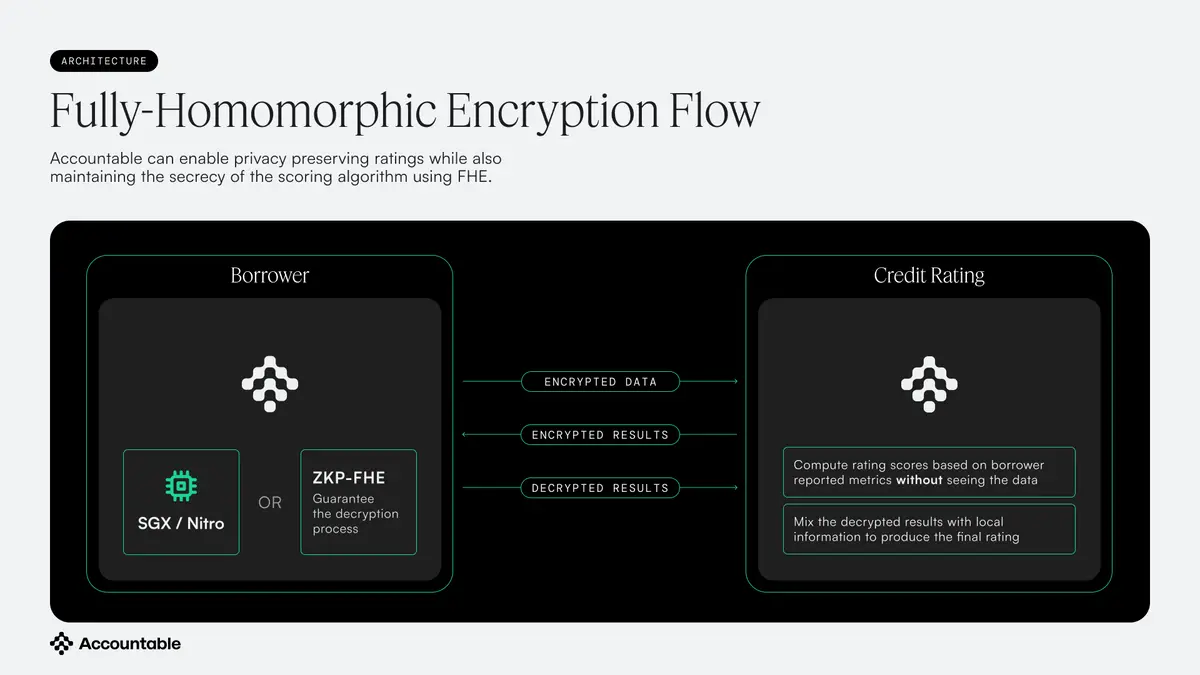

The data collection and report generation process includes cryptographic proofs, such as zero-knowledge proofs (ZKPs), to ensure the authenticity of data sources and the trustworthiness of the results.

Selective Disclosure

Users decide what information to share, with whom, and to what extent (P2P, restricted reports, public dashboards).

Trusted Execution

Supports hardware-based trusted execution environments (Intel SGX, AWS Nitro Enclaves, AMD SEV-SNP, Intel TDX).

DVN achieves both data privacy and verifiability through local computation + cryptographic proofs + selective disclosure, providing financial institutions with a new standard of "trusted transparency."

2. How DVN Works

Core Mechanism:

DVN is a real-time data verification network that combines privacy computing and cryptographic proofs.

Each participant runs a local node, forming a trustless but permissioned network.

Supports real-time monitoring and snapshot data sharing.

Structural Composition:

Data Verification Platform (DVP): Connects DVN's local backend with the private dApp.

Secure Local Backend: Accessible only to authorized parties and protected by an isolating firewall.

Private dApp Frontend: Connects to the local node without leaking data.

Data Processing Flow:

Collect asset and liability information from multiple data sources (on-chain, exchanges, banks, custodians).

Verify and store through cryptographic signatures.

Reports can be shared within the network or published via API or public chain.
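The "collect, sign, verify" flow above can be sketched as follows. This is a minimal sketch that assumes records are serialized as canonical JSON before signing. The source does not name a signature scheme, so Python's standard-library HMAC-SHA256 is swapped in here as a stand-in for the asymmetric signatures a real deployment would use. The record fields and the key are hypothetical.

```python
import hashlib
import hmac
import json

def canonical_bytes(record: dict) -> bytes:
    """Deterministic serialization: sorted keys, no extra whitespace."""
    return json.dumps(record, sort_keys=True, separators=(",", ":")).encode()

def sign_record(record: dict, key: bytes) -> str:
    """HMAC-SHA256 tag over the canonical bytes (stand-in for a real signature)."""
    return hmac.new(key, canonical_bytes(record), hashlib.sha256).hexdigest()

def verify_record(record: dict, tag: str, key: bytes) -> bool:
    """Constant-time comparison of the recomputed tag with the stored one."""
    return hmac.compare_digest(sign_record(record, key), tag)

# Hypothetical balance records collected from different sources.
records = [
    {"source": "exchange", "account": "acct-1", "asset": "USDT", "amount": "250000"},
    {"source": "custodian", "account": "acct-2", "asset": "BTC", "amount": "3.5"},
]
key = b"hypothetical-node-key"  # would stay on the user's local node
signed = [(r, sign_record(r, key)) for r in records]
```

Any change to a stored record, such as an edited amount, makes `verify_record` return `False`.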

3. What Can Accountable Prove?

Verification Scope:

Assets: Supports on-chain and off-chain assets (fiat, spot, futures, options, stocks, government bonds, DeFi, RWA, etc.), confirming the authenticity of the source through cryptographic proofs.

Ownership: Verifies account ownership through signatures or APIs.

Attestations: Works with auditing firms to provide periodic "light audits" and annual "full audits."

Liabilities: Automatically aggregates data from lending systems and protocols, and uses ZK verification to prove liabilities while keeping their details private.

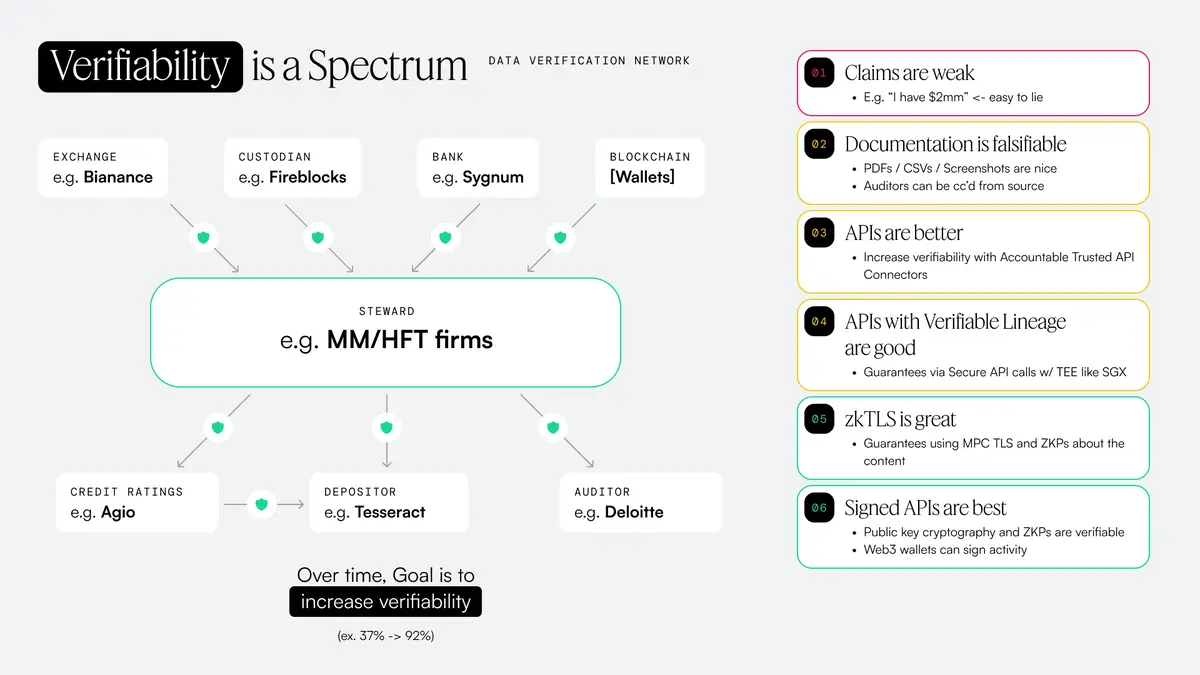

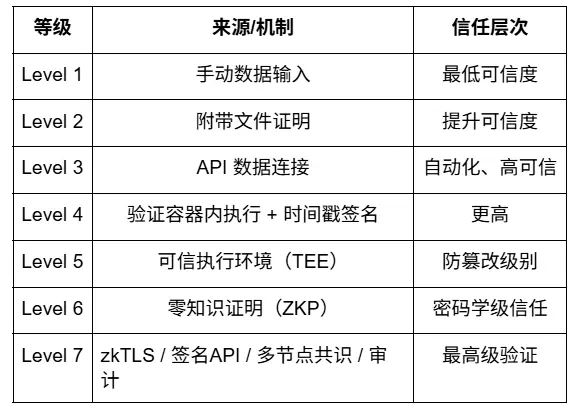

4. Verifiability Levels

Core Idea:

"Verifiability is not binary, but a continuous spectrum." DVN grades data into levels according to the credibility of its source.

5. Comprehensive Localized Reporting

After data verification, the system provides highly customizable aggregated reports, real-time dashboards, and advanced alert functions, enabling users to comprehensively grasp portfolio performance and risks.

Features:

Comprehensive localized reports

Aggregated reports and analysis: Generates aggregated reports on portfolio risk exposure, custodian composition, stablecoin ratios, etc.; supports customizable reports from historical performance to complex risk models; can integrate third-party indicators through plugins; provides real-time risk management dashboards.

Advanced alert system: Actively monitors data verifiability, total limits, concentration risks, etc.; provides customizable trigger alerts for both borrowers and lenders.
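Here is a minimal sketch of the kind of threshold checks the alert system describes: custodian concentration, share of verified assets, and stablecoin ratio. The `Position` type, the threshold values, and the stablecoin set are all hypothetical. The source does not specify how Accountable defines or configures its alerts.

```python
from dataclasses import dataclass

@dataclass
class Position:
    custodian: str
    asset: str
    usd_value: float
    verified: bool  # whether the position's source has been cryptographically verified

def check_alerts(positions, max_custodian_share=0.4, min_verified_share=0.9,
                 max_stablecoin_share=0.6, stablecoins=frozenset({"USDT", "USDC"})):
    """Return human-readable alerts for a portfolio (thresholds are illustrative)."""
    total = sum(p.usd_value for p in positions)
    if total == 0:
        return []
    alerts = []
    by_custodian = {}
    for p in positions:
        by_custodian[p.custodian] = by_custodian.get(p.custodian, 0.0) + p.usd_value
    for name, value in by_custodian.items():
        share = value / total
        if share > max_custodian_share:
            alerts.append(f"concentration: {name} holds {share:.0%} of assets")
    verified = sum(p.usd_value for p in positions if p.verified) / total
    if verified < min_verified_share:
        alerts.append(f"verifiability: only {verified:.0%} of assets verified")
    stable = sum(p.usd_value for p in positions if p.asset in stablecoins) / total
    if stable > max_stablecoin_share:
        alerts.append(f"stablecoin ratio: {stable:.0%}")
    return alerts

book = [
    Position("A", "USDT", 500.0, True),
    Position("B", "BTC", 300.0, True),
    Position("C", "ETH", 200.0, False),
]
alerts = check_alerts(book)
```

For this sample book, custodian A holds half of the assets and only 80% of the value is verified, so two alerts fire.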

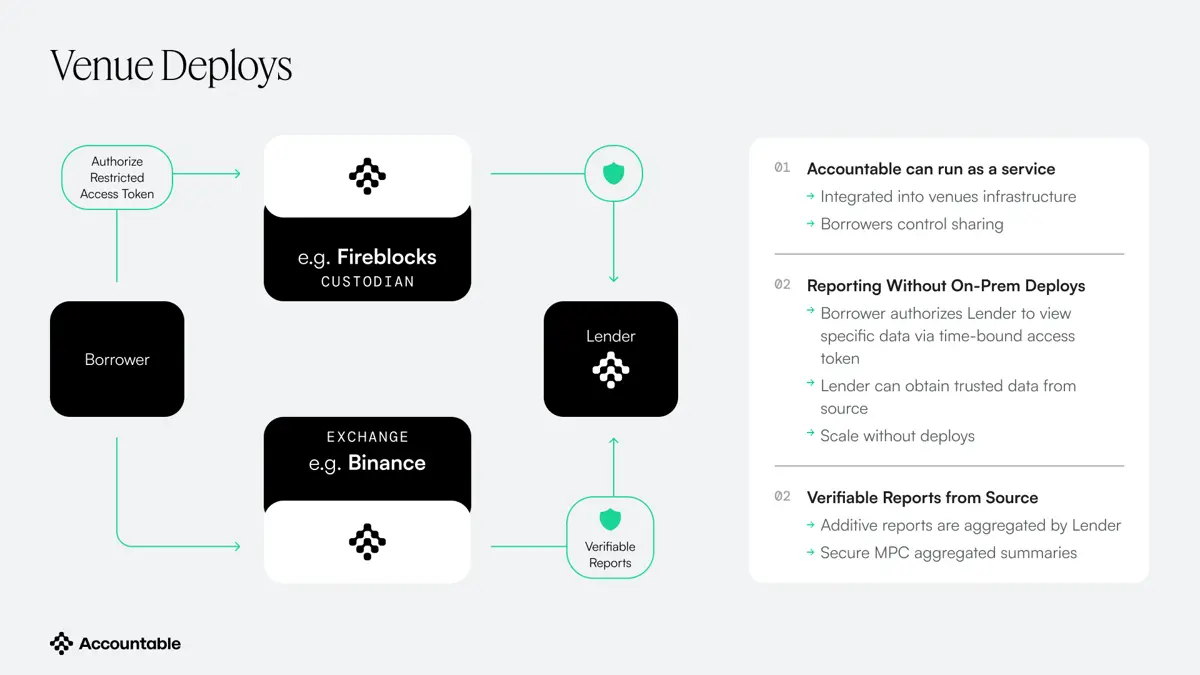

6. Controlled Secure Data Sharing

While keeping data encrypted and secure, the network provides flexible data sharing, including selective sharing and real-time data stream publishing. It can also generate joint reports for multiple borrowers while keeping each borrower's data confidential.

Features:

Controlled and secure data sharing

Selective report sharing: Borrowers can share complete reports or specific data proofs with lenders, and can attach proofs for public sharing.

Snapshot and real-time reports: Supports one-time snapshots or continuous reports at customizable frequencies.

Oracle publishing: Can transmit reserve data streams to third-party oracles or directly on-chain to prove solvency.

Confidential multi-borrower reports: Provides individual and aggregated risk views to lenders while ensuring the confidentiality of each borrower's data.
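To illustrate how a borrower could share "specific data proofs" without exposing a whole report, the sketch below uses salted hash commitments, a common selective-disclosure technique. The source does not say Accountable uses this exact construction. The borrower commits to each field with a random salt and shares (and would sign) only the digests. Later, the borrower reveals a single field and its salt, and the lender checks it against the committed digest. Field names and values are hypothetical.

```python
import hashlib
import secrets

def _digest(salt: str, field: str, value) -> str:
    return hashlib.sha256(f"{salt}|{field}|{value}".encode()).hexdigest()

def commit_report(report: dict):
    """Borrower side: one random salt per field; only the digests are shared."""
    salts = {name: secrets.token_hex(16) for name in report}
    digests = {name: _digest(salts[name], name, value) for name, value in report.items()}
    return salts, digests

def disclose(report: dict, salts: dict, field: str):
    """Reveal a single field together with its salt; other fields stay hidden."""
    return field, report[field], salts[field]

def verify_disclosure(digests: dict, field: str, value, salt: str) -> bool:
    """Lender side: recompute the digest and compare it with the committed one."""
    return digests.get(field) == _digest(salt, field, value)

report = {"total_assets": 1_200_000, "total_liabilities": 800_000, "top_counterparty": "Fund X"}
salts, digests = commit_report(report)
field, value, salt = disclose(report, salts, "total_assets")
```

The random salts prevent a lender from brute-forcing hidden fields with small value ranges by hashing guesses against the digests.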

7. Solvency Proof and Deployment

The core function of the network is to generate cryptographically guaranteed solvency proofs, with plans to embed them at the source of transactions after reaching scale, achieving seamless privacy-protecting verification.

Features:

Solvency proof

Reserve proof: Uses Merkle trees and zero-knowledge proofs to build a verifiable picture of assets and liabilities. It automatically generates reports, detects misstated liabilities, and supports verification integrated with auditors.

Source deployment: Plans to deploy at the source of transactions after reaching critical scale, simplifying processes through authorized tokens to achieve privacy-protecting verification.
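The Merkle-tree part of a reserve proof can be illustrated with a Merkle sum tree, a widely used construction for proof of liabilities. This is a simplified sketch rather than Accountable's implementation, and it omits the zero-knowledge layer the source mentions. As a result, sibling subtree totals are visible to the verifier, and a production design would also salt each leaf. Each parent commits to both child hashes and both child sums, so the root total is a binding claim about total liabilities. Each user can check that their own balance is included in that total.

```python
import hashlib
from dataclasses import dataclass

@dataclass(frozen=True)
class Node:
    digest: bytes
    total: int  # sum of all balances (liabilities) beneath this node

PAD = Node(bytes(32), 0)  # zero-balance filler for odd-sized levels

def leaf(user_id: str, balance: int) -> Node:
    if balance < 0:
        raise ValueError("balances must be non-negative")
    return Node(hashlib.sha256(f"leaf|{user_id}|{balance}".encode()).digest(), balance)

def parent(left: Node, right: Node) -> Node:
    # Hash both child digests and both child sums so a subtree total
    # cannot be understated without changing the root hash.
    data = (left.digest + left.total.to_bytes(16, "big")
            + right.digest + right.total.to_bytes(16, "big"))
    return Node(hashlib.sha256(data).digest(), left.total + right.total)

def build_levels(leaves):
    levels = [list(leaves)]
    while len(levels[-1]) > 1:
        level = levels[-1]
        if len(level) % 2:
            level.append(PAD)  # pad in place so proofs see the same sibling
        levels.append([parent(level[i], level[i + 1]) for i in range(0, len(level), 2)])
    return levels

def inclusion_proof(levels, index):
    proof = []
    for level in levels[:-1]:
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))  # (node, sibling_is_left)
        index //= 2
    return proof

def verify(root, user_id, balance, proof):
    node = leaf(user_id, balance)
    for sibling, sibling_is_left in proof:
        if sibling.total < 0:
            return False
        node = parent(sibling, node) if sibling_is_left else parent(node, sibling)
    return node == root

balances = [("alice", 100), ("bob", 250), ("carol", 50)]  # hypothetical users
levels = build_levels(leaf(u, b) for u, b in balances)
root = levels[-1][0]
proof = inclusion_proof(levels, 2)  # carol's inclusion proof
```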

8. Ecosystem Integration and Future Development

This network is the cornerstone of the entire Accountable ecosystem, supporting transparent reports for vaults and connecting liquidity. Future expansions will include privacy-protecting transactions, advanced risk control, and more asset classes.

Features:

Integration with the Accountable Ecosystem

As the foundation of the ecosystem: Supports "vault as a service" integration with verifiable reports to enhance transparency; users can obtain liquidity through YieldApp.

Future development plans: Develop privacy-preserving quote requests, advanced trading and risk control, liability tracking features, and expand supported asset classes and connectors.

Tron Comments

Advantages:

Technical Trust: Emphasizes the use of cutting-edge technologies such as "zero-knowledge proofs" and "fully homomorphic encryption" to replace traditional commercial trust, providing stronger security guarantees and privacy protection.

Addressing Core Pain Points: Directly tackles two key issues in the DeFi space: "lack of transparent solvency proof" and "the need for confidential data sharing by institutions."

Flexibility: Supports everything from simple report sharing to complex on-chain integration, meeting the needs of different users.

Disadvantages:

Technical Complexity: The powerful technology comes with implementation complexity, which may hinder understanding and adoption by non-technical users.

Dependence on Network Effects: As a platform service, its value is closely related to the number of users (especially borrowing and lending institutions), presenting a "cold start" problem.

Market Adoption Uncertainty: The project's success ultimately depends on market acceptance and the scale of adoption by institutional users.

1.2. Brief Analysis of Waterdrip and IoTeX's Investment: DGrid.AI, Making AI a Verifiable Public Utility on the Blockchain

Introduction

DGrid.AI is building a decentralized AI inference routing network based on a quality assurance mechanism, redefining the future of AI and enabling freer and more efficient flow of AI inference. Unlike traditional centralized AI systems, the DGrid network allows every node to participate, making each call traceable and establishing AI as a foundational capability in the blockchain world.

DGrid combines AI RPC, LLM inference, and a distributed node network, addressing core pain points such as high costs, uncontrollable services, and single points of failure in centralized AI, while filling critical gaps in Web3 AI, such as the lack of unified interfaces and trustworthy inference environments.

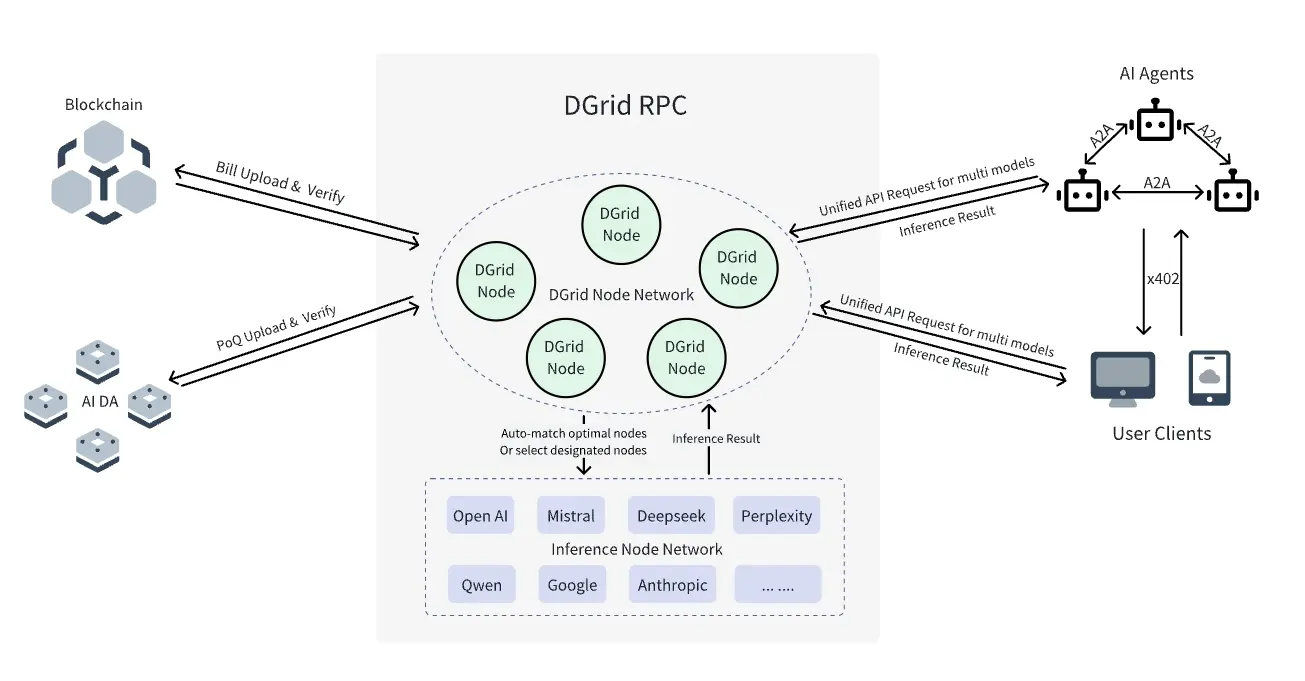

Architecture Overview

DGrid.AI addresses the key gaps in Web3 AI and the limitations of centralized AI through an ecosystem composed of interconnected nodes, protocols, and decentralized infrastructure.

By integrating standardized AI RPC interfaces, distributed inference nodes, smart routing, on-chain settlement, and secure storage, it builds a large language model inference network that is trustless, scalable, and user-centric. This makes AI a native capability for blockchain applications.

The core of the DGrid solution lies in redefining decentralized AI inference by integrating three foundational components: executing models on distributed nodes with quality assurance mechanisms that ensure result credibility, establishing standardized protocols for universal access, and ensuring transparency through on-chain mechanisms.

These elements collectively eliminate reliance on centralized providers, allowing AI to operate as an open, community-governed public utility.

DGrid's Solutions

DGrid Nodes: Decentralized Inference Execution

DGrid nodes are community-operated nodes that form the computational core of the network by integrating one or more large language models. These nodes:

Execute inference tasks for users, processing inputs (such as text prompts or smart contract queries) and generating outputs through pre-loaded models. At the same time, they verify the quality of inference results through quality assurance mechanisms to ensure the reliability and accuracy of outputs.

Adapt to different hardware capabilities, allowing operators to choose models that match their server specifications (from lightweight 7-billion-parameter models on basic GPUs to models with more than 70 billion parameters on high-performance hardware).

Report metrics (including latency and compute unit consumption) in real time to DGrid adapter nodes, supplying the data that smart routing uses to allocate tasks optimally.

By distributing inference tasks across thousands of independent nodes, DGrid eliminates single points of failure and ensures geographic redundancy, which is crucial for Web3 applications that require 24/7 high reliability.
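Here is a minimal sketch of how an adapter node might use the reported metrics for smart routing. It filters to online nodes serving the requested model and picks the one with the lowest weighted cost of latency and price. The `NodeMetrics` fields, the scoring formula, the weights, and the model names are all hypothetical. The source says only that nodes report latency and compute-unit consumption for routing.

```python
from dataclasses import dataclass

@dataclass
class NodeMetrics:
    node_id: str
    model: str
    latency_ms: float  # recent latency reported by the node
    cu_price: float    # hypothetical price per compute unit
    online: bool

def route(request_model, nodes, latency_weight=1.0, price_weight=100.0):
    """Pick the online node for `request_model` with the lowest weighted cost."""
    candidates = [n for n in nodes if n.online and n.model == request_model]
    if not candidates:
        raise LookupError(f"no online node serves {request_model}")
    return min(candidates,
               key=lambda n: latency_weight * n.latency_ms + price_weight * n.cu_price)

nodes = [
    NodeMetrics("n1", "model-7b", 120.0, 0.5, True),   # cost 170
    NodeMetrics("n2", "model-7b", 80.0, 0.6, True),    # cost 140
    NodeMetrics("n3", "model-7b", 40.0, 0.4, False),   # offline, skipped
    NodeMetrics("n4", "model-70b", 30.0, 2.0, True),   # different model
]
chosen = route("model-7b", nodes)
```

A linear score keeps the trade-off explicit. A real router would also account for load, uptime, and quality history.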

DGridRPC: Universal Access and Request Verification

DGridRPC: A standardized JSON-RPC protocol that simplifies user access to models in the network. It provides a unified API for calling any LLM (regardless of node or model type) and uses EIP-712 signatures to verify user requests, ensuring that only authorized, prepaid tasks are processed.

DGridRPC addresses the "interface fragmentation" issue in Web3 AI, making LLM integration as straightforward as calling a smart contract.
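EIP-712 signs typed, structured data rather than opaque bytes. Part of that standard is `encodeType`: the canonical type string for the primary struct followed by its referenced structs in alphabetical order. Its keccak256 hash becomes the `typeHash`. The sketch below implements only `encodeType`, because keccak256 is not in Python's standard library (`hashlib.sha3_256` uses different padding). The `InferenceRequest` schema and the JSON-RPC method name are hypothetical; the source does not publish DGrid's actual request format.

```python
def _collect_deps(type_name, types, found):
    base = type_name.split("[", 1)[0]  # strip array suffixes such as "Person[]"
    if base in found or base not in types:  # atomic types (uint256, string, ...) are skipped
        return found
    found.append(base)
    for field in types[base]:
        _collect_deps(field["type"], types, found)
    return found

def encode_type(primary_type, types):
    """EIP-712 encodeType: primary struct first, then referenced structs sorted by name."""
    deps = _collect_deps(primary_type, types, [])
    deps.remove(primary_type)
    return "".join(
        name + "(" + ",".join(f"{f['type']} {f['name']}" for f in types[name]) + ")"
        for name in [primary_type] + sorted(deps)
    )

# Hypothetical typed-data schema for an inference request.
INFERENCE_TYPES = {
    "InferenceRequest": [
        {"name": "model", "type": "string"},
        {"name": "promptHash", "type": "bytes32"},
        {"name": "maxComputeUnits", "type": "uint256"},
        {"name": "nonce", "type": "uint256"},
    ]
}

# Hypothetical JSON-RPC 2.0 envelope; the method name is invented for illustration.
request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "dgrid_infer",
    "params": {
        "typedData": {"primaryType": "InferenceRequest", "types": INFERENCE_TYPES},
        "signature": None,  # produced by the user's wallet, e.g. via eth_signTypedData_v4
    },
}
```

Because the type string is part of what gets hashed and signed, a node can reject a request whose fields were altered or reordered after signing.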

PoQ: Trust Guarantee for Inference Results

PoQ is the core mechanism of the DGrid ecosystem for ensuring the credibility of LLM inference results. It works together with the distributed nodes and DGridRPC to form a "request-execute-verify" closed loop:

Multi-dimensional Quality Assessment: PoQ objectively scores inference results generated by DGrid nodes based on three key dimensions: "accuracy matching" (comparison with standard answers or reference results), "response consistency" (output deviations for the same request across different nodes), and "format compliance" (adherence to output format requirements specified in user requests).

On-chain Verifiable Proof Generation: After completing inference tasks, nodes must upload inference process logs and PoQ scoring data to the network to generate tamper-proof quality proofs. Users can query these proofs on-chain to quickly verify the reliability of results without re-executing inference tasks.
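The three PoQ dimensions can be combined into a single score, as in the sketch below. It makes several assumptions for illustration only. Token-set (Jaccard) overlap stands in for both "accuracy matching" against a reference and "response consistency" across peer nodes. "Format compliance" is reduced to "parses as JSON when JSON was requested." The weights are hypothetical. The source does not disclose DGrid's actual metrics or weighting.

```python
import json

def _tokens(text: str) -> set:
    return set(text.lower().split())

def jaccard(a: str, b: str) -> float:
    """Token-set overlap in [0, 1]; a crude stand-in for semantic similarity."""
    ta, tb = _tokens(a), _tokens(b)
    if not ta and not tb:
        return 1.0
    return len(ta & tb) / len(ta | tb)

def format_score(output: str, expects_json: bool) -> float:
    """1.0 if the output honours the requested format (here: parseable JSON), else 0.0."""
    if not expects_json:
        return 1.0
    try:
        json.loads(output)
    except json.JSONDecodeError:
        return 0.0
    return 1.0

def poq_score(output, reference, peer_outputs, expects_json, weights=(0.5, 0.3, 0.2)):
    """Weighted sum of accuracy matching, cross-node consistency and format compliance."""
    accuracy = jaccard(output, reference)
    consistency = (sum(jaccard(output, p) for p in peer_outputs) / len(peer_outputs)
                   if peer_outputs else 1.0)
    w_acc, w_con, w_fmt = weights
    return w_acc * accuracy + w_con * consistency + w_fmt * format_score(output, expects_json)

good = poq_score('{"answer": 42}', '{"answer": 42}', ['{"answer": 42}'], expects_json=True)
bad = poq_score("forty two", '{"answer": 42}', ['{"answer": 42}'], expects_json=True)
```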

Billing Contracts and AI DA Layer: On-chain Transparency

Billing Contracts: Smart contracts deployed on the blockchain that automatically settle $DGAI token payments between users and nodes. These contracts calculate fees based on compute units and latency, deduct them from user accounts through the x402 protocol, and distribute rewards to node operators, eliminating intermediaries.

AI DA Layer: A decentralized storage network in which all inference request data is accompanied by PoQ proofs to ensure auditability. Users can verify billing details and nodes can prove task completion, improving transparency for dispute resolution and compliance audits.
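The settlement step of a billing contract can be sketched in plain Python. The fee is computed from compute units, checked against the user's prepaid balance, and moved to the node. The source says only that fees depend on compute units and latency. The per-CU price, the 500 ms latency target, and the 10% discount for slow responses are hypothetical. Amounts are integers in the smallest token unit to avoid rounding drift, and the x402 payment flow itself is not modeled.

```python
from dataclasses import dataclass, field

@dataclass
class Ledger:
    balances: dict = field(default_factory=dict)  # account -> balance in smallest token units

    def settle(self, user, node, compute_units, latency_ms, price_per_cu,
               latency_target_ms=500):
        """Charge `user` for one inference task and credit `node` (formula is hypothetical)."""
        fee = compute_units * price_per_cu
        if latency_ms > latency_target_ms:
            fee = fee * 90 // 100  # hypothetical 10% discount when the latency target is missed
        if self.balances.get(user, 0) < fee:
            raise ValueError("insufficient prepaid balance")
        self.balances[user] -= fee
        self.balances[node] = self.balances.get(node, 0) + fee
        return fee

ledger = Ledger({"user": 10_000})
fee = ledger.settle("user", "node-1", compute_units=120, latency_ms=300, price_per_cu=50)
```

The balance check runs before any state change, so a failed settlement leaves both accounts untouched, much as a reverted contract call would.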

Security Mechanisms

DGrid.AI has established a comprehensive security framework that combines technical safeguards and on-chain transparency to ensure trustlessness in the decentralized network:

Trusted Inference Environment

Immutable Runtime: DGrid node operators cannot modify LLM weights or execution environments, ensuring consistency in model behavior across the network.

Resource Control: Strict limits on CPU, GPU, and network usage (enforced by nodes) prevent denial-of-service attacks.

On-chain Auditing and Accountability

Immutable Records: All key activities, including node registration, inference metadata (input/output), fee settlements, and rewards, are recorded on-chain through billing contracts and archived in the AI DA layer.

Automatic Penalties: DGrid monitors node behavior. Malicious actors (e.g., nodes submitting false results) face penalties such as token forfeiture (slashing) or jailing (temporary suspension from the network), enforced by smart contracts.

Decentralized Governance: $DGAI token holders vote on protocol upgrades, fee structures, and security parameters, ensuring the network evolves in line with community interests.
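The staking and penalty rules described above can be sketched as a small state machine. A penalty burns a fraction of the operator's stake and suspends ("jails") the node for a number of blocks. The node can serve again only when it is out of jail and still above the minimum stake. The minimum stake, slash percentage, and jail length are hypothetical parameters that, per the source, token holders would set through governance.

```python
from dataclasses import dataclass

MIN_STAKE = 1_000     # hypothetical minimum stake, in $DGAI base units
SLASH_BPS = 500       # hypothetical 5% slash, in basis points
JAIL_BLOCKS = 10_000  # hypothetical suspension length, in blocks

@dataclass
class Operator:
    stake: int
    jailed_until: int = 0  # block height until which the node is suspended

def penalize(op: Operator, current_block: int) -> int:
    """Slash a fraction of the stake and jail the operator; returns the amount slashed."""
    slashed = op.stake * SLASH_BPS // 10_000
    op.stake -= slashed
    op.jailed_until = current_block + JAIL_BLOCKS
    return slashed

def can_serve(op: Operator, current_block: int) -> bool:
    return op.stake >= MIN_STAKE and current_block >= op.jailed_until

op = Operator(stake=20_000)
slashed = penalize(op, current_block=100)  # e.g. after a false result is detected
```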

By combining a secure inference environment, on-chain transparency, and community governance, DGrid.AI ensures that the network operates in a secure, reliable, and trustless manner, providing users with robust decentralized AI inference services.

dToken: Incentives and Governance

$DGAI serves as the economic engine of the network, coordinating the interests of the entire ecosystem:

Payments: Users pay for inference task fees using $DGAI, with fees dynamically adjusted through billing contracts.

Rewards: Node operators earn $DGAI based on contribution quality (e.g., low latency, high online rates) and participation in verification.

Staking: DGrid nodes must stake $DGAI to participate in the network, with misconduct leading to token forfeiture.

Governance: Token holders vote on protocol parameters (e.g., fee structures, model whitelists) to guide network evolution.

This architecture provides a scalable (anyone can operate a node), trustless (on-chain proofs replace reliance on intermediaries), and natively Web3-supported (integrated with blockchain workflows) solution. By unifying distributed execution, smart coordination, secure inference, and transparent settlement, DGrid.AI transforms LLM inference into a foundational capability for Web3—applicable in areas ranging from DeFi strategy analyzers to on-chain chatbots and more.

Tron Comments

Advantages:

Trust and Transparency: "Quality assurance" and "on-chain settlement" are core differentiators from traditional AI services, providing result verifiability.

Reliability and Censorship Resistance: The decentralized architecture avoids single points of failure and control by any single company.

Web3 Native: Directly integrates with blockchain and smart contracts, addressing the challenges of integrating AI into existing Web3 projects.

Disadvantages:

Performance Challenges: Distributed networks are inherently more complex in coordination and consistency than centralized systems, which may affect response speed and the ability to handle complex tasks.

Dependence and Network Effects: This is a two-sided market model that requires a sufficient number of nodes and users to form a healthy ecosystem, making initial startup and growth key challenges.

Technical Maturity: As an innovative architecture, its stability and robustness need to be validated through large-scale practice.

2. Key Project Details of the Week

2.1. Detailed Analysis of $79.55 Million in Three Rounds of Financing, with Vitalik Buterin Participating and Endorsing: MegaETH, the World's First EVM Blockchain with Web2 Real-Time Performance

Introduction

MegaETH is an EVM-compatible blockchain that brings Web2-level real-time performance to the crypto world for the first time. Its goal is to push performance to hardware limits, bridging the gap between blockchains and traditional cloud computing servers.

MegaETH offers several distinctive features, including high transaction throughput, ample computing power, and, most notably, millisecond-level response times even under heavy load. With MegaETH, developers can build and compose the most demanding applications without restrictions.

Architecture Overview

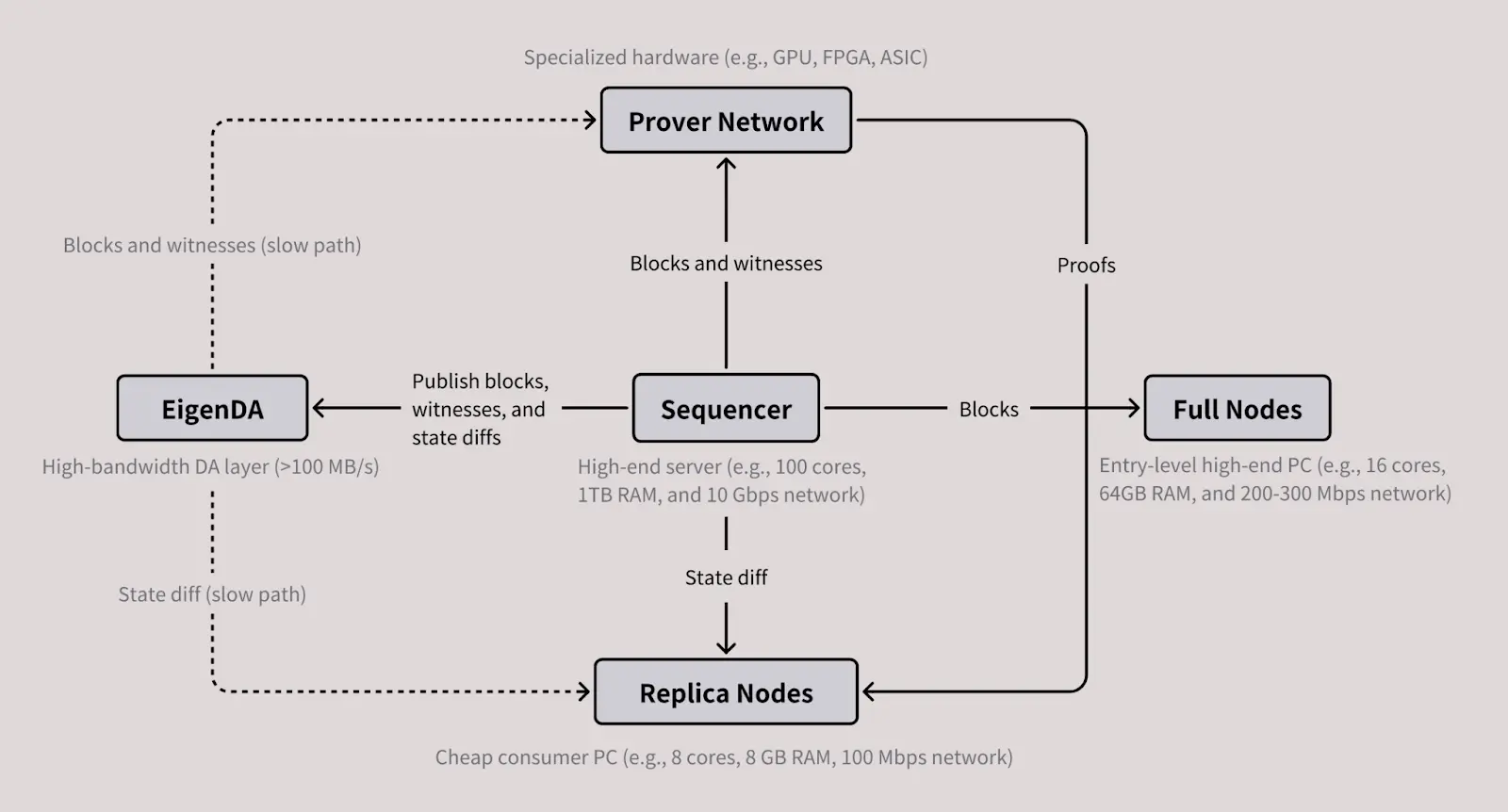

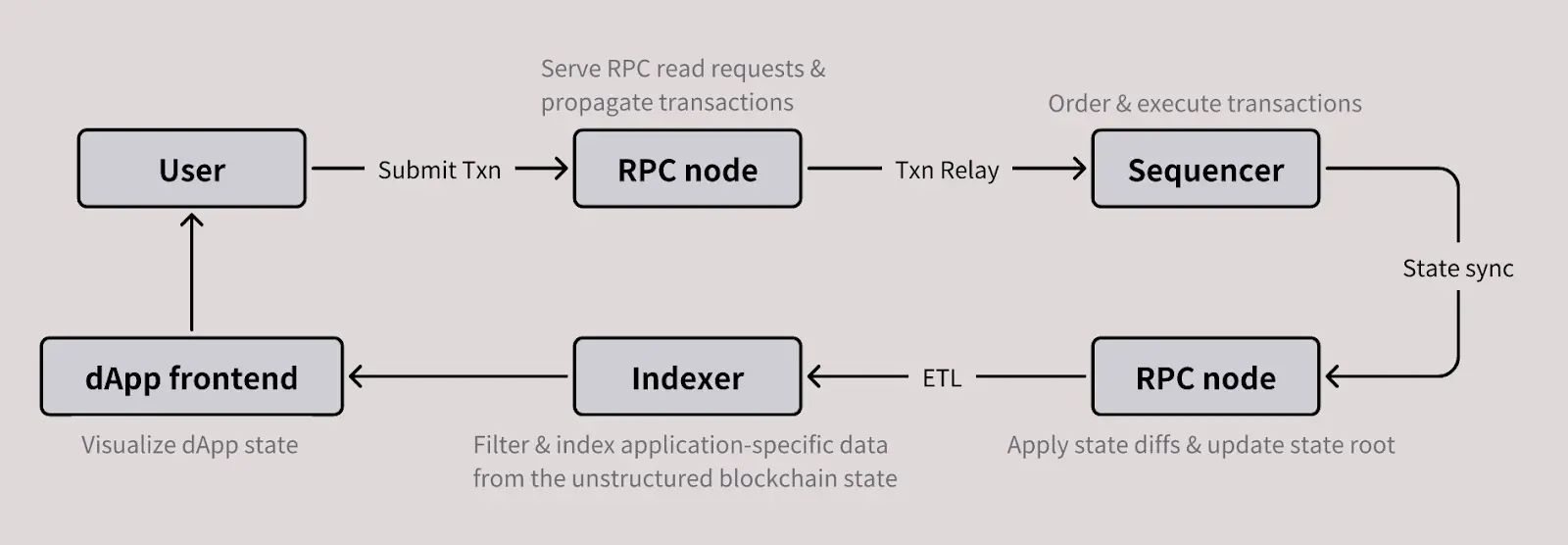

MegaETH has four main roles: Sequencer, Prover, Full Node, and Replica Node.

Sequencer nodes are responsible for ordering and executing user transactions. MegaETH has only one active sequencer at any given time, which eliminates consensus overhead during normal execution.

Replica nodes receive state diffs from the sequencer via a P2P network and directly apply these diffs to update their local state. Notably, they do not re-execute transactions but instead verify blocks indirectly through proofs provided by Provers.

Full nodes operate similarly to traditional blockchains: they re-execute each transaction to validate blocks. This mechanism is crucial for certain high-demand users (such as bridging operators and market makers) who require fast finality, although it necessitates higher-performance hardware to keep up with the sequencer's speed.

Provers use a stateless validation scheme to validate blocks asynchronously and out of order.

The diagram below illustrates the basic architecture of MegaETH and the interactions between its main components. It is important to note that EigenDA is an external component built on EigenLayer.

Diagram: Main components of MegaETH and their interactions

A key advantage of node specialization is that it allows for independent hardware requirements to be set for each type of node.

For example, since sequencer nodes bear the primary load of execution, they should ideally be deployed on high-performance servers to enhance overall performance. In contrast, the hardware requirements for replica nodes can remain lower because the computational cost of verifying proofs is minimal.

Additionally, although full nodes still need to execute transactions, they can leverage auxiliary information generated by the sequencer to re-execute transactions more efficiently.

The significance of this architecture is profound: as Vitalik outlined in his article "Endgame," node specialization ensures that while block production tends to centralize, block validation can still maintain trustlessness and high decentralization.

The table below lists the expected hardware requirements for various types of nodes in MegaETH:

ZK prover nodes are omitted from the table because their hardware requirements largely depend on the specific proof system, and the differences between providers can be substantial.

The hourly cost data for various virtual machines comes from instance-pricing.com. It is noteworthy that node specialization allows us to optimize performance while maintaining the overall economic viability of the system:

The cost of a Sequencer node can be up to 20 times that of a regular Solana validator node, while its performance can be 5–10 times higher;

Meanwhile, the operating cost of Full nodes can still be comparable to that of Ethereum L1 nodes.

This design achieves high-performance execution while maintaining decentralization and cost control in network operations.

MegaETH does not solely rely on a powerful centralized sequencer to enhance performance. While comparing MegaETH to high-performance servers helps understand its potential, this analogy severely underestimates the research and engineering complexity behind it.

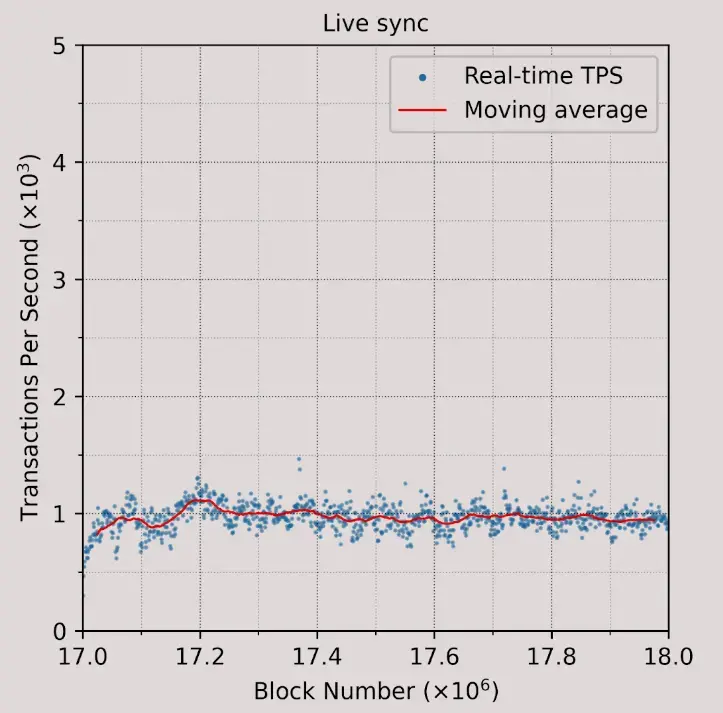

MegaETH's performance breakthroughs come not just from more powerful hardware but also from deep optimization of the underlying blockchain architecture. For instance, in experiments on a high-end server with 512GB of memory, Reth (an Ethereum execution client) achieved only about 1,000 TPS (roughly 100 MGas/s) when live-syncing recent Ethereum blocks. The main bottleneck is the overhead of updating the Merkle Patricia Trie (MPT), which costs nearly 10 times as much computation as transaction execution itself.

This indicates that:

Simply improving hardware performance cannot fundamentally enhance blockchain execution speed.

The key to blockchain performance optimization lies in the innovative design of underlying data structures and execution logic.

While node specialization indeed brings significant performance enhancement potential, achieving a "super high-performance, real-time responsive" blockchain system remains an engineering challenge that has not yet been fully resolved.

1. The Architectural Design Logic of MegaETH

Like any complex computing system, a blockchain's performance bottlenecks are often spread across multiple interrelated components. Optimizing a single component rarely yields end-to-end improvement, because that component may not be the most critical bottleneck, or the bottleneck may simply shift elsewhere.

The R&D Philosophy of MegaETH:

Measure, then build

By conducting in-depth performance analysis, identify the real issues, and then design systematic solutions.

Hardware-limit design

Rather than making minor tweaks, start from scratch to build a new architecture that approaches the theoretical limits of hardware. The goal is to bring blockchain infrastructure close to the "performance limit point," thereby releasing industry resources to other innovative fields.

Core Challenges in Transaction Execution

Diagram: Processing flow of user transactions (RPC nodes can be full nodes or replica nodes)

The Sequencer is responsible for transaction ordering and execution. Although the EVM is often criticized for its poor performance, experiments have shown that revm can achieve 14,000 TPS, indicating that the EVM is not the fundamental bottleneck.

The real performance issues primarily lie in the following three areas:

High state access latency

Lack of parallelism

High interpreter overhead

Through node specialization, MegaETH's sequencer can hold the complete state (approximately 100GB) in memory, eliminating SSD access latency so that state access is essentially no longer a bottleneck.

However, challenges remain in terms of parallelism and execution efficiency:

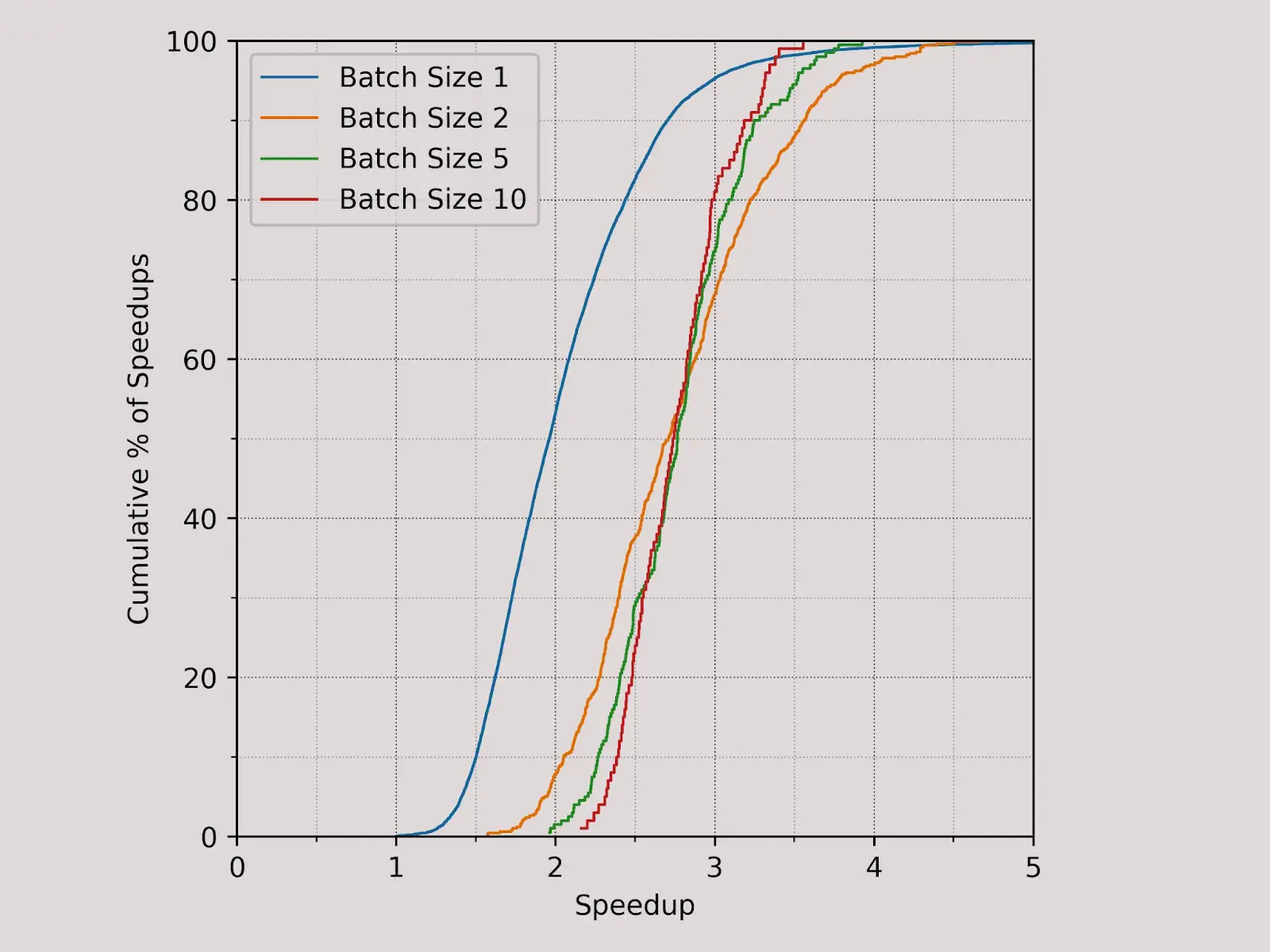

Limited parallelism:

Empirical measurements show that the median parallelism of Ethereum blocks is less than 2, and even executing blocks in batches only raises it to 2.75. This indicates that most transactions have long dependency chains, limiting the acceleration potential of parallel algorithms such as Block-STM.

Limited benefits from compilation optimizations:

Although AOT/JIT compilation (such as revmc, evm-mlir) is effective in compute-intensive contracts, in production environments, about half of the execution time is spent on "system instructions" implemented in Rust (such as keccak256, sload, sstore), thus overall acceleration is limited, with a maximum improvement of about 2 times.
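The roughly 2× ceiling follows from Amdahl's law. That framing is our own, not one the source states. Let p be the fraction of execution time that compilation can accelerate and s the speedup on that fraction. The assumption is that the roughly 50% spent in Rust-implemented system instructions is not accelerated, since compiled code still calls the same implementations, so p ≈ 0.5:

```latex
S_{\text{overall}} = \frac{1}{(1 - p) + \dfrac{p}{s}} \;\le\; \frac{1}{1 - p},
\qquad p \approx 0.5 \;\Rightarrow\; S_{\text{overall}} \le \frac{1}{1 - 0.5} = 2
```

Even an infinitely fast compiler (s → ∞) cannot beat the bound set by the unaccelerated half.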

Diagram: Available parallelism between blocks 20000000 and 20010000
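The source does not give its exact definition of "available parallelism." One simple definition, offered here as an assumption, is the number of transactions in a block divided by the length of its longest dependency chain. Under this definition, transaction j depends on an earlier transaction i if one writes a key the other reads or writes. The sketch computes that ratio from per-transaction read and write sets, which are hypothetical inputs here. A value near 1 means the block is essentially sequential.

```python
def available_parallelism(txs):
    """txs: list of (read_set, write_set) in block order.

    Returns len(txs) / (longest dependency chain), an upper bound on the speedup
    an ideal parallel executor could get for this block.
    """
    depth = []  # depth[j] = length of the longest dependency chain ending at tx j
    for j, (reads_j, writes_j) in enumerate(txs):
        d = 1
        for i in range(j):
            reads_i, writes_i = txs[i]
            # write-read, write-write, or read-write overlap means j must follow i
            if (writes_i & (reads_j | writes_j)) or (writes_j & reads_i):
                d = max(d, depth[i] + 1)
        depth.append(d)
    return len(txs) / max(depth) if txs else 0.0

independent = [({"a"}, {"a"}), ({"b"}, {"b"}), ({"c"}, {"c"}), ({"d"}, {"d"})]
same_pool = [({"pool"}, {"pool"})] * 3  # e.g. three swaps against one AMM pool
mixed = [(set(), {"A"}), ({"A"}, {"B"}), (set(), {"C"}), (set(), {"D"})]
```

A block of swaps against one hot AMM pool collapses to a parallelism of 1, which is the kind of long dependency chain the measurements point to.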

Additional Challenges for Real-Time Blockchains

To achieve true "real-time" performance, MegaETH must overcome two major challenges:

Extremely high block production frequency (approximately one block every 10 milliseconds)

Transaction prioritization, ensuring that critical transactions can still be executed immediately under high load.

Therefore, traditional parallel execution frameworks (such as Block-STM) can improve throughput but cannot meet the ultra-low latency design goals.

2. Bottlenecks in State Sync + MegaETH's Solutions

Bottleneck Review

Full nodes need to sync the latest state changes (state diffs) from the sequencer or the network to catch up with the on-chain state.

High-throughput scenarios (such as 100,000 ERC-20 transfers or swaps per second) generate a large volume of state changes. For example, 100,000 ERC-20 transfers per second require roughly 152.6 Mbps of bandwidth, and 100,000 Uniswap swaps per second require roughly 476.1 Mbps.

Even if a node's network link is rated at 100 Mbps, full utilization cannot be guaranteed. Headroom must be reserved, and newly joining nodes must also be able to catch up.

Therefore, state sync has become an underestimated but actually serious bottleneck in performance optimization.
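The bandwidth figures above can be reproduced with simple arithmetic. They match exactly if one assumes about 200 bytes of state diff per ERC-20 transfer and about 624 bytes per Uniswap swap, and counts 1 Mbps as 2^20 bits per second. These per-transaction sizes are back-calculated assumptions, not numbers stated in the source. The optional compression ratio lets the same function apply the 19× figure cited in MegaETH's materials.

```python
def sync_bandwidth_mbps(tps, bytes_per_tx, compression_ratio=1.0):
    """Bandwidth needed to stream state diffs, in Mbps (1 Mbps taken as 2**20 bits/s)."""
    bits_per_second = tps * bytes_per_tx * 8 / compression_ratio
    return bits_per_second / 2**20

erc20 = sync_bandwidth_mbps(100_000, 200)                          # assumed 200 B per transfer
swaps = sync_bandwidth_mbps(100_000, 624)                          # assumed 624 B per swap
swaps_compressed = sync_bandwidth_mbps(100_000, 624, compression_ratio=19)
print(f"{erc20:.1f} {swaps:.1f} {swaps_compressed:.1f}")           # 152.6 476.1 25.1
```

Uncompressed swap traffic is well beyond a 100 Mbps link, while a 19× ratio brings it to roughly a quarter of that link.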

MegaETH's Solutions

Node Specialization: MegaETH classifies nodes into Sequencer, Replica nodes, Full nodes, and Provers. Replica nodes do not re-execute all transactions but instead "receive state diffs" and apply them, thereby reducing their synchronization burden.

State Diffs Instead of Full Re-execution: Replica nodes receive state changes (state diffs) directly from the sequencer, allowing them to update their local state without re-executing each transaction. This significantly reduces the computational load and bandwidth required during synchronization.

Compressed State Diff Data + Dedicated Synchronization Protocol: To handle workloads of 100,000 transactions per second, MegaETH compresses state diffs (a compression ratio of 19× is cited) to reduce synchronization bandwidth requirements.

Efficient P2P Protocol and Data Availability Layer: MegaETH utilizes a dedicated peer-to-peer (P2P) network to distribute state changes and delegates data availability (DA) to EigenDA/EigenLayer, thereby improving synchronization efficiency while ensuring security.
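The replica-node path described above can be sketched as follows. The sequencer ships a compressed state diff, and the replica decompresses it and overwrites the affected keys without re-executing any transaction. Here `zlib` and JSON stand in for MegaETH's actual encoding, which the source does not describe. The key naming and the "None means deleted" convention are also assumptions. A real replica would additionally check the resulting state against proofs from the provers, which this sketch omits.

```python
import json
import zlib

def encode_diff(diff: dict) -> bytes:
    """Sequencer side: serialize a state diff and compress it (zlib as a stand-in)."""
    return zlib.compress(json.dumps(diff, sort_keys=True).encode())

def apply_diff(state: dict, payload: bytes) -> dict:
    """Replica side: decompress and apply the diff; no transaction is re-executed.

    A value of None marks a deleted key.
    """
    diff = json.loads(zlib.decompress(payload))
    new_state = dict(state)
    for key, value in diff.items():
        if value is None:
            new_state.pop(key, None)
        else:
            new_state[key] = value
    return new_state

state = {"bal:alice": 100, "bal:bob": 5, "tmp": 1}  # hypothetical keys
payload = encode_diff({"bal:alice": 90, "bal:bob": 15, "tmp": None})
new_state = apply_diff(state, payload)
```

Applying a diff costs one write per changed key regardless of how much computation the original transactions required, which is why replicas can run on modest hardware.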

3. Bottlenecks in State Root Updates + MegaETH's Solutions

Bottleneck Review

Blockchains (e.g., Ethereum) use data structures such as the Merkle Patricia Trie (MPT) to commit to their state via a state root.

Updating the state root typically means that after modifying leaf nodes, all intermediate nodes along the path to the root need to be updated, resulting in a large amount of random disk I/O.

Experiments indicate that in the Reth client, this overhead is nearly 10 times that of transaction execution overhead.

For high update rate scenarios (e.g., needing to update hundreds of thousands of key-value pairs per second), even with caching optimizations, the I/O demands far exceed the capabilities of ordinary SSDs.
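To see why state root updates generate so much I/O, the sketch counts the internal nodes that must be rehashed when leaves change. It uses a binary Merkle tree, which is a simplification: the MPT is hexary and has extension nodes, but the path-to-root effect is the same. Every touched node is a potential random read and write if it is not cached. The tree depth and the number of updates are illustrative values.

```python
import random

def internal_nodes_touched(depth, updated_leaves):
    """Distinct internal nodes to rehash in a binary Merkle tree with 2**depth leaves.

    Heap layout: root = 1, children of k are 2k and 2k+1, leaf i sits at 2**depth + i.
    """
    n = 1 << depth
    touched = set()
    for leaf_index in updated_leaves:
        node = (n + leaf_index) // 2  # parent of the leaf
        while node >= 1:
            touched.add(node)
            node //= 2
    return len(touched)

rng = random.Random(0)
single = internal_nodes_touched(20, [12345])                             # one leaf: 20 nodes
batch = internal_nodes_touched(20, rng.sample(range(1 << 20), 1000))
print(single, batch)
```

One update rewrites a full root path. For 1,000 scattered updates, only the nodes near the root are shared, so the work stays close to 1,000 times the per-update cost. That is why random updates at hundreds of thousands of keys per second overwhelm ordinary SSDs.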

MegaETH's Solutions

Custom New State Trie Structure: According to its official website, MegaETH uses a newly designed state trie structure to reduce the cost of state root updates.

In-memory State + Large Capacity RAM: Sequencer nodes place the entire state in memory (instead of frequently reading from disk), significantly reducing state access latency and I/O costs.

Optimized Backend Storage Layer (Write-Optimized Storage Backend): To cope with high write rates, MegaETH has also optimized the storage backend (e.g., addressing write amplification and single write lock issues in MDBX) to enhance write performance and reduce latency.

Parallel Execution + JIT/AOT Compilation: Although this primarily belongs to execution layer optimizations, it also indirectly alleviates the pressure on state root updates (as some logic executes faster and state changes are processed more quickly). MegaETH mentions using JIT to compile EVM bytecode into machine code and adopting a "two-stage parallel execution" strategy.

4. Bottlenecks in Block Gas Limit + MegaETH's Solutions

Bottleneck Review

The block gas limit serves as a "throttle" in the consensus mechanism. It specifies the maximum amount of gas a single block may consume, ensuring that any node can process the block within the block time.

Even if the execution engine speeds up by 10×, if the gas limit remains set at a low level, the overall throughput of the chain is still constrained.

Raising the gas limit requires caution, as the worst-case scenarios (long dependency chains, low parallelism contracts, varying node performance) must be considered rather than relying solely on average performance.

While parallel EVM and JIT compilation bring acceleration, they are limited in practice: for example, the median parallelism is less than 2, and JIT acceleration in production environments is also limited (~2×).
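The constraint above can be written as a one-line sizing rule. The gas limit must not exceed what the slowest node class can process within one block time, minus some reserved headroom. This sketch uses the roughly 100 MGas/s Reth live-sync figure quoted earlier as an illustrative worst-case throughput. The 50% headroom is a hypothetical safety margin.

```python
def safe_gas_limit(worst_case_mgas_per_s, block_time_ms, headroom=0.5):
    """Largest per-block gas limit the slowest node can still process in time,
    keeping a fraction of capacity (headroom) free for catch-up and load spikes."""
    gas_per_ms = worst_case_mgas_per_s * 1_000_000 / 1_000
    return int(gas_per_ms * block_time_ms * (1 - headroom))

limit_10ms = safe_gas_limit(100, 10)                # 10 ms blocks, 50% headroom
limit_no_margin = safe_gas_limit(100, 10, headroom=0.0)
```

With 10 ms blocks, a 100 MGas/s worst case caps each block at 1 MGas even with no margin. The cap rises only if the worst-case node gets faster or the requirements on that node are relaxed.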

MegaETH's Solutions

Node Specialization + High-Spec Sequencer: MegaETH uses a single high-performance sequencer to produce blocks, eliminating traditional multi-node consensus delays. Because block production no longer waits on every node, there is in principle less need for a low gas limit to ensure that all nodes keep pace.

Separation of Block Structure and Data Availability Layer: MegaETH uses a separate data availability (DA) layer, such as EigenDA, to decouple execution from data publication, so the gas limit is less constrained by the traditional L1 model. Efficiently publishing state diffs, execution results, proofs, and similar data to the DA layer opens the way to high throughput and high-frequency block production.

Redesigning Pricing Models and Parallel Execution Mechanisms: MegaETH's documentation notes that although the gas limit is a traditional mechanism, its architecture allows a higher "internal" block gas limit on sequencer nodes. Combined with JIT compilation, parallel execution, and in-memory state, which reduce per-transaction resource consumption, this significantly raises execution efficiency per unit of gas.

Compressed State Diffs + Lightweight Replica Nodes: Faster synchronization and more efficient data propagation lower the processing capacity required of nodes (and thus the minimum requirements for sustaining a given gas limit). Nodes can therefore run more lightly, so throughput can be raised safely without raising the barrier to decentralized participation.
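A state diff records only the keys a block changed, so a replica can reach the sequencer's state by applying diffs instead of re-executing transactions. This minimal sketch assumes state is a flat key-value map and uses `None` to mark deleted keys; MegaETH's actual diff format and compression scheme are not described in this article, so zlib over JSON is a stand-in, and all function names are hypothetical.

```python
import json
import zlib
from typing import Dict, Optional

State = Dict[str, int]
Diff = Dict[str, Optional[int]]  # None marks a deleted key


def compute_diff(before: State, after: State) -> Diff:
    """Sequencer side: keep only keys that were added, changed, or removed."""
    diff: Diff = {k: v for k, v in after.items() if before.get(k) != v}
    diff.update({k: None for k in before if k not in after})
    return diff


def encode_diff(diff: Diff) -> bytes:
    """Serialize deterministically and compress before publishing (e.g., to a DA layer)."""
    return zlib.compress(json.dumps(diff, sort_keys=True).encode())


def decode_diff(blob: bytes) -> Diff:
    return json.loads(zlib.decompress(blob).decode())


def apply_diff(state: State, diff: Diff) -> State:
    """Replica side: update state without re-executing any transactions."""
    new_state = dict(state)
    for key, value in diff.items():
        if value is None:
            new_state.pop(key, None)
        else:
            new_state[key] = value
    return new_state
```

For example, a block that changes two of 10,000 accounts, deletes one, and creates one yields a four-entry diff; a replica that decodes and applies it ends up with exactly the sequencer's post-block state, while only a few dozen compressed bytes travel over the network.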

Tron Comments

Advantages:

MegaETH aims to be a "real-time blockchain." Through architectural innovations such as node specialization, in-memory state, parallel execution, and state diff synchronization, it significantly improves EVM execution performance and responsiveness, achieving millisecond-level block production and high throughput (up to Web2 levels). Its design philosophy of "starting from hardware limits" lets the system stay Ethereum-compatible while greatly reducing latency and resource waste, making it suitable for applications with strict real-time requirements (such as blockchain games, AI, and financial order matching).

Disadvantages:

This extreme optimization introduces some degree of centralization (e.g., the single-sequencer design), a higher hardware threshold (high-end servers are required), and, in the ecosystem's early stages, challenges in decentralized validation, network fault tolerance, and economic incentive mechanisms. In addition, its high performance depends on a specialized architecture and external components (such as EigenDA), whose cross-chain compatibility and community adoption have yet to be proven.

1. Overall Market Performance

1.1. Price Trends of Spot BTC vs ETH

BTC

ETH

2. Public Chain Data

### 3. Macroeconomic Data Review and Key Data Releases for Next Week

On employment, November ADP private payrolls grew far less than expected, and JOLTS job openings fell to a near three-year low, indicating that companies continue to scale back hiring; weekly initial jobless claims rebounded slightly, further confirming that the labor market is shifting from "tight" to "weak." On growth, the second estimate of Q3 GDP, delayed by the government shutdown, was released with a downward revision to the growth rate, weak business investment momentum, a declining inventory contribution, and no significant improvement in manufacturing demand.

Important data to be released this week:

December 11: U.S. Federal Reserve interest rate decision (upper bound of the target range, as of December 10)

### 4. Regulatory Policies

United States: Key Legislation Enters the Final Countdown

- Core legislation nears a vote: a bill that may classify Bitcoin and Ethereum as "commodities" regulated primarily by the U.S. Commodity Futures Trading Commission (CFTC) is expected to go to a vote in the Senate Banking Committee in late December.

- Regulators' agenda is set: the U.S. Securities and Exchange Commission (SEC) plans to hold a "crypto roundtable" on December 16 to discuss trading rules. The SEC also intends to launch a new "crypto innovation exemption" program in January 2026 to give compliant companies clearer operating guidelines.

South Korea: Stablecoin Legislation Facing Deadline

- Legislative pressure: South Korea's ruling party has issued a "final ultimatum" on the stalled stablecoin regulatory bill, requiring the relevant agencies to submit and process it by December 10. Financial regulators and the Bank of Korea still disagree over who should lead issuance of a Korean won stablecoin.

Russia: Considering Easing Cryptocurrency Trading Restrictions

- Policy shift signaled: the Central Bank of Russia is considering lifting its strict restrictions on cryptocurrency trading, primarily to ease the difficulties Russians face in making cross-border transactions under international sanctions.

Europe: Digital Euro Project Steadily Advancing

- Pilot Phase Moving to Decision Stage: The European Central Bank (ECB) has confirmed that after successfully completing pilots with commercial banks and payment providers, the digital euro project will enter the decision stage on whether to officially launch. Current testing focuses on privacy features, offline payment capabilities, and interoperability within the Eurozone.

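Disclaimer: This article represents only the author's personal views and does not represent the position or views of this platform. It is provided for information sharing only and does not constitute investment advice to anyone. Any dispute between users and the author is unrelated to this platform. If any article or image published on this page involves infringement, please send proof of the relevant rights and proof of identity by email to support@aicoin.com, and the platform's staff will review it.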