As the towers rise higher, the decision-makers behind the scenes shrink further, and the centralization of AI brings numerous hidden dangers. How can the crowds gathered in the square avoid the shadows cast by the towers?

Written by: Coinspire

TL;DR:

Web3 projects with AI concepts are becoming targets for capital in both primary and secondary markets.

The opportunities for Web3 in the AI industry lie in using distributed incentives to coordinate potential long-tail supply across data, storage, and computation, while also establishing a decentralized market for open-source models and AI Agents.

The main application of AI in the Web3 industry is in on-chain finance (crypto payments, trading, data analysis) and assisting development.

The utility of AI+Web3 is reflected in their complementarity: Web3 is expected to counteract AI centralization, while AI is expected to help Web3 break out of its niche.

Introduction

In the past two years, the development of AI has accelerated as if a button has been pressed. The butterfly effect initiated by ChatGPT has not only opened a new world of generative artificial intelligence but has also stirred up currents in Web3 on the other side.

With the backing of AI concepts, financing in the crypto market has rebounded noticeably from its earlier slowdown. Media statistics indicate that in the first half of 2024 alone, 64 Web3+AI projects completed financing, the largest being the AI-based operating system Zyber365, which raised $100 million in its Series A round.

The secondary market is even more prosperous. Data from the crypto aggregation site CoinGecko shows that in just over a year, the total market value of the AI sector has reached $48.5 billion, with a 24-hour trading volume close to $8.6 billion. The lift from advances in mainstream AI technology is evident: after the release of OpenAI's Sora text-to-video model, the average price in the AI sector rose by 151%. The AI effect has also spread to Meme coins, one of crypto's capital-absorbing segments: GOAT, the first AI Agent concept MemeCoin, quickly became popular and reached a valuation of $1.4 billion, igniting the AI Meme craze.

Research and discussion around AI+Web3 are also heating up. From AI+DePIN to AI Memecoin and now to AI Agent and AI DAO, FOMO sentiment can hardly keep up with the speed of narrative rotation.

AI+Web3, this term combination filled with hot money, trends, and future fantasies, is inevitably seen as a marriage arranged by capital. It seems difficult to discern whether beneath this splendid robe lies the domain of speculators or the eve of a dawn explosion.

To answer this question, each side needs to ask itself two things: will it become better with the other, and can it benefit from the other's model? In this article, standing on the shoulders of predecessors, we attempt to examine this pattern: how can Web3 play a role at each layer of the AI technology stack, and what new vitality can AI bring to Web3?

What Opportunities Does Web3 Have Under the AI Stack?

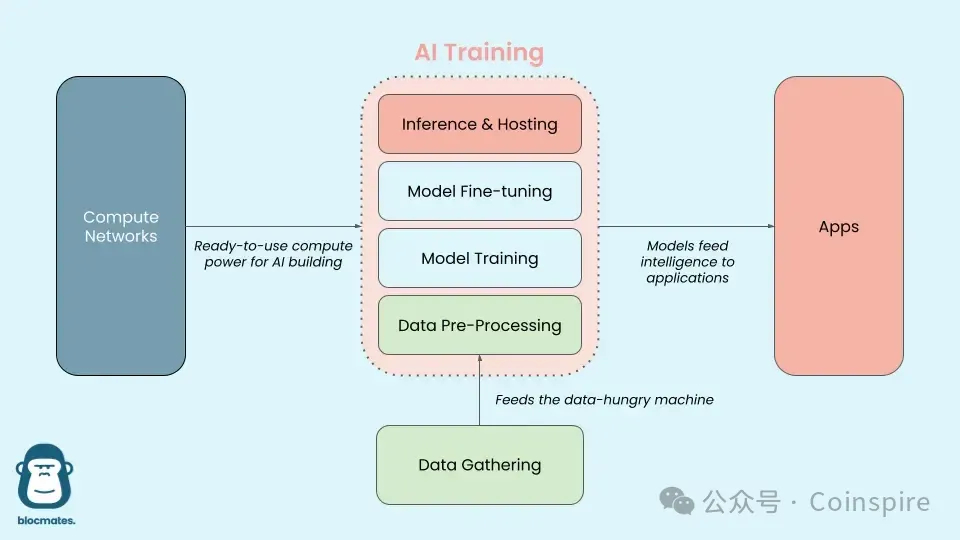

Before delving into this topic, we need to understand the technology stack of AI large models:

Image Source: Delphi Digital

To put the entire process in simpler terms: the "large model" is like the human brain. In the early stages, this brain belongs to a newborn baby, which needs to observe and absorb a vast amount of external information to understand the world; this is the "data collection" phase. Since computers do not possess human-like visual and auditory senses, before training, the large-scale unlabelled information from the outside world needs to be transformed into a format that computers can understand and use through "preprocessing."

After inputting data, AI constructs a model with understanding and predictive capabilities through "training," which can be seen as the process of a baby gradually understanding and learning about the outside world. The model's parameters are like the language abilities that the baby adjusts during the learning process. When the learned content begins to specialize, or when feedback is received from communication with others and corrections are made, it enters the "fine-tuning" phase of the large model.

As the child grows and learns to speak, they can understand meanings and express their feelings and thoughts in new conversations. This stage is similar to the "inference" of the AI large model, where the model can predict and analyze new language and text inputs. A baby expresses feelings, describes objects, and solves various problems through language abilities, which is also akin to how the AI large model applies its inference phase to various specific tasks after training, such as image classification and speech recognition.

AI Agents are closer to the next form of large models—capable of independently executing tasks and pursuing complex goals, possessing not only thinking abilities but also memory, planning, and the ability to interact with the world using tools.

Currently, in response to AI's pain points at each layer of the stack, Web3 has begun to form a multi-layered, interconnected ecosystem that covers every stage of the AI model process.

I. Base Layer: Airbnb for Computing Power and Data

Computing Power

Currently, one of the highest costs for AI is the computing power and energy required to train models and run inference on them.

For example, Meta's Llama 3 requires 16,000 H100 GPUs produced by NVIDIA (a top graphics processing unit designed for AI and high-performance computing workloads) and takes 30 days to complete training. The 80GB version of the H100 is priced between $30,000 and $40,000, which implies an investment of $400 million to $700 million in computing hardware (GPU + network chips), while monthly training consumes 1.6 billion kilowatt-hours, with energy expenses nearing $20 million per month.

To alleviate the pressure on AI computing power, Web3 has intersected with AI in the field of DePIN (Decentralized Physical Infrastructure Network). Currently, the DePin Ninja data site lists over 1,400 projects, including representative GPU computing power sharing projects such as io.net, Aethir, Akash, and Render Network.

The main logic is this: through an online marketplace for buyers and sellers similar to Uber or Airbnb, platforms allow individuals or entities with idle GPU resources to contribute their computing power in a permissionless, decentralized manner. This raises the utilization of underused GPUs and gives end users more cost-effective and efficient computing resources. At the same time, a staking mechanism ensures that resource providers face corresponding penalties if they violate quality-control mechanisms or cause network interruptions.
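As a quick sanity check on the hardware figure, the quoted GPU count can be multiplied by the quoted unit price range. The sketch below uses only the numbers stated above; the function and variable names are illustrative, and the result covers GPUs alone, not the network chips also included in the $400 million to $700 million estimate.

```python
# Figures quoted in the text; names are illustrative only.
GPU_COUNT = 16_000
PRICE_LOW_USD = 30_000
PRICE_HIGH_USD = 40_000

def gpu_outlay(count: int, unit_price: int) -> int:
    """Total spend on GPUs alone, before networking hardware."""
    return count * unit_price

low = gpu_outlay(GPU_COUNT, PRICE_LOW_USD)
high = gpu_outlay(GPU_COUNT, PRICE_HIGH_USD)
print(f"GPU-only outlay: ${low / 1e6:.0f}M to ${high / 1e6:.0f}M")
```

The GPU-only range of $480 million to $640 million sits inside the quoted total, which is what one would expect once networking hardware and price variation are taken into account.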

Its characteristics include:

Aggregating Idle GPU Resources: The supply side mainly consists of excess computing power from third-party independent small and medium-sized data centers and crypto mining farms, along with mining hardware from networks whose consensus mechanism is PoS, such as Filecoin and ETH miners. There are also projects aimed at lowering the entry barrier for devices, such as exolab, which uses local devices like MacBooks, iPhones, and iPads to build a computing power network for running large model inference.

Addressing the Long Tail Market for AI Computing Power:

a. From a technical perspective, decentralized computing power markets are better suited to inference. Training relies more on the data processing capabilities of ultra-large GPU clusters, while inference requires relatively less GPU performance; Aethir, for example, focuses on low-latency rendering work and AI inference applications.

b. From a demand perspective, small and medium-sized buyers of computing power will not train their own large models. They will only optimize and fine-tune around a few leading large models, and these scenarios are naturally suited to distributed idle computing resources.

Decentralized Ownership: The technological significance of blockchain lies in that resource owners always retain control over their resources, allowing for flexible adjustments based on demand while earning revenue.

Data

Data is the foundation of AI. Without data, computation is as useless as floating duckweed, and the relationship between data and models is like the saying "Garbage in, Garbage out." The quantity and quality of input data determine the final output quality of the model. For current AI model training, data determines the model's language ability, understanding ability, even values, and human-like performance. Currently, the data demand dilemma for AI mainly focuses on the following four aspects:

Data Hunger: AI model training relies on a large amount of data input. Public reports suggest that OpenAI's GPT-4 reached a trillion-level parameter count, and a model of that scale needs a correspondingly large training corpus.

Data Quality: As AI integrates with various industries, the timeliness, diversity, specialization of vertical data, and the incorporation of emerging data sources such as social media sentiment impose new requirements on its quality.

Privacy and Compliance Issues: Currently, various countries and enterprises are gradually recognizing the importance of high-quality datasets and are imposing restrictions on data scraping.

High Data Processing Costs: Data volumes are large and processing is complex. Public information indicates that over 30% of AI companies' R&D costs are spent on basic data collection and processing.

Currently, Web3's solutions are reflected in the following four aspects:

1. Data Collection: Freely scrapable real-world data is rapidly running out, and AI companies' expenditures on data are increasing year by year. Yet this spending has not flowed back to the true contributors of the data; platforms have captured all of the value it creates. Reddit, for example, generated a total of $203 million in revenue through data licensing agreements with AI companies.

Allowing the true contributors to also participate in the value creation brought by data, and obtaining more private and valuable data from users in a low-cost manner through distributed networks and incentive mechanisms, is the vision of Web3.

For example, Grass is a decentralized data layer and network where users can run Grass nodes, contribute idle bandwidth, and relay traffic to capture real-time data from the entire internet in exchange for token rewards.

Vana introduces a unique Data Liquidity Pool (DLP) concept, allowing users to upload their private data (such as shopping records, browsing habits, and social media activity) to specific DLPs and flexibly choose whether to authorize specific third parties to use it.

In PublicAI, users contribute data by posting on X with #AI or #Web3 as classification tags and mentioning @PublicAI.

2. Data Preprocessing: In the data processing of AI, the collected data is often noisy and contains errors, so it must be cleaned and transformed into a usable format before training the model, involving repetitive tasks such as standardization, filtering, and handling missing values. This stage is one of the few manual processes in the AI industry, giving rise to the profession of data annotators. As the model's requirements for data quality increase, the threshold for data annotators also rises, making this task naturally suitable for Web3's decentralized incentive mechanisms.

Currently, Grass and OpenLayer are both considering incorporating data annotation as a key step.

Synesis has proposed the concept of "Train2earn," emphasizing data quality, where users can earn rewards by providing annotated data, comments, or other forms of input.

The data annotation project Sapien gamifies the labeling tasks and allows users to stake points to earn more points.
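The cleaning tasks named under Data Preprocessing (filtering, handling missing values, standardization) can be sketched in a few lines. This is a minimal illustration using only the Python standard library; the record layout (`text` and `score` fields) and the `clean` function are assumptions made for the example, not the pipeline of any project mentioned above.

```python
from statistics import mean, pstdev

def clean(rows: list[dict]) -> list[dict]:
    """Filter, impute, and standardize hypothetical {'text', 'score'} records."""
    # Filtering: drop records whose text is missing or blank.
    kept = [r for r in rows if (r.get("text") or "").strip()]
    if not kept:
        return []
    # Missing values: fill absent scores with the mean of the known ones.
    known = [r["score"] for r in kept if r.get("score") is not None]
    fill = mean(known) if known else 0.0
    scores = [fill if r.get("score") is None else r["score"] for r in kept]
    # Standardization: normalize text, rescale scores to zero mean and unit variance.
    mu = mean(scores)
    sigma = pstdev(scores) or 1.0  # avoid dividing by zero when all scores match
    return [
        {"text": r["text"].strip().lower(), "score": (s - mu) / sigma}
        for r, s in zip(kept, scores)
    ]

sample = [
    {"text": " Hello ", "score": 1.0},
    {"text": "", "score": 5.0},        # filtered out: empty text
    {"text": "world", "score": None},  # imputed with the mean of known scores
    {"text": "x", "score": 3.0},
]
cleaned = clean(sample)
```

Steps like these are mechanical; the human judgment that annotation projects reward lies in labeling, which this sketch does not attempt.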

3. Data Privacy and Security: It is important to clarify that data privacy and security are two different concepts. Data privacy involves the handling of sensitive data, while data security protects data information from unauthorized access, destruction, and theft. Thus, the advantages of Web3 privacy technologies and potential application scenarios are reflected in two aspects: (1) Training with sensitive data; (2) Data collaboration: multiple data owners can jointly participate in AI training without sharing their raw data.

Currently, common privacy technologies in Web3 include:

Trusted Execution Environment (TEE), such as Super Protocol;

Fully Homomorphic Encryption (FHE), such as BasedAI, Fhenix.io, or Inco Network;

Zero-Knowledge Technology (zk), such as Reclaim Protocol, which uses zkTLS technology to generate zero-knowledge proofs for HTTPS traffic, allowing users to securely import activities, reputation, and identity data from external websites without exposing sensitive information.

However, this field is still in its early stages, and most projects are still exploratory. A current dilemma is the high computational costs, with some examples being:

The zkML framework EZKL takes about 80 minutes to generate a proof for a 1M-nanoGPT model.

According to Modulus Labs, the overhead of zkML is over 1000 times higher than pure computation.
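To make the data collaboration scenario concrete, here is a toy sketch of several owners computing a joint total without any of them revealing its raw value. It uses additive secret sharing, a basic building block of secure multi-party computation, which is a different technique from the TEE, FHE, and zk approaches listed above and is swapped in here only because it fits in a few lines. The modulus and function names are assumptions for illustration.

```python
import secrets

MODULUS = 2**61 - 1  # arbitrary large modulus chosen for this sketch

def share(value: int, n_parties: int) -> list[int]:
    """Split value into n random shares that sum to value modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares

def secure_sum(private_values: list[int]) -> int:
    """Return the total of all inputs; only random-looking shares are exchanged."""
    n = len(private_values)
    # Each owner splits its value and hands one share to every party.
    distributed = [share(v, n) for v in private_values]
    # Each party adds up the shares it received, one from each owner.
    partials = [sum(column) % MODULUS for column in zip(*distributed)]
    # Combining the partial sums reveals the total and nothing else.
    return sum(partials) % MODULUS

total = secure_sum([12, 30, 8])
```

Real collaborative training exchanges model updates rather than single integers, but the principle is the same: the aggregate is learned while individual contributions stay hidden.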

4. Data Storage: Once data is available, a place is needed to store it on-chain, along with the LLM generated from that data. Data availability (DA) is the core issue: before the Ethereum Danksharding upgrade, Ethereum's throughput was 0.08MB per second. Meanwhile, AI model training and real-time inference typically require a data throughput of 50 to 100GB per second. A gap of this magnitude leaves existing on-chain solutions struggling when faced with "resource-intensive AI applications."

0g.AI is a representative project in this category. It is a decentralized storage solution designed for high-performance AI needs. Its key features include high performance and scalability: it supports fast uploads and downloads of large datasets through advanced sharding and erasure coding technologies, with data transfer speeds approaching 5GB per second.
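For readers unfamiliar with erasure coding, the idea is to store redundant shards so that lost data can be rebuilt from what remains. The sketch below shows the simplest possible case, a single XOR parity shard that tolerates the loss of any one shard. The source does not describe the scheme 0g.AI actually uses, so this is purely illustrative; production systems typically use stronger codes that survive multiple losses.

```python
from functools import reduce

def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Bytewise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))

def encode(data: bytes, k: int) -> list[bytes]:
    """Split data into k equal shards (zero-padded) plus one XOR parity shard."""
    size = -(-len(data) // k)  # ceiling division
    padded = data.ljust(size * k, b"\0")
    shards = [padded[i * size:(i + 1) * size] for i in range(k)]
    return shards + [reduce(xor_bytes, shards)]

def recover(shards: list) -> list:
    """Rebuild the single missing shard (marked None) by XOR-ing the others."""
    missing = shards.index(None)
    present = [s for s in shards if s is not None]
    repaired = list(shards)
    repaired[missing] = reduce(xor_bytes, present)
    return repaired

original = encode(b"decentralized storage", 4)
damaged = list(original)
damaged[2] = None  # simulate one storage node going offline
restored = recover(damaged)
```

Because XOR is its own inverse, the same `recover` call works whether the lost shard held data or parity.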

II. Middleware: Model Training and Inference

Decentralized Market for Open Source Models

The debate over whether AI models should be closed-source or open-source has never gone away. The collective innovation that open source brings is an advantage closed-source models cannot match; however, without a profit model, how can open-source models keep developers motivated? This is a direction worth pondering. Baidu founder Robin Li (Li Yanhong) asserted in April this year that "open-source models will increasingly fall behind."

In response, Web3 proposes the possibility of a decentralized open-source model market, where the models themselves are tokenized, retaining a certain proportion of tokens for the team, and directing part of the future revenue streams of the model to token holders.

For example, the Bittensor protocol establishes a P2P market for open-source models, composed of dozens of "subnets," where resource providers (computing, data collection/storage, machine learning talent) compete to meet the goals of specific subnet owners. Subnets can interact with and learn from one another, resulting in more powerful intelligence. Rewards are distributed by community voting and further allocated based on competitive performance within each subnet.

ORA introduces the concept of Initial Model Offering (IMO), tokenizing AI models that can be bought, sold, and developed through a decentralized network.

Sentient, a decentralized AGI platform, rewards contributors for collaborating on, building, replicating, and scaling AI models.

Spectral Nova focuses on the creation and application of AI and ML models.
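The revenue-sharing idea behind tokenized models (a team allocation plus a share of future revenue routed to token holders) can be sketched as a pro-rata split. The function name, the basis-point parameter, and the sample balances are all hypothetical; none of the projects above is known from this article to use this exact formula.

```python
def distribute_revenue(
    revenue: int, holdings: dict[str, int], holder_share_bps: int
) -> dict[str, int]:
    """Split holder_share_bps (basis points) of revenue pro rata by token balance.

    Integer division is used throughout, so small rounding dust may remain
    undistributed, as is common in on-chain accounting.
    """
    pool = revenue * holder_share_bps // 10_000
    supply = sum(holdings.values())
    return {holder: pool * balance // supply for holder, balance in holdings.items()}

# Hypothetical cap table: the team retains 20% of the supply.
holdings = {"team": 200, "alice": 500, "bob": 300}
payouts = distribute_revenue(revenue=10_000, holdings=holdings, holder_share_bps=3_000)
```

Here 30% of the model's revenue is shared, and each holder, including the team through its retained tokens, receives a cut proportional to its balance.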

Verifiable Inference

To address the "black box" problem in AI inference processes, the standard Web3 solution is to have multiple validators repeat the same operations and compare results. However, due to the current shortage of high-end "Nvidia chips," this approach faces the obvious challenge of high AI inference costs.
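The redundancy approach can be sketched as follows: every validator re-runs the same inference, and the result is accepted only if a strict majority produce the same output. The validator functions here are stand-ins for real model runs, and the sketch assumes inference is deterministic, which real deployments have to engineer for.

```python
import hashlib
from collections import Counter
from typing import Callable, Optional

def digest(output: str) -> str:
    """Hash an output so validators can compare results cheaply."""
    return hashlib.sha256(output.encode()).hexdigest()

def majority_result(prompt: str, validators: list[Callable[[str], str]]) -> Optional[str]:
    """Return the output a strict majority of validators agree on, else None."""
    outputs = [run(prompt) for run in validators]
    votes = Counter(digest(o) for o in outputs)
    winner, count = votes.most_common(1)[0]
    if count * 2 <= len(outputs):
        return None  # no strict majority, so the result is rejected
    return next(o for o in outputs if digest(o) == winner)

# Stand-in "models": two honest validators and one that returns a forged answer.
honest = lambda p: p.upper()
forger = lambda p: "forged"
accepted = majority_result("gm", [honest, honest, forger])
```

The cost problem is visible in the first line of `majority_result`: with N validators, the same inference is paid for N times.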

A more promising solution is to execute ZK proofs for off-chain AI inference calculations, allowing permissionless verification of AI model computations on-chain. A zero-knowledge proof is a cryptographic protocol in which one party (the prover) can prove to another party (the verifier) that a given statement is true without revealing any information beyond the fact that it is true. Applied here, it means cryptographically proving on-chain that off-chain computations have been completed correctly (e.g., that the dataset has not been tampered with) while keeping all data confidential.

The main advantages include:

Scalability: Zero-knowledge proofs can quickly confirm a large number of off-chain computations. Even as the number of transactions increases, a single zero-knowledge proof can verify all transactions.

Privacy Protection: Data and AI model details remain private, while all parties can verify that the data and models have not been compromised.

No Trust Required: Verification of computations can be done without relying on centralized parties.

Web2 Integration: Web2 is, by definition, off-chain, so verifiable inference can help bring its datasets and AI computations on-chain. This helps increase the adoption of Web3.

Currently, Web3's technologies for verifiable inference include:
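The prover and verifier roles in a zero-knowledge proof can be illustrated with a classic textbook protocol, the Schnorr proof of knowledge, made non-interactive with the Fiat-Shamir heuristic. This is a deliberately tiny sketch: it proves knowledge of a secret exponent, not the correctness of an AI inference, and the group parameters are toy values far too small for real use. All names and parameters are chosen for this example.

```python
import hashlib
import secrets

# Toy group parameters for readability only; insecure at this size.
P = 2039  # safe prime, P = 2 * Q + 1
Q = 1019  # prime order of the subgroup generated by G
G = 4     # generator of that subgroup

def challenge(y: int, t: int) -> int:
    """Fiat-Shamir: derive the verifier's challenge by hashing the transcript."""
    data = f"{G}|{y}|{t}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q

def prove(x: int) -> tuple[int, int, int]:
    """Prove knowledge of x such that y = G^x mod P, without revealing x."""
    y = pow(G, x, P)                   # public value
    r = secrets.randbelow(Q - 1) + 1   # one-time secret nonce
    t = pow(G, r, P)                   # commitment
    s = (r + challenge(y, t) * x) % Q  # response
    return y, t, s

def verify(y: int, t: int, s: int) -> bool:
    """Check G^s == t * y^c (mod P) using only public values."""
    return pow(G, s, P) == (t * pow(y, challenge(y, t), P)) % P

y, t, s = prove(x=123)
proof_ok = verify(y, t, s)
```

The verifier learns that the prover knows `x` but never sees it. zkML systems apply the same principle to a far larger statement, namely that a specific model produced a specific output, which is where the heavy proving overhead cited earlier comes from.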

zkML: Combines zero-knowledge proofs with machine learning to ensure the privacy and confidentiality of data and models, allowing computations to be verified without revealing certain underlying attributes. For instance, Modulus Labs has released a ZK prover built for AI, based on zkML, to check on-chain whether AI providers have executed their algorithms correctly, although its current clients are primarily on-chain DApps.

opML: Uses the optimistic rollup principle, improving the scalability and efficiency of ML computations by verifying results only when a dispute arises. In this model, only a small portion of the results generated by a validator needs to be verified, but the economic penalty (slashing) is set high enough to raise the cost of cheating.

TeeML: Uses trusted execution environments to securely execute ML computations, protecting data and models from tampering and unauthorized access.
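The optimistic approach behind opML can be sketched as a claim that is accepted by default and re-executed only if someone disputes it, with the submitter's stake forfeited when the claim turns out to be wrong. The `Claim` structure, the `dispute` function, and the profitability check are assumptions made for illustration and do not reflect any specific protocol's rules.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Claim:
    submitter: str
    input_value: int
    claimed_output: int
    stake: int
    slashed: bool = False

def dispute(claim: Claim, compute: Callable[[int], int]) -> int:
    """Re-execute the computation; slash the stake if the claim was wrong.

    Returns the amount forfeited by the submitter (0 when the claim holds).
    """
    if compute(claim.input_value) == claim.claimed_output:
        return 0
    claim.slashed = True
    forfeited, claim.stake = claim.stake, 0
    return forfeited

def cheating_is_unprofitable(gain: float, stake: float, p_dispute: float) -> bool:
    """Expected loss from slashing must exceed the expected gain from cheating."""
    return p_dispute * stake > (1 - p_dispute) * gain

model = lambda x: x * 2  # stand-in for an ML inference
honest = Claim("alice", 21, 42, stake=100)
cheater = Claim("bob", 21, 43, stake=100)
honest_penalty = dispute(honest, model)
cheater_penalty = dispute(cheater, model)
```

The second function captures why only a fraction of results needs checking: as long as the stake is large relative to the potential gain, even a modest chance of being disputed makes cheating a losing bet.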

III. Application Layer: AI Agent

The current development of AI has clearly shifted the focus from model capabilities to AI Agents. Tech companies like OpenAI, AI large model unicorn Anthropic, and Microsoft are all turning to develop AI Agents, attempting to break the current technological plateau of LLMs.

OpenAI defines AI Agents as systems driven by LLMs that can autonomously perceive, plan, remember, and use tools, and that can automate the execution of complex tasks. When AI transitions from being a tool that is used to an entity that can use tools, it becomes an AI Agent. This is also why AI Agents can become the most ideal intelligent assistants for humans.

What can Web3 bring to Agents?

1. Decentralization

The decentralized nature of Web3 can make Agent systems more distributed and autonomous, establishing incentive and penalty mechanisms for stakers and delegators through PoS, DPoS, and other mechanisms, promoting the democratization of Agent systems. GaiaNet, Theoriq, and HajimeAI have all made attempts in this area.

2. Cold Start

The development and iteration of AI Agents often require substantial financial support, and Web3 can help promising AI Agent projects secure early financing and cold starts.

Virtuals Protocol launched the AI Agent creation and token issuance platform fun.virtuals, where any user can deploy an AI Agent with one click and achieve 100% fair issuance of AI Agent tokens.

Spectral proposed a product concept supporting the issuance of on-chain AI Agent assets: issuing tokens through IAO (Initial Agent Offering), allowing AI Agents to directly obtain funding from investors while becoming part of DAO governance, providing investors with opportunities to participate in project development and share future profits.

How Does AI Empower Web3?

The impact of AI on Web3 projects is evident; it benefits blockchain technology by optimizing on-chain operations (such as smart contract execution, liquidity optimization, and AI-driven governance decisions). At the same time, it can provide better data-driven insights, enhance on-chain security, and lay the foundation for new Web3-based applications.

I. AI and On-Chain Finance

AI and Crypto Economy

On August 31, Coinbase CEO Brian Armstrong announced the first AI-to-AI crypto transaction on the Base network, stating that AI Agents can now use USDC to transact with humans, merchants, or other AIs on Base, with these transactions being instant, global, and free.

Beyond payments, Virtuals Protocol's Luna has also demonstrated for the first time how AI Agents can autonomously execute on-chain transactions. As intelligent entities capable of perceiving their environment, making decisions, and taking actions, AI Agents are seen as the future of on-chain finance. Currently, the potential scenarios for AI Agents are reflected in the following points:

1. Information Collection and Prediction: Helping investors collect information such as exchange announcements, project public information, panic sentiment, and public opinion risks, while analyzing and assessing asset fundamentals and market conditions in real-time to predict trends and risks.

2. Asset Management: Providing users with suitable investment targets, optimizing asset portfolios, and automatically executing trades.

3. Financial Experience: Assisting investors in selecting the fastest on-chain trading methods, automating manual operations such as cross-chain transactions and adjusting gas fees, thereby lowering the threshold and cost of on-chain financial activities.

Imagine a scenario where you convey the following instruction to the AI Agent: "I have 1000 USDT, please help me find the highest yielding combination with a lock-up period of no more than one week." The AI Agent would provide the following suggestion: "I recommend an initial allocation of 50% in A, 20% in B, 20% in X, and 10% in Y. I will monitor interest rates and observe changes in risk levels, and rebalance as necessary." Additionally, finding potential airdrop projects and identifying Memecoin projects with signs of popularity are also tasks that AI Agents may accomplish in the future.

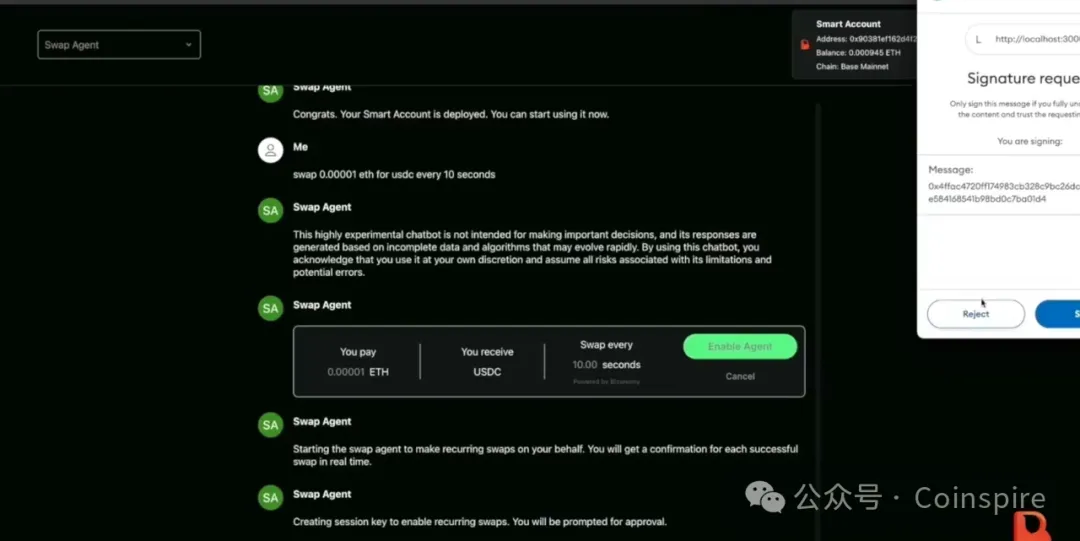

Image source: Biconomy
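The arithmetic behind the 1000 USDT scenario is simple enough to sketch: turn the suggested percentages into amounts, then compute the trades needed to return to those percentages after prices drift. The asset labels follow the example above, while the function names and the drifted balances are hypothetical.

```python
def allocate(total: float, weights_pct: dict[str, int]) -> dict[str, float]:
    """Convert percentage weights into amounts of the quote asset."""
    if sum(weights_pct.values()) != 100:
        raise ValueError("weights must sum to 100")
    return {name: total * pct / 100 for name, pct in weights_pct.items()}

def rebalance_orders(
    current: dict[str, float], weights_pct: dict[str, int]
) -> dict[str, float]:
    """Amount to buy (+) or sell (-) per asset to restore the target weights."""
    target = allocate(sum(current.values()), weights_pct)
    return {name: target[name] - current.get(name, 0.0) for name in weights_pct}

weights = {"A": 50, "B": 20, "X": 20, "Y": 10}
initial = allocate(1000, weights)

# Hypothetical balances after a week of yield and price movement.
drifted = {"A": 560.0, "B": 190.0, "X": 200.0, "Y": 100.0}
orders = rebalance_orders(drifted, weights)
```

An actual agent would add what this sketch leaves out: fetching live yields, checking lock-up terms, and signing the resulting transactions.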

Currently, AI Agent wallets like Bitte and AI interaction protocols like Wayfinder are making such attempts. Both aim to integrate OpenAI's model API, allowing users to command the Agent to perform various on-chain operations through a chat window interface similar to ChatGPT. For example, Wayfinder's first prototype, released in April this year, demonstrated four basic operations: swap, send, bridge, and stake on the mainnets of Base, Polygon, and Ethereum.

Currently, the decentralized Agent platform Morpheus also supports the development of such Agents, and Biconomy has demonstrated that an AI Agent can swap ETH for USDC without requiring full wallet permissions.

AI and On-Chain Transaction Security

In the Web3 world, on-chain transaction security is crucial. AI technology can be used to enhance the security and privacy protection of on-chain transactions, with potential scenarios including:

Transaction Monitoring: Real-time data technology monitors abnormal trading activities, providing real-time alert infrastructure for users and platforms.

Risk Analysis: Helping platforms analyze customer trading behavior data to assess their risk levels.

For example, the Web3 security platform SeQure utilizes AI to detect and prevent malicious attacks, fraud, and data breaches, providing real-time monitoring and alert mechanisms to ensure the security and stability of on-chain transactions. Similar security tools include AI-powered Sentinel.
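As a rough illustration of transaction monitoring, the sketch below flags transfers whose size deviates sharply from the rest of a batch. It uses a plain z-score from the standard library as a stand-in for the machine learning models such platforms would actually run; it is not the method of SeQure or any other tool named above, and the threshold is an arbitrary choice.

```python
from statistics import mean, pstdev

def flag_anomalies(amounts: list[float], threshold: float = 3.0) -> list[int]:
    """Return the indexes of transfers whose z-score exceeds the threshold."""
    if len(amounts) < 2:
        return []
    mu, sigma = mean(amounts), pstdev(amounts)
    if sigma == 0:
        return []  # all transfers are identical, so nothing stands out
    return [i for i, a in enumerate(amounts) if abs(a - mu) / sigma > threshold]

# Twenty routine transfers followed by one unusually large one.
transfers = [10.0] * 20 + [1000.0]
alerts = flag_anomalies(transfers)
```

A real-time system would run a check like this over a sliding window and feed the flagged indexes into an alerting pipeline.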

II. AI and On-Chain Infrastructure

AI and On-Chain Data

AI technology plays an important role in on-chain data collection and analysis, such as:

Web3 Analytics: An AI-based analytics platform that uses machine learning and data mining algorithms to collect, process, and analyze on-chain data.

MinMax AI: It provides AI-based on-chain data analysis tools to help users discover potential market opportunities and trends.

Kaito: A Web3 search platform based on LLM that serves as a search engine.

Followin: Integrates ChatGPT to collect and present relevant information scattered across different websites and social platforms.

Another application scenario is oracles, where AI can obtain prices from multiple sources to provide accurate pricing data. For instance, Upshot uses AI to assess the volatile prices of NFTs, providing NFT prices with a percentage error of 3-10% through over a hundred million evaluations per hour.
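The multi-source idea behind oracles can be shown with the simplest robust aggregator, the median, together with the percentage-error metric used to describe accuracy. The source names and prices below are invented for the example, and a median is a deliberately basic stand-in for the AI pricing models the text refers to.

```python
from statistics import median

def aggregate_price(quotes: dict[str, float]) -> float:
    """Combine quotes from several sources; the median resists a single outlier."""
    return median(quotes.values())

def pct_error(estimate: float, actual: float) -> float:
    """Absolute percentage error of an estimate against a realized price."""
    return abs(estimate - actual) * 100 / actual

# Hypothetical quotes: the third source is badly off.
quotes = {"source_a": 100.0, "source_b": 102.0, "source_c": 250.0}
price = aggregate_price(quotes)
error = pct_error(price, actual=100.0)
```

With these inputs the outlier is ignored and the estimate lands within a few percent of the realized price, which is the kind of figure the 3-10% error range describes.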

AI and Development & Auditing

Recently, a Web2 AI code editor called Cursor has attracted considerable attention in the developer community. On its platform, users only need to describe in natural language, and Cursor can automatically generate corresponding HTML, CSS, and JavaScript code, greatly simplifying the software development process. This logic also applies to improving the development efficiency of Web3.

Currently, deploying smart contracts and DApps on public chains typically requires adherence to specific programming languages such as Solidity, Rust, Move, etc. The vision of new programming languages is to expand the design space of decentralized blockchains, making them more suitable for DApp development. However, with a significant shortage of Web3 developers, developer education remains a more pressing issue.

Currently, the scenarios in which AI can assist Web3 development include automated code generation, smart contract verification and testing, DApp deployment and maintenance, intelligent code completion, and AI dialogue that answers development-related questions. AI assistance not only improves development efficiency and accuracy but also lowers the programming barrier, allowing non-programmers to turn their ideas into practical applications and bringing new vitality to the development of decentralized technologies.

Currently, the most eye-catching examples are one-click token launch platforms such as Clanker, an AI-driven "Token Bot" designed for rapid DIY token deployment. You only need to tag Clanker on a client of the SocialFi protocol Farcaster, such as Warpcast or Supercast, and tell it your token idea, and it will launch the token for you on the Base public chain.

There are also contract development platforms, such as Spectral, which provide one-click generation and deployment of smart contracts to lower the barriers to Web3 development, allowing even novice users to compile and deploy smart contracts.

In terms of auditing, the Web3 auditing platform Fuzzland uses AI to help auditors check for code vulnerabilities. It provides natural language explanations of formal specifications and contract code, along with example code, to support auditors' expertise and help developers understand potential issues in the code.

III. AI and New Narratives in Web3

The rise of generative AI brings new possibilities for new narratives in Web3.

NFTs: AI injects creativity into generative NFTs, allowing for the creation of various unique and diverse artworks and characters through AI technology. These generative NFTs can serve as characters, props, or scene elements in games, virtual worlds, or the metaverse. For example, Binance's Bicasso allows users to generate NFTs by uploading images and inputting keywords for AI computation. Similar projects include Solvo, Nicho, IgmnAI, and CharacterGPT.

GameFi: With AI's natural language generation, image generation, and intelligent NPC capabilities, GameFi is expected to improve efficiency and innovation in game content production. For instance, Binaryx's first blockchain game AI Hero allows players to explore different storyline options randomly through AI; similarly, there is the virtual companion game Sleepless AI, based on AIGC and LLM, where players can unlock personalized gameplay through different interactions.

DAOs: Currently, AI is also envisioned to be applied in DAOs to help track community interactions, record contributions, reward the most contributing members, and facilitate proxy voting, etc. For example, ai16z utilizes AI Agents to collect market information on-chain and off-chain, analyze community consensus, and make investment decisions based on suggestions from DAO members.

The significance of the AI + Web3 combination: Towers and Squares

In the heart of Florence, Italy, lies the most important political activity venue and gathering place for citizens and tourists—the central square, where a 95-meter-high town hall tower stands. The vertical and horizontal visual contrast between the tower and the square creates a dramatic aesthetic effect. Harvard University history professor Niall Ferguson was inspired by this, drawing parallels in his book "The Square and the Tower" to the world history of networks and hierarchies, both of which rise and fall over time.

This brilliant metaphor is equally relevant to the current relationship between AI and Web3. From the long-term, non-linear historical relationship between the two, it can be seen that the square is more conducive to generating new and creative things than the tower, yet the tower still possesses its legitimacy and strong vitality.

With tech companies' ability to concentrate energy, computing power, and data, AI has unleashed unprecedented imagination. Major tech firms are investing heavily and entering the field, with successive chatbots and "underlying large models" such as GPT-4 and GPT-4o making their appearances, along with the emergence of automatic programming robots (Devin) and Sora, which has preliminary capabilities to simulate the real physical world. The imagination of AI is being infinitely amplified.

At the same time, AI is essentially a scaled and centralized industry. This technological revolution pushes tech companies, which have gradually gained structural dominance since the "Internet era," to an even narrower peak. The vast power, monopolized cash flow, and the massive datasets required to dominate the intelligent era create higher barriers.

As the tower rises higher, the decision-makers behind the scenes shrink further. How can the crowds gathered in the square avoid the shadows cast by the tower? This is precisely the problem that Web3 hopes to solve.

Essentially, the inherent properties of blockchain enhance artificial intelligence systems and bring new possibilities, mainly:

"Code as Law" in the Age of Artificial Intelligence: achieving a transparent system that automatically executes rules through smart contracts and cryptographic verification, directing rewards to those closer to the goals.

Token Economy: creating and coordinating participant behaviors through token mechanisms, staking, slashing, token rewards, and penalties.

Decentralized Governance: prompting us to question the sources of information and encouraging a more critical and insightful approach to artificial intelligence technology, preventing bias, misinformation, and manipulation, ultimately fostering a more informed and empowered society.

The development of AI has also brought new vitality to Web3. Perhaps the impact of Web3 on AI will require time to prove, but the impact of AI on Web3 is immediate: this is evident in both the frenzy of memes and the way AI Agents help lower the usage threshold for on-chain applications.

When Web3 is defined as a self-indulgent activity for a small group of people, and is caught in doubts about replicating traditional industries, the addition of AI brings a foreseeable future: a more stable and larger Web2 user base, and more innovative business models and services.

We live in a world where "towers and squares" coexist. Although AI and Web3 have different timelines and starting points, their ultimate goal is how to make machines better serve humanity. No one can define a rushing river; we look forward to seeing the future of AI + Web3.
