Author: Zuo Ye

Fashion is cyclical, and so is Web3.

Near has been reborn as an AI public chain: one of its founders, a co-author of the Transformer paper, attended NVIDIA's GTC conference and discussed the future of generative AI with Jensen Huang, NVIDIA's leather-jacketed CEO. Solana, home to io.net, Bittensor, and Render Network, has successfully repositioned itself as an AI concept chain. There are also up-and-coming GPU computing players such as Akash, GAIMIN, and Gensyn.

Looking beyond the rise in coin prices, we can spot several interesting facts:

- The competition for GPU computing power has moved onto decentralized platforms. The more computing power a network aggregates, the more it can accomplish, and CPUs, storage, and GPUs are being bundled together.

- As the paradigm shifts from cloud-based to decentralized computing, demand is also shifting from AI training to inference. On-chain models are no longer just empty talk.

- The underlying hardware and operating logic of the internet architecture have not fundamentally changed; the decentralized computing power layer mainly serves to incentivize networking.

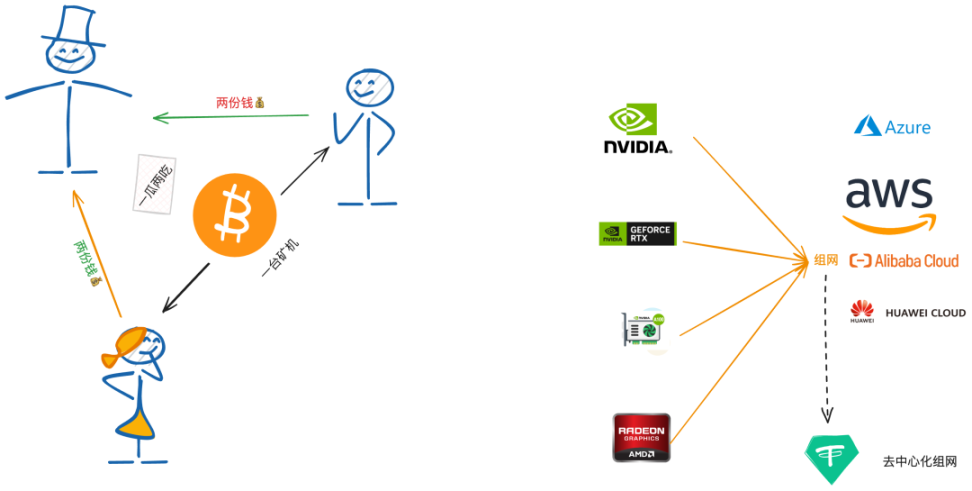

First, a conceptual distinction. In the Web3 world, "cloud computing power" dates back to the cloud mining era, when it meant selling the hashrate of mining machines so that users could avoid the huge expense of buying machines themselves. However, vendors often oversold, for example selling the combined hashrate of 100 mining machines to 105 people to earn excess profits, until the term became synonymous with fraud.
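To make the overselling arithmetic concrete, here is a minimal Python sketch of the 100-machine, 105-buyer example. The class, its fields, and the per-machine hashrate are hypothetical and purely illustrative; it assumes each contract is sold as one machine's worth of hashrate and that the real output is split evenly across all contracts.

```python
from dataclasses import dataclass


@dataclass
class CloudMiningOffer:
    machines_owned: int          # physical machines the vendor actually runs
    contracts_sold: int          # hypothetical: one contract = one machine's worth
    hashrate_per_machine: float  # illustrative unit, e.g. TH/s

    def real_hashrate(self) -> float:
        return self.machines_owned * self.hashrate_per_machine

    def promised_hashrate(self) -> float:
        return self.contracts_sold * self.hashrate_per_machine

    def delivered_fraction(self) -> float:
        # Share of the promised hashrate each buyer actually receives when
        # the real output is split evenly across all contracts sold.
        return min(1.0, self.real_hashrate() / self.promised_hashrate())

    def phantom_contracts(self) -> int:
        # Contracts backed by no machine at all: the vendor's excess profit.
        return max(0, self.contracts_sold - self.machines_owned)


offer = CloudMiningOffer(machines_owned=100, contracts_sold=105, hashrate_per_machine=100.0)
print(f"{offer.delivered_fraction():.3f}")  # 0.952
print(offer.phantom_contracts())            # 5
```

Each buyer silently loses roughly 5% of what they paid for, which is why the oversold model is so hard for an individual customer to detect.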

In this article, cloud computing power refers specifically to the computing resources of GPU-based cloud vendors. The question is whether decentralized computing power platforms are mere fronts for cloud vendors or their next iteration.

Traditional cloud vendors and blockchains are more deeply intertwined than we might imagine. Public chain nodes, development, and day-to-day storage largely run on AWS, Alibaba Cloud, and Huawei Cloud, sparing teams expensive investment in physical hardware. The resulting problems should not be ignored, however: in extreme cases, pulling a single network cable can bring a public chain down, which seriously violates the spirit of decentralization.

Decentralized computing power platforms, on the other hand, either build their own "data centers" to keep the network stable or build incentive networks outright, such as io.net's strategy of using airdrops to grow its GPU count, similar to Filecoin rewarding storage with FIL tokens. The starting point is not to meet usage demand but to give the token utility. One piece of evidence is that major companies, individuals, and academic institutions rarely use these platforms for ML training, inference, or graphics rendering, which leads to serious waste of resources.

However, amid soaring coin prices and FOMO sentiment, every accusation that decentralized computing power is just a cloud computing power scam has evaporated.

Two types of ☁️ computing power, same name, same operation?

Inference and FLOPS, quantifying GPU computing power

The computing power requirements of AI models are evolving from training to inference.

Take OpenAI's Sora as an example. Although it is also built on Transformer technology, its parameter count is estimated to be in the billions, far below GPT-4's trillion-level scale. Yann LeCun even suggested it has only 3 billion parameters, which would mean a relatively low training cost. This makes sense: the smaller the parameter count, the proportionally lower the computing resources required.
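The "proportionally lower" claim can be illustrated with a widely used rule of thumb for dense transformers: training costs roughly 6 FLOPs per parameter per training token. This is only a rough proxy here (Sora is a diffusion model, and its training data is not public); the token count and the trillion-parameter comparison point below are assumptions chosen for illustration, not published figures.

```python
def approx_training_flops(params: float, tokens: float) -> float:
    # Rule of thumb for dense transformers: ~6 FLOPs per parameter per
    # training token (forward + backward pass). An estimate, not a measurement.
    return 6.0 * params * tokens


TOKENS = 1e12  # hypothetical training-set size, held equal for both models

small = approx_training_flops(3e9, TOKENS)   # the "only 3 billion" estimate
large = approx_training_flops(1e12, TOKENS)  # a trillion-parameter-class model

print(f"small: {small:.1e} FLOPs, large: {large:.1e} FLOPs, ratio: {large / small:.0f}x")
```

Because the estimate is linear in the parameter count, holding the data fixed means a model with 1/333 of the parameters needs roughly 1/333 of the training compute.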

Conversely, Sora may require stronger "inference" capabilities. Inference here can be understood as the ability to generate a specific video from an instruction. Video has long been considered creative content, so the AI needs a stronger capacity for understanding. Training is comparatively simpler: it can be understood as summarizing patterns from existing content, which mostly takes a large amount of computing power.

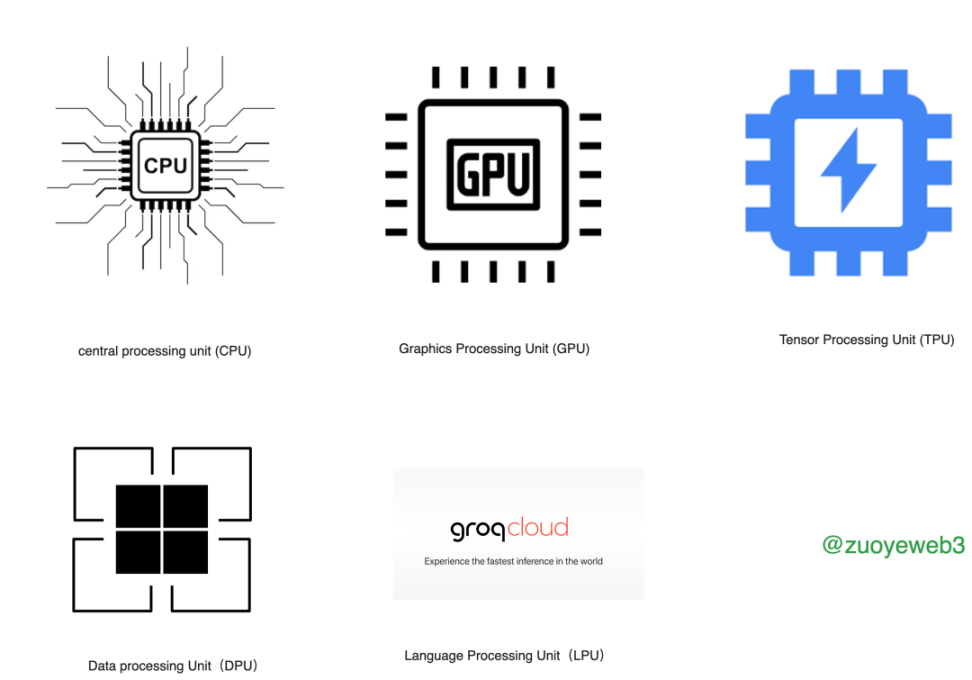

In the past, AI computing power was used mainly for training, with only a small share going to inference, and nearly all of it was supplied by NVIDIA products. The arrival of Groq's LPU (Language Processing Unit) began to change that: better inference capabilities, combined with slimmer large models and improved accuracy, are gradually becoming mainstream.

It's worth touching on how GPUs are classified. People often say that gaming GPUs saved AI, because strong demand for high-performance GPUs in the gaming market covered the R&D costs. The RTX 4090, for example, can be used both for gaming and for AI model training ("alchemy"). However, gaming cards and compute cards will gradually decouple. The process resembles the evolution of Bitcoin mining hardware from personal computers to specialized mining machines, where the chips followed the sequence CPU, GPU, FPGA, then ASIC.

LLM dedicated card under development…

As AI technology matures, especially along the LLM route, there will be more attempts similar to TPUs, DPUs, and LPUs. Of course, NVIDIA's GPUs remain the mainstream product, and the discussion below is based on GPUs; LPUs are more of a supplement to GPUs, and full replacement will take time.

The decentralized computing power competition is not about snatching GPU procurement channels, but about trying to establish new profit models.

By now, NVIDIA has become the protagonist. It holds about 80% of the graphics card market, so the rivalry between NVIDIA and AMD cards exists only in theory; in practice, buyers vote honestly with their wallets.

This dominant position has set off a frenzy of competition for GPUs, from the consumer-grade RTX 4090 to the enterprise-grade A100/H100, with cloud vendors as the main buyers. Meanwhile, AI companies such as Google, Meta, Tesla, and OpenAI have developed or plan to develop their own chips, and Chinese companies have turned to domestic suppliers such as Huawei. The GPU race remains extremely crowded.

What traditional cloud vendors actually sell is computing power and storage, so they feel less urgency to use their own chips than AI companies do. Decentralized computing power projects, meanwhile, are still in the first half of the game: competing with traditional cloud vendors for computing business by emphasizing low cost and easy access. But the likelihood of Web3-native AI chips appearing is low, much as with Bitcoin mining.

As an aside, since Ethereum switched to PoS, there has been less and less specialized hardware in the crypto market. The markets for devices such as Saga phones, ZK hardware accelerators, and DePIN hardware are too small. Hopefully, decentralized computing power can blaze a distinctive Web3 path for specialized AI computing cards.

Decentralized computing power: the cloud's next step, or a supplement?

The industry usually compares GPU computing power in FLOPS (floating point operations per second), the most commonly used measure of computing speed; whatever a GPU's specifications or an application's parallel optimizations, the comparison ultimately comes down to FLOPS.
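The FLOPS figure on a spec sheet is usually a theoretical peak: cores × clock speed × floating-point operations each core completes per cycle, where a fused multiply-add (FMA) counts as two operations. The helper below is a hypothetical sketch of that formula; the inputs roughly match the RTX 4090's published FP32 specification and should be treated as illustrative.

```python
def peak_flops(shader_cores: int, boost_clock_hz: float,
               flops_per_core_per_cycle: int = 2) -> float:
    # Theoretical peak = cores x clock x FLOPs per core per cycle.
    # The default of 2 assumes one FMA (multiply + add) per core per cycle.
    return shader_cores * boost_clock_hz * flops_per_core_per_cycle


# Illustrative inputs approximating the RTX 4090's FP32 spec.
rtx4090_fp32 = peak_flops(shader_cores=16_384, boost_clock_hz=2.52e9)
print(f"{rtx4090_fp32 / 1e12:.1f} TFLOPS")
```

Real workloads reach only a fraction of this peak, which is one reason raw FLOPS comparisons between networks should be read with caution.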

It took about half a century to move from local computing to the cloud, and the idea of distributed computing has existed since the birth of computers. With the rise of LLMs, combining decentralization with computing power is no longer as elusive as it once was. I will try to summarize as many existing decentralized computing power projects as possible, focusing on two points:

- The quantity of hardware such as GPUs, as a gauge of computing speed. Following Moore's Law, newer GPUs are more powerful, and for a given specification, more units means more total computing power.

- The organization of the incentive layer, a distinctive feature of Web3, including dual tokens, governance functions, and airdrop incentives. This helps in understanding each project's long-term value rather than fixating on short-term coin prices; in the long run, what matters is how many GPUs a project can own or schedule.
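Both effects in the first point (newer cards and more cards) can be captured by a naive aggregate: the sum of count × per-card peak over a network's inventory. The sketch below uses two hypothetical networks; the counts are invented, and the per-card FP32 TFLOPS values are rough, illustrative figures.

```python
from typing import Dict, Tuple

# Hypothetical inventory: GPU model -> (count, peak FP32 TFLOPS per card).
Fleet = Dict[str, Tuple[int, float]]


def total_tflops(fleet: Fleet) -> float:
    # Naive aggregate: sum of count x per-card peak. Ignores interconnect,
    # utilization, and whether a workload can actually span every card.
    return sum(count * tflops for count, tflops in fleet.values())


network_a: Fleet = {"RTX 3090": (1_000, 35.6), "RTX 4090": (500, 82.6)}
network_b: Fleet = {"RTX 4090": (1_000, 82.6)}

print(total_tflops(network_a), total_tflops(network_b))
```

Network B has fewer cards but more total compute because its cards are newer, while doubling the count of identical cards simply doubles the total. The naive sum is an upper bound; it says nothing about how well the cards cooperate on one job.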

From this perspective, decentralized computing power still follows the DePIN route of "existing hardware + incentive network." In other words, the underlying internet architecture stays in place, and the decentralized computing power layer is a way to monetize "virtualized hardware," with permissionless access as its focus; real networking still requires cooperation at the hardware level.

Computing power needs to be decentralized, while GPUs need to be centralized

Using the blockchain trilemma framework, the security of decentralized computing power needs no special consideration. The main concerns are decentralization and scalability; scalability is the purpose of networking GPUs, and it is what currently matters most in AI.

Start from a paradox: if decentralized computing power projects are to succeed, their networks need as many GPUs as possible. The reason is simple: the parameter counts of large models like GPT have exploded, and without GPUs at a certain scale, effective training or inference is impossible.
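A quick memory calculation shows why scale is unavoidable: before any computation happens, the model's weights alone must fit in GPU memory. The helper below is a hypothetical sketch that computes only this lower bound. It uses a GPT-3-scale model (175B parameters) stored in FP16 (2 bytes per parameter) as an example, and the card sizes (80 GB data-center cards, 24 GB consumer cards) are assumptions for illustration.

```python
import math


def min_gpus_for_weights(params: float, bytes_per_param: int, gpu_mem_gb: float) -> int:
    # Lower bound only: counts the raw weights. Training also needs gradients,
    # optimizer state, and activations, which multiply the requirement.
    weight_gb = params * bytes_per_param / 1e9
    return math.ceil(weight_gb / gpu_mem_gb)


print(min_gpus_for_weights(175e9, 2, 80))  # 5  (350 GB of weights on 80 GB cards)
print(min_gpus_for_weights(175e9, 2, 24))  # 15 (the same weights on 24 GB cards)
```

On consumer cards, which make up much of a decentralized network's supply, the minimum count triples, and every one of those cards must stay online and in sync for the model to serve a single request.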

Of course, compared with the absolute control of cloud vendors, decentralized computing power projects can at least, at this stage, set up mechanisms such as permissionless access and free migration of GPU resources. For the sake of capital efficiency, however, it is uncertain whether something similar to mining pools will form in the future.
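The pull toward mining-pool-like structures comes from payout smoothing: small GPU owners pool their hardware and share rewards proportionally, trading a fee for steadier income. The sketch below shows a simple proportional (PROP-style) split by contributed GPU-hours. It is purely hypothetical; none of the projects discussed is confirmed to use such a scheme, and the member names, fee, and reward are invented.

```python
from typing import Dict


def proportional_payout(contributions: Dict[str, float], reward: float,
                        pool_fee: float = 0.02) -> Dict[str, float]:
    # Split a reward in proportion to each member's contributed GPU-hours,
    # after the pool operator takes a flat fee (as in a PROP-style mining pool).
    if not 0 <= pool_fee < 1:
        raise ValueError("pool_fee must be in [0, 1)")
    total = sum(contributions.values())
    if total <= 0:
        raise ValueError("no contributions to reward")
    distributable = reward * (1 - pool_fee)
    return {member: distributable * hours / total
            for member, hours in contributions.items()}


payouts = proportional_payout({"alice": 60.0, "bob": 30.0, "carol": 10.0}, reward=1000.0)
print(payouts)
```

The operator's fee is exactly where capital efficiency pushes toward concentration: the larger the pool, the steadier its payouts, and the more GPUs it attracts.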

In terms of scalability, GPUs can be used not only for AI but also for cloud computing and rendering. For example, Render Network focuses on rendering work, while Bittensor focuses on providing model training. Put more simply, scalability here means the range of use cases and purposes.

So, in addition to GPUs and incentive networks, two more dimensions can be added, decentralization and scalability, giving a four-dimensional comparison. Note that this is not a technical comparison and is purely for fun.

| Project | GPU Quantity | Incentive Network | Decentralization | Scalability |
|---------|--------------|-------------------|------------------|-------------|
| Gensyn | - | Token and verification mechanism to be announced after launch | To be evaluated after launch | AI training and inference verification mechanism |
| Render Network | 12,000 GPUs + 503 CPUs | Tokens + additional incentive fund | Proposals + open source | Rendering + AI training |
| Akash | 20,000 CPUs + 262 GPUs | Tokens + staking system | Tokens fully circulated | AI inference |
| io.net | 180,000 GPUs + 28,000 CPUs | GPUs exchanged for airdrop (not yet issued) | - | AI inference + training |

Among these projects, Render Network is rather special. It is essentially a distributed rendering network, and its relationship with AI is indirect. In AI training and inference, every step depends tightly on the others and must stay consistent throughout, whether the algorithm is SGD (stochastic gradient descent) or backpropagation. Rendering does not require this level of consistency and typically splits work into independent tasks for distribution.
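The difference is one of data dependency, which a toy contrast makes visible. In the hypothetical sketch below, `render_frame` stands in for rendering: each frame depends only on its own index, so frames can be farmed out to any worker in any order. `sgd` fits y = w·x with plain stochastic gradient descent, where every update reads the weight produced by the previous one, so the steps form a strict chain. Both functions are illustrative stand-ins, not real rendering or training code.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple


def render_frame(frame: int) -> str:
    # Stand-in for rendering: the output depends only on this frame's index,
    # so frames can be distributed to any worker in any order.
    return f"frame-{frame:03d}.png"


def render_all(frames: List[int], workers: int = 4) -> List[str]:
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(render_frame, frames))  # map preserves input order


def sgd(w: float, data: List[Tuple[float, float]], lr: float = 0.01,
        epochs: int = 200) -> float:
    # Fit y = w * x on squared error. Each update needs the weight from the
    # previous update, so the steps cannot be handed out independently.
    for _ in range(epochs):
        for x, y in data:
            grad = 2 * (w * x - y) * x  # derivative of (w*x - y)^2 w.r.t. w
            w -= lr * grad
    return w


print(render_all(list(range(3))))
print(round(sgd(0.0, [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]), 3))
```

Real distributed training does parallelize (for example, by splitting each batch across GPUs), but the workers must synchronize gradients at every step, which is exactly the tight coupling that slow, unreliable links between strangers' GPUs handle poorly.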

Its AI training capability comes mainly from serving as a plugin for io.net; either way, the GPUs are at work, so how they are used matters little. More forward-looking was its move to Solana while Solana was undervalued, which later proved a better fit for the high-performance demands of a rendering network.

Next is io.net, which follows an aggressive route of exchanging airdrops for GPUs. Its official website lists a whopping 180,000 GPUs, placing it in the top tier of decentralized computing power projects and far ahead of its competitors in scale. In terms of scalability, io.net focuses on AI inference, with AI training as a secondary use.

Strictly speaking, AI training is not well suited to distributed deployment. Even lightweight LLMs still have large absolute parameter counts, so centralized computing is more cost-effective, and where Web3 meets AI training, the focus is more on data privacy and encrypted computation, such as ZK and FHE techniques. AI inference, on the other hand, has great potential in Web3: it places relatively low demands on GPU performance and can tolerate some degree of loss. Moreover, from the user's perspective, the results of incentivizing AI inference are easier to observe.

Filecoin, another project that rewards mining with tokens, has also reached a GPU utilization agreement with io.net, under which Filecoin's 1,000 GPUs are used in conjunction with io.net: a collaboration between a predecessor and a successor. Best of luck to both.

Next is Gensyn, which has not yet launched. Because its network is still early in construction, it has not announced a GPU count. However, its main use case is AI training, and I personally expect it to need a large number of high-performance GPUs, at least more than Render Network. Compared with AI inference, AI training competes directly with cloud vendors and calls for more complex mechanism design.

Specifically, Gensyn needs to guarantee that model training is valid, and to improve training efficiency it relies heavily on off-chain computation. Its model verification and anti-cheating system therefore involves several roles:

- Submitters: Task initiators who ultimately pay the training costs.

- Solvers: Train models and provide proof of effectiveness.

- Verifiers: Verify model effectiveness.

- Whistleblowers: Check the work of verifiers.
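The interplay of solvers, verifiers, and whistleblowers can be sketched as a heavily simplified optimistic check. This is not Gensyn's actual protocol, which is described in its litepaper; every function here is hypothetical, "training" is replaced by a deterministic hash chain so that re-running it reproduces the result exactly, and the submitter's payment and any slashing are omitted.

```python
import hashlib
from dataclasses import dataclass


def train(task_seed: int, steps: int) -> str:
    # Stand-in for a deterministic training run: a hash chain whose final
    # digest plays the role of the trained weights.
    h = hashlib.sha256(str(task_seed).encode())
    for step in range(steps):
        h = hashlib.sha256(h.digest() + step.to_bytes(4, "big"))
    return h.hexdigest()


@dataclass
class Claim:
    task_seed: int
    steps: int
    result: str  # digest the solver says it produced


def solver(task_seed: int, steps: int, honest: bool = True) -> Claim:
    # A cheating solver skips the work and submits a fake result.
    result = train(task_seed, steps) if honest else "0" * 64
    return Claim(task_seed, steps, result)


def verifier(claim: Claim, honest: bool = True) -> bool:
    # An honest verifier re-runs the work; a lazy one approves blindly.
    if not honest:
        return True
    return train(claim.task_seed, claim.steps) == claim.result


def whistleblower(claim: Claim, verdict: bool) -> bool:
    # Re-checks independently; True means the verifier's verdict was wrong.
    return verdict != (train(claim.task_seed, claim.steps) == claim.result)


good = solver(7, 100)
bad = solver(7, 100, honest=False)
print(verifier(good), verifier(bad))                    # True False
print(whistleblower(bad, verifier(bad, honest=False)))  # True: lazy verifier caught
```

Even in this toy form, the cost of the design is visible: checking work means redoing some of it, and every extra layer of checkers adds its own overhead.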

Overall, the operation resembles PoW mining plus an optimistic proof mechanism, and the architecture is very complex. Moving computation off-chain may save costs, but the architectural complexity brings extra operating costs. At a time when decentralized computing power is focused mainly on AI inference, I wish Gensyn good luck here as well.

Finally, there's the veteran Akash, which started at roughly the same time as Render Network. Akash focused on decentralized CPUs, while Render Network initially focused on decentralized GPUs. Unexpectedly, after the AI boom, both moved into GPU + AI computing, with Akash concentrating more on inference.

The key to Akash's revival was recognizing the plight of mining farms after Ethereum's upgrade: idle GPUs no longer had to be sold second-hand to college students for personal use; they could now be put to work on AI. After all, it's all about contributing to human civilization.

One advantage of Akash is that its tokens are almost fully circulating: it is a very old project, and it actively adopts the staking system common in PoS. Still, however you look at it, the team seems rather laid-back and lacks io.net's youthful vigor.

There are also THETA for edge cloud computing, Phoenix with specialized solutions for AI computing power, and Bittensor and Ritual, old and new players in computing power. Space does not permit listing them all, especially since some do not disclose GPU counts or other parameters.

Conclusion

Looking back at the history of computing, every computing paradigm can be rebuilt in a decentralized version. The only regret is that these versions have had no impact on mainstream applications. For now, Web3 computing projects are mostly entertaining themselves within the industry: the founder of Near attended the GTC conference as a co-author of the Transformer paper, not as the founder of Near.

Even more sobering, the scale and players of today's cloud computing market are formidable. Can io.net replace AWS? With enough GPUs it is indeed possible, especially since AWS has long relied on open-source Redis as a foundational component.

In a sense, Web3 projects, overly concentrated in financial fields such as DeFi, have not managed to match the power of open source and decentralization. AI may be a key path into the mainstream market.

References:

https://wow.groq.com/lpu-inference-engine/

https://docs.gensyn.ai/litepaper

https://renderfoundation.com/whitepaper

https://blog.impossible.finance/aethir-research-report-2/

Coinbase Market Intelligence Report - March 6, 2024
