Article Source: Titan Media AGI

Author: Lin Zhijia

Image Source: Generated by Unlimited AI

Following the explosive popularity of Sora, released by the American company OpenAI, Chinese internet giant Alibaba Group is now stepping up its own efforts.

Titan Media AGI learned on February 28th that Alibaba Group's Institute of Intelligent Computing recently unveiled EMO, a new AI model that turns an image and audio into video. It is officially described as "an expressive audio-driven portrait video generation framework."

Reportedly, given just a single photo and any audio file, EMO can generate a video of the subject speaking or singing, producing smooth, dynamic clips up to about 1 minute and 30 seconds long. The facial expressions are highly accurate, and the output closely follows the input whatever the audio, tempo, or image.

For example, "Gao Qiqiang" from the TV series "Raging Fire" can deliver one of Luo Xiang's popular legal lectures; a photo of Cai Xukun, paired with someone else's audio, can "sing" a rap song with almost perfectly matching lip movements; and even the AI-generated woman in sunglasses on a Japanese street, from a Sora demo video recently released by OpenAI, can now not only speak but also sing a beautiful song.

Bilibili's "meme" videos are about to be replaced by AI.

The Alibaba research team stated that EMO can generate audio-driven videos with rich facial expressions and varied head poses, and that the duration of the output video is determined by the length of the input audio.

Beyond audio-driven portrait video generation itself, EMO's listed capabilities include expressive dynamic rendering, support for multiple head-turning poses, greater video dynamism and realism, support for multiple languages and portrait styles, synchronization with fast rhythms, and cross-actor performance transfer.

On the technical side, Alibaba researchers explained that the EMO framework uses an Audio2Video diffusion model to generate expressive portrait videos.

The technology mainly consists of three stages. The first is frame encoding, in which ReferenceNet extracts features from the reference image and the motion frames. The second is the diffusion process, in which a pre-trained audio encoder processes the audio into embeddings, and a facial region mask combined with multi-frame noise controls the generation of the facial images. The third is denoising, which is carried out by the main (backbone) network. Within the main network, two attention mechanisms are applied, reference attention and audio attention, which are crucial for preserving the character's identity and for modulating the character's movements, respectively. In addition, EMO's temporal modules operate along the time dimension and adjust the speed of motion.
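To make the role of the two attention mechanisms more concrete, here is a minimal toy sketch in plain Python. It is not EMO's actual code: the function names (`cross_attention`, `denoise_step`), the tensor sizes, the residual connections, and the order in which the two attentions are applied are all assumptions made for illustration, and learned projections, the face mask, and the temporal modules are omitted. It only shows the general idea that noisy frame features first attend to reference-image features (identity) and then to audio features (motion).

```python
import math
import random

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, context):
    """Scaled dot-product attention without learned projections:
    each query becomes a weighted average of the context vectors."""
    dim = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim)
                  for k in context]
        weights = softmax(scores)
        out.append([sum(w * v[i] for w, v in zip(weights, context))
                    for i in range(dim)])
    return out

def add(xs, ys):
    """Element-wise sum of two equally shaped lists of vectors."""
    return [[a + b for a, b in zip(x, y)] for x, y in zip(xs, ys)]

def denoise_step(latent, reference_feats, audio_feats):
    """Hypothetical backbone block (assumed structure, not EMO's):
    reference attention injects identity, audio attention injects motion."""
    latent = add(latent, cross_attention(latent, reference_feats))
    latent = add(latent, cross_attention(latent, audio_feats))
    return latent

rng = random.Random(0)

def rand_tokens(n, dim):
    """Random stand-ins for real feature tokens."""
    return [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n)]

latent = rand_tokens(4, 8)           # noisy frame tokens
reference_feats = rand_tokens(6, 8)  # stand-in for ReferenceNet output
audio_feats = rand_tokens(3, 8)      # stand-in for audio-encoder output

out = denoise_step(latent, reference_feats, audio_feats)
print(len(out), len(out[0]))  # 4 8
```

The output keeps the shape of the frame tokens; only their content is updated by the two context sources, which is why the same block can be conditioned on any reference image and any audio clip.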

Currently, the EMO framework is available on GitHub, and the related paper is also publicly available on arXiv.

GitHub: https://github.com/HumanAIGC/EMO

Paper: https://arxiv.org/abs/2402.17485

In fact, over the past year Alibaba has continued to step up its AI efforts. It has launched several large-model products, such as Tongyi Qianwen and Tongyi Wanxiang, that are positioned against OpenAI's offerings, as well as technologies such as Outfit Anyone and Animate Anyone, which are based on a dual-stream conditional diffusion model and support applications across multiple scenarios.

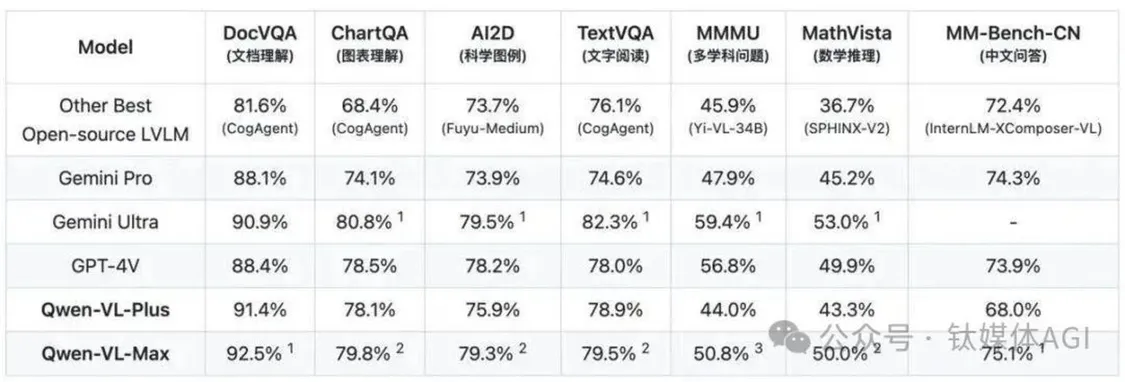

On January 26th of this year, following multiple rounds of iteration, Alibaba announced upgraded Plus and Max versions of its Qwen-VL model. They accept images and text as input and produce text, images, and detection boxes as output, giving large models a real ability to "see" the world.

Alibaba stated that, compared with the open-source version of Qwen-VL, the Plus and Max models reached levels comparable to Gemini Ultra and GPT-4V on multiple standard multimodal benchmarks, and significantly surpassed the previous best results among open-source models.

Titan Media AGI learned that Alibaba is currently supporting the development of applications built on generative AI, including robots, digital humans, and Agent-related technologies.

In addition, Alibaba is one of the major tech companies in China's open-source model field. It created and operates the Chinese open-source AI model community ModelScope ("Moda"), where model downloads have exceeded 100 million since its launch a year ago. Earlier, Alibaba also released a one-stop large-model service platform, Alibaba Cloud "Bailian."

Besides developing its own AI model products, Alibaba is also investing in a number of large-model companies.

Just this February, Alibaba led a new financing round of $1 billion for the domestic large-model team Moonshot AI, pushing the company's valuation as high as $2.5 billion and making it the largest single financing round for a Chinese AI startup.

Earlier, Alibaba also invested in multiple companies across the AI industry chain, such as Baichuan Intelligence and Zhipu AI, continuing to bet on the current AI boom. Its competitor Tencent has likewise invested in multiple companies over the past year, including Baichuan Intelligence, Zhipu AI, MiniMax, and Light Years Beyond.

According to incomplete statistics compiled by Titan Media AGI, Alibaba and Tencent have so far invested in more than 40 startups related to AI and digitization.

Undoubtedly, OpenAI currently dominates the industry in the United States and other regions around the world, but it does not operate in the Chinese market. As a result, neither OpenAI nor Microsoft will become the leader of China's large-model industry.

Today, Chinese tech giants such as Alibaba and Tencent are backing early-stage Chinese large-model startups through a variety of investment approaches, helping to advance the development of large models in China.

However, because technology stocks have kept falling in the secondary market, overall investment and financing in China's AI sector remains "lukewarm."

Data from research firm CB Insights shows that in 2023 there were approximately 232 investments in China's AI sector, a year-on-year decrease of 38%, with total financing of about $2 billion, down 70% from the previous year.

Goldman Sachs predicts that global investment in AI will reach approximately $200 billion by 2025.

Alibaba Group's newly appointed CEO Wu Yongming once stated that, in order to better serve more enterprises and AI developers, Alibaba insists on doing two things well. The first is to provide a stable and efficient AI infrastructure service system, especially powerful cloud computing capabilities, so as to build a solid foundation for training AI across the industry and using AI throughout society. The second is to create an open and prosperous AI ecosystem.

"In the foreseeable future, all the common product forms in our lives will change, and a more intelligent next generation of products will enter our lives. More small and medium-sized enterprises will use AI collaboration to flexibly replace some services that currently only large enterprises can provide. The way production, manufacturing, and circulation are organized and coordinated will also undergo fundamental changes. AI assistants will be everywhere, helping everyone in their work, life, and learning. Every company will also be equipped with AI assistants, just as assisted driving and autonomous driving have become standard equipment in today's intelligent cars," said Wu Yongming.
