Source: QbitAI (量子位)

Image source: Generated by Wujie AI

Who would have thought that, amid the storm of large models, J.A.R.V.I.S. from Iron Man would become the busiest "Marvel hero" (just kidding).

The reason is simple: the idea of a super assistant is so popular that everything from mobile phones to PCs to smart cockpits now invokes it.

Even the form of the hardware itself has changed as a result.

For example, the AI Pin, which has drawn attention in China and abroad, fully demonstrates the idea that "your next phone does not have to be a phone".

This small badge-like gadget, powered by a Qualcomm chip, has a built-in voice assistant based on large model technology.

Even without a screen or buttons, it relies on various sensors and an intelligent "brain" to help you make calls, write messages, send emails, and capture what is happening around you.

Currently, the company behind AI Pin, Humane, has raised $230 million in funding, with a latest valuation of $850 million.

In fact, whether it is the large-model assistants that now take up the most prominent slot in major smartphone makers' launch events, or more radical hardware innovations like the AI Pin, looking past the surface reveals the same core change:

With the rise of large models and AIGC technology, a revolution in interaction methods has irreversibly begun.

And the first wave of opportunities for innovation is appearing in smart terminals.

In the AIGC era, the interaction method has changed

Although the real "J.A.R.V.I.S." is still a long way off, large model technology has already changed interaction in the smart terminal industry in two ways:

The first change is in the interaction between humans and machines; the second is reflected in the interconnection between machines.

The revolution in human-machine interaction, which was sparked by ChatGPT, has received widespread attention from the tech community.

The reason is simple: the move from the command line, to the GUI (graphical user interface), to pure natural language interaction lowers the threshold for using the latest technology, which also means that all applications, and even devices, will be rebuilt.

Just as the mobile internet gave rise to phenomenal apps like TikTok, this "reconstruction" makes new era-defining killer apps, and even killer devices, possible.

Observing industry trends, it is not difficult to see that seizing the initiative has become a consensus for players in the field.

And the embryonic form of the highly anticipated super assistant is the intelligent voice assistant.

For example, Microsoft has directly replaced the Windows system's original voice assistant, Cortana, with Copilot driven by large models.

Not to mention the major smartphone manufacturers: voice assistants driven by large models and AIGC technology have become the new focus of their launch events, replacing imaging (camera features) as the latest smartphone "selling point".

Compared to the widely discussed and practiced new paradigm of human-machine interaction, the change in interaction between machines is less mentioned, but in fact, the "machine brain" of large models is also causing a revolution in the Internet of Things (IoT).

In the past, because IoT scenarios are so fragmented, each one had to be handled case by case, which greatly slowed the adoption of AI algorithms and limited their effectiveness.

In other words, the various terminal sensors lacked a "brain" that could truly coordinate the whole picture.

The emergence of large models fills this gap, linking other smart terminals together as "sensory" devices.

Another hot topic in the tech world in 2023, "embodied intelligence," is actually an example of the collision between large models and IoT devices.

In the era of large models, both human-machine interaction and machine-to-machine interconnection have made more tangible progress toward "everything connected".

So the question is: to move closer to a true super assistant and seize the initiative in the new wave of competition, what key points deserve attention?

Underlying technology accelerates the revolution of interaction methods

The large-scale application of any technology can be observed from two aspects: carrier and implementation path.

For the super assistant, the carrier is the smart terminal, which involves hardware computing power and hardware-software co-design. As for the implementation path, the most promising technology at present is AI represented by large models, and the era defined by this path is now also called the "Model Power Era".

First, let's look at the core carrier of the smart terminal.

Horizontally, from the perspective of terminal technology, two capabilities are key for a terminal that carries the super assistant: computing and connectivity.

Computing, meaning the on-device AI computing power delivered by chips, is crucial for supporting the super assistant.

Take Qualcomm, which currently dominates the field of smart terminals, as an example.

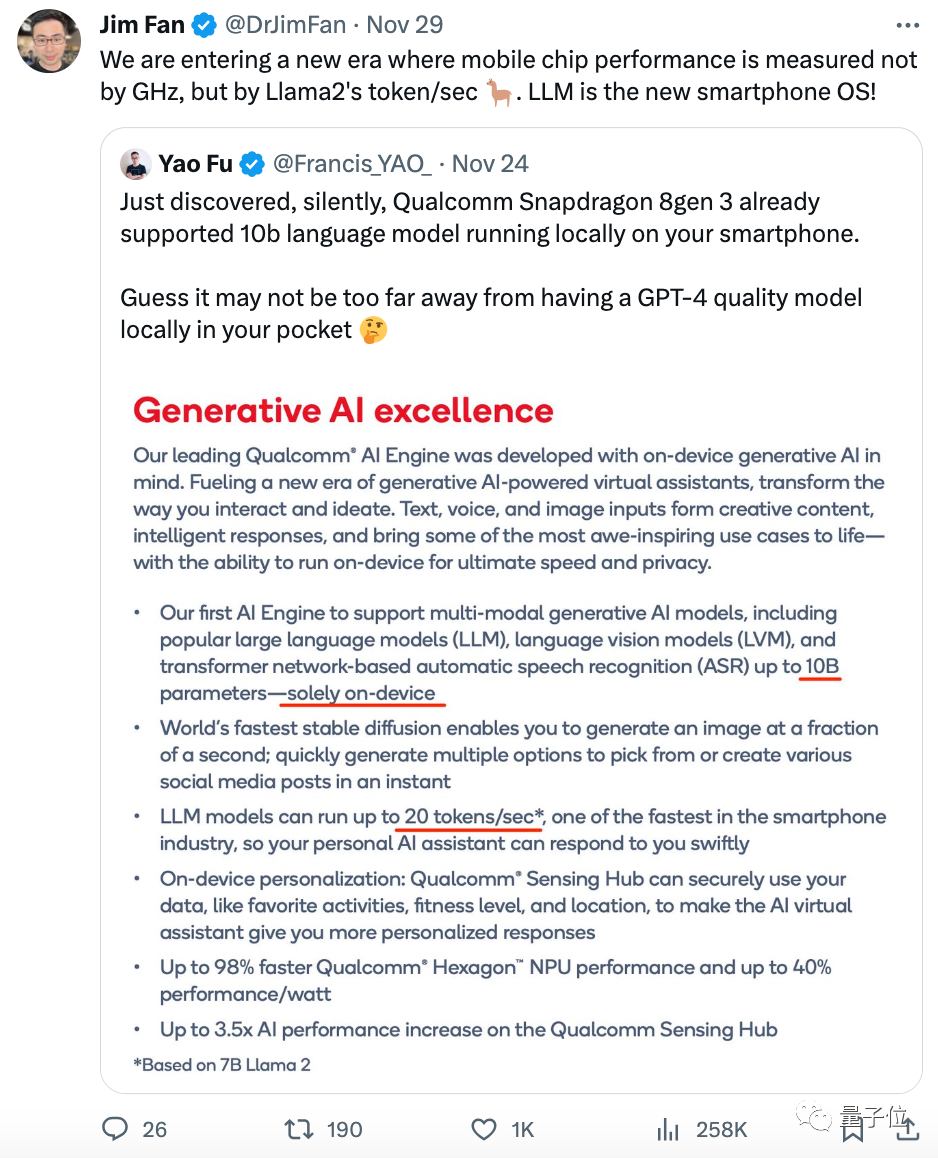

Recently, the ability of Qualcomm chips to run models with more than 10 billion parameters on-device has become a hot topic, and was even shared by Jim Fan, a senior AI scientist at NVIDIA:

We are entering a new era. In this era, the performance of mobile chips is not measured by GHz, but by the speed of generating tokens in Llama 2. Large language models are the new operating system for smartphones!

This AI computing capability can be specifically divided into two aspects: mobile phones and PCs.

On one hand, chips represented by the third-generation Snapdragon 8 mobile platform (Snapdragon 8 Gen 3) are further enhancing the generative AI capability of mobile phones.

For example, the Hexagon NPU at the core of the Qualcomm AI Engine has moved to a new microarchitecture to better support AI computing. It delivers a 98% performance improvement over the previous generation with a 40% gain in energy efficiency, and supports more Transformer networks.

Together with optimizations to other parts of the Qualcomm AI Engine, such as the Qualcomm Sensing Hub, the third-generation Snapdragon 8 mobile platform can already run large models with more than 10 billion parameters on the device, and can run a 7-billion-parameter large language model at 20 tokens per second.

On the other hand, chips represented by Snapdragon X Elite extend the AI computing capabilities that first emerged on mobile phones, bringing a jolt of AI computing to the PC.

The Qualcomm AI Engine in Snapdragon X Elite has a computing power of 75 TOPS.

The core Hexagon NPU alone has a computing power of 45 TOPS. Qualcomm has deliberately given the NPU its own power supply, allowing its frequency to adapt to the workload. In addition, a micro-tile inferencing architecture has been developed specifically to accelerate complex AI models such as Transformer networks.

As a result, a PC can directly run generative AI models with over 13 billion parameters to draft slide decks, write summaries, and generate copy, even without an internet connection. At the same time, AI processing is 4.5 times faster, making functions such as video conference background blurring, noise reduction, video editing, and photo filters run more smoothly.
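To make the parameter counts and token rates quoted above more concrete, here is a minimal back-of-the-envelope sketch in Python. It estimates only the storage needed for a model's weights and the time needed to generate a reply at a given token rate. The bit widths (16, 8, 4) are assumptions for illustration, since the article does not say what precision these chips use, and the estimate ignores activations, the KV cache, and runtime overhead. The function names are hypothetical.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate storage for the weights alone, in decimal gigabytes."""
    bytes_total = num_params * bits_per_weight / 8
    return bytes_total / 1e9


def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to emit num_tokens at a steady decoding rate."""
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    # 13 billion parameters is the model size the article quotes for PCs.
    for bits in (16, 8, 4):  # assumed precisions, not stated in the article
        size = weight_memory_gb(13e9, bits)
        print(f"13B parameters at {bits}-bit: about {size:.1f} GB of weights")

    # 20 tokens per second is the rate the article quotes for phones.
    print(f"200 tokens at 20 tokens/s: {generation_seconds(200, 20):.0f} s")
```

Under these assumptions, lower-precision weights are what bring a model of this size within reach of a laptop's or phone's memory, which is one reason on-device token rate has become a headline figure.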

Connectivity, the performance of data transmission between devices, directly affects the interaction capabilities of the super assistant.

For terminal devices, the need for connectivity has two aspects: the human-machine interaction field represented by mobile phones and PCs, and the machine-to-machine interaction field represented by the Internet of Things.

In the human-machine interaction field, hardware connectivity needs to provide more intelligent network performance analysis and higher transmission efficiency.

For example, the Snapdragon X75 5.5G modem and RF system integrates a dedicated hardware tensor accelerator for the first time: the second-generation Qualcomm 5G AI processor. Its AI performance is 2.5 times that of the first generation, allowing AI to improve wireless bandwidth and latency by analyzing signal integrity and signal-to-noise ratio, making network performance more efficient and data transmission more intelligent.

In the machine-to-machine interaction field, connectivity hardware faces additional constraints such as battery life, cost, and size.

For example, the Snapdragon X35 5G NR-Light modem and RF system sits between mobile broadband and narrowband IoT. It delivers 5G transmission performance in a lightweight form while offering longer battery life and lower cost, making it a better fit for smaller IoT devices.
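To illustrate why signal-to-noise ratio matters so much for throughput, the sketch below evaluates the Shannon capacity formula, C = B · log2(1 + SNR), which gives the theoretical upper bound on the data rate of a noisy channel. This is a textbook bound, not a description of how Qualcomm's 5G AI processor works; the 100 MHz bandwidth and the SNR values are assumptions chosen only for illustration.

```python
import math


def shannon_capacity_bps(bandwidth_hz: float, snr_db: float) -> float:
    """Theoretical maximum data rate (bits/s) of a channel with Gaussian noise."""
    snr_linear = 10 ** (snr_db / 10)  # convert decibels to a plain power ratio
    return bandwidth_hz * math.log2(1 + snr_linear)


if __name__ == "__main__":
    bandwidth = 100e6  # assumed 100 MHz channel
    for snr_db in (0, 10, 20):  # assumed SNR values
        mbps = shannon_capacity_bps(bandwidth, snr_db) / 1e6
        print(f"SNR {snr_db:>2} dB -> at most {mbps:.0f} Mbit/s")
```

Because the bound grows with SNR, anything that helps a modem estimate or improve signal quality can translate into higher achievable bandwidth.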

However, there is one more crucial point: the ability to balance and integrate computing and connectivity.

Whether it is a general-purpose large model running in the cloud or a personal large model on the terminal that serves as a super assistant, running them side by side requires the dual drive of 5G + AI, so that the models on every side run stably while data transmission stays efficient and the user experience stays smooth.

Qualcomm has been on this path for at least five years.

Focusing on smart terminal devices, Qualcomm has used the connectivity of 5G to bring more AI technology from the cloud to the terminal, much as a channel carries water, allowing "AI functions that were originally only achievable in data centers to now be achieved on terminals."

From the early development of AI functions in smartphones such as photography, image and video processing, to the low-latency AI functions required for data transmission in smart car cabins and XR gesture recognition, and now to the generative AI running in the cloud and on terminals…

Qualcomm continuously leads the innovation of terminal-side functions with the latest AI technology, and each step is inseparable from the connectivity support of 5G data transmission in the background.

Coordinating AI with 5G works in both directions: efficient connectivity improves the AI user experience, and AI in turn improves connectivity performance, ultimately changing the way users interact with terminals.

Vertically, in terms of carrier types, this revolution in interaction methods can seamlessly connect to different types and functions of terminal devices through tools like the Qualcomm AI software stack.

The Qualcomm AI software stack fully supports various mainstream AI frameworks, different operating systems, and programming languages to enhance the compatibility of various AI software on intelligent terminals.

With this toolkit, a model developed once on one platform, such as a mobile phone, can also run on cars, XR devices, PCs, and IoT devices, greatly accelerating the revolution in interaction methods.

In summary, in the era of interconnected terminals, AI + 5G are two indispensable and mutually cooperative foundational capabilities, and Qualcomm happens to be in a leading position in both areas, continuously leading the technological development on the terminal side.

However, no matter how fast the core technology of smart terminals develops, for the super assistant it only prepares the carrier for large-scale deployment.

Looking at the super assistant's most critical implementation path, AI technology, how far are we from the ultimate goal?

How far are we from the super assistant?

Just like J.A.R.V.I.S. in "Iron Man," the super assistant the public imagines in the "Model Power Era" is an "AI personal assistant" that coordinates everything.

Regarding the imagination of an AI personal assistant, Ziad Asghar, Senior Vice President of Product Management and Head of AI at Qualcomm, has described it as follows:

In all aspects, people may only need one application to complete all tasks, such as productivity apps, entertainment apps, etc., and then use an AI personal assistant to "coordinate everything," which will be a highly disruptive change.

Currently, AI technology is experiencing a boom in generative AI, represented by large models.

In a recent interview with The Independent, Cristiano Amon, President and CEO of Qualcomm, emphasized the importance of generative AI for terminals:

Generative AI will empower users to greatly improve the efficiency of finding files, and to create and modify videos in an intuitive and efficient manner… Bringing these processing capabilities to terminals will lead to a plethora of application scenarios.

Looking at the smartphone field, the development of AI is expected to usher in a new cycle of growth. Only powerful technological changes can drive the transformation of the smartphone market. We see generative AI as a once-in-a-lifetime opportunity, and the new wave of innovation based on smartphones is unstoppable.

Although large models are widely seen as one of the paths most likely to deliver the super assistant, they may still need to meet three conditions before becoming one.

First, a change in thinking: evolving a stronger ability to learn on their own.

Just as AlphaGo evolved from imitating humans to surpassing humans, the key to this part is to teach it to learn to self-improve and understand the purpose of actions.

In addition, the thinking mode of large models should also transition from "System 1" to "System 2," from instinctive prediction to slow, rational thinking.

Second, enhancement in functionality, evolving from simple text generation to multimodal, and even the ability to use tools.

Ziad believes that the key here is still appropriate training data, and that the size of the model itself may not matter so much. For example, OpenAI's GPT-3 has 175 billion parameters, yet LLaMA, with 65 billion parameters, can achieve the same or even better results.

As an example of multimodal, Stable Diffusion's text-to-image capability is already considered multimodal in a sense, but it only has a few billion parameters. As long as appropriate data is used, adding more modalities to large models is not a problem, and it does not necessarily have to develop in the "large" direction.

Third, customizability: building dedicated large models on the terminal and fine-tuning them into a personal super assistant.

In the case of current large models, Ziad stated that even if some personal information can be provided to cloud-based large models to provide "assistance" in planning, they will ultimately face issues of privacy, security, and even "forgetfulness."

Therefore, to achieve a super assistant, one major path is to create terminal-based large models that rely on personalized information for fine-tuning and customization, while not uploading personal information to the cloud to ensure user safety.

At the same time, the user's terminal usage records can allow large models to better understand the user's intentions through repeated "fine-tuning," becoming a more "intimate" super assistant.

Qualcomm is already preparing for this. The Qualcomm Sensing Hub in the third-generation Snapdragon 8 helps large models achieve customization on mobile phones, allowing AI personal assistants to make better use of personalized data such as the user's location and activities.

Overall, the future form of the super assistant led by large models may be a new intelligent operating system.

As OpenAI's Andrej Karpathy has described it, in such an operating system the large model's context window and embeddings correspond to memory and the hard drive; the code interpreter, multimodal capabilities, browser, and other AI algorithms are the system's apps; and the large model itself is like a CPU core, responsible for coordinating and scheduling everything.
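The operating-system analogy can be sketched as a small data structure. The Python below is a toy illustration only, not Karpathy's or any vendor's design: a bounded context plays the role of memory, a plain dictionary stands in for an embedding-based long-term store (the "hard drive"), and registered callables play the role of apps. The class name `ToyLLMOS`, its methods, and the calculator app are all hypothetical, and no real language model is involved.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, Dict


@dataclass
class ToyLLMOS:
    context_limit: int = 4  # "memory": how many items fit in the context window
    context: Deque[str] = field(default_factory=deque)
    disk: Dict[str, str] = field(default_factory=dict)  # stand-in for an embedding store
    apps: Dict[str, Callable[[str], str]] = field(default_factory=dict)  # tools

    def _push(self, item: str) -> None:
        """Add an item to the context, evicting the oldest when it is full."""
        self.context.append(item)
        while len(self.context) > self.context_limit:
            self.context.popleft()

    def remember(self, key: str, text: str) -> None:
        """Write to long-term storage without touching the context."""
        self.disk[key] = text

    def load(self, key: str) -> None:
        """Page a stored item from "disk" into the context window."""
        self._push(self.disk[key])

    def run(self, app: str, arg: str) -> str:
        """Dispatch to a tool and record the call in the context."""
        result = self.apps[app](arg)
        self._push(f"{app}({arg}) -> {result}")
        return result


if __name__ == "__main__":
    system = ToyLLMOS(context_limit=2)
    system.apps["calculator"] = lambda expr: str(sum(int(x) for x in expr.split("+")))
    system.remember("flight", "Flight departs 09:30")
    system.load("flight")
    system.run("calculator", "2+3")
    system.run("calculator", "10+5")
    print(list(system.context))  # the oldest entry has been evicted
```

The eviction in `_push` mirrors the limited size of a context window: anything that must survive has to be written to the long-term store and paged back in when needed.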

Therefore, not only large models, but also more AI technology support is needed to achieve the super assistant in various environmental perception and interaction scenarios.

For example, in the mobile photography scene, in addition to AIGC's generative capabilities, the basic image AI algorithms such as semantic segmentation and perception deployed in the cognitive ISP of the Snapdragon 8 Gen 3 chip can still be further enhanced, saving computing power while also enhancing the phone's environmental perception capabilities.

At the same time, combined with AI technology, it can also support applications such as voice-controlled photography, video editing, and extending a photo beyond its original frame.

In XR scenarios, the latest second-generation Snapdragon XR2 and first-generation Snapdragon AR1 platforms support default AI algorithms such as plane detection, depth estimation, 3D reconstruction, semantic understanding, object recognition, and tracking, further enhancing the interaction capabilities of intelligent terminals.

In the field of IoT, the AI performance of the first-generation Qualcomm S7 and S7 Pro audio platforms can be increased up to 100 times compared to the previous generation.

As a result, not only does the AI-powered active noise cancellation function of headphones significantly improve, but different noise reduction capabilities can also be obtained in different scenarios such as meetings, socializing, and gaming. The accompanying sensor devices can also be enhanced in functionality due to AI computing power, allowing for more stable and accurate measurement of health data such as pulse and ear temperature, and intelligent analysis.

As for the automotive sector, the fourth-generation Snapdragon Cockpit Platform (Snapdragon 8295) also uses AI technology to deliver a more intelligent cockpit experience.

For example, in the Jiyue 01, which is equipped with Snapdragon 8295, the intelligent cockpit supports offline perception training, allowing algorithms to iterate on the car itself. Users only need to speak, and the cockpit combines AI understanding algorithms and multimodal perception to accurately understand and respond to their needs.

Ziad even believes that within 5 years, AI will completely change the interaction between humans and cars. For example, you could tell the car that you want to go to the airport, eat something good, and buy a cup of coffee, and the car would accurately recognize these 3 needs and intelligently navigate to the right places.

Clearly, whether it's mobile phones, XR, IoT, or cars, the change in interaction methods of various intelligent terminal devices ultimately requires the development of AI technology in addition to the improvement of their own computing and connectivity capabilities.

This path is exactly the unified AI roadmap that Qualcomm has long pursued.

Qualcomm believes that from the cloud, to the terminal, and then to hybrid AI spanning both cloud and terminal, AI will eventually be ubiquitous, opening up a new AI era.

Based on this consistent path, Qualcomm can be the first to connect different terminals and computing architectures with AI in the "Model Power Era," taking a step closer to the ultimate goal of the super assistant.

No one can predict the final form of the super assistant.

But it can be foreseen that only by continuing to embrace and develop AI technology can we more quickly drive the transformation of interaction methods in the "Model Power Era" and lead the development of the intelligent terminal industry.
