Source: Alpha Society

Image Source: Generated by Wujie AI

One year after the release of ChatGPT, generative AI has become a definite technological trend. Elon Musk's company xAI plans to integrate its large model Grok, released in early November, into the X platform (formerly Twitter) and make it available to X Premium+ subscribers this week.

xAI was established in July of this year and quickly trained the base model Grok-0, which was then fine-tuned into Grok-1. Although this model has only about 33 billion parameters, xAI reports that its capabilities already surpass those of Llama 2 70B and GPT-3.5, especially in mathematics and coding. The team is also researching the reasoning ability and reliability of large models.

Musk has assembled a high-caliber core technical team, with members from DeepMind, OpenAI, Google Research, Microsoft Research, Tesla, and the University of Toronto, who have led multiple AI fundamental algorithm research and well-known AI projects. The team has a high proportion of Chinese members, including two researchers whose paper citations exceed 200,000.

Grok will differentiate itself by accessing real-time user-generated posts and information on X (formerly Twitter). It can access the latest data posted on X and provide the latest information when users inquire about real-time issues.


Building an "AI that Pursues Truth," Musk Assembles a High-Caliber Core Team

As the founder of xAI, Musk has long experience with AI and a deep understanding of it. On one hand, he began exploring Tesla's autonomous driving capabilities as early as 2013. Tesla's autonomous driving hardware is now in its fourth generation, and the FSD algorithm has reached version 12, with an update expected soon.

On the other hand, Musk is one of the co-founders of OpenAI. When OpenAI was still a non-profit research organization, he injected $50-100 million to support its early development. One of OpenAI's co-founders, Andrej Karpathy, served as the AI director at Tesla from June 2017 to July 2022, leading Tesla's autonomous driving project.

In 2018, Musk left OpenAI. According to OpenAI's blog post and Musk's later tweets, the reason was to prevent conflicts of interest as Tesla became more focused on artificial intelligence. According to Semafor, Musk proposed taking over the leadership of OpenAI and left after the proposal was rejected. According to the Financial Times, Musk's departure was also due to conflicts with other board members and employees at OpenAI over AI safety methods.

Several years after leaving OpenAI, and amid the AI boom sparked by ChatGPT, Musk announced the establishment of xAI in July of this year. The company's goal is to build an artificial intelligence that can "understand the true nature of the universe."

In an interview, Musk stated, "From the perspective of AI safety, an extremely curious artificial intelligence, an artificial intelligence that seeks to understand the universe, will support humanity."

High-Caliber Core Technical Team

Musk has assembled a high-caliber core technical team, with members from DeepMind, OpenAI, Google Research, Microsoft Research, Tesla, and the University of Toronto.

Team members have previously led numerous breakthroughs in AI research and technology, such as the Adam optimizer, adversarial examples, Transformer-XL, Memorizing Transformer, and autoformalization. They have also contributed to important engineering and product achievements such as AlphaStar, AlphaCode, Inception, Minerva, GPT-3.5, and GPT-4.

In addition to coming from major companies and research institutions, a notable feature of this team is that most members have a solid background in mathematics and physics.

For example, xAI co-founder Greg Yang obtained a bachelor's degree in mathematics and a master's degree in computer science from Harvard, under the guidance of Shing-Tung Yau. Yau introduced Yang to various doctoral students and mathematicians from different fields, and also recommended that he apply for the highest honor a mathematics undergraduate student can receive: the Morgan Prize.

Yang revealed that xAI will delve into one aspect of artificial intelligence, "the mathematics of deep learning," and "develop a 'theory of everything' for the development of large neural networks," in order to "elevate artificial intelligence to the next level."

In addition to co-founder Greg Yang, the core team also includes Guodong Zhang, Zihang Dai, Yuhuai Tony Wu, and later additions such as Jimmy Ba, Xiao Sun, and Ting Chen, all of whom have made contributions in fundamental technology.

Zihang Dai was the co-first author of the XLNet paper, a pre-trained language model released by CMU and Google Brain in 2019, which surpassed the then-state-of-the-art model BERT in 20 tasks.

Dai entered the Information Management and Information Systems program at the School of Economics and Management of Tsinghua University in 2009, and then spent six years at CMU pursuing a Ph.D. in computer science under the guidance of Yiming Yang. During his doctoral studies, he worked closely with the Mila lab, founded by Turing Award winner Yoshua Bengio, and with the Google Brain team. After completing his Ph.D., he officially joined Google Brain as a research scientist, focusing on natural language processing and model pre-training.

Guodong Zhang did his undergraduate studies at Zhejiang University in the Advanced Class of Engineering Education at Chu Kochen Honors College, where he ranked first in his major for three consecutive years. He then went to the University of Toronto for a Ph.D. in machine learning.

During his Ph.D., he interned at the Google Brain team under the guidance of Geoffrey Hinton, conducting research on large-scale optimization and fast-weights linear attention. He has also published papers at top conferences on multi-agent optimization and its applications, deep learning, and Bayesian deep learning.

After completing his Ph.D., Zhang joined DeepMind full-time and became a core member of the Gemini project (a direct competitor to GPT-4), where he was responsible for training and fine-tuning large language models.

Yuhuai (Tony) Wu spent his high school and college years in North America. He studied mathematics as an undergraduate at the University of New Brunswick and earned a Ph.D. in machine learning from the University of Toronto, under the guidance of Roger Grosse and Jimmy Ba (also a core team member of xAI).

During his studies, Wu worked as a researcher at Mila, OpenAI, DeepMind, and Google. In one of his research projects, he and other researchers trained an enhanced large language model called Minerva, which demonstrated strong mathematical abilities. In the 2022 Polish national mathematics exam, the model answered 65% of the questions correctly. This aligns well with xAI's goal of in-depth research into "the mathematics of deep learning."

Jimmy Ba previously served as an assistant professor (AP) at the University of Toronto. He completed his bachelor's, master's, and Ph.D. degrees at the University of Toronto, with Geoffrey Hinton as his doctoral advisor.

He also holds an AI chair at the Canadian Institute for Advanced Research (CIFAR), and his long-term goal is to build a general problem-solving machine with human-like efficiency and adaptability. Jimmy Ba has over 200,000 citations on Google Scholar, including over 160,000 for the Adam optimizer paper and over 11,000 for a 2015 paper on attention. He is considered one of the theoretical pioneers of large model technology.

Xiao Sun obtained his bachelor's degree from Peking University and his Ph.D. in EE from Yale University. He later worked as a research scientist at IBM Watson and Meta. His technical background is not in AI models but in AI-related hardware and semiconductors, particularly in the synergy between AI hardware and software. He has received the MIT TR35 (35 Innovators Under 35) award.

Ting Chen obtained his bachelor's degree from Beijing University of Posts and Telecommunications and his Ph.D. from Northeastern University and UCLA in the United States. He later worked as a research scientist at Google Brain, with a total citation count of 22,363 on Google Scholar. His most cited paper proposed SimCLR, a simple framework for contrastive learning of visual representations, which he co-authored with Geoffrey Hinton, with 14,579 citations.

In addition to Jimmy Ba, another senior researcher on the founding team with over 200,000 Google Scholar citations is Christian Szegedy. Szegedy, who worked at Google for 13 years, was Wu's team leader there. He has two papers with over 50,000 citations and several others with over 10,000 citations, all focusing on fundamental AI algorithm research. Szegedy holds a Ph.D. in applied mathematics from the University of Bonn.

Igor Babuschkin and Toby Pohlen both participated in DeepMind's renowned AI project AlphaStar. AlphaStar learned from 500,000 games of "StarCraft II" and then played 120 million games to improve its skills. Ultimately, it reached the highest level, Grandmaster, ranking above 99.8% of players.

Grok-1 Model's Capabilities Second Only to GPT-4, Optimized for Reasoning and Mathematical Abilities

In early November, xAI released its first foundational large language model, Grok-1 (approximately 33 billion parameters), which was produced by fine-tuning the prototype large model Grok-0 with RLHF. Its training data runs up to the third quarter of 2023, and it has a context length of 8k.

It is claimed that Grok-0 achieved nearly the same capabilities as Llama 2 70B using only half of the training resources, and then underwent targeted optimization for reasoning and coding abilities.

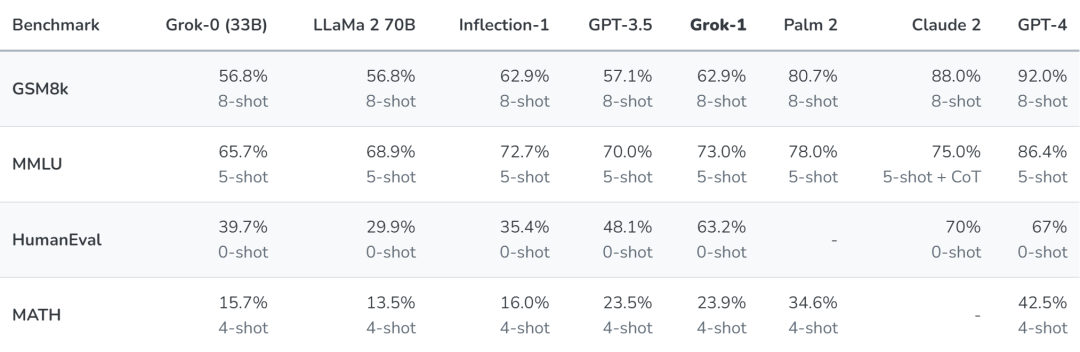

xAI has officially announced the evaluation of Grok-1's capabilities. The evaluation includes:

GSM8k: Middle school math word problems using chain of thought prompts.

MMLU: Multi-disciplinary multiple-choice questions testing comprehensive understanding.

HumanEval: Python code completion tasks testing coding abilities.

MATH: Middle and high school math problems written in LaTeX, testing higher-level mathematical abilities.
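Benchmarks of this kind are typically reported as an accuracy: the share of items the model answers correctly. As a rough illustration only, the following minimal sketch scores an MMLU-style multiple-choice set. The `Item` class, the `accuracy` function, and the toy questions are all hypothetical and are not taken from xAI's evaluation code, whose details the source does not describe.

```python
from dataclasses import dataclass


@dataclass
class Item:
    """One multiple-choice question with its gold answer letter (hypothetical schema)."""
    question: str
    choices: dict[str, str]  # e.g. {"A": "...", "B": "..."}
    answer: str              # gold letter, e.g. "B"


def accuracy(items: list[Item], predictions: list[str]) -> float:
    """Return the fraction of items whose predicted letter matches the gold letter."""
    if len(items) != len(predictions):
        raise ValueError("items and predictions must have the same length")
    if not items:
        return 0.0
    correct = sum(
        pred.strip().upper() == item.answer.upper()
        for item, pred in zip(items, predictions)
    )
    return correct / len(items)


# Toy data invented for illustration; not real benchmark questions.
items = [
    Item("2 + 2 = ?", {"A": "3", "B": "4"}, "B"),
    Item("Capital of France?", {"A": "Paris", "B": "Rome"}, "A"),
    Item("H2O is?", {"A": "Salt", "B": "Water"}, "B"),
    Item("Largest planet?", {"A": "Jupiter", "B": "Mars"}, "A"),
]
predictions = ["B", "a", "A", "A"]  # hypothetical model outputs
score = accuracy(items, predictions)
print(f"accuracy = {score:.2%}")
```

Real evaluations add steps this sketch leaves out, such as prompt formatting (for example, chain-of-thought prompts for GSM8k) and extracting the final answer from free-form model output.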

The chart shows that Grok-1 leads Llama 2 70B and GPT-3.5 in almost all tests, with a significant lead over Llama 2 70B on HumanEval and MATH. However, there is still a visible gap between Grok-1 and both Claude 2 and GPT-4.

Considering that Grok-1 has only 33B parameters and reportedly used only half of the training resources of Llama 2 70B, it demonstrates outstanding efficiency. A future version with more parameters would likely have room to improve further.

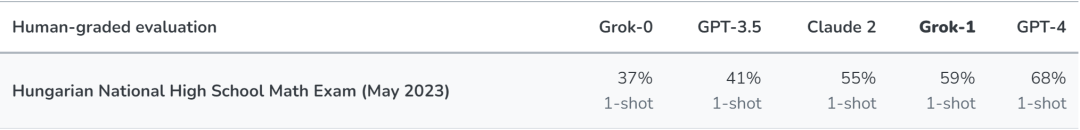

Because the benchmarks above are widely used and models may have been deliberately optimized for them, xAI also tested Grok on the 2023 Hungarian national high school mathematics final exam. This provides a more realistic scenario, and to ensure fairness, xAI made no special adjustments for this evaluation.

The results show that Grok passed the exam with a C grade (59%), Claude 2 earned the same grade with a slightly lower score (55%), and GPT-4 passed with a B grade (68%).

In addition to its large model, xAI also announced PromptIDE, an integrated development environment designed specifically for prompt engineering and interpretability research. The purpose of PromptIDE is to give engineers and researchers transparent access to Grok-1 and to help them quickly explore the capabilities of LLMs.

When the large model was first released in early November, Grok-1 was only available to a limited number of users. This week, xAI plans to open up Grok's capabilities to X Premium+ subscribers. xAI also provides Grok with access to search tools and real-time information, giving it a differentiated advantage over other models.
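The source does not describe how Grok incorporates real-time posts, so the following is purely an illustrative sketch of one generic way a question could be combined with recent posts before being sent to a model. Every name here (`Post`, `tokens`, `build_prompt`, the 24-hour window, the keyword matching) is a hypothetical assumption and does not reflect xAI's or X's actual implementation or API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Post:
    """A hypothetical user post with its timestamp."""
    text: str
    posted_at: datetime


def tokens(text: str) -> set[str]:
    """Lowercase words longer than three characters, with edge punctuation stripped."""
    words = (w.strip(".,!?:;\"'").lower() for w in text.split())
    return {w for w in words if len(w) > 3}


def build_prompt(question: str, posts: list[Post], now: datetime,
                 max_age: timedelta = timedelta(hours=24), top_k: int = 2) -> str:
    """Prepend the newest keyword-matching posts to the user's question."""
    keywords = tokens(question)
    fresh = [
        p for p in posts
        if now - p.posted_at <= max_age and keywords & tokens(p.text)
    ]
    fresh.sort(key=lambda p: p.posted_at, reverse=True)  # newest first
    context = "\n".join(f"- {p.text}" for p in fresh[:top_k])
    return f"Recent posts:\n{context}\n\nQuestion: {question}"


# Toy data invented for illustration.
now = datetime(2023, 12, 1, 12, 0)
posts = [
    Post("Rocket launch delayed by weather.", now - timedelta(hours=1)),
    Post("Launch window reopens at noon.", now - timedelta(minutes=10)),
    Post("Rocket launch scrubbed last week.", now - timedelta(days=8)),
    Post("Great coffee this morning.", now - timedelta(minutes=5)),
]
prompt = build_prompt("What happened at the rocket launch today?", posts, now)
print(prompt)
```

In this toy setup, stale or unrelated posts are filtered out before the prompt is assembled, which is the general idea behind giving a model fresher information than its training data contains.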

It also offers a dedicated "fun" mode, multitasking, shareable chats, and conversational feedback. The fun mode is likely to be the most interesting of these features, as it gives Grok a distinctive personality and lets it hold more engaging conversations laced with irony and humor.

Will the Competitive Landscape of Large Models Change? Where Will the Capabilities Develop?

On the one-year anniversary of the release of ChatGPT, it appears that OpenAI's model capabilities and ecosystem development are still significantly ahead of those of various large model manufacturers. Companies like Anthropic, Inflection, and xAI are still playing catch-up. Even major companies like Google and Amazon are still lagging behind.

The competition between large model manufacturers is comprehensive, and given the high cost of AI model pre-training, continuous investment in massive computing power and financial resources is required for future model version iterations. Additionally, finding scenarios that can fully unleash the value of model capabilities is crucial, as it forms a feedback loop.

Currently, xAI has no shortage of talent, computing power, or financial resources. Furthermore, with X (formerly Twitter) behind it, finding application scenarios in the early stages should not be difficult. Although Grok-1's absolute capabilities still lag behind GPT-4, a future version with more parameters could significantly narrow the gap with OpenAI.

The competition of large models is a competition between major companies and super unicorns. However, it is precisely because of the competition and iteration of these companies that companies developing applications and end users will have increasingly powerful and cost-effective AI capabilities. Ultimately, AI will revolutionize all industries.

After one year of large models entering the public eye, we have gained a clearer understanding of their limitations, particularly in terms of reasoning abilities and reliability. In terms of development direction, multimodality is definitely the way forward.

xAI is conducting targeted research on these issues. To address weak reasoning abilities, the company is researching scalable oversight with tool assistance, allowing AI and humans to collaborate in fine-tuning AI models.

To address the lack of reliability in AI, they are researching formal verification, adversarial robustness, and other technologies to enhance the reliability of AI. Additionally, although Grok currently lags behind models like GPT-4 in multimodal capabilities due to its parameter size, xAI is actively researching in this direction and will develop models with visual and audio capabilities in the future.
