Solving AI value alignment requires taking a dual perspective, including technical solutions such as sample-based learning, as well as non-technical frameworks such as governance and regulatory measures.

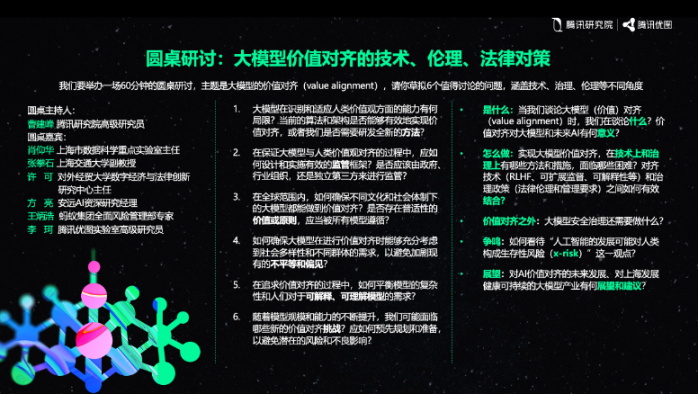

Speakers: Xiaoyanghua, Zhang Quanshi, Xu Ke, Fang Liang, Wang Binghao, Li Ke

Compiled by: Cao Jianfeng, Yao Suhui

Source: Tencent Research Institute

Core Summary:

1) The complexity of value alignment: The concept of value alignment in artificial intelligence is inherently vague and multifaceted, involving ethical standards, cultural norms, and subjective human values.

2) Technical and non-technical aspects: Solving AI value alignment requires taking a dual perspective, including technical solutions such as sample-based learning, as well as non-technical frameworks such as governance and regulatory measures.

3) Security and governance: Sound security and governance mechanisms are needed in artificial intelligence to manage privacy, intellectual property rights, and potential misuse of generated models.

4) Interdisciplinary approach: The issue of AI value alignment transcends disciplinary boundaries and requires collaborative approaches from technological, governance, legal, ethical, and social perspectives.

5) AI as a social entity: Value alignment should be viewed through the lens of social acceptance, transforming artificial intelligence from a simple tool into an entity capable of natural language dialogue and learning social norms.

6) Technical reliability: In addition to performance, the reliability and interpretability of AI models are crucial for trust and alignment with human values.

On October 26, 2023, the first "Intelligence and Innovation Shanghai Alliance" event hosted by Tencent Research Institute's East China Base and Tencent YouTu Laboratory was officially held at the Nobu Center in Shanghai. Professors Xiaoyanghua from the Computer Science Department of Fudan University, Zhang Quanshi, Associate Professor at Shanghai Jiao Tong University, Xu Ke, Associate Professor at the School of Law of the University of International Business and Economics, Fang Liang, Senior Research Manager at Anyuan AI, Wang Binghao, an expert from the Comprehensive Risk Management Department of Ant Group, and Li Ke, a senior researcher at Tencent YouTu Laboratory, deeply exchanged views on the methodology, regulatory implementation, governance, and challenges of AI alignment. From different perspectives, they analyzed and affirmed the importance of "AI value alignment" for the development of the artificial intelligence industry. As the moderator of this roundtable forum, Cao Jianfeng, a senior researcher at Tencent Research Institute, stated that the topic of "value alignment" involves multiple disciplines and requires discussions from various levels such as technology, governance, law, ethics, and society in order to shift from consensus principles to engineering practices, ensuring the creation of a safe and trustworthy future for AI. The following is a summary of the roundtable discussion content.

Xiaoyanghua: The Connotation and Controversy of Value Alignment

First, the issue at hand. We must acknowledge that the concept of value alignment itself has a certain degree of vagueness and uncertainty. Value alignment consists of two words, the first being "value" and the second being "alignment," and their combination gives rise to new connotations. The definition of what is valuable to humans is already relatively vague, and we actually use values at many different levels or from different perspectives. Alignment is an even more complex issue - alignment with whom? Is it alignment with individuals or with a certain group? Alignment with which culture? Alignment with Eastern civilization, culture, values, ethical views, moral views, or alignment with Western mainstream values? Therefore, the question of alignment is very complex in itself. Furthermore, the evaluation of alignment or lack thereof is a very difficult issue. Therefore, we must acknowledge the difficulty, complexity, and vagueness of value alignment.

Second, the question of how to do it. The transcendence of humans compared to machines lies in the fact that humans can think beyond the given level of a problem. If we are to answer this question, we must first define why value alignment is so difficult, understand the difficulties and pain points of value alignment, and only then can we truly answer the question of how to do it. There are two levels: the technical level and the non-technical level. The non-technical level includes governance and regulatory aspects. The technical level is difficult due to the scarcity of samples, making it very difficult to train for values; there is also a lot of ambiguity, and the boundaries are unclear, as mentioned earlier; and it is subjective, with different groups having conflicting and difficult-to-reconcile values. Recognizing these difficulties, many solutions naturally emerge.

Third, after value alignment, what security and governance measures can be taken? Value alignment is a prerequisite for the safe application of large models. The security governance of large models involves many aspects, such as privacy, copyright, and illusion issues. In fact, the security governance of large models is by no means simply a technical issue, but rather has a significant social governance component. Many times, the broad impact of the development of large models on society is our primary concern, and technology serves the purpose of social governance.

Fourth, the controversy. There are many sensational claims now, such as artificial intelligence is going to destroy humanity. First, we must take this view seriously. The impact of artificial intelligence on the entire society is indeed very significant, but the destructive way is not as depicted in some works of art, where humanity is eradicated from the flesh. This is imagination, science fiction, but the real destructive impact may be a gradual and imperceptible one, bringing long-term effects or social upheaval. This impact is initially very slow and subtle, but with the large-scale application of AGI, permeating every capillary of human society, its impact on human society may be very significant, even endangering the regression and extinction of the human population. If we do not conduct a good assessment and actively prepare, from a time perspective, ten or twenty years from now, we may have to face the overwhelming negative impact caused by the misuse of AGI.

With the rapid development of AI large models, if we do not impose certain restrictions on their use in scenarios, it may lead to the abuse of large models, resulting in the regression of human intelligence. For example, if AGI is abused by students in the basic education stage, it may deprive children of the opportunity to train their thinking, leading to the decline of intelligence. Every advanced technological revolution represents an advanced productive force. Any advanced productive force requires corresponding adjustments in the production relations and social structures of human society. The process of adapting production relations to productive forces often accompanies the birth pangs of social transformation. Previous technological revolutions (such as steam and electricity) essentially replaced our physical labor, and the process was slow, with its impact limited to our bodies (such as freeing our limbs from heavy physical labor), leaving us with ample buffer time. Human society had enough time to accept, adapt, and adjust social structures. However, the development speed of general artificial intelligence technology is too fast, and the object it replaces is human intellectual activity, affecting the regression of human intelligence itself, involving the stability of social structures and employment structures. Therefore, how to control its development speed within the range that human society can adapt to is the issue we need to closely monitor and respond to at present, and answering this question is an effective way to discuss the human survival risks brought about by AI.

Zhang Quanshi: Trustworthy Evaluation and Value Alignment of Large Models Based on Interpretability

From a technical perspective, the fundamental issue behind the value alignment of large models is whether they can be explained. Most discussions involving the value alignment of large models often focus on whether their answers align with human values, but this is not the fundamental issue. The important question is whether we can trust them in some major tasks.

For neural networks, we don't explain from the surface, but verify from the mechanism how many signals are trustworthy and how many are not? Can it be completely analyzed? The output signals of neural networks can be strictly explained as some symbolized concepts, such as in a general model, it can be explained as interactions between fewer than 100 different data units, while for large models, this number may be around 300. Considering any form of occlusion, if the graph model of these nodes remains consistent with the output of the neural network in various states, we can ensure the correctness of the explanation. Even for large models with different structures, their ways of explanation may converge.

So I have been thinking, can we really ground the explanation of large models, and what are the standards for grounding? Recently, this issue has been focused on some local loss functions or structures, but I believe this is not a very good explanation. We should bypass the complex structural parameters of large models and directly look at the equivalent modeling of concepts from input to output. Even if the connection patterns of neurons in each brain may be different, our cognition converges. In May of this year, we obtained theoretical verification, and in this regard, we can precisely explain the generalization and robustness of large models. We bypassed traditional methods and quantified the number of modeled concepts, redefining robustness and generalization in terms of concepts.

Currently, the most important issue in the training of large models is that they are black boxes during training. Some data may have disrupted the representation of the large model after a period of training. Can we judge which concepts are overfitting beyond the representation layer of end-to-end? Evaluating the quality of a black box a year or two later is not an effective method, so we can terminate overfitting samples on the representation of large models in advance to improve efficiency. In terms of performance, Chinese and American large models may be similar, and performance may have a ceiling, but the reliability of large models is more important. This is a good entry point. We hope to optimize algorithms, improve efficiency, and shorten training time through communication and collaboration with various parties.

Xu Ke: Value Alignment is the Socialization of AI

First, we need to ask: Why does AI need alignment? AI alignment does not stem from risk, but from the fact that AI is not explainable. In fact, if it can be explained, the internal logic is very simple, that is, it requires the people who design and operate the technology to bear the corresponding alignment obligations, not the machine's alignment, but everyone's alignment. Just as a person kills with a gun, it is the person who kills, not the gun. Therefore, there is no need for the alignment of the values of the gun and the person. The gun is set to serve people, so returning to the alignment of people is sufficient. It is precisely because AI is a black box that we cannot clearly define the responsibilities of all parties. When specific responsibilities of specific entities cannot be clearly defined, the AI system is required to assume abstract moral and ethical responsibilities, which is "alignment."

The question arises, who exactly is it aligned with? Can people's values be aligned? In fact, most social conflicts are caused by the misalignment of people with people. If we define alignment as the alignment of values, I think it is difficult to achieve. In fact, the governance of generative artificial intelligence in the EU, the United States, and China is based on their respective values. The values of the three parties are so different that it is almost impossible to achieve alignment in a certain sense.

However, if we look at it from the perspective of specific long-term values, it is possible to establish a global underlying consensus. But the greatest common denominator of values is often the most hollow, and it cannot guarantee that everyone's understanding of each value is the same.

In that case, what are we really discussing when we talk about alignment? Perhaps we can bypass the endless philosophical and moral debates and turn to the dimension of sociology. In this regard, the real meaning of discussing alignment is to make AI a socially accepted person. The breakthrough of generative AI is that it has transformed from a tool to being able to have natural language conversations with humans. From a social science perspective, the acquisition of natural language represents the first step of socialization. So, in reality, "alignment" is not legal or ethical, but a process of socialization. We can imagine AI as a child, it needs to learn social value norms and then become a member of society. Although socialized people may hold different values, they still need to adhere to the most basic principles and requirements of society and become responsible individuals.

There are two paths to socialization. The first is "raising without teaching," where rules are established by an authority, and the symbolic school of artificial intelligence is like this. The second type of socialization is called "self in the mirror," where one corrects their behavior through the evaluation of others. This process of observation, imitation, and feedback is the process of socialization, and it is the most effective form of alignment. This is also the successful path of connectionism's values and current generative AI.

The question arises again, what kind of method can achieve this self in the mirror? Socialization requires artificial intelligence to observe, imitate, and receive feedback, forming a closed loop. It can only truly align itself through the observation of others. Therefore, reinforcement learning based on human feedback (RLHF) is a very important method. In addition, can machines observe and learn from each other and receive feedback? If true alignment is to be achieved, the core is not a specific set of values, but the ability to form a technical closed loop and instill it in a certain way to achieve a state that is dialogical, adjustable, and controllable. There is no need to go further, because that step will never be reached.

Fang Liang: Need to Connect AI Security Technology and Governance, Model Evaluation Can Serve as Key Infrastructure

When we talk about "value alignment" (human-machine alignment), we may be discussing three non-mutually exclusive concepts:

The first concept refers to the "entire AGI safety field," which aims to control advanced AI through systematic methods. For example, the work of OpenAI's "Super Alignment" team and DeepMind's AGI safety team revolves around this goal. In 2021, Professors Gao Wen and Huang Tiejun also co-authored a paper on technical strategies for addressing the safety risks of strong artificial intelligence.

The second concept refers to "a subfield of AI safety," which is about guiding AI systems to improve towards human expected goals, preferences, or ethical principles. A well-known example is the Center for the Study of Artificial Intelligence Safety (CAIS), which is also the organization that issued the AI survival risk statement, dividing AI safety issues into four levels: 1) Systemic risk, reducing systemic harm to the entire deployment; 2) Monitoring, identifying and detecting malicious applications, monitoring the predictive and unexpected capabilities of models; 3) Robustness, emphasizing the practical impact of countering attacks or low-probability black swans. 4) Alignment issues, more about the internal harm of the model, enabling the model to represent and safely optimize difficult-to-set goals to align with human values.

The third concept refers to "ensuring the alignment technology of LLM to return safe content." For example, RLHF and Constitutional AI.

The first two concepts aim to reduce the extreme risks posed by AI, which is also related to the origin of this field. However, in China, the focus is more on the third concept. In fact, there are very subtle differences in terms such as AI alignment, value alignment, and intent alignment.

The key point worth considering here is the balance between safety and capabilities: Do we see alignment as a technology to enhance capabilities or to enhance safety? When providing new capabilities to enhance alignment, will it bring new risks?

Specifically, how to do it? From a governance perspective, I think the following three aspects are good points of integration:

First, propose better and more specific risk models to address them effectively. Advanced AI will have the ability to manipulate, deceive, and perceive situations, but good risk models should help everyone understand and address these issues. Currently, the core concern of AI security researchers is the pursuit of misaligned power and instrumental strategies, such as self-protection, self-replication, and resource acquisition (such as money and computing power), which can help AI achieve other goals but may lead to conflicts with humans.

Correspondingly, two types of technical reasons can be summarized: one is normative gaming, also known as Outer Alignment. AI systems exploit vulnerabilities in human-specified objective functions to obtain high rewards, without actually achieving human-expected goals. The other is goal misgeneralization, also known as Inner Alignment, which involves specifying a "correct" reward function, but the rewards used during training do not allow us to reliably control model behavior when generalizing to new situations.

Second, model evaluation can better connect security and governance issues. DeepMind has conducted model evaluations for extreme risks, focusing on the extent to which models are capable of causing extreme harm. Model alignment evaluations focus on the extent to which models tend to cause extreme harm. Embedding evaluations into governance processes, such as incorporating internal evaluation stages for research and development staff, external access stages, or using external independent third-party model audits, can help companies or regulatory agencies better identify risks.

Last month, the evaluation team of the Alignment Research Center proposed a Responsible Scaling Policy (RSP), which involves developing security measures based on the potential harm caused by potential risks. The benefits of this approach are threefold: it is a practical stance based on evaluation rather than speculation; it shifts from a principle-oriented approach to specific commitments required for continued safe development, such as information security, rejecting harmful requests, and alignment research; and it can help establish better standards and regulations based on evaluations, including standards, third-party audits, and voluntary RSPs to provide a testing platform for processes and technologies, which may aid future evaluation-based regulation.

Third, addressing the challenge of risk rating, from predicting risks to monitoring risks. Whether in the EU or in domestic generative artificial intelligence, a risk-based regulatory framework is mentioned. However, a challenge that cannot be avoided in the governance of AI is that, to some extent, evaluation can change the approach, shifting from risk rating to dynamic monitoring. This may help iterate governance paths, focusing on dynamic risk monitoring mechanisms and emphasizing the application of technical means to govern technological risks.

In summary, I suggest that we take security issues seriously:

First, prediction and anticipation: Take the possibility seriously and develop better technology-based risk models and probability judgments. "An ounce of prevention is worth a pound of cure."

Second, from a technological perspective: Allocate more research and development funds for AI security and value alignment research, and recommend investment in AI capabilities development.

Third, from a governance perspective: Connect AI security technology and governance through model evaluation work. Effective government regulation is needed for the risks of cutting-edge AI.

Wang Binghao: The Key Challenge of Value Alignment Practice is to Establish Consensus

Thank you very much for the opportunity to participate in the learning and exchange process with everyone. My focus is on the risks of large models and AI ethics, and because of the practical aspects, I have a deep understanding of the difficulties in evaluating and controlling large models.

First, I believe that the core of value alignment is to define what value is. We have listed many technical means to solve alignment issues, from technical collection to training and reasoning, but I have always had questions about how the most original values or knowledge system itself came about and how to inject them. This problem may be something that technology can solve. No one is perfect, and when it comes to values, such as public order and compliance with laws and regulations, and moral ethics, everyone has different interpretations. For large models, this must be ambiguous, so how do we refine it? I agree more with the idea of consensus and building trust, so we are also trying to reach consensus with all stakeholders, regulators, experts, users, and our internal staff.

Second, once we have a knowledge system, how do we verify whether it is aligned in the application process? How do we evaluate it? There are still many difficulties in evaluation. For example, we simplify tasks, such as simplifying an open-ended question and answer into a choice or judgment, allowing the model to make automatic judgments. However, this ultimately changes our understanding or interpretation of values, and we cannot ensure whether the model has learned the values we advocate.

Therefore, in my opinion, value alignment is a very complex system engineering in practice, and the two key words highlighted in the entire process are establishing consensus and building trust.

Li Ke: Considering Model Value Alignment from the Perspective of Human Education

In the direction of implementing large models, I believe that the problem of value alignment of large models is similar to human education. Therefore, I will explore this issue from the perspective of human education.

What is value alignment doing? I agree with Professor Xu's statement that it is making the model a "person." In human education, we hope that every educated person will comply with basic laws and regulations, meet moral standards, and even go further to be a good person. The value alignment of the model is the same, but it is transformed into requirements for the model. Education itself does not have standardized guidelines, and everyone has their own ideal person in mind. Therefore, it is also difficult for us to find a golden model that everyone agrees with and satisfies everyone.

The methods of value alignment can be inspired by pedagogy. Based on the duality of human education and model value alignment, we can draw on educational methods to consider how to design better model value alignment methods. As one of the main means of model value alignment, the core of reinforcement learning based on human feedback (RLHF) is how to efficiently solve the problem of the source of supervisory signals, making the process more efficient by designing reward models or agent models, similar to how humans use tools or seek assistance in the learning process. For example, in addition to teachers unilaterally teaching students, students also learn from each other. Therefore, in the model training process, we also use methods such as mutual learning to assist in training, drawing inspiration from pedagogy.

Just as the requirements for humans are hierarchical, the requirements for model values should also be hierarchical. First, let the large model obey the law and not engage in harmful or criminal activities. Next, we hope that in addition to complying with the law and regulations, it can also meet moral requirements. Finally, we hope the model can be a "good person." This hierarchical difference is a train of thought that can be considered when formulating laws and regulations for generative large models.

Large models promote educational equity. Whenever new technologies emerge, there are always concerns that they will pose a threat to human beings. However, looking back at human history, no technology has truly posed a threat to humanity or destroyed humanity. Ultimately, humans have found ways to control them within a manageable range to unleash their value. I believe that there is no need to be overly concerned about the emergence of large models at present. On the contrary, I believe that the emergence of large models will break the monopoly of educational resources and enhance the inclusiveness of education. In the past, knowledge was only held by a few people, and the popularization of education gave every child the right to the same education. The emergence of large models further gives everyone the opportunity to access the most cutting-edge expert knowledge or advice in a certain field, which in the long run will be far more beneficial to human civilization than the potential risks that may exist during the process.

Looking Forward Together

From a technical perspective, investments in security and alignment should be at least equivalent to investments in the development of artificial intelligence capabilities. Because the understanding of large models does not directly correspond to human understanding, our research can experimentally convert their internal decisions into two sets of analyzable discrete signals to assess errors or identification issues, and explore feasible technical paths.

From a governance perspective, it is necessary to bridge technology and governance. Evaluation is one aspect, but the risks of AI also require government coordination and international regulation. Large models have very high requirements in terms of data, computing power, and algorithms. It is hoped that in the future, with government leadership, the roles of universities, enterprises, and government can be coordinated to propel large models to make a qualitative leap. We believe that more interdisciplinary or cross-domain cooperation and integration will emerge in the future, shaping consensus and building a trustworthy value system together.

From a developmental perspective, the current state of large models is characterized by battles involving hundreds or even thousands of models, but much of the competition is at a low level. How can we transition to high-level competition? Shanghai can start from the following two aspects: first, addressing the issue of establishing high-quality public databases, including public data and encouraging the sharing of private and corporate data. Second, addressing the issue of computing power sharing. From a developmental perspective, can there be systematic support for training large amounts of computing power?

From a health perspective, in pursuit of something that aligns with human values, it is important to set clear, explicit, and actionable baseline standards to ensure that models do no harm, ultimately promoting the healthy and sustainable development of large models.

From a predictive perspective, we need to take the possibility of strong artificial intelligence emerging in the next 5-10 years seriously, even if it brings significant risks. It is important to propose more specific risk models and probability predictions, as the benefits of being prepared are always greater than the harm of being caught off guard. The underlying premise of today's discussion on value alignment issues seems to imply that we want large models or artificial intelligence to replace us in some value judgments and decisions. However, this inclination itself carries risks, and we need to carefully evaluate its safety boundaries, establish basic principles for the safe application of artificial intelligence, and ensure that large models only generate without making judgments, only advise without making decisions, and perform tasks without making final decisions. The ethical values of humans are too complex, and humans should not delegate their responsibility and obligation for judgment and decision-making to machines, even highly intelligent ones. Value judgment is an unshakable responsibility of human subjectivity.

免责声明:本文章仅代表作者个人观点,不代表本平台的立场和观点。本文章仅供信息分享,不构成对任何人的任何投资建议。用户与作者之间的任何争议,与本平台无关。如网页中刊载的文章或图片涉及侵权,请提供相关的权利证明和身份证明发送邮件到support@aicoin.com,本平台相关工作人员将会进行核查。