Author | Mary Branscombe

Compiled by | Yan Zheng

Source: 51CTO Technology Stack

OpenAI, the darling of generative AI, was recently embroiled in a chaotic, farcical "soap opera" that threatened to tear it apart. Microsoft stepped in firmly but amicably, like an adult walking into a room to settle a children's quarrel.

Hiring Sam Altman, offering jobs to a small army of OpenAI employees, helping bring Altman back to OpenAI, and seeking a seat on the board: Nadella led Microsoft in doing whatever it took to save OpenAI.

In the end, with Sam Altman's return to OpenAI and a reshuffled board, the drama came to a close after several twists and turns. The focus of the debate, however, has shifted to dependence on OpenAI.

It is not only the small and medium-sized businesses building on GPT that are uneasy about having bet on OpenAI; even Microsoft had to step in to save it. An unstable OpenAI benefits no one.

Microsoft and OpenAI: it's not just about money

In his opening keynote at the recent Ignite conference, Microsoft CEO Satya Nadella mentioned OpenAI 12 times and GPT models 19 times. Although Microsoft has outstanding AI researchers and large foundation models of its own, it still cares deeply about OpenAI's ChatGPT.

Microsoft has invested heavily in OpenAI over the years, most notably this year, with a $10 billion investment that is an enormous sum even for Microsoft.

Beyond the money, though, don't underestimate Microsoft's ambition to create AGI. That goal is out of reach in the short term, but Microsoft clearly has a new vision: it wants people to see it as an "AI company," and especially a "Copilot company," rather than a "Windows company."

In 2021, Microsoft spent $19.7 billion to acquire Nuance, the speech recognition giant behind Siri. In 2018, it spent $7.5 billion on GitHub.

In the same vein, this year Microsoft secured the technical foundation of ChatGPT through heavy investment and integrated it into its own cloud. Microsoft now runs OpenAI's large language models on Azure, expanding Azure's market share and profitability.

The cloud is just the tip of the iceberg. Microsoft's own products also use OpenAI technology, which is at the core of many Copilots.

Why? Because Microsoft has also invested heavily in hardware and software built around OpenAI, and almost every department and product line now uses OpenAI technology, which creates a degree of technological dependence.

Microsoft's ambition and strategy are inseparable from OpenAI

Like other foundation models, large language models take vast amounts of data, time, and computing power to train.

So how does Microsoft's heavy investment extract value from large models?

Microsoft's strategy is clear: treat large models as a platform, build a few models once, and then reuse them in increasingly customized and specialized ways.

For example, over the past five years Microsoft has been building the technology stack for Copilots, reworking everything from the design of its underlying infrastructure and data centers (it brought a new data center online every three days, on average, in 2023) to its software development environments, all to improve efficiency.

Starting with GitHub Copilot, almost every Microsoft product line now has one or more Copilot features. For consumers and office workers there are Microsoft 365 Copilot, Windows Copilot, Copilots in Teams and Dynamics, the renamed Bing Chat, and GPT-powered tools in Power BI. Beyond those, everything from security products (such as Microsoft 365 Defender) to Azure infrastructure, Microsoft Fabric, and Azure Quantum Elements has a Copilot.

In addition, Microsoft's customers have built their own custom Copilots on the same stack. Nadella cited six examples, from Airbnb and BT to Nvidia and Chevron.

Meanwhile, the recently launched Copilot Studio is a low-code tool for building custom Copilots from business data, with Copilot plugins for common tools such as Jira, SAP, ServiceNow, and Trello. This could make OpenAI's models ubiquitous.

To achieve this goal, Microsoft has established an internal pipeline to experiment with OpenAI's new base models in small services such as Power Platform and Bing, and then use the knowledge gained to build more professional AI services that developers can use.

This internal pipeline standardizes on Semantic Kernel and Prompt Flow for orchestrating AI services from conventional programming languages such as Python and C#, with a developer-friendly front end in the new Azure AI Studio. These tools help developers build and understand applications powered by large models without having to understand the models themselves. But they are built on expertise with OpenAI's models.

The hardware collaboration has barely begun to bloom; it cannot be allowed to wither now

Microsoft will invest heavily in the hardware infrastructure that OpenAI depends on. Specifically, it plans to invest in Nvidia and AMD GPUs, which are crucial for training OpenAI models and running inference on them. Microsoft will also invest in high-bandwidth InfiniBand interconnects to ensure fast, efficient communication between nodes.

It is worth noting that last year Microsoft acquired Lumenisity, a company that makes low-latency hollow-core fiber (HCF) cables. This fiber technology will play a crucial role in Microsoft's hardware infrastructure, providing faster and more reliable data transmission.

These investments are not only aimed at a specific base model but are intended to enhance the performance and stability of the entire OpenAI platform. Through these measures, Microsoft hopes to ensure that OpenAI continues to maintain its leading position in the field of generative artificial intelligence.

OpenAI not only works with Nvidia-powered AI supercomputers on the TOP500 list but has also helped refine Maia 100, the first AI chip Microsoft has released.

Microsoft's customers also want similar infrastructure, or at least the services that run on it (which describes almost every product and service Microsoft offers).

Previously, Microsoft's main approach to AI acceleration relied on the flexibility of FPGAs (field-programmable gate arrays). These accelerators were first used to speed up Azure networking and later to run real-time AI inference for Bing search. Developers could also use them to scale deep neural networks on AKS (Azure Kubernetes Service).

As new AI models and techniques emerge, Microsoft can reprogram its FPGAs into soft custom processors that accelerate them quickly, whereas a newly built fixed-function hardware accelerator may soon become outdated.

The big advantage of FPGAs is that Microsoft does not have to decide in advance which system architecture, data types, or operators AI will need in the coming years. Instead, it can keep modifying its soft accelerators as needed. In fact, parts of an FPGA's circuitry can even be reconfigured while it is running.

However, last week Microsoft announced its first generation of custom chips, among them the Azure Maia AI Accelerator. Maia comes with custom on-chip liquid cooling and custom racks, is designed specifically for "training and inference of large language models," and will run the OpenAI models behind Bing, GitHub Copilot, ChatGPT, and the Azure OpenAI Service.

This is a major investment that should significantly cut the cost and water usage of training and running OpenAI models. However, those savings will only materialize if training and running OpenAI models remains the primary workload.

The move demonstrates Microsoft's deep investment and long-term planning in AI, and it also shows how FPGAs and custom chips complement each other in AI acceleration.

In short, Microsoft has just built a custom hardware accelerator for OpenAI models that will not be deployed in its data centers until next year, and future designs are already planned. This is hardly the time for its close partner OpenAI to collapse; the chain reaction would be difficult to contain.

Microsoft's Growth Flywheel Needs to Keep Turning

So why doesn't Microsoft acquire OpenAI?

Microsoft may have been making acqui-hire offers over the past few days, but initially it did not intend to acquire OpenAI. Instead, it deliberately chose to work with a team outside the company, to ensure that the AI training and inference platform it was building met not only its own needs but broader ones as well.

However, as OpenAI's models kept their lead over the competition, Microsoft's bet on them grew. ChatGPT claimed 100 million weekly users just one year after launch, and a flood of new subscribers even forced OpenAI to temporarily pause ChatGPT Plus sign-ups.

And that doesn't include Microsoft's direct customers using OpenAI models, which is further evidence of their popularity and market share.

The truth is that whether you use ChatGPT from OpenAI or the OpenAI models built into Microsoft's products, it runs on Azure. The line between what Microsoft calls "first-party services" (its own code) and "third-party services" (anyone else's) has become quite blurred.

Customers Are Starting to Consider Switching to Competitors

Although OpenAI's developer relations team has been working hard to reassure customers that "our lights are still on," that systems are running stably, and that engineers are on call, reports indicate that OpenAI customers have already started approaching competitors Anthropic and Google.

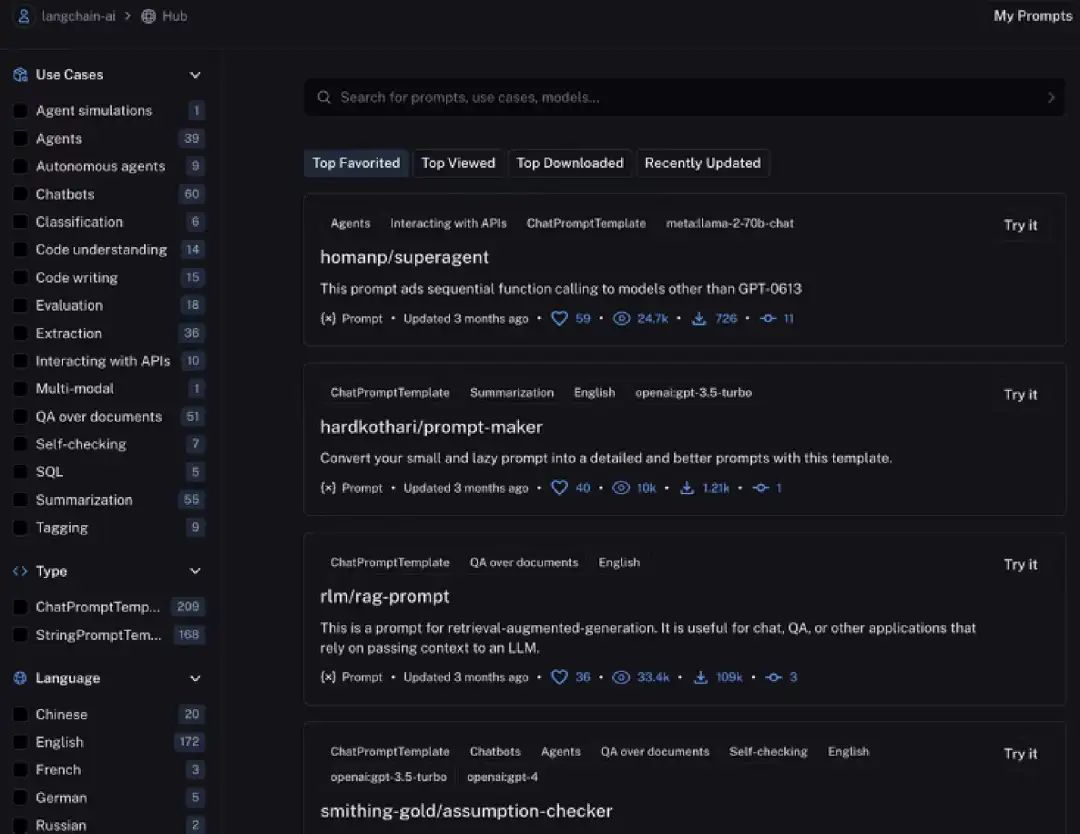

This may even include Azure OpenAI customers that Microsoft cannot afford to lose. For example, the startup LangChain, which builds a framework for creating LLM-powered applications, has just announced a major integration with Azure OpenAI Service. Yet it has also been sharing advice with developers on how to switch between LLMs, which can require significant changes to prompt engineering. For now, most of its examples target OpenAI models.

OpenAI: Microsoft's Serious Dependency

At Microsoft, almost every department and product line builds on the same internal versions of GPT, drawing on as much OpenAI expertise and simplification as possible. That is clearly efficient for Microsoft, but if OpenAI were to split apart or disappear, everything would become much harder.

As Microsoft CFO Amy Hood has put it, Microsoft holds "broad perpetual licenses to all OpenAI IP," up until AGI (if that ever happens). But today's large models are not enough; Microsoft is counting on the arrival of future models such as GPT-5.

Despite its name, OpenAI has never been a major open-source organization, and the few projects it has open-sourced do not include its core large language models. Microsoft, by contrast, has gradually embraced open source, releasing core projects such as PowerShell and VS Code as open source and relying on open-source projects such as Docker and Kubernetes in Windows Server and Azure, an intriguing contrast.

Yet Microsoft now depends on OpenAI far more heavily than on open source. Ironically, OpenAI's stability and governance have turned out to be surprisingly fragile.

That is why, as we have seen, Microsoft had to do whatever it took to ensure that OpenAI survived.

Original article link:

https://analyticsindiamag.com/microsoft-doesnt-really-need-openai-it-wants-agi/
