Source: SenseAI

Image Source: Generated by Wujie AI

The automobile is the culmination of the industrial era's mechanical and electronic technologies. With OpenAI's foray into autonomous driving and multimodal large language models (MLLMs) bringing general-purpose understanding to the vehicle, cars could become an important agent on the path to AGI.

Autonomous driving depends above all on safety and reliability, and MLLMs can play a role across many parts of the autonomous driving stack. Beyond offline training tasks such as data labeling and simulation, Ghost Autonomy CEO John Hayes hopes to apply them directly to driving tasks, a direction worth watching.

Ghost Autonomy, a pioneer in scalable autonomous driving software for consumer cars, announced on November 8, 2023, that it had received a $5 million investment from the OpenAI Startup Fund to bring multimodal large language models (MLLMs) to autonomous driving. The funding will accelerate the company's ongoing research and development of LLM-based understanding of complex scenes, which is exactly what the next stage of urban autonomous driving needs. With this round, Ghost's total funding has reached $220 million.

01. Optimizing Multimodal Large Language Models for Autonomous Driving

Brad Lightcap, Chief Operating Officer of OpenAI and manager of the OpenAI Startup Fund, said: "Multimodal models have the potential to expand the applicability of LLMs to many new scenarios, including autonomous driving and cars. By combining video, images, and sound to understand and draw conclusions, they may create a whole new way to understand and navigate complex or unusual environments."

LLMs keep improving and expanding into new application areas, disrupting existing computing architectures across industries. According to Ghost Autonomy, large language models will also have a profound impact on the autonomous driving software stack, and adding multimodal capabilities (accepting image and video input alongside text) will accelerate their use in autonomous driving scenarios.

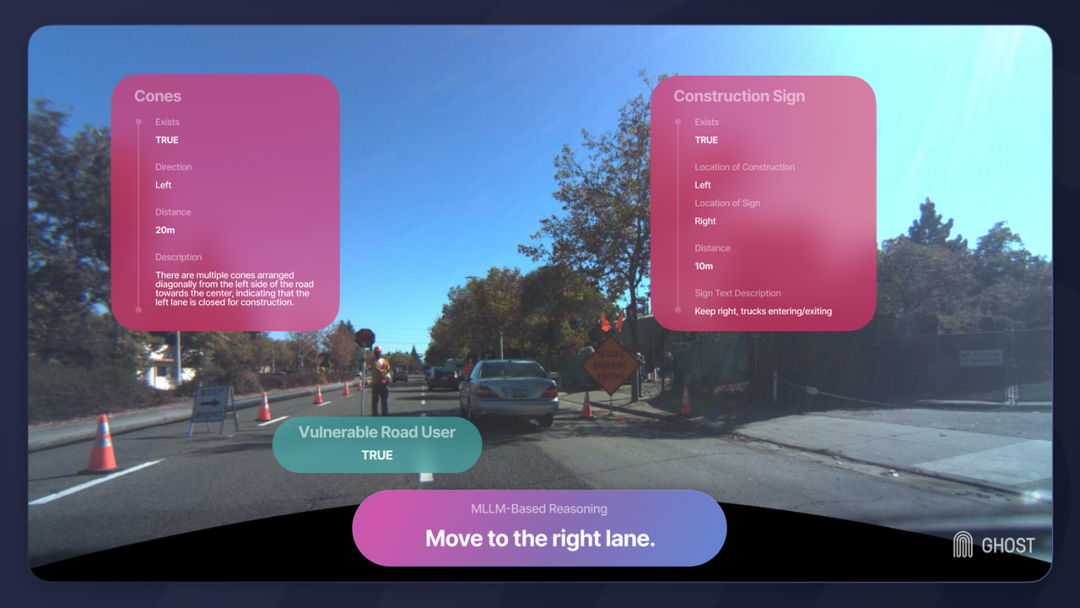

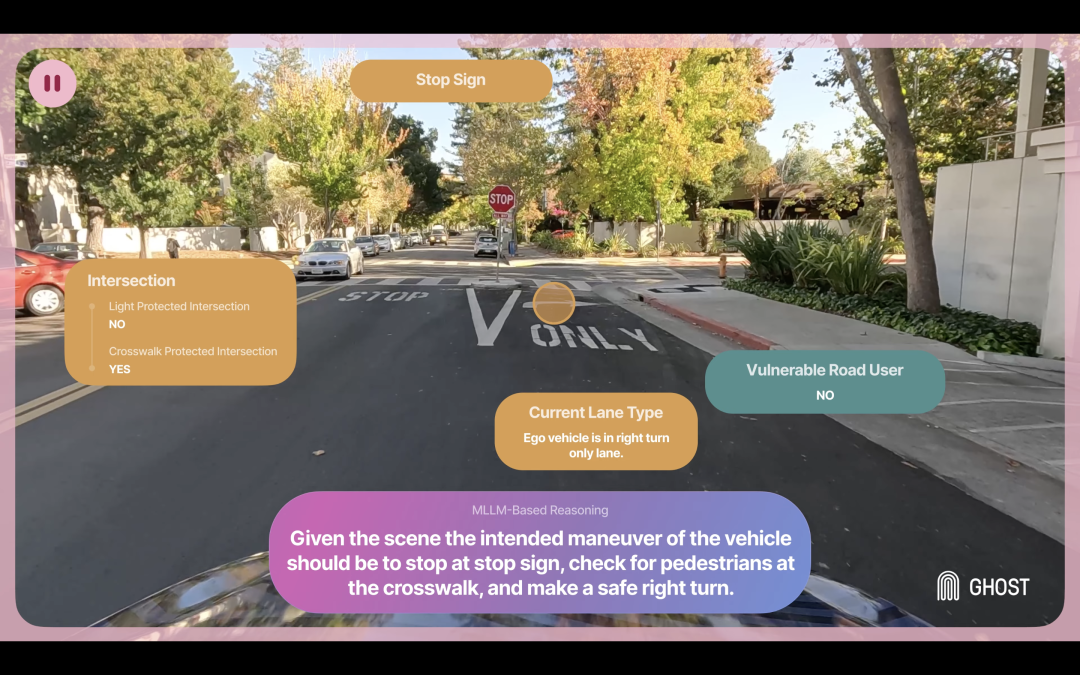

Multimodal large language models (MLLMs) have the potential to reason holistically about driving scenes, unifying perception and planning. By weighing the scene as a whole, they can give autonomous vehicles a deeper understanding of their surroundings and guidance on the correct driving action.

MLLMs could become a new architecture for autonomous driving software, one capable of handling rare and complex driving scenarios. Existing single-task networks are limited by their narrow scope and training, whereas MLLMs let an autonomous driving system reason about the driving scene as a whole, drawing on broad world knowledge to handle complex and unusual situations, even ones it has never seen before.

The ability to fine-tune and customize both commercial and open-source MLLMs is improving rapidly, which could greatly accelerate MLLM development for autonomous driving. Ghost is continuing to refine its use of MLLMs for autonomous driving while actively testing and validating these capabilities on the road. Its development fleet sends data to the cloud for MLLM analysis, and Ghost is also developing ways to feed the resulting MLLM insights back into the car's autonomous driving functions.

02. Architecture of Large Autonomous Driving Models

Large autonomous driving models offer an opportunity to rethink the entire autonomous driving technology stack.

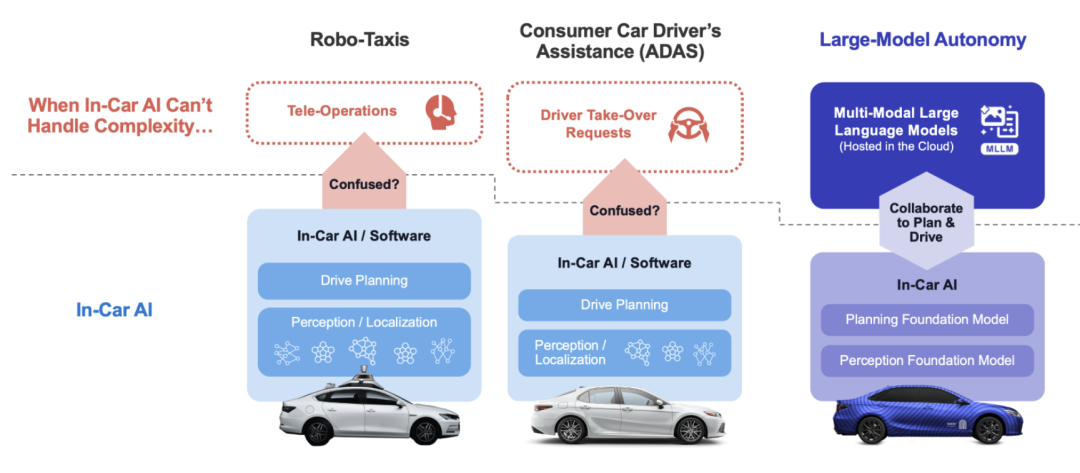

Today's autonomous driving technology is brittle. It is usually built "bottom-up": many separately developed AI networks and pieces of driving logic are stacked on top of complex sensor, mapping, and compute stacks to handle perception, sensor fusion, driving planning, and driving execution. This approach creates an intractable "long tail" problem: every corner case encountered on the road leads to more and more software patches in an attempt to iterate safely. When a scene becomes too complex for the onboard AI to drive safely, the car must "fall back." A robotaxi hands control to remote operators in a remote operations center; a driver assistance system alerts the driver to take over.
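To make the fallback behavior concrete, here is a minimal Python sketch of a bottom-up modular stack. The stage names mirror the tasks listed above, but the per-stage confidence scores, the 0.8 threshold, and the names `StageResult`, `VehicleType`, and `run_modular_stack` are hypothetical illustrations, not Ghost's or any vendor's actual design. The point shown is simply that the weakest module gates the whole system, and the fallback path depends on the product type.

```python
from dataclasses import dataclass
from enum import Enum


class VehicleType(Enum):
    ROBOTAXI = "robotaxi"
    DRIVER_ASSIST = "driver_assist"


@dataclass
class StageResult:
    name: str          # e.g. "perception", "planning"
    confidence: float  # hypothetical per-module score in [0.0, 1.0]


def run_modular_stack(stages: list[StageResult], vehicle: VehicleType,
                      min_confidence: float = 0.8) -> str:
    """Decide whether a hypothetical bottom-up stack keeps driving.

    The least confident module gates the whole pipeline: if any stage falls
    below the threshold, the car falls back instead of driving.
    """
    weakest = min(stages, key=lambda s: s.confidence)
    if weakest.confidence >= min_confidence:
        return "drive"
    # Fallback differs by product: remote operators vs. the human driver.
    if vehicle is VehicleType.ROBOTAXI:
        return f"remote_assist ({weakest.name})"
    return f"driver_takeover ({weakest.name})"


stages = [
    StageResult("perception", 0.95),
    StageResult("sensor_fusion", 0.90),
    StageResult("planning", 0.60),   # an unfamiliar corner case
    StageResult("execution", 0.97),
]
print(run_modular_stack(stages, VehicleType.ROBOTAXI))       # remote_assist (planning)
print(run_modular_stack(stages, VehicleType.DRIVER_ASSIST))  # driver_takeover (planning)
```

Because each module is tuned separately, handling a new corner case in a design like this typically means patching or retraining one specific stage, which is one way to read the "long tail" problem.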

MLLMs offer an opportunity to solve the problem "top-down." If a model trained extensively on world knowledge can reason about driving and be optimized to perform driving tasks, it can reason about the whole scene, from perception through to a suggested driving action. That would make the autonomous driving stack simpler to build while greatly expanding its capabilities, letting it reason about complex and variable urban driving scenes beyond what traditional planners are trained to handle.

Applying MLLMs to autonomous driving requires a new architecture, because today's MLLMs are too large to run on embedded in-vehicle processors. A hybrid architecture is therefore needed: a large MLLM running in the cloud works with specially trained models running in the vehicle, splitting driving tasks, as well as long-term and short-term planning, between the car and the cloud.
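The division of labor in such a hybrid design can be sketched as follows. This is a minimal Python illustration under stated assumptions: the `CloudAdvice` format, the `HybridPlanner` class, and the 2-second staleness budget are all hypothetical. It shows one plausible rule: the onboard model always produces the short-horizon action, while slower, asynchronous cloud MLLM guidance is applied only while it is still fresh.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CloudAdvice:
    """Long-horizon guidance from a cloud-hosted MLLM (hypothetical format)."""
    route_hint: str
    issued_at: float  # seconds on the vehicle's clock


@dataclass
class HybridPlanner:
    max_advice_age_s: float = 2.0            # assumed staleness budget
    latest_advice: Optional[CloudAdvice] = None

    def receive_cloud_advice(self, advice: CloudAdvice) -> None:
        # Cloud responses arrive asynchronously and may lag behind the car.
        self.latest_advice = advice

    def plan(self, now: float, onboard_action: str) -> str:
        """The onboard model always supplies the short-horizon action;
        cloud advice is attached only while it is fresh."""
        advice = self.latest_advice
        if advice is not None and now - advice.issued_at <= self.max_advice_age_s:
            return f"{onboard_action} | cloud: {advice.route_hint}"
        return f"{onboard_action} | onboard only"


planner = HybridPlanner()
print(planner.plan(0.0, "keep_lane"))  # keep_lane | onboard only
planner.receive_cloud_advice(CloudAdvice("merge left before the work zone", issued_at=1.0))
print(planner.plan(2.5, "keep_lane"))  # keep_lane | cloud: merge left before the work zone
# 3.0 s after it was issued, the advice exceeds the budget and is ignored:
print(planner.plan(4.0, "keep_lane"))  # keep_lane | onboard only
```

Keeping the onboard model in charge of the immediate action means a delayed or lost cloud response degrades guidance rather than stopping the car; that is a design assumption of this sketch, not a description of Ghost's system.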

Building, delivering, and verifying the safety of such a large-model driving architecture will take time, but that does not mean MLLMs cannot have a faster impact on the autonomous driving stack. MLLMs can start by improving data-center workflows: curating, labeling, and simulating autonomous driving training data, and training and validating in-vehicle networks. MLLMs can also be plugged into existing autonomous driving architectures, gradually taking on more and more driving tasks as their capabilities grow.
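As one example of the offline, data-center use described above, the sketch below routes MLLM-generated labels through a confidence threshold, auto-accepting confident labels and queueing the rest for human review. The `fake_mllm_labeler` function is a stand-in with canned answers, not a real model API; the `Label` type, the tags, the scores, and the 0.7 threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Label:
    clip_id: str
    tag: str
    confidence: float


def auto_label(clip_ids: list[str],
               mllm_labeler: Callable[[str], Label],
               review_threshold: float = 0.7) -> tuple[list[Label], list[str]]:
    """Split clips into auto-accepted labels and clips queued for human review."""
    accepted: list[Label] = []
    needs_review: list[str] = []
    for clip_id in clip_ids:
        label = mllm_labeler(clip_id)
        if label.confidence >= review_threshold:
            accepted.append(label)
        else:
            needs_review.append(clip_id)
    return accepted, needs_review


def fake_mllm_labeler(clip_id: str) -> Label:
    # Stand-in for a real MLLM call; returns canned answers for the demo.
    canned = {
        "clip_001": ("construction_zone", 0.92),
        "clip_002": ("unusual_pedestrian_behavior", 0.41),
        "clip_003": ("emergency_vehicle", 0.88),
    }
    tag, conf = canned.get(clip_id, ("unknown", 0.0))
    return Label(clip_id, tag, conf)


accepted, review = auto_label(["clip_001", "clip_002", "clip_003"], fake_mllm_labeler)
print([lbl.tag for lbl in accepted])  # ['construction_zone', 'emergency_vehicle']
print(review)                         # ['clip_002']
```

Passing the labeler in as a callable keeps the routing logic independent of whichever model, commercial or open-source, actually produces the labels.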

John Hayes, Founder and CEO of Ghost Autonomy, said: "For a long time, solving complex urban driving scenes in a scalable way has been the holy grail of this industry. LLMs provide a breakthrough that will ultimately enable everyday consumer vehicles to reason and navigate in the most challenging scenes. Although LLMs have been proven to be valuable for offline tasks such as data labeling and simulation, we are excited to be able to directly apply these powerful models to driving tasks to fully realize their potential."

Ghost's platform helps leading automakers bring artificial intelligence and advanced autonomous driving software to their next-generation vehicles, and the company is now expanding its functionality and use cases through MLLMs. Ghost is currently testing these capabilities with its development fleet and working with automakers to jointly validate and integrate new large models into the autonomous driving technology stack.

