Author: Chen Xi, Silicon Valley 101

So which company stands at the forefront of the AI wave, mythologized and admired while also being fiercely criticized? That's right: the leader of this AI wave, OpenAI.

But OpenAI's rise has not been smooth sailing. Its history reflects the idealism, conflicts, choices, and power struggles of a group of Silicon Valley giants, top scientists, and investors.

It is a fascinating story full of details: a group of top AI researchers with pure convictions; how Musk indirectly set OpenAI on the path to commercialization; how OpenAI's core leader Sam Altman set idealism aside and teamed up with Microsoft; the clash between Microsoft CEO Satya Nadella and co-founder Bill Gates; and the untold story behind ChatGPT, which stunned the world. Our Silicon Valley 101 team has set up an internal AI research group, joined by a number of industry professionals, to dig deeper into interesting technologies and stories from a professional perspective. And the rise of OpenAI will be one of the most important stories shaping the future of humanity.

At the end of March 2023, Elon Musk and a group of technology leaders signed an open letter that cited concerns about AI safety and called for a pause in training AI systems more powerful than GPT-4.

Sam Altman, for his part, is exceptionally good at public relations and at designing business paths. As the pro-development and anti-development camps grow louder, Silicon Valley has begun to take sides, and many insiders have recently revealed details of OpenAI's development. Drawing on the available information, including some exclusive leads from our Silicon Valley 101 team, we will review the rise of OpenAI in detail.

01 Idealism: A Group of Pure Top Scientists

In 2014, Google acquired DeepMind, a top artificial intelligence research lab, for $600 million. DeepMind later built AlphaGo, which defeated Lee Sedol and Ke Jie at Go.

In Silicon Valley, a group of giants couldn't sit still. They saw the potential and threat of artificial intelligence earlier than the public: in the future, whoever possesses the most powerful AI technology will have the most unshakable power.

They feared that Google would become a monopolistic AI powerhouse – and even Google's motto at the time, "Don't Be Evil," couldn't dispel their concerns. According to Wired magazine, on a summer evening in 2015, several of Silicon Valley's most influential figures gathered in a private meeting room at the luxurious Rosewood hotel next to Stanford University.

Yes, that's the most expensive luxury hotel in Silicon Valley. Unlike the many Silicon Valley projects that started in garages, this one was born with a silver spoon, which may hint that AI projects are destined to be money-burning machines.

The meeting was convened by Sam Altman, head of the Silicon Valley incubator YC, who wanted to bring together top AI researchers to discuss building an AI lab. The attendees included Ilya Sutskever, then an AI researcher at Google Brain, and Greg Brockman, the former Chief Technology Officer of the online payment platform Stripe. Musk was there too: he was an old friend of Altman's, AI technology is crucial to his companies Tesla and SpaceX, and he had been warning about the safety risks of artificial intelligence for years. So, with top-tier connections and capital in hand, they set out to make things happen with a handful of top AI researchers.

What they wanted was to be the opposite of Google: an AI lab not controlled by any company, investor, or individual. Everyone agreed that this was the right path to bring humanity closer to building artificial general intelligence (AGI) safely. (AGI: Artificial General Intelligence, an AI with general, human-level intelligence that can perform any intellectual task a human can.)

When it comes to starting a business, the most important thing is to find people, money, and direction. If you want to be the leader in exploring AGI worldwide, you need not just ordinary talent, but the most top-notch talent in the AI industry. But these talents are distributed among the major tech giants and are highly paid. How can they be attracted to come out?

Brockman first thought of the three giants of neural networks who would later share the 2018 Turing Award: Yann LeCun, Yoshua Bengio, and Geoffrey Hinton.

A side note: this round of AI breakthroughs happened precisely because the once-unfavored "neural network" approach finally made progress, and much of the credit goes to these veteran scientists, who persevered through decades of AI winter. We plan to launch a series on large AI models to tell the story of their origins. Stay tuned to Silicon Valley 101.

Back to Brockman. Of the three giants, Hinton was at Google and LeCun was at Facebook; both were older and unlikely to leave for a full-time role. Bengio was mainly active in academia and not very interested in industry, but he gave Brockman a list of the best researchers in neural networks. Remember this moment: there is a small ironic twist involving Bengio at the end.

To Brockman, the list was like a secret martial-arts manual, and he quickly began contacting everyone on it.

But here's the problem: as we just mentioned, these top researchers were highly paid at the tech giants. It would not be easy to lure them to a non-profit with an uncertain future and nothing to offer but grand promises. How to break through? This is where another of Silicon Valley's geographical advantages comes in: the vineyards of Napa Valley.

About an hour's drive north of San Francisco lies the most famous wine region in the United States: Napa Valley. What you can't negotiate over coffee at a Starbucks in Silicon Valley, you might be able to negotiate over a weekend at a Napa vineyard. Brockman, an old hand at startups, picked 10 of the most important researchers from Bengio's list and took them to spend a weekend at a Napa Valley vineyard. As Brockman told Wired, when you gather people together at a vineyard, chemistry happens easily: you're stuck there, so you have to talk, and you have to take part.

At the end of the weekend, as they were about to leave, Brockman invited the ten researchers to join OpenAI and gave them three weeks to decide. During those three weeks, the Silicon Valley tech giants got wind of OpenAI and began offering raises to keep these top AI researchers. Because of these retention efforts, OpenAI had to postpone announcing its founding. Keep in mind that the salaries these giants were already paying were very high.

The original media report said the pay packages "eclipsed the cost of a top quarterback prospect in the NFL." I'm not an American football fan and had no sense of the scale, so I looked it up and was shocked: top NFL prospects earn millions, even tens of millions, of dollars. That means the top AI researchers at the tech giants were paid at this level too, possibly including stock.

Moreover, according to one of the researchers, when they said they planned to leave, the tech giants countered with offers two to three times their already high salaries.

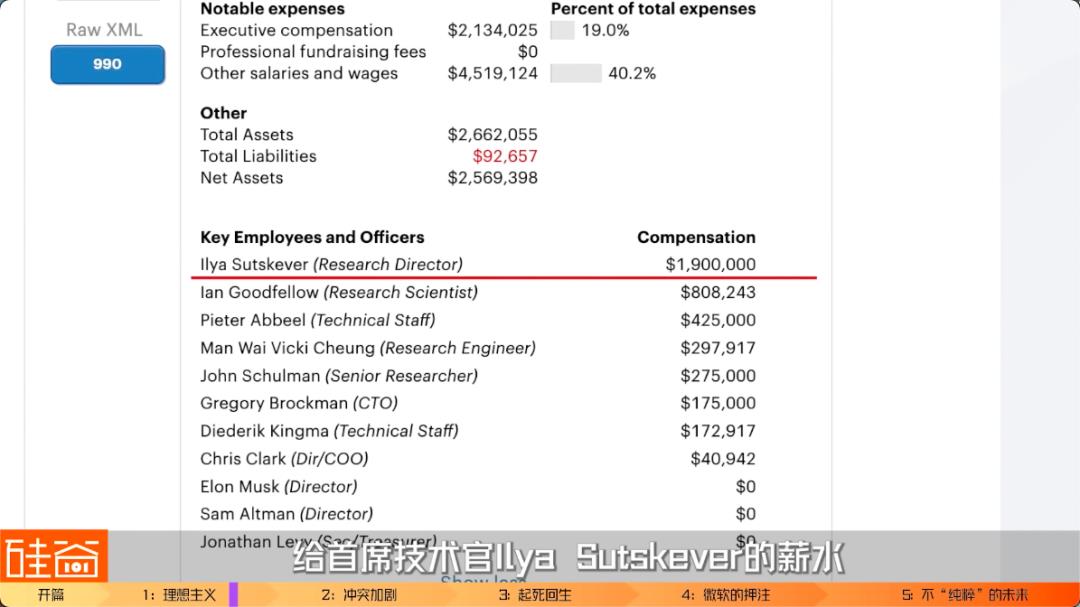

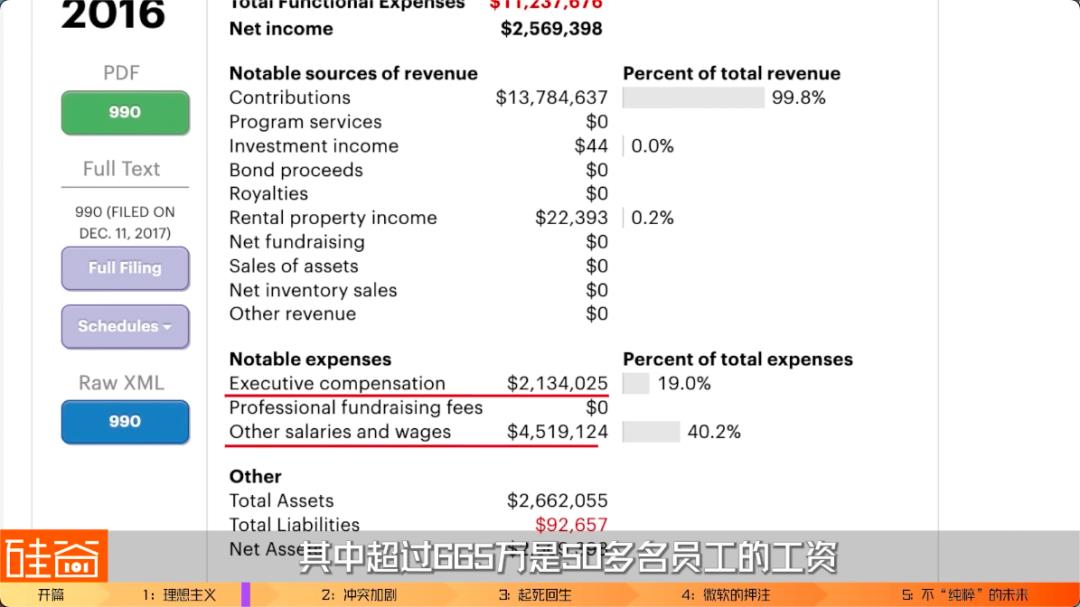

Yet despite these tempting offers, nine of the ten invited AI scholars turned down the money and joined OpenAI. Perhaps the Napa wine did its job. OpenAI could not match such salaries, and because it started as a non-profit, it had to disclose its expenses every year. Tax documents show that research director Ilya Sutskever was paid about $1.9 million and another top researcher, Ian Goodfellow, about $800,000. Both were poached from Google. Their pay drew some controversy when it became public, since it seemed too high for a non-profit, but it's worth remembering that both took significant pay cuts when they left Google for OpenAI. I dug up OpenAI's tax filings from previous years, and they contain many interesting details.

In its first year, OpenAI's expenses were $11.23 million, of which more than $6.65 million went to salaries for over 50 employees. Averaged out, that is actually quite decent by Silicon Valley standards.
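For a rough sense of the average, take both filing figures at face value. This is a simplifying assumption: "over $6.65 million" is treated as $6.65 million and "over 50 employees" as exactly 50, so the result is only an approximation.

$$\text{average salary} \approx \frac{\$6{,}650{,}000}{50} = \$133{,}000 \text{ per employee}$$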

These top scientists gave up high salaries to join a non-profit full of uncertainty, with no equity, no generous perks, no lavish annual parties, and no clear path to promotion, and they did it for a very pure purpose. That purpose is written into OpenAI's founding charter: to ensure that artificial general intelligence (AGI) benefits all of humanity.

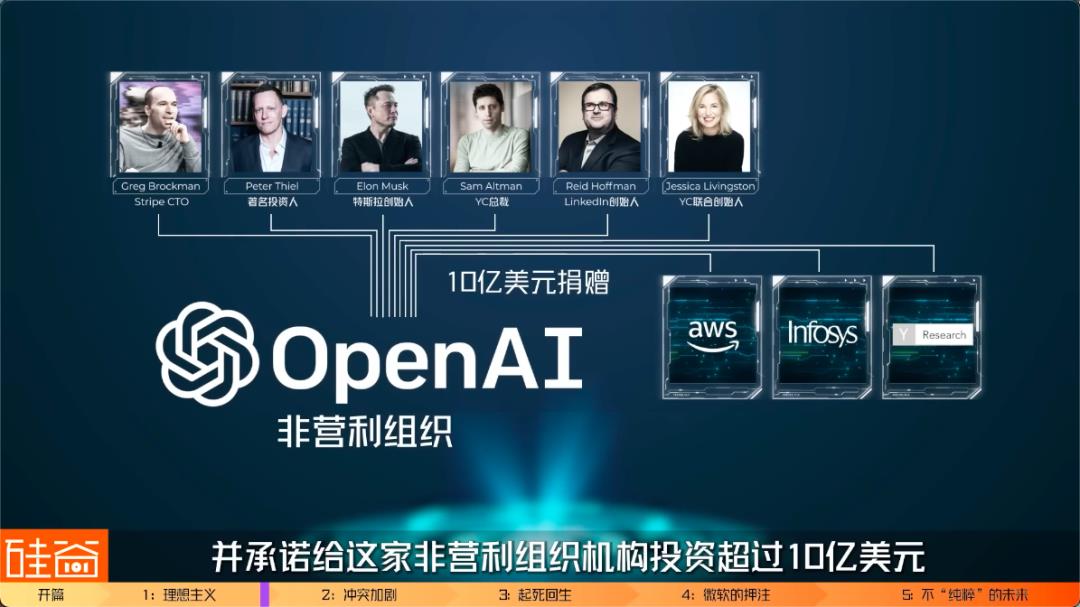

At the end of 2015, YC President Sam Altman, former Stripe CTO Greg Brockman, LinkedIn founder Reid Hoffman, YC co-founder Jessica Livingston, renowned investor Peter Thiel, Tesla CEO Elon Musk, Amazon Web Services (AWS), Infosys, and YC Research announced the founding of OpenAI and pledged over $1 billion to the non-profit. Because OpenAI was a non-profit, no one owned shares or any other stake in it, and its assets and income would never be distributed to any donor or owner. OpenAI said it would open its patents and research to the public and "freely collaborate" with other institutions and researchers.

Doesn't that sound idealistic, even utopian? It was indeed inspiring at the start, but conflicts soon arose inside OpenAI.

02 Escalating Conflicts: Musk's Rift

The cause of the conflict was simple: AI research consumes enormous amounts of money, resources, computing power, and time. At first, these top researchers and influential Silicon Valley investors could work for the love of it, but once they got going, they found it was a bottomless money pit. And look at OpenAI's competitors: every one of them was a tech giant with hundreds of billions of dollars. An early OpenAI intern we spoke with recalled that the desks were surrounded by industry heavyweights, each with different ideas, expertise, and focus, and no common direction had yet been found to unite this group of the world's top AI scientists. This was the hardest period, and no one could win the others over.

Indeed, in the early days, OpenAI was being outperformed by Google's DeepMind in various ways.

The tax filings also show that the $1 billion pledged by Silicon Valley heavyweights did not arrive all at once but in many small installments, so OpenAI's annual budget was limited and it could not afford to spend freely. In 2017, for example, OpenAI's functional expenses were $28.66 million, of which $7.9 million went to cloud computing; even the CPUs and GPUs for training models had to be rented from Google. By comparison, DeepMind, backed by Google, spent a total of $442 million in 2017. The gap in resources and funding is striking.
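A quick calculation on the 2017 figures above makes the gap concrete. Amounts are in millions of US dollars and come straight from the numbers cited; this is simple arithmetic, not additional data.

$$\frac{442}{28.66} \approx 15.4, \qquad \frac{7.9}{28.66} \approx 27.6\%$$

In other words, DeepMind spent roughly 15 times as much as OpenAI that year, and cloud computing alone took more than a quarter of OpenAI's budget.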

Early on, OpenAI's research made little splash, while Google's achievements were remarkable.

For example, in 2016 OpenAI released OpenAI Gym and Universe, which earned it some recognition in the industry. But that same year, DeepMind's AlphaGo defeated top Go player Lee Sedol, drawing worldwide attention to AI. In 2017, OpenAI's bot finally beat top human players at Dota 2, and you might think it would gain momentum, but Google released the Transformer model that same year, a model that shocked the entire industry and laid the foundation for all later large language models. Then in 2018, OpenAI released the first-generation GPT, built on the Transformer, but Google soon followed with the groundbreaking BERT, whose large version had roughly three times as many parameters as GPT and outperformed it on almost every benchmark.

A quick note: if the products, technologies, and models above sound confusing, don't worry; our upcoming series on large models will cover how these technologies evolved. For today's video, what matters is that until the end of 2018, Google was completely outperforming OpenAI.

At this point, the industry was very pessimistic about OpenAI, and many of the top researchers it had recruited had gone back to companies like Google and Facebook. The company faced serious talent loss and instability.

In 2018, our Musk couldn't sit still. He stormed in and asked, "What are you all doing? You're burning money with no results. You promised to get ahead of Google and be the first to achieve AGI, and you're falling this far behind!" Anyone familiar with Musk knows he is very possessive and controlling. According to the tech news site Semafor, Musk proposed directly to the OpenAI board: "I want to take over OpenAI completely, and I will be the CEO."

But in 2018, as you may remember, Musk already had his hands full at Tesla. Model 3 production was stuck in "production hell" again, the stock was heavily shorted, there was talk of imminent bankruptcy, and Musk was sleeping at the Tesla factory every night.

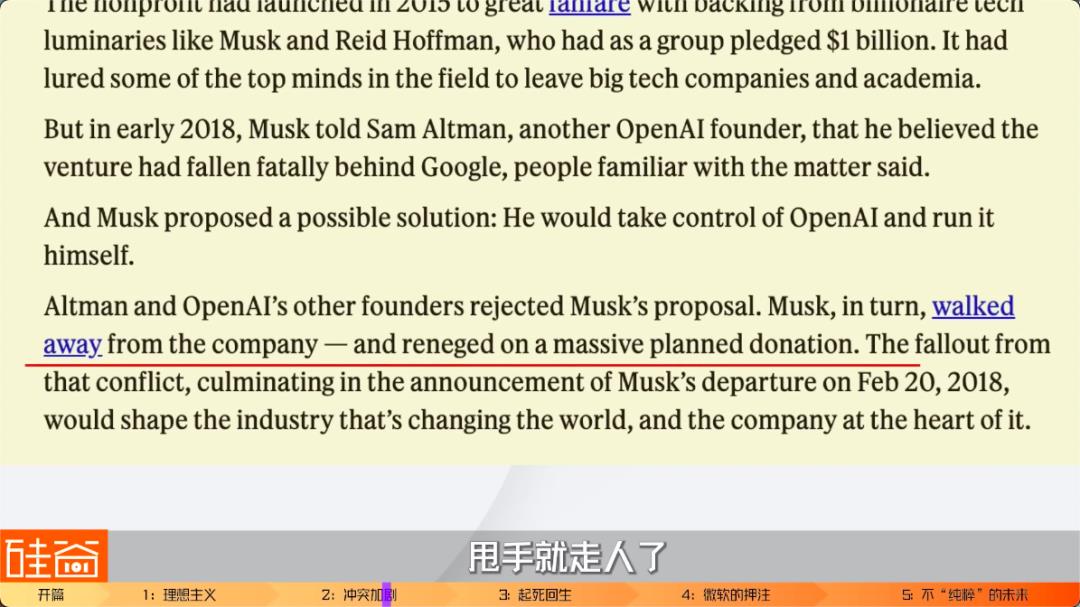

So Musk wanted to take over OpenAI, a completely different line of business from Tesla. The OpenAI board naturally thought this was not feasible and rejected his proposal. According to Semafor and Wired, Musk was very unhappy and walked away.

Of course, there are other accounts, including that Musk had earlier poached OpenAI's core researcher Andrej Karpathy to head autonomous driving at Tesla, which upset people at OpenAI, who felt their talent was being raided. So conflicts and tensions slowly built up. Oh, and by the way, Karpathy recently moved back to OpenAI; there seems to be quite a bit of drama behind the scenes. There are also some conspiracy theories we won't go into here. In short, in 2018 Musk announced his departure from the board. At the time, media coverage made it look amicable, and Musk said he would continue to donate to and support OpenAI after leaving.

However, after Musk left, he immediately stopped donating.

Wired and Semafor reported that Musk had promised to donate $1 billion to OpenAI over several years, but by the time he left, he had delivered only about $100 million. His departure and unfulfilled pledge put OpenAI in a very difficult position: training AI models was more expensive than expected, and if the company could not find a technical breakthrough and kept losing to Google and other big companies, it would soon have to shut down.

It was at this time that Sam Altman realized that he had to step up.

Before 2018, Sam was just a "director" within the company, and the CEO had always been Greg Brockman. According to the recollections of an early intern at OpenAI, Sam was not often seen in the company, because at that time, he was still the head of YC, and a large part of his time was actually spent managing things there and incubating YC's startup projects.

OpenAI's tax documents show that it wasn't until 2018 that Sam added the title of President to his position as a director. Subsequently, Musk left the OpenAI board, and in 2019, Sam succeeded Brockman as the CEO of OpenAI, while Brockman became the CTO. This meant that Sam resigned from his position as the President of YC and officially shifted his focus to OpenAI.

For Sam, it was the rift with Musk that made him question the non-profit model. And it was because he abandoned the pure non-profit model and explored a more realistic business path that OpenAI and ChatGPT exist today.

03 Resurrection: The Business Path Chosen by Sam Altman

Let's talk about Sam for a moment. He is a rather mysterious figure; like Musk, he is often jokingly suspected of being a robot or an AI. He once joked to a reporter, "I need to go to the bathroom a few more times so you won't suspect I'm an AI."

Sam is very young. Born in 1985 to a Jewish family in Chicago, Illinois, and raised in Missouri, he learned to program at 8, came out as gay at 16, and went to Stanford for his undergraduate studies. In his sophomore year, he dropped out to start a company and joined the first batch of startups at the Silicon Valley incubator Y Combinator (YC). He built an app called Loopt, which was acquired for $43 million in 2012, earning Sam his first fortune, $5 million, at age 27. YC founder Paul Graham, who was preparing to step back, then pushed the 28-year-old Sam into the role of YC President. While leading YC, Sam also co-founded Hydrazine Capital with other investors to back YC companies; the fund returned tenfold within four years, giving Sam financial freedom.

In just a few lines, you can see that Sam is highly talented, extremely smart, driven, and obsessed with efficiency.

In 2016, The New Yorker published a very long article about Sam, in which a few details stood out:

First, the description of Sam's appearance: very thin, about 5'7" tall and only 130 pounds, with green eyes as sharp as a great horned owl's in the dark. The article also noted that Sam has an odd way of sitting and is often mistaken for having Asperger's syndrome.

In his work style, Sam is very demanding of himself and his colleagues, with high expectations and a somewhat cold, paranoid personality. He has little patience for things and people that don't interest him, and he is known to stare at employees without blinking during conversations, pressuring them to speak faster. He is extremely efficient, hardworking, and smart, much like Musk and Jobs.

However, Sam's greatest strengths are his clear thinking and intuitive grasp of complex systems, especially business strategy, along with his ambition. He is less interested in technical details than in technology's potential impact on the world. This ability is crucial in tech entrepreneurship, which may be why Paul Graham surprised many by choosing Sam as his successor at YC.

As head of YC, Sam showed the full extent of his ambition and essentially restructured YC's model. Previously, YC selected about 200 startups a year from tens of thousands of applications, gave each $120,000 for a 7% stake, incubated them for three months, and then had them present to a crowd of well-known Silicon Valley VCs on Demo Day, after which they raised more money, grew, and expanded.
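For a sense of scale, the standard deal described above implies a post-money valuation of roughly $1.7 million per startup, and about $24 million in checks per year across a cohort. This is back-of-the-envelope arithmetic on the article's figures, assuming exactly 200 companies a year and ignoring any other deal terms:

$$V_{\text{post}} = \frac{\$120{,}000}{0.07} \approx \$1.71\text{ million}, \qquad 200 \times \$120{,}000 = \$24\text{ million}$$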

Sam, however, was dissatisfied with this model. He felt it merely sent founders off on a shaky adventure, when what he wanted was to build a powerful fleet of ironclads. That meant YC should not settle for a 7% stake; it should put more capital into projects early and keep investing in them as they grew after incubation. Sam also wanted to create not 200 companies a year but a thousand, or even ten thousand, and hoped these companies, worth trillions of dollars combined, could truly drive human progress.

Awkwardly, Silicon Valley has found itself in a bind in recent years: investors and founders talk loudly about changing the world, but VCs often focus on "penny-pinching" questions such as "When can we monetize?", "Is the customer growth curve attractive?", and "Can margins be higher?", and they want founders to build rental platforms or delivery services in some niche. Meanwhile, they have grown increasingly wary of "moonshot" projects such as nuclear fusion, biotechnology, and artificial intelligence. Hence the frequent claim in recent years that "Silicon Valley is dead."

After taking over YC, Sam soon wrote an article titled "Science seems broken" to sound the alarm for Silicon Valley and academia, and he invited companies in energy, biotech, AI, robotics, and other hard-tech fields to apply to YC. He believed that, as the most influential incubator, YC could keep students interested in these fields and give founders confidence only by openly backing hard-tech projects. Under his leadership, YC raised more money to fund projects and even set up a non-profit, YC Research, to pursue pure research on visionary scientific ideas. The renowned venture capitalist Marc Andreessen remarked, "Under Sam's leadership, YC's level of ambition has increased tenfold." Perhaps this is why Paul Graham insisted on Sam as his successor: he saw that "Sam's goal is to create the entire future."

However, after Sam had led YC for several years, OpenAI reached a crossroads, and Sam left YC to work at OpenAI full-time. When Musk withdrew from the board and cut off funding, Sam realized that unless he stepped forward to lead a transformation, OpenAI's only option would be to shut down. He now had to redesign its structure and strike a balance between business and social responsibility.

Sam approached Silicon Valley's renowned investors Reid Hoffman and Vinod Khosla.

Hoffman, as mentioned earlier, was one of OpenAI's original donors, while Khosla is a billionaire co-founder of Sun Microsystems who later founded his own venture firm, Khosla Ventures. Sam asked them for tens of millions of dollars, but no longer as a donation: the money came with an agreement to create a for-profit arm of OpenAI. After discussing future returns, Hoffman and Khosla wrote the checks.

On March 11, 2019, OpenAI announced a for-profit entity, OpenAI LP, under its non-profit parent, OpenAI Inc. To retain control, OpenAI Inc serves as the general partner, so the OpenAI Inc board is responsible for managing and running the new company. Three seats on the OpenAI Inc board were held by employees: CEO Sam Altman, Chief Scientist Ilya Sutskever, and Chairman and President Greg Brockman.

The non-employee board members included Quora co-founder and CEO Adam D'Angelo, investor Reid Hoffman, former Republican congressman Will Hurd, Helen Toner, Director of Strategy at Georgetown University's Center for Security and Emerging Technology, and Tasha McCauley, CEO of the robotics company Fellow Robots. (By the way, her husband is someone you might not expect.) That's right: it's Hollywood heartthrob Joseph Gordon-Levitt, known for "500 Days of Summer," "The Dark Knight Rises," "Inception," and "Snowden."

This board list alone offers plenty to gossip about, but wait, there's another big scoop. Do you know who Shivon Zilis is? She is the mother of Musk's youngest twins. She became an adviser to OpenAI in 2016, presumably around when she met Musk, and later followed Musk to Tesla and then to his brain-computer interface company Neuralink, all while remaining on OpenAI's board.

However, after Musk and Sam clashed in March, Zilis, perhaps finding her position awkward, left the board. Gossip time is over; back to the company structure.

Only a few of these board members hold shares in OpenAI LP. Note that all shares are in the for-profit OpenAI LP, since no one can own shares in the non-profit OpenAI Inc.

Investors and employees hold stakes in OpenAI LP as limited partners (LPs), with returns capped at 100 times their investment. OpenAI is unlikely to go public or to accept an acquisition offer, so investors exit through annual profit sharing. Once cumulative distributions exceed the 100-fold cap, the excess automatically flows to the non-profit OpenAI Inc. When the interests of the LPs conflict with the company's mission, the board members who hold no shares are the ones who vote.
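The capped-return rule can be sketched in a few lines of Python. This is a minimal illustration, assuming a single investor, a fixed cap multiple, and that each year's distribution is known before the cap is applied. The function names `split_payout` and `run_years` are hypothetical, and OpenAI's actual partnership agreement is certainly more detailed.

```python
def split_payout(invested, cap_multiple, paid_so_far, payout):
    """Split one profit distribution between a capped investor and the non-profit.

    invested     -- capital the limited partner put in (hypothetical units)
    cap_multiple -- return cap, e.g. 100 for the 100x cap described above
    paid_so_far  -- cumulative distributions the investor has already received
    payout       -- this period's distribution owed to the investor before the cap
    """
    cap = invested * cap_multiple
    room_left = max(0.0, cap - paid_so_far)  # how much the investor may still receive
    to_investor = min(payout, room_left)     # never pay past the cap
    to_nonprofit = payout - to_investor      # the excess flows to OpenAI Inc
    return to_investor, to_nonprofit


def run_years(invested, cap_multiple, yearly_payouts):
    """Apply the cap year by year; return (total to investor, total to non-profit)."""
    paid = 0.0
    to_nonprofit_total = 0.0
    for payout in yearly_payouts:
        to_investor, to_nonprofit = split_payout(invested, cap_multiple, paid, payout)
        paid += to_investor
        to_nonprofit_total += to_nonprofit
    return paid, to_nonprofit_total


# Example: $1 invested under a 100x cap, with $60 distributed in each of two years.
print(run_years(1.0, 100, [60.0, 60.0]))  # (100.0, 20.0)
```

In the example, the investor receives the full $60 in year one but only $40 in year two, because that is all the room left under the $100 cap; the remaining $20 goes to the non-profit.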

An important point: Sam Altman himself holds no shares in this new structure, not a single one, and draws only a base salary of a little over $60,000 a year. According to reports, Sam has said publicly that he is already rich enough and doesn't need more money. His decision not to take equity put off many investors, because in Silicon Valley's logic a CEO needs a strong incentive to build the company, and declining equity may signal a lack of confidence in its prospects. However, given the company's voting rules, it can be inferred that Sam declined equity so that he keeps a vote when LP interests conflict with the company's mission, trading financial incentives for power.

Just as Paul Graham, who understands Sam the best, said in a media interview: "Why would someone do something that won't make them richer? One answer is that many people do this once they have enough money. Another answer is that he likes power." Sam could be either of the two.

OpenAI's shift toward commercialization drew a lot of outside controversy and criticism. But Sam seemed to have no choice. When he realized idealism alone would not work, he turned decisively to pragmatism while trying to balance the two as best he could. His choices may well have been the best available.

By 2018, OpenAI had committed to the GPT path built on the Transformer, which required enormous computing power to train ever-larger models. Going forward, it would need both talent and funding.

The new structure of OpenAI LP provides employees with equity incentives similar to those of startups, which stabilizes morale. Additionally, this unprecedented new structure in Silicon Valley has also attracted an equally ambitious major LP investor: Microsoft.

04 Nadella's Choice: Ignoring Gates' Opposition

Looking at Microsoft's investment in OpenAI today, many people say Microsoft was very clever and far-sighted and that the investment was excellent. But if we go back in time, we find that the decision was not so simple.

Since succeeding Steve Ballmer as Microsoft's CEO in 2014, Satya Nadella has strongly supported the development of AI technology. Still, Microsoft had stumbled in AI before.

In 2016, Microsoft launched an AI chatbot called Tay on social media platforms such as Twitter, Facebook, Snapchat, and Instagram. But internet users quickly manipulated Tay, deliberately teaching it to make offensive remarks, and it was shut down within a day.

Despite this setback, Nadella still saw great potential in AI and encouraged every Microsoft division to use AI models to improve its products. Microsoft also reached an agreement with Nvidia to develop and train AI using GPUs. All of this made Microsoft a very attractive partner in Sam Altman's eyes, and he made several trips to Seattle to try to close a deal.

However, when Nadella tried to promote cooperation with OpenAI within Microsoft, he faced considerable resistance, some of which came from Microsoft's co-founder, Bill Gates.

Although Bill Gates had mostly appeared in the media for his philanthropy and his work on poverty, disease, and climate change since stepping back from day-to-day work at Microsoft in 2008, he still spent about 20% of his time reviewing new Microsoft products. In 2019, when Microsoft was in talks with OpenAI, Gates personally took part in reviewing the partnership; he disliked the investment and remained skeptical.

However, Gates no longer formally took part in Microsoft's daily operations, and Nadella had the authority to decide on his own. So Nadella decided to bet on AI.

In July 2019, Microsoft announced a partnership with OpenAI and invested $1 billion in the startup, becoming a major LP investor in OpenAI.

But look closely at the deal and you'll see how shrewd Nadella was. The media widely reported that Microsoft had invested $1 billion in OpenAI, but in reality much of that $1 billion came as Azure cloud credits rather than new cash. OpenAI could use Microsoft's cloud to train and run its AI models without paying cash, and in return Microsoft gained exclusive rights to use most of OpenAI's technology in products like Bing.

For Microsoft, there was an added benefit: a blow to Google, since OpenAI had been one of Google Cloud's largest customers, paying a total of $120 million in cloud fees in 2019 and 2020. By gaining a new partner without spending much cash while striking at a competitor, Nadella killed two birds with one stone.

However, it should be noted that during the cooperation with OpenAI, Nadella faced a lot of pressure, mostly in terms of finance and resources, because of the massive computing power required by OpenAI.

GPT-2, released in 2019, had 1.5 billion parameters; GPT-3, released in May 2020, had 175 billion. Each new generation of GPT increased the parameter count dramatically.
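The size of that jump can be checked directly from the two parameter counts above:

$$\frac{175 \times 10^9}{1.5 \times 10^9} \approx 117$$

So GPT-3 had more than a hundred times as many parameters as GPT-2, released only about a year earlier.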

To train ChatGPT, Microsoft built OpenAI a supercomputer ranked among the world's top five, using 10,000 Nvidia A100 GPUs. Each DGX A100 server holds 8 GPUs and costs $200,000, so the system amounts to 1,250 DGX A100 servers, or $250 million in total. On top of that, each training run of a large model costs millions of dollars, and annual cloud costs exceed $1 billion.
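The hardware estimate above follows from two steps using the article's own figures. It counts server list prices only, so it is a rough floor rather than a full cost of the system:

$$\frac{10{,}000 \text{ GPUs}}{8 \text{ GPUs per server}} = 1{,}250 \text{ servers}, \qquad 1{,}250 \times \$200{,}000 = \$250{,}000{,}000$$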

Meanwhile, Microsoft faced a capital winter as the Federal Reserve began raising interest rates in 2022, and it laid off about 10,000 employees. So Nadella's full backing of OpenAI came with pressure and risk. The New York Times reported that between 2019 and 2023, Microsoft invested another $2 billion in OpenAI, but the details were never made public, so the terms of that $2 billion are unknown. That hardly matters, though, because Nadella would soon realize he had made the right bet.

The release of GPT-3 by OpenAI in May 2020 caused a sensation in the industry, and the subsequent release of ChatGPT based on GPT-3.5 at the end of 2022 was a huge success, directly challenging Google.

In 2023, Microsoft decided to deepen the partnership with a $10 billion investment, bringing its total to about $13 billion, and took a 49% stake in OpenAI's for-profit arm.

The new terms of the agreement are still very favorable to Microsoft. The investment agreement now includes more detailed provisions for future profit distribution:

Phase 1: 100% of the profit goes to the initial and founding investors until they recoup their investment.

Phase 2: 25% of the profit goes to employees and to early investors until they reach their return caps. The remaining 75% goes to Microsoft until it recoups its $13 billion investment.

Phase 3: 2% goes to OpenAI Inc., 41% to employees, and 8% to other investors toward their return caps. The remaining 49% goes to Microsoft until it reaches its return cap.

Phase 4: Once all investors have been repaid, 100% of the equity returns to OpenAI Inc.
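The four phases above amount to a profit "waterfall": each phase splits incoming profit by fixed percentages. The Python sketch below encodes only those reported percentages and checks that each phase adds up to 100%. The recipient labels and the `split_profit` function are hypothetical names. The sketch deliberately does not model when one phase ends and the next begins, because the caps that trigger each transition are not fully disclosed.

```python
from fractions import Fraction

# Percentage splits per phase, as reported in the text. Recipient labels are
# illustrative names chosen for this sketch, not terms from the actual agreement.
PHASES = {
    1: {"initial_and_founding_investors": 100},
    2: {"employees_and_investors": 25, "microsoft": 75},
    3: {"openai_inc": 2, "employees": 41, "other_investors": 8, "microsoft": 49},
    4: {"openai_inc": 100},
}

def split_profit(profit: int, phase: int) -> dict[str, Fraction]:
    """Divide one period's profit according to the given phase's percentages.

    The caller must say which phase applies; phase transitions are not modeled.
    """
    shares = PHASES[phase]
    if sum(shares.values()) != 100:
        raise ValueError(f"phase {phase} shares do not sum to 100%")
    # Fraction keeps the arithmetic exact (no floating-point rounding).
    return {who: Fraction(profit * pct, 100) for who, pct in shares.items()}

# Sanity check: every phase distributes exactly 100% of profit.
for p in PHASES:
    assert sum(PHASES[p].values()) == 100, p

for who, amount in split_profit(1_000_000, 3).items():
    print(f"{who}: ${float(amount):,.0f}")
```

Using exact fractions means each phase's payouts always sum to exactly the profit being split.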

For Microsoft, this is a highly advantageous deal. First, the $10 billion is not all cash. A large share is likely to take the form of credits for OpenAI to use Microsoft's supercomputing clusters. In effect, OpenAI uses Microsoft's resources first and repays the debt over time, while also paying out returns worth several times the original amount. This amounts to a very high-interest loan.

Note that OpenAI originally capped returns for its first investors at 100 times their investment, with lower multiples for later investors. On that basis, Fortune estimates that, beyond recouping its $13 billion investment, Microsoft stands to receive roughly $92 billion in profit. That is quite a return.
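A return cap simply truncates what an investor can receive. The Python sketch below illustrates the idea with a hypothetical investor. The $10 million stake and $5 billion entitlement are invented numbers, and the function `capped_payout` is a hypothetical helper. It does not reproduce Fortune's $92 billion estimate, whose inputs are not given here.

```python
def capped_payout(investment_usd: float, entitled_usd: float, cap_multiple: float = 100) -> float:
    """Return what an investor actually receives once a return cap applies.

    cap_multiple=100 mirrors the 100x cap for initial investors described
    above; later investors reportedly had lower multiples (not modeled here).
    """
    if investment_usd < 0 or entitled_usd < 0:
        raise ValueError("amounts must be non-negative")
    return min(entitled_usd, investment_usd * cap_multiple)

# Hypothetical investor: $10M in, nominally entitled to $5B of profit.
print(capped_payout(10_000_000, 5_000_000_000))
```

Here the cap binds. The investor receives $1 billion (100 times $10 million) rather than the full $5 billion, and anything above the cap flows to the next party in the waterfall.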

The Information has also reported that OpenAI is considering relaxing the 100-fold cap and instead letting it rise by 20% each year. This has not been officially announced and may change. Still, it would give investors like Microsoft more potential upside, which is why OpenAI shares already allocated to employees and investors are in high demand among venture capital funds.
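How much a 20% yearly increase matters depends on whether it compounds. The Python sketch below assumes that it does: the cap is multiplied by 1.2 once per year, starting from the 100x figure. That reading is an assumption, since the report's exact terms are not given here. The function name `cap_after_years` is hypothetical.

```python
def cap_after_years(base_cap: float, years: int, annual_rate: float = 0.20) -> float:
    """Return the return cap after `years` of compound growth at `annual_rate`.

    Assumption: the reported 20% is applied once per year and compounds.
    """
    if years < 0:
        raise ValueError("years must be non-negative")
    return base_cap * (1 + annual_rate) ** years

for y in (0, 5, 10):
    print(y, round(cap_after_years(100, y), 1))
```

Under this assumption, a 100x cap would grow to about 249x after five years and about 619x after ten.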

Last year, Redpoint Ventures, Tiger Global, Bedrock Capital, and Andreessen Horowitz bought OpenAI shares from employees and earlier investors at a $20 billion valuation. After ChatGPT's success this year, it is easy to imagine OpenAI shares being highly sought after in the primary market.

The terms OpenAI signed with Microsoft all but guarantee that OpenAI will push ahead with commercialization. Indeed, OpenAI has opened up its API, and ChatGPT now has a paid tier. The pressure to monetize is considerable, with major investors waiting for their returns.

For Microsoft, however, the strategic value of investing in OpenAI far outweighs the financial return. ChatGPT's technology is being tightly woven into Microsoft's products. The New Bing search engine already integrates ChatGPT, challenging Google Search. Microsoft also keeps building OpenAI's AI technology into its software, including GitHub's coding tools, the Microsoft 365 suite, and Azure cloud services, rapidly upgrading its entire product line. This has made Microsoft a pioneer of the current AI wave.

Microsoft had not previously disclosed the size of its AI business. However, it said in October last year that revenue from its Azure machine learning services had doubled for four consecutive quarters. JPMorgan Chase forecasts that AI applications will bring Microsoft more than $30 billion in annual revenue, about half of it from Azure cloud services.

In other words, even if OpenAI cannot repay its debts or its dividends are slow to arrive, it hardly matters, because Microsoft can earn the money back through AI.

Therefore, it must be said that Nadella's investment in OpenAI was truly visionary.

As for Bill Gates, who almost killed the investment in OpenAI, he now proudly recounts his interactions with the OpenAI team, prompting Elon Musk to harshly retort on Twitter, saying, "Gates had limited understanding of AI back then, and still does now."

Either way, thanks to Nadella's persistence, the company Gates founded is once again in the most advantageous position. Meanwhile Musk, the co-founder who "planted the tree for others to enjoy the shade," no longer has any connection with OpenAI and can only spar with Sam online, which is somewhat regrettable. Just imagine: if Musk had not walked away and had honored the remaining $900 million of his commitment, would the outcome have been different?

Among the latest developments in the generative AI race, the Financial Times reported that Elon Musk has officially entered the competition and started his own AI company.

In fact, recent media reports indicate that Musk has been assembling an AI team since February. He has bought 10,000 GPUs from Nvidia and recruited two top researchers from Google's DeepMind. We look forward to seeing whether Musk can eventually launch his own large model to compete with OpenAI.

05 The Not-So-"Pure" Future: What Will OpenAI Become?

OpenAI now stands at the forefront of the wave, but the competition has only just begun. OpenAI is ahead, but not by much. Having seen the success of the large model behind ChatGPT, the tech giants are all entering the fray. Training a large model is not in itself the hard part: the papers and techniques are publicly available, and the rest is a matter of pouring in money and computing power. The real challenges are cutting costs and finding viable business models.

In 1965, Gordon Moore, then the head of Fairchild Semiconductor's research, and later one of the co-founders of Intel, published a paper predicting that the number of transistors on an integrated circuit chip would double every 18-24 months, leading to a doubling in the performance of microprocessors or a halving in price. This is the famous "Moore's Law."
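Moore's Law is a statement about exponential growth: if a quantity doubles every T months, then after t months it has grown by a factor of 2^(t/T). The Python sketch below applies that formula to the 18-month and 24-month doubling periods mentioned above, over a hypothetical ten-year span. The function name `moore_growth` is a hypothetical helper.

```python
def moore_growth(months: float, doubling_period_months: float) -> float:
    """Return the multiplicative growth after `months` if a quantity doubles
    every `doubling_period_months`: N(t) / N(0) = 2 ** (t / T)."""
    if doubling_period_months <= 0:
        raise ValueError("doubling period must be positive")
    return 2 ** (months / doubling_period_months)

decade = 120  # months
print(f"24-month doubling over 10 years: {moore_growth(decade, 24):.0f}x")
print(f"18-month doubling over 10 years: {moore_growth(decade, 18):.0f}x")
```

Changing the doubling period from 24 to 18 months raises the ten-year growth from 32x to roughly 102x. This shows why the exact period matters so much in such predictions.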

Sam predicts that a new version of Moore's Law is coming: the amount of intelligence in the universe will double every 18 months. The claim is rather vague, however, and Sam did not explain it further. It has sparked much debate in academia and added to calls for regulating AI safety.

Two weeks after the release of GPT-4, Musk and over 1,000 others, including many prominent scientists and tech leaders, signed an open letter calling for all AI labs to immediately pause training AI models more powerful than GPT-4 for at least 6 months.

One name on this list particularly caught my attention: Yoshua Bengio. Does that name sound familiar? That's right, it's the Turing Award winner mentioned at the beginning of the article, who contributed to OpenAI's "martial arts manual." Bengio probably never thought that he would one day regret his initial involvement.

It now seems that once an AI arms race among the tech giants begins, it will be hard to stop without strong global consensus and regulation, as happened with cloning technology in the past. Italy has already temporarily banned ChatGPT for violating GDPR, Europe's strict data-privacy law, and other countries may follow. Until such rules arrive, however, OpenAI and its competitors will not stop, because being in the lead means future market share, influence, and the power to shape the conversation. It is the classic prisoner's dilemma, a pattern that has played out again and again in human society.
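The prisoner's dilemma logic can be made concrete with a toy payoff table. In the Python sketch below, two rival labs each choose to "pause" or "race". The payoff numbers are entirely hypothetical; they were chosen only to have the standard prisoner's-dilemma ordering. The names `PAYOFFS` and `best_response` are illustrative.

```python
# Hypothetical payoffs (higher is better) for two rival AI labs, each choosing
# to "pause" or "race". Keys: (lab A's choice, lab B's choice) -> (A's payoff, B's payoff).
PAYOFFS = {
    ("pause", "pause"): (3, 3),
    ("pause", "race"): (0, 5),
    ("race", "pause"): (5, 0),
    ("race", "race"): (1, 1),
}
ACTIONS = ("pause", "race")

def best_response(opponent_choice: str) -> str:
    """Return lab A's payoff-maximizing choice given lab B's choice."""
    return max(ACTIONS, key=lambda mine: PAYOFFS[(mine, opponent_choice)][0])

for theirs in ACTIONS:
    print(f"If the rival chooses {theirs!r}, the best reply is {best_response(theirs)!r}")

# The game is symmetric, so both labs reason the same way and end up at
# ("race", "race") with payoffs (1, 1), worse for both than mutual pausing (3, 3).
```

Racing is the best reply whatever the rival does, yet mutual racing leaves both labs worse off than mutual restraint. This is why the passage above argues that only external coordination, such as global regulation, could stop the race.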

Perhaps it was settled from the day OpenAI was born that humanity's path to AGI would not be pure, but full of conflict, power struggles, and competing interests. That is human nature. Many pessimists ask how a species as profit-driven, greedy, and contradictory as ours can ensure that the AI it trains is pure. However, a professional I spoke with reminded me that the current public version of OpenAI's model has been "neutered" for months. Large numbers of human reviewers have been employed to train it away from harmful, violent, and aggressive language. The unneutered GPT model is a huge monster, and OpenAI is keeping it under wraps. Sam is like the giant owl described earlier, keeping watch in the darkness. But can we trust Sam and OpenAI?

Sam and Musk initially did not trust Google, so they created OpenAI. But now, Musk and Sam do not trust each other. They both believe that they are the ones who can control the monster.

To conclude, let's return to the day Sam and a group of heavyweights founded OpenAI. According to an article in The New Yorker, the first thing Sam did in OpenAI's new San Francisco office was walk up to the conference-room wall and write out a quote from Hyman Rickover, the "Father of the Nuclear Navy."

The quote reads: "The great purpose of life is not knowledge, but action. We each have a responsibility to act as if the fate of the world depended on us, and we must live for the future, not for our own comfort or success."

So far, Sam seems to be doing just that, even though his commercialization strategy and the early release of ChatGPT have drawn plenty of criticism. Sam may be the one setting the strategy, but OpenAI's success also belongs to a group of top scientists. These include those who gave up high salaries to join OpenAI, and the authors and researchers behind the Transformer architecture and reinforcement learning from human feedback (RLHF), both used in ChatGPT. Going back even further, it belongs to the three senior scientists who stuck with the "neural network" path for decades. Technological progress is impossible without such cutting-edge minds and pure beliefs.

This story is full of conflicts, choices, and power struggles, but it also holds sincere idealism. The complex story of OpenAI's rise is, at bottom, a story about human nature. With human nature this complex, what kind of AGI can we create? We will have to wait and see.

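Disclaimer: This article represents only the author's personal views and does not represent the position or views of this platform. It is provided for information sharing only and does not constitute investment advice to anyone. Any dispute between users and the author is unrelated to this platform. If any article or image published on this page infringes your rights, please send proof of rights and proof of identity by email to support@aicoin.com, and the platform's staff will review it.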