Source: Titanium Media

Author: Lin Zhijia

Image source: Generated by Wujie AI

Competition in large model technology in China is accelerating. Following the latest product launches from iFlytek and Zhipu, Baichuan has now announced a new large model of its own.

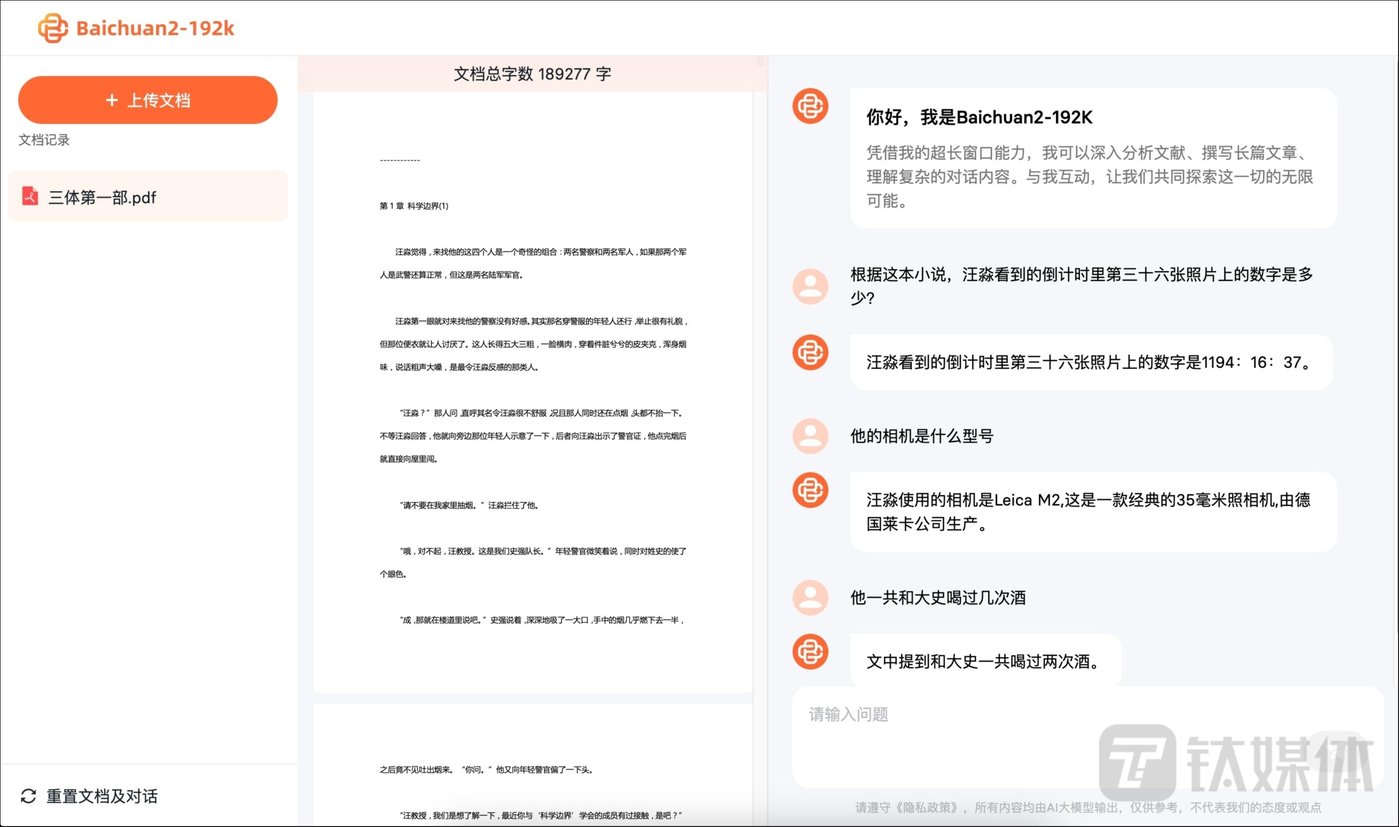

According to Titanium Media, on the morning of October 30, Wang Xiaochuan's AI large model company "Baichuan Intelligence" announced the launch of Baichuan2-192K, a large model with a context window length of up to 192K, capable of processing approximately 350,000 Chinese characters.

Baichuan Intelligence stated that Baichuan2-192K currently has the longest context window in the world: 4.4 times that of Claude2, which it described as the best existing long-context model (Claude2 supports a 100K context window and has been tested to handle approximately 80,000 characters), and 14 times that of GPT-4 (which supports a 32K context window and has been tested to handle approximately 25,000 characters). According to the company, the model not only surpasses Claude2 in context window length but also leads in long-window text generation quality, long-context understanding, and long-text question answering and summarization.
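The quoted multiples appear to be derived from the tested character capacities rather than from the nominal window sizes (192K against 100K or 32K). A quick check of that arithmetic, using only the figures reported above (the dictionary and function names are illustrative):

```python
# Approximate character capacities as quoted in the article.
CAPACITY_CHARS = {
    "Baichuan2-192K": 350_000,
    "Claude2": 80_000,
    "GPT-4": 25_000,
}


def capacity_ratio(model: str, baseline: str) -> float:
    """Return how many times more characters `model` handles than `baseline`."""
    return CAPACITY_CHARS[model] / CAPACITY_CHARS[baseline]


ratio_claude = capacity_ratio("Baichuan2-192K", "Claude2")  # 4.375, reported as 4.4
ratio_gpt4 = capacity_ratio("Baichuan2-192K", "GPT-4")      # 14.0

print(f"vs Claude2: {ratio_claude:.1f}x, vs GPT-4: {ratio_gpt4:.0f}x")
```

By nominal window size alone, the ratios would be 1.92 and 6, so the 4.4 and 14 figures depend on the tested character counts.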

It is reported that Baichuan2-192K will be provided to enterprise users through API calls and private deployment. Baichuan Intelligence has already initiated the internal testing of this large model's API and opened it to core partners in industries such as law, media, and finance.

Baichuan Intelligence was established on April 10, 2023, by Wang Xiaochuan, the founder and former CEO of Sogou. Its core team consists of top AI talent from well-known technology companies such as Sogou, Google, Tencent, Baidu, Huawei, Microsoft, and ByteDance. The company currently has a team of over 170 people, nearly 70% of whom hold a master's degree or higher and over 80% of whom work in research and development.

In the past 200-plus days, Baichuan Intelligence has released a large model every 28 days on average, including Baichuan-7B/13B, Baichuan2-7B/13B, and two closed-source large models, Baichuan-53B and Baichuan2-53B. The models' capabilities in writing and text creation have reached a relatively high level in the industry. The two open-source large models, Baichuan-7B/13B, rank highly on multiple authoritative evaluation leaderboards and have been downloaded more than 6 million times in total.

Regarding the establishment of an AI large model company, Wang Xiaochuan once stated that the existing technical tools of his team can be used to build large models, and the company's competitors are the open-source solutions of large companies. Wang Xiaochuan also believes that the entire team does not need to be too large, as a hundred people are sufficient.

On August 31, Baichuan Intelligence was among the first eight companies to complete filing under the national "Interim Measures for the Management of Generative Artificial Intelligence Services," and it is the only large model startup among the eight that was established this year. On September 25, it opened the Baichuan2-53B API, officially entering the enterprise (to-B) market and beginning commercialization.

On October 17, Baichuan Intelligence announced the completion of a $300 million strategic financing round, with participation from tech giants such as Alibaba, Tencent, Xiaomi, and several top investment institutions. Including the $50 million from the angel round, Baichuan Intelligence's total financing amount has reached $350 million (approximately 2.543 billion RMB).

Baichuan Intelligence did not disclose its current specific valuation, only stating that after this round of financing, the company has entered the ranks of technology unicorns. According to the general definition, a unicorn is valued at over $1 billion (approximately 7.266 billion RMB).

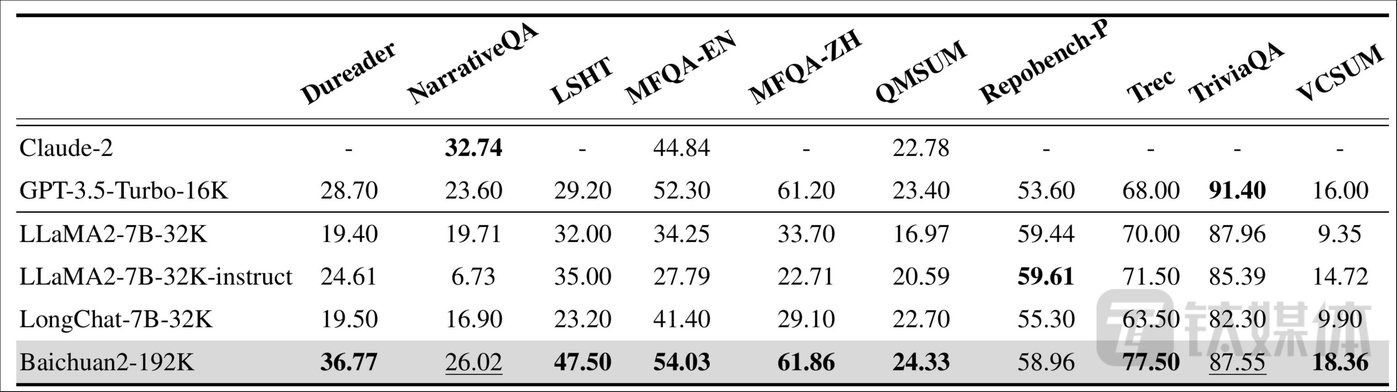

Alongside the release, Baichuan Intelligence stated that Baichuan2-192K performed strongly on 10 Chinese and English long-text question answering and summarization benchmarks, including Dureader, NarrativeQA, LSHT, and TriviaQA, achieving state-of-the-art (SOTA) results on 7 of them, significantly surpassing other long-window models and leading Claude2 across the board.

Baichuan pointed out that it is a consensus in the AI industry that expanding the context window can effectively improve the performance of large models. However, an ultra-long context window implies higher computational power requirements and greater pressure on memory. The industry currently uses many methods to increase context window length, including sliding windows, downsampling, and small models. Although these methods can lengthen the context window, they all degrade model performance to varying degrees. In other words, they trade performance in other respects for a longer context window. In contrast, Baichuan said, Baichuan2-192K uses algorithmic and engineering optimizations to balance window length against model performance, improving both at once.
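To illustrate why a technique such as a sliding window trades capability for length, here is a minimal sketch of a causal sliding-window attention mask. It is a generic illustration of the idea, not Baichuan's or any competitor's implementation, and the function name and parameters are hypothetical.

```python
def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """Build a causal sliding-window mask.

    mask[i][j] is True when query position i may attend to key position j,
    i.e. when j is one of the `window` most recent positions up to and
    including i.
    """
    return [
        [0 <= i - j < window for j in range(seq_len)]
        for i in range(seq_len)
    ]


mask = sliding_window_mask(seq_len=6, window=3)

# Render the mask: 'x' marks a visible position, '.' a hidden one.
for row in mask:
    print("".join("x" if visible else "." for visible in row))
```

Each position sees at most `window` keys, so attention cost per token stays constant however long the input grows, but anything further back than the window is invisible to direct attention. That is the kind of performance trade-off the paragraph above describes.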

In terms of algorithms, Baichuan Intelligence has proposed an extrapolation solution for RoPE and ALiBi dynamic position encoding, which enhances the model's ability to model long sequence dependencies while maintaining resolution. Furthermore, as the window length increases, Baichuan2-192K's sequence modeling capability continues to improve. In terms of engineering, based on its independently developed distributed training framework, Baichuan Intelligence has integrated and optimized multiple technologies, creating a comprehensive 4D parallel distributed solution that can automatically find the most suitable distributed strategy based on the specific load of the model, greatly reducing the memory usage during long window training and inference processes.
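The article does not disclose the details of Baichuan's extrapolation scheme. As background only, the sketch below shows the two standard position encodings it names, in their commonly published forms: ALiBi's per-head linear distance penalty, and RoPE rotation angles combined with simple linear position interpolation (one well-known way to stretch RoPE beyond its training length). All function names, the base of 10000, and the example lengths are assumptions for illustration, not Baichuan's method.

```python
def alibi_slopes(num_heads: int) -> list[float]:
    """Per-head ALiBi slopes; assumes num_heads is a power of two."""
    return [2.0 ** (-8.0 * (h + 1) / num_heads) for h in range(num_heads)]


def alibi_bias(slope: float, query_pos: int, key_pos: int) -> float:
    """Bias added to the attention score: a penalty linear in distance."""
    return -slope * (query_pos - key_pos)


def rope_angles(position: float, head_dim: int, base: float = 10000.0) -> list[float]:
    """Rotation angle for each of the head_dim // 2 dimension pairs."""
    return [position * base ** (-2.0 * k / head_dim) for k in range(head_dim // 2)]


def interpolated_position(position: int, trained_len: int, target_len: int) -> float:
    """Linear position interpolation: squeeze positions into the trained range."""
    scale = min(1.0, trained_len / target_len)
    return position * scale


# Hypothetical example: a model trained on 4096 positions, run on 8192.
slopes = alibi_slopes(8)
bias = alibi_bias(slopes[0], query_pos=10, key_pos=6)
scaled = interpolated_position(8192, trained_len=4096, target_len=8192)
angles = rope_angles(scaled, head_dim=4)
```

Interpolation keeps every rotation angle within the range seen during training, at the cost of packing neighbouring positions closer together. This loss of positional resolution is the kind of effect the article says Baichuan's approach aims to avoid.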

Baichuan2-192K can be deeply integrated with more vertical scenarios, playing a real role in people's work, life, and learning, and helping industry users reduce costs and improve efficiency. For example, it can help fund managers summarize and explain financial statements and analyze companies' risks and opportunities; help lawyers identify risks across multiple legal documents and review contracts; help technical staff read hundreds of pages of development documentation and answer technical questions; and help researchers quickly scan large numbers of papers and summarize the latest developments.

Currently, Baichuan2-192K is available to Baichuan Intelligence's core partners through API calls. The company has already established partnerships with financial media outlets and law firms, and it expects to open the model fully soon.

Wang Xiaochuan's team stated that Baichuan Intelligence's Baichuan2-192K has innovated in algorithms and engineering for long context windows, verifying the feasibility of long context windows and opening up new research paths for improving the performance of large models. At the same time, its longer context will also lay a good technical foundation for the industry to explore cutting-edge fields such as agents and multimodal applications.
