Video Title: AI Makes Everything Easy to Fake—Crypto Makes it Hard Again | Balaji Srinivasan at SmartCon 2023

Video Author: Balaji Srinivasan

Translation: Qianwen, ChainCatcher

About the Author:

Balaji S. Srinivasan is an angel investor, technology entrepreneur, and author of the Wall Street Journal best-selling book "The Network State." He was previously the Chief Technology Officer of Coinbase and a general partner at a16z, and has been an early investor in many successful technology companies and crypto protocols.

Recommended Reading: "Balaji Srinivasan: The Most Active Genius Investor in the Crypto Field"

Below is the content of the video:

Today, I want to talk to you about the perennial topics of artificial intelligence and cryptocurrency: how AI makes everything fake, and how crypto makes everything real again.

There is a specific intersection between the two: generative AI makes it easy to forge content online, so how do we verify what is real? How do we, in a sense, restore the scarcity of information? This is where crypto comes in. As I just mentioned, AI and blockchains often intersect, and this article will list eight major areas where they overlap, explaining how we can use cryptography to rebuild trust.

AI makes forgery easy, while crypto makes forgery difficult. Here is a photo of Trump being arrested, generated by AI. News media might say that we can tell this is AI-generated content by looking at the fingers, because AI cannot yet render fingers accurately, but these technical flaws will eventually be fixed.

So the fundamental question is how to verify content, and the answer is signatures on Ethereum. You want to see that these images and other content have been digitally signed, preferably under an ENS name (or something similar). This way, you can confirm that this ENS name, along with the Ethereum address and public key associated with it, signed this content.
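The sign-then-verify idea can be sketched in a few lines. This is a minimal illustration and not how Ethereum does it: Ethereum accounts sign with ECDSA over the secp256k1 curve, while the sketch below swaps in a Lamport one-time signature because it needs only Python's standard library. The function names are hypothetical, and a Lamport key pair must only ever sign a single message.

```python
import hashlib
import secrets


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def generate_keypair():
    """Private key: 256 pairs of random secrets. Public key: their hashes."""
    private = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    public = [(sha256(a), sha256(b)) for a, b in private]
    return private, public


def message_bits(content: bytes):
    """Hash the content and return the 256 bits of the digest."""
    digest = sha256(content)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def sign(content: bytes, private):
    """Reveal one secret per digest bit (stand-in for an Ethereum signature)."""
    return [private[i][bit] for i, bit in enumerate(message_bits(content))]


def verify(content: bytes, signature, public) -> bool:
    """Check that each revealed secret hashes to the matching public value."""
    if len(signature) != 256:
        return False
    bits = message_bits(content)
    return all(sha256(signature[i]) == public[i][bits[i]] for i in range(256))


# Usage: the publisher signs an image; anyone holding the public key can check it.
private_key, public_key = generate_keypair()
image = b"raw bytes of a photo"
signature = sign(image, private_key)
print(verify(image, signature, public_key))             # genuine content
print(verify(b"edited photo", signature, public_key))   # altered content
```

The point of the sketch is only the shape of the check: a forger can generate a convincing image, but cannot produce a signature that verifies against a public key they do not control.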

AI generates content, crypto verifies it. For cryptographic verification we already have some specific architectures, such as ENS and IPFS. If you have the content hash, you can retrieve the content, and you can also map it to an ENS name. If the content is signed, the signature helps you judge whether it is AI-generated or human-generated. Of course, humans can also sign AI-generated content, but at least you know which ENS name it came from (an ENS name does not have to belong to an individual; it can also belong to a company, etc.).
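As a rough sketch of the "content hash plus name" idea, the snippet below stores content under its SHA-256 hash and keeps a separate mapping from names to the hashes they have published. Everything here is an in-memory toy under stated assumptions: `ContentStore`, `NameRegistry`, and `example.eth` are hypothetical, and real IPFS identifiers (CIDs) and ENS records are structured differently.

```python
import hashlib


class ContentStore:
    """Toy content-addressed store: the key is the hash of the content."""

    def __init__(self):
        self._blobs = {}

    def put(self, content: bytes) -> str:
        content_hash = hashlib.sha256(content).hexdigest()
        self._blobs[content_hash] = content
        return content_hash

    def get(self, content_hash: str) -> bytes:
        content = self._blobs[content_hash]
        # Re-hash on retrieval so a corrupted or swapped blob is detected.
        if hashlib.sha256(content).hexdigest() != content_hash:
            raise ValueError("stored content does not match its hash")
        return content


class NameRegistry:
    """Toy stand-in for a naming system: name -> set of published hashes."""

    def __init__(self):
        self._records = {}

    def publish(self, name: str, content_hash: str) -> None:
        self._records.setdefault(name, set()).add(content_hash)

    def published_by(self, name: str, content_hash: str) -> bool:
        return content_hash in self._records.get(name, set())


# Usage: publish an article under a name, then check where a copy came from.
store = ContentStore()
registry = NameRegistry()
article_hash = store.put(b"article text")
registry.publish("example.eth", article_hash)
print(registry.published_by("example.eth", article_hash))
print(registry.published_by("someone-else.eth", article_hash))
```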

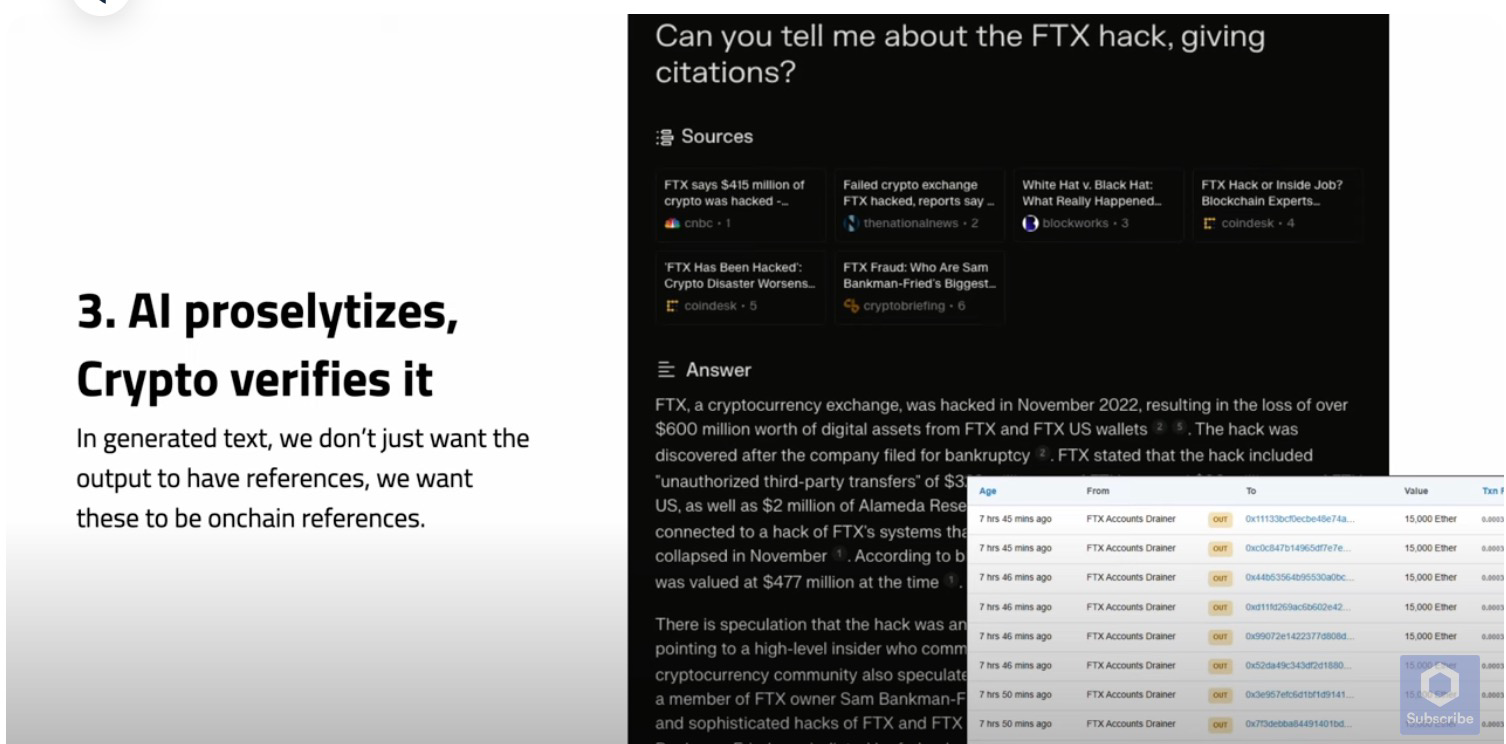

AI propagates information, crypto verifies information. This is important because once there is a source, once there is a citation, the AI can verify it cryptographically. For example, there is a service called "perplexity.ai"; last year I had it tell me about the FTX hack. Ideally, you want these citations to be on-chain. Some people may think that only certain content, such as financial records, can be proven on-chain. I agree with this view, but it's a bit like the 1990s or early 2000s, when there wasn't yet much content on the internet and you couldn't know how fast it would grow.

Therefore, one way of thinking is that AI breaks the public web, but Web3 establishes a web of trust. The internet is now filled with AI-generated false content. Perhaps Google can solve this problem, but the ideal solution is an application like "interface.social." There you will find many different types of data, not just financial transactions but also social interactions, and so on. It shows what a good trust network looks like: many interactions are on-chain, and you can cryptographically verify many different aspects of them. You are not just verifying individual actions, such as this entity signing this content; you can also verify all the other actions of this entity and start to calculate whether this entity is actually human. This is why we can build a Web3 trust network. You are not just looking at what a **public key** signed in terms of transactions; you are looking, at the macro level, at how this **public key** interacts with other **public keys** across the entire network.

Today's web leans heavily on signals such as Google's page ranking to judge whether content is "real," but in fact a lot of false content ranks high, so this method is not reliable. So we need **a decentralized trust network** that anyone can view and anyone can index, with on-chain data that can be explored and visualized in these next-generation block explorers, just like a social interface such as interface.social.

AI makes captchas ineffective, crypto rebuilds captchas. As shown in the image, a robot is clicking on a captcha that says "I am not a robot." But cryptocurrency can change this situation. If users sign in with Ethereum, you can request small payments, view their payment history, require them to stake some funds up front, and so on. Basically, you raise the cost of forgery. Of course, robots can still log in with Ethereum, but you can charge them fees high enough to deter them.
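One way to picture "raising the cost of forgery" is a gate that only admits addresses with enough funds staked and slashes that stake on abuse. The sketch below is a hypothetical in-memory model, not a smart contract; `StakeGate`, `min_stake`, `penalty`, and the sample addresses are illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class StakeGate:
    """Admit an address only while its stake is at least min_stake."""

    min_stake: int
    penalty: int
    stakes: dict = field(default_factory=dict)

    def deposit(self, address: str, amount: int) -> None:
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self.stakes[address] = self.stakes.get(address, 0) + amount

    def is_allowed(self, address: str) -> bool:
        return self.stakes.get(address, 0) >= self.min_stake

    def report_abuse(self, address: str) -> int:
        """Slash up to `penalty` from the address and return the amount taken."""
        current = self.stakes.get(address, 0)
        slashed = min(self.penalty, current)
        self.stakes[address] = current - slashed
        return slashed


# Usage: a bot can still get in, but every abusive account costs it real funds.
gate = StakeGate(min_stake=100, penalty=60)
gate.deposit("0xabc", 100)
print(gate.is_allowed("0xabc"))   # True: enough stake
gate.report_abuse("0xabc")
print(gate.is_allowed("0xabc"))   # False: stake fell below the minimum
```

The design choice is economic rather than perceptual: instead of testing whether the visitor looks human, the gate makes each fake identity expensive to create and to lose.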

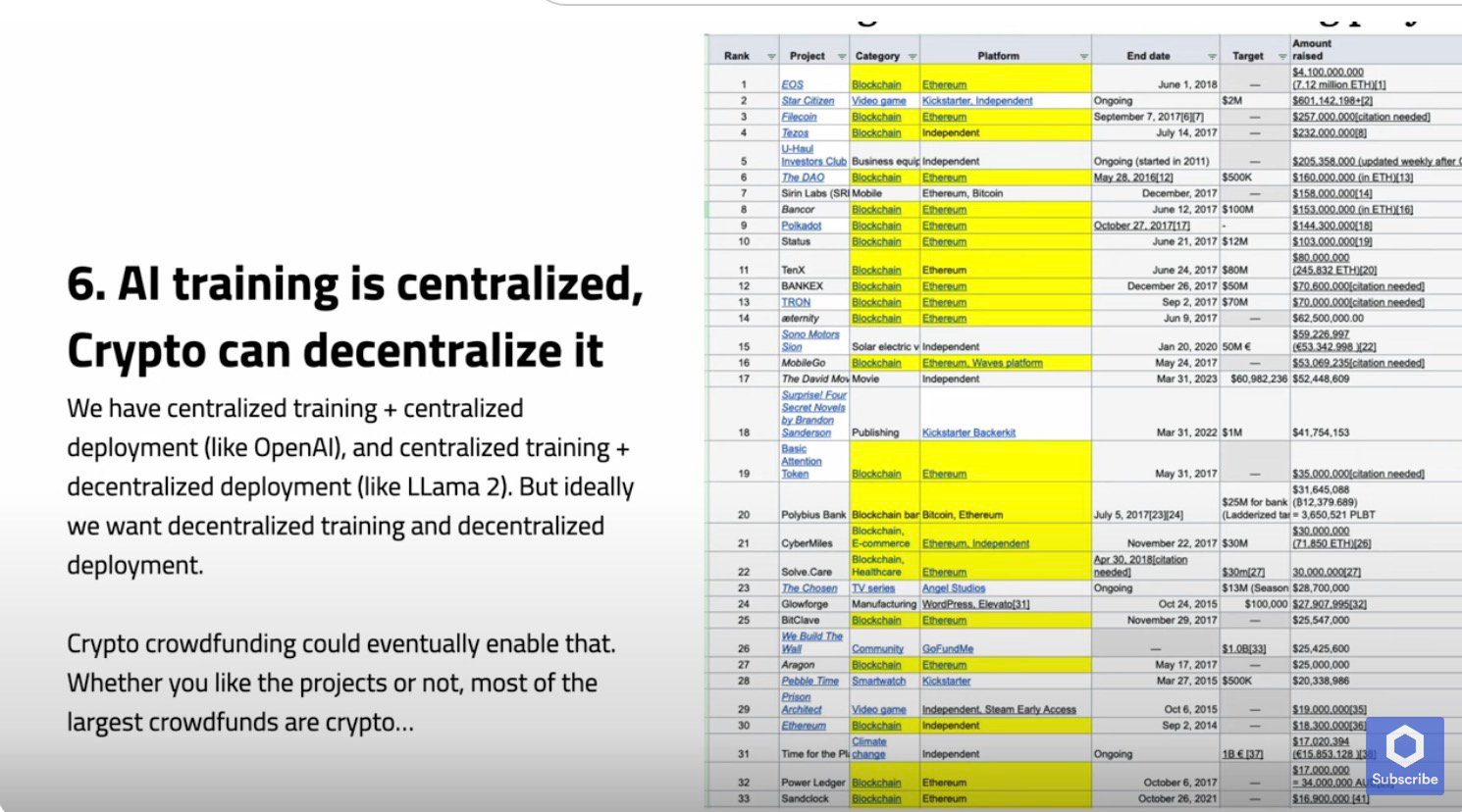

AI training is usually centralized, and crypto can decentralize it. Today we have centralized training with centralized models (like OpenAI), and we also have centralized training with decentralized models (like Llama 2). But ideally, we also want decentralized training with decentralized models. If you look into it, you will see that most of these projects involve cryptocurrency. You may not agree with what these projects are doing, but that is not really the point. The key is that we can raise large amounts of money through crypto crowdfunding and use those funds to train AI models.

These models do not have to be trained in a fully decentralized way; partially decentralized is a start. When I say decentralized, I mean that at least the funding is decentralized. You may still need to train on a centralized cluster, but at least a broad group of people is involved.

AI evaluation is centralized, and crypto can decentralize it. You can now evaluate Llama 2 on a Mac Studio. This means we are approaching a state where everyone who trains models can run them on powerful hardware, similar to running a Solana node.

You can imagine that, just as people update their nodes every time Ethereum or Solana upgrades, they would update and hold the models. Perhaps every model evaluation requires spending tokens, so the sponsors of a model earn more tokens, which they can in turn spend on model evaluations. This is just one way of thinking about it, but I think it is worth considering how to free these things from centralized actors.
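The token flow described here can be sketched as a tiny ledger: each evaluation moves a fixed fee from the user to the model's sponsor before the model runs. This is only one possible reading of the idea; `EvaluationLedger`, `price_per_eval`, and the account names are hypothetical, and the "model" is a plain Python callable standing in for a real one.

```python
class EvaluationLedger:
    """Toy ledger: every model evaluation pays the model's sponsor in tokens."""

    def __init__(self, price_per_eval: int):
        self.price_per_eval = price_per_eval
        self.balances = {}

    def credit(self, account: str, amount: int) -> None:
        self.balances[account] = self.balances.get(account, 0) + amount

    def evaluate(self, user: str, sponsor: str, model, prompt: str) -> str:
        if self.balances.get(user, 0) < self.price_per_eval:
            raise ValueError("insufficient tokens for evaluation")
        # Move the fee from the user to the sponsor, then run the model.
        self.balances[user] -= self.price_per_eval
        self.credit(sponsor, self.price_per_eval)
        return model(prompt)


# Usage with a placeholder model that just upper-cases the prompt.
ledger = EvaluationLedger(price_per_eval=5)
ledger.credit("alice", 12)
answer = ledger.evaluate("alice", "sponsor", str.upper, "hello")
print(answer, ledger.balances)
```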

AI creates many centers of power, and crypto can decentralize them. People have talked a lot about AGI. I think an implicit background assumption is that there will be one huge, unified, monotheistic AGI (as shown in the figure above). But if our community can achieve decentralized funding and decentralized evaluation, you can imagine a polytheistic AGI (as shown in the figure above), where each community has its own, better version of an oracle that it can ask questions such as "How will the numbers move?" or "What would George Washington do?" Different societies and communities will have their own intelligent systems that they can query. They can ask those systems for references, and the systems can even provide on-chain references.
