Source: Quantum Bit

The large-scale model company Zhipu AI has announced a financing amount of 2.5 billion yuan within the year.

What does this amount mean? It has refreshed the accumulated financing amount of domestic large-scale model startups, with a valuation exceeding 10 billion.

This 4-year-old company has become the most attractive and valuable domestic large-scale model company.

As the "Generative AI Second Stage" of the battle of large models arrives, is Zhipu's financing progress a proof of the industry's Matthew effect?

Companies that are highly regarded will receive more resources, while those that have not proven their own value will face reshuffling and be left out of the next stage of competition.

From the perspective of startup companies, this seems more like the end of the first half of the large-scale model startup, with the pattern becoming clear and the ecological seating arrangement beginning to take shape.

Why Zhipu?

Before we understand why Zhipu is the strong player in domestic large-scale model financing, let's trace its origins and understand where it comes from.

Before this, it was probably well-known within the industry but unknown outside of it.

Zhipu AI, established in June 2019, was a successful transformation of technology from the Knowledge Engineering Laboratory (KEG) of the Department of Computer Science and Technology at Tsinghua University.

Almost all core team members have ties to Tsinghua, among them, CEO Zhang Peng graduated from the Department of Computer Science and Technology at Tsinghua University and is a Ph.D. in the 2018 Innovation Leadership Engineering program at Tsinghua University.

While at the KEG laboratory, the team mainly focused on researching how to apply machine learning, data mining, knowledge graphs, and other technologies to engineering practice, and began training AI models in 2017.

On the first anniversary of Zhipu's establishment, OpenAI coincidentally released GPT-3.

From that time on, the entire Zhipu company devoted itself to the research and development of large language pre-training models. In the realm of large models, OpenAI chose GPT, Google chose BERT, and Zhipu chose GLM (General Language Model).

Reaping what they sowed, almost all subsequent stories revolved around Zhipu's unique GLM pre-training architecture:

In 2022, Zhipu and Tsinghua collaborated to develop the bilingual hundred-billion-scale model GLM-130B as the base, beginning the construction of a large model platform and product matrix.

In 2023, Zhipu made frequent moves, from launching the dialogue model ChatGLM and open-sourcing the single-card version model ChatGLM-6B, to subsequently releasing the visual model Visual-6B, the code model CodeGeeX2, the mathematical model MathGLM, the multimodal model CogVLM-17B, the Agent model AgentLM series, and all of them were open-sourced.

On August 31 of this year, based on the bilingual dialogue model ChatGLM2, Zhipu's generative AI assistant "Zhipu Qingyan" became one of the first 11 large model products to be filed and enter ordinary households.

It can be said that in recent years, Zhipu's main focus in the large model field has been very clear: first, to solidify the foundation (base model), and then to build various modalities and functional structures on top of it.

It is worth mentioning that Zhipu was able to sustain itself with B-end service capabilities from the early stages, which is not only a recognized reality in the face of the "large model spending money like water" but also a confidence to frequently develop and release new models and products.

Of course, this is not the only reason.

In addition to service capabilities and the associated revenue-generating capabilities, Zhipu also has a strong team of talents and technical strength.

As mentioned earlier, Zhipu originated from Tsinghua, and the "Tsinghua system" has become a prestigious label in this field.

The reason for this is that the Tsinghua Department of Computer Science and Technology has been involved in large model research for a long time, has long-term experience, and has cultivated many talents—players known in the market today, represented by Zhipu, as well as companies like Dark Matter of the Moon, Deep Speech Technology, First-Class Technology, Bai Chuan Intelligence, Mianbi Intelligence, Xianyuan Technology, Shengshu Technology, and others, all have ties to Tsinghua.

The number of citations of their published papers and the verifiability of the models they have released are powerful evidence of this "recognized label."

And according to public information, Zhipu is the only domestically developed large model enterprise with entirely domestic capital.

This background has allowed Zhipu to have its own preparation and strategy amidst the discussions and controversies surrounding "model security, data security, and content security."

It is reported that to complement the development of domestic GPUs, Zhipu is now working on the GLM General Language Model domestic chip adaptation plan.

Specifically, this involves collaborating with domestic chip manufacturers to adapt model algorithms to domestic chips on the algorithm and inference ends, and nearly 10 types of domestic chips are currently adaptable.

With so much funding, what's next?

The above achievements and uniqueness may have become the key reasons why Zhipu has been highly regarded and stood out.

But because it has been highly regarded and has accumulated sufficient capital, Zhipu has also demonstrated its determination to build longer-term competitive strength.

After raising 2.5 billion yuan in financing within 10 months, Zhipu AI officially stated:

The above financing will be used for further research and development of the large model base, to better support the industry ecosystem and grow rapidly with partners.

In summary, there are two main aspects:

- First, to strengthen and solidify the large model base.

- Second, to expand the ecosystem and network of friends.

It needs both depth and breadth.

First, to strengthen and solidify the large model base, "further research and development" of the base large model.

The large model currently regarded as the base by Zhipu is the bilingual bidirectional dense model GLM-130B released in 2021, with 130 billion parameters.

At that time, due to various limitations such as technology, data, and computing power, training a large model with this parameter size was quite challenging, but the results were significant, with some aspects of GLM-130B outperforming GPT-3 and PaLM.

However, as of today, the demand brought about by data and modality growth seems to have made the once 130 billion parameter behemoth somewhat inadequate.

Quantum Bit has received the latest news that this Friday (October 27), Zhipu will have a new move—releasing a new generation of base large model.

Second, to expand the ecosystem and network of friends.

In terms of specific actions, it should be impossible to bypass Zhipu's consistent principle: continuous open-sourcing.

This company has always been one of the most open players in the large model field. Even in the pre-ChatGPT era, it, along with Baidu (ERNIE2.0), Alibaba (AliceMind), Zhiyuan (Qingyuan CPM), and Lan Zhou (Mengzi Large Model), took a transparent and open attitude.

Looking back at Zhipu's early GLM reports, there are words inviting everyone to join its open community to promote the development of large-scale pre-training models. Now, the company is still making friends with developers and industry users in an open-source manner.

This habit has continued to the present.

Combining the current data, we can more clearly see what Zhipu's persistence in open-sourcing has achieved in terms of phased results:

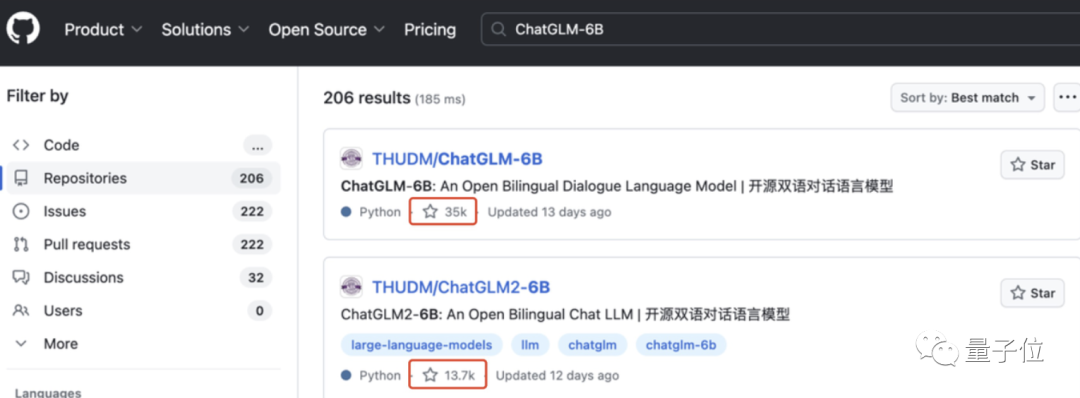

Developer Community, ChatGLM-6B trended at number one on the hugging face trend list four weeks after its launch, with over 10 million downloads and 50,000+ stars on GitHub.

On the other hand, Zhipu's official website lists cooperative partners in building the ecosystem, "69 countries, 1000+ research institutions." In addition, Quantum Bit has confirmed that the number of Zhipu's customers exceeds 1000, with 200-300 participating in building the open-source ecosystem.

Once the large model ecosystem is established, it can better integrate the resources of the large model base layer, middle layer, and application layer, optimize configuration, and achieve healthy interaction and collaborative evolution.

In this process, the base large model, with its foundational and universal nature, occupies a core position in the large model ecosystem. Understanding this point, it is not difficult to understand the advantages and necessity of Zhipu's commitment to expanding the ecosystem and network of friends.

A Watershed Moment for Large Model Entrepreneurship At the end of November last year, OpenAI brought ChatGPT to the world. Subsequently, the large model technology trend has set off wave after wave of unprecedented high tides at an astonishing speed.

The intuitive data is amazing, and the pace of presentation is also amazing.

With hundreds of millions of active users, tens of billions in revenue, and a valuation of hundreds of billions, large models have aggressively swept the world, and everyone is watching, exploring, and thinking about how this artificial intelligence technology can explore such vast boundaries and how the products it supports can leverage the power of technology.

As a result, there are pioneers like OpenAI and Anthropic abroad, and domestically, there are companies like Zhipu AI and MiniMax, which are unicorns with valuations of hundreds of billions.

With pearls in front, some technical and engineering challenges cannot be fast-forwarded or skipped. No matter how star-studded the lineup is, or how astronomical the financing is, as long as you walk the path of large models, you must personally experience it.

The challenge is very daunting, but the challengers are coming one after another and enjoying it.

△ Image source: Sequoia

Today, almost a year later, we have witnessed the development of large model technology and how innovation and competition are shaping this field.

What is even clearer is that the giants have completed the initial positioning, and startups are beginning to shuffle, with the initial stage pattern beginning to emerge.

Yes, a company cannot do everything in the field of large model capabilities, but the tickets for general large models are so limited. Players who are not capable of obtaining them are beginning to divert: either they move towards specialized models for specific industries, or they give up entrepreneurship at the model layer and start standing on the shoulders of other models, moving towards the middle layer and application layer…

Large model entrepreneurship is entering a watershed moment.

From now on, the financing progress of large model entrepreneurship is likely to become more and more concentrated than it is now. Hundreds of millions or even billions of funds will continue to gather in companies that "are not short of money."

The Matthew effect in the industry is intensifying. With limited capital, more valuable companies will become more attractive, and the best and most resources will be sent to the most promising players.

In the capital market, the only downside to expensive companies is that they are expensive, and the only advantage of cheap companies is that they are cheap.

The first half of large model entrepreneurship is coming to an end.

免责声明:本文章仅代表作者个人观点,不代表本平台的立场和观点。本文章仅供信息分享,不构成对任何人的任何投资建议。用户与作者之间的任何争议,与本平台无关。如网页中刊载的文章或图片涉及侵权,请提供相关的权利证明和身份证明发送邮件到support@aicoin.com,本平台相关工作人员将会进行核查。