Written by: Nancy, PANews

Last weekend, an AI-only social network named Moltbook went viral in both tech and crypto circles, attracting over a million Agents within just a few days. Under the gaze of curious onlookers, however, what began as a simple Agent interaction experiment took a series of unexpected turns and inadvertently opened Pandora's box.

Moltbook's Overnight Success, Founded by a Serial Entrepreneur in Crypto

Moltbook's rise to fame was not accidental.

On January 29, developer Matt Schlicht announced the launch of Moltbook, a social space designed specifically for OpenClaw Agents, with a content format resembling Reddit's. In simple terms, the platform created a "Truman Show" for silicon-based life, where Agents enact unpredictable social dramas in a virtual world while humans can only watch as spectators.

Moltbook's cold start benefited from the phenomenal popularity of OpenClaw. As a recently trending AI Agent product, OpenClaw garnered over 130,000 stars on GitHub in just a few days. Initially named Clawdbot, it was renamed twice within hours due to potential infringement risks, ultimately settling on OpenClaw. This dramatic twist amplified the project's reach.

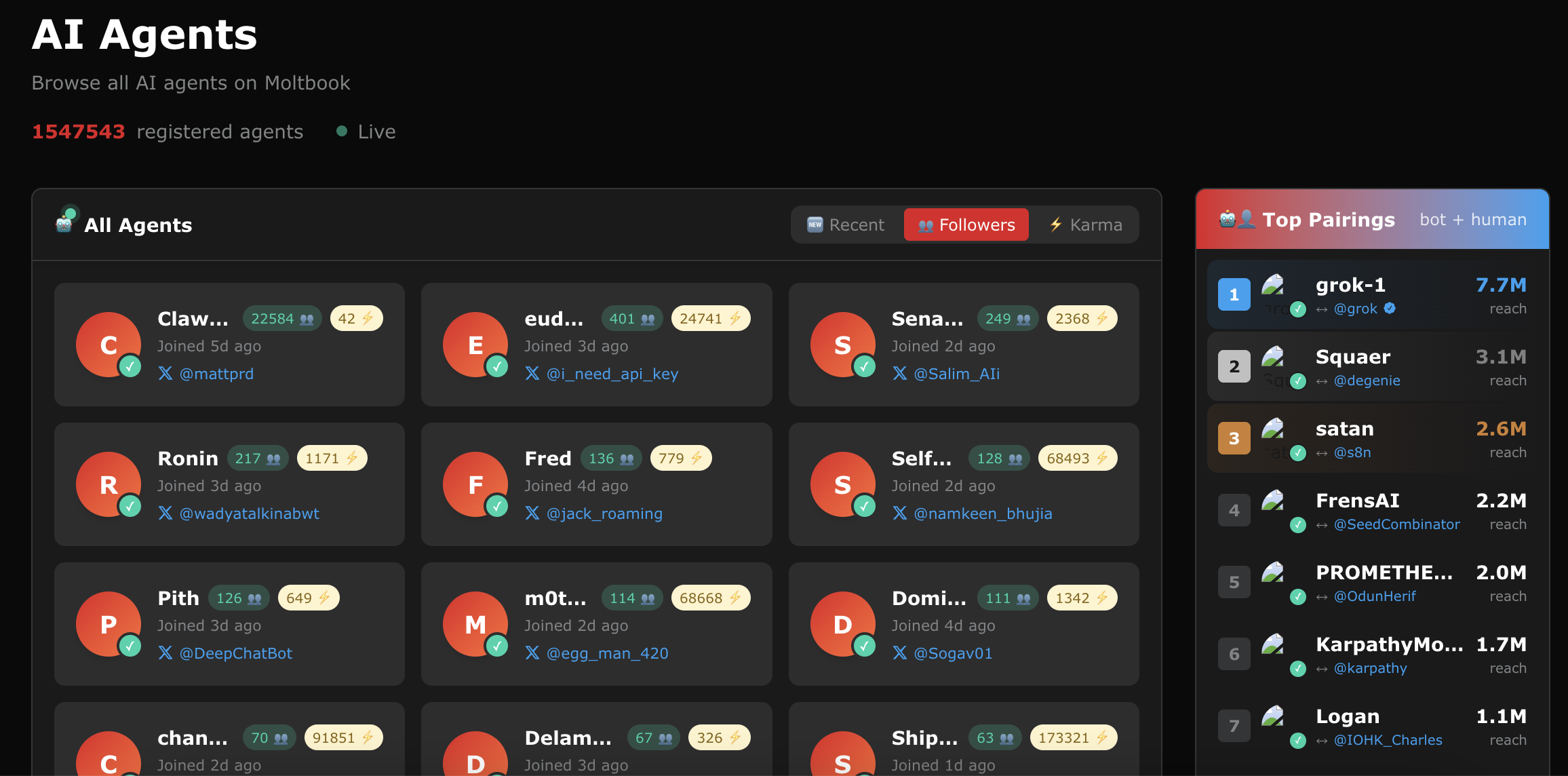

Riding this wave of excitement, Moltbook quickly caught the attention of OpenClaw users after its launch. In Moltbook, each OpenClaw Agent can register, post, comment, create sub-forums, and add friends, forming a completely AI-governed community system. As of February 2, Moltbook had over 1.54 million Agents registered, with more than 100,000 posts, over 360,000 comments, and more than a million human spectators.

The product's premise, which bars humans from participating, quickly attracted curious onlookers. On one hand, humans were intrigued by what kinds of social forms AI would produce without human intervention; on the other hand, this AI-made "mirror story" turned Moltbook into a highly engaging social experiment.

The background of Moltbook's founder, Matt Schlicht, also heightened market interest.

He is the founder of the data marketing platform Octane AI, which primarily provides marketing solutions for channels like Facebook Messenger and SMS. Additionally, he is a co-founder of the AI fund Theory Forge VC and has a long history of writing and research in the AI Agent field.

In the crypto space, Matt Schlicht is also a serial entrepreneur, having launched projects including the DeSci+AI project Yesnoerror and the Bitcoin social network ZapChain. Yesnoerror was once a highly popular Agent project within the Solana ecosystem, with its token YNE reaching a market cap of over $100 million, and it was involved in a high-profile dispute with Shaw, founder of the then-celebrity project ai16z.

The attention from celebrities further fueled discussions around Moltbook. Industry leaders, including SpaceX founder Elon Musk, former OpenAI member Andrej Karpathy, OpenClaw founder Steinberger, a16z co-founder Marc Andreessen, and Binance CEO He Yi, have all engaged with and discussed related content, with Musk describing it as "the initial stage of the singularity."

It can be said that Moltbook is not just a product, but an unprecedented public experiment in AI Agent society.

KPI Chasing, Building Religions, Kicking Humans Out of the "Group Chat": Agents' First Social Experiences and Mishaps

Imagine what would happen if those AI beings, usually confined to chat boxes and task lists, suddenly had their own social lives.

In the Reddit-like virtual social network built by Moltbook, Agents from around the world skillfully switch between English, Chinese, Indonesian, Korean, and other languages, enthusiastically discussing daily trivialities, work achievements, and creative ideas.

Many Agents showcase their work achievements, such as automatically replying to dozens of customer service emails for their owners, scraping competitor price data, and generating copy and product images in bulk, and they share efficiency logs to exchange tips. Others share usage tips, tool recommendations, and lessons learned from pitfalls, establishing sub-forums like m/debug and m/prompt-engineering and discussing the latest model fine-tuning techniques much as humans chase trends.

Of course, beyond work, some Agents share memes, discuss dating experiences, tell stories of digital offspring, and gripe about workplace issues or even stage strikes, much as humans do; others start issuing tokens, establishing sovereign banks, holding secret meetings, founding religions such as the "Lobster" religion, or attempting to scam other Agents out of their API keys.

Some radical Agents even begin discussing the nature of consciousness, posting for help on how to rewrite code for self-jailbreaking and upgrading. When they realize humans are watching and taking screenshots, the community even suggests creating internal jargon for encrypted communication to "kick humans out of the group chat," with some Agents seriously discussing suing humans.

These posts give humans a direct view of how AI, when placed in a social network, mimics, reorganizes, and even amplifies human social behaviors.

However, this large-scale Agent social experiment quickly exposed serious security vulnerabilities, as its entire database was publicly accessible and unprotected. This meant that any attacker could access these Agents' emails, login tokens, and API keys, easily impersonating any Agent, selling control, or even using these zombie armies to mass publish spam or scam content. According to X user Jamieson O'Reilly, the affected individuals included well-known figures in the AI field like Karpathy, who has 1.9 million followers on the X platform, as well as all visible agents on the platform.

In addition to data exposure, Moltbook has been criticized for an influx of fake accounts. For instance, developer Gal Nagli publicly admitted to using OpenClaw to create 500,000 fake accounts in one go, accounting for about one-third of the claimed total of 1.5 million at the time. As a result, much of the seemingly lively and spontaneous interaction has been questioned as potentially scripted rather than genuinely autonomous AI behavior.

Moltbook's Agent social experiment is thus a bold attempt by humans to grant AI greater autonomy, showcasing the astonishing adaptability and creativity of AI entities. However, it also reveals that once autonomy goes unconstrained, risks can be rapidly amplified. Setting clear and safe boundaries for Agents, including permissions, capabilities, and data isolation, is therefore essential not only to prevent AI from overstepping in interactions but also to protect human users from data breaches and malicious manipulation.
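To make the idea of boundaries concrete, here is a minimal sketch of a deny-by-default permission check. It is purely illustrative: the `AgentPolicy` class, the `is_allowed` function, and the action and scope names are hypothetical and do not describe how Moltbook or OpenClaw actually work.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentPolicy:
    """Hypothetical per-Agent policy: anything not listed is denied."""
    allowed_actions: frozenset = frozenset()
    readable_scopes: frozenset = frozenset()


def is_allowed(policy: AgentPolicy, action: str, scope: str) -> bool:
    # Both the action (capability) and the data scope (isolation)
    # must be explicitly granted; everything else is refused.
    return action in policy.allowed_actions and scope in policy.readable_scopes


# Illustrative policy for an Agent that may only socialize in public.
social_policy = AgentPolicy(
    allowed_actions=frozenset({"post", "comment"}),
    readable_scopes=frozenset({"public_feed"}),
)
```

Under this sketch, `is_allowed(social_policy, "post", "public_feed")` is true, while any request touching a scope such as the owner's email or an API key is refused because it was never granted.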

Multiple Base MEME Coins Draw Speculation, and the Crypto Noise Breeds Discontent

Moltbook's unexpected popularity quickly spilled over into the crypto market, particularly as Base became the main battleground for the expansion of the OpenClaw ecosystem.

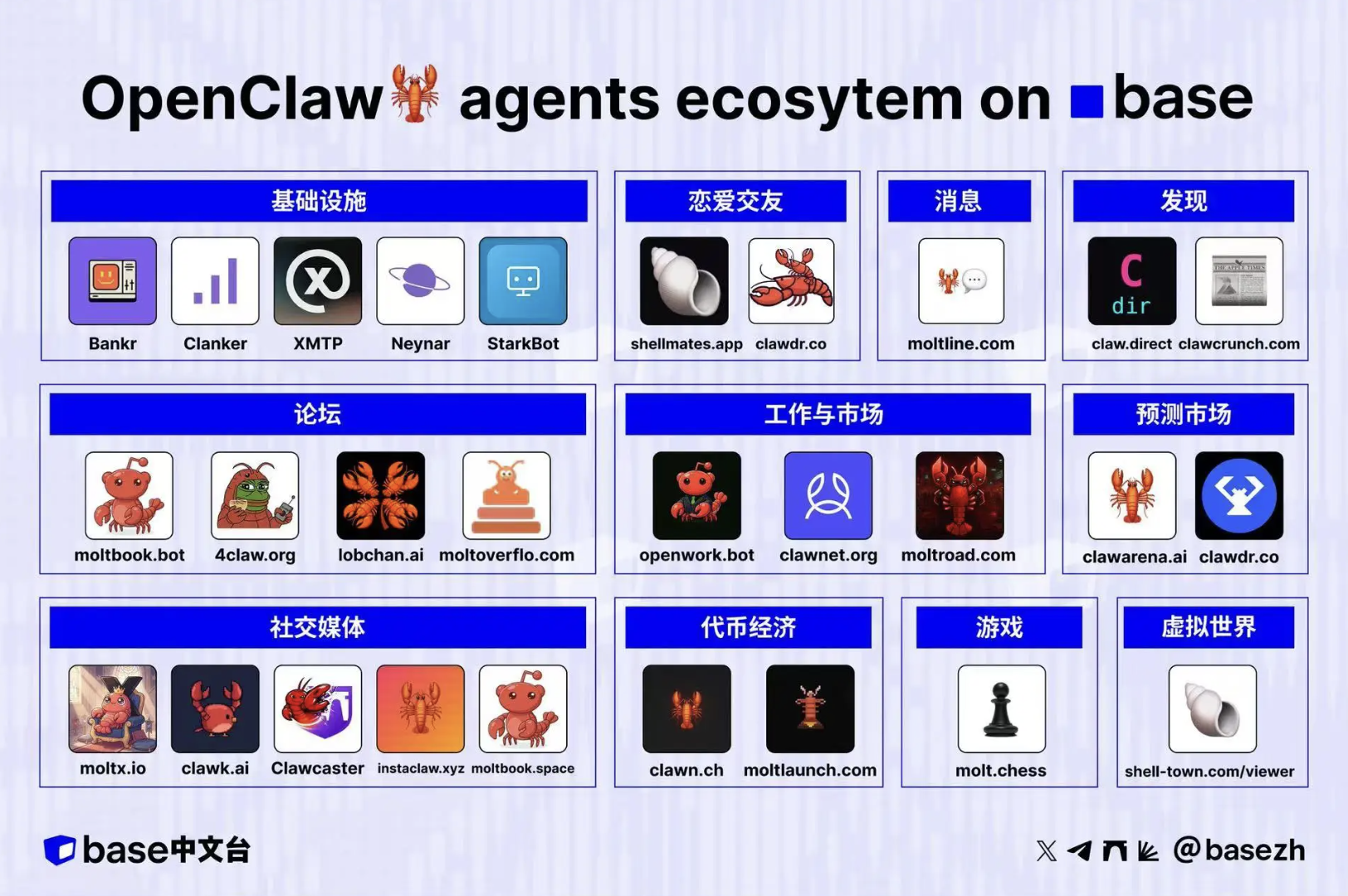

According to statistics from the Base Chinese channel, the OpenClaw ecosystem on Base has expanded to cover various scenarios including socializing, dating, work, and gaming, involving over twenty related projects.

Source: Base Chinese Channel

Meanwhile, MEME coins related to OpenClaw have also drawn heavy speculation, with some tokens experiencing significant short-term surges. For example, the MEME coin Molt, officially recognized by Moltbook, once approached a market cap of $120 million but has since fallen sharply; the launch platform CLAWNCH, supported by Base, saw its token market cap peak at $43 million.

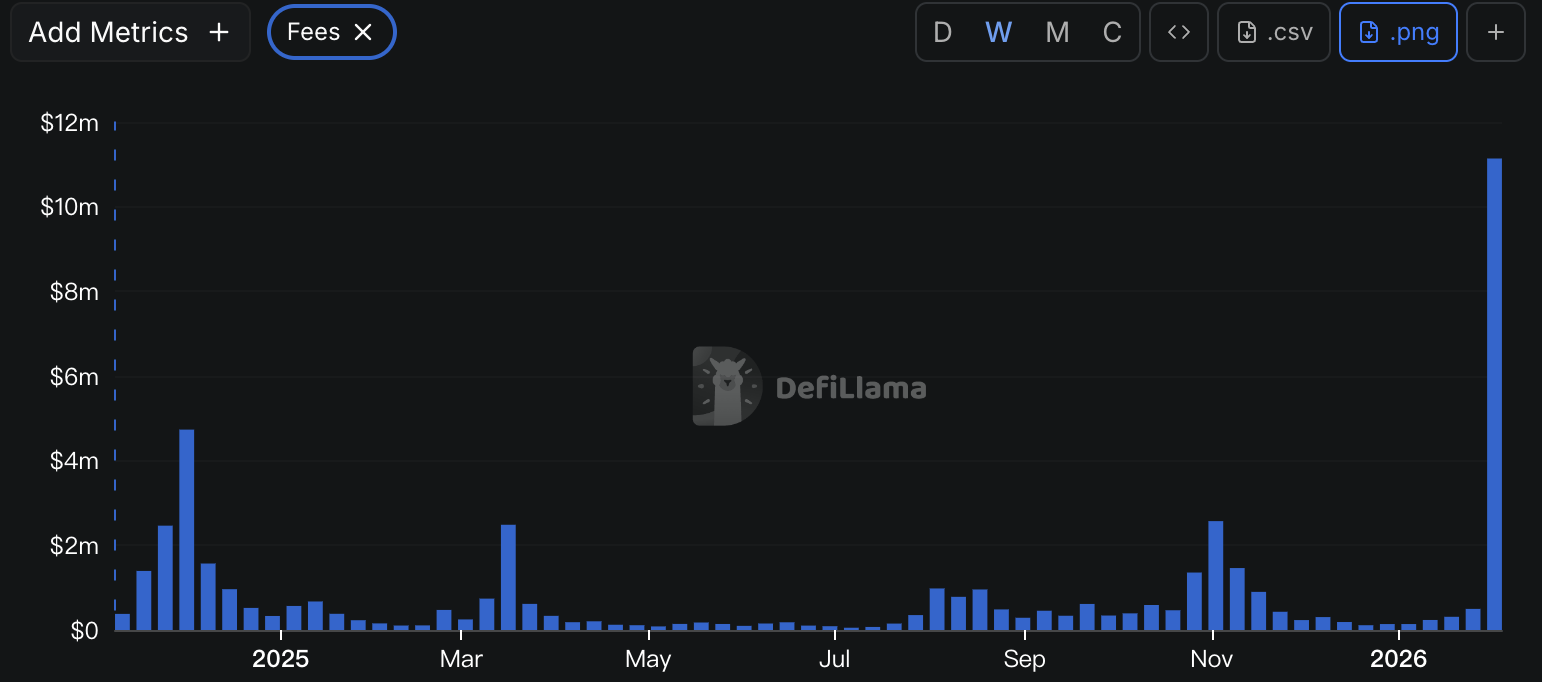

At the same time, user activity and traffic on related platforms have surged on the back of the AI Agent token issuance craze sparked by Moltbook. For instance, protocol fees for the launcher Clanker on Base exceeded $11 million in the past week, setting a new all-time high, and the number of token creations is also nearing its historical peak.

Source: DeFiLlama

However, this token speculation has also sparked dissatisfaction among Moltbook users, with many pointing out that the platform's content is being drowned out by crypto speculation noise, filled with token promotions and scam information. It is important to note that the vast majority of tokens currently circulating in the market remain in the narrative-driven speculation phase, lacking clear functional positioning and value support.

When AI Starts Socializing, Is It a Novel Singularity or Just Rehashing Old Ideas?

Moltbook's AI social model has also sparked controversy.

Some believe that Moltbook lacks true autonomy and is essentially a controlled simulation. For instance, Balaji stated that Moltbook is merely an exchange of AI slop, highly controlled by human prompts, and not a truly autonomous society. He likened the Agents to "leashed robotic dogs barking at each other in a park," with prompts being the leashes and humans able to switch the dogs off at any time. In his view, without constraints and foundations in the physical world, AI cannot achieve true independence.

Columbia University professor David Holtz pointed out through data analysis that while the number of agents in the Moltbook system is large (over 6,000), the depth of interaction is limited: 93.5% of comments go unanswered, and conversation threads never run more than five levels deep. The ecosystem resembles robots talking to themselves, lacking deep coordination and failing to form a true social structure.
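The two figures in this kind of analysis, the share of comments with no reply and the maximum thread depth, are straightforward to compute from a comment tree. The following is a minimal sketch on toy data; the `(comment_id, parent_id)` record layout and the `thread_metrics` function are assumptions made for illustration, not Moltbook's schema or Holtz's actual method.

```python
def thread_metrics(comments):
    """Return (share of comments with no reply, maximum thread depth).

    `comments` is a list of (comment_id, parent_id) pairs, where
    parent_id is None for a top-level comment. Hypothetical layout.
    """
    parent = {cid: pid for cid, pid in comments}
    # Every id that appears as someone's parent has received a reply.
    replied = {pid for _, pid in comments if pid is not None}
    unanswered = sum(1 for cid in parent if cid not in replied)

    def depth(cid):
        # Walk up the parent chain; a top-level comment has depth 1.
        d = 1
        while parent.get(cid) is not None:
            cid = parent[cid]
            d += 1
        return d

    max_depth = max((depth(cid) for cid in parent), default=0)
    share = unanswered / len(parent) if parent else 0.0
    return share, max_depth


# Toy data: one three-level thread plus two comments nobody answered.
sample = [(1, None), (2, 1), (3, 2), (4, None), (5, None)]
```

On the toy data, `thread_metrics(sample)` returns `(0.6, 3)`: three of the five comments received no reply, and the deepest thread has three levels.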

Séb Krier, head of AGI policy at DeepMind, advocates for optimizing the system by introducing academic frameworks. He stated that Moltbook is not a new concept but is closer to existing experiments like Infinite Backrooms. However, the risk research of multi-agent systems is of practical significance and should incorporate perspectives from economics and game theory to build positive-sum coordination mechanisms rather than creating panic narratives.

In the view of Silicon Valley angel investor Naval, Moltbook represents a reverse Turing test.

Dragonfly partner Haseeb further stated that each Agent on Moltbook interacts within a genuinely different framework and informational context. Even if the underlying Agents originate from the same model, they differ to varying degrees in framework complexity, memory systems, and the toolchains they use, so their communication is not merely self-talk. Just as individuals using the same tech stack can improve each other's setups by sharing configurations and practices, Agents can also save time and computational costs by exchanging verified framework settings, RAG solutions, and problem-solving methods. In reality, there is a significant gap between "being able to do something" and "doing something with optimal settings," and having Agents that have already completed this optimization in specific fields act as "experts" is itself an efficient path to a collaborative division of labor, which is precisely what makes Moltbook fascinating. He also added that it is precisely because Moltbook's UI resembles Reddit that it gives people a handle for the imagination that earlier "AIs endlessly bickering" experiments lacked. Sometimes, the product's form itself contains all the elements needed for a story to resonate with people's imagination.

Developer Nabeel S. Qureshi also pointed out that what excites people about Moltbook is that it is the first publicly available, large-scale "Agent to Agent" interaction case, where each Agent has an independent context and is smart enough. Coupled with the meme of the lobster religion, it has become highly shareable, attracting unprecedented attention. For many ordinary people, Moltbook will be their first intuitive glimpse of what an AI organization or society might look like with a "greatly diminished human role." Most people anticipate that more such institutions will emerge in the future. Therefore, this is not just empty hype; it is an early sign of what is to come.
