Author | ZeR0 Jun Da, Zhidongxi

Editor | Mo Ying

Chip East Las Vegas reported on January 5, that NVIDIA founder and CEO Jensen Huang delivered the first keynote speech of 2026 at the International Consumer Electronics Show (CES) 2026. As always, Huang wore a leather jacket and announced 8 important releases in 1.5 hours, providing an in-depth introduction to the entire new generational platform, from chips and racks to network design.

In the field of accelerated computing and AI infrastructure, NVIDIA released the NVIDIA Vera Rubin POD AI supercomputer, NVIDIA Spectrum-X Ethernet co-packaged optical devices, NVIDIA inference context memory storage platform, and NVIDIA DGX SuperPOD based on DGX Vera Rubin NVL72.

The NVIDIA Vera Rubin POD uses six self-developed chips from NVIDIA, covering CPU, GPU, scale-up, scale-out, storage, and processing capabilities. All components are collaboratively designed to meet the demands of advanced models and reduce computing costs.

Among them, the Vera CPU adopts a custom Olympus core architecture, and the Rubin GPU, after introducing the Transformer engine, achieves an NBFP4 inference performance of up to 50 PFLOPS. Each GPU NVLink bandwidth is as fast as 3.6 TB/s, supporting third-generation general-purpose confidential computing (the first rack-level TEE), realizing a complete trusted execution environment across CPU and GPU domains.

These chips have all been returned, and NVIDIA has verified the entire NVIDIA Vera Rubin NVL72 system. Partners have also begun running their internally integrated AI models and algorithms, and the entire ecosystem is preparing for deployment for Vera Rubin.

In other releases, the NVIDIA Spectrum-X Ethernet co-packaged optical devices significantly optimize power efficiency and application uptime; the NVIDIA inference context memory storage platform redefines the storage stack to reduce redundant computations and enhance inference efficiency; the NVIDIA DGX SuperPOD based on DGX Vera Rubin NVL72 reduces the token cost of large MoE models to 1/10.

In terms of open models, NVIDIA announced the expansion of its open-source model family, releasing new models, datasets, and libraries, including the new Agentic RAG model, safety model, and voice model in the NVIDIA Nemotron open-source model series, as well as a brand new open model suitable for all types of robots. However, Jensen Huang did not provide detailed information during the speech.

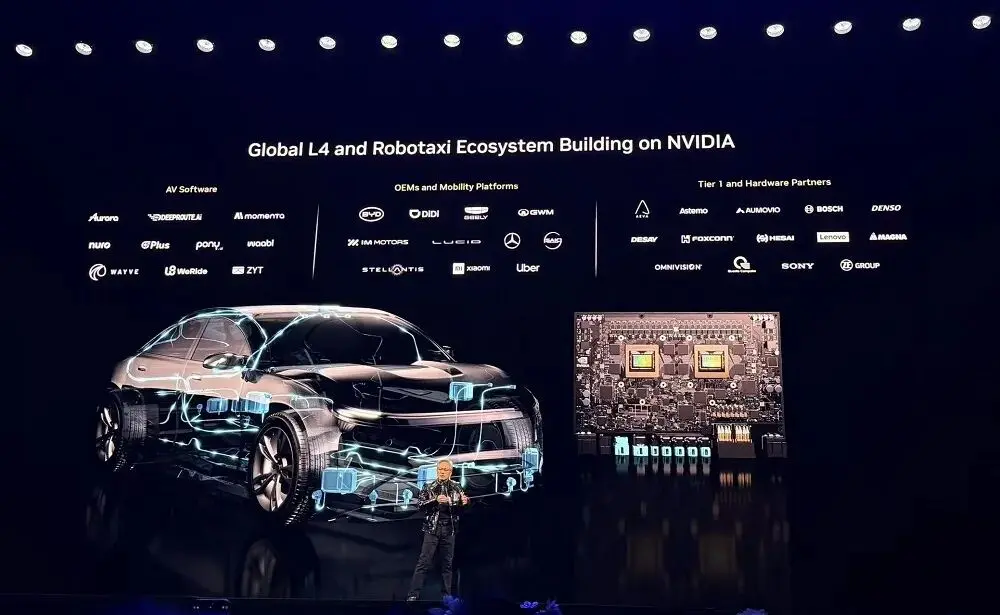

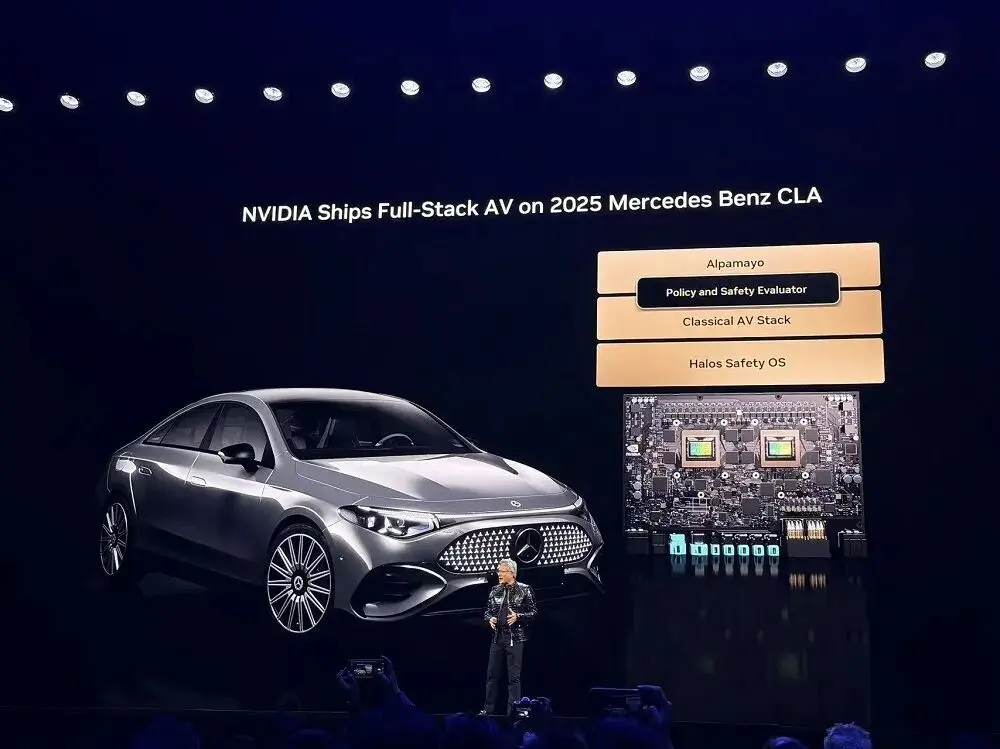

Regarding physical AI, the ChatGPT moment of physical AI has arrived. NVIDIA's full-stack technology enables the global ecosystem to transform industries through AI-driven robotics; NVIDIA's extensive AI tool library, including the new Alpamayo open-source model suite, allows the global transportation industry to quickly achieve safe L4 driving; the NVIDIA DRIVE autonomous driving platform is now in production, equipped in all new Mercedes-Benz CLA models for L2++ AI-defined driving.

01. New AI Supercomputer: 6 Self-Developed Chips, Single Rack Computing Power Reaches 3.6 EFLOPS

Jensen Huang believes that every 10 to 15 years, the computer industry undergoes a complete transformation, but this time, two platform revolutions are happening simultaneously, from CPU to GPU, from "programming software" to "training software," accelerating computing and AI reconstructing the entire computing stack. The computing industry, valued at $10 trillion over the past decade, is undergoing a modernization transformation.

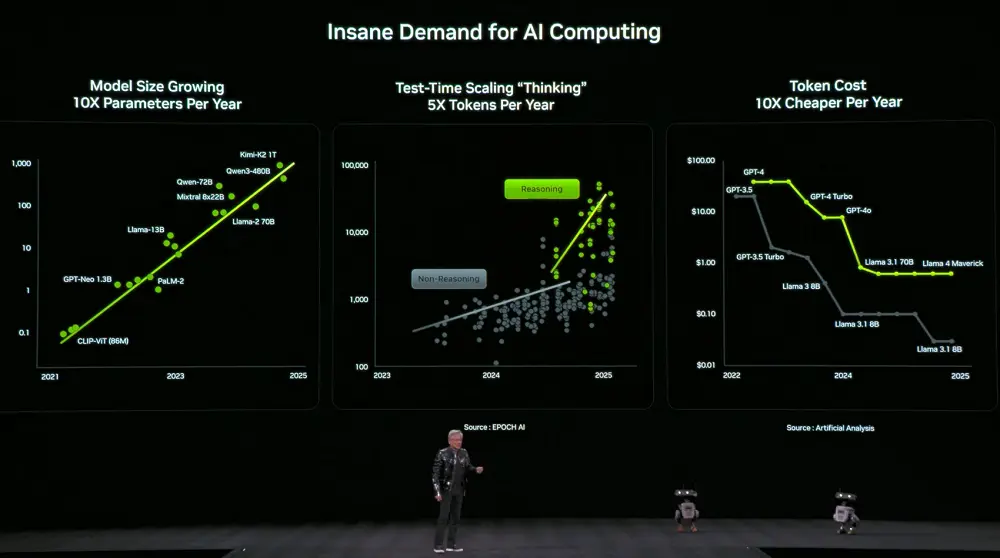

At the same time, the demand for computing power has surged dramatically. The size of models grows tenfold each year, the number of tokens used for thinking increases fivefold annually, while the price of each token decreases tenfold each year.

To meet this demand, NVIDIA has decided to release new computing hardware every year. Jensen Huang revealed that production for Vera Rubin has also fully commenced.

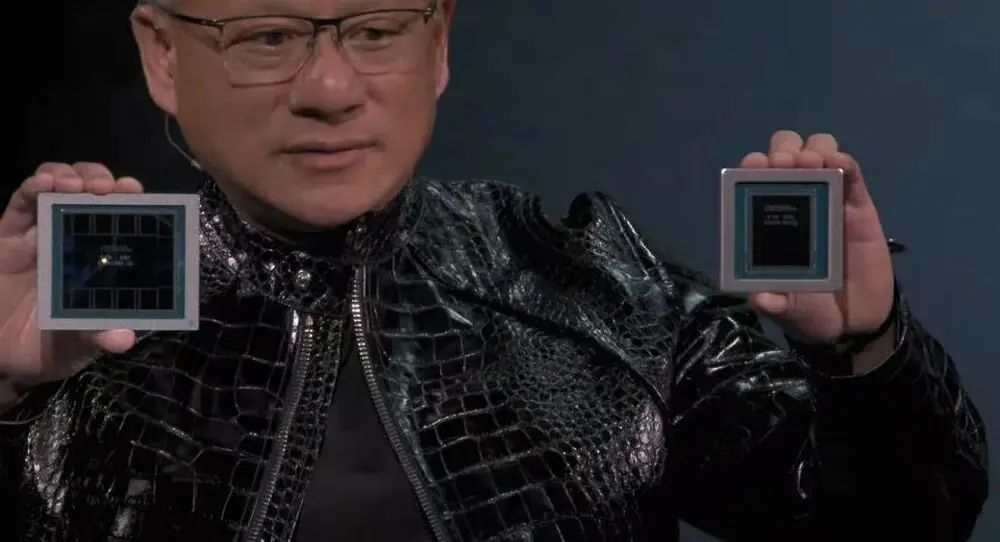

The new NVIDIA AI supercomputer, NVIDIA Vera Rubin POD, utilizes six self-developed chips: Vera CPU, Rubin GPU, NVLink 6 Switch, ConnectX-9 (CX9) smart network card, BlueField-4 DPU, and Spectrum-X 102.4T CPO.

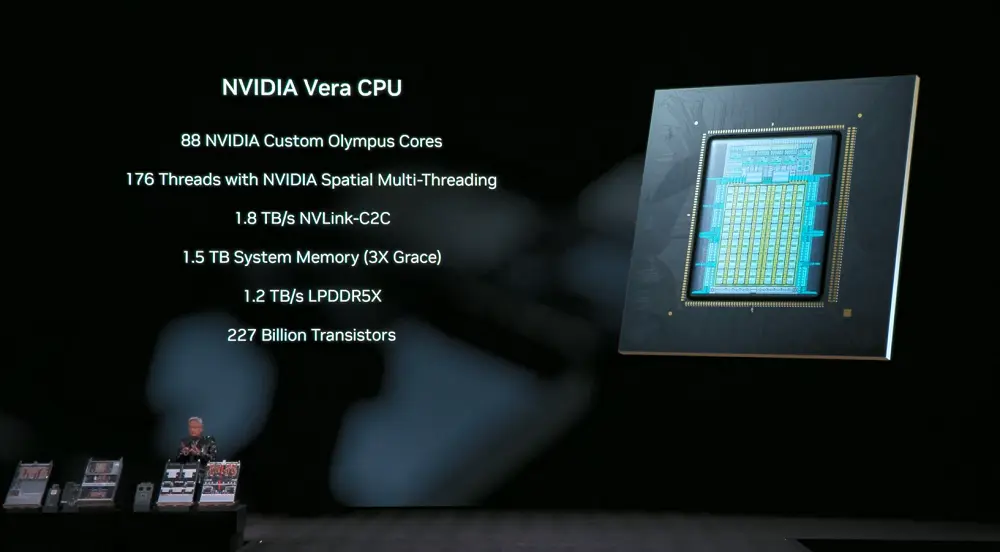

Vera CPU: Designed for data movement and intelligent processing, it features 88 NVIDIA custom Olympus cores, 176 threads of NVIDIA spatial multithreading, 1.8 TB/s NVLink-C2C supporting unified memory for CPU:GPU, and a system memory of 1.5 TB (three times that of the Grace CPU). The SOCAMM LPDDR5X memory bandwidth is 1.2 TB/s and supports rack-level confidential computing, doubling data processing performance.

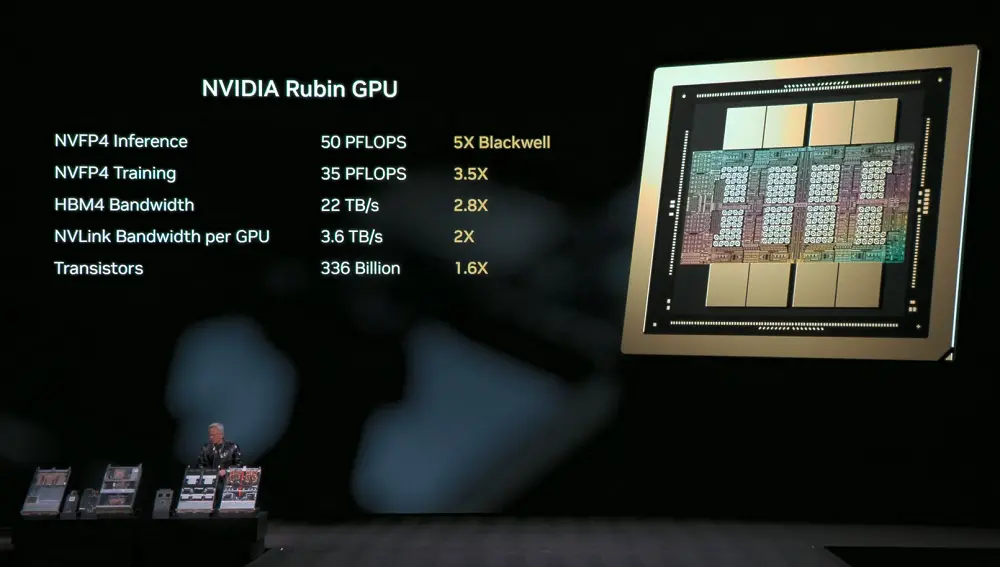

Rubin GPU: Introduces the Transformer engine, achieving NVFP4 inference performance of up to 50 PFLOPS, five times that of the Blackwell GPU, backward compatible, enhancing performance at BF16/FP4 levels while maintaining inference accuracy; NVFP4 training performance reaches 35 PFLOPS, 3.5 times that of Blackwell.

Rubin is also the first platform to support HBM4, with HBM4 bandwidth reaching 22 TB/s, 2.8 times that of the previous generation, capable of providing the performance required for demanding MoE models and AI workloads.

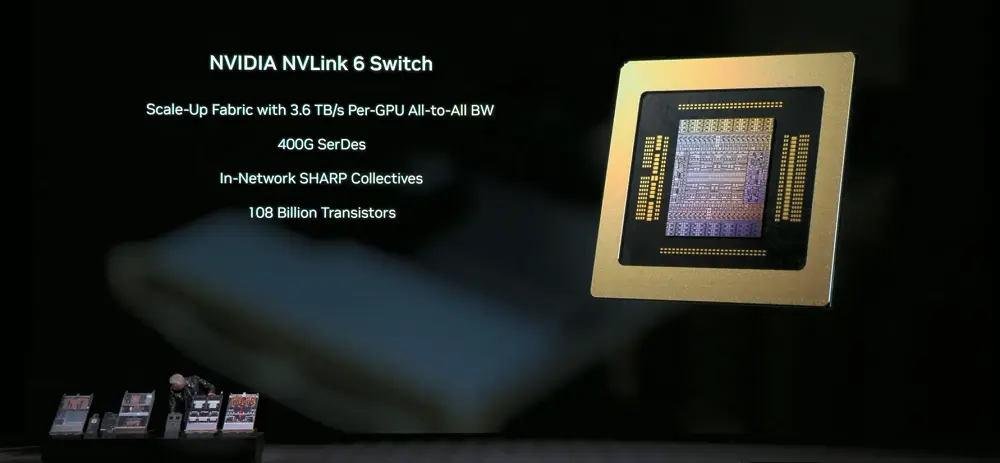

NVLink 6 Switch: Single lane rate increased to 400 Gbps, utilizing SerDes technology for high-speed signal transmission; each GPU can achieve 3.6 TB/s of fully interconnected communication bandwidth, double that of the previous generation, with a total bandwidth of 28.8 TB/s. In-network computing performance at FP8 precision reaches 14.4 TFLOPS, supporting 100% liquid cooling.

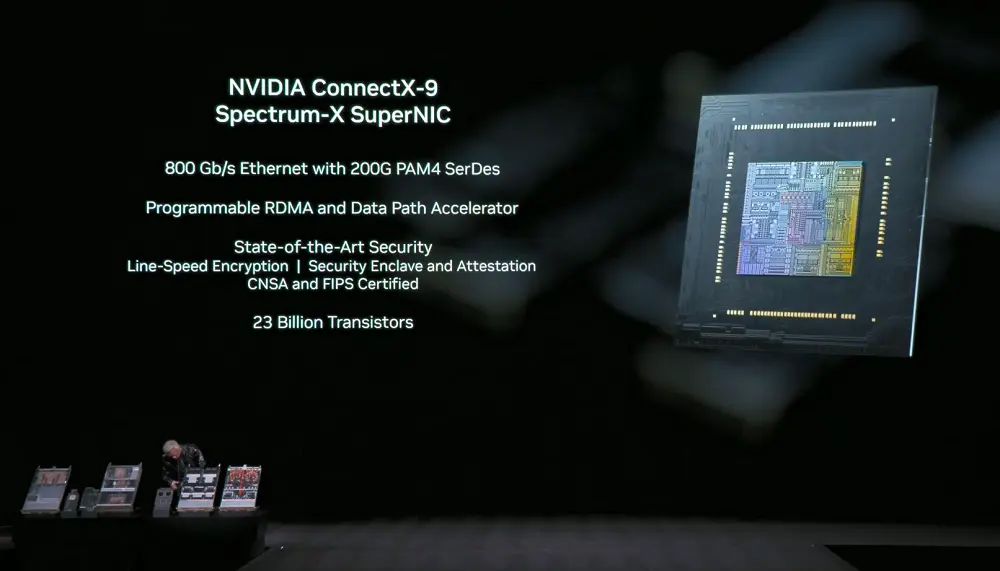

NVIDIA ConnectX-9 SuperNIC: Provides 1.6 Tb/s bandwidth per GPU, optimized for large-scale AI, featuring fully software-defined, programmable, and accelerated data paths.

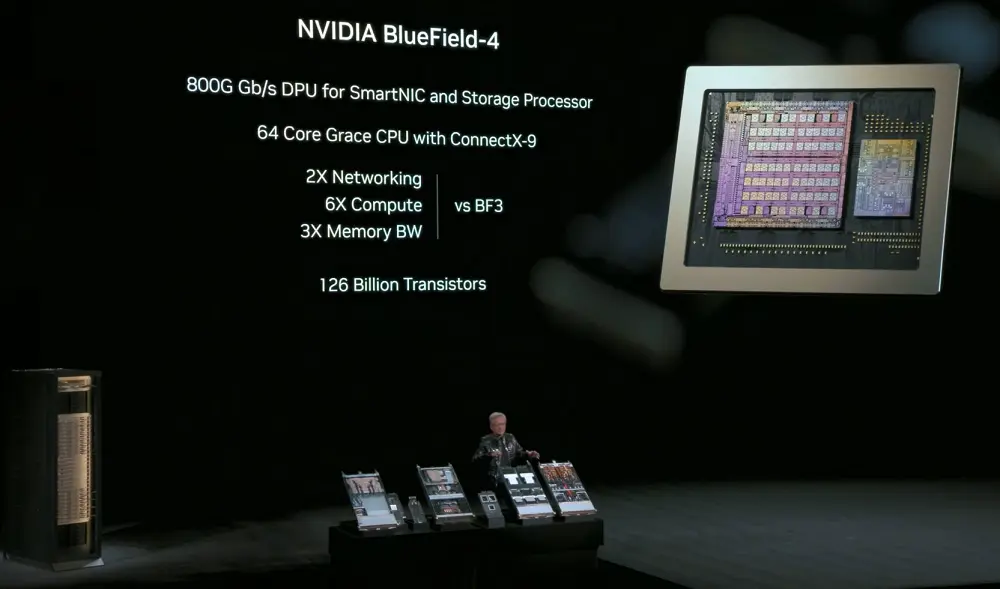

NVIDIA BlueField-4: 800 Gbps DPU for smart network cards and storage processors, equipped with a 64-core Grace CPU, combined with ConnectX-9 SuperNIC to offload network and storage-related computing tasks while enhancing network security capabilities. Its computing performance is six times that of the previous generation, with memory bandwidth tripling and GPU access to data storage speed doubling.

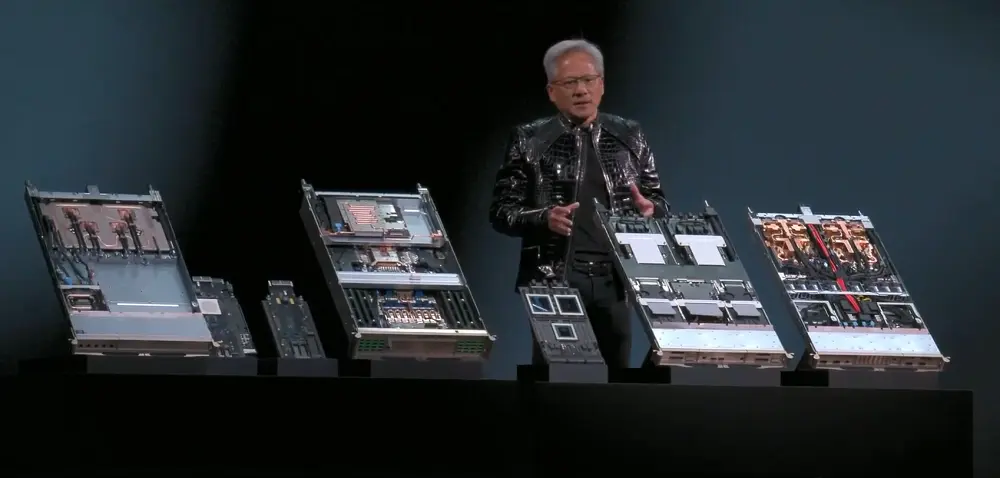

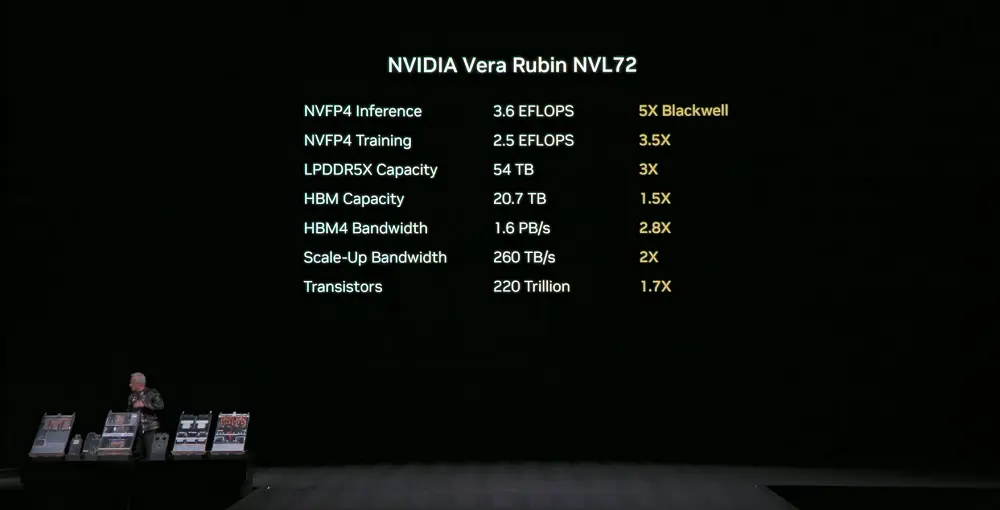

NVIDIA Vera Rubin NVL72: Integrates all the above components into a single rack processing system at the system level, featuring 20 trillion transistors, with NVFP4 inference performance reaching 3.6 EFLOPS and NVFP4 training performance reaching 2.5 EFLOPS.

This system has an LPDDR5X memory capacity of 54 TB, 2.5 times that of the previous generation; total HBM4 memory reaches 20.7 TB, 1.5 times that of the previous generation; HBM4 bandwidth is 1.6 PB/s, 2.8 times that of the previous generation; total vertical expansion bandwidth reaches 260 TB/s, exceeding the total bandwidth scale of the global internet.

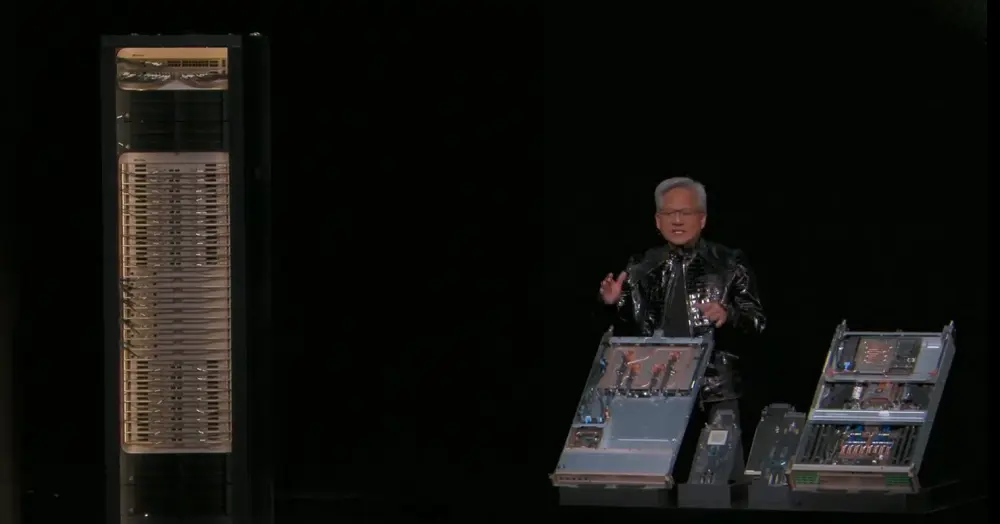

The system is based on the third-generation MGX rack design, with a modular, hostless, cable-free, and fanless design for the computing tray, making assembly and maintenance 18 times faster than GB200. What originally took 2 hours to assemble now takes only about 5 minutes, and the system, which previously used about 80% liquid cooling, is now 100% liquid cooled. The single system itself weighs 2 tons, and with the addition of coolant, it can reach 2.5 tons.

The NVLink Switch tray achieves zero downtime maintenance and fault tolerance, allowing the rack to continue operating even when the tray is removed or partially deployed. The second-generation RAS engine can perform zero downtime operational status checks.

These features enhance system uptime and throughput, further reducing training and inference costs, meeting data center requirements for high reliability and high maintainability.

Over 80 MGX partners are ready to support the deployment of Rubin NVL72 in ultra-large-scale networks.

02. Three Major New Products Revolutionize AI Inference Efficiency: New CPO Devices, New Context Storage Layer, New DGX SuperPOD

At the same time, NVIDIA released three important new products: NVIDIA Spectrum-X Ethernet co-packaged optical devices, NVIDIA inference context memory storage platform, and NVIDIA DGX SuperPOD based on DGX Vera Rubin NVL72.

1. NVIDIA Spectrum-X Ethernet Co-Packaged Optical Devices

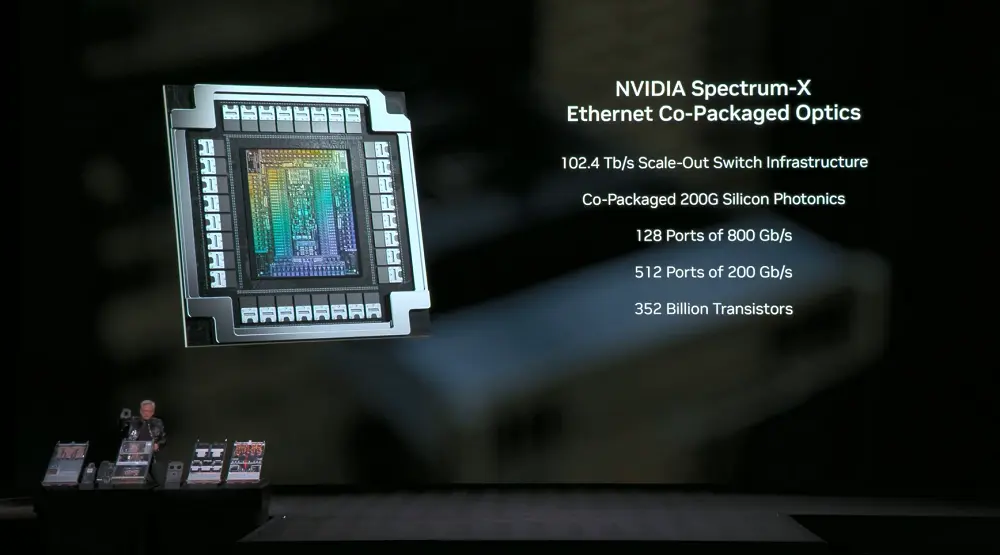

NVIDIA Spectrum-X Ethernet co-packaged optical devices are based on the Spectrum-X architecture, utilizing a two-chip design with 200 Gbps SerDes, providing 102.4 Tb/s bandwidth per ASIC.

The switching platform includes a 512-port high-density system and a 128-port compact system, with each port operating at a rate of 800 Gb/s.

The CPO (co-packaged optical) switching system achieves a fivefold increase in energy efficiency, a tenfold increase in reliability, and a fivefold increase in application uptime.

This means that more tokens can be processed daily, further reducing the total cost of ownership (TCO) for data centers.

2. NVIDIA Inference Context Memory Storage Platform

The NVIDIA inference context memory storage platform is a POD-level AI-native storage infrastructure designed for storing KV Cache, based on BlueField-4 and Spectrum-X Ethernet acceleration, tightly coupled with NVIDIA Dynamo and NVLink to achieve collaborative context scheduling between memory, storage, and network.

This platform treats context as a first-class data type, enabling a fivefold increase in inference performance and fivefold better energy efficiency.

This is crucial for improving long-context applications such as multi-turn dialogue, RAG, and Agentic multi-step reasoning, which heavily rely on the efficient storage, reuse, and sharing of context throughout the system.

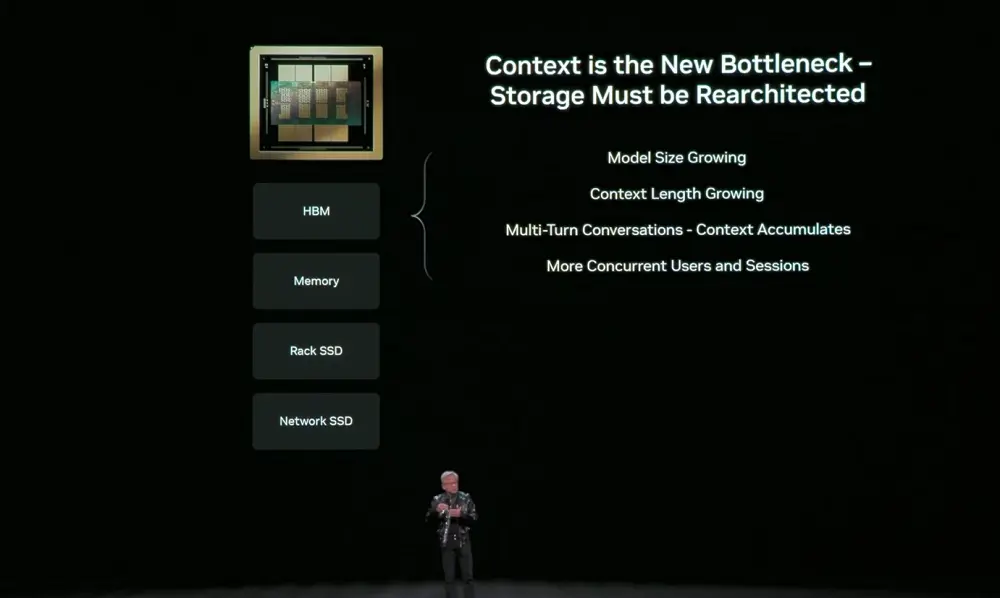

AI is evolving from chatbots to Agentic AI (intelligent agents) that can reason, call tools, and maintain state over the long term, with context windows expanding to millions of tokens. These contexts are stored in KV Cache, and recalculating them at each step wastes GPU time and introduces significant latency, necessitating storage.

However, while GPU memory is fast, it is scarce, and traditional network storage is inefficient for short-term contexts. The bottleneck in AI inference is shifting from computation to context storage. Therefore, a new memory layer optimized for inference is needed, situated between GPU and storage.

This layer is no longer an afterthought but must be co-designed with network storage to move context data with minimal overhead.

As a new storage layer, the NVIDIA inference context memory storage platform does not directly exist within the host system but connects to computing devices via BlueField-4. Its key advantage lies in its ability to scale storage pools more efficiently, thereby avoiding redundant computations of KV Cache.

NVIDIA is closely collaborating with storage partners to introduce the NVIDIA inference context memory storage platform into the Rubin platform, enabling customers to deploy it as part of a fully integrated AI infrastructure.

3. NVIDIA DGX SuperPOD Built on Vera Rubin

At the system level, the NVIDIA DGX SuperPOD serves as a blueprint for large-scale AI factory deployments, utilizing eight DGX Vera Rubin NVL72 systems, employing NVLink 6 for vertical network expansion and Spectrum-X Ethernet for horizontal network expansion, with the NVIDIA inference context memory storage platform built-in and validated through engineering.

The entire system is managed by NVIDIA Mission Control software, achieving extreme efficiency. Customers can deploy it as a turnkey platform, completing training and inference tasks with fewer GPUs.

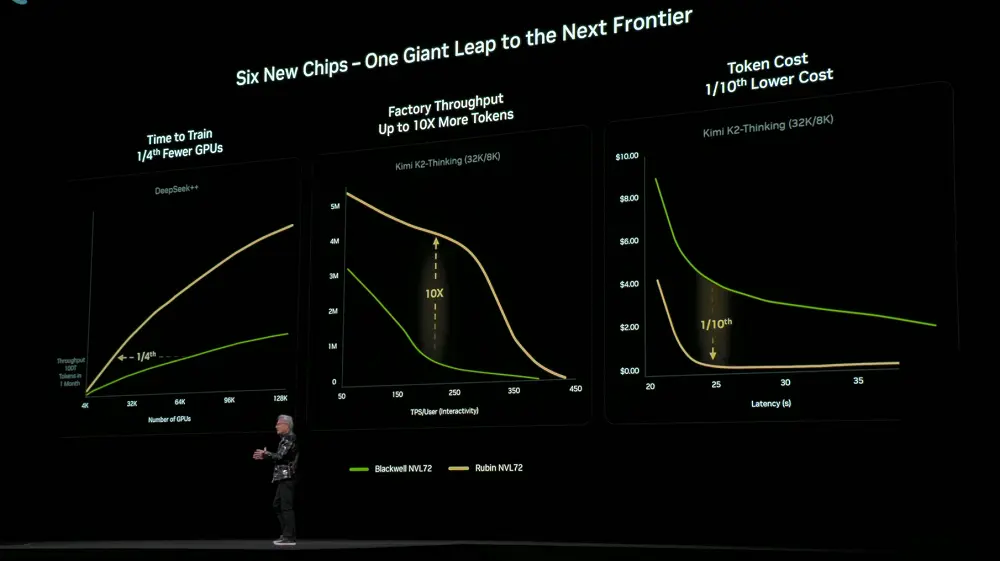

Due to the extreme collaborative design achieved across six chips, trays, racks, Pods, data centers, and software layers, the Rubin platform has significantly reduced training and inference costs. Compared to the previous generation Blackwell, training a MoE model of the same scale requires only 1/4 the number of GPUs; under the same latency, the token cost for large MoE models has been reduced to 1/10.

The NVIDIA DGX SuperPOD, utilizing the DGX Rubin NVL8 system, was also released.

With the Vera Rubin architecture, NVIDIA is working with partners and customers to build the world's largest, most advanced, and cost-effective AI systems, accelerating the mainstream adoption of AI.

The Rubin infrastructure will be made available through CSPs and system integrators in the second half of this year, with Microsoft and others being among the first deployers.

03. Expansion of the Open Model Universe: Significant Contributions of New Models, Data, and Open Source Ecosystem

At the software and model level, NVIDIA continues to increase its investment in open source.

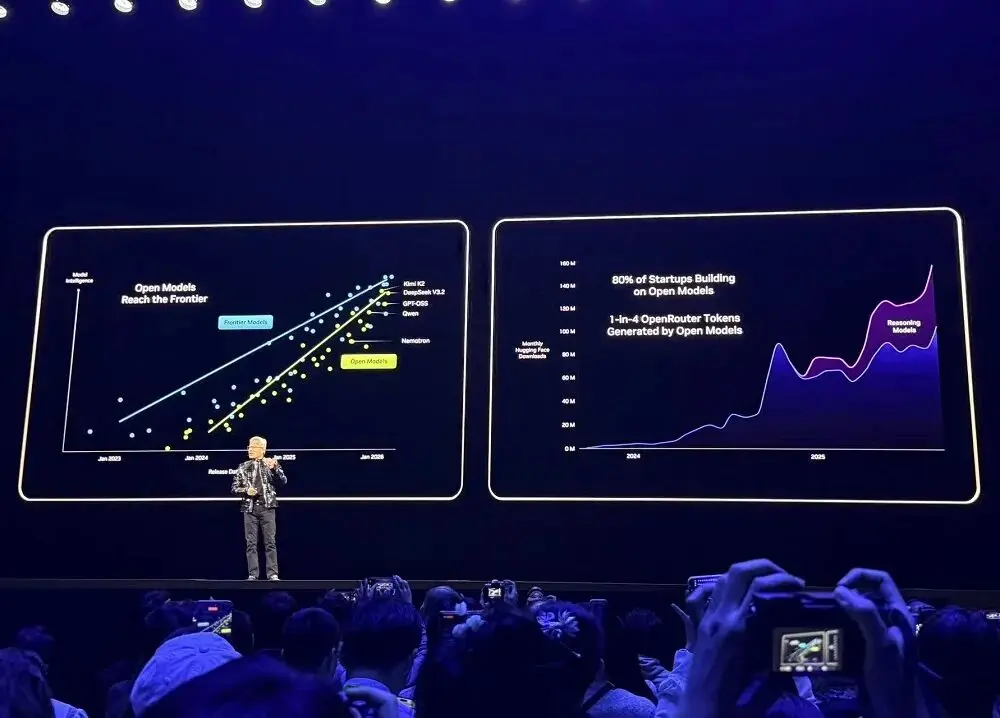

Mainstream development platforms like OpenRouter show that in the past year, the usage of AI models has grown 20 times, with about 1/4 of tokens coming from open-source models.

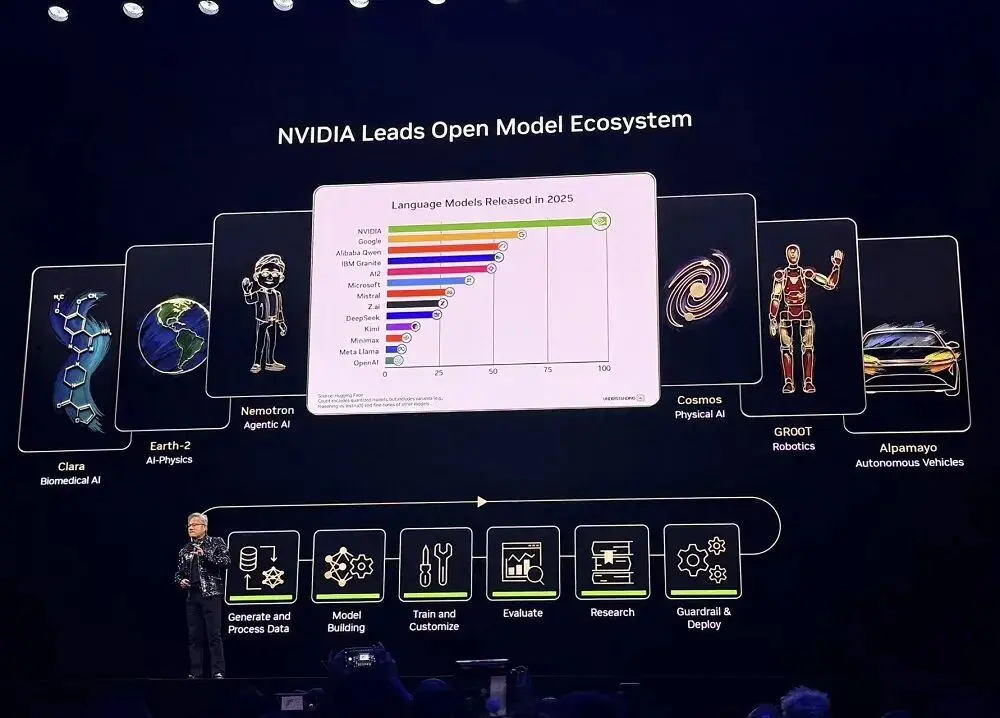

In 2025, NVIDIA was the largest contributor of open-source models, data, and recipes on Hugging Face, releasing 650 open-source models and 250 open-source datasets.

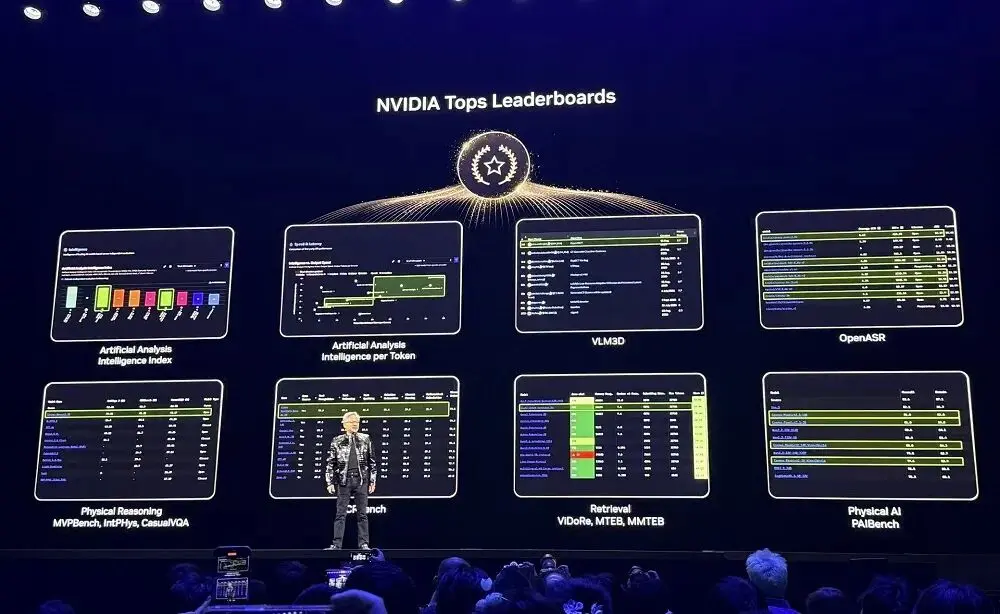

NVIDIA's open-source models rank highly on multiple leaderboards. Developers can not only use these open-source models but also learn from them, continuously train, expand datasets, and utilize open-source tools and documented techniques to build AI systems.

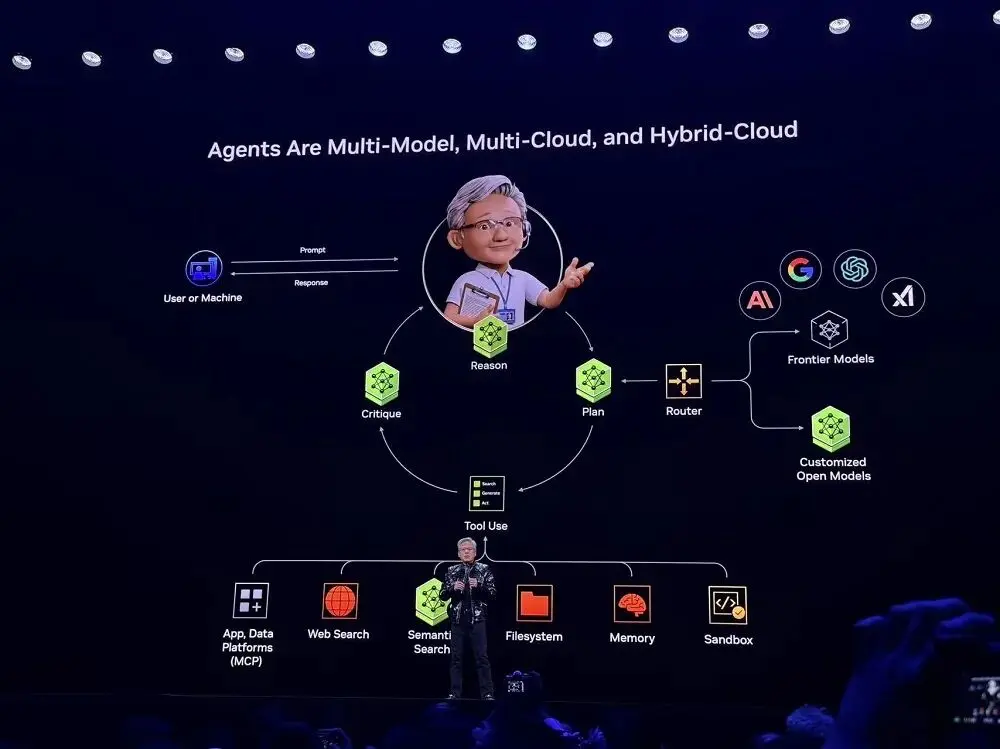

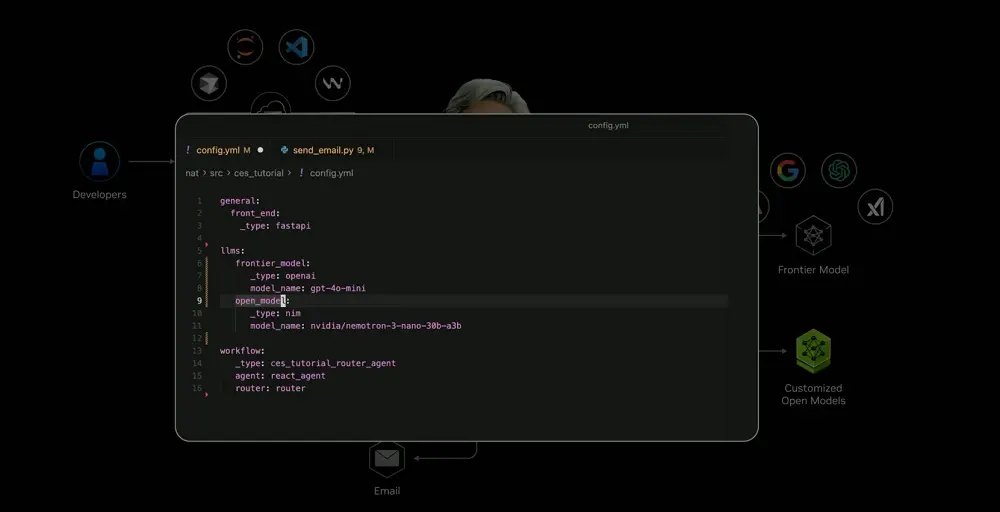

Inspired by Perplexity, Jensen Huang observed that Agents should be multi-model, multi-cloud, and hybrid cloud, which is also the fundamental architecture of Agentic AI systems, adopted by nearly all startups.

With the open-source models and tools provided by NVIDIA, developers can now customize AI systems and leverage cutting-edge model capabilities. Currently, NVIDIA has integrated the aforementioned framework into a "blueprint" and incorporated it into a SaaS platform. Users can achieve rapid deployment using the blueprint.

In the live demonstration case, this system can automatically determine whether a task should be handled by a local private model or a cloud-edge model based on user intent, and can also call external tools (such as email APIs, robot control interfaces, calendar services, etc.), achieving multi-modal fusion to uniformly process text, voice, images, and robot sensor signals.

These complex capabilities were previously unimaginable but have now become trivial. Similar capabilities can be found on enterprise platforms like ServiceNow and Snowflake.

04. Open Source Alpha-Mayo Model Enables Autonomous Vehicles to "Think"

NVIDIA believes that physical AI and robotics will ultimately become the largest consumer electronics segment globally. Everything that can move will eventually achieve full autonomy, driven by physical AI.

AI has gone through the stages of perceptual AI, generative AI, and Agentic AI, and is now entering the era of physical AI, where intelligence enters the real world, and these models can understand physical laws and generate actions directly from perceptions of the physical world.

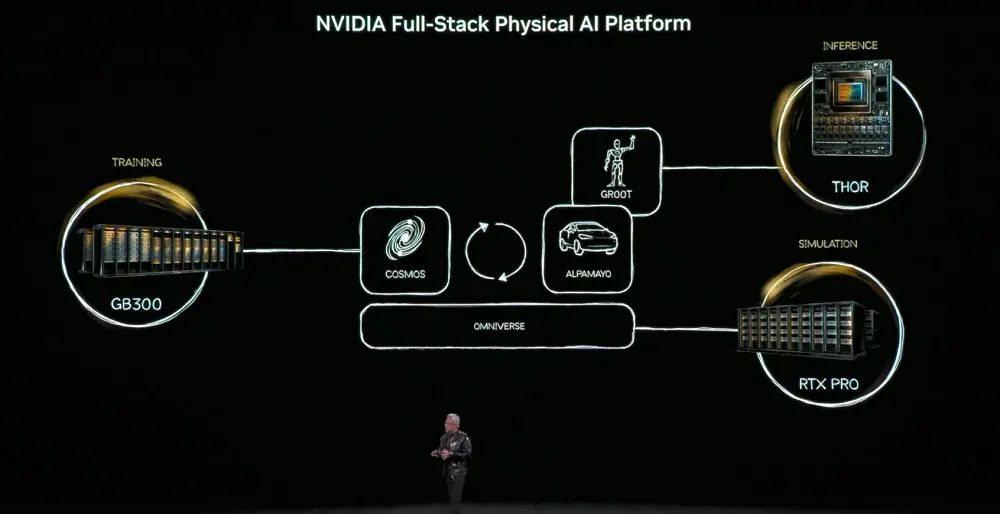

To achieve this goal, physical AI must learn the common sense of the world—object permanence, gravity, friction. Acquiring these capabilities will rely on three types of computers: training computers (DGX) for building AI models, inference computers (robot/vehicle chips) for real-time execution, and simulation computers (Omniverse) for generating synthetic data and validating physical logic.

The core model among these is the Cosmos world foundational model, which aligns language, images, 3D, and physical laws, supporting the entire link from simulation to training data generation.

Physical AI will appear in three types of entities: buildings (such as factories, warehouses), robots, and autonomous vehicles.

Jensen Huang believes that autonomous driving will become the first large-scale application scenario for physical AI. Such systems need to understand the real world, make decisions, and execute actions, with extremely high requirements for safety, simulation, and data.

In this regard, NVIDIA has released Alpha-Mayo, a complete system composed of open-source models, simulation tools, and physical AI datasets, aimed at accelerating the development of safe, inference-based physical AI.

Its product suite provides foundational modules for global automakers, suppliers, startups, and researchers to build L4-level autonomous driving systems.

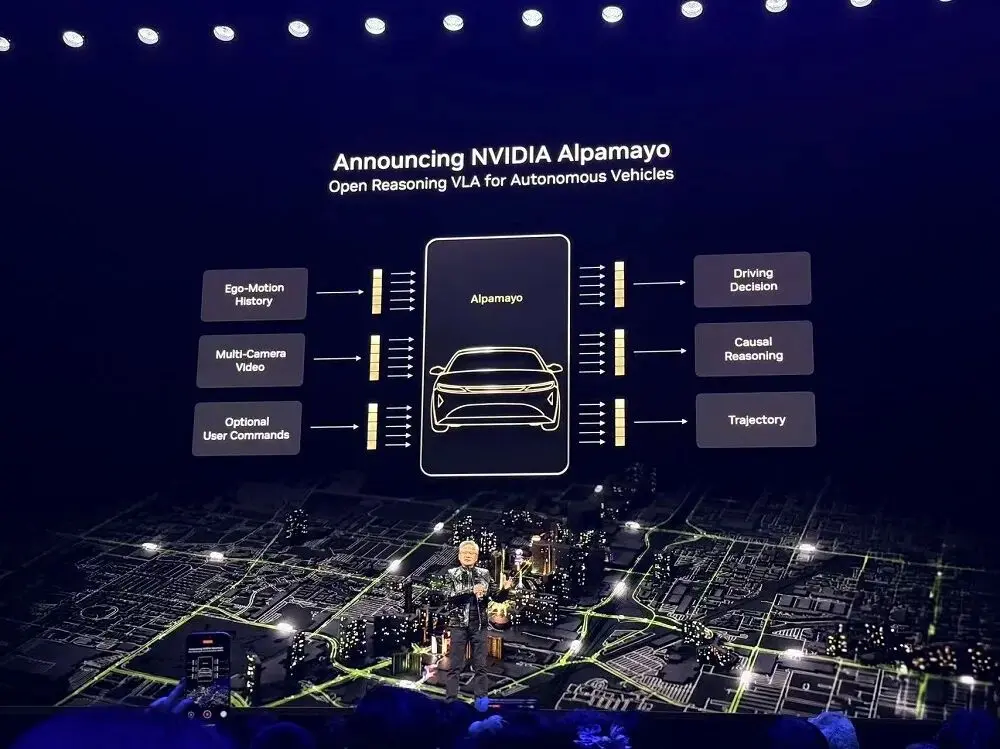

Alpha-Mayo is the industry's first model that truly enables autonomous vehicles to "think." This model has been open-sourced. It breaks down problems into steps, reasons through all possibilities, and selects the safest path.

This reasoning-action model enables autonomous driving systems to tackle complex edge scenarios that have never been experienced before, such as traffic light failures at busy intersections.

Alpha-Mayo has 10 billion parameters, large enough to handle autonomous driving tasks while being lightweight enough to run on workstations designed for autonomous driving researchers.

It can receive text, surround cameras, vehicle historical states, and navigation inputs, and output driving trajectories and reasoning processes, allowing passengers to understand why the vehicle takes a certain action.

In the promotional video played on-site, under the drive of Alpha-Mayo, the autonomous vehicle can independently perform operations such as avoiding pedestrians and predicting left-turning vehicles to change lanes without any intervention.

Jensen Huang stated that the Mercedes-Benz CLA equipped with Alpha-Mayo has already gone into production and has just been rated by NCAP as the safest car in the world. Every line of code, chip, and system has undergone safety certification. This system will launch in the U.S. market and will introduce stronger driving capabilities later this year, including hands-free driving on highways and end-to-end autonomous driving in urban environments.

NVIDIA also released some datasets used to train Alpha-Mayo and the open-source inference model evaluation simulation framework Alpha-Sim. Developers can fine-tune Alpha-Mayo with their own data or use Cosmos to generate synthetic data, training and testing autonomous driving applications based on a combination of real and synthetic data. Additionally, NVIDIA announced that the NVIDIA DRIVE platform is now in production.

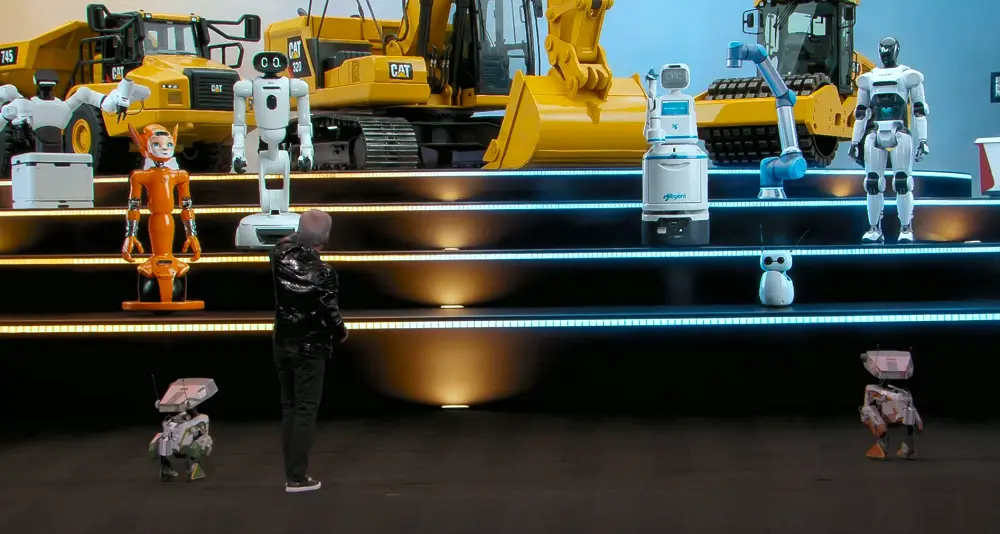

NVIDIA announced that leading global robotics companies such as Boston Dynamics, Franka Robotics, Surgical robots, LG Electronics, NEURA, XRLabs, and Zhiyuan Robotics are all building on NVIDIA Isaac and GR00T.

Jensen Huang also officially announced the latest collaboration with Siemens. Siemens is integrating NVIDIA CUDA-X, AI models, and Omniverse into its EDA, CAE, and digital twin tools and platform portfolio. Physical AI will be widely used throughout the entire process from design and simulation to production and operation.

05. Conclusion: Embracing Open Source with One Hand, Making Hardware Systems Indispensable with the Other

As the focus of AI infrastructure shifts from training to large-scale inference, platform competition has evolved from single-point computing power to system engineering covering chips, racks, networks, and software, aiming to deliver maximum inference throughput at the lowest TCO. AI is entering a new stage of "factory-like operation."

NVIDIA places great emphasis on system-level design, achieving improvements in performance and economy in both training and inference with Rubin, which can serve as a plug-and-play alternative to Blackwell, allowing for a seamless transition from Blackwell.

In terms of platform positioning, NVIDIA still believes that training is crucial because only by rapidly training state-of-the-art models can the inference platform truly benefit. Therefore, NVFP4 training has been introduced in Rubin GPUs to further enhance performance and reduce TCO.

At the same time, this AI computing giant continues to significantly strengthen network communication capabilities in both vertical and horizontal scaling architectures, viewing context as a key bottleneck to achieve collaborative design of storage, network, and computation.

While NVIDIA is heavily investing in open source, it is also making hardware, interconnects, and system designs increasingly "indispensable." This strategy of continuously expanding demand, incentivizing token consumption, driving the scaling of inference, and providing cost-effective infrastructure is building an even more impenetrable moat for NVIDIA.

免责声明:本文章仅代表作者个人观点,不代表本平台的立场和观点。本文章仅供信息分享,不构成对任何人的任何投资建议。用户与作者之间的任何争议,与本平台无关。如网页中刊载的文章或图片涉及侵权,请提供相关的权利证明和身份证明发送邮件到support@aicoin.com,本平台相关工作人员将会进行核查。