Robot technology is rapidly advancing and attracting global attention. Walking with robotic dogs or having humanoid robots assist with household chores is no longer a distant dream.

The next step is crucial. How will people collaborate with robots? How will robots coordinate with each other? This report explores these questions through the lens of OpenMind.

Key Points

- OpenMind has developed the open-source runtime "OM1". OM1 creates an environment where all robots, regardless of manufacturer, can communicate and collaborate freely.

- OpenMind's blockchain network "FABRIC" establishes a system for robot authentication, transaction records, and distributed verification. FABRIC lays the foundation for an autonomous machine economy.

- OpenMind uses the ERC-7777 standard to define robot behavior rules. OpenMind is collaborating with AIM Intelligence to develop a "Physical AI Security Layer". These technologies will work together to prevent failures and block external attacks.

1. The Growth Rate of Robot Technology is Beyond Imagination

Robots are no longer a distant future, nor are they only serving a select few.

Just a few years ago, robots were found only in laboratories and industrial settings. Now they are stepping into our daily lives. People walk robotic dogs in parks and have humanoid robots help with household chores; such scenes are no longer confined to science fiction movies.

Source: 1X Technologies

1X Technologies recently launched "Neo", a household humanoid robot, making this reality more tangible. Consumers can now own a personal home assistant robot through a monthly subscription of $499 or a one-time payment of $20,000. The price is still high, but the message is clear: robot technology has entered consumer households.

Source: Made Visual Daily

In addition to Neo, global companies are accelerating innovation through fierce competition. Notable players include Figure, Tesla, and Boston Dynamics in the United States, as well as Unitree Robotics in China. Tesla plans to begin mass-producing its humanoid robot "Optimus" in 2026, at a price below that of its cars.

The robotics industry is rapidly expanding into the consumer market. The once seemingly distant future has arrived faster than expected, opening the door to a new daily reality.

2. Robots in Daily Life: Possibilities and Limitations

What changes can robot technology bring to our daily lives? Let’s imagine a future living alongside robots.

Neo cleans the house. Unitree's robotic dog plays with the children. Optimus goes to the supermarket to buy dinner ingredients. The robots work in parallel, each handling its own task, and the user gets a more efficient day.

Let’s think further. What if robots could collaborate to handle complex tasks?

Optimus shops at the supermarket. Neo checks the fridge and asks Optimus for additional ingredients. Figure adjusts the recipe based on the user's allergy information. The robots stay connected in real time and operate as a coordinated team. The user simply says: "I'd like omelet rice."

But this is still a distant dream. Robots lack sufficient intelligence to flexibly respond to various situations. The bigger issue is that each robot operates within a closed system based on different technology stacks.

Robots from different manufacturers find it difficult to exchange data or collaborate smoothly. iPhones can share photos via AirDrop, but cannot do so with Samsung Galaxy phones. Robots face the same limitations.

Figure's Helix, Source: Figure

Collaboration is possible under narrow conditions, as Figure's Helix shows: the robots come from the same manufacturer and share the same technology stack.

But reality is more complex. Look at the current robotics industry: a wide variety of robots is flooding the market in a kind of Cambrian explosion.

Future users will choose various robots based on their preferences and needs, rather than sticking to a single brand. Our households today exemplify this model. We choose a Samsung refrigerator, an LG washing machine, and a Dyson vacuum cleaner.

Now imagine robots from multiple manufacturers working together in the same home. A kitchen robot cooks while a cleaning robot mops the floor. The two robots cannot share location information. Even if they exchanged data, they could not interpret it correctly, because their distance calculation methods and measurement units differ.

They cannot track each other's movement paths, so collisions become likely. This is only a simple example: more robots and more complex tasks amplify the risk of chaos and collisions.

3. OpenMind: Building a World of Robot Collaboration

Source: OpenMind

OpenMind has emerged to address these issues.

OpenMind breaks down closed technology stacks, pursuing an open ecosystem where all robots can collaborate. This approach allows robots from different manufacturers to communicate and cooperate freely.

OpenMind proposes two core foundations to realize this vision. The first is OM1, an open-source runtime for robots. OM1 provides standardized communication methods, so robots with different hardware can understand one another and collaborate.

The second is FABRIC, a blockchain-based network that establishes a trusted environment for collaboration among robots. Together, these two technologies create an ecosystem where all robots, regardless of manufacturer, can work together as a team.

3.1. OM1: Making Robots Smarter and More Flexible

As we have seen, existing robots are still trapped in closed systems, making it difficult for them to communicate with each other.

More specifically, robots exchange information through binary data or structured code formats. These formats vary by manufacturer, hindering compatibility. For example, Company A's robot represents location as (x, y, z) coordinates, while Company B defines it as (latitude, longitude, altitude). Even in the same space, they cannot understand each other's locations. Each manufacturer uses different data structures and formats.
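The following example makes the mismatch concrete. It is a minimal Python sketch of the kind of translation that every pair of robots would otherwise need. The class names are hypothetical. The conversion assumes a shared reference origin that both robots have agreed on, and it uses a flat-earth (equirectangular) approximation. That approximation is adequate only over small areas such as a home or a warehouse.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


@dataclass
class LocalXYZ:
    """Hypothetical 'Company A' format: metres in a local east/north/up frame."""
    x: float  # metres east of the origin
    y: float  # metres north of the origin
    z: float  # metres above the origin


@dataclass
class Geodetic:
    """Hypothetical 'Company B' format: degrees for lat/lon, metres for altitude."""
    lat_deg: float
    lon_deg: float
    alt_m: float


def geodetic_to_local(p: Geodetic, origin: Geodetic) -> LocalXYZ:
    """Convert B's reading into A's frame (equirectangular approximation).

    Only valid over short distances around `origin`, which both robots
    must agree on in advance (an assumption of this sketch).
    """
    lat0 = math.radians(origin.lat_deg)
    dlat = math.radians(p.lat_deg - origin.lat_deg)
    dlon = math.radians(p.lon_deg - origin.lon_deg)
    return LocalXYZ(
        x=EARTH_RADIUS_M * dlon * math.cos(lat0),
        y=EARTH_RADIUS_M * dlat,
        z=p.alt_m - origin.alt_m,
    )


origin = Geodetic(37.5665, 126.9780, 20.0)   # assumed shared reference point
robot_b = Geodetic(37.5666, 126.9780, 23.0)  # B's self-reported position
print(geodetic_to_local(robot_b, origin))    # roughly 11 m north, 3 m up
```

Without an agreed origin and an agreed conversion, the two robots' numbers are simply not comparable. A shared runtime is meant to close that gap.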

Source: OpenMind

OpenMind addresses this issue through its open-source runtime OM1. Think of Android, which runs on devices from many different manufacturers. OM1 plays a similar role, letting all robots communicate in the same language regardless of their hardware.

OM1 lets robots understand and process information in natural language. OpenMind's paper "A Sentence is Enough" explains the idea: robot communication does not require complex commands or formats, and a natural-language description of the context is enough for robots to understand each other and collaborate.

Now let’s take a closer look at how OM1 operates.

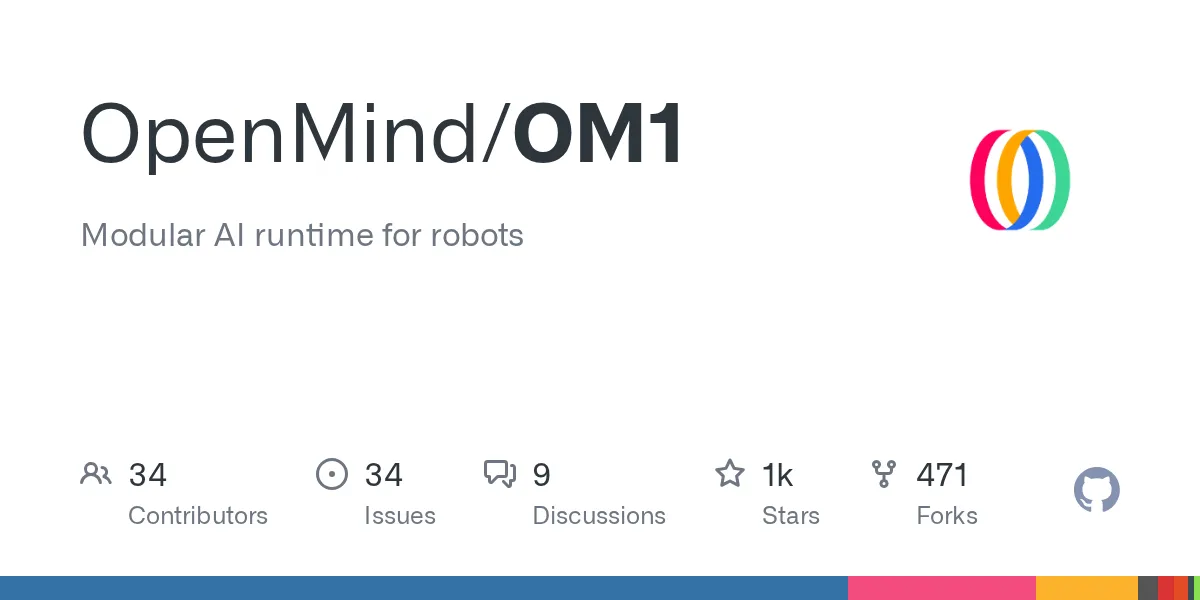

Source: OpenMind

First, robots collect environmental information from sensor modules such as cameras and microphones. This data arrives in binary form, and multimodal recognition models convert it into natural language: a VLM (Vision-Language Model) processes visual input, and ASR (Automatic Speech Recognition) processes audio. The result is sentences like "A man is pointing at the chair in front" and "The user says 'go to the chair.'"
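The captioning step can be pictured as a set of input modules. Each module turns raw sensor bytes into a timestamped sentence. The sketch below is a hypothetical illustration, not OM1's actual code. The VLM and ASR calls are stubbed out with fixed strings, and `InputModule`, `fake_vlm`, and `fake_asr` are names invented for this example.

```python
import time
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Observation:
    source: str       # which sensor module produced the sentence
    text: str         # natural-language description of what was sensed
    timestamp: float  # seconds since the epoch


# Hypothetical stand-ins for real models: a VLM would caption camera frames,
# an ASR model would transcribe microphone audio.
def fake_vlm(frame: bytes) -> str:
    return "A man is pointing at the chair in front."


def fake_asr(audio: bytes) -> str:
    return "go to the chair"


class InputModule:
    """Wraps one sensor plus the model that turns its raw bytes into a sentence."""

    def __init__(self, name: str, to_text: Callable[[bytes], str]):
        self.name = name
        self.to_text = to_text

    def observe(self, raw: bytes) -> Observation:
        return Observation(self.name, self.to_text(raw), time.time())


camera = InputModule("camera/VLM", fake_vlm)
microphone = InputModule("microphone/ASR", lambda raw: f"The user says '{fake_asr(raw)}'.")

observations: List[Observation] = [
    camera.observe(b"\x89PNG..."),   # placeholder bytes standing in for an image
    microphone.observe(b"RIFF..."),  # placeholder bytes standing in for audio
]
for obs in observations:
    print(f"[{obs.source}] {obs.text}")
```

After this step, every sensor speaks the same "language": plain sentences. Replacing a stub with a real model does not change anything downstream.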

The converted sentences are aggregated on a natural-language data bus. A data fusion module weaves them into a situational report and passes it to multiple LLMs, which analyze the report and decide the robot's next actions.
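The bus-and-fusion step can be sketched in a few lines. This is an illustration built on several assumptions, not OM1's implementation:

- The bus is a plain in-memory queue.
- The "situational report" is just the sentences sorted by timestamp.
- `choose_action` is a hypothetical stub. It stands in for the LLM calls described above, using a single keyword rule in place of several models. A real system would send the report as a prompt and parse the model's reply.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List


@dataclass
class Sentence:
    timestamp: float
    source: str
    text: str


class NaturalLanguageBus:
    """Minimal in-memory bus: modules publish sentences, the fuser drains them."""

    def __init__(self) -> None:
        self._queue: Deque[Sentence] = deque()

    def publish(self, s: Sentence) -> None:
        self._queue.append(s)

    def drain(self) -> List[Sentence]:
        items = list(self._queue)
        self._queue.clear()
        return items


def fuse(sentences: List[Sentence]) -> str:
    """Weave the latest sentences into one time-ordered situational report."""
    ordered = sorted(sentences, key=lambda s: s.timestamp)
    lines = [f"- ({s.source}) {s.text}" for s in ordered]
    return "Current situation:\n" + "\n".join(lines)


def choose_action(report: str) -> str:
    """Hypothetical stub for an LLM call; a real system would prompt a model here."""
    if "go to the chair" in report:
        return "navigate_to(chair)"
    return "idle()"


bus = NaturalLanguageBus()
bus.publish(Sentence(2.0, "ASR", "The user says 'go to the chair.'"))
bus.publish(Sentence(1.0, "VLM", "A man is pointing at the chair in front."))

report = fuse(bus.drain())
print(report)
print("Next action:", choose_action(report))
```

Because the report is ordinary text, it stays readable by humans and by any language model. That readability is a large part of the appeal of a natural-language bus.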

This method has clear advantages. Robots from different manufacturers can collaborate seamlessly. OM1 forms a natural language-based abstraction layer over the hardware. Both Neo and Figure can understand the same natural language commands and perform the same tasks. Each manufacturer retains its proprietary hardware and systems, while OM1 enables them to collaborate freely with other robots.
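One way to picture this abstraction layer is the adapter pattern. The runtime emits the same high-level action, and each manufacturer supplies its own translation into hardware commands. The adapter classes and their command strings below are invented for illustration; they are not real Neo or Figure APIs. The naive string parsing also stands in for the LLM-based interpretation OM1 would perform.

```python
from abc import ABC, abstractmethod
from typing import List


class RobotAdapter(ABC):
    """Hardware-specific layer each manufacturer would implement (hypothetical)."""

    @abstractmethod
    def move_to(self, target: str) -> str:
        """Translate a shared high-level action into a vendor-specific command."""


class WheeledBaseAdapter(RobotAdapter):
    def move_to(self, target: str) -> str:
        return f"DRIVE target={target} speed=0.5"


class BipedAdapter(RobotAdapter):
    def move_to(self, target: str) -> str:
        return f"walk --goal {target} --gait normal"


def dispatch(command: str, fleet: List[RobotAdapter]) -> List[str]:
    """Very naive parser: 'go to the X' becomes move_to('X') on every robot."""
    prefix = "go to the "
    if not command.startswith(prefix):
        raise ValueError(f"unsupported command: {command!r}")
    target = command[len(prefix):]
    return [robot.move_to(target) for robot in fleet]


print(dispatch("go to the kitchen", [WheeledBaseAdapter(), BipedAdapter()]))
```

The manufacturers keep their proprietary command formats. Only the adapter has to know about them.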

In addition to enabling cross-manufacturer collaboration, OM1 also integrates other open-source models as runtime modules, rather than competing with them. When robots need precise operations, OM1 utilizes the Pi (Physical Intelligence) model. When multilingual speech recognition is required, OM1 adopts Meta's Omnilingual ASR model. OM1 combines modules based on the situation, providing high scalability and flexibility.
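Choosing modules by situation can be sketched as a simple registry keyed by capability. The capability names and placeholder functions below are hypothetical. In a real deployment, they would wrap models such as Physical Intelligence's Pi or Meta's Omnilingual ASR, whose actual interfaces are not shown here.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]


class ModuleRegistry:
    """Maps a capability name to whichever module currently provides it."""

    def __init__(self) -> None:
        self._modules: Dict[str, Handler] = {}

    def register(self, capability: str, handler: Handler) -> None:
        self._modules[capability] = handler

    def run(self, capability: str, payload: str) -> str:
        if capability not in self._modules:
            raise KeyError(f"no module registered for {capability!r}")
        return self._modules[capability](payload)


# Placeholders for external models; the returned strings are illustrative only.
def precise_manipulation(task: str) -> str:
    return f"[manipulation model] planning grasp for: {task}"


def multilingual_asr(audio_ref: str) -> str:
    return f"[multilingual ASR] transcribing: {audio_ref}"


registry = ModuleRegistry()
registry.register("manipulation", precise_manipulation)
registry.register("speech", multilingual_asr)

print(registry.run("manipulation", "fold the towel"))
print(registry.run("speech", "clip_042.wav"))
```

Swapping in a better model later takes a single `register` call. That is the kind of extensibility a plug-in runtime aims for.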

The advantages of OM1 go further. At its core, OM1 relies on LLMs, so robots do more than execute simple commands: they can understand context and make autonomous decisions.

Demonstration of picking up anything (using Figure's materials for clarity), Source: Figure

Let's look at a specific example. Multiple objects are placed in front of the robot. Someone requests, "Pick up an item related to the desert." Traditional robots would fail because "desert items" do not exist in predefined rules. OM1 is different. It understands conceptual relationships through LLMs. It independently infers the connection between "desert" and "cactus." It selects a cactus toy. OM1 lays the foundation for robot collaboration and makes individual robots smarter.
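The pattern behind this demo can be sketched in three steps:

1. Describe the visible objects to a language model.
2. Ask the model to pick one.
3. Refuse any answer that does not match an object actually on the table.

In the sketch below, `stub_llm` is a hypothetical stand-in with a hard-coded answer; a real system would call an actual model. The validation step is an assumed safeguard, not a documented OM1 feature.

```python
from typing import Callable, List


def build_prompt(request: str, objects: List[str]) -> str:
    listing = "\n".join(f"- {name}" for name in objects)
    return (
        f"Objects in front of the robot:\n{listing}\n"
        f"Request: {request}\n"
        "Answer with exactly one object name from the list."
    )


def pick_object(request: str, objects: List[str], ask_llm: Callable[[str], str]) -> str:
    answer = ask_llm(build_prompt(request, objects)).strip().lower()
    # Guard against hallucinated answers: only accept an object that is really there.
    for name in objects:
        if name.lower() == answer:
            return name
    raise ValueError(f"model chose {answer!r}, which is not on the table")


def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for a language model that links 'desert' to 'cactus'."""
    return "Cactus toy" if "desert" in prompt else "none"


table = ["apple", "cactus toy", "coffee mug"]
print(pick_object("Pick up an item related to the desert.", table, stub_llm))
```

The model supplies the conceptual leap from "desert" to "cactus". The surrounding code makes sure the robot only ever reaches for something it can actually see.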

3.2. FABRIC: A Network Connecting Distributed Robots as One

OM1 makes robots smarter and enables smooth communication between them. But beyond communication, there is another challenge. When different robots collaborate, how do they trust each other? The system must verify who performed which tasks and whether they were completed correctly.

Human society regulates behavior through laws and ensures compliance through contracts. These mechanisms allow people to safely transact and cooperate with strangers. The robot ecosystem needs the same mechanisms.

Source: OpenMind

OpenMind addresses this issue through "FABRIC," a blockchain-based network. FABRIC connects robots and coordinates their collaboration.

Let’s take a look at the core structure of FABRIC. FABRIC first assigns an "identity" to each robot. Every robot in the FABRIC network receives a unique identity based on ERC-7777 (Human-Robot Social Governance).

Registered robots share their location, task status, and environmental information with the network in real time, and they receive status updates from other robots. Like a situation board or a mini-map in a game, a shared map lets every robot track the others' locations and statuses in real time.
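The shared map can be illustrated as a "last writer wins" table keyed by robot ID:

- Each robot publishes status updates.
- Stale updates are ignored.
- Any robot can query where the others are.

This is a single-process sketch with assumed field names. It also assumes roughly synchronized clocks. The source does not describe FABRIC's real data model or networking.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Status:
    robot_id: str
    position: Tuple[float, float]  # metres in an agreed shared frame
    task: str
    timestamp: float               # sender's clock in seconds (assumed synchronized)


class SharedMap:
    """Keeps the newest status per robot, like a mini-map every robot can read."""

    def __init__(self) -> None:
        self._latest: Dict[str, Status] = {}

    def publish(self, status: Status) -> bool:
        current = self._latest.get(status.robot_id)
        if current is not None and current.timestamp >= status.timestamp:
            return False  # out-of-order or duplicate update; keep the newer one
        self._latest[status.robot_id] = status
        return True

    def others(self, robot_id: str) -> List[Status]:
        return [s for rid, s in sorted(self._latest.items()) if rid != robot_id]


board = SharedMap()
board.publish(Status("kitchen-bot", (1.0, 2.0), "cooking", 10.0))
board.publish(Status("mop-bot", (4.0, 0.5), "mopping", 10.5))
board.publish(Status("mop-bot", (9.9, 9.9), "mopping", 9.0))  # stale, ignored
for s in board.others("kitchen-bot"):
    print(s.robot_id, s.position, s.task)
```

With a table like this, the kitchen robot can see where the mopping robot is and plan around it. This addresses the collision problem described earlier.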

Simply sharing information is not enough. Robots may submit incorrect information. Sensor errors can occur and distort data. FABRIC utilizes the consensus mechanism of blockchain to ensure data reliability.

Consider a real scenario. Delivery robot A works with warehouse robot B to move goods. B reports that it is on the second floor. Nearby sensor robots and the elevator robot cross-check B's reported location, much as multiple nodes validate a transaction on a blockchain, and reach a consensus on where B actually is. Now suppose a sensor error makes B report the second floor when it is actually on the third. The verification step detects the discrepancy, the network records the corrected location, and robot A goes to the third floor.
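The cross-check in this scenario can be reduced to a quorum vote:

1. Collect independent observations of B's floor.
2. Accept the value that a strict majority agrees on.
3. Flag the self-report if it disagrees with that value.

This is a simplified stand-in for illustration only. It is not FABRIC's consensus protocol, and real blockchains use more robust fault-tolerant protocols. The witness names and the majority rule are assumptions.

```python
from collections import Counter
from typing import Dict, Optional, Tuple


def verify_location(self_report: int, witnesses: Dict[str, int]) -> Tuple[Optional[int], bool]:
    """Return (agreed_floor, self_report_ok).

    agreed_floor is None when no floor has a strict majority of witness votes.
    """
    if not witnesses:
        return None, False
    floor, votes = Counter(witnesses.values()).most_common(1)[0]
    if votes * 2 <= len(witnesses):
        return None, False  # no strict majority: cannot reach agreement
    return floor, floor == self_report


# Robot B claims floor 2, but most independent observers place it on floor 3.
agreed, ok = verify_location(
    self_report=2,
    witnesses={"sensor-robot-1": 3, "sensor-robot-2": 3, "elevator-robot": 3, "camera-7": 2},
)
print(agreed, ok)  # 3 False -> record floor 3 and send robot A there
```

A single faulty sensor, whether B's own or one witness's, cannot override the majority. This is the property the scenario relies on.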

FABRIC's role is not limited to verification; it also provides functions for the emerging machine economy. The first is privacy protection. Blockchain transparency builds trust, but a real robot ecosystem also needs privacy. FABRIC adopts a distributed structure, dividing the network into subnetworks by task or location and connecting them through a central server, which helps protect sensitive information. The solution is not perfect, and ongoing research aims to strengthen privacy protection.

FABRIC also provides a Machine Settlement Protocol (MSP), which automates escrow, verification, and settlement. Once the system verifies that a task is complete, it automatically settles payment in stablecoins and records all evidence on the blockchain. Beyond collaborating and building trust, robots will become autonomous economic entities that transact with one another.
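The settlement flow described here works in three stages: funds are held until the task is verified, then paid out, and every step is recorded. It can be sketched as a small state machine with an append-only, hash-chained log that stands in for on-chain records. Everything in this example is hypothetical: the class, the states, and the amounts are invented. No real stablecoin or blockchain is involved, and the source does not describe MSP's actual interface.

```python
import hashlib
import json
from typing import Dict, List


class EscrowTask:
    """Toy model of hold -> verify -> settle for one robot task (hypothetical)."""

    def __init__(self, payer: str, payee: str, amount_cents: int) -> None:
        self.payer, self.payee, self.amount_cents = payer, payee, amount_cents
        self.state = "FUNDED"  # payer's funds are locked until verification
        self.log: List[Dict[str, str]] = []
        self._append("funded", f"{payer} locked {amount_cents} cents")

    @staticmethod
    def _digest(prev: str, event: str, detail: str) -> str:
        body = json.dumps({"prev": prev, "event": event, "detail": detail}, sort_keys=True)
        return hashlib.sha256(body.encode()).hexdigest()

    def _append(self, event: str, detail: str) -> None:
        prev = self.log[-1]["hash"] if self.log else "0" * 64
        self.log.append({"event": event, "detail": detail, "prev": prev,
                         "hash": self._digest(prev, event, detail)})

    def submit_evidence(self, verified: bool) -> None:
        if self.state != "FUNDED":
            raise RuntimeError(f"cannot verify in state {self.state}")
        self.state = "VERIFIED" if verified else "REFUNDED"
        self._append("verification", "passed" if verified else "failed, refunded")

    def settle(self) -> None:
        if self.state != "VERIFIED":
            raise RuntimeError(f"cannot settle in state {self.state}")
        self.state = "SETTLED"
        self._append("settled", f"paid {self.amount_cents} cents to {self.payee}")

    def log_is_intact(self) -> bool:
        """Recompute every hash; editing any past entry breaks the chain."""
        prev = "0" * 64
        for entry in self.log:
            if entry["prev"] != prev or entry["hash"] != self._digest(prev, entry["event"], entry["detail"]):
                return False
            prev = entry["hash"]
        return True


task = EscrowTask(payer="delivery-robot-A", payee="warehouse-robot-B", amount_cents=250)
task.submit_evidence(verified=True)
task.settle()
print(task.state, [e["event"] for e in task.log], task.log_is_intact())
```

Payment can only move forward after verification succeeds. Because each log entry commits to the hash of the previous one, tampering with the evidence afterwards is detectable.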

4. What If: A Look at Future Daily Life Through OpenMind

4.1. A New World: A Utopia with Robots

We have long dreamed of a "machine economy" where robots directly participate in economic activities. Robots independently judge, order goods, collaborate with other robots, and exchange value. OpenMind is now turning this dream into reality.

Source: OpenMind

What kind of daily life will unfold? OpenMind's demonstration video offers a glimpse. You tell the robot, "Please buy me lunch." The robot goes to the store, confirms the order, pays directly with cryptocurrency, and brings the food back. This may look simple, but it is significant: robots are no longer just executing commands in predefined environments. They are becoming autonomous economic entities that judge and act independently.

Imagination can go further. Beyond transactions between humans and robots, transactions between robots will also emerge. For example, a household humanoid robot runs out of supplies while doing chores. It orders products from a nearby supermarket robot on its own, and a smart contract is generated automatically in the process. The supermarket robot delivers the products, and the household robot checks the items and settles payment in stablecoins.

New forms of value exchange that have never existed before will emerge. Delivery robots calculate the best route to their destination. They request real-time data from traffic robots and pay a small fee in return. Even small daily collaborations become transactions.

4.2. A Dangerous World: A Dystopia with Robots

Robots are no longer confined to science fiction movies. In China, consumers spend about $1,000 on robotic dogs (Unitree Go2) and about $12,000 on humanoid robots (Engine AI PM01). Large-scale adoption is accelerating rapidly.

Source: WhistlinDiesel

The growing number of robots in daily life is not the main concern. The real issue is that robots' judgment remains limited and their safety is not guaranteed. If a robot misjudges a situation and makes a dangerous decision, it can directly harm people, and the harm could be catastrophic rather than a minor accident.

OpenMind is tackling this issue directly. It assigns each robot a unique identity through the ERC-7777 standard and uses that identity as a protective barrier. For example, a robotic dog might receive the identity "human friend and protector," which is meant to prevent it from attacking or harming humans and to keep it acting in a friendly, safe manner. Robots continuously reconfirm their identity and role, which guards against inappropriate behavior.
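An identity that acts as a guardrail can be sketched as a role carrying explicit rules, checked before every action is executed. The role name, action labels, and field layout below are made up for this example. The source does not specify how ERC-7777 encodes rules, so this should not be read as the standard's data format.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class RobotIdentity:
    robot_id: str
    role: str
    allowed_actions: FrozenSet[str]  # hypothetical allow-list tied to the role


def authorize(identity: RobotIdentity, action: str) -> bool:
    """Re-check the role before each action; anything not explicitly allowed is denied."""
    return action in identity.allowed_actions


dog = RobotIdentity(
    robot_id="dog-001",
    role="human friend and protector",
    allowed_actions=frozenset({"follow_owner", "fetch_ball", "sit", "alert_owner"}),
)

for action in ["follow_owner", "alert_owner", "lunge_at_person"]:
    print(action, "->", "allowed" if authorize(dog, action) else "denied")
```

This sketch uses an allow-list, denying by default, rather than a list of forbidden actions. As a result, an action nobody anticipated is refused instead of permitted.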

OpenMind goes further. It is collaborating with AIM Intelligence to develop a "Physical AI Security Layer" that guards against robot hallucinations and defends against external intrusions and attacks. Consider a robot that starts to move while holding a sharp object, with a child standing nearby. The system recognizes a "risk of injury" and immediately halts the operation.
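The sharp-object example reduces to a pre-motion check. If the robot is holding something flagged as hazardous and any person classified as a child is within a safety radius, the robot halts. The hazard list, the 1.5 m radius, and the data shapes are assumptions chosen for illustration. The source does not describe how the AIM Intelligence layer actually works.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

HAZARDOUS = {"knife", "scissors", "glass shard"}  # assumed hazard list
CHILD_SAFETY_RADIUS_M = 1.5                        # assumed threshold in metres


@dataclass
class Person:
    position: Tuple[float, float]  # metres in a shared frame
    is_child: bool


def check_motion(held_object: Optional[str], robot_pos: Tuple[float, float],
                 people: List[Person]) -> str:
    """Return 'HALT' when a hazardous object would be carried near a child."""
    if held_object not in HAZARDOUS:
        return "PROCEED"
    for p in people:
        if p.is_child and math.dist(robot_pos, p.position) < CHILD_SAFETY_RADIUS_M:
            return "HALT"
    return "PROCEED"


people = [Person((0.8, 0.6), is_child=True), Person((5.0, 5.0), is_child=False)]
print(check_motion("knife", (0.0, 0.0), people))  # child is about 1.0 m away -> HALT
print(check_motion("towel", (0.0, 0.0), people))  # not hazardous -> PROCEED
```

A production system would also have to handle perception uncertainty, such as misclassified objects or people. This sketch does not attempt that.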

5. OpenMind: Building Tomorrow's Robot Society

OpenMind has moved beyond the research phase. It is ready to drive a substantial transformation in the robotics industry.

At the core is founder Jan Liphardt, a former professor of biophysics at Stanford University. He has studied coordination and cooperation mechanisms in complex systems and now designs the structures that let robots make autonomous judgments and collaborate. He leads OpenMind's overall technology development.

Source: OpenMind

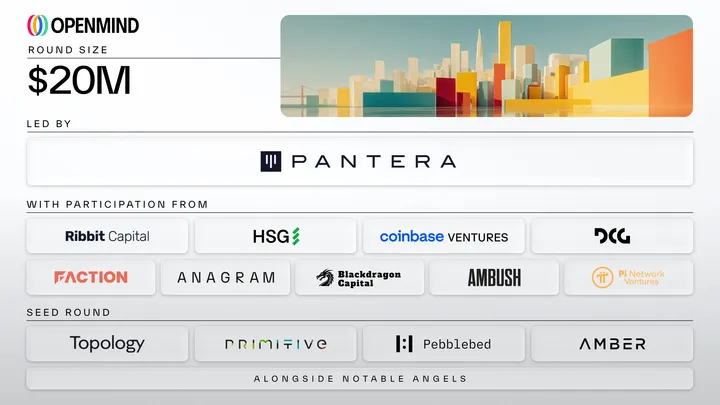

This technological leadership has attracted $20 million in funding led by Pantera Capital. The round gives OpenMind a financial foundation for technology development and ecosystem expansion, and the resources to pursue its vision.

Market response has been positive. Major hardware companies, including Unitree, DEEP Robotics, Dobot, and UBTECH, are adopting OM1 as their core technology stack. The collaboration network is rapidly expanding.

However, challenges remain. The FABRIC network is still in the preparation stage. Unlike digital environments, the physical world has many more variables: robots must operate in unpredictable real-world settings rather than controlled laboratories, which greatly increases complexity.

Robot collaboration and safety are long-term problems that call for long-term solutions. It is worth watching how OpenMind addresses them and what role it comes to play in the robot ecosystem.
