Jensen Huang made a big announcement at the 2025 GTC.

At the 2025 GTC, Jensen Huang revealed a major piece of news: NVIDIA will invest $1 billion in Nokia. Yes, that Nokia, the mobile phone company that was all the rage 20 years ago with its Symbian phones.

In his speech, Huang said that telecom networks are undergoing a major shift from traditional architectures to AI-native systems, and that NVIDIA's investment will accelerate this transition. Under the partnership, NVIDIA and Nokia will build an AI platform for 6G networks that integrates AI into the traditional RAN.

Under the terms of the deal, NVIDIA will subscribe to approximately 166 million newly issued Nokia shares at $6.01 per share, giving it a stake of about 2.9% in Nokia.
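These deal terms can be sanity-checked with a few lines of arithmetic. The minimal Python sketch below uses only the figures reported in the article: multiplying the share count by the price should land near the headline $1 billion, and dividing the share count by the 2.9% stake gives the post-deal share count that the stake implies. Because both inputs are rounded ("approximately 166 million", "about 2.9%"), the implied share count is an estimate, not a reported figure.

```python
# Figures as reported in the article; the implied share count is derived, not reported.
NEW_SHARES = 166_000_000   # approx. new Nokia shares subscribed by NVIDIA
PRICE_USD = 6.01           # subscription price per share
STAKE = 0.029              # approx. NVIDIA ownership after the deal


def deal_size(shares: int, price: float) -> float:
    """Total cash paid for a share subscription."""
    return shares * price


def implied_total_shares(shares: int, stake: float) -> float:
    """Post-deal share count implied by holding `shares` at fraction `stake`."""
    return shares / stake


total = deal_size(NEW_SHARES, PRICE_USD)
print(f"Deal size: ${total / 1e9:.2f} billion")
print(f"Implied shares outstanding: {implied_total_shares(NEW_SHARES, STAKE) / 1e9:.2f} billion")
```

Running it prints a deal size of $1.00 billion and an implied share count of roughly 5.72 billion, consistent with the "$1 billion for about 2.9%" headline.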

When the partnership was announced, Nokia's share price jumped 21%, its largest single-day gain since 2013.

01 What is AI-RAN?

RAN stands for Radio Access Network, and AI-RAN is a new network architecture that embeds AI computing capabilities directly into wireless base stations. Traditional RAN systems primarily handle data transmission between base stations and mobile devices, while AI-RAN adds edge computing and intelligent processing capabilities on top of that.

This allows base stations to apply AI algorithms to optimize spectrum utilization and energy efficiency, improving overall network performance, while also utilizing idle RAN assets to host edge AI services, creating new revenue streams for operators.

Operators can run AI applications directly at the base station site instead of sending all data back to a central data center for processing, which significantly reduces the load on the network.

Huang offered an example: nearly 50% of ChatGPT users access it on mobile devices, and ChatGPT's monthly mobile downloads exceed 40 million. In an era of explosive growth in AI applications, he argued, traditional RAN systems cannot cope with mobile networks dominated by generative AI and agent traffic.

By providing distributed AI inference at the edge, AI-RAN allows upcoming AI applications, such as agents and chatbots, to respond more quickly. AI-RAN also lays the groundwork for integrated sensing and communication (ISAC) applications in the 6G era.

Huang cited a forecast from analyst firm Omdia, which predicts that the RAN market will exceed $200 billion by 2030, with the AI-RAN segment becoming the fastest-growing subfield.

Nokia's President and CEO, Pekka Lundmark, stated in a joint announcement that this partnership will put AI data centers in everyone's pockets, fundamentally redesigning the transition from 5G to 6G.

He noted that Nokia is working with NVIDIA, Dell, and T-Mobile, three very different types of companies. T-Mobile, one of the first partners, will begin field-testing AI-RAN technology in 2026 to validate gains in performance and efficiency. Lundmark said the trials will provide valuable data for 6G innovation, helping operators build intelligent networks that meet the demands of AI.

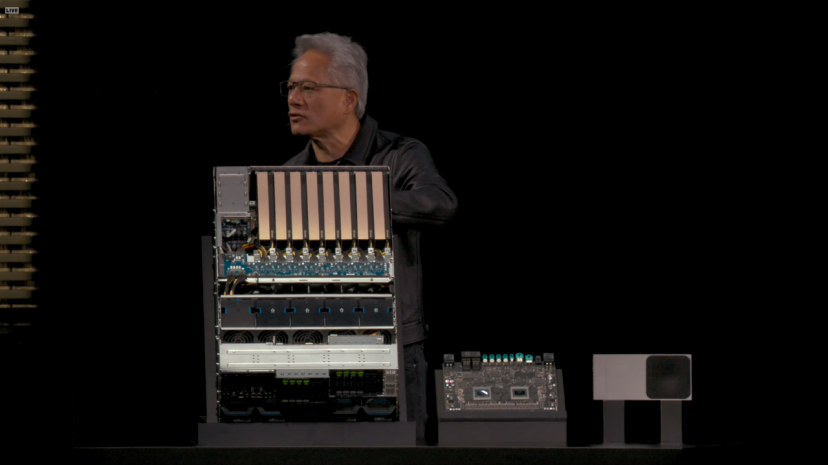

Building on AI-RAN, NVIDIA launched a new product called Aerial RAN Computer Pro (ARC-Pro), a 6G-ready accelerated computing platform. Its core hardware combines two types of NVIDIA processors: the Grace CPU and the Blackwell GPU.

The platform runs on NVIDIA CUDA, so RAN software can be embedded directly into the CUDA software stack. As a result, it can handle traditional radio access network functions while running mainstream AI applications at the same time. This is NVIDIA's core approach to putting the "AI" into AI-RAN.

Because the platform is built on the mature, long-established CUDA ecosystem, its greatest advantage is programmability. Huang also announced that the Aerial software framework will be open-sourced, with a release on GitHub under the Apache 2.0 license expected starting in December 2025.

The main difference between ARC-Pro and its predecessor, ARC, lies in deployment location and application scenarios. The previous ARC was mainly used for centralized cloud RAN implementations, while ARC-Pro can be deployed directly at the base station site, enabling true edge computing capabilities.

Ronnie Vasishta, head of NVIDIA's telecom business, said that RAN and AI previously required two separate sets of hardware, whereas ARC-Pro can dynamically allocate computing resources according to network needs, giving priority to radio access functions and running AI inference tasks during idle periods.
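The "RAN first, AI in the gaps" policy Vasishta describes can be illustrated with a toy scheduler. The Python sketch below is hypothetical and is not NVIDIA's scheduler: it assumes GPU capacity is counted in abstract "compute units" per time slot, serves the RAN load first in every slot, and hands whatever is left to a FIFO queue of AI inference jobs. The names `AIJob` and `schedule` are invented for illustration.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class AIJob:
    name: str
    remaining: int  # hypothetical compute units still needed


def schedule(capacity: int, ran_demand: list[int], jobs: list[AIJob]) -> list[dict]:
    """RAN-first allocation: each time slot serves RAN load first, then hands
    any leftover capacity to queued AI inference jobs in FIFO order."""
    queue = deque(jobs)
    log = []
    for slot, demand in enumerate(ran_demand):
        ran_units = min(demand, capacity)  # radio processing always wins
        spare = capacity - ran_units
        served = []
        while spare > 0 and queue:
            job = queue[0]
            used = min(spare, job.remaining)
            job.remaining -= used
            spare -= used
            served.append((job.name, used))
            if job.remaining == 0:
                queue.popleft()  # job finished; move on to the next one
        log.append({"slot": slot, "ran": ran_units, "ai": served, "idle": spare})
    return log


if __name__ == "__main__":
    jobs = [AIJob("chatbot", 5), AIJob("video-analytics", 4)]
    for entry in schedule(capacity=10, ran_demand=[9, 4, 10, 2], jobs=jobs):
        print(entry)
```

In this run, AI work squeezes into the single spare unit of slot 0, pauses entirely in slot 2 when radio traffic uses all 10 units, and finishes in slot 3, leaving 6 units idle. A real system would also have to meet strict radio timing deadlines, which this sketch ignores.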

ARC-Pro also integrates NVIDIA's AI Aerial platform, which is a complete software stack that includes CUDA-accelerated RAN software, Aerial Omniverse digital twin tools, and the new Aerial Framework. The Aerial Framework can convert Python code into high-performance CUDA code to run on the ARC-Pro platform. Additionally, the platform supports AI-driven neural network models for advanced channel estimation.
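For readers unfamiliar with channel estimation: a receiver compares known "pilot" symbols with what actually arrives in order to infer how the radio channel distorted the signal, using the model y = h·x + noise on each subcarrier. The classical least-squares (LS) estimate, which neural-network estimators aim to improve on, fits in a few lines. The Python sketch below is a textbook baseline, not the Aerial implementation; the pilot positions, symbols, and channel values are hypothetical, and the example is noise-free so the result is easy to check.

```python
def ls_estimate(received: list[complex], pilots: list[complex]) -> list[complex]:
    """Least-squares estimate on each pilot subcarrier: h_hat = y / x."""
    return [y / x for y, x in zip(received, pilots)]


def interpolate(pilot_idx: list[int], h_pilot: list[complex], n_sub: int) -> list[complex]:
    """Linearly interpolate pilot estimates across n_sub subcarriers;
    subcarriers outside the pilot range reuse the nearest pilot estimate."""
    out = []
    for k in range(n_sub):
        if k <= pilot_idx[0]:
            out.append(h_pilot[0])
        elif k >= pilot_idx[-1]:
            out.append(h_pilot[-1])
        else:
            # locate the pair of pilots that brackets subcarrier k
            j = max(i for i in range(len(pilot_idx)) if pilot_idx[i] <= k)
            t = (k - pilot_idx[j]) / (pilot_idx[j + 1] - pilot_idx[j])
            out.append((1 - t) * h_pilot[j] + t * h_pilot[j + 1])
    return out


# Hypothetical example: pilots on subcarriers 0 and 4, noise-free channel.
true_h = [1 + 0j, 0.5 + 0.5j]
pilot_symbols = [1 + 0j, -1 + 0j]
received = [h * x for h, x in zip(true_h, pilot_symbols)]

h_pilot = ls_estimate(received, pilot_symbols)
h_all = interpolate([0, 4], h_pilot, n_sub=5)
print(h_all)
```

With noise present, LS estimates become noisy and simple interpolation misses fine channel structure. That gap is what learned estimators target.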

Huang stated that telecommunications are the digital nervous system of the economy and security. Collaborating with Nokia and the telecom ecosystem will ignite this revolution, helping operators build intelligent, adaptive networks that define the next generation of global connectivity.

02 Across 2025, NVIDIA has been investing heavily.

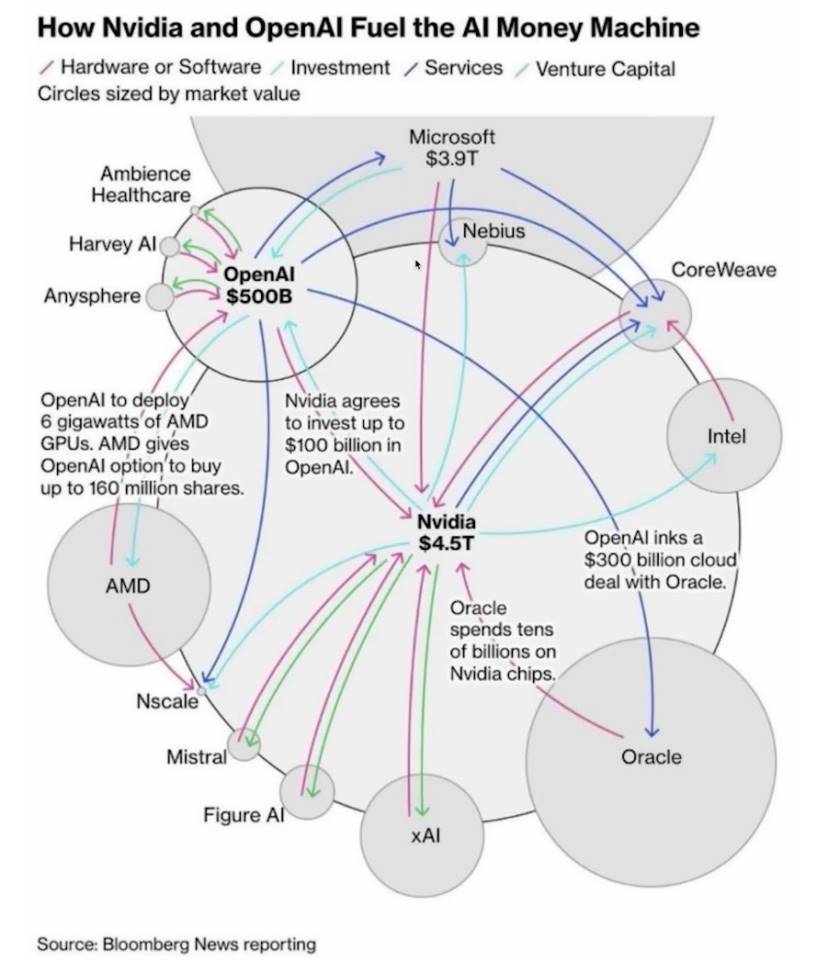

On September 22, NVIDIA and OpenAI announced a partnership under which NVIDIA plans to invest up to $100 billion in OpenAI in stages, accelerating OpenAI's infrastructure build-out.

Huang mentioned that OpenAI had sought investment from NVIDIA long ago, but NVIDIA had limited funds at the time. He joked that they had been too poor back then, and that they should have given OpenAI all their money.

Huang believes AI inference will grow not by 100 times or 1,000 times, but by a billion times. He added that the collaboration goes beyond hardware to include software optimization, ensuring that OpenAI can use NVIDIA's systems efficiently.

The emphasis on software may reflect Huang's awareness of OpenAI's collaboration with AMD and a concern that OpenAI could move away from CUDA. If the world's leading foundation-model developer stopped using CUDA, other major model vendors could reasonably follow suit.

On the BG2 podcast, Huang predicted that OpenAI is likely to become the next trillion-dollar company, growing at a record-setting pace. He dismissed talk of an AI bubble, arguing that global capital expenditure on AI infrastructure will reach $5 trillion a year.

Against the backdrop of this investment, OpenAI announced on October 29 that it had completed its corporate recapitalization, splitting into two parts: a non-profit foundation and a for-profit company.

The non-profit foundation will legally control the for-profit arm, which must take the public interest into account but remains free to raise capital and make acquisitions. The foundation will own 26% of the for-profit company and hold a warrant, so it can receive additional shares if the company continues to grow.

Beyond OpenAI, NVIDIA also invested in Musk's xAI in 2025. xAI's current funding round has been expanded to $20 billion, with roughly $7.5 billion raised as equity and up to $12.5 billion raised as debt through special purpose vehicles (SPVs).

The SPV will use the funds it raises to buy NVIDIA's high-performance processors and then lease them to xAI.

These processors will power xAI's Colossus 2 project. The first-generation Colossus is xAI's supercomputing data center in Memphis, Tennessee; it deployed 100,000 NVIDIA H100 GPUs, making it one of the largest AI training clusters in the world. xAI is now building Colossus 2, which is planned to scale to hundreds of thousands of GPUs or more.

On September 18, NVIDIA also announced a $5 billion investment in Intel as part of a deep strategic partnership. NVIDIA will buy newly issued Intel common stock at $23.28 per share; once the transaction closes, it will hold about 4% of Intel, making it a major strategic investor.
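The same back-of-the-envelope check works for the Intel deal. This short Python sketch uses only the article's figures; the resulting share counts are derived estimates (the 4% stake is itself approximate), not numbers from the deal announcement.

```python
# Reported figures; outputs below are derived estimates.
INVESTMENT_USD = 5_000_000_000   # total investment
PRICE_USD = 23.28                # price per new Intel share
STAKE = 0.04                     # approx. ownership after closing

shares = INVESTMENT_USD / PRICE_USD   # new shares NVIDIA receives
implied_total = shares / STAKE        # share count that a 4% stake implies

print(f"New Intel shares: {shares / 1e6:.1f} million")
print(f"Implied shares outstanding: {implied_total / 1e9:.2f} billion")
```

It works out to about 214.8 million new shares, implying roughly 5.37 billion Intel shares outstanding after the deal.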

03 Of course, Huang also discussed many other topics at this GTC.

For instance, NVIDIA launched several open-source AI model families, including Nemotron for digital AI, Cosmos for physical AI, Isaac GR00T for robotics, and Clara for biomedical AI.

At the same time, Huang introduced the DRIVE AGX Hyperion 10 autonomous driving development platform. This is a platform aimed at Level 4 autonomous driving, integrating NVIDIA's computing chips and a complete sensor suite, including LiDAR, cameras, and radar.

NVIDIA also launched the Halos certification program, the industry's first system for assessing and certifying the safety of physical AI, specifically targeting autonomous vehicles and robotics technology.

At the core of the Halos certification program is the NVIDIA Halos AI Systems Inspection Lab, the first laboratory in the industry to receive ANSI accreditation. ANSI is the American National Standards Institute, and its accreditation carries considerable authority and credibility.

The lab's mission is to use NVIDIA's physical AI technology to assess whether autonomous driving systems meet safety standards. AUMOVIO, Bosch, Nuro, and Wayve are among the first members of the Halos AI Systems Inspection Lab.

To promote Level 4 autonomous driving, NVIDIA released a multimodal autonomous driving dataset from 25 countries, containing 1,700 hours of camera, radar, and LiDAR data.

Huang stated that the value of this dataset lies in its diversity and scale, covering different road conditions, traffic rules, and driving cultures, providing a foundation for training more generalized autonomous driving systems.

However, Huang's vision goes far beyond this.

At GTC, he announced a series of collaborations with U.S. government laboratories and leading companies to build out AI infrastructure in the United States. Huang said we are at the dawn of an AI industrial revolution that will define the future of every industry and country.

The centerpiece is the collaboration with the U.S. Department of Energy: NVIDIA is helping the department build supercomputers at two sites, Argonne National Laboratory and Los Alamos National Laboratory.

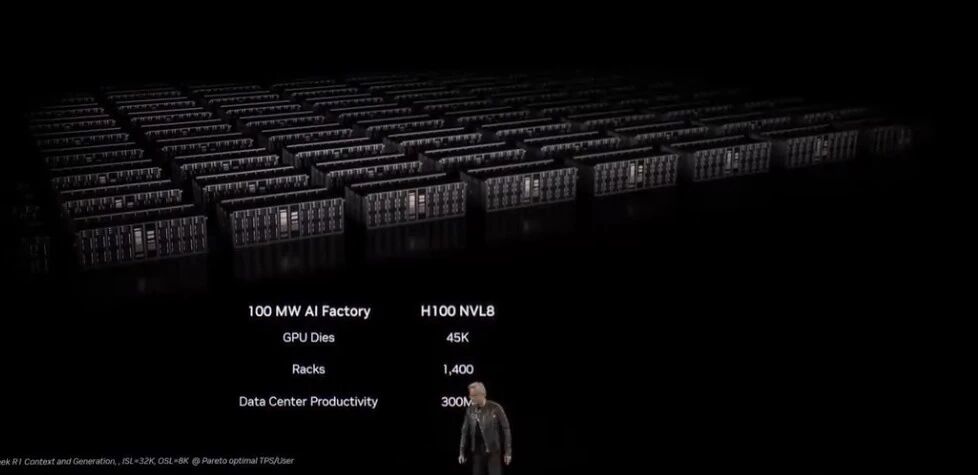

Argonne National Laboratory will receive a supercomputer named Solstice with 100,000 NVIDIA Blackwell GPUs. What do 100,000 GPUs mean? Solstice will be the largest AI supercomputer in the Department of Energy's history. A second system, Equinox, with 10,000 Blackwell GPUs, is expected to come online in 2026. Together, the two systems will deliver 2,200 exaflops of AI computing performance.
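Dividing the combined figure by the GPU count shows what each GPU contributes. The Python sketch below uses only the article's numbers. The article does not say which numerical precision the "AI exaflops" figure refers to; such AI performance figures are usually quoted at low precision, so the per-GPU result should not be compared with double-precision (FP64) supercomputer rankings.

```python
SOLSTICE_GPUS = 100_000
EQUINOX_GPUS = 10_000
TOTAL_AI_EXAFLOPS = 2_200   # combined figure quoted for both systems

total_gpus = SOLSTICE_GPUS + EQUINOX_GPUS
# 1 exaflop = 1,000 petaflops
per_gpu_petaflops = TOTAL_AI_EXAFLOPS * 1_000 / total_gpus
print(f"{total_gpus:,} GPUs -> about {per_gpu_petaflops:.0f} petaflops of AI compute per GPU")
```

The result is about 20 petaflops per Blackwell GPU across the 110,000 GPUs.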

Paul Kearns, director of Argonne National Laboratory, said these systems will redefine performance, scalability, and scientific potential. What will the computing power be used for? Work ranging from materials science and climate modeling to quantum computing and nuclear weapons simulation demands this level of capability.

Beyond the government laboratories, NVIDIA has also built an AI Factory Research Center in Virginia. What makes the center special is that it is not just a data center but a proving ground: NVIDIA plans to use it to test Omniverse DSX, a blueprint for building gigawatt-scale AI factories.

A typical data center might only require tens of megawatts of power, while a gigawatt is equivalent to the power output of a medium-sized nuclear power plant.

The core idea of the Omniverse DSX blueprint is to turn the AI factory into a self-learning system. AI agents will continuously monitor power, cooling, and workloads, automatically adjusting parameters to improve efficiency. For example, when the grid load is high, the system can automatically reduce power consumption or switch to battery storage for power supply.
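The grid-stress example above can be written as a simple decision rule. The Python sketch below is a hypothetical, rule-based stand-in for the AI agents the blueprint describes; it is not part of Omniverse DSX. The input signals, the thresholds (`high_grid`, `min_battery`), and the 80% power cap are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class SiteState:
    grid_load: float       # fraction of grid capacity in use (0..1), hypothetical signal
    battery_charge: float  # battery state of charge (0..1)
    it_power_mw: float     # current IT power draw in megawatts


def decide(state: SiteState, high_grid: float = 0.9,
           min_battery: float = 0.2, cap_fraction: float = 0.8) -> dict:
    """Toy policy: run normally when the grid is healthy; under grid stress,
    switch to battery if it has charge, otherwise cap the site's power draw."""
    if state.grid_load < high_grid:
        return {"action": "normal", "power_mw": state.it_power_mw}
    if state.battery_charge > min_battery:
        return {"action": "battery", "power_mw": state.it_power_mw}
    return {"action": "cap", "power_mw": state.it_power_mw * cap_fraction}


# A monitoring loop would call decide() on each new reading.
readings = [
    SiteState(grid_load=0.70, battery_charge=0.9, it_power_mw=900.0),
    SiteState(grid_load=0.95, battery_charge=0.9, it_power_mw=900.0),
    SiteState(grid_load=0.95, battery_charge=0.1, it_power_mw=900.0),
]
for reading in readings:
    print(decide(reading))
```

A learning system would tune these thresholds from data, trading off cooling and workload deadlines, rather than hard-coding them.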

This intelligent management is crucial for gigawatt-level facilities, as electricity and cooling costs can be astronomical.
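To make "astronomical" concrete, here is a rough annual energy and electricity estimate for a 1-gigawatt site. This Python sketch makes two loud assumptions not in the article: the facility runs at full load all year, and electricity costs a hypothetical $0.05 per kWh. The 30 MW comparison point is likewise just one illustrative value for "tens of megawatts".

```python
HOURS_PER_YEAR = 24 * 365   # 8,760 hours
facility_gw = 1.0           # gigawatt-scale AI factory
typical_dc_mw = 30.0        # hypothetical "tens of megawatts" data center
price_per_kwh = 0.05        # hypothetical electricity price, USD per kWh

annual_kwh = facility_gw * 1e6 * HOURS_PER_YEAR   # 1 GW = 1,000,000 kW, full load assumed
annual_cost = annual_kwh * price_per_kwh

print(f"Scale vs typical DC: {facility_gw * 1000 / typical_dc_mw:.0f}x")
print(f"Energy at full load: {annual_kwh / 1e9:.2f} TWh/year")
print(f"Electricity bill: ${annual_cost / 1e6:,.0f} million/year")
```

Under these assumptions, the site draws about 33 times as much power as the example data center, consumes about 8.76 TWh a year, and pays roughly $438 million a year for electricity alone. Every 1% efficiency gain is then worth several million dollars annually.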

The vision is ambitious, and Huang said it will take three years to realize. AI-RAN field testing will not begin until 2026, autonomous vehicles based on DRIVE AGX Hyperion 10 will not reach the road until 2027, and the Department of Energy's supercomputers are likewise slated to come online in 2027.

With CUDA as its ace in the hole, NVIDIA holds the de facto standard for AI computing. From training to inference, from data centers to edge devices, and from autonomous driving to biomedicine, NVIDIA's GPUs are everywhere. The investments and collaborations announced at this GTC further solidify that position.

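Disclaimer: This article represents only the author's personal views and does not represent the position or views of this platform. It is provided for information sharing only and does not constitute investment advice to anyone. Any dispute between users and the author is unrelated to this platform. If any article or image published on this page infringes your rights, please send proof of rights and proof of identity by email to support@aicoin.com, and the platform's staff will review it.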