OpenAI unveiled GDPval on Thursday, a benchmark that tries to gauge, qualitatively, whether AI can do your actual job.

These are not hypothetical exam questions but real deliverables: legal briefs, engineering blueprints, nursing care plans, and financial reports. In other words, this is the kind of work that pays mortgages. The researchers deliberately focused on occupations where at least 60% of tasks are computer-based, roles they describe as “predominantly digital.”

That scope covers professional services such as software developers, lawyers, accountants, and project managers; finance and insurance positions like analysts and customer service reps; and information-sector jobs ranging from journalists and editors to producers and AV technicians. Healthcare administration, white-collar manufacturing roles, and sales or real estate managers also feature prominently.

Within that set, the work most exposed to AI overlaps with the kinds of digital, knowledge-intensive activities that large language models already handle well:

- Software development, which represents the largest wage pool in the dataset, stands out as especially vulnerable.

- Legal and accounting work, with its heavy reliance on documents and structured reasoning, is also high on the list, as are financial analysts and customer service representatives.

- Content production roles—editors, journalists, and other media workers—face similar pressures given AI’s growing fluency in language and multimedia generation.

The absence of manual and physical labor jobs in the study highlights its boundaries: GDPval was not designed to measure exposure in fields like construction, maintenance, or agriculture. Instead, it underscores the point that the first wave of disruption is likely to strike white-collar, office-based jobs—the very kinds of work once assumed to be most insulated from automation.

The report builds on a two-year-old OpenAI/University of Pennsylvania study which estimated that around 80% of U.S. workers could see at least 10% of their tasks affected by LLMs, and that around 19% of workers could see at least 50% of their tasks affected. The most imperiled (or transformed) jobs are white-collar, knowledge-heavy ones, especially in law, writing, analysis, and customer interaction.

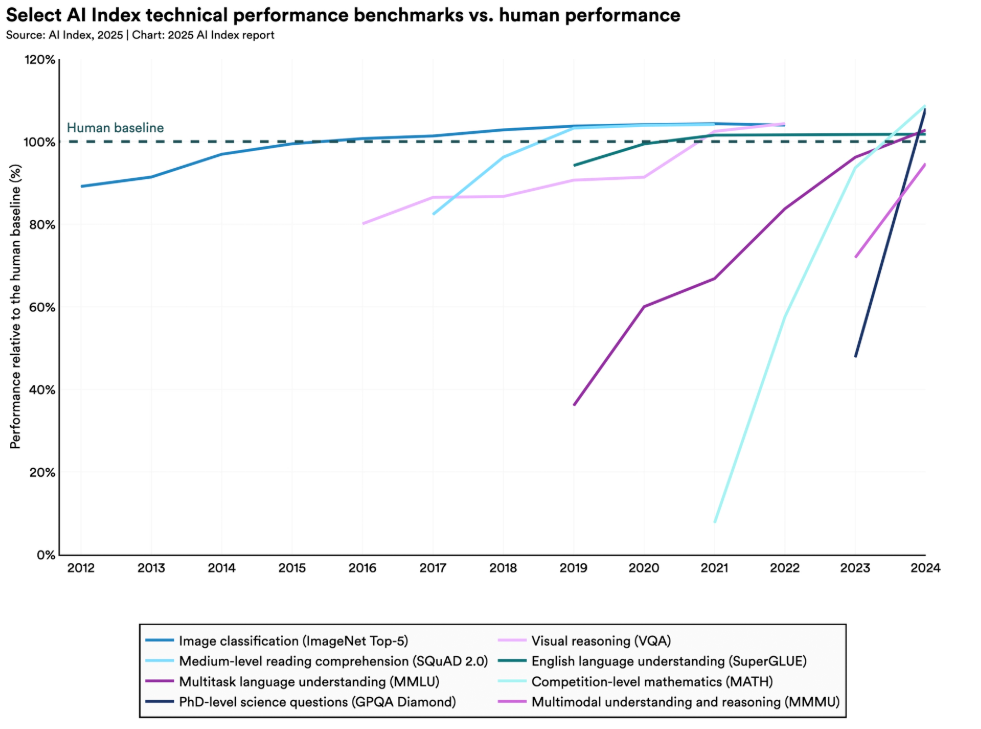

But the unsettling part isn't today's numbers. It's the trajectory. If the current pace holds, the numbers suggest AI could match human experts across the board by 2027. That would come close to many definitions of AGI, and it could mean that even tasks considered too sensitive or too specialized to automate soon become accessible to machines, forcing rapid changes in the workplace.

OpenAI tested 1,320 tasks across 44 occupations—not random jobs, but roles in the nine sectors that drive most of America's GDP. Software developers, lawyers, nurses, financial analysts, journalists, engineers: the people who thought their degrees would protect them from automation.

Each task came from professionals with an average of 14 years of experience: not interns or recent grads, but seasoned experts who know their craft. The tasks weren't simple either. They averaged seven hours of work, and some stretched across multiple weeks of effort.

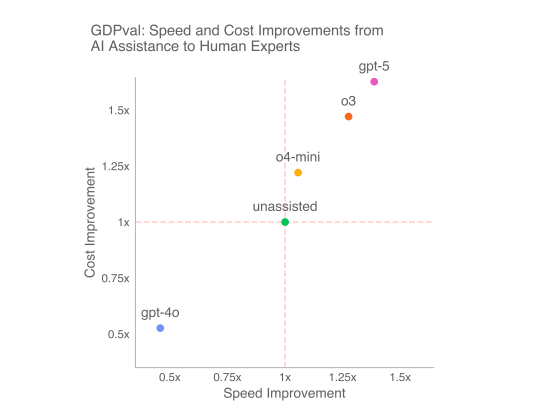

According to OpenAI, the models completed these tasks up to 100 times faster and significantly cheaper than the human experts, though those figures reflect only model inference time and API billing rates. That speed edge is to be expected; computers have outpaced people on raw throughput for decades. On more specialized tasks, the improvement was smaller but still noticeable.

Even accounting for review time and the occasional do-over when the AI hallucinated something bizarre, the economics tilt hard toward automation.

But cheer up: Just because a job is exposed doesn’t mean it disappears. It may be augmented (for instance, lawyers and journalists using LLMs to write faster) rather than replaced.

And for all of AI's progress, hallucinations are still a pain for businesses. The research shows AI frequently stumbling on instruction-following: 35% of GPT-5's losses came from not fully grasping what was asked. Formatting errors accounted for another 40% of failures.

The study also left out collaboration, client interaction, and anything requiring genuine accountability, areas where models still struggle. Nobody's suing an AI for malpractice yet. But for solo digital deliverables (the reports, presentations, and analyses that fill most knowledge workers' days), the gap is closing fast.

OpenAI admits that GDPval today covers a very limited number of tasks people do in their real jobs. The benchmark can't measure interpersonal skills, physical presence, or the thousand micro-decisions that make someone valuable beyond their deliverables.

Still, when investment banks start comparing AI-generated competitor analyses to those from human analysts, when hospitals evaluate AI nursing care plans against those from experienced nurses, and when law firms test AI briefs against associate work—that's not speculation anymore. That's measurement.
