Embodied Intelligence x Web3: Structural Solutions Drive Investable Opportunities

Author: merakiki

Translation: Deep Tide TechFlow

For decades, the application of robotics has been very narrow, primarily focused on performing repetitive tasks in structured factory environments. However, today's artificial intelligence (AI) is revolutionizing the robotics field, enabling robots to understand and execute user instructions while adapting to dynamically changing environments.

We are entering a rapidly growing new era. According to Citibank's forecast, by 2035, there will be 1.3 billion robots deployed globally, with applications expanding from factories to homes and the service industry. Meanwhile, Morgan Stanley predicts that the humanoid robot market alone could reach a scale of 5 trillion dollars by 2050.

While this expansion unleashes tremendous market potential, it also comes with significant challenges related to centralization, trust, privacy, and scalability. Web3 technology offers transformative solutions to these issues by supporting decentralized, verifiable, privacy-preserving, and collaborative robotic networks.

In this article, we will delve into the evolving AI robotics value chain, with a particular focus on the humanoid robotics sector, and reveal the compelling opportunities brought about by the integration of AI robots and Web3 technology.

AI Robotics Value Chain

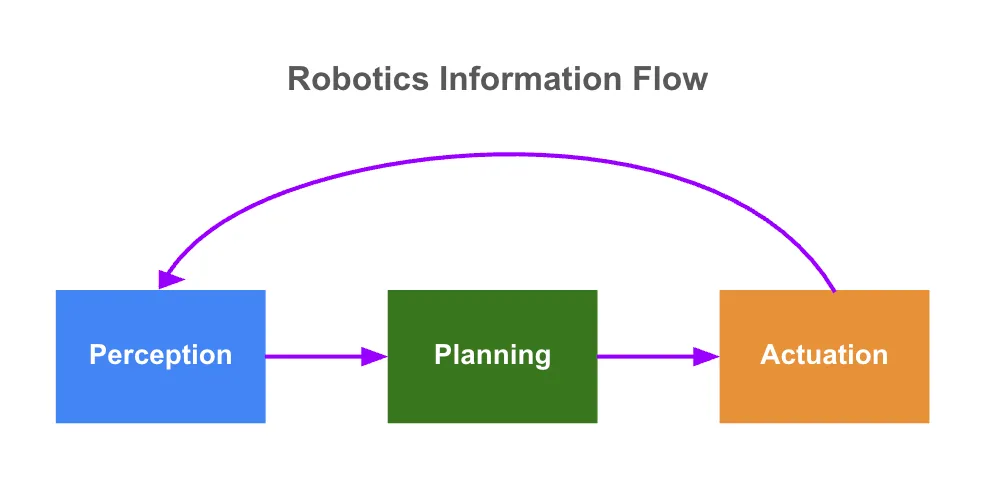

The AI robotics value chain consists of four fundamental layers: hardware, intelligence, data, and agency. Each layer builds upon the others, enabling robots to perceive, reason, and act in complex real-world environments.

In recent years, significant progress has been made in the hardware layer, led by industry pioneers such as Unitree and Figure AI. However, many critical challenges remain in non-hardware areas, particularly the lack of high-quality datasets, absence of general foundational models, poor cross-device compatibility, and the need for reliable edge computing. Therefore, the greatest development opportunities currently lie in the intelligence layer, data layer, and agency layer.

1.1 Hardware Layer: "Body"

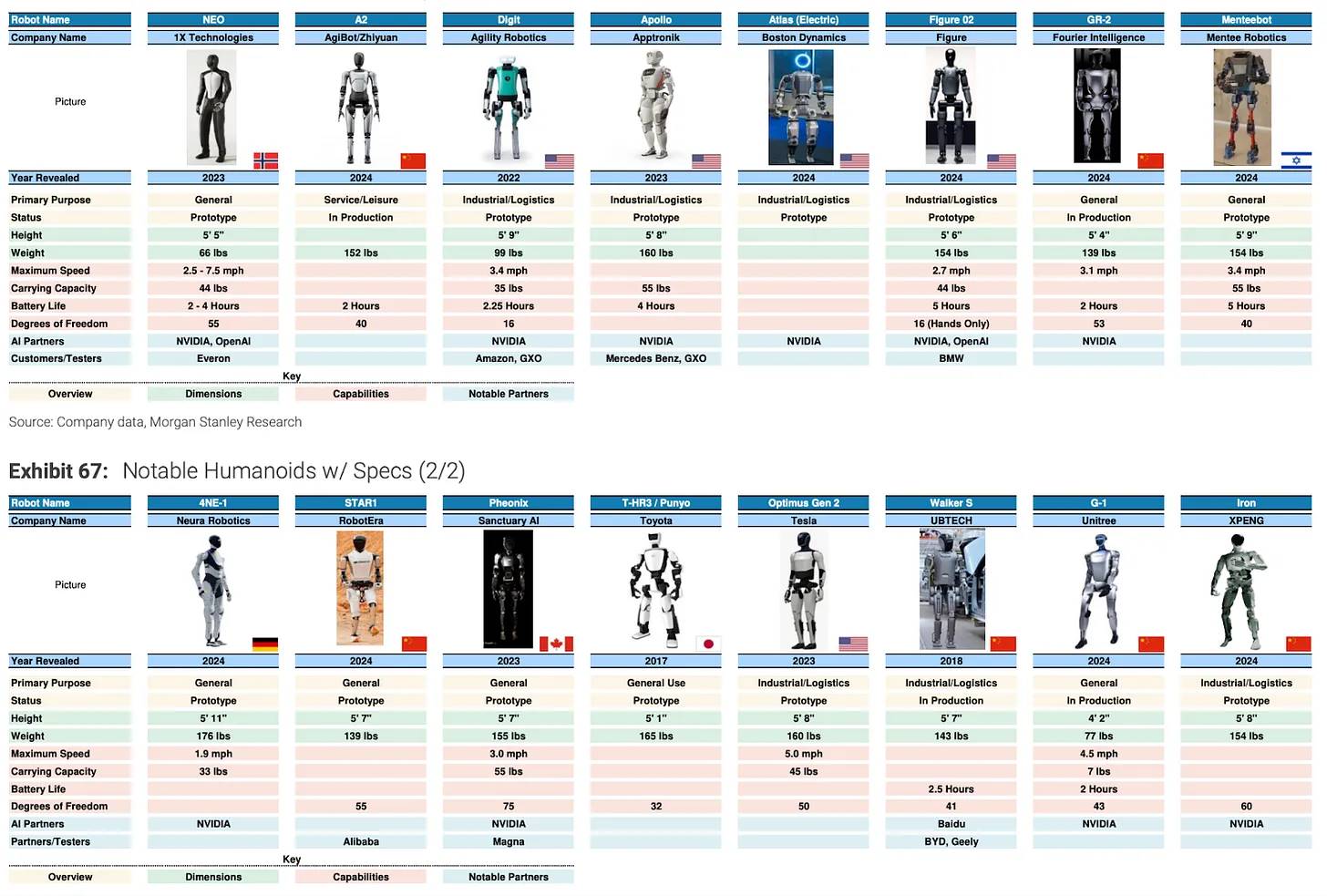

Today, manufacturing and deploying modern "robotic bodies" is easier than ever. There are currently over 100 different types of humanoid robots on the market, including Tesla's Optimus, Unitree's G1, Agility Robotics' Digit, and Figure AI's Figure 02.

Source: Morgan Stanley, "The Humanoid 100: Mapping the Humanoid Robot Value Chain"

This advancement is attributed to breakthroughs in three key components:

- Actuators: Acting as the "muscles" of robots, actuators convert digital instructions into precise movements. Innovations in high-performance motors enable robots to achieve fast and accurate actions, while Dielectric Elastomer Actuators (DEAs) are suitable for delicate tasks. These technologies significantly enhance the flexibility of robots. For example, Tesla's Optimus Gen 2, with 22 degrees of freedom (DoF), and Unitree's G1 both demonstrate near-human flexibility and impressive mobility.

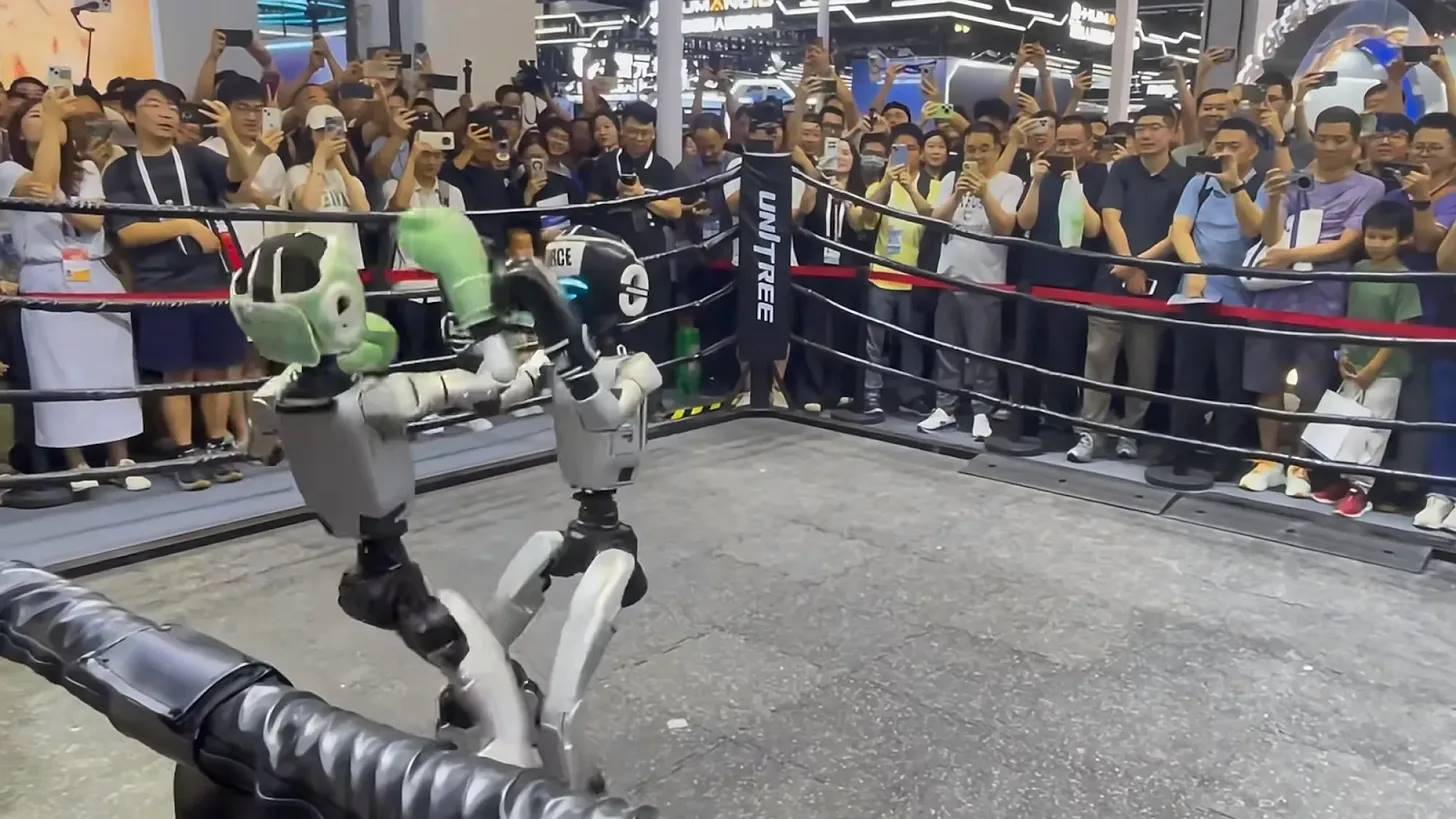

Source: Unitree showcases its latest humanoid robot in a boxing match at the 2025 WAIC World Artificial Intelligence Conference.

- Sensors: Advanced sensors enable robots to perceive and interpret their environment through visual, LIDAR/RADAR, tactile, and audio inputs. These technologies support robots in achieving safe navigation, precise manipulation, and situational awareness.

- Embedded Computing: CPUs, GPUs, and AI accelerators (such as TPUs and NPUs) on devices can process sensor data in real-time and run AI models for autonomous decision-making. Reliable low-latency connections ensure seamless coordination, while hybrid edge-cloud architectures allow robots to offload intensive computing tasks when necessary.

1.2 Intelligence Layer: "Brain"

As hardware matures, the industry's focus has shifted to building the "robot brain": powerful foundational models and advanced control strategies.

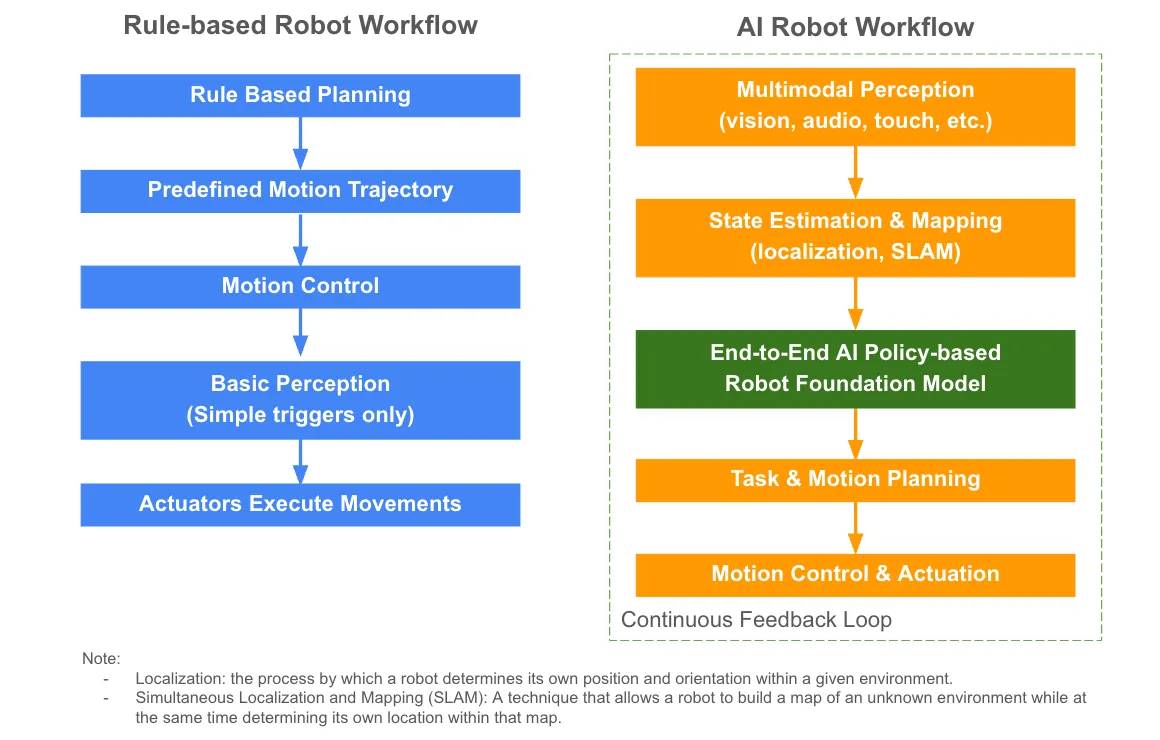

Before AI integration, robots relied on rule-based automation, executing pre-programmed actions without adaptive intelligence.

Foundational models are gradually being applied in the robotics field. However, relying solely on general large language models (LLMs) is far from sufficient, as robots need to perceive, reason, and act in dynamic physical environments. To meet these needs, the industry is developing policy-based end-to-end robotic foundational models. These models enable robots to:

- Perceive: Receive multimodal sensor data (visual, audio, tactile)

- Plan: Estimate their own state, map the environment, and interpret complex instructions, directly mapping perception to action while reducing manual engineering intervention

- Act: Generate motion plans and output control commands for real-time execution

These models learn universal "strategies" for interacting with the world, allowing robots to adapt to various tasks and operate with greater intelligence and autonomy. Advanced models also utilize continuous feedback, enabling robots to learn from experience and further enhance adaptability in dynamic environments.
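The perceive, plan, act cycle described above can be illustrated with a deliberately tiny sketch. It is not how an end-to-end robotic foundational model is built (such models learn the mapping from data instead of hand-coding it). Every name here (`Observation`, `perceive`, `plan`, `act`, `run_episode`) is hypothetical, and the "robot" is just a point moving along a line.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Observation:
    """Hypothetical stand-in for multimodal sensor data, reduced to two fields."""
    distance_to_goal: float  # e.g. what a vision pipeline might estimate
    contact: bool            # e.g. what a tactile sensor might report


def perceive(position: float, goal: float) -> Observation:
    """Perceive: turn the raw state into an observation."""
    return Observation(distance_to_goal=goal - position, contact=False)


def plan(obs: Observation, max_step: float = 0.5) -> float:
    """Plan: choose a bounded step toward the goal."""
    return max(-max_step, min(max_step, obs.distance_to_goal))


def act(position: float, command: float) -> float:
    """Act: apply the control command (an ideal, noise-free actuator)."""
    return position + command


def run_episode(start: float, goal: float, steps: int = 10) -> List[float]:
    """Run the closed loop and return the visited positions."""
    position = start
    trajectory = [position]
    for _ in range(steps):
        obs = perceive(position, goal)
        if abs(obs.distance_to_goal) < 1e-9:
            break  # goal reached, nothing left to do
        position = act(position, plan(obs))
        trajectory.append(position)
    return trajectory


if __name__ == "__main__":
    print(run_episode(0.0, 2.0))
```

The point of the sketch is the feedback structure: each action changes what the next observation looks like, which is what separates a robot policy from a one-shot text model.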

Vision-Language-Action (VLA) models directly map sensory inputs (primarily visual data and natural language instructions) to robot actions, allowing robots to issue appropriate control commands based on what they "see" and "hear." Notable examples include Google's RT-2, NVIDIA's Isaac GR00T N1, and Physical Intelligence's π0.

To enhance these models, various complementary approaches are often integrated, such as:

World Models: Building internal simulations of physical environments to help robots learn complex behaviors, predict outcomes, and plan actions. For example, Google's recently launched Genie 3 is a universal world model capable of generating unprecedentedly diverse interactive environments.

Deep Reinforcement Learning: Helping robots learn behaviors through trial and error.

Teleoperation: Allowing remote control and providing training data.

Learning from Demonstration (LfD) / Imitation Learning: Teaching robots new skills by mimicking human actions.
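To make "learning through trial and error" concrete, here is a minimal sketch that swaps deep reinforcement learning for its simplest relative, tabular Q-learning, on a made-up five-cell corridor. The environment, reward, and hyperparameters are all assumptions chosen for illustration; a real robot would use a neural network policy and a far richer simulator.

```python
import random

N_STATES = 5        # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)  # index 0 = step left, index 1 = step right


def step(state, action_idx):
    """Deterministic toy environment: reward 1.0 only on reaching the goal."""
    next_state = min(max(state + ACTIONS[action_idx], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values by acting, observing rewards, and updating."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap episode length
            # Explore with probability epsilon, or when the values are tied.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            nxt, reward, done = step(state, a)
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
            if done:
                break
    return q


def greedy_policy(q):
    """Best learned action for every non-goal cell."""
    return ["left" if q[s][0] > q[s][1] else "right"
            for s in range(N_STATES - 1)]


if __name__ == "__main__":
    print(greedy_policy(train()))
```

The agent is never told that "right" is correct; it discovers this only because moves that eventually reach the goal accumulate higher value estimates.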

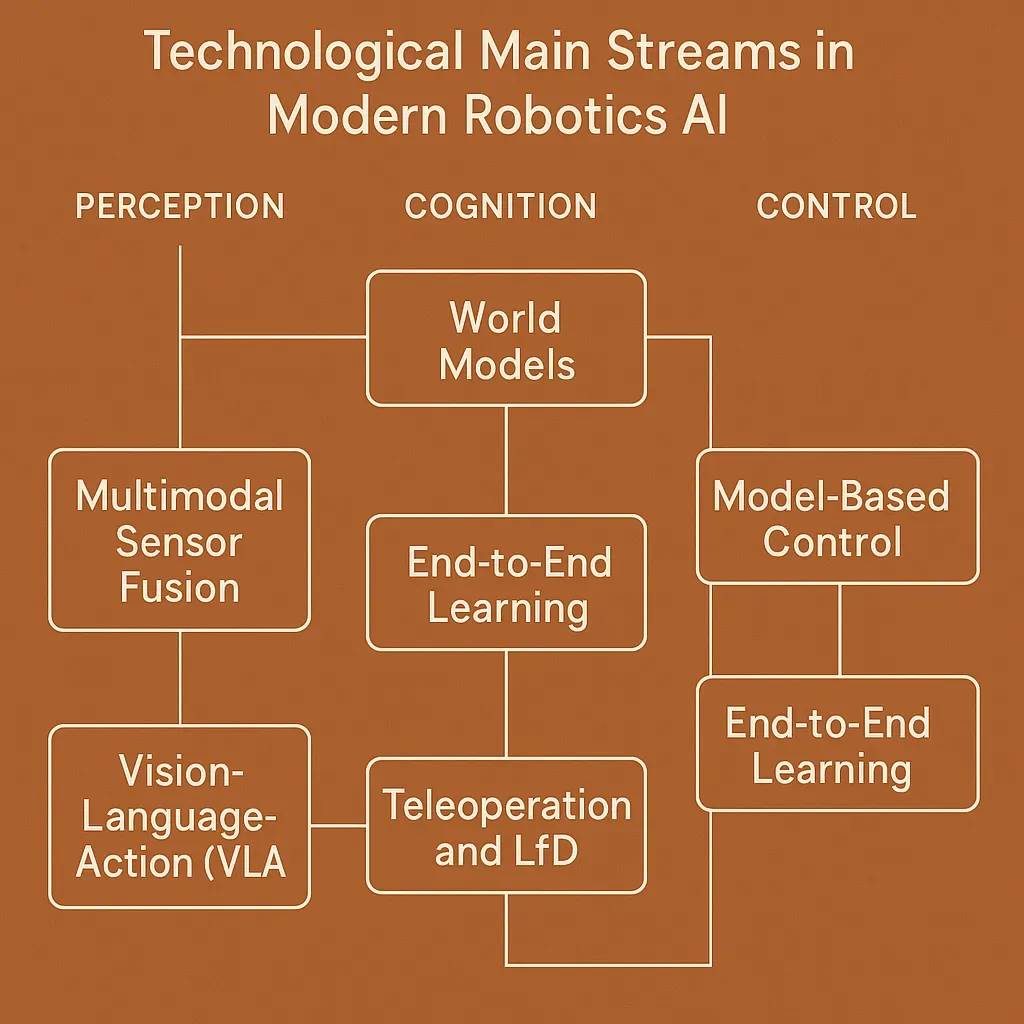

The following diagram illustrates how these methods play a role in robotic foundational models.

Source: World Models: The Physical Intelligence Core Driving Us Toward AGI

Recent breakthroughs in open-source technology, such as Physical Intelligence's π0 and NVIDIA's Isaac GR00T N1, mark significant progress in the field. However, most robotic foundational models remain centralized and closed-source. Companies like Covariant and Tesla still retain proprietary code and datasets, primarily due to a lack of open incentive mechanisms.

This lack of transparency limits collaboration and interoperability between robotic platforms, highlighting the need for secure and transparent model sharing, on-chain standards for community governance, and interoperability layers across devices. Such an approach would foster trust and collaboration and drive more robust development in the field.

1.3 Data Layer: "Knowledge" of the Brain

Robust robotic datasets rely on three pillars: quantity, quality, and diversity.

Although the industry has made efforts in data accumulation, the scale of existing robotic datasets is still far from sufficient. For example, OpenAI's GPT-3 was trained on 300 billion tokens, while the largest open-source robotic dataset, Open X-Embodiment, contains just over 1 million real robot trajectories covering 22 types of robots. This is a significant gap compared to the data scale required to achieve strong generalization capabilities.

Some proprietary methods, such as Tesla's data factory, where workers wear motion capture suits to generate training data, can indeed help gather more real motion data. However, these methods are costly, limited in data diversity, and difficult to scale.

To address these challenges, the robotics field is leveraging three main data sources:

Internet Data: Internet data is vast and easy to scale, but it is primarily observational and lacks sensor and motion signals. Pre-training large vision-language models (like GPT-4V and Gemini) on internet data can provide valuable semantic and visual priors. Additionally, adding kinematic labels to videos can transform raw videos into actionable training data.

Synthetic Data: Synthetic data generated through simulations allows for rapid large-scale experimentation and covers diverse scenarios, but it cannot fully reflect the complexities of the real world, a limitation known as the "sim-to-real gap." Researchers address this issue through domain adaptation (such as data augmentation, domain randomization, and adversarial learning) and sim-to-real transfer, iteratively optimizing models and testing and fine-tuning them in real environments.

Real-World Data: Although scarce and expensive, real-world data is crucial for model deployment and bridging the gap between simulation and actual deployment. High-quality real data typically includes first-person (egocentric) views, recording what the robot "sees" during tasks, as well as motion data that captures its precise actions. Motion data is often collected through human demonstrations or teleoperation, utilizing virtual reality (VR), motion capture devices, or haptic teaching to ensure models learn from accurate real examples.
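Of the techniques named above, domain randomization is the easiest to sketch: each simulated episode draws its physics and rendering parameters from a range, so that the real world looks like just one more variation. The parameter names and ranges below are illustrative assumptions, not values from any particular simulator.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SimParams:
    """Hypothetical simulator settings that get re-drawn every episode."""
    friction: float
    payload_kg: float
    light_intensity: float
    camera_noise_std: float


# Assumed (low, high) ranges; a real project would tune these per robot.
RANGES = {
    "friction": (0.4, 1.2),
    "payload_kg": (0.0, 5.0),
    "light_intensity": (0.2, 1.0),
    "camera_noise_std": (0.0, 0.05),
}


def sample_params(rng: random.Random) -> SimParams:
    """Draw one randomized configuration, uniformly within each range."""
    return SimParams(**{name: rng.uniform(lo, hi)
                        for name, (lo, hi) in RANGES.items()})


def randomized_batch(n: int, seed: int = 0) -> list:
    """Reproducible list of n randomized episode configurations."""
    rng = random.Random(seed)
    return [sample_params(rng) for _ in range(n)]


if __name__ == "__main__":
    for params in randomized_batch(3):
        print(params)
```

A policy trained across many such draws cannot overfit to one exact friction value or lighting condition, which is the intuition behind narrowing the sim-to-real gap.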

Research shows that combining internet data, real-world data, and synthetic data for robotic training can significantly enhance training efficiency and model robustness (Deep Tide Note: robustness here means the system's ability to keep operating reliably when it encounters anomalies or hazardous situations).

While increasing the quantity of data is helpful, the diversity of data is even more important, especially for achieving generalization to new tasks and robot forms. Achieving this diversity requires open data platforms and collaborative data sharing, including the creation of cross-embodiment datasets that support various robot forms, thereby driving the development of more robust foundational models.

1.4 Agency Layer: "Physical AI Agents"

The shift toward physical AI agents, autonomous robots capable of acting independently in the real world, is accelerating. Progress in the agency layer depends on fine-tuning models, continuous learning, and practical adaptation for each robot's unique form.

Here are several emerging opportunities that are accelerating the development of physical AI agents:

Continuous Learning and Adaptive Infrastructure: Enabling robots to continuously improve through real-time feedback loops and shared experiences during deployment.

Autonomous Agent Economy: Robots operating as independent economic entities—trading resources such as computing power and sensor data in a marketplace among robots, generating income through tokenized services.

Multi-Agent Systems: Next-generation platforms and algorithms enabling groups of robots to coordinate, collaborate, and optimize collective behavior.
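As a rough illustration of the autonomous agent economy idea, the sketch below lets one robot buy compute from another through an in-memory marketplace. It is a toy under stated assumptions: the `Offer` and `Marketplace` classes, the robot names, and the integer "token" balances are all hypothetical, and nothing here touches a real blockchain.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    seller: str
    resource: str        # e.g. "gpu_seconds" or "lidar_frames"
    units: int           # units still available
    price_per_unit: int  # price in integer token units


class Marketplace:
    """In-memory stand-in for a robot-to-robot resource market."""

    def __init__(self, balances):
        self.balances = dict(balances)
        self.offers = []

    def post(self, offer: Offer) -> None:
        self.offers.append(offer)

    def buy(self, buyer: str, resource: str, units: int) -> int:
        """Fill the order from the cheapest matching offer; return the cost."""
        candidates = [o for o in self.offers
                      if o.resource == resource and o.units >= units]
        if not candidates:
            raise LookupError("no offer can fill this order")
        best = min(candidates, key=lambda o: o.price_per_unit)
        cost = best.price_per_unit * units
        if self.balances.get(buyer, 0) < cost:
            raise ValueError("insufficient balance")
        # Settle: move tokens from buyer to seller, reduce the offer.
        self.balances[buyer] -= cost
        self.balances[best.seller] = self.balances.get(best.seller, 0) + cost
        best.units -= units
        return cost


if __name__ == "__main__":
    market = Marketplace({"rover": 100, "arm": 0, "drone": 0})
    market.post(Offer("arm", "gpu_seconds", 50, 3))
    market.post(Offer("drone", "gpu_seconds", 50, 2))
    print(market.buy("rover", "gpu_seconds", 10), market.balances)
```

Integer token units are used on purpose so that balances always add up exactly, which is how on-chain ledgers typically account for value.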

AI Robots and Web3 Integration: Unlocking Huge Market Potential

As AI robots transition from research phases to real-world deployments, several long-standing bottlenecks are hindering innovation and limiting the scalability, robustness, and economic viability of the robotic ecosystem. These bottlenecks include centralized data and model silos, lack of trust and traceability, privacy and compliance restrictions, and insufficient interoperability.

2.1 Pain Points Faced by AI Robots

- Centralized Data and Model Silos

Robotic models require large and diverse datasets. However, today's data and model development is highly centralized, fragmented, and costly, leading to system disconnection and poor adaptability. Robots deployed in dynamic real-world environments often perform poorly due to insufficient data diversity and limited model robustness.

- Trust, Traceability, and Reliability

The lack of transparent and auditable records (including data sources, model training processes, and robot operation histories) undermines trust and accountability. This has become a major barrier to the adoption of robots by users, regulators, and enterprises.

- Privacy, Security, and Compliance

In sensitive applications such as healthcare and home robotics, privacy protection is crucial, and strict regional regulations (such as the European General Data Protection Regulation (GDPR)) must be adhered to. Centralized infrastructures struggle to support secure and privacy-preserving AI collaboration, limiting data sharing and stifling innovation in regulated or sensitive areas.

- Scalability and Interoperability

Robotic systems face significant challenges in resource sharing, collaborative learning, and integration across multiple platforms and forms. These limitations lead to fragmented network effects and hinder the rapid transfer of capabilities between different types of robots.

2.2 AI Robots x Web3: Structural Solutions Drive Investable Opportunities

Web3 technology fundamentally addresses the above pain points through decentralized, verifiable, privacy-preserving, and collaborative robotic networks. This integration is opening up new investment market opportunities:

Decentralized Collaborative Development: Through incentive-driven networks, robots can share data and collaboratively develop models and intelligent agents.

Verifiable Traceability and Accountability: Blockchain technology ensures immutable records of data and model sources, robot identities, and operation histories, which are crucial for trust and compliance.

Privacy-Preserving Collaboration: Advanced cryptographic solutions enable robots to collaboratively train models and share insights without exposing proprietary or sensitive data.

Community-Driven Governance: Decentralized Autonomous Organizations (DAOs) guide and oversee robot operations through transparent and inclusive on-chain rules and policies.

Cross-Form Interoperability: Blockchain-based open frameworks facilitate seamless collaboration between different robotic platforms, reducing development costs and accelerating capability transfer.

Autonomous Agent Economy: Web3 infrastructure grants robots independent economic agency, allowing them to engage in peer-to-peer transactions, negotiate, and participate in tokenized markets without human intervention.

Decentralized Physical Infrastructure Network (DePIN): Blockchain-based peer-to-peer sharing of computing, sensing, storage, and connectivity resources enhances the scalability and resilience of robotic networks.

Here are some innovative projects driving development in this field, showcasing the potential and trends of AI robots integrated with Web3. Of course, this is for reference only and does not constitute investment advice.

Decentralized Data and Model Development

Web3-driven platforms democratize data and model development by incentivizing contributions such as motion capture recordings, sensor sharing, visual uploads, data labeling, and even synthetic data generation. This approach can build richer, more diverse, and representative datasets and models that far exceed what any single company could achieve. Decentralized frameworks also improve coverage of edge cases, which is crucial for robots operating in unpredictable environments.

Case Study:

FrodoBots: A protocol for crowdsourcing real-world datasets through robotic games. They launched the “Earth Rovers” project, a sidewalk robot and global “Drive to Earn” game, and used it to create the FrodoBots 2K Dataset, which includes camera footage, GPS data, audio recordings, and human control data, covering over 10 cities with approximately 2000 hours of remote-controlled robot driving data.

BitRobot: A crypto incentive platform co-developed by FrodoBots Lab and Protocol Labs, based on the Solana blockchain and subnet architecture. Each subnet is set as a public challenge, where contributors earn token rewards by submitting models or data, incentivizing global collaboration and open-source innovation.

Reborn Network: The foundational layer of an open ecosystem for AGI robots. It provides Rebocap motion capture suits that enable anyone to record and profit from their real motion data, helping open up complex humanoid robot datasets.

PrismaX: Draws on a global community of contributors, using decentralized infrastructure to ensure data diversity and authenticity, with robust verification and incentive mechanisms to drive the scaling of robotic datasets.

Proof of Traceability and Reliability

Blockchain technology provides end-to-end transparency and accountability for the robotic ecosystem. It ensures verifiable traceability of data and models, certifies robot identities and physical locations, and maintains clear records of operational history and contributor participation. Additionally, collaborative verification, on-chain reputation systems, and stake-based validation mechanisms ensure the quality of data and models, preventing low-quality or fraudulent inputs from undermining the ecosystem.
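The core property behind "verifiable traceability" is that each record commits to the one before it, so history cannot be edited quietly. A minimal sketch of that idea, using a plain hash chain in place of an actual blockchain, might look like this. The record fields and function names are assumptions for illustration only.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def record_hash(prev_hash: str, payload: dict) -> str:
    """Hash the previous hash together with a canonical JSON payload."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append(log: list, payload: dict) -> list:
    """Return a new log with one more entry chained onto the last one."""
    prev = log[-1]["hash"] if log else GENESIS
    entry = {"payload": payload, "prev": prev,
             "hash": record_hash(prev, payload)}
    return log + [entry]


def verify(log: list) -> bool:
    """Recompute every link; any edited or reordered entry breaks the chain."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev:
            return False
        if entry["hash"] != record_hash(prev, entry["payload"]):
            return False
        prev = entry["hash"]
    return True


if __name__ == "__main__":
    log = []
    log = append(log, {"robot": "rover-01", "action": "pickup", "t": 1})
    log = append(log, {"robot": "rover-01", "action": "deliver", "t": 2})
    print(verify(log))                       # chain is intact
    log[0]["payload"]["action"] = "discard"  # tamper with history
    print(verify(log))                       # tampering is detected
```

A public blockchain adds what this sketch lacks: many independent parties hold and extend the chain, so no single operator can rewrite it and recompute the hashes.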

Case Study:

- OpenLedger: An AI blockchain infrastructure that utilizes community-owned datasets to train and deploy dedicated models. It ensures that high-quality data contributors receive fair rewards through the “Proof of Attribution” mechanism.

Tokenized Ownership, Licensing, and Monetization

Web3-native intellectual property tools support the tokenized licensing of dedicated datasets, robotic capabilities, models, and intelligent agents. Contributors can embed licensing terms directly into their assets using smart contracts, ensuring automatic royalty payments when data or models are reused or monetized. This approach promotes transparent, permissionless access and creates an open and fair market for robotic data and models.
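The automatic royalty logic described above usually boils down to a fixed split applied to every payment. The sketch below shows that arithmetic in plain Python as a stand-in for smart contract code. The role names and the basis-point shares are hypothetical, and sending the rounding remainder to the largest shareholder is just one possible convention.

```python
def split_royalty(payment: int, shares_bps: dict) -> dict:
    """Split an integer payment by shares given in basis points (1/100 of 1%).

    Shares must sum to 10_000. Integer division can leave a few units
    undistributed; they are assigned to the largest shareholder so that
    the payouts always add up to the payment exactly.
    """
    if payment < 0:
        raise ValueError("payment must be non-negative")
    if sum(shares_bps.values()) != 10_000:
        raise ValueError("shares must sum to 10_000 basis points")
    payouts = {who: payment * bps // 10_000
               for who, bps in shares_bps.items()}
    remainder = payment - sum(payouts.values())
    largest = max(shares_bps, key=shares_bps.get)
    payouts[largest] += remainder
    return payouts


if __name__ == "__main__":
    terms = {"data_contributor": 6000, "model_trainer": 3000, "protocol": 1000}
    print(split_royalty(1000, terms))
```

Working in integers and basis points mirrors how on-chain code avoids floating-point rounding, so no value is created or lost in the split.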

Case Study:

- Poseidon: A full-stack decentralized data layer built on the IP-centric Story protocol, providing legally authorized AI training data.

Privacy-Preserving Solutions

High-value data generated in scenarios such as hospitals, hotel rooms, or homes, while difficult to obtain through public channels, can significantly enhance the performance of foundational models due to its rich contextual information. By converting private data into on-chain assets through encryption solutions, it becomes traceable, composable, and monetizable while preserving privacy. Technologies such as Trusted Execution Environments (TEEs) and Zero-Knowledge Proofs (ZKPs) support secure computation and result verification without exposing raw data. These tools enable organizations to train AI models on distributed sensitive data while maintaining privacy and compliance.
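TEEs and ZKPs are hard to show in a few lines, so the sketch below illustrates the last sentence with a different and simpler technique, federated averaging: each site trains on its own data and shares only a model parameter, never the raw records. This is an assumption-laden toy (a one-parameter linear model, made-up data, hypothetical function names), and on its own it provides none of the cryptographic guarantees of a TEE or a ZKP.

```python
def local_update(w: float, data: list, lr: float = 0.01,
                 epochs: int = 20) -> float:
    """Gradient descent on mean squared error for y = w * x, on one site."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(site_data: list, rounds: int = 50) -> float:
    """Each round: sites train locally, the server averages their weights."""
    w = 0.0
    for _ in range(rounds):
        # Only (weight, sample_count) pairs leave each site.
        updates = [(local_update(w, data), len(data)) for data in site_data]
        total = sum(n for _, n in updates)
        w = sum(w_i * n for w_i, n in updates) / total
    return w


if __name__ == "__main__":
    hospital = [(1.0, 2.0), (2.0, 4.0)]            # private to site A
    home = [(3.0, 6.0), (4.0, 8.0), (5.0, 10.0)]   # private to site B
    print(federated_average([hospital, home]))     # approaches 2.0
```

In practice such schemes are combined with secure aggregation, TEEs, or proofs of correct computation, because model updates alone can still leak information about the underlying data.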

Case Study:

- Phala Network: Allows developers to deploy applications in secure TEEs for confidential AI and data processing.

Open and Auditable Governance

Robot training often relies on proprietary black-box systems that lack transparency and adaptability. Transparent and verifiable governance is crucial for reducing risks and enhancing trust among users, regulators, and enterprises. Web3 technology enables collaborative development of open-source robotic intelligence through on-chain community-driven oversight.

Case Study:

- OpenMind: An open AI-native software stack that helps robots think, learn, and collaborate. They proposed the ERC-7777 standard, aimed at establishing a verifiable, regulated robotic ecosystem focused on security, transparency, and scalability. The standard defines a standardized interface for managing human and robot identities, enforcing social rule sets, and registering and removing participants, clarifying their associated rights and responsibilities.

Final Thoughts

As AI robots integrate with Web3 technology, we are entering a new era where autonomous systems can achieve large-scale collaboration and adaptation. The next 3 to 5 years will be a critical period, as rapid hardware advancements will drive the emergence of more powerful AI models, relying on richer real-world datasets and decentralized collaboration mechanisms. We anticipate that dedicated AI agents will emerge in various industries such as hospitality and logistics, creating significant new market opportunities.

However, this integration of AI robots and cryptographic technology also presents challenges. Designing balanced and effective incentive mechanisms remains complex and evolving, as systems need to reward contributors fairly while avoiding abuse. The technical complexity is also a major challenge, necessitating the development of robust and scalable solutions for seamless integration of various robot types. Additionally, privacy-preserving technologies must be reliable enough to gain the trust of stakeholders, especially when handling sensitive data. The rapidly changing regulatory environment also requires us to proceed cautiously to ensure compliance across different jurisdictions. Addressing these risks and achieving sustainable returns is key to driving technological advancement and widespread adoption.

Let us pay close attention to the developments in this field, promoting progress through collaboration and seizing the opportunities emerging in this rapidly expanding market.

Innovation in robotics is a journey best taken together :)

Finally, I would like to thank Chain of Thought for their valuable support in my research on “Robotics & The Age of Physical AI”.
