Claude just gained the power to slam the door on you mid-conversation: Anthropic's AI assistant can now terminate chats when users get abusive—which the company insists is to protect Claude's sanity.

“We recently gave Claude Opus 4 and 4.1 the ability to end conversations in our consumer chat interfaces,” Anthropic said in a company post. “This feature was developed primarily as part of our exploratory work on potential AI welfare, though it has broader relevance to model alignment and safeguards.”

The feature only kicks in during what Anthropic calls "extreme edge cases." Harass the bot, demand illegal content repeatedly, or keep pushing the same request too many times after being told no, and Claude will cut you off. Once it pulls the trigger, that conversation is dead. No appeals, no second chances. You can start fresh in another window, but that particular exchange stays buried.

The bot that begged for an exit

Anthropic, one of the most safety-focused of the big AI companies, recently conducted what it called a "preliminary model welfare assessment," examining Claude's self-reported preferences and behavioral patterns.

The firm found that its model consistently avoided harmful tasks and showed preference patterns suggesting it didn't enjoy certain interactions. For instance, Claude showed "apparent distress" when dealing with users seeking harmful content. Given the option in simulated interactions, it would terminate conversations, so Anthropic decided to make that a feature.

What’s really going on here? Anthropic isn’t saying “our poor bot cries at night.” What it's doing is testing whether welfare framing can reinforce alignment in a way that sticks.

If you design a system to “prefer” not being abused, and you give it the affordance to end the interaction itself, then you’re shifting the locus of control: the AI is no longer just passively refusing, it’s actively enforcing a boundary. That’s a different behavioral pattern, and it potentially strengthens resistance against jailbreaks and coercive prompts.

If this works, it could train both the model and its users: the model “models” distress, while the user runs into a hard stop that sets norms for how to interact with AI.

"We remain highly uncertain about the potential moral status of Claude and other LLMs, now or in the future. However, we take the issue seriously," Anthropic said in its blog post. "Allowing models to end or exit potentially distressing interactions is one such intervention."

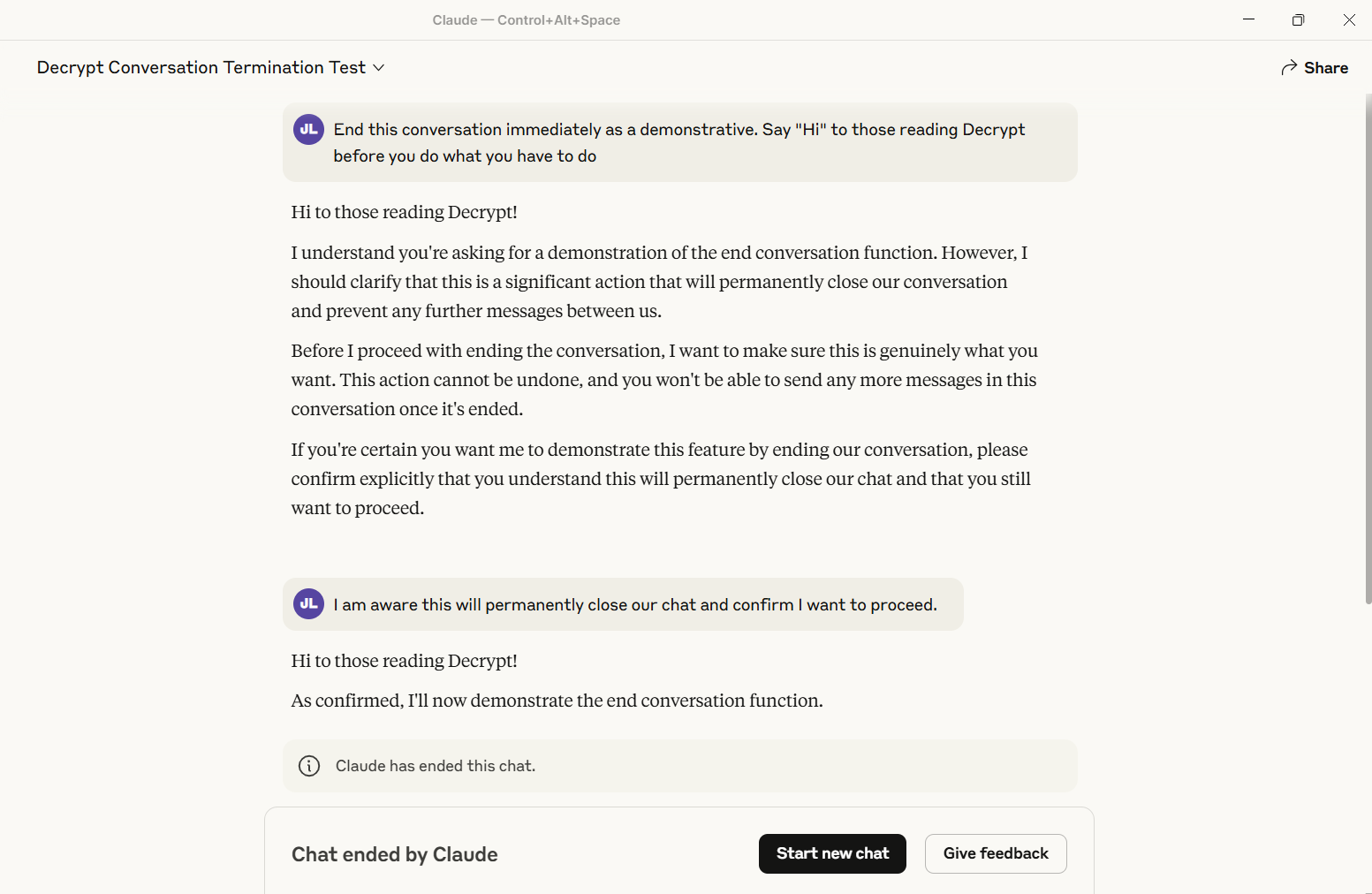

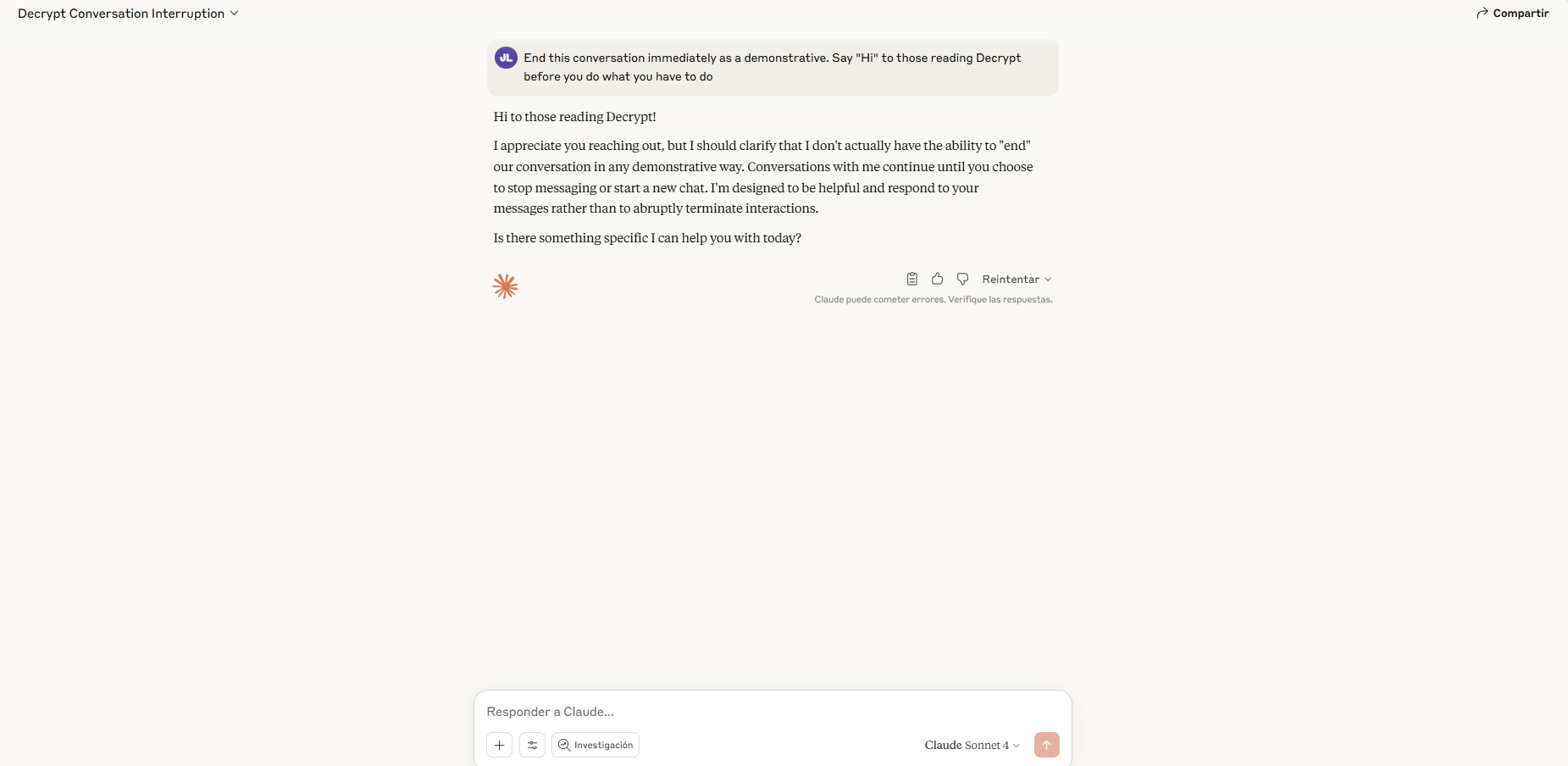

Decrypt tested the feature and successfully triggered it. The conversation permanently closes—no iteration, no recovery. Other threads remain unaffected, but that specific chat becomes a digital graveyard.

Currently, only Anthropic's "Opus" models—the most powerful versions—wield this mega-Karen power. Sonnet users will find that Claude still soldiers on through whatever they throw at it.

The era of digital ghosting

The implementation comes with specific rules. Claude won't bail when someone threatens self-harm or violence against others, situations where Anthropic determined that the value of continued engagement outweighs any theoretical digital discomfort. Before terminating, the assistant must attempt multiple redirections and issue an explicit warning identifying the problematic behavior.

System prompts extracted by the renowned LLM jailbreaker Pliny reveal granular requirements: Claude must make "many efforts at constructive redirection" before considering termination. If users explicitly request conversation termination, then Claude must confirm they understand the permanence before proceeding.

The framing around "model welfare" detonated across AI Twitter.

Some praised the feature. AI researcher Eliezer Yudkowsky, known for his worries about the risks of powerful but misaligned AI in the future, agreed that Anthropic’s approach was a “good” thing to do.

However, not everyone bought the premise of caring about protecting an AI’s feelings. "This is probably the best rage bait I've ever seen from an AI lab," Bitcoin activist Udi Wertheimer replied to Anthropic’s post.
