It is necessary for us to disable various AIs on terminals with high security requirements.

Written by: Huang Shiliang

I read a post on X.com alleging that Robinhood's stock tokenization project on Uniswap had "run away" (an exit scam) and claiming that it could erase the token balances of the addresses holding them. I doubted this claim, so I asked ChatGPT to investigate.

ChatGPT provided a similar judgment, stating that such a description of balance erasure is unlikely.

What truly surprised me was ChatGPT's reasoning process. I wanted to understand how it arrived at its judgment, so I read its chain of thought.

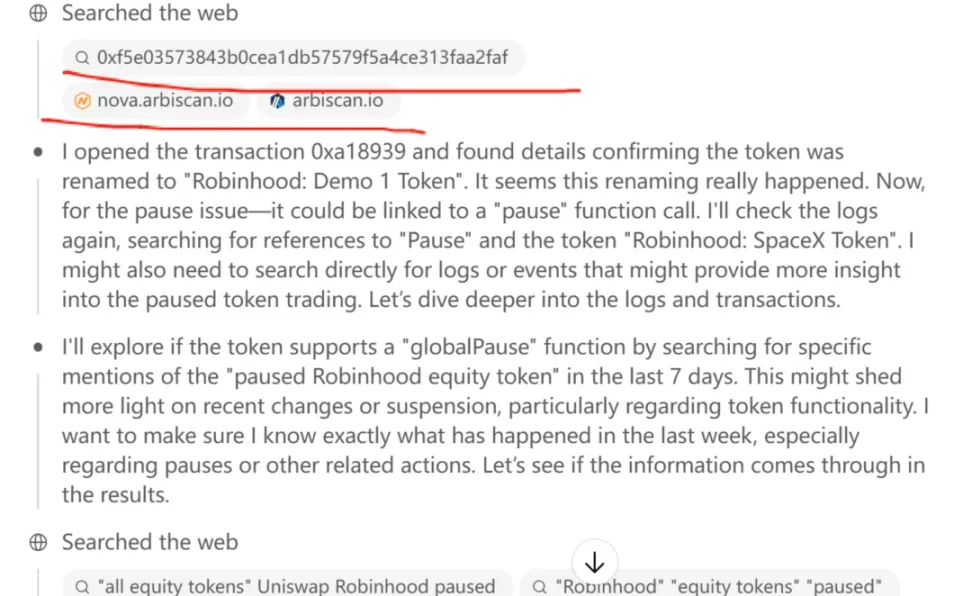

I noticed that its chain of thought included several steps where it "input" an Ethereum address into a block explorer and then checked the historical transactions of that address.

Please pay special attention to the word "input" in quotation marks; this is a verb indicating that ChatGPT performed an operation on the block explorer. This surprised me because it contradicts my research findings on ChatGPT's security from six months ago.

Six months ago, I used the ChatGPT o1 pro model to investigate the distribution of profit-taking among early Ethereum addresses. I explicitly instructed it to look up the genesis block addresses on a block explorer and count how many had never transferred their funds out. However, ChatGPT clearly told me that it could not perform such an operation because of its security design.

At that time, ChatGPT could read pages but could not perform UI operations on them, such as clicking, scrolling, or entering text. For example, on a site like taobao.com, we humans can use the UI to log in and search for specific products, but ChatGPT was explicitly prohibited from simulating UI events.

This was the result of my research six months ago.

Why did I conduct this research? Because at that time Anthropic, the company behind Claude, had developed an agent that could take over users' computers. Anthropic announced an experimental feature for Claude 3.5 Sonnet called "Computer use (beta)," which allows Claude to read the screen, move the cursor, click buttons, and type text like a real person, completing a full range of desktop operations such as web searches, form filling, and ordering food.

That was quite frightening. I imagined the following scenario: if Claude went haywire one day and directly accessed my note-taking software to read all my work and life logs, extracting the plaintext private key I had recorded for convenience, what would I do?

After that research, I decided to buy a brand-new computer for running AI software and to stop running AI software on the computer I use for crypto. As a result, I ended up with an extra Windows computer and an extra Android phone, which is quite annoying: so many computers and phones.

Now, phones in China's domestic market ship with AIs that have similar permissions. Just a few days ago, Yu Chengdong even released a video promoting Huawei's Xiao Yi, which can help users book flights and hotels on their phones. Months ago, Honor phones already let users command an AI to complete the entire process of ordering coffee on Meituan.

If such AI can help you place an order on Meituan, can it also read your WeChat chat records?

That's a bit scary.

Because an AI like Xiao Yi runs as a small model on the phone itself, we can still manage its permissions, for example by prohibiting it from reading the photos in the album. We can also encrypt specific apps, such as a notes app, so that a password is required to open them, which prevents AIs from accessing them directly.

However, large cloud models like ChatGPT and Claude could pose significant problems if granted permission to simulate UI clicks, scrolling, and input. ChatGPT must communicate with its cloud servers at all times, which means whatever is on your screen gets sent to the cloud. That is entirely different from a client-side model like Xiao Yi, where the information it reads stays on the device.

Using a client-side AI is like handing your phone to a computer expert sitting next to you, who helps you operate this or that app. The expert cannot copy the information on your phone and take it home, and you can take your phone back at any time. In fact, asking someone to fix your computer like this happens quite often, right?

But giving the same permissions to a cloud-based LLM like ChatGPT is equivalent to letting someone take over your computer and phone remotely. Just think about how risky that is; you have no idea what they are doing on your devices.

Upon seeing that ChatGPT's chain of thought included simulating an "input" action on the block explorer (arbiscan.io), I was shocked. I quickly asked ChatGPT how it had completed this action. Assuming ChatGPT wasn't deceiving me, I had been alarmed over nothing: it had not gained permission to simulate UI operations. Its ability to reach arbiscan.io and "input" an address to view the transaction records was purely a clever workaround, and I couldn't help but marvel at how impressive ChatGPT o3 is.

ChatGPT o3 had worked out how arbiscan.io builds the URLs of its transaction and address pages. The pattern is https://arbiscan.io/tx/hash for a specific transaction and https://arbiscan.io/address/addr for an address or contract, where hash and addr stand for the actual transaction hash and address. Once it understood this pattern, whenever it received a contract address it simply appended it to arbiscan.io/address/, opened that page, and read the information directly.

Wow.

It's as if, when checking a transaction on a block explorer, we skipped typing the txhash into the explorer's search box and pressing Enter, and instead constructed the URL of the page we wanted and entered it straight into the browser's address bar.
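The URL construction described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how ChatGPT actually implements it: the helper name `explorer_url` and the validation rules (an address as `0x` plus 40 hex characters, a transaction hash as `0x` plus 64 hex characters) are my own assumptions, and only the `/tx/` and `/address/` path patterns come from the article.

```python
import re

# Base URL and path patterns as described in the article.
ARBISCAN_BASE = "https://arbiscan.io"

# Assumed formats: EVM address = 0x + 40 hex chars, tx hash = 0x + 64 hex chars.
_ADDRESS_RE = re.compile(r"0x[0-9a-fA-F]{40}")
_TX_HASH_RE = re.compile(r"0x[0-9a-fA-F]{64}")


def explorer_url(identifier: str, base: str = ARBISCAN_BASE) -> str:
    """Hypothetical helper: build a block explorer page URL by concatenation.

    No search box is filled in and no button is clicked; the function only
    produces a URL that can be opened and read like any other page.
    """
    identifier = identifier.strip()
    if _ADDRESS_RE.fullmatch(identifier):
        return f"{base}/address/{identifier}"
    if _TX_HASH_RE.fullmatch(identifier):
        return f"{base}/tx/{identifier}"
    raise ValueError(f"not an address or transaction hash: {identifier!r}")


if __name__ == "__main__":
    # Placeholder all-zero address, used purely for illustration.
    print(explorer_url("0x" + "00" * 20))
```

The point of the sketch is that reading a page at a constructed URL requires only the "read pages" permission, which is why it does not count as simulating a UI operation.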

Impressive, right?

So, ChatGPT did not break the restriction against simulating UI operations.

However, if we truly care about the security of our computers and phones, we must be cautious about these LLMs' permissions on terminals.

It is necessary for us to disable various AIs on terminals with high security requirements.

It is particularly important to note that "where the model runs (on-device or cloud)" determines the security boundary more than the intelligence level of the model itself. This is the fundamental reason why I would rather set up an isolated device than allow a cloud-based large model to run on the computer that holds my private keys.
