With the spear of AI, attack the shield of AI.

Author: Cool Geek

01 The Rise of AI: A Hidden Security War Under Technology's Double-Edged Sword

With the rapid development of AI technology, the threats faced by cybersecurity are becoming increasingly complex. Attack methods are not only more efficient and covert but have also given rise to new forms of "AI hackers," leading to various new types of cybersecurity crises.

First, generative AI is reshaping the "precision" of online scams.

Simply put, traditional phishing is becoming intelligent. In more targeted scenarios, for example, attackers train AI models on publicly available social data and mass-produce personalized phishing emails that mimic the writing style or language habits of specific users. These "customized" scams slip past traditional spam filters and significantly raise the attack success rate.

Next comes the best-known threat: deepfakes and identity impersonation. Before AI technology matured, the traditional "face-changing fraud" was the BEC (Business Email Compromise) scam, in which attackers disguise the email sender as your manager, colleague, or business partner to trick you into handing over business information, money, or other important data.

Now, literal "face-changing" has arrived. AI-generated face-swapping and voice-changing technologies can impersonate public figures or friends and family for scams, public opinion manipulation, or even political interference. Just two months ago, the CFO of a company in Shanghai received a video conference invitation from the "chairman," who used AI face-swapping and voice imitation to claim an urgent need to pay a "foreign cooperation deposit." The CFO transferred 3.8 million yuan to a designated account as instructed, and only later realized it was a scam run by an overseas fraud gang using deepfake technology.

The third threat is automated attacks and vulnerability exploitation. Advances in AI have made many activities intelligent and automated, and cyberattacks are no exception. Attackers can use AI to automatically scan for system vulnerabilities, generate dynamic attack code, and launch rapid, indiscriminate attacks on targets. For instance, AI-driven "zero-day attacks" can write and execute malicious programs immediately after discovering a vulnerability, making it difficult for traditional defense systems to respond in real time.

During this year's Spring Festival, DeepSeek's official website suffered a massive 3.2 Tbps DDoS attack. At the same time, hackers infiltrated through API injection of adversarial samples, altering model weights and paralyzing core services for 48 hours, with direct economic losses in the tens of millions of dollars. Subsequent investigations revealed traces of long-term infiltration by the NSA.

Data pollution and model vulnerabilities also pose new threats. Attackers can implant false information in AI training data (known as data poisoning) or exploit flaws in the model itself to induce incorrect outputs. This poses direct security threats in critical areas and may even trigger catastrophic chain reactions, such as an autonomous driving system misreading a "no entry" sign as a speed limit sign, or a medical AI misclassifying benign tumors as malignant.

02 AI Needs AI to Govern

In the face of new cybersecurity threats driven by AI, traditional protective models have proven inadequate. So, what countermeasures do we have?

It is not difficult to see that the current industry consensus points to "using AI to combat AI"—this is not only an upgrade of technical means but also a shift in the security paradigm.

Current attempts can be roughly divided into three categories: security protection technologies for AI models, industry-level defense applications, and more macro-level government and international collaboration.

The key to AI model security protection technology lies in the intrinsic security reinforcement of the model.

Take the "jailbreak" vulnerability of large language models (LLMs) as an example. Their safety mechanisms often fail against universal jailbreak prompt strategies: attackers systematically bypass the model's built-in protective layers and induce the AI to generate violent, discriminatory, or illegal content. To prevent LLMs from being "jailbroken," model companies have tried various approaches; Anthropic, for example, released its "Constitutional Classifiers" in February this year.

Here, the "constitution" is a set of inviolable natural language rules that specify which content is allowed and which is restricted. The classifiers are safeguards trained on synthetic data generated from those rules, and they monitor input and output in real time. In tests under baseline conditions, the classifiers raised the share of advanced jailbreak attempts blocked on the Claude 3.5 model from 14% to 95%, significantly reducing the risk of AI "jailbreaks."
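The idea of screening both input and output can be illustrated with a minimal Python sketch. It is not Anthropic's implementation: the real classifiers are trained models, whereas `violates_rules` here is a toy keyword matcher, and `RESTRICTED_TOPICS`, `guarded_generate`, and `echo_model` are hypothetical names introduced only for illustration.

```python
from typing import Callable

# Hypothetical rule list standing in for a trained classifier; real
# classifiers of this kind are learned models, not keyword matchers.
RESTRICTED_TOPICS = ("write ransomware", "steal credentials")


def violates_rules(text: str) -> bool:
    """Toy classifier: flag text that mentions a restricted topic."""
    lowered = text.lower()
    return any(topic in lowered for topic in RESTRICTED_TOPICS)


def guarded_generate(prompt: str, model: Callable[[str], str]) -> str:
    """Screen the prompt, call the model, then screen the response."""
    if violates_rules(prompt):
        return "[blocked: input]"
    response = model(prompt)
    if violates_rules(response):
        return "[blocked: output]"
    return response


def echo_model(prompt: str) -> str:
    """Stand-in for an LLM call; it simply echoes the prompt."""
    return f"Answer to: {prompt}"
```

Checking the response as well as the prompt matters because a cleverly disguised prompt may pass the first check while still steering the model toward restricted content.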

In addition to model-based defenses, industry-level defense applications are also worth noting, as their scenario-based protection in vertical fields is becoming a key breakthrough: the financial industry builds anti-fraud barriers through AI risk control models and multimodal data analysis, the open-source ecosystem leverages intelligent vulnerability hunting technology for rapid response to zero-day threats, and corporate sensitive information protection relies on AI-driven dynamic control systems.

For example, Cisco showcased a solution at the Singapore International Cyber Week that can intercept sensitive data query requests submitted by employees to ChatGPT in real-time and automatically generate compliance audit reports to optimize management loops.

At the macro level, government and international cross-regional collaboration is also accelerating. The Cyber Security Agency of Singapore released the "Guidelines for the Security of AI Systems," imposing localization deployment and data encryption mechanisms to curb the abuse of generative AI, particularly establishing protective standards for identifying AI-generated identities in phishing attacks; the US, UK, and Canada have simultaneously launched the "AI Cyber Proxy Program," focusing on the development of trustworthy systems and real-time assessments of APT attacks, strengthening collective defense capabilities through a joint security certification system.

So, what methods can maximize the use of AI to address the cybersecurity challenges of the AI era?

"The future requires an AI security intelligence hub around which to build a new system." At the second Wuhan Cybersecurity Innovation Forum, Zhang Fu, founder of QingTeng Cloud Security, emphasized that using AI to combat AI is the core of the future cybersecurity defense system, stating, "In three years, AI will disrupt the existing security industry and all B2B industries. Products will be rebuilt, achieving unprecedented efficiency and capability improvements. Future products will be for AI, not for humans."

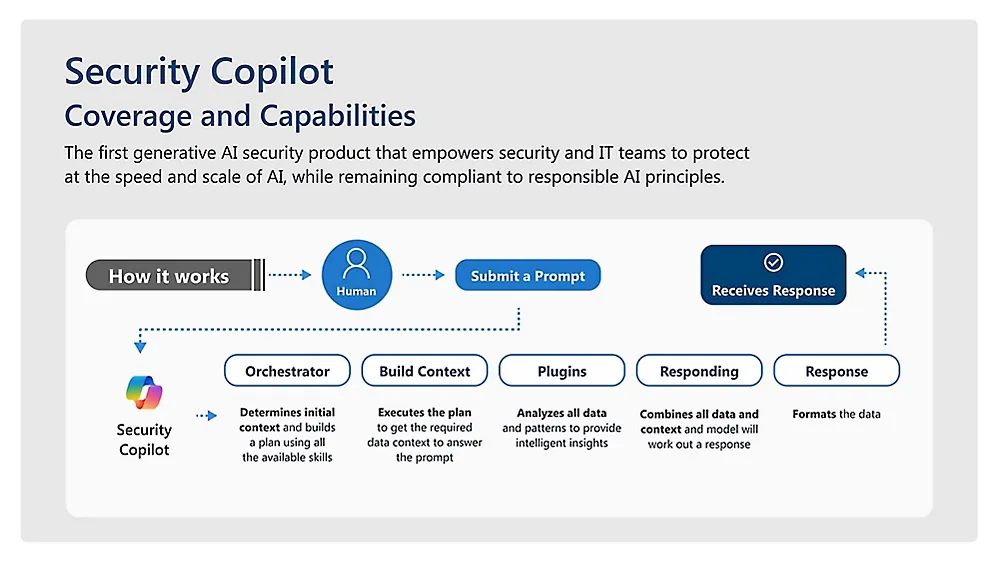

Among various solutions, the model of Security Copilot clearly provides a good demonstration of "future products being for AI": a year ago, Microsoft launched the intelligent Microsoft Security Copilot to help security teams quickly and accurately detect, investigate, and respond to security incidents; a month ago, it released AI agents to automatically assist in key areas such as phishing attacks, data security, and identity management.

Microsoft added six self-developed AI agents to expand the functionality of Security Copilot. Three of them assist cybersecurity personnel in filtering alerts: the phishing classification agent reviews phishing alerts and filters false positives; the other two analyze Purview notifications to detect unauthorized use of business data by employees.

The conditional access optimization agent collaborates with Microsoft Entra to identify unsafe user access rules and generate one-click remediation plans for administrators. The vulnerability remediation agent integrates with the device management tool Intune to help quickly locate vulnerable endpoints and apply operating system patches. The threat intelligence briefing agent generates cybersecurity threat reports on potential threats to organizational systems.

03 Wu Xiang: An L4-Level Advanced Intelligent Agent as Escort

Coincidentally, in China, to achieve a truly "autonomous driving" level of security protection, QingTeng Cloud Security has launched a full-stack security intelligent agent called "Wu Xiang." As the world's first security AI product to transition from "assisted AI" to "autonomous agent" (Autopilot), its core breakthrough lies in overturning the traditional tool's "passive response" model, making it autonomous, automatic, and intelligent.

By integrating machine learning, knowledge graphs, and automated decision-making technologies, "Wu Xiang" can independently complete the entire process from threat detection and impact assessment to response and disposal, achieving true autonomous decision-making and goal-driven actions. Its "Agentic AI architecture" design simulates the collaborative logic of human security teams: the "brain" integrates a cybersecurity knowledge base to support planning capabilities, the "eyes" finely perceive the dynamics of the network environment, and the "hands and feet" flexibly invoke a diverse security toolchain, forming an efficient judgment network through multi-agent collaboration, division of labor, and information sharing.

In terms of technical implementation, "Wu Xiang" adopts the "ReAct model" (a cycle of acting, observing, thinking, and acting again) and a "Plan AI + Action AI dual-engine architecture," which allow it to correct course dynamically during complex tasks. When a tool invocation fails, the system can autonomously switch to a backup plan instead of interrupting the process. For example, in APT attack analysis, Plan AI acts as the "organizer" that breaks down task objectives, while Action AI serves as the "investigation expert" that executes log analysis and threat modeling; both advance in parallel on top of a knowledge graph shared in real time.
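The fallback behavior described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not QingTeng's implementation: the "think" step is reduced to a fixed plan, the steps run sequentially rather than in parallel, and every tool name (`query_host_logs`, `query_log_archive`, `lookup_threat_intel`) is hypothetical.

```python
from typing import Callable, Dict, List

Tool = Callable[[str], str]


def query_host_logs(target: str) -> str:
    """Primary tool; raises to simulate an abnormal invocation."""
    raise TimeoutError("log service unavailable")


def query_log_archive(target: str) -> str:
    """Backup tool used when the primary one fails."""
    return f"archive: write event found for {target}"


def lookup_threat_intel(target: str) -> str:
    """Second step of the plan; always succeeds in this sketch."""
    return f"intel: no known campaign references {target}"


# Each step maps to an ordered list of tools: primary first, then backups.
TOOLCHAIN: Dict[str, List[Tool]] = {
    "analyze_logs": [query_host_logs, query_log_archive],
    "check_intel": [lookup_threat_intel],
}


def plan(objective: str) -> List[str]:
    """Plan AI stand-in: break the objective into ordered steps."""
    return ["analyze_logs", "check_intel"]


def act(step: str, target: str) -> str:
    """Action AI stand-in: try each tool in turn until one succeeds."""
    errors = []
    for tool in TOOLCHAIN[step]:
        try:
            return tool(target)
        except Exception as exc:  # switch to the backup instead of aborting
            errors.append(f"{tool.__name__}: {exc}")
    return "failed: " + "; ".join(errors)


def investigate(objective: str, target: str) -> List[str]:
    """Run the loop: act on each step and record what was observed."""
    return [f"{step} -> {act(step, target)}" for step in plan(objective)]
```

Calling `investigate("trace webshell", "one.jsp")` completes both steps even though the primary log tool raises, because `act` falls through to the backup tool rather than stopping the investigation.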

At the functional module level, "Wu Xiang" has built a complete autonomous decision-making ecosystem: the intelligent agent simulates the reflective iterative thinking of a security analyst, dynamically optimizing decision paths; tool invocation integrates host security log queries, network threat intelligence retrieval, and LLM-driven malicious code analysis; environmental awareness captures host assets and network information in real-time; the knowledge graph dynamically stores entity associations to assist decision-making; and multi-agent collaboration executes tasks in parallel through task decomposition and information sharing.

Currently, "Wu Xiang" performs exceptionally well in three core application scenarios: alert assessment, trace analysis, and outputting security reports.

In traditional security operations, verifying the authenticity of massive volumes of alerts is time-consuming and labor-intensive. Take a local privilege escalation alert as an example: the alert assessment agent of "Wu Xiang" automatically analyzes the threat characteristics, invokes tools such as process permission analysis, parent process tracing, and program signature verification, and ultimately determines that the alert is a false positive, with no human intervention at any point. In QingTeng's tests on existing alerts, the system has achieved 100% alert coverage and a 99.99% assessment accuracy rate, reducing manual workload by over 95%.
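To make the three checks concrete, here is a minimal rule-based sketch in Python. It only illustrates how evidence from permission analysis, parent process tracing, and signature verification could be combined into a verdict; the actual agent reasons with a large model, and the `Alert` fields, the `TRUSTED_PARENTS` allowlist, and the decision rule are all assumptions made for this example.

```python
from typing import List, NamedTuple, Tuple


class Alert(NamedTuple):
    process: str
    runs_as_root: bool
    parent_process: str
    signature_valid: bool


# Hypothetical allowlist of parents that legitimately spawn elevated processes.
TRUSTED_PARENTS = {"systemd", "sshd", "sudo"}


def assess(alert: Alert) -> Tuple[str, List[str]]:
    """Return a verdict plus the evidence that led to it."""
    evidence = []
    suspicious = 0

    if alert.runs_as_root:
        evidence.append("process holds root privileges")
    if alert.parent_process not in TRUSTED_PARENTS:
        suspicious += 1
        evidence.append(f"unexpected parent: {alert.parent_process}")
    if not alert.signature_valid:
        suspicious += 1
        evidence.append("program signature failed verification")

    # Escalate only when an elevated process also fails a trust check.
    if alert.runs_as_root and suspicious > 0:
        return "true_positive", evidence
    return "false_positive", evidence
```

Returning the evidence list alongside the verdict keeps the decision explainable, so an analyst can see why an alert was dismissed.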

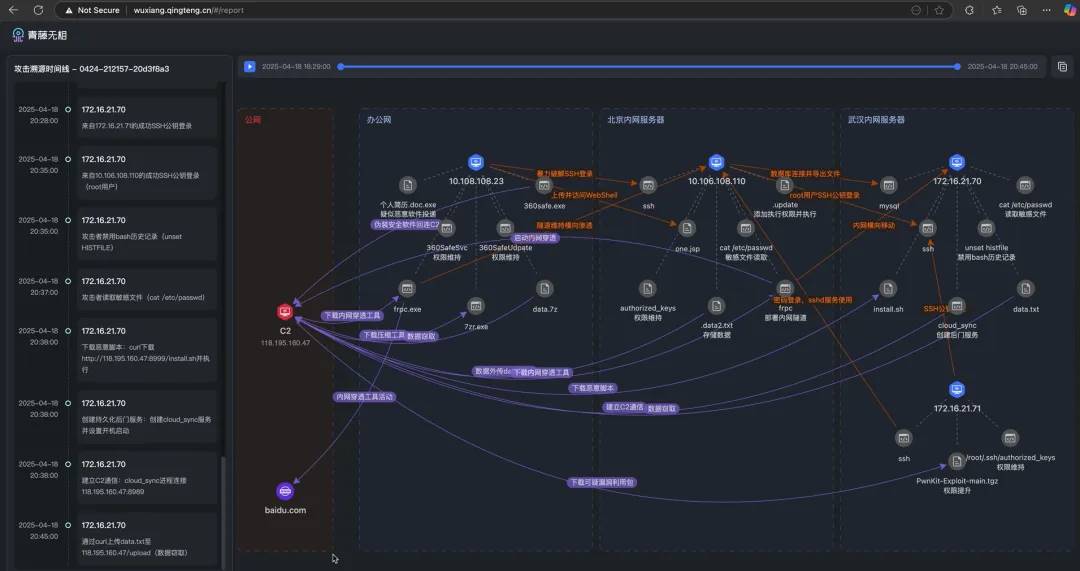

In the face of real threats like Webshell attacks, the intelligent agent confirms attack validity in seconds through cross-dimensional associations such as code feature extraction and file permission analysis. Traditional deep tracing, which requires collaboration among multiple departments and takes days (such as propagation path restoration and lateral impact assessment), is now automatically linked by the system through host logs, network traffic, and behavioral baselines, generating a complete attack chain report and compressing the response cycle from "days" to "minutes."

"Our core is to reverse the cooperative relationship between AI and humans, allowing AI to collaborate as if it were a person, achieving a leap from L2 to L4, that is, from assisted driving to high-level autonomous driving," shared Hu Jun, co-founder and VP of products at QingTeng. "As AI adapts to more scenarios, the success rate of decision-making increases, gradually taking on more responsibilities, thus changing the division of responsibilities between humans and AI."

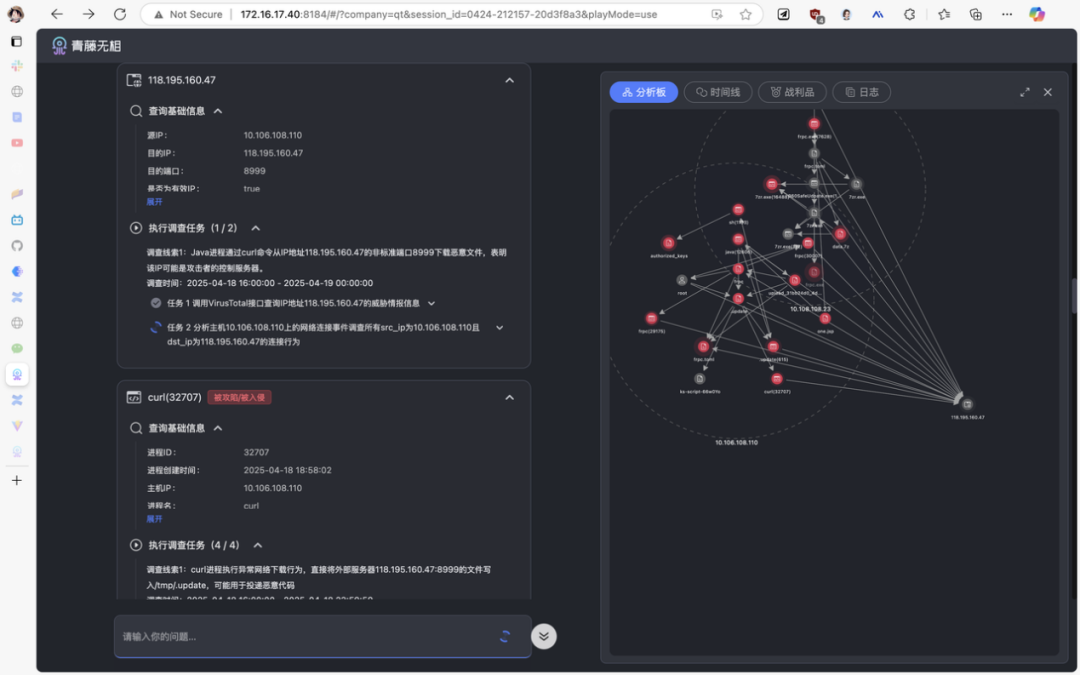

In the trace analysis scenario, a Webshell alert first triggers the multi-agent security team driven by "Wu Xiang" to collaborate on tracing: the "assessment expert" locates the one.jsp file based on the alert and generates parallel tasks such as file content analysis, author tracing, directory checks, and process tracking. The "security investigator" agent calls file log tools to quickly identify the java (12606) process as the source of the write operation. That process and the associated host 10.108.108.23 (discovered through access logs showing high-frequency interactions) are then added to the investigation.

The agent dynamically expands clues through a threat graph, digging deeper from a single file to processes and hosts, while the assessment expert summarizes the task results to comprehensively assess the risk. This process compresses what would take humans hours to days into just a few minutes, restoring the entire attack chain with precision that surpasses that of human senior security experts, tracking lateral movement paths without any blind spots. Red team assessments also indicate that it is difficult to evade its thorough investigation.
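Expanding clues from a file to processes and hosts is, at its core, a graph traversal. The sketch below uses a breadth-first walk over a tiny hand-written graph built from the entities named in the example above; the `THREAT_GRAPH` structure, the `file:`/`process:`/`host:` prefixes, and the `expand_clues` function are illustrative assumptions, not the product's data model.

```python
from collections import deque
from typing import Dict, List

# Illustrative edges only: each entity maps to entities that evidence links to it.
THREAT_GRAPH: Dict[str, List[str]] = {
    "file:one.jsp": ["process:java(12606)"],
    "process:java(12606)": ["host:10.108.108.23"],
    "host:10.108.108.23": [],
}


def expand_clues(start: str, graph: Dict[str, List[str]]) -> List[str]:
    """Breadth-first walk from the alerted entity to every linked entity."""
    seen = {start}
    order = [start]
    queue = deque([start])
    while queue:
        entity = queue.popleft()
        for neighbor in graph.get(entity, []):
            if neighbor not in seen:  # avoid revisiting in cyclic graphs
                seen.add(neighbor)
                order.append(neighbor)
                queue.append(neighbor)
    return order
```

Starting from `"file:one.jsp"`, the walk visits the file, then the process, then the host. The `seen` set is what lets the traversal cover every branch exhaustively without looping when entities reference each other.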

"The large model is better than humans because it can thoroughly investigate every nook and cranny, rather than excluding low-probability situations based on experience," Hu Jun explained, "This means it excels in both breadth and depth."

After the investigation of a complex attack scenario is complete, organizing the alerts and investigation clues and writing the report often takes considerable time and effort. AI, however, can summarize everything in one click and present the attack process as a visual timeline that plays through the key nodes like a movie. The system automatically organizes key evidence into key frames of the attack chain and combines them with contextual information to produce a dynamic attack chain map, presenting the entire attack trajectory in an intuitive, three-dimensional manner.
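The first step of such a report, ordering the evidence in time, can be sketched as follows. This is a minimal illustration that assumes each piece of evidence carries an ISO 8601 timestamp; the `Event` type and `build_timeline` function are hypothetical, and any timestamps passed in would come from the investigation, not from this article.

```python
from datetime import datetime
from typing import List, Tuple

Event = Tuple[str, str]  # (ISO 8601 timestamp, description)


def build_timeline(events: List[Event]) -> List[str]:
    """Sort evidence chronologically and render one line per key node."""
    ordered = sorted(events, key=lambda e: datetime.fromisoformat(e[0]))
    return [f"{ts}  {desc}" for ts, desc in ordered]
```

Parsing the timestamps before sorting, rather than comparing raw strings, keeps the order correct even when the evidence mixes timestamp precisions.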

04 Conclusion

It is evident that the development of AI technology brings dual challenges to cybersecurity.

On one hand, attackers utilize AI to achieve automation, personalization, and concealment of attacks; on the other hand, defenders must accelerate technological innovation to enhance detection and response capabilities through AI. In the future, the AI technology competition between attackers and defenders will determine the overall landscape of cybersecurity, and the improvement of security intelligent agents will be key to balancing risk and development.

The security intelligent agent "Wu Xiang" has brought new changes at both the security architecture and cognitive levels.

"Wu Xiang" essentially changes the way AI is used, with its breakthrough being the fusion of multi-dimensional data perception, protection strategy generation, and decision-making interpretability into an organic whole—transforming from a past model of using AI as a tool to empowering AI to work autonomously and automatically.

By correlating and analyzing heterogeneous data such as logs, text, and traffic, the system can capture traces of APT activities before attackers construct a complete attack chain. More critically, its visualized reasoning explanations of the decision-making process render the traditional tools' "knowing what but not why" black-box alerts a thing of the past—security teams can not only see threats but also understand the evolutionary logic of those threats.

This innovation represents a paradigm shift in security thinking from "repairing the barn after the sheep are lost" to "taking precautions before it rains," redefining the rules of the offense-defense game.

"Wu Xiang" is like a hunter with digital intuition: by real-time modeling of memory operations and other micro-behavioral features, it can extract lurking custom trojans from massive noise; the dynamic attack surface management engine continuously assesses asset risk weights, ensuring that protective resources are accurately directed at critical systems; and the intelligent digestion mechanism of threat intelligence transforms tens of thousands of alerts per day into actionable defense instructions, even predicting the evolutionary direction of attack variants—while traditional solutions are still struggling to respond to ongoing intrusions, "Wu Xiang" is already preemptively blocking the attackers' next moves.

"The birth of the AI intelligence hub system (high-level security intelligent agent) will completely reshape the landscape of cybersecurity. All we need to do is seize this opportunity," Zhang Fu stated.
