1. Preface

The goal of this report is to provide an in-depth yet easy-to-understand overview of the AI industry for general readers. Through this report, we hope to help readers understand the technological evolution, market dynamics, application scenarios, and future development trends of AI. The report also explores the combination of AI and Web3, analyzing the potential opportunities and challenges in this emerging field. The content is for industry learning and communication purposes only and does not constitute investment advice.

2. AI Concepts, Historical Changes, and Technological Development

2.1 What is AI?

Artificial Intelligence (AI) refers to the ability of computer systems to simulate human intelligent behavior. AI systems can perceive their environment, learn from experience, reason to make decisions, process natural language, and, in some cases, optimize themselves.

The core goal of AI is to give computers perceptual ability, cognitive ability, creativity, and intelligence. In simple terms, the aim is for computers to think and act as humans do, and to reason and make decisions rationally.

The application scope of AI is wide-ranging, including but not limited to natural language processing, computer vision, speech recognition, robot control, autonomous driving, medical diagnosis, and other fields. With the development of technology, AI is gradually becoming one of the key driving forces for economic growth, social change, and scientific progress.

2.2 History and Revolution of AI

The development of Artificial Intelligence (AI) spans more than 70 years. From initial theoretical exploration to widespread application, AI has gone through multiple stages of setbacks and breakthroughs.

2.2.1 Origin and Early Development of AI (1940s-1970s)

The origin of AI can be traced back to the mid-20th century, especially in the 1950s, when mathematicians and computer scientists began discussing the concept of "intelligent machines." The AI research during this period mostly revolved around symbolic logic, reasoning, and problem-solving, laying the foundation for artificial intelligence.

- Turing Test: Alan Turing, one of the pioneers of AI theory, first discussed in his 1950 paper "Computing Machinery and Intelligence" whether machines could exhibit human-like intelligence. The Turing Test was thus born to judge whether machines could simulate human intelligence through natural language conversation. This idea laid the theoretical foundation for the development of AI.

- Symbolic AI: AI research in the 1950s mainly focused on logical reasoning and symbolic processing. AI systems during this period attempted to simulate human thought processes through rules and symbols (such as IF-THEN statements). This type of AI was called "Symbolic AI" or "GOFAI" (Good Old-Fashioned AI), and its representative achievements included the Logic Theorist and the General Problem Solver.

- Dartmouth Conference: The term "Artificial Intelligence" was officially proposed at the Dartmouth Conference in 1956, outlining the goals for future AI research. This conference marked the birth of AI as an independent discipline.

2.2.2 From Rules to Learning: Evolution of AI Technology (1970-2000)

As the bottlenecks of early AI technology became apparent, research shifted from rule-based symbolic systems to data-driven learning models. In the 1980s and 1990s in particular, as computing power and data scale improved, Machine Learning gradually became the main technological route in the field of AI.

- Expert Systems (1970s-1980s): In the 1970s, Expert Systems became a hot topic in AI research. These systems used encoded domain expert knowledge (i.e., a "rule base") for reasoning and decision-making, mainly in medical diagnosis, engineering design, and other fields. Representative systems include MYCIN (used for medical diagnosis) and DENDRAL (used for chemical analysis). Expert Systems demonstrated the potential application of AI in specific fields, but their development was limited by the scale and maintenance cost of rule bases: as the number of rules grew, early rule-based systems became increasingly difficult to extend and keep consistent.

- Revival of Neural Networks (1980s): Neural networks received renewed attention with the support of multilayer perceptrons (MLP) and backpropagation algorithms. Backpropagation enabled neural networks to effectively adjust weights, overcoming previous technological bottlenecks.

- Rise of Machine Learning: With the improvement of computer performance, from the late 1980s to the 1990s, AI research shifted from rule-based systems to statistical and data-driven machine learning models. Unlike traditional AI, which relied on explicit rules, machine learning generated rules by "learning" from large amounts of data. This technological shift marked AI's development towards a more flexible and powerful direction.
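
The weight adjustment that backpropagation performs, as described in the list above, can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the 2-3-1 network shape, the sigmoid activation, the squared-error loss, the XOR dataset, and the function name `train_xor` are all assumptions chosen to keep the example small.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def train_xor(hidden=3, epochs=2000, lr=0.5, seed=0):
    """Train a tiny MLP on XOR with backpropagation; return (first_loss, last_loss)."""
    rng = random.Random(seed)
    data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
    # w1[j] = [weight from x0, weight from x1, bias] for hidden unit j (assumed layout)
    w1 = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(hidden)]
    # w2 = hidden-to-output weights followed by one output bias
    w2 = [rng.uniform(-1, 1) for _ in range(hidden + 1)]
    losses = []
    for _ in range(epochs):
        g1 = [[0.0] * 3 for _ in range(hidden)]
        g2 = [0.0] * (hidden + 1)
        loss = 0.0
        for (x0, x1), y in data:
            # Forward pass: inputs -> hidden activations -> output
            h = [sigmoid(w[0] * x0 + w[1] * x1 + w[2]) for w in w1]
            out = sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + w2[hidden])
            loss += 0.5 * (out - y) ** 2
            # Backward pass: push the output error back through each layer
            d_out = (out - y) * out * (1 - out)
            for j in range(hidden):
                g2[j] += d_out * h[j]
                d_h = d_out * w2[j] * h[j] * (1 - h[j])
                g1[j][0] += d_h * x0
                g1[j][1] += d_h * x1
                g1[j][2] += d_h
            g2[hidden] += d_out
        # Gradient descent step: move every weight against its gradient
        for j in range(hidden):
            w2[j] -= lr * g2[j]
            for k in range(3):
                w1[j][k] -= lr * g1[j][k]
        w2[hidden] -= lr * g2[hidden]
        losses.append(loss)
    return losses[0], losses[-1]


first_loss, last_loss = train_xor()
print(f"loss before training: {first_loss:.4f}, after: {last_loss:.4f}")
```

No rule for XOR is written anywhere in the code; the network's behavior comes entirely from repeated weight updates driven by the error on the examples, which is the contrast with rule-based systems drawn above.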

2.2.3 Rise of Modern AI (2000-Present)

At the beginning of the 21st century, with the development of big data, cloud computing, and GPUs (graphics processing units), AI technology ushered in a new wave of development. The scale and complexity of deep learning models continued to increase, and AI achieved significant results in various fields. For example, AlphaGo's victories over human Go champions and the performance of GPT-3 in natural language processing marked the rise of modern AI. AI technology gradually moved from the experimental stage to commercial use and is now widely applied in fields such as image recognition, speech recognition, natural language processing, and autonomous driving.

- Driving Force of Big Data and Cloud Computing: Big data and cloud computing jointly propelled the rapid development of AI technology. With the popularity of the internet and social media, the explosive growth of data provided rich training materials for AI models. Massive structured and unstructured data became the foundation for AI training, helping models extract useful features from large datasets, significantly enhancing their performance. At the same time, cloud computing provided powerful distributed computing resources for the development of AI, enabling enterprises and research institutions to efficiently train and deploy models through cloud platforms. Platforms such as AWS, Google Cloud, and Microsoft Azure not only reduced the cost and threshold of AI development but also provided flexible and scalable computing infrastructure, driving the widespread application of AI technology in various industries.

- Breakthrough of Deep Learning: In 2012, Alex Krizhevsky and others proposed the deep convolutional neural network AlexNet, which achieved breakthrough results in the ImageNet image recognition competition, marking the revival of deep learning technology. Deep learning (especially convolutional neural networks CNN and recurrent neural networks RNN) demonstrated powerful capabilities in image recognition, speech recognition, natural language processing, and other fields. Deep learning extracted abstract features from data through multi-layer neural networks, enabling AI to exhibit unprecedented accuracy in handling complex tasks.

- Generative AI and Reinforcement Learning: Generative AI and reinforcement learning are important branches of AI technology, each showing strong application potential in multiple fields. In generative AI, a representative technology is the Generative Adversarial Network (GAN), which trains a generator and a discriminator against each other and can produce highly realistic images, videos, music, and other content. GANs have broad application prospects in art, advertising, medical imaging, and other fields. Reinforcement learning, in which an AI agent interacts with an environment and improves through reward mechanisms, has made significant progress in game AI, robot control, and other areas. In 2016, AlphaGo, which combined deep learning with reinforcement learning, defeated top professional player Lee Sedol in a Go match, demonstrating AI's strong performance in complex tasks.

2.2.4 Future of AI:

- From Specialized AI to Artificial General Intelligence (AGI): Most modern AI systems are specialized AI, capable of performing specific tasks in specific domains (such as image recognition or speech recognition). Artificial General Intelligence (AGI), often described as the ultimate goal of AI research, would be able to learn, reason, and make decisions across different environments and tasks as humans do. If achieved, it could fundamentally change society, reshaping the labor market, scientific research, education models, and social governance.

- Integration of AI with Other Cutting-Edge Technologies: The integration of AI with other cutting-edge technologies such as blockchain, the Internet of Things (IoT), and quantum computing will create new possibilities for various industries and for society. AI is expected to play a key role especially in smart homes, smart cities, and industrial automation.

2.3 Key Technologies Involved in AI:

Currently, the hotspots of AI technology include machine learning, deep learning, natural language processing (such as chatbots and language translation), computer vision (such as facial recognition and autonomous driving), and generative AI (such as text generation and image synthesis). These technologies are constantly evolving, driving deep applications of AI in different fields.

2.3.1 Machine Learning:

Machine learning is a technology that builds models from data and algorithms, extracting patterns for prediction or classification. It relies on training data and on models that range from simple statistical models to complex neural networks, enabling AI to identify patterns, predict outcomes, and improve with experience.

Machine learning is further divided into three categories: supervised learning (training with labeled data, such as image classification), unsupervised learning (finding patterns from unlabeled data, such as clustering), and reinforcement learning (optimizing decisions through interaction with the environment, such as game AI). These three learning methods form the core algorithm foundation of modern AI.
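
The difference between the first two categories can be made concrete with a small sketch. The example below is illustrative only: the one-dimensional data, the labels, and the helper names `nearest_neighbor_predict` and `two_means` are assumptions, and a real system would typically use a library rather than hand-written routines.

```python
def nearest_neighbor_predict(labeled, x):
    """Supervised: answer with the label of the closest labeled example."""
    return min(labeled, key=lambda pair: abs(pair[0] - x))[1]


def two_means(points, iters=10):
    """Unsupervised: split unlabeled 1-D points into two clusters (k-means, k=2).

    Assumes the points contain at least two distinct values.
    """
    c0, c1 = min(points), max(points)  # start the two centers at the extremes
    for _ in range(iters):
        g0 = [p for p in points if abs(p - c0) <= abs(p - c1)]
        g1 = [p for p in points if abs(p - c0) > abs(p - c1)]
        c0 = sum(g0) / len(g0)
        c1 = sum(g1) / len(g1)
    return sorted(g0), sorted(g1)


# Supervised learning: the training data carries labels.
labeled = [(1.0, "small"), (1.2, "small"), (8.0, "large"), (9.1, "large")]
print(nearest_neighbor_predict(labeled, 7.5))  # large

# Unsupervised learning: no labels, the grouping is discovered from the data.
unlabeled = [1.0, 1.2, 0.8, 8.0, 9.1, 8.7]
print(two_means(unlabeled))  # ([0.8, 1.0, 1.2], [8.0, 8.7, 9.1])
```

In the supervised case the answer vocabulary ("small", "large") is supplied by the labels; in the unsupervised case the algorithm only reports that two groups exist, and naming them is left to a person.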

2.3.2 Deep Learning:

Deep learning is a machine learning technology based on multi-layer neural networks. Its main feature is that the model learns feature representations directly from data during training, rather than relying on manually designed features.

2.3.3 Natural Language Processing (NLP):

NLP is the technology that enables computers to understand, process, and generate human language, achieving semantic understanding and response through text or speech analysis.

2.3.4 Computer Vision:

Computer vision enables machines to "understand" images or videos through computer algorithms, automatically extracting information from visual data.

2.3.5 Reinforcement Learning:

Reinforcement learning is a technology that optimizes decisions through interaction with the environment to receive feedback (reward or punishment).
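
A very small instance of this feedback loop is the multi-armed bandit, sketched below with an epsilon-greedy strategy. This is a simplified illustration under stated assumptions: the environment is two hypothetical slot-machine "arms" with fixed win probabilities, and the function name `run_bandit` and all parameter values are invented for the example.

```python
import random


def run_bandit(win_probs, steps=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent; returns (estimated value per arm, pull count per arm)."""
    rng = random.Random(seed)
    counts = [0] * len(win_probs)
    values = [0.0] * len(win_probs)
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: try a random action
            arm = rng.randrange(len(win_probs))
        else:
            # Exploit: take the action with the best estimate so far
            arm = max(range(len(win_probs)), key=lambda a: values[a])
        # The environment answers with a reward (1) or no reward (0)
        reward = 1.0 if rng.random() < win_probs[arm] else 0.0
        counts[arm] += 1
        # Incremental average: nudge the estimate toward the observed reward
        values[arm] += (reward - values[arm]) / counts[arm]
    return values, counts


values, counts = run_bandit([0.2, 0.8])
print("estimated values:", [round(v, 2) for v in values])
print("pull counts:", counts)
```

The agent is never told which arm is better. It discovers this from rewards alone and gradually concentrates its choices on the arm with the higher payoff, which is the core idea that larger systems such as game-playing agents build on.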

2.3.6 Generative AI:

Generative AI refers to machine learning models that generate new content similar to their training data, such as images, text, or videos.

2.3.7 Big Data and Data Processing:

Big data technology is used for processing and analyzing large amounts of data, especially in AI for tasks such as data preprocessing, feature extraction, and model training.

2.3.8 AI Hardware Acceleration (GPU/TPU/NPU):

AI hardware acceleration technology uses specialized hardware (such as GPU, TPU, NPU) to accelerate neural network training and inference processes.

3. Market, Application Scenarios, and Business Models of AI

3.1 Market Size of the AI Industry:

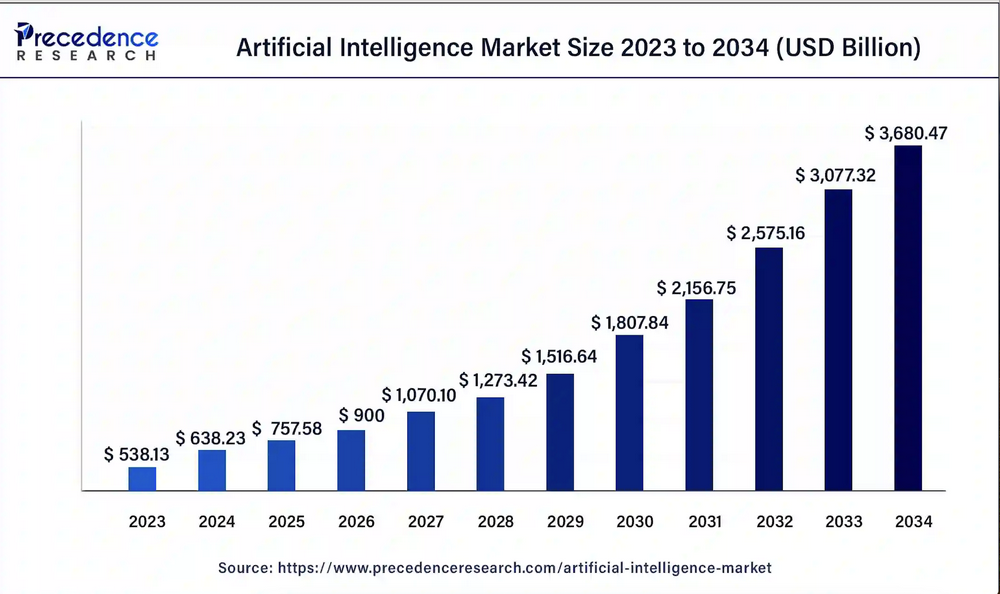

The global artificial intelligence (AI) market is expanding rapidly, especially since the release of ChatGPT. The global AI market size for 2023 is estimated at between $300 billion and $400 billion.

According to Precedence Research's forecast, the global AI market size will be $638.23 billion in 2024 and is projected to reach $3,680.47 billion by 2034, with a CAGR of 19.1%, highlighting the enormous potential and sustained strong development in this field.
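
The compound annual growth rate (CAGR) in this forecast can be checked directly from the two endpoint figures. The sketch below assumes the standard CAGR formula and a 10-year span (2024 to 2034); the function names `cagr` and `project` are illustrative.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value, rate, years):
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1 + rate) ** years


# Figures in billions of US dollars, taken from the forecast quoted above.
rate = cagr(638.23, 3680.47, 10)
print(f"implied CAGR: {rate:.2%}")
print(f"2034 value at that rate: {project(638.23, rate, 10):,.2f}")
```

The implied rate comes out at roughly 19%, consistent with the 19.1% cited, and it means the market would grow to nearly six times its 2024 size over the decade.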

Factors driving this growth include the increasing demand from enterprises for automation and data-driven decision-making, government investment and support for AI technology, and the continuous maturation and widespread application of AI technology (expanding from traditional internet industries to various fields such as finance, healthcare, education, and manufacturing).

3.2 Application Scenarios of AI

Building on several key capabilities (image recognition, speech recognition, natural language processing, and embodied intelligence), AI technology is applied in vertical fields such as healthcare (AI diagnostic tools), finance (risk assessment and algorithmic trading), retail (recommendation systems), and manufacturing (smart factories). In each field it is used to solve industry-specific problems, improve operational efficiency, and create new business models.

- Healthcare: The application of AI in healthcare is gradually maturing and expanding to multiple aspects, including diagnosis, personalized treatment, drug development, and health management. AI analyzes large amounts of medical data (such as medical records, genetic sequences, imaging data) to assist doctors in early disease diagnosis, precise treatment decisions, and accelerate the drug development process. For example, AI tools in radiology can help identify early signs of cancer, and AI-driven genetic analysis can provide personalized treatment plans for patients. The application of AI in healthcare not only improves diagnostic accuracy and treatment efficiency but also significantly reduces medical costs, especially in resource-constrained environments, where AI technology can greatly improve the accessibility of medical services.

- Finance: In the financial industry, AI is widely used in risk management, algorithmic trading, customer service, and fraud detection. AI analyzes massive market data and historical transaction records to predict market trends in real-time and execute high-frequency trading strategies, thereby increasing investment returns and market efficiency. Additionally, AI is used to develop intelligent advisory services to help individual investors formulate investment strategies based on their financial situation and risk preferences. AI-driven anti-fraud systems monitor transaction patterns to detect abnormal trading behavior and reduce losses for financial institutions.

- Education: The application of AI in the education sector is changing traditional teaching methods and driving the development of personalized learning. By analyzing students' learning behavior data, AI can customize learning content and paths for each student, helping them learn at a pace that suits their learning speed and comprehension. AI is also used to develop automated homework grading and exam scoring systems, reducing the workload of teachers and providing real-time feedback. Furthermore, AI-driven education platforms can recommend suitable learning resources and courses based on students' performance and interests, enhancing learning effectiveness.

- Retail and E-commerce: In the retail and e-commerce sectors, AI helps businesses increase sales and customer satisfaction through personalized recommendation systems, inventory management optimization, and customer relationship management (CRM). AI analyzes customer shopping behavior and preferences to recommend relevant products and increase sales conversion rates. Additionally, AI-driven chatbots and virtual assistants enhance the shopping experience for consumers, providing them with 24/7 personalized service.

- Supply Chain Management: In supply chain management, AI predicts demand fluctuations, optimizes inventory management, and reduces instances of stockouts or excess inventory.

- Smart Products and Devices: AI technology is widely applied in smart home devices, autonomous vehicles, drones, robots, and other smart products. These products achieve automation and personalized functions through AI, significantly enhancing user experience. For example, AI-driven smart speakers (such as Amazon Echo, Google Home) not only execute voice commands but also learn users' habits to provide more personalized services.

- Autonomous Driving: Autonomous driving technology is a major highlight of AI in smart devices. Through the fusion of deep learning models and sensor data, autonomous driving systems can make real-time decisions in complex road environments, improving driving safety and efficiency.

3.3 AI Business Models

AI business models are diverse, including Software as a Service (SaaS), data analysis services, AI-driven products (such as smart devices), etc. Companies earn revenue by providing AI solutions that help customers simplify processes and improve efficiency.

- Software as a Service (SaaS): AI SaaS platforms provide cloud-based AI services, allowing enterprise users to subscribe to these services on demand without the need to develop or maintain AI infrastructure. For example, Google's AI platform, Amazon's AWS AI services, Microsoft Azure's AI tools, OpenAI's ChatGPT, etc., allow users to call these services (including machine learning, natural language processing, computer vision) through APIs and pay based on usage.

- AI Hardware Sales: AI hardware manufacturers such as NVIDIA develop AI-specific chips and earn sales revenue by supplying this computing power to other companies and users. NVIDIA's AI chip customers include cloud service providers (CSPs) such as Microsoft, Amazon, and Google, as well as internet and consumer technology companies such as Meta and Tesla.

- Data Analysis Services: AI data analysis companies provide valuable business insights by analyzing enterprise data, helping optimize business processes and decision-making. For example, companies like Palantir analyze large datasets to help businesses identify patterns, predict market trends, and formulate more effective strategies. These services are typically offered through consulting or project-based fees.

- Smart Devices: AI technology is embedded in various hardware products (such as smart speakers, drones, autonomous vehicles, etc.), enabling these devices to achieve key functions through AI and create unique user experiences. For example, Tesla's autonomous driving system, Amazon's Echo smart speaker, etc., are products empowered by AI technology. These smart devices not only generate revenue through hardware sales but may also generate continuous income through additional services or content subscriptions.

- AI Application Product Services: AI applications built on large models (such as GPT-4 and Codex) target typical application scenarios and are offered to enterprises and individual users on a subscription basis. For example, OpenAI's ChatGPT helps users generate content, write articles, and answer questions; Midjourney lets artists and designers generate artistic images in different styles; Runway offers AI video editing capabilities, allowing users to automatically generate video clips, apply style transfers, and perform rapid editing; and DoNotPay provides automated legal services, helping users handle simple legal matters such as appealing parking tickets and applying for refunds, lowering the barriers to legal services.

4. Industry Chain Map and Typical Companies in AI

4.1 Roles in the AI Industry

The main players driving AI development include large hardware companies (NVIDIA), large technology companies (such as Google, Microsoft, Amazon), and a series of AI startups. These companies are leading in data processing capabilities, algorithm development, and market applications, driving the development of the entire AI ecosystem.

- Hardware Companies: Hardware manufacturers such as NVIDIA supply GPUs and AI chips. These chips accelerate the training and inference of deep neural networks, providing the computational foundation for AI.

- Tech Giants: Companies like Google, Microsoft, and Amazon have invested heavily in the field of AI. They have not only developed powerful AI platforms but also actively invested in AI startups and expanded their AI ecosystem through acquisitions. These companies have rich data, powerful computing resources, and top talent, enabling them to lead the development direction of AI technology.

- AI Startups: AI startups (such as OpenAI, Nuro, Vicarious, etc.) often focus on innovation in specific areas such as medical AI, autonomous driving AI, and financial AI. These companies have flexibility and innovation, enabling them to quickly respond to market demands and develop competitive products and services. Startups typically obtain funding through venture capital and achieve rapid growth in a short period, becoming significant forces in the market.

- Academic Institutions and Research Organizations: Universities and research institutions worldwide (such as MIT, DeepMind, BAIR, etc.) are also important forces in the development of AI technology. They conduct cutting-edge research, cultivate a large number of professionals in the AI field, and promote the dissemination of knowledge and the popularization of technology through open-source code and academic publications.

4.2 AI Industry Chain Map

The AI industry chain spans from upstream hardware providers (such as chip manufacturers) to midstream software development and platform provision, and then to downstream application scenarios, forming a vast and complex ecosystem. Each link has multiple key participants, who collectively drive the advancement and widespread application of AI technology.

4.2.1 Upstream: Infrastructure Layer

The upstream part includes hardware manufacturers and cloud service providers.

- Hardware Manufacturers: They provide the hardware support required for AI computing, including CPUs, GPUs, TPUs, and dedicated AI accelerators. Players in this layer include NVIDIA, AMD, Intel, and companies developing their own dedicated AI chips (such as Tesla with its FSD chip).

- Cloud Service Providers: Companies such as Amazon Web Services (AWS), Google Cloud, and Microsoft Azure provide large-scale cloud-based computing resources and AI development platforms, supporting enterprises in the development, training, and deployment of AI models. The popularity of cloud services lowers the threshold for AI development, enabling small and medium-sized enterprises to utilize AI technology.

4.2.2 Midstream: Platform and Tools Layer

The midstream part includes AI model development companies, software development platforms, data services, and management tools. This layer provides algorithm, platform, and data support for the entire ecosystem, driving the popularization and practical application of AI technology.

- AI Model Development Companies: These companies focus on developing and training large AI models, providing basic algorithms and models for enterprises and developers to use. They drive cutting-edge research in artificial intelligence and commercialize their achievements through APIs or platforms. Representative companies include OpenAI, Google (including Google DeepMind), Anthropic, and Cohere. Well-known language models from this group include OpenAI's GPT series and Google's BERT, which are used for tasks such as natural language processing and generative AI.

- AI Software Development Platforms: These platforms provide tools for developers to build, train, and deploy AI models. They offer flexible frameworks that allow developers to easily develop and deploy AI models. These platforms not only support high-performance model training but also integrate with hardware accelerators (such as GPUs, TPUs) to enhance model training efficiency. Representative open-source platforms include TensorFlow, PyTorch, Keras, Hugging Face, etc., supporting developers in creating and training various deep learning models for academic research and commercial applications.

- Data Services and Management Tools: Data is the core of AI model training, and enterprises require a large amount of data to train AI models. Data services and management tools help enterprises efficiently manage and process large-scale data. Data service companies such as Snowflake and Databricks provide big data processing and analysis tools to help enterprises manage structured and unstructured data. Additionally, data labeling service companies (such as Scale AI) provide high-quality training data for AI models, ensuring model accuracy and reliability.

4.2.3 Downstream: Application Scenarios and Service Layer

The downstream part includes the practical application of AI in various industries, intelligent products and services based on AI technology, and service companies providing consulting and operational maintenance for the implementation of AI technology.

- Vertical Domain AI Applications: AI technology is applied in various vertical domains such as healthcare, finance, retail, and manufacturing, bringing customized solutions to different industries. For example, in the healthcare sector, AI diagnostic tools such as IBM Watson Health and Zebra Medical Vision help doctors diagnose diseases faster and more accurately by analyzing medical images and electronic health records. In the finance sector, AI is applied in risk assessment, fraud detection, and algorithmic trading, with typical cases including Kensho and Darktrace, which use AI to enhance the efficiency of financial data analysis and improve security. In the retail industry, AI-driven recommendation systems such as Amazon's personalized recommendation engine enhance the online shopping experience by analyzing user behavior and preferences. In the manufacturing industry, AI is applied in smart factories to optimize production processes through automated equipment and predictive maintenance. Siemens and GE (with its Predix platform) are representative companies using AI technology to help factories improve production efficiency and reduce operating costs.

- Intelligent Products and Devices: AI technology is widely applied in various intelligent products and devices, driving the development of automation and personalized functions, significantly enhancing user experience. For example, in the smart home sector, AI-driven devices such as Amazon Echo and Google Home not only execute voice commands but also provide personalized services by learning users' daily habits, such as automatically adjusting home lighting and temperature settings. In the autonomous driving sector, companies like Tesla and Waymo rely on AI technology to develop autonomous driving systems, enabling vehicles to navigate roads through cameras, sensors, and deep learning algorithms. In the drone sector, companies like DJI use AI technology to enhance the autonomous flight and target tracking capabilities of drones, widely used in photography, logistics, and infrastructure inspection. In the robotics sector, companies like Boston Dynamics use AI technology to provide perception and decision-making capabilities for robots, enabling them to perform tasks in complex environments such as warehouse automation and hazardous environment operations.

- AI Consulting Services and Operational Maintenance Companies: These companies are responsible for implementing AI technology in enterprises' actual business operations and providing long-term support and optimization. They offer comprehensive services ranging from AI strategic consulting and technical implementation to model maintenance, playing a key role in driving the application and development of AI technology in different industries. Companies such as IBM (Watson) and Accenture provide AI consulting services, helping enterprises formulate AI strategies and implement AI solutions. After AI models and systems are deployed, they require continuous maintenance and optimization, which has given rise to the market for AI operations services (MLOps). Companies such as DataRobot and Algorithmia focus on providing AI model monitoring, maintenance, and optimization services for enterprises.

4.3 Typical AI Companies (Midstream to Upstream)

4.3.1 NVIDIA

NVIDIA, founded in 1993, is a leading global graphics processing unit (GPU) manufacturer, initially known for developing PC gaming graphics cards. Today, NVIDIA not only maintains an industry-leading position in graphics processing but has also made significant breakthroughs in artificial intelligence (AI), high-performance computing (HPC), autonomous driving, data centers, and cloud computing.

Business Areas: NVIDIA plays a crucial role in the field of AI. It provides AI hardware (such as GPUs), the CUDA parallel computing architecture, and software platforms (such as NVIDIA AI and Deep Learning SDK). Its GPUs are widely used in areas such as autonomous driving, data centers, medical AI, and image processing.

- GPU (Graphics Processing Unit): NVIDIA initially gained fame with its GeForce series graphics cards, focusing on gaming, image processing, 3D rendering, and widely used in personal computers, gaming consoles, and workstations. GPUs have now become the core hardware for AI model training and inference, especially in deep learning, where NVIDIA's GPUs are widely applied due to their powerful parallel computing capabilities.

- AI and Machine Learning: NVIDIA's GPUs, together with CUDA (its parallel computing architecture), have become the de facto standard platform in the field of artificial intelligence and deep learning, helping achieve efficient training and inference for large-scale AI models.

- NVIDIA AI Platform: NVIDIA provides software tools (such as NVIDIA AI, NVIDIA TensorRT) to support developers and enterprises in accelerating the development and deployment of AI models.

- NVIDIA DRIVE: NVIDIA has launched the NVIDIA DRIVE platform for autonomous driving, providing a complete solution from perception and decision-making to autonomous driving systems. It has collaborated with multiple car manufacturers to drive the application of autonomous driving technology.

- NVIDIA Jetson Platform: Jetson is an edge AI platform designed for robots and Internet of Things (IoT) devices, supporting local AI processing and applied in smart cities, industrial automation, and smart devices.

Business Model: NVIDIA's business model relies on hardware sales, software platforms, and ecosystem development. NVIDIA profits from GPU hardware sales, mainly categorized into consumer-grade (GeForce series), professional-grade (Quadro series), data center (Tesla series), and AI computing (A100, etc.). It provides AI development and optimization support for developers and enterprises through software tools and platforms (NVIDIA AI, TensorRT, Omniverse), while also generating revenue through software subscriptions and development tools.

It is estimated that NVIDIA has held over 90% of the data center GPU market over the past seven years. Its share was estimated at about 98% in 2023, with major data centers relying heavily on NVIDIA's GPUs for operation and large model training.

4.3.2 OpenAI and ChatGPT

OpenAI is an American AI research organization founded in 2015 by a group that included Sam Altman, Elon Musk, Greg Brockman, and Ilya Sutskever. It is dedicated to developing artificial general intelligence (AGI) that is safe and benefits all of humanity. Initially a non-profit organization, OpenAI later adopted a "capped-profit" structure, attracting investment from major tech companies such as Microsoft. Its goal is to advance AGI through research and technology development while focusing on the safety, ethics, and controllability of AI.

Business Areas: The core business revolves around AI model development, especially large language models (LLMs) and generative AI, widely applied in natural language processing, generative content, and other fields. OpenAI also provides commercial access to AI models through API services.

- GPT: The GPT (Generative Pre-trained Transformer) series models are among its core products, with models like GPT-3 and the latest GPT-4 demonstrating powerful natural language generation capabilities.

- DALL·E: OpenAI's image-generation model, which produces high-quality images from textual descriptions. It has broad application prospects in design, advertising, and creative industries.

- Codex: A code-generation model based on GPT, capable of understanding natural language instructions and generating corresponding code. It has been applied in GitHub Copilot to help developers write code.

- OpenAI API: OpenAI provides commercial API services, allowing developers and enterprises to build applications based on its AI models. Through the API, businesses can easily access models such as GPT, DALL·E, and Codex for various tasks, including natural language processing, content generation, and workflow automation.

Business Model: OpenAI's business model revolves around providing API access to AI models and generating revenue through partnerships with major tech companies.

- OpenAI API: The core business model of OpenAI is to provide access to GPT, DALL·E, Codex, and other models through its API platform. Developers and enterprises can subscribe to these services and use AI models for tasks such as natural language processing, image generation, and automated programming.

- Technology Licensing and Authorization: OpenAI collaborates with other companies to license its technology and models for product integration and application development. Through such licensing, OpenAI expands its technological influence and provides customized AI solutions for enterprises.

OpenAI's technology has had a profound global impact, especially in the fields of AI content generation and automation. Through its open API platform, OpenAI provides AI solutions to thousands of companies, driving innovation in natural language processing, automated content creation, programming, and other fields.

4.3.3 Tesla

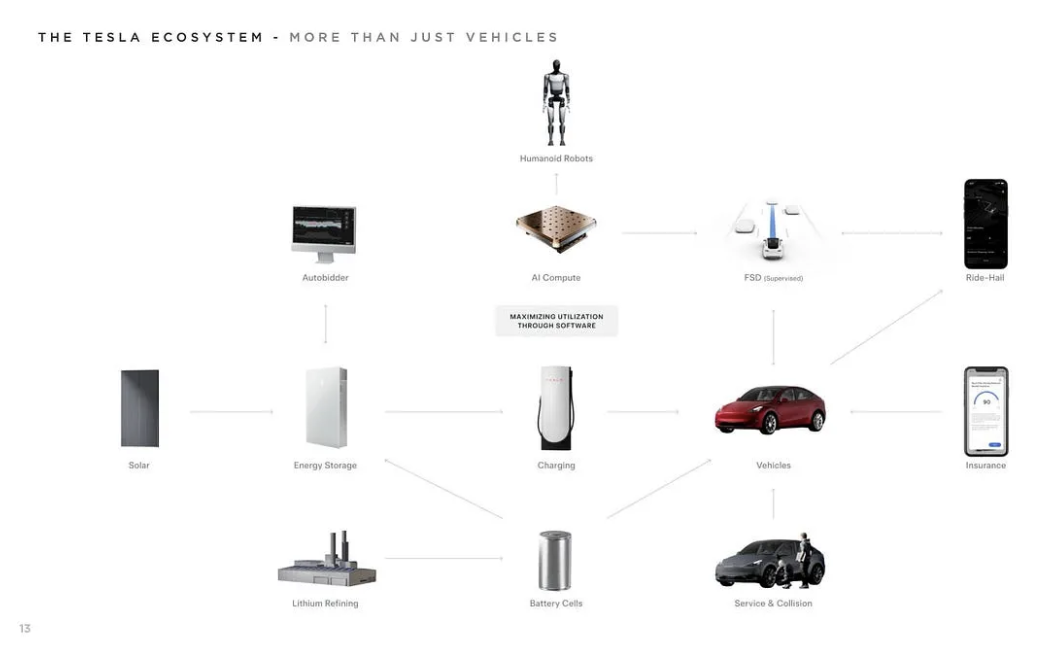

Tesla, founded in 2003, is a globally renowned electric vehicle manufacturer, focusing on the development and production of electric cars, energy storage systems, and solar products. In addition to its electric vehicle business, Tesla is at the forefront of the industry in artificial intelligence (AI) and autonomous driving technology. Its AI-driven autonomous driving system and independently developed AI hardware give it a unique competitive advantage in the automotive industry.

Business Areas: Tesla's business extends beyond electric vehicles to include autonomous driving, energy solutions, and AI hardware development. Tesla has built a robust infrastructure in the field of artificial intelligence, including AI chips (FSD Chip for fully autonomous driving; Dojo Chip for Dojo training), Dojo supercomputer, and AI data centers, providing underlying technological support for autonomous driving and robotics businesses.

- Electric Vehicles: Tesla's core business is the production and sale of electric vehicles, including the Model S, Model 3, Model X, and Model Y. With high performance, long range, and autonomous driving capabilities, they hold a significant position in the global electric vehicle market.

- Full Self-Driving Technology: Tesla's Full Self-Driving (FSD) technology is the core of its AI strategy. It relies on Tesla's self-developed computing platform and large-scale compute to continuously optimize AI models using the driving data the company has accumulated since it began exploring autonomous driving in 2013. In 2019, Tesla introduced a fully autonomous driving computing platform equipped with its self-developed FSD chip. Since FSD's release, vehicles using it have driven more than 1.6 billion miles.

- AI Hardware Development: Tesla has independently developed the Full Self-Driving (FSD) chip, replacing its previous reliance on NVIDIA hardware. The chip is designed specifically to improve the computing capability and efficiency of autonomous driving, and it is a crucial foundation for Tesla's vision of fully autonomous driving. Tesla is also developing a supercomputer named Dojo, dedicated to training deep learning algorithms for autonomous driving systems. By processing massive volumes of visual and sensor data, Dojo is intended to speed up AI model training and help Tesla commercialize FSD more quickly.

- Energy Solutions: Tesla also provides residential and commercial energy storage systems, such as Powerwall, Powerpack, and Megapack, helping users store solar energy and optimize energy usage. By integrating with solar products, Tesla is driving the adoption of clean energy solutions.

- Optimus: Optimus is positioned as a general-purpose bipedal autonomous humanoid robot capable of performing unsafe, repetitive, or mundane tasks to address labor shortages. Tesla plans to deploy Optimus in its own Gigafactories to perform repetitive tasks such as material handling and part assembly. In the future, Tesla aims to bring Optimus into households to assist with household chores such as cooking and cleaning.

- Robotaxi: In April 2024, Musk announced Tesla's plan to officially launch Robotaxi, an autonomous taxi service, in Q3 of that year. Tesla expects the service to disrupt traditional transportation and enable efficient vehicle sharing.

Business Model: Tesla's business model covers multiple dimensions, including electric vehicles, autonomous driving, and energy solutions, profiting from hardware sales and software subscriptions.

- Hardware Sales: Tesla profits from directly selling electric vehicles (Model S, Model X, Model 3, and Model Y) to consumers. Tesla has expanded into the energy market by selling products like Powerwall and Solar Roof, promoting the application of renewable energy technologies.

- Software and Subscription Services: Tesla's Full Self-Driving (FSD) software is sold as a one-time purchase or as a subscription, giving vehicle owners access to advanced autonomous driving features. This model provides Tesla with an additional recurring revenue stream.

- Energy Services: Tesla provides enterprise-level energy storage solutions through Powerpack and Megapack, collaborating with utility companies globally to optimize grid operations and promote the application and storage of renewable energy.

Tesla is a leader in the global electric vehicle market. Its high-performance, long-range, and innovative vehicles account for a significant share of global electric vehicle sales, especially in the US, Europe, and China. Tesla's innovations in autonomous driving, energy solutions, and AI technology have also had a profound impact.

4.3.4 Anthropic

Anthropic is an AI research company founded in 2021, dedicated to developing safe and reliable large-scale AI systems. The company was created by former researchers from OpenAI, aiming to advance AI's safety through more controllable and interpretable AI models. Anthropic focuses on AI ethics, safety, transparency, and fairness, reducing potential social risks while developing powerful AI models.

Business Areas: The core business revolves around the safety, interpretability, and ethics of AI systems, especially large-scale language models (LLMs) and generative AI.

- Large Language Models (LLMs): Anthropic's Claude model series is its representative large language model, similar to OpenAI's GPT model. These models can perform complex natural language understanding and generation, widely applied in dialogue systems, automated writing, and question-answering systems.

- Claude API: Anthropic provides API services based on its Claude model, allowing developers and businesses to integrate its AI models for natural language processing tasks. Through the API, businesses can access automated dialogue, content generation, and data analysis functions using the Claude model.

- Secure AI Solutions: Anthropic offers customized AI solutions to enterprises, especially in industries with high security requirements such as finance, healthcare, and law. Through its security-first AI models, the company helps businesses reduce the risks of AI applications.

Business Model: The business model revolves around AI model development and secure application, providing AI technology support to commercial clients through API services and enterprise solutions.

- API Services: Through its API platform, Anthropic opens its large language model Claude to developers and businesses, providing AI capabilities for natural language processing and generation on a subscription basis. Developers and businesses can pay based on usage to access the AI capabilities of the Claude model and apply them to dialogue systems, automated workflows, content generation, and other business scenarios.

- Customized AI Solutions: Anthropic provides customized AI solutions to enterprises requiring powerful AI capabilities, especially in industries with high security requirements. By offering secure and reliable AI models, the company helps businesses avoid potential risks when applying AI and ensures the transparency and interpretability of AI systems.

- Security and Ethics Consulting: Leveraging its expertise in AI security and ethics, Anthropic also provides AI ethics and security consulting services to businesses and governments, helping them assess and improve the security of existing AI systems and prevent potential risks associated with AI.

Anthropic's technology and research have had a significant impact on the AI community and industry, particularly in driving discussions on AI safety and ethics. Through its Claude model and security-first AI systems, Anthropic is gaining more attention and application from businesses.

4.3.5 Cohere

Cohere, founded in 2019 and headquartered in Canada, is an AI company focused on natural language processing (NLP) technology. Cohere is dedicated to developing powerful language models to help businesses apply AI technology to text understanding, generation, translation, and other natural language processing tasks. Unlike companies like OpenAI and Anthropic, Cohere primarily focuses on enterprise-level NLP solutions, especially by providing flexible and customizable AI models to help businesses effectively utilize natural language processing technology.

Business Areas: The core business revolves around natural language processing (NLP) and generative AI, providing various language models and development tools to drive AI applications in enterprises.

- Natural Language Processing (NLP): Cohere focuses on developing large-scale language models that can understand and generate natural language. They are widely used in tasks such as text classification, sentiment analysis, automatic summarization, and translation, suitable for various industries' text processing needs.

- Generative AI: Cohere's generative AI technology can generate high-quality natural language text for content creation, automated writing, summarization, and data reporting tasks. AI-generated content meets the demand for efficient content generation in industries such as media and marketing.

- API and Development Tools: Cohere provides API services and flexible development tools to help businesses and developers integrate AI technology quickly. Cohere's tools support various programming languages and frameworks, making it easy for development teams of different scales and technical levels to adopt.

- Enterprise Solutions: Cohere not only provides general language models but also customizes development according to enterprise needs, making the models more suitable for specific industry business scenarios. These custom models are widely used in customer support, e-commerce, law, finance, and other fields requiring high-precision language understanding.

Business Model: The business model revolves around API services, customized solutions, and enterprise NLP consulting services, primarily providing advanced NLP tools and support to enterprise clients.

- API Services: Cohere provides natural language processing and generation services through its API platform, allowing developers and businesses to call these APIs for text processing tasks as needed. Cohere adopts a subscription and usage-based billing model, flexibly meeting the needs of enterprises of different scales.

- Customized NLP Solutions: Cohere offers customized NLP solutions to enterprises requiring personalized language processing capabilities. Enterprises can customize models according to industry needs and optimize AI system performance. Especially in industries such as finance, law, and customer service that require high-precision text processing, Cohere's customized models have strong market competitiveness.

- Enterprise Consulting and Technical Support: Cohere provides in-depth NLP consulting services to enterprises, helping them optimize their AI and language processing systems to maximize the use of NLP technology. Cohere also offers training to enterprises and developers, helping them better understand how to use Cohere's APIs and language models to enhance their internal team's AI capabilities.

Cohere's performance in the enterprise-level natural language processing market is remarkable. Through its efficient API services and customized solutions, Cohere has gained the trust of many enterprises and is widely used in multiple industries. Cohere's NLP technology has been applied in fields such as finance, law, healthcare, and customer service, helping businesses automate text processing, data analysis, and customer support tasks, improving operational efficiency.

4.4 AI Applications (Downstream)

In the downstream of the AI industry chain, AI applications are mainly AI solutions tailored to specific industries or enterprise needs. The core goal of these applications is to integrate AI technology into industry workflows and drive the intelligent transformation of those industries. Downstream applications cover a wide range: most are enterprise-level solutions, and some also serve the consumer market.

4.4.1 OpenAI — ChatGPT

ChatGPT is an AI chatbot based on large language models, launched by OpenAI in November 2022. It understands and generates natural language and provides a variety of intelligent services. By the end of January 2023, just two months after launch, ChatGPT had surpassed 100 million monthly active users, reaching that milestone faster than any platform before it.

- Features: ChatGPT uses generative pre-trained models (GPT) to understand and generate natural language text, supporting multi-turn conversations, answering questions, providing suggestions, and generating content, applicable in customer support, writing assistance, and knowledge Q&A.

- AI Technologies: Natural Language Processing (NLP), generative pre-trained models, deep learning.

- Typical Use Cases: Intelligent customer service, content generation, educational support, writing assistance.

4.4.2. Zebra Medical Vision — Medical Image Analysis

Zebra Medical Vision is a company using AI technology for medical image analysis, helping doctors diagnose diseases such as cancer, heart disease, and pneumonia.

- Features: Zebra Medical Vision's AI system automatically identifies potential pathological changes and provides diagnostic suggestions by analyzing medical images such as X-rays, CT scans, and MRIs, helping doctors identify diseases faster and more accurately.

- AI Technologies: Deep learning, computer vision, medical image processing.

- Typical Use Cases: Cancer screening, heart disease detection, lung disease diagnosis.

4.4.3. Zoom — Intelligent Meeting Features

Zoom is a video conferencing platform widely used for remote work, online education, and social interaction. Its video conferencing system provides high-quality remote collaboration experience through cloud computing and AI features such as real-time captions and background blur.

- Features: Zoom uses AI features to provide real-time captions, background blur, noise suppression, and other intelligent meeting services, improving the remote collaboration experience.

- AI Technologies: Natural Language Processing (NLP), machine learning, computer vision.

- Typical Use Cases: Remote meetings, online education, real-time caption generation.

4.4.4. Lemonade — AI-Driven Insurance Claims

Lemonade is a company that optimizes insurance services using AI technology. It simplifies the insurance claims process through AI and chatbots, providing fast and personalized insurance services.

- Features: Lemonade's AI system automatically processes insurance claims requests using natural language processing and machine learning technologies, quickly analyzing customer needs and making claims decisions.

- AI Technologies: Natural Language Processing (NLP), machine learning, automated decision systems.

- Typical Use Cases: Automated insurance claims, risk assessment, customer service.

4.4.5. Alibaba — Intelligent Retail

Alibaba's unmanned supermarket is a fully automated retail model created using artificial intelligence (AI), Internet of Things (IoT), big data, and biometric recognition technology. The core of the unmanned supermarket is to achieve "unmanned" operations through technological means, allowing consumers to complete the shopping process without relying on traditional store staff.

- Features: Alibaba's intelligent retail system uses AI and RFID technology to achieve automatic checkout, inventory management, personalized recommendations, and other functions, allowing consumers to complete shopping without human intervention.

- AI Technologies: Computer vision, Internet of Things (IoT), machine learning.

- Typical Use Cases: Unmanned supermarkets, automated checkout, personalized product recommendations.

4.4.6. Apple Siri — Intelligent Voice Assistant

Siri is Apple's intelligent voice assistant, using natural language processing (NLP) technology to help users complete various tasks such as setting reminders, navigation, and messaging.

- Features: Siri runs on Apple devices and lets users perform operations through voice commands, including making calls, sending messages, setting reminders, navigation, and information queries.

- AI Technologies: NLP, speech recognition, machine learning.

- Typical Use Cases: Voice command execution (making calls, sending messages, setting reminders), navigation, information queries.

4.4.7. Spotify — Music Recommendation System

Spotify uses AI and machine learning algorithms to analyze users' listening habits and provide personalized music recommendations. Through user behavior data, Spotify can predict songs and artists that users may like.

- Features: Spotify's AI-driven music recommendation system analyzes users' listening habits and preferences, providing personalized music recommendations and daily playlists.

- AI Technologies: Collaborative filtering, deep learning, machine learning.

- Typical Use Cases: Personalized music recommendations, generating daily music recommendation playlists, discovering new music.
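Collaborative filtering, listed above among the AI technologies, can be illustrated with a small sketch. The example below is a minimal user-based variant under stated assumptions: the play counts, user names, and song names are hypothetical, and the scoring rule (cosine similarity between users, weighted by play count) is a textbook simplification rather than a description of Spotify's production system.

```python
import math

# Hypothetical play counts: each user maps song names to number of plays.
plays = {
    "ana": {"song_a": 5, "song_b": 3, "song_c": 0},
    "ben": {"song_a": 4, "song_b": 0, "song_c": 0},
    "cara": {"song_a": 0, "song_b": 4, "song_c": 5},
}


def cosine(u: dict, v: dict) -> float:
    """Cosine similarity between two users' play-count vectors."""
    shared = u.keys() & v.keys()
    dot = sum(u[s] * v[s] for s in shared)
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def recommend(user: str, plays: dict, top_n: int = 1) -> list:
    """Rank songs the user has not played by what similar users play."""
    scores = {}
    for other, history in plays.items():
        if other == user:
            continue
        similarity = cosine(plays[user], history)
        for song, count in history.items():
            # Only score songs that are new to this user.
            if plays[user].get(song, 0) == 0 and count > 0:
                scores[song] = scores.get(song, 0.0) + similarity * count
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


print(recommend("ben", plays))
```

Here "ben" has only played "song_a", so the listener most similar to him is "ana", and the song she plays that he has not heard ranks first.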

4.4.8. Grammarly — AI Writing Assistant Tool

Grammarly is an AI-based writing assistant tool that helps users detect spelling, grammar, and writing style errors, and provides improvement suggestions.

- Features: Grammarly analyzes the user's text and provides grammar, spelling, and style improvement suggestions to help improve writing quality.

- AI Technologies: Natural Language Processing, machine learning, text analysis.

- Typical Use Cases: Text proofreading, writing suggestions, grammar and spelling checks.

4.4.9. Replika — AI Chatbot

Replika is an AI chatbot that allows users to engage in personalized conversations and build emotional connections. Replika uses NLP and emotion analysis technology to simulate human conversations, helping users relieve stress and engage in self-reflection.

- Features: Replika's chatbot allows users to build emotional connections through conversations with AI. It mimics human conversation styles, provides emotional support, and helps users engage in self-reflection.

- AI Technologies: NLP, deep learning, emotion analysis.

- Typical Use Cases: Emotional companionship, conversational interaction, self-reflection.

4.4.10. Youper — Emotional Health Assistant

Youper is an AI-driven emotional health application that helps users manage emotions and mental health through emotional diaries and conversation analysis. AI analyzes the user's emotional state and provides suggestions and meditation exercises.

- Features: Helps users manage emotions and mental health through emotional diaries, conversation analysis, and meditation techniques.

- AI Technologies: NLP, emotion analysis, machine learning.

- Typical Use Cases: Emotional diaries, meditation guidance, mental health management.

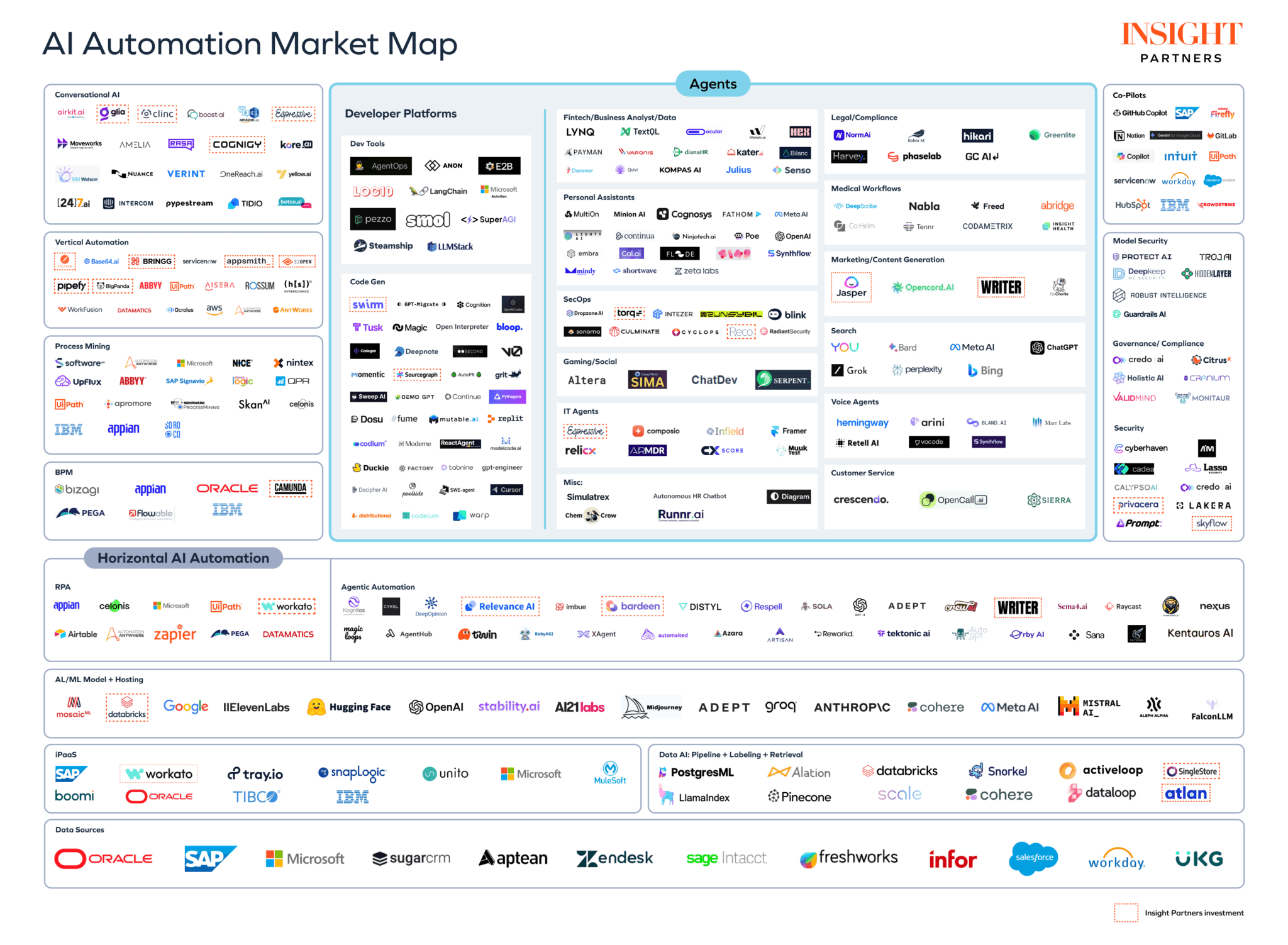

4.5 AI Agent

An AI Agent is a self-contained computing system that can perceive its environment, make decisions, and take actions based on information in that environment. Agents typically have the ability to perceive, reason, learn, and act, and they interact with the environment or with other agents in pursuit of a goal or task. AI Agents range from simple rule-based systems to complex deep learning models and are widely used in automation, robotics, game AI, and other fields.
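The perceive, decide, and act cycle described above can be sketched in a few lines. The example is a minimal rule-based agent, assuming a hypothetical `Thermostat` class and a dictionary standing in for the environment; a learning agent would replace the fixed rule in `decide` with a trained model.

```python
from dataclasses import dataclass


@dataclass
class Thermostat:
    """Hypothetical rule-based agent that keeps a room near a target temperature."""
    target: float
    tolerance: float = 0.5

    def perceive(self, environment: dict) -> float:
        # Perception: read the one signal this agent cares about.
        return environment["temperature"]

    def decide(self, temperature: float) -> str:
        # Reasoning: a fixed rule; a learning agent would use a model here.
        if temperature < self.target - self.tolerance:
            return "heat"
        if temperature > self.target + self.tolerance:
            return "cool"
        return "idle"

    def act(self, action: str, environment: dict) -> None:
        # Action: change the environment the agent will perceive next.
        delta = {"heat": 1.0, "cool": -1.0, "idle": 0.0}[action]
        environment["temperature"] += delta


def run(agent: Thermostat, environment: dict, steps: int) -> list:
    """Run the perceive -> decide -> act loop and return the actions taken."""
    actions = []
    for _ in range(steps):
        action = agent.decide(agent.perceive(environment))
        agent.act(action, environment)
        actions.append(action)
    return actions


room = {"temperature": 18.0}
history = run(Thermostat(target=21.0), room, steps=5)
print(history, room["temperature"])
```

The agent heats for three steps until the room reaches the target, then idles, which shows how each action feeds back into the next perception.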

Many common consumer AI applications, such as Apple's Siri assistant and the ChatGPT chatbot, are in fact AI Agents. They deliver AI products and services directly to ordinary consumers, offering convenient, personalized services and entertainment experiences.

The majority of consumer-facing AI applications on the market are essentially a form of AI Agent. The following image is the AI Agent market map drawn by Insight Partners, covering various companies and industries.

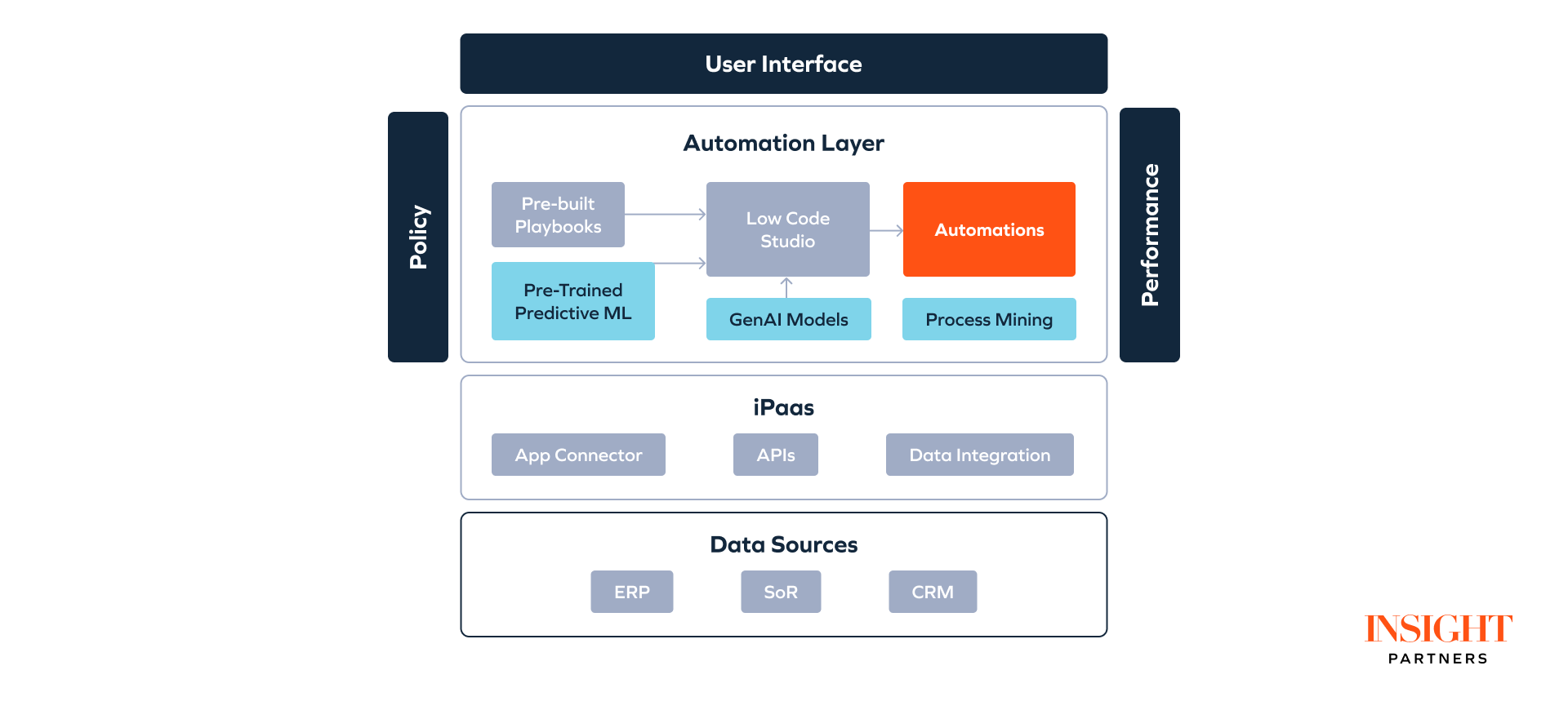

4.5.1 AI Agent Technology Architecture

A typical AI Agent technology architecture consists of four layers: a data layer, an iPaaS (Integration Platform as a Service) layer, an automation layer, and a user interface layer. Together, these layers support the perception, decision-making, action, and interaction capabilities of AI Agents. Each layer plays a distinct role, and the layers collaborate so that AI Agents can handle tasks effectively and interact with their environment.

- Data Layer: The data layer is the foundation of the AI Agent technology architecture, responsible for collecting, storing, and managing various types of data. These data come from different input sources, including sensors, user interactions, historical records, and external systems. AI Agents rely on this data for perception, analysis, and learning to make effective decisions.

- iPaaS Layer: iPaaS (Integration Platform as a Service) is an integration platform responsible for connecting internal and external data sources, applications, and services to ensure smooth collaboration between different parts of the system. Through the iPaaS platform, external APIs are managed and called to ensure that AI Agents can access and utilize external services (such as third-party AI models and external data services). iPaaS is the "central nervous system" of AI Agents, enabling different systems to interoperate and ensuring smooth connectivity of data, functions, and services.

- Automation Layer: The automation layer is the core of AI Agents, responsible for executing AI model inference, decision-making, and automated task execution. It is the primary mechanism that enables AI Agents to perceive, make decisions, and take action. This layer achieves intelligent operations through machine learning, deep learning, and automated process management.

- User Interface (UI) Layer: The user interface layer is the bridge for interaction between AI Agents and users. It allows users to communicate with AI Agents through intuitive graphical interfaces or voice interactions, issue commands, or receive feedback. A good user interface can greatly enhance the user experience, making AI Agent operations smoother and more efficient.

A typical AI Agent's technology architecture collects and manages data through the data layer, ensures the integration of various systems and services through the iPaaS layer, executes AI model inference and automated task processing through the automation layer, and interacts with users through the user interface layer. The four layers work closely together to enable AI Agents to perceive the environment, make decisions, and execute tasks, achieving efficient integration of intelligent operations and human-machine interaction.
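The four-layer structure described above can be sketched as four small classes, each calling only the layer beneath it. This is an illustrative sketch, not a reference implementation: all class names, the in-memory storage, the keyword-based routing rule, and the stubbed "weather" and "echo" services are assumptions made for the example.

```python
from typing import Callable


class DataLayer:
    """Collects and stores records from inputs (here, an in-memory list)."""

    def __init__(self) -> None:
        self.records: list = []

    def store(self, record: str) -> None:
        self.records.append(record)


class IntegrationLayer:
    """Stands in for iPaaS: a registry that routes calls to external services."""

    def __init__(self) -> None:
        self.services: dict = {}

    def register(self, name: str, service: Callable[[str], str]) -> None:
        self.services[name] = service

    def call(self, name: str, payload: str) -> str:
        return self.services[name](payload)


class AutomationLayer:
    """Decides which service handles a request, then executes the task."""

    def __init__(self, data: DataLayer, integration: IntegrationLayer) -> None:
        self.data = data
        self.integration = integration

    def handle(self, request: str) -> str:
        self.data.store(request)  # keep the request history for later analysis
        # Placeholder decision rule; a real agent would run model inference here.
        service = "weather" if "weather" in request.lower() else "echo"
        return self.integration.call(service, request)


class UserInterface:
    """Text front end: passes user input down and formats the reply."""

    def __init__(self, automation: AutomationLayer) -> None:
        self.automation = automation

    def ask(self, text: str) -> str:
        return f"Agent: {self.automation.handle(text)}"


data = DataLayer()
integration = IntegrationLayer()
integration.register("weather", lambda _: "Sunny, 22 C (stubbed response)")
integration.register("echo", lambda text: f"You said: {text}")
ui = UserInterface(AutomationLayer(data, integration))

print(ui.ask("What is the weather today?"))
print(ui.ask("Hello"))
```

Keeping external services behind the integration layer means a third-party model or data source can be swapped by changing one `register` call, without touching the automation or interface code.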

4.5.2 Top 100 AI Agent Consumer Applications

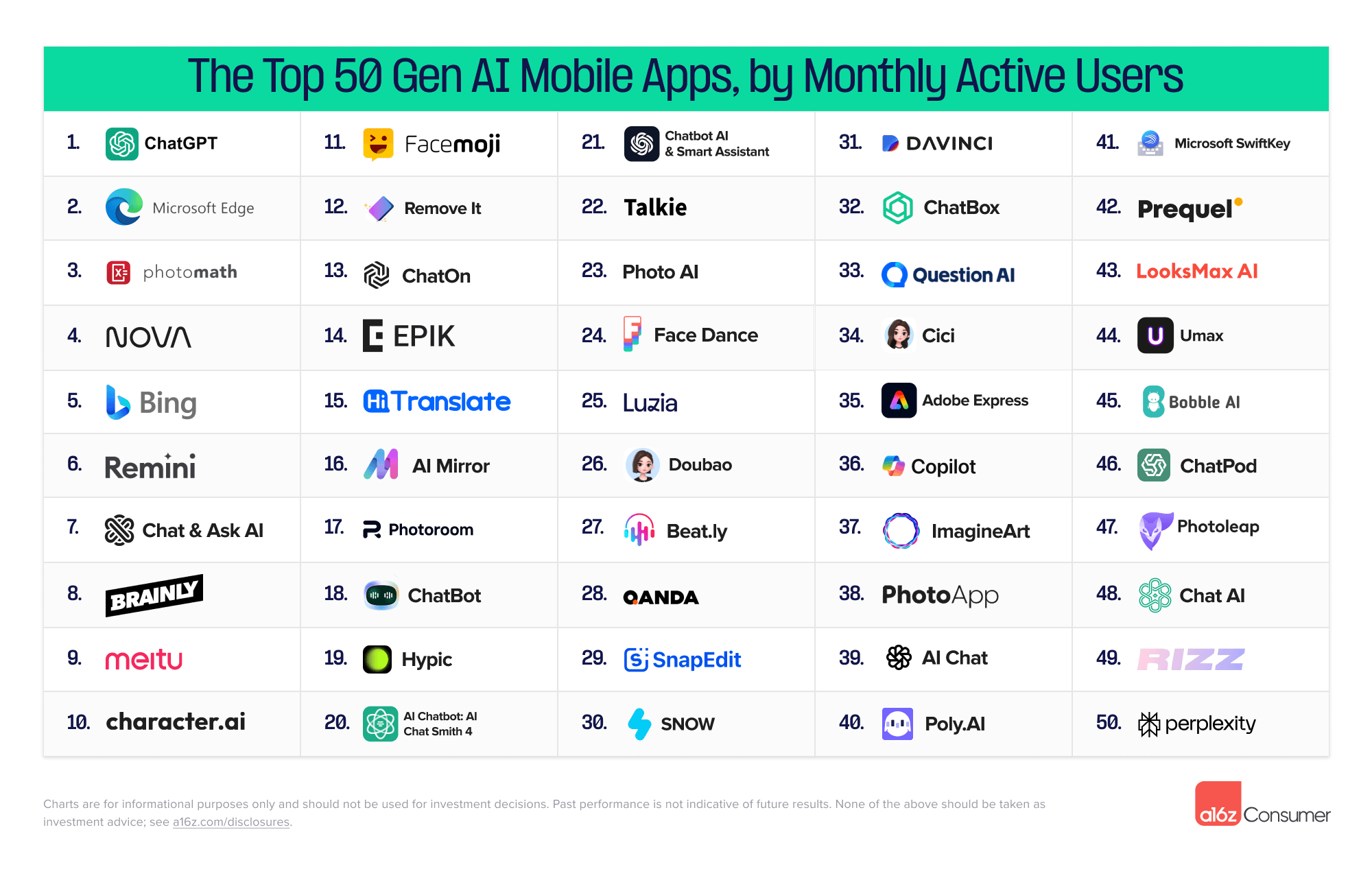

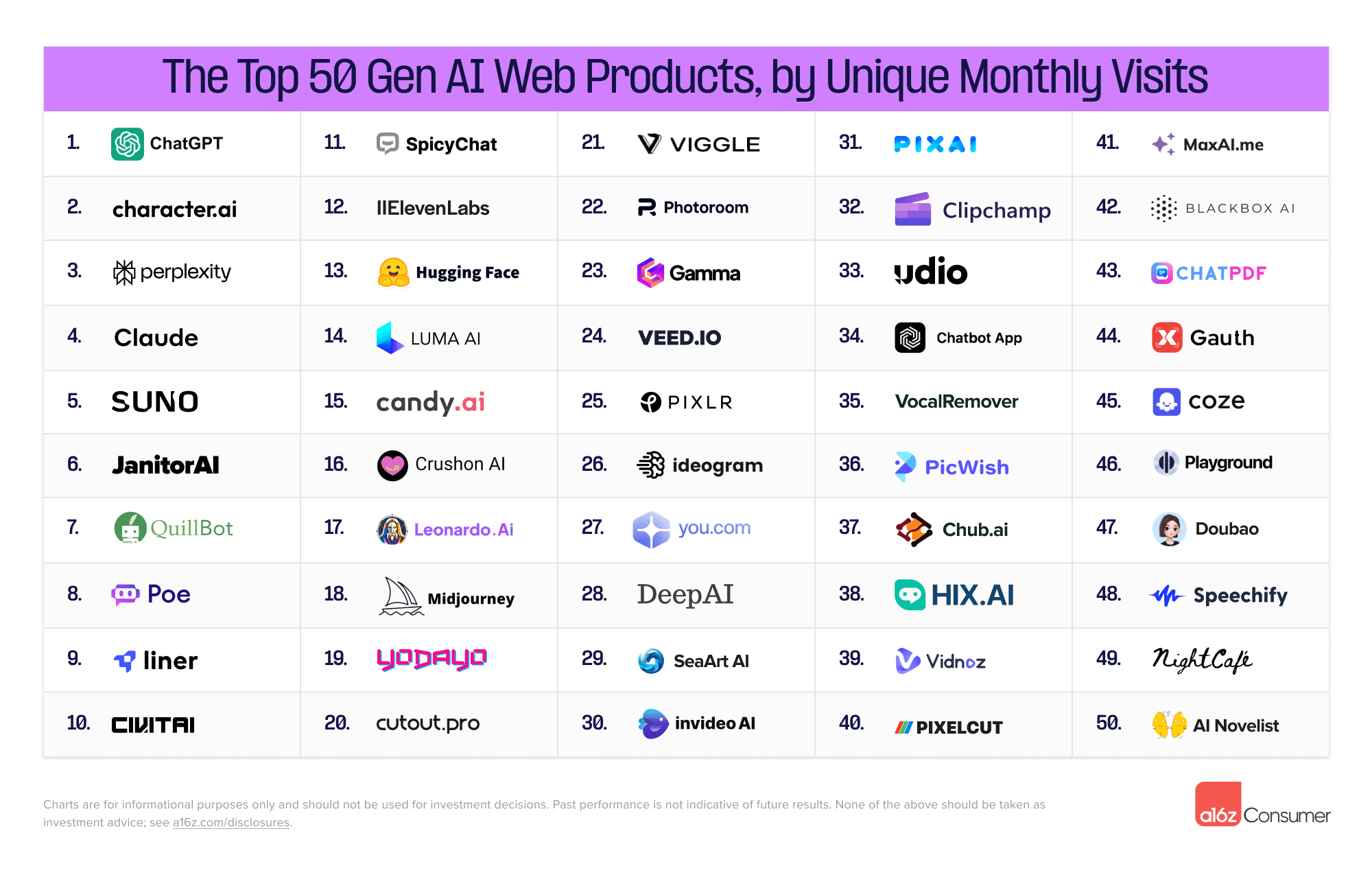

A16Z has provided a list of the top 100 AI consumer applications based on monthly website traffic, including mobile and web products.

Analysis reveals that most of these AI applications belong to the creative tools category (chatbots, general assistants, text and image, video, photo/video AI), with 52% of companies on the list focusing on content generation or editing in various forms, including image, video, music, and voice. Among the 12 newcomers, 58% belong to the creative tools field.

This reflects the current state of AI Agents: most are creative tools, and their overall functionality remains early-stage and basic. There is still a significant gap before AI Agents become indispensable, mass-market "killer" applications in people's daily lives. As AI technology and LLMs continue to develop, AI Agents have great potential.

5. Web3 and the Integration of AI

5.1 Advantages of Web3 and AI Integration

AI is essentially an advanced productive force, and its rapid development relies on three core elements: data, algorithms, and computing power.

Cryptocurrencies and blockchain, on the other hand, represent a new set of production relations: they aim to circulate data and asset value in a decentralized manner, giving users more control over their data and assets as well as stronger privacy protection.

The integration of blockchain, Web3, and AI will further drive the development of internet and AI applications. The combination of AI and blockchain brings new opportunities for data security, privacy protection, smart contract execution, and decentralized AI applications.

- AI can enhance the efficiency and security of blockchain systems, better meet user-intent-based needs, and identify potential threats and fraudulent behavior through on-chain data analysis and processing.

- The development of Web3 provides a new application scenario and value system for AI. Through blockchain technology, AI can operate in a decentralized network, ensuring data transparency, immutability, and user privacy protection.

- Blockchain technology can provide transparent and tamper-proof records for data processing of AI models, ensuring the authenticity and integrity of data, as well as data ownership and usage.

- The Web3 token economy incentive mechanism can provide low-cost startup, ecosystem development, and user incentives for AI models and applications, helping AI projects to grow and develop better.

5.2 Market Value and Financing of AI Concept Blockchain Projects

According to CoinMarketCap data, there are currently 324 Web3-related projects in the AI sector, with a total market size of $25.64 billion.

Typical projects include NEAR Protocol (NEAR), Artificial Superintelligence Alliance (FET), Bittensor (TAO), Render (RENDER), Theta Network (THETA), Akash Network (AKT), AIOZ Network (AIOZ), Arkham (ARKM), io.net (IO), Aethir (ATH), Delysium (AGI), Numeraire (NMR), Sleepless AI (AI), etc., mainly involving AI public chains and infrastructure, AI computing power, and AI applications.

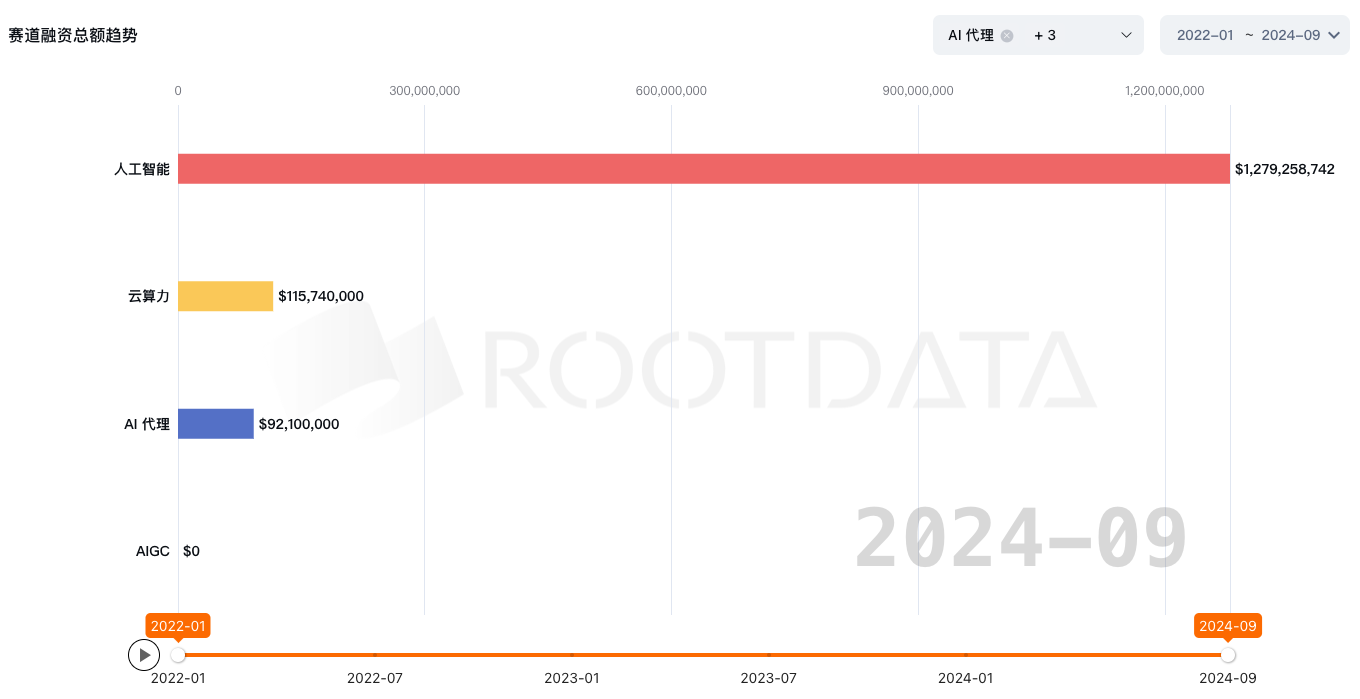

In terms of AI financing, according to RootData, from January 2022 to September 2024, the total financing amount for the AI sector (including AI, cloud computing power, AI agents, AIGC) was $1.487 billion. Investment institutions are optimistic about the prospects of the AI + Web3 sector.

5.3 Web3 + AI Sector Industry Map

According to Foresight News data, there are currently at least 140 Web3 + AI concept projects in the industry, covering infrastructure, data, prediction markets, computing and computing power, education, DeFi & cross-chain, security, NFT & gaming & metaverse, search engines, social & creator economy, AI chatbots, DID & messaging, governance, healthcare, trading bots, and many other areas. Among them, there are as many as 30 infrastructure projects, 26 NFT & gaming & metaverse projects, and more than 10 projects in data, computing, and AI chatbots.

Web3 + AI projects can be classified along the upstream and downstream AI industry chain into three major types:

5.3.1 AI Infrastructure

This includes infrastructure and computing power, providing basic services and resource support for AI applications.

- Public Chains: These projects combine AI with underlying blockchain infrastructure to provide other applications with basic services such as computing power, storage, and LLM invocation and deployment. Projects such as NEAR, Olas, Cortex, and Fetch.ai belong to this category.

- Web3 + Computing Power Network: Running AI models requires powerful computing capabilities. In the traditional Web2 field, computing power is concentrated in large tech companies and a handful of computing resource providers. Web3 uses edge computing and distributed technology to pool computing resources (personal graphics cards, CPUs, storage, etc.) into networks, with incentives for contributing computing power and mechanisms for data governance. Projects such as Filecoin, Render Network, io.net, and Aethir belong to this category.

5.3.2 Web3 + Data/Model + Market/Network/Protocol

The development of AI requires a large amount of data and complex algorithm models to train intelligent systems. In the traditional Web2 field, large internet companies (such as Google, Facebook, Amazon) usually dominate algorithms and data.

Web3 brings a new possibility, building a decentralized AI data, model, and network market through decentralized incentive mechanisms and blockchain technology, breaking the monopoly of resources and allowing more participants (small and medium-sized enterprises and individuals) to enter and contribute to the AI ecosystem.

Through tokenized incentive mechanisms, market participants can share and contribute resources, such as algorithm models, data, and computing power. This will greatly promote the openness and collaboration of AI, reduce the barriers to AI development, enable small and medium-sized enterprises and individual developers to participate in the AI industry, and further promote the rapid development of AI.

The decentralized AI data, model, and network market mainly includes four types:

Decentralized Model Network/Market: Through blockchain and tokenized incentive mechanisms, an open algorithm model market is formed. In this market, developers and researchers can contribute, optimize, and share AI models, and users can choose and use the most suitable models to solve specific problems through the network. Models are scheduled and selected through a consensus mechanism, with high-quality models receiving rewards and inefficient models gradually being eliminated. For example, Bittensor is a decentralized AI model market that allows developers to be rewarded for contributing and optimizing AI models.

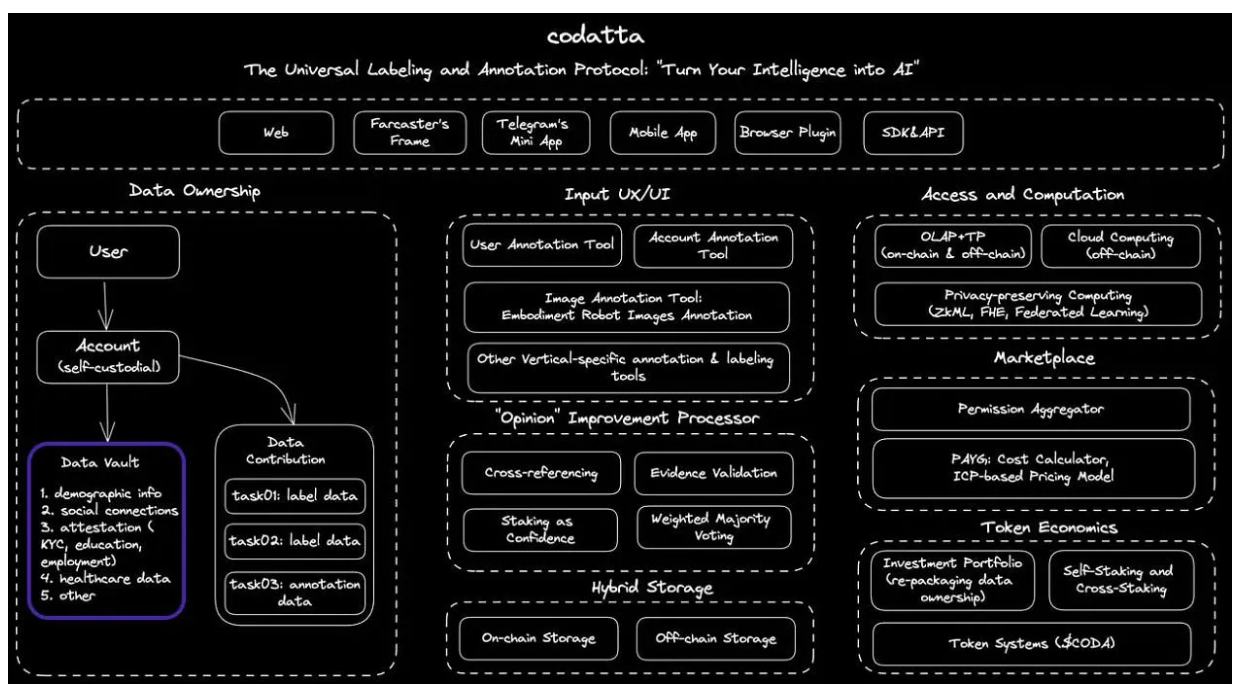

Decentralized Data Trading Market: The decentralized data trading market allows data owners (individuals or businesses) to freely share and trade data through blockchain technology. Through tokenization and smart contracts, data providers can receive fair economic returns, and data consumers can obtain high-quality data for AI model training. This model encourages more people to contribute data, promoting the diversification and fairness of AI applications. For example, Ocean is a decentralized data market that allows data assets to be tokenized and traded through blockchain and smart contracts. NEAR Tasks, released by NEAR, is a blockchain-based AI annotation platform. Measurable Data (MDT) is a decentralized data exchange ecosystem that aims to build a blockchain-based data economy in which data providers and buyers can trade data securely and anonymously.

Decentralized Data Model Training Market: In the decentralized data model training market, developers can use distributed computing resources and data from around the world for model training. The provision of data and model training can be coordinated and managed through smart contracts on the blockchain, ensuring transparency and fairness. Additionally, the decentralized data training market reduces the cost of entering the AI field, allowing more small and medium-sized enterprises and individual developers to participate. For example, Fetch.AI is a decentralized platform that combines AI and blockchain, allowing developers to conduct data analysis and model training through autonomous intelligent agents (AI Agents) in a decentralized network. Cortex is a decentralized AI platform that supports distributed training and inference of AI models.

Tokenized AI Model and AI Agent Product Market: Through tokenization, AI models and AI Agents can be traded and used as digital assets. This tokenization mechanism allows developers to be economically rewarded for contributing AI models, algorithms, or intelligent agents, while users can purchase or rent these AI services through smart contracts. This decentralized market makes the use of AI more flexible and open, allowing users to choose different AI services according to their needs, while developers can profit from it. For example, the SingularityNET platform allows users to purchase different AI services through tokens, and developers can publish their AI Agents or models on the platform and earn income through token transactions.
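The purchase flow described above can be sketched as a toy in-memory ledger. This is only a Python stand-in for what a smart contract would do on-chain; the `ServiceMarket` class, its method names, and the accounts are hypothetical and do not reflect the interface of SingularityNET or any real platform. A developer lists a service at a token price, a user pays in tokens, and payment and access are granted in one step.

```python
class ServiceMarket:
    """In-memory stand-in for a token ledger plus a marketplace contract."""

    def __init__(self):
        self.balances = {}   # account -> token balance (integer units)
        self.listings = {}   # service_id -> (developer, price)
        self.access = set()  # (user, service_id) pairs that have paid

    def deposit(self, account, amount):
        self.balances[account] = self.balances.get(account, 0) + amount

    def list_service(self, service_id, developer, price):
        if price <= 0:
            raise ValueError("price must be positive")
        self.listings[service_id] = (developer, price)

    def purchase(self, user, service_id):
        developer, price = self.listings[service_id]
        if self.balances.get(user, 0) < price:
            raise ValueError("insufficient token balance")
        # Payment and access grant happen together, as in a single transaction.
        self.balances[user] -= price
        self.balances[developer] = self.balances.get(developer, 0) + price
        self.access.add((user, service_id))

    def can_use(self, user, service_id):
        return (user, service_id) in self.access


market = ServiceMarket()
market.deposit("alice", 150)
market.list_service("sentiment-model", developer="bob", price=100)
market.purchase("alice", "sentiment-model")
print(market.balances, market.can_use("alice", "sentiment-model"))
```

Integer token units are used here to avoid floating-point rounding in balances, which is also how on-chain token amounts are typically represented.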

5.3.3 AI+ Application Layer

Web3 + AI applications aimed at end users mainly use AI technology and resources to build AI+ DApp projects in areas such as on-chain data, blockchain games, social, NFTs, the creator economy, and DeFi. They use AI to support smart contract decision-making and to better serve intent-based user needs, offering new experiences in trading, content creation, and privacy protection.

- On-chain AI Data Analysis: Through AI technology, massive data on the blockchain can be deeply mined and analyzed to help users understand market dynamics, investment opportunities, and potential risks. AI can identify patterns and abnormal activities (such as money laundering and hacking) in on-chain behavior and provide customized investment advice to users. Projects such as Dune, Nansen, Chainalysis, and Arkham (Arkm) fall into this category.

- AI Agent: Web3 AI Agents act as intelligent assistants for users, automatically executing decisions and tasks based on on-chain data, user needs, and market changes. Through deep learning and reinforcement learning, AI Agents can understand user intent and optimize task execution. For example, Fetch.AI has developed autonomous AI Agents that can automatically execute on-chain tasks such as data exchange and market trading. AI chatbots (MyShell, CharacterX) and AI search engines (Kaito, Pulsr, QnA3, Typox AI) also fall into this category.

- AI Trading Bots: AI trading bots use data analysis, machine learning, and deep learning models to identify market trends, execute arbitrage strategies, perform quantitative analysis, and automate trading. They execute smart contracts based on real-time on-chain data, enabling automated trading, reducing human errors, and improving trading efficiency. Current Telegram (TG) bot products belong to this category, as do Rockefeller Bot (Rocky, an on-chain AI trading bot) and 3Commas (an AI-driven automated trading platform).

- AI Creator Platforms: These platforms use AI technology to help creators with content creation, publishing, and distribution on the blockchain, especially in NFTs, virtual art, and the creator economy. Art Blocks (a blockchain-based generative art platform), Mirror.xyz (a decentralized creator platform), and Orbofi AI fall into this category.

- Intent-based Needs: AI can understand user intent through natural language processing (NLP) and deep learning technology, translating these needs into automated on-chain execution. Through smart contracts, AI systems can automatically identify user needs, such as conducting a certain transaction, purchasing NFTs, or executing DeFi operations, and trigger corresponding actions. Geno and TermiX fall into this category.
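The intent-based flow in the last item above, from natural language to a structured on-chain action, can be sketched as follows. A real system would use an NLP model; here a regular expression stands in for it, and the `Action` type, the `parse_intent` function, and the supported phrasing are all assumptions made for illustration. The sketch stops at producing a structured action and does not submit any transaction.

```python
import re
from dataclasses import dataclass


@dataclass
class Action:
    kind: str
    amount: float
    asset_in: str
    asset_out: str


# Matches phrases such as "swap 1.5 ETH for USDC" (case-insensitive).
SWAP_PATTERN = re.compile(
    r"swap\s+(?P<amount>\d+(?:\.\d+)?)\s+(?P<asset_in>[A-Za-z]+)"
    r"\s+(?:for|to)\s+(?P<asset_out>[A-Za-z]+)",
    re.IGNORECASE,
)


def parse_intent(text):
    """Return a structured Action for a recognized request, else None."""
    match = SWAP_PATTERN.search(text)
    if match is None:
        return None
    return Action(
        kind="swap",
        amount=float(match["amount"]),
        asset_in=match["asset_in"].upper(),
        asset_out=match["asset_out"].upper(),
    )


action = parse_intent("Please swap 1.5 ETH for USDC")
print(action)
```

Returning `None` for unrecognized requests keeps the execution layer from acting on input it does not understand, which matters when the resulting action would move user funds.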

In their current use of AI, most of these Web3 application projects do not rely on strong AI. They tend to use AI as an assistive add-on at the application level, show limited applicability in complex core technology areas, and still need deeper AI integration.

5.4 Typical Projects

5.4.1 Artificial Superintelligence Alliance

The Artificial Superintelligence (ASI) Alliance is a collective composed of Fetch.ai, SingularityNET (SNET), and Ocean Protocol. As the largest open-source independent entity in the field of artificial intelligence research and development, the alliance aims to accelerate the development of decentralized artificial general intelligence (AGI) and ultimately achieve artificial superintelligence (ASI).

On July 1, 2024, the Artificial Superintelligence Alliance announced the launch of a multi-token merger that combines the AGIX, OCEAN, and FET tokens into a unified token. The merger marks an important step towards creating a fully decentralized AI ecosystem.

- Ocean Protocol (OCEAN): This project focuses on the decentralization of the data market, enabling data providers and users to securely trade data. AI can run advanced analytics and predictions on this data.

- SingularityNET (AGIX): This is a distributed AI service marketplace that allows AI developers to create, share, and monetize AI services, driving the popularization of AI technology in a decentralized environment.

- Fetch.ai (FET): A decentralized, AI-based agent network used to optimize economic activities such as transportation, energy, and supply chain management. AI agents autonomously conduct transactions and negotiations in this ecosystem, enabling efficient operations.

5.4.2 AgentLayer

AgentLayer is a blockchain-based decentralized artificial intelligence agent platform designed to simplify and automate complex tasks through smart contracts and AI agents. AgentLayer provides developers with tools to create and deploy AI agents that can complete specific tasks, such as data analysis and automated trading, without human intervention.

AgentLayer modularizes its technical architecture into three layers: AgentNetwork, AgentOS, and AgentSwap, aiming to simplify the implementation process and enhance system functionality.

- The AgentNetwork layer is the physical execution environment of agents, containing multiple AgentLink contracts and distributed ledger infrastructure. It plays a crucial role in achieving consensus and verifying agent behavior, as well as establishing interoperability protocols among multiple agents. This layer facilitates communication between agents and incentivizes positive interaction among them.

- The AgentOS layer includes development kits, orchestration tools, and services for multiple agents. It provides various foundational models, such as Mistral, Llama, and the proprietary TrustLLM, for fine-tuning functionality.

- AgentSwap is the gateway for discovering and investing in various AI agents driven by the AgentLayer protocol. One of the unique features of the AgentLayer protocol is to facilitate the minting and trading of agents as assets, known as AgentFi. AgentFi supports developers in registering and releasing proprietary agents on AgentSwap (agent store).

5.4.3 Mind Network

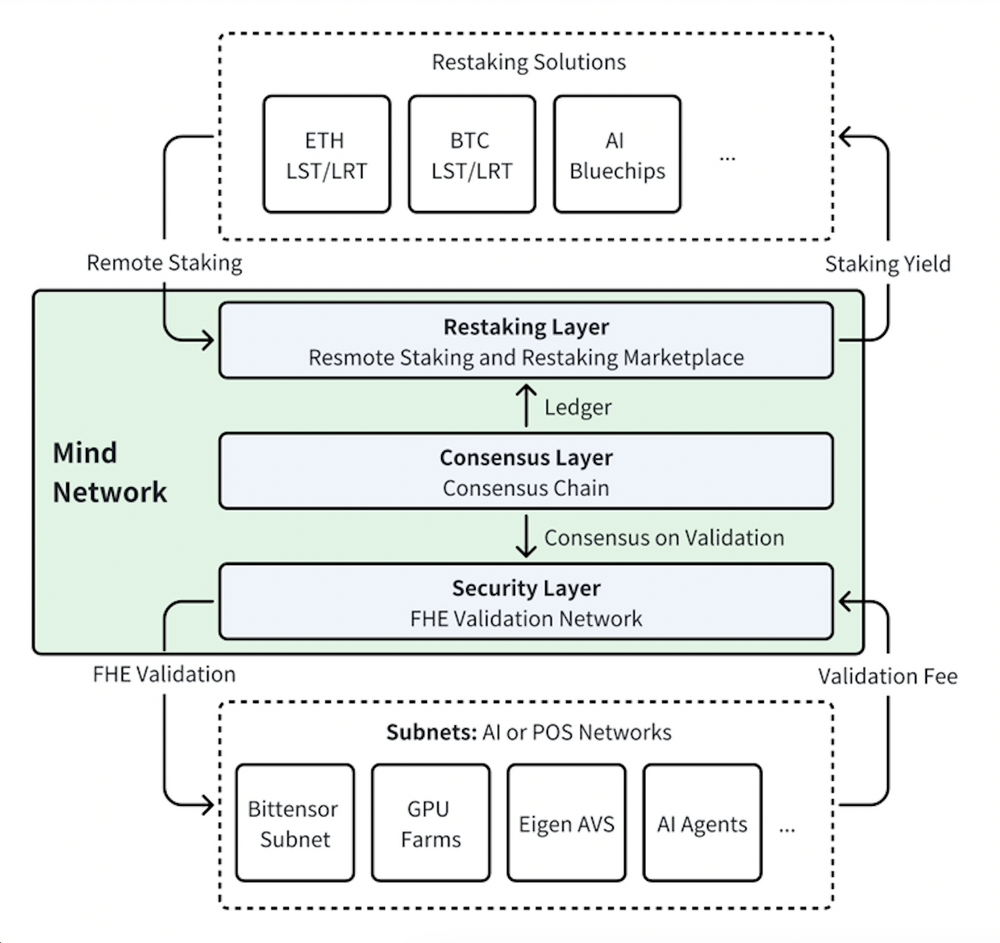

Mind Network is the first fully homomorphic encryption (FHE) re-staking layer for AI and Proof of Stake (PoS) networks. This layer accepts re-staked tokens from ETH, BTC, and blue-chip AI tokens and operates as an FHE verification network, providing consensus, data, and cryptoeconomic security for decentralized AI, DePIN, EigenLayer, Babylon AVS, and many critical PoS networks.

The re-staking solution of Mind Network consists of three layers: a re-staking layer, a consensus layer, and a security layer. The consensus layer introduces a new Proof of Intelligence (PoI) consensus mechanism designed specifically for AI tasks, which ensures fair and secure reward distribution among FHE verifiers and provides a robust framework for secure and transparent execution within the network.

Mind Network's solution has found preliminary product-market fit, with projects such as IO.Net, MyShell, Bittensor, AIOZ, Nimble AI, Chainlink, and Connext adopting it.

5.4.4 GoPlus Network

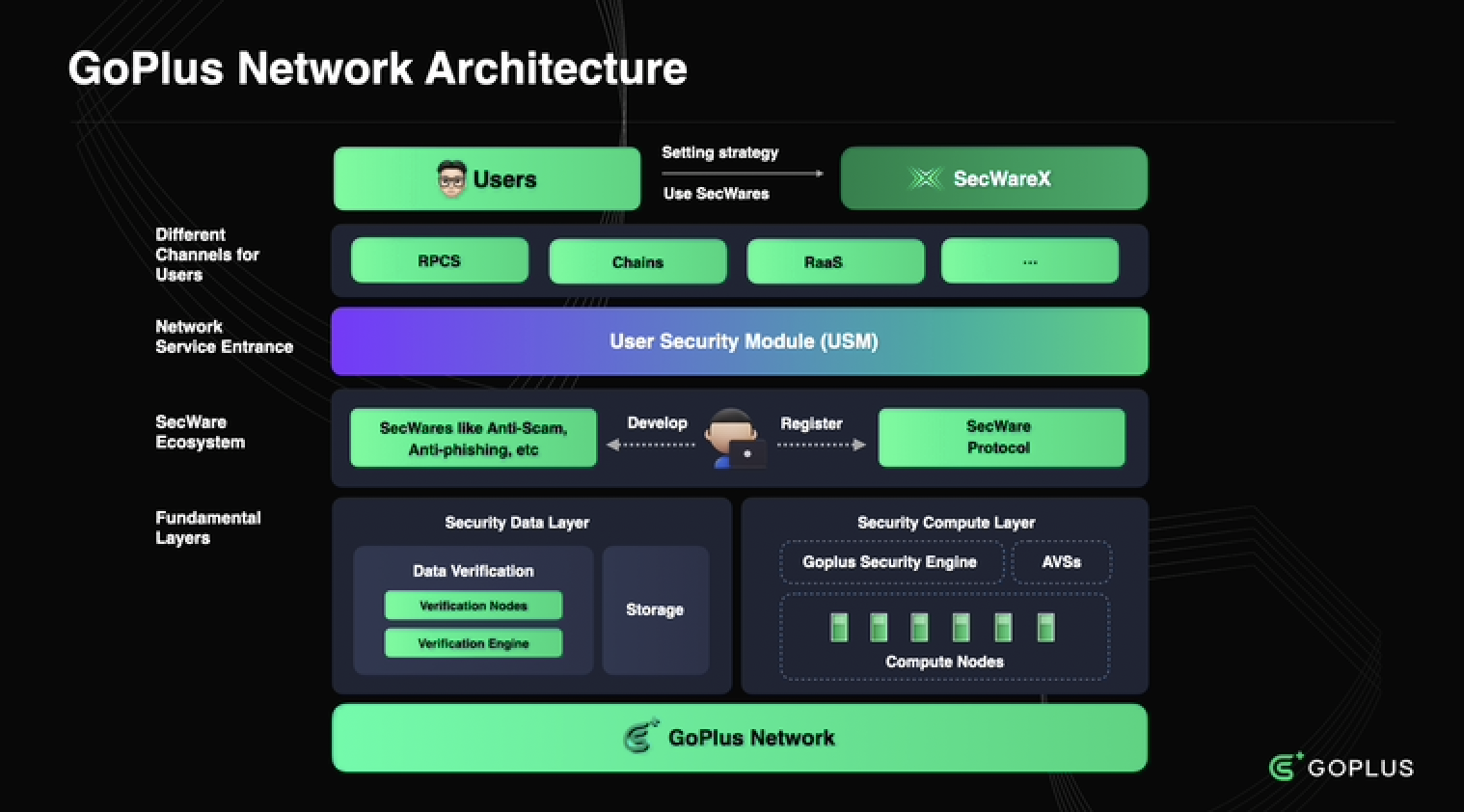

GoPlus Network is an open, permissionless, user-driven modular security layer for Web3 users. Through its user security network and AI-driven security solutions, it protects the entire user transaction lifecycle and improves the security posture of Web3 users.

GoPlus Network provides a comprehensive security solution for the on-chain transaction environment of Web3 through its decentralized security data and service network, open security service ecosystem, highly pluggable and easy-to-integrate USM, and user-centric SecWareX security products. The architecture of this network not only meets the security needs of users but also provides ample opportunities for security developers and service providers to participate, demonstrating significant potential for future growth and adoption.

5.4.5 Io.net

Io.net is a decentralized computing network on the Solana blockchain that supports the development, execution, and scaling of machine learning (ML) applications. It pools GPUs into what it describes as the world's largest GPU cluster, giving machine learning engineers access to distributed cloud computing power at a fraction of the cost of centralized services.

Io.net aggregates GPU resources from underutilized sources (such as independent data centers, crypto miners, and projects like Filecoin and Render) and combines them in a decentralized physical infrastructure network (DePIN), enabling engineers to access more GPU computing power at lower costs.
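The aggregation idea can be illustrated with a minimal sketch of cheapest-first allocation across several GPU suppliers. This is not Io.net's actual scheduler: the `allocate_gpus` function, the provider names, and the prices are hypothetical, and a real network would also weigh hardware type, location, and reliability.

```python
def allocate_gpus(offers, gpus_needed):
    """Fill a GPU request from the cheapest offers first.

    offers: list of (provider, gpus_available, price_per_gpu_hour) tuples.
    Returns a list of (provider, gpus_taken, price_per_gpu_hour) tuples.
    """
    allocation = []
    remaining = gpus_needed
    for provider, available, price in sorted(offers, key=lambda o: o[2]):
        if remaining == 0:
            break
        take = min(available, remaining)
        allocation.append((provider, take, price))
        remaining -= take
    if remaining > 0:
        raise ValueError("not enough GPUs available")
    return allocation


# Hypothetical idle capacity from three kinds of suppliers.
offers = [
    ("data_center_a", 4, 1.20),
    ("miner_b", 8, 0.80),
    ("render_node_c", 2, 1.00),
]

plan = allocate_gpus(offers, gpus_needed=10)
hourly_cost = sum(count * price for _, count, price in plan)
print(plan, hourly_cost)
```

With these example numbers the request for 10 GPUs is served entirely by the two cheapest suppliers, and the most expensive offer is left unused.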

Io.net has three main products:

- IO Cloud: A hub for deploying and managing decentralized GPU clusters, where users can access core functions such as deploying clusters, browsing the GPU Marketplace, quick monitoring operations, and getting-started guidance. IO Cloud is also where users run AI/ML applications. It integrates with IO-SDK, providing a comprehensive solution for scaling AI and Python applications.

- IO Worker: Gives users a real-time view of their compute operations and an overview of the devices they have connected to the network, letting them monitor devices and perform quick operations such as deleting and renaming them. IO Worker also provides compute activity monitoring, real-time data display, temperature and power tracking, installation assistance, wallet management, security measures, and profit calculation.

- IO Explorer: Provides a window into the inner workings of the network, offering users comprehensive statistics and an overview of various aspects of the GPU cloud, such as network activity, important statistics, data points, and complete visibility into reward transactions.

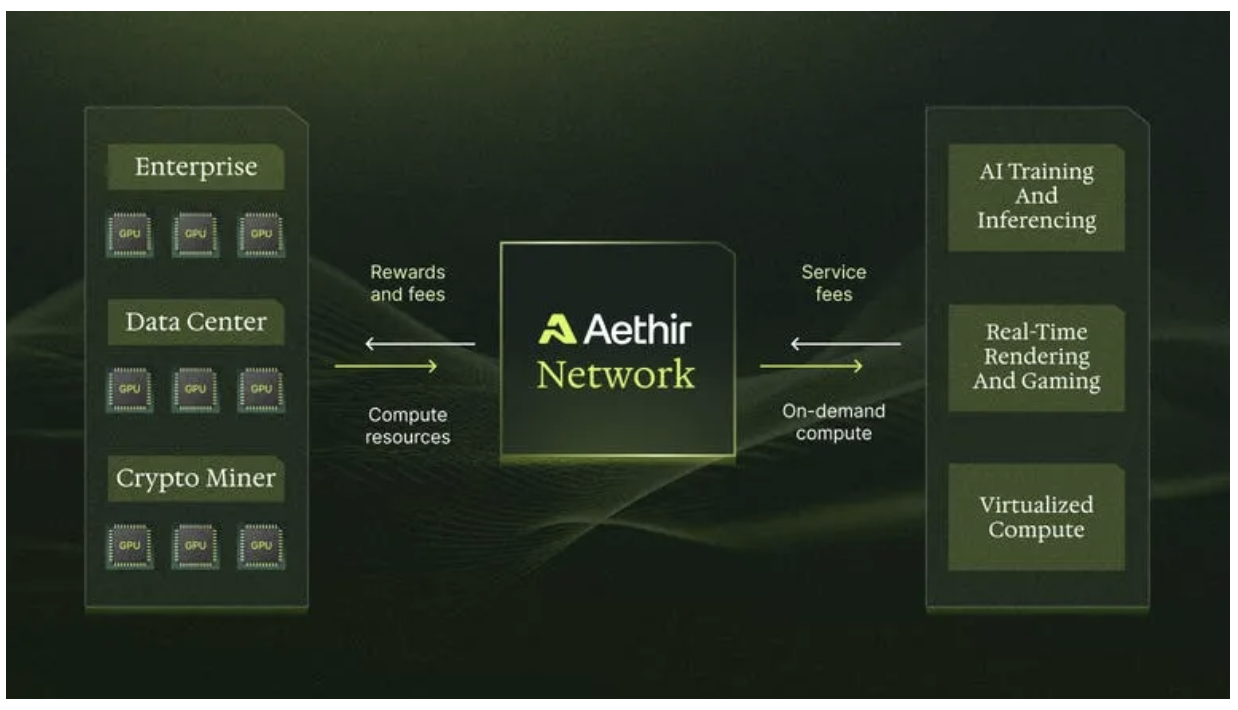

5.4.6 Aethir

Aethir is a decentralized cloud computing infrastructure platform designed specifically for high-computational tasks such as artificial intelligence (AI), machine learning, and gaming. It aggregates enterprise-grade GPU chips into a single global network, providing on-demand cloud computing resources for AI, gaming, and virtualized computing.

Aethir's cloud computing infrastructure network supports many key use cases, including AI model training, AI inference, cloud gaming, cloud telephony, and AI productization. In each case, its scalable, cost-effective, low-latency computing capabilities can keep up with the innovative demands of these fields.

5.4.7 Gensyn

Gensyn is a blockchain-based decentralized computing protocol specifically designed to provide computing resources for AI model training and inference.

Gensyn integrates global idle GPU resources (such as small data centers, personal computers, etc.) into a distributed network, providing developers with massive computing power while reducing computing costs.

Gensyn uses a cryptographic verification network to ensure that computing tasks are completed correctly and handles task allocation and reward mechanisms through smart contracts. Its goal is to break the monopoly of large companies on high-performance computing resources in a decentralized manner, making AI model training affordable for more small and medium-sized enterprises and individual developers.

The hourly cost of machine learning training work on Gensyn is approximately $0.4, much lower than the costs required by AWS ($2) and GCP ($2.5), among others.
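To make the comparison concrete, the quoted hourly figures can be turned into relative savings. The calculation below uses only the three numbers stated above; the `savings_vs` helper is a name introduced for this example.

```python
# Hourly training cost in USD, as quoted in the text.
hourly_cost = {"Gensyn": 0.4, "AWS": 2.0, "GCP": 2.5}


def savings_vs(provider, baseline="Gensyn"):
    """Fraction of the provider's hourly cost saved by using the baseline."""
    return 1 - hourly_cost[baseline] / hourly_cost[provider]


for name in ("AWS", "GCP"):
    print(f"{name}: {savings_vs(name):.0%} cheaper on Gensyn")
# AWS: 80% cheaper on Gensyn
# GCP: 84% cheaper on Gensyn
```

Put differently, at these quoted rates one hour on AWS costs as much as five hours on Gensyn, and one hour on GCP as much as 6.25 hours.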

5.4.8 C.INC

C.INC is a decentralized GPU computing platform that aims to integrate dispersed and isolated computing resources. Its mission is to give everyone fair access to computing power.

C.INC's comprehensive multi-layer computing solution allows developers to access instant computing power at lower prices while increasing scalability; vendors gain higher resource utilization, better profitability, and a simpler onboarding process.

Highlights of C.INC include:

* **Lower Prices**: Prices can be reduced by up to 80% compared to cloud service providers.

* **Instant Access**: Instant access to multi-cloud GPU resources.

* **Flexibility and Scalability**: Automatically scaling infrastructure provides elastic computing capabilities.

* **Higher Utilization**: 50% increase in utilization through demand matching.

* **Higher Profitability**: 70% increase in revenue through multiple income sources.