Authors: Raghav Agarwal, Roy Lu, LongHash Ventures

Translation: Elvin, ChainCatcher


Humanity is currently in an AI Oppenheimer moment.

Elon Musk pointed out: "As our technology progresses, it is crucial to ensure that AI serves the interests of the people, not just those in power. People's AI provides a path forward."

At its intersection with cryptocurrency, AI can be democratized: first through open-source models, and then through a people's AI, by the people and for the people. While the goal of Web3 x AI is noble, its real-world adoption depends on usability and on compatibility with the existing AI software stack. This is where IONET's unique approach and technology stack come in.

IONET's decentralized Ray framework is a Trojan horse for bringing a permissionless AI compute market to web3 and beyond.

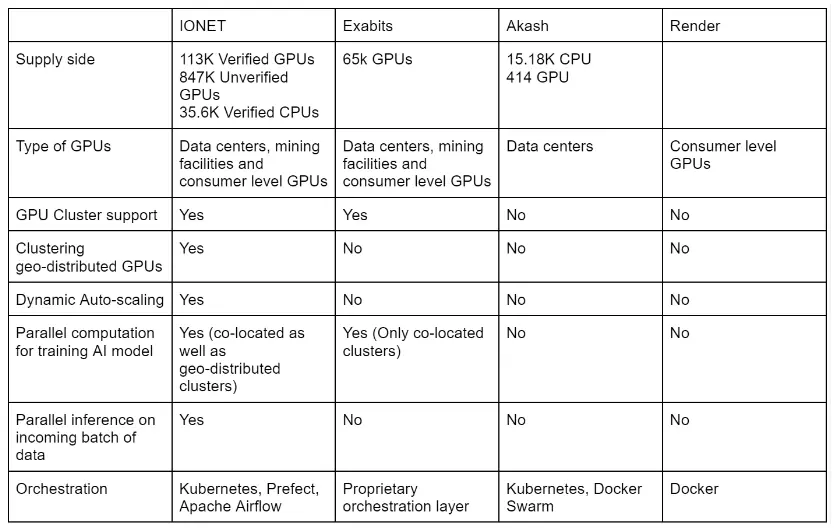

IONET is leading the push toward GPU abundance. Unlike general-purpose compute aggregators, IONET connects decentralized compute to the industry-leading AI stack by rewriting the Ray framework. This approach paves the way for broader adoption both within and outside web3.

Competition for Computing Power in the Context of AI Nationalism

Competition for resources across the AI stack is intensifying. A flood of AI models has emerged over the past few years: within hours of Llama 3's release, Mistral and OpenAI released new versions of their frontier models.

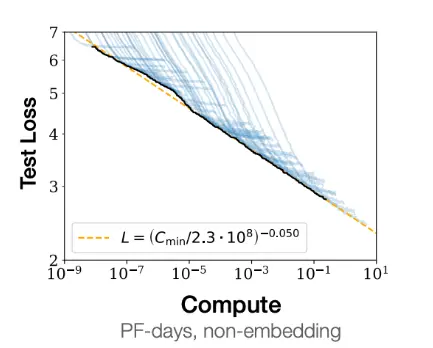

Resource competition in the AI stack plays out at three levels: 1) training data, 2) advanced algorithms, and 3) compute. Compute lets AI models improve by scaling up training data and model size. According to OpenAI's empirical research on transformer-based language models, performance improves steadily as the amount of compute used for training increases.
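As a rough illustration of that OpenAI finding, the 2020 scaling-laws paper by Kaplan et al. fit a language model's test loss $L$ as a power law in training compute $C$. The form below is a sketch of that relationship; $C_c$ is a fitted constant and the exponent is the approximate value reported there, so both should be read as empirical fits rather than exact laws.

```latex
L(C) \approx \left(\frac{C_c}{C}\right)^{\alpha_C}, \qquad \alpha_C \approx 0.05
```

Under this fit, doubling compute multiplies the loss by $2^{-0.05} \approx 0.966$, a steady but modest improvement of roughly 3.4% per doubling, which is why ever-larger compute budgets are needed to keep pushing performance.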

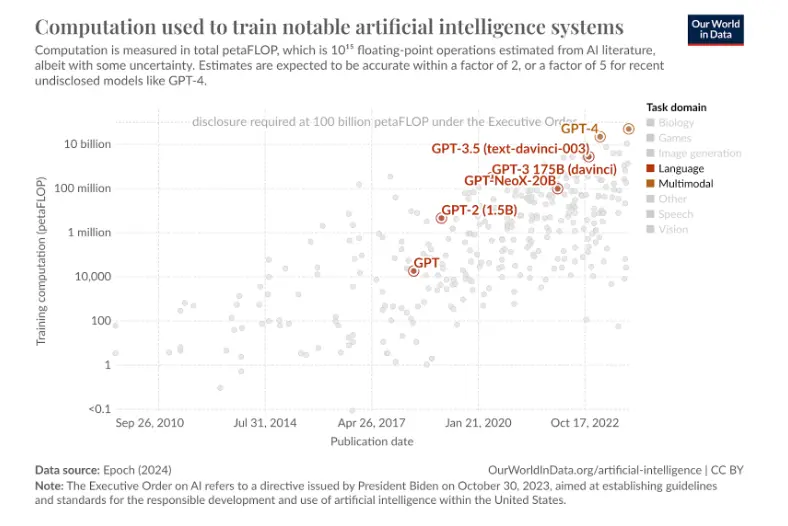

Compute usage has surged over the past two decades. An Epoch AI analysis of 140 models found that the training compute of milestone systems has grown 4.2x per year since 2010. OpenAI's latest model, GPT-4, required about 66 times the compute of GPT-3 and roughly 1.2 million times that of the original GPT.
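To put the 4.2x annual growth rate in perspective, the short sketch below compounds it over several years and converts it into a doubling time. It is plain arithmetic on the quoted rate, assuming the rate stays constant, and is not a forecast.

```python
import math


def growth_factor(annual_multiplier: float, years: float) -> float:
    """Total growth after `years` of compounding at `annual_multiplier` per year."""
    return annual_multiplier ** years


def doubling_time_months(annual_multiplier: float) -> float:
    """Months needed for compute to double at a constant annual multiplier."""
    return 12 * math.log(2) / math.log(annual_multiplier)


if __name__ == "__main__":
    rate = 4.2  # annual growth in training compute reported by Epoch AI
    print(f"doubling time: {doubling_time_months(rate):.1f} months")
    for years in (1, 5, 10):
        print(f"{years:>2} years -> {growth_factor(rate, years):,.1f}x")
```

At 4.2x per year, training compute doubles roughly every 5.8 months and grows about 1.7 million-fold over a decade.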

AI Nationalism Is Evident

The United States, China, and other countries have committed enormous investments totaling approximately $40 billion, most of which will go toward building GPU and AI chip fabrication plants. OpenAI CEO Sam Altman reportedly plans to raise as much as $7 trillion to expand global AI chip manufacturing, stressing that "compute will be the currency of the future."

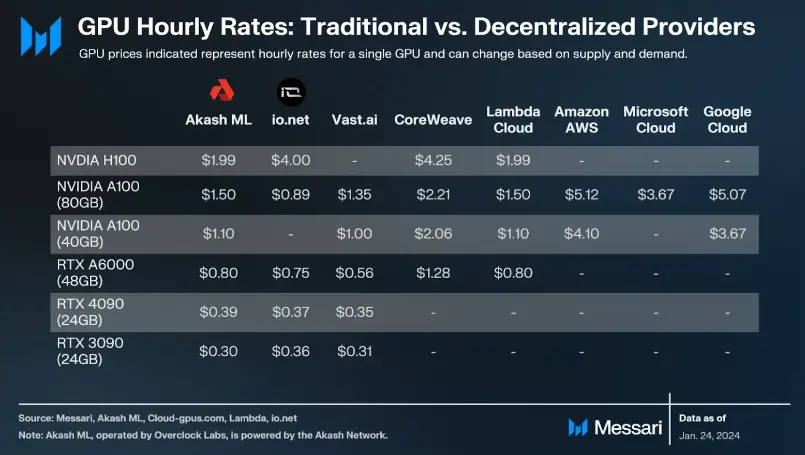

Aggregating long-tail compute could significantly disrupt the market. Centralized cloud providers such as AWS, Azure, and GCP come with long wait times, limited GPU flexibility, and cumbersome long-term contracts, which weigh especially heavily on smaller companies and startups.

Underutilized hardware in data centers, at cryptocurrency mining operations, and in consumer-grade GPUs can help meet this demand. A 2022 DeepMind study found that, for a fixed compute budget, training a smaller model on more data is often more effective than training a larger model on less data. This suggests that effective AI training does not necessarily require the latest, most powerful GPUs and can increasingly rely on more accessible hardware.
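The 2022 DeepMind study (Hoffmann et al., the "Chinchilla" paper) is often summarized with two rules of thumb: training compute is about $C \approx 6ND$ FLOPs for $N$ parameters and $D$ training tokens, and a compute-optimal model uses about $D \approx 20N$ tokens. The sketch below assumes both approximations to split a fixed budget between model size and data; the constants are heuristics, not the paper's exact fitted values.

```python
import math

FLOPS_PER_PARAM_TOKEN = 6  # common approximation: C ~ 6 * N * D
TOKENS_PER_PARAM = 20      # Chinchilla rule of thumb: D ~ 20 * N


def compute_optimal_split(compute_flops: float) -> tuple[float, float]:
    """Return (parameters, tokens) that spend `compute_flops` under both heuristics.

    Substituting D = 20N into C = 6ND gives C = 120 * N^2, so N = sqrt(C / 120).
    """
    n_params = math.sqrt(compute_flops / (FLOPS_PER_PARAM_TOKEN * TOKENS_PER_PARAM))
    n_tokens = TOKENS_PER_PARAM * n_params
    return n_params, n_tokens


if __name__ == "__main__":
    budget = 6 * 70e9 * 1.4e12  # compute implied by a 70B-parameter, 1.4T-token run
    params, tokens = compute_optimal_split(budget)
    print(f"params: {params:.3g}, tokens: {tokens:.3g}")
```

For a budget of about 5.9e23 FLOPs, this yields roughly 70 billion parameters and 1.4 trillion tokens, in line with Chinchilla's published configuration. The same budget spent on a much larger model would leave it undertrained.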

IONET Structurally Disrupts the AI Computing Market

IONET is structurally disrupting the global AI computing market. IONET's end-to-end platform for distributed AI training, inference, and fine-tuning aggregates long-tail GPUs to unlock affordable high-performance training.

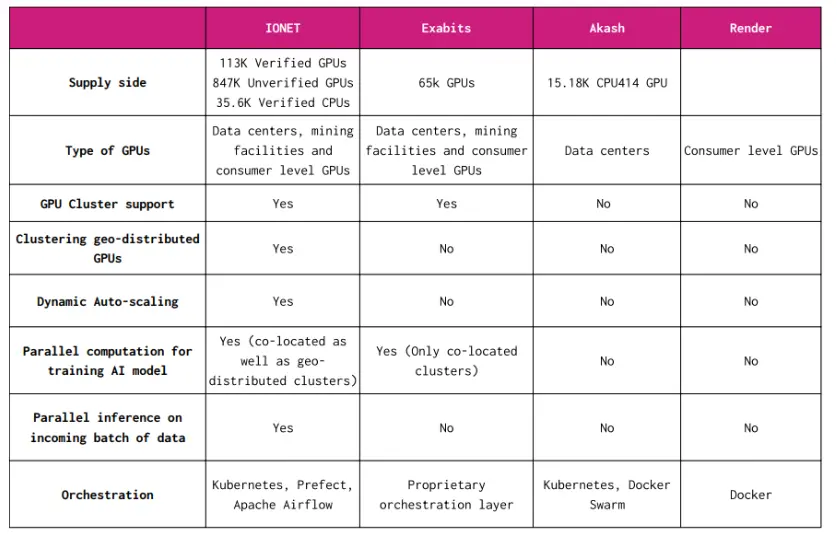

GPU Market:

IONET aggregates GPUs from data centers, miners, and consumers worldwide. AI startups can deploy decentralized GPU clusters in minutes by specifying the cluster location, hardware type, and machine learning stack (TensorFlow, PyTorch, Kubernetes), and can pay instantly on Solana.
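To make this ordering flow concrete, here is a hypothetical sketch of the parameters a user might specify. `ClusterRequest`, `SUPPORTED_STACKS`, and `quote` are invented names for illustration only; this is not IONET's actual API, the hourly price is an assumed input rather than a real rate, and payment on Solana is not modeled.

```python
from dataclasses import dataclass

# Hypothetical list of stacks, taken from the options named in the text.
SUPPORTED_STACKS = {"tensorflow", "pytorch", "kubernetes"}


@dataclass(frozen=True)
class ClusterRequest:
    """Hypothetical description of a decentralized GPU cluster order."""

    location: str    # requested region or country, e.g. "US"
    gpu_model: str   # requested hardware type, e.g. "A100" or "H100"
    gpu_count: int   # number of GPUs in the cluster
    ml_stack: str    # one of SUPPORTED_STACKS (case-insensitive)
    hours: int       # rental duration

    def __post_init__(self) -> None:
        # Reject orders that could never be fulfilled.
        if self.gpu_count <= 0 or self.hours <= 0:
            raise ValueError("gpu_count and hours must be positive")
        if self.ml_stack.lower() not in SUPPORTED_STACKS:
            raise ValueError(f"unsupported stack: {self.ml_stack}")

    def quote(self, hourly_price_per_gpu: float) -> float:
        """Total price for the order, given an assumed per-GPU hourly rate."""
        return self.gpu_count * self.hours * hourly_price_per_gpu


if __name__ == "__main__":
    order = ClusterRequest("US", "A100", gpu_count=8, ml_stack="PyTorch", hours=24)
    print(order.quote(hourly_price_per_gpu=1.5))
```

Validating the order up front mirrors the "deploy in minutes" promise: a request that no provider could serve is rejected before any matching or payment happens.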

Clusters:

A GPU without compatible parallel-computing infrastructure is like a reactor without transmission lines: it exists but cannot be used. As an OpenAI blog post emphasizes, limits on hardware and algorithmic parallelism strongly affect how efficiently each model can use compute, which constrains model size and usefulness during training.

IONET uses the Ray framework to turn thousands of GPU clusters into a unified whole. This lets IONET build GPU clusters regardless of how geographically dispersed the hardware is, addressing a major challenge in the compute market.
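At the heart of this is matching work that needs GPUs to machines that have free GPUs, wherever they are. In Ray itself, tasks declare such needs with arguments like `@ray.remote(num_gpus=1)`, and Ray's scheduler is considerably more sophisticated than what follows. The `Node` class and `place` function below are hypothetical, and the heuristic (largest requests first, each placed on the node with the most free GPUs) is a simple spread-style strategy chosen for illustration, not Ray's actual algorithm.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """A GPU machine somewhere in the network (hypothetical model)."""

    name: str
    region: str
    free_gpus: int


def place(tasks: dict[str, int], nodes: list[Node]) -> dict[str, str]:
    """Assign each task (name -> GPUs requested) to a node with enough free GPUs.

    Larger requests are placed first so they are not crowded out by small ones.
    Each task goes to the node with the most free GPUs, spreading load across
    machines. Chosen nodes have their free_gpus decremented in place.
    Raises RuntimeError if a task cannot fit on any node.
    """
    placement: dict[str, str] = {}
    for task, need in sorted(tasks.items(), key=lambda kv: kv[1], reverse=True):
        candidates = [n for n in nodes if n.free_gpus >= need]
        if not candidates:
            raise RuntimeError(f"no node can host {task} ({need} GPUs)")
        best = max(candidates, key=lambda n: n.free_gpus)
        best.free_gpus -= need
        placement[task] = best.name
    return placement


if __name__ == "__main__":
    nodes = [
        Node("miner-eu-1", "eu", 4),
        Node("dc-us-1", "us", 8),
        Node("gamer-asia-1", "asia", 1),
    ]
    print(place({"train": 8, "eval": 2, "embed": 1}, nodes))
```

Here the 8-GPU training job lands in the data center, while the smaller jobs share a miner's rig: from the user's point of view, machines on different continents behave like one pool.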

Ray Framework Stands Out

Ray is an open-source, unified compute framework that simplifies scaling AI and Python workloads. Industry leaders such as Uber, Spotify, LinkedIn, and Netflix use Ray to integrate AI into their products and services. Microsoft lets customers deploy Ray on Azure, and Google Kubernetes Engine (GKE) simplifies deploying open-source machine learning software through its support for Kubeflow and Ray.
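Much of Ray's appeal is that ordinary Python functions can be fanned out across a cluster and their results gathered back. In Ray, a function decorated with `@ray.remote` is invoked as `f.remote(...)`, which returns an object reference, and `ray.get(...)` collects the results. The sketch below shows the same fan-out/gather pattern using only the standard library's `ThreadPoolExecutor` as a stand-in, so it runs without a cluster; `score_shard` and `fan_out_gather` are illustrative names, and threads here only demonstrate the pattern rather than provide multi-machine parallelism.

```python
from concurrent.futures import ThreadPoolExecutor


def score_shard(shard: list[float]) -> float:
    """Stand-in for per-shard work, such as running inference on one batch."""
    return sum(x * x for x in shard)


def fan_out_gather(data: list[float], num_shards: int) -> float:
    """Split data into shards, process them concurrently, then combine results.

    With Ray, score_shard would carry @ray.remote, each call would become
    score_shard.remote(shard), and ray.get(refs) would gather the results.
    """
    shards = [data[i::num_shards] for i in range(num_shards)]
    with ThreadPoolExecutor(max_workers=num_shards) as pool:
        futures = [pool.submit(score_shard, s) for s in shards]  # fan out
        partials = [f.result() for f in futures]                 # gather
    return sum(partials)


if __name__ == "__main__":
    print(fan_out_gather([1.0, 2.0, 3.0, 4.0], num_shards=2))
```

Because the per-shard function stays plain Python, the same code structure scales from a laptop to a cluster once the executor is swapped for a distributed one, which is the property IONET builds on.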

IONET founder Ahmad Shadid presenting his work on a decentralized Ray framework at Ray Summit 2023

Decentralized Ray - Extending Ray for global inference (video link: https://youtu.be/ie-EAlGfTHA?)

We first met Tory when he was COO of a fast-growing fintech startup, and we knew him as a seasoned operator with decades of experience and a track record of scaling startups. After speaking with Ahmad and Tory, we immediately realized this was the dream team to bring decentralized AI compute to web3 and beyond.

IONET, Ahmad's brainchild, grew out of a practical need. Building Dark Tick, an algorithm for ultra-low-latency high-frequency trading, required substantial GPU resources. To keep costs down, Ahmad developed a decentralized version of the Ray framework that clustered GPUs from cryptocurrency miners, and in doing so inadvertently created elastic infrastructure suited to broader AI compute challenges.

Momentum:

Through token incentives, IONET had onboarded more than 100,000 GPUs and 20,000 ready-to-use GPU clusters as of mid-2024, including a large number of NVIDIA H100s and A100s. Krea.ai already uses IO Cloud, IONET's decentralized cloud service, to power its AI model inference. IONET recently announced collaborations with multiple projects, including NavyAI, Synesis One, RapidNode, Ultiverse, Aethir, Flock.io, LeonardoAI, and Synthetic AI.

By relying on a globally distributed GPU network, IONET can:

- Reduce inference latency for customers by running inference closer to their end users than centralized cloud providers can

- Improve resilience by organizing resources into regions linked by highly integrated network backbones that connect multiple data centers

- Lower the cost of computing resources and the time needed to access them

- Let companies scale resources up and down dynamically

- Allow GPU providers to earn better returns on their hardware investments
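The first benefit above, serving inference closer to end users, comes down to a routing decision. A minimal sketch, assuming each region reports a measured round-trip time to the user and a count of free inference replicas (both hypothetical fields, not a documented IONET interface), sends each request to the lowest-latency region that still has capacity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    """A hypothetical serving region as seen from one user."""

    name: str
    rtt_ms: float       # measured round-trip latency from the user to this region
    free_replicas: int  # inference replicas currently able to accept a request


def pick_region(regions: list[Region]) -> Region:
    """Route a request to the lowest-latency region that still has capacity."""
    available = [r for r in regions if r.free_replicas > 0]
    if not available:
        raise RuntimeError("no region has spare capacity")
    return min(available, key=lambda r: r.rtt_ms)


if __name__ == "__main__":
    regions = [
        Region("us-east", rtt_ms=120.0, free_replicas=3),
        Region("eu-west", rtt_ms=15.0, free_replicas=0),
        Region("eu-central", rtt_ms=25.0, free_replicas=2),
    ]
    print(pick_region(regions).name)
```

A European user is served from the nearest region with free capacity rather than a distant central data center. A production router would also weigh price, load, and hardware type.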

IONET stands at the forefront of innovation with its decentralized Ray framework. Built on Ray Core and Ray Serve, its distributed GPU clusters efficiently orchestrate tasks across decentralized GPUs.

Conclusion

Advancing open-source AI models honors the original collaborative spirit of the internet, in which anyone could use protocols such as HTTP and SMTP without asking permission.

The emergence of crowdsourced GPU networks is a natural evolution of the permissionless spirit. By crowdsourcing long-tail GPUs, IONET is opening the gate to valuable computing resources, creating a fair and transparent market, and preventing power from concentrating in the hands of a few.

We believe IONET is realizing the vision of compute as currency through its decentralized Ray cluster technology. In a world increasingly divided into "haves" and "have-nots," IONET will ultimately help "reopen the internet."
