Author: Alex Xu

Introduction

In my previous article, I noted that, compared with the previous two cycles, the current crypto bull market lacks influential new business and asset narratives. AI is one of the few new narratives in Web3 this cycle. In this article, the author uses this year's hot AI project, IO.NET, as a case study to work through two questions:

- The necessity of AI+Web3 in business

- The necessity and challenges of distributed computing power services

The author then reviews the key information on IO.NET, a representative AI distributed computing project, including its product logic, competitive landscape, and team background, and estimates the project's valuation.

The section on combining AI and Web3 is inspired by "The Real Merge" by Delphi Digital researcher Michael Rinko. Some viewpoints here digest and quote that piece, and readers are encouraged to read the original.

This article represents the author's thinking at the time of publication and may change in the future. The views are highly subjective and may contain errors of fact, data, or reasoning. Please do not treat it as investment advice; criticism and discussion from peers are welcome.

The following is the main text.

1. Business Logic: The Combination of AI and Web3

1.1 2023: The New "Miracle Year" Created by AI

Looking back over human history, every technological breakthrough has brought earth-shaking changes, from individuals' daily lives to the structure of entire industries and to human civilization as a whole.

Two years in human history, 1666 and 1905, are now known as the two "miracle years" in the history of technology.

1666 is considered a miracle year because Newton's major scientific achievements emerged in that year: he laid the groundwork for modern optics, developed calculus, and derived the law of universal gravitation. All of these were foundational contributions that shaped the next hundred years of science and greatly accelerated its overall development.

The second miracle year is 1905, when the 26-year-old Einstein published four papers in quick succession in Annalen der Physik, covering the photoelectric effect (which laid the foundation for quantum mechanics), Brownian motion (which became an important reference for analyzing random processes), special relativity, and mass-energy equivalence (the famous formula E=mc^2). Later assessments held that each of the four papers exceeded the average standard of a Nobel Prize in Physics (Einstein himself received the Nobel Prize for the photoelectric effect paper), once again pushing human civilization several steps forward.

And 2023, which has just ended, will probably also be called another "miracle year" because of ChatGPT.

We consider 2023 another "miracle year" in the history of technology not only because of GPT's huge progress in natural language understanding and generation, but also because, through the evolution of GPT, humans have worked out the scaling law of large language model capabilities: expanding model parameters and training data improves the model's capabilities exponentially, and this process shows no bottleneck in the short term (as long as there is enough computing power).

This capability goes far beyond understanding language and generating dialogue, and can be widely used in various technological fields. Taking the application of large language models in the field of biology as an example:

- In 2018, Nobel laureate Frances Arnold said at the award ceremony, "Today, we can read, write, and edit any DNA sequence in practical applications, but we still cannot compose it." Just five years later, in 2023, researchers from Salesforce Research, Stanford University, and a Silicon Valley AI startup published a paper in Nature Biotechnology. Using a model based on GPT-3, they generated 1 million new proteins and found two with completely different structures, both with antibacterial properties, that may offer a way to fight bacterial resistance beyond antibiotics. In other words, with the help of AI, the bottleneck of protein "creation" has been broken.

- Before that, DeepMind's AlphaFold algorithm predicted the structures of almost all of the roughly 214 million known proteins within 18 months, hundreds of times more than all previous work by human structural biologists combined.

With the help of AI models, fields ranging from hard sciences such as biotechnology, materials science, and drug development to the humanities, such as law and art, will undergo earth-shaking changes, and 2023 is the year it all began.

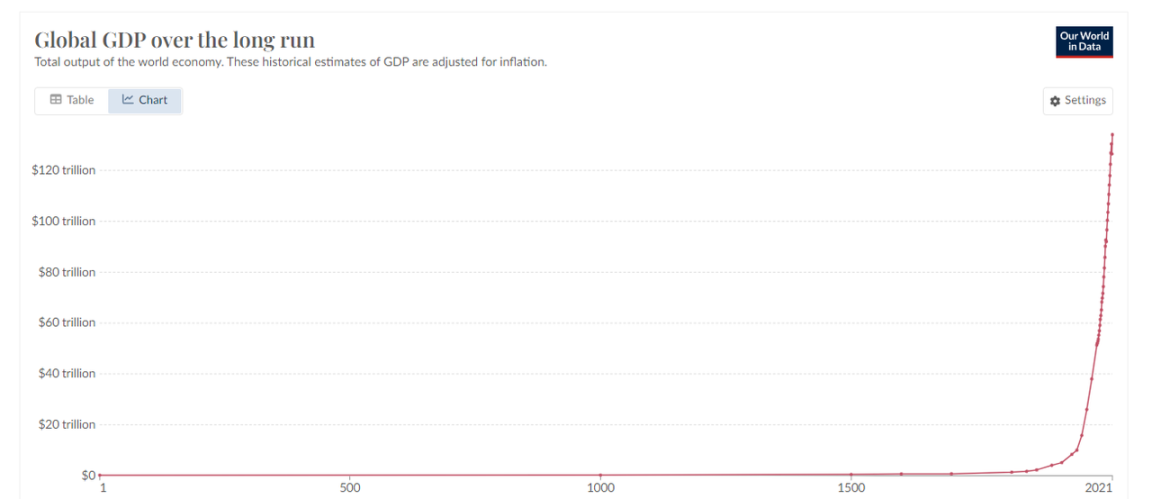

We all know that human wealth creation has grown exponentially over the past century, and the rapid maturation of AI technology will inevitably further accelerate this process.

Global GDP trend chart, data source: World Bank

1.2 The Combination of AI and Crypto

To fundamentally understand the necessity of combining AI and Crypto, we can start with the complementary characteristics of the two.

Complementary Characteristics of AI and Crypto

AI has three attributes:

- Randomness: The mechanism behind AI's content generation is an exploratory black box that is difficult to reproduce, so its results are random.

- Resource-intensive: AI is a resource-intensive industry, requiring a large amount of energy, chips, and computing power.

- Human-like intelligence: AI will (soon) be able to pass the Turing test, after which it will be difficult to distinguish humans from machines.*

※On October 30, 2023, a research team from the University of California, San Diego, released Turing test results for GPT-3.5 and GPT-4 (test report). GPT-4 scored 41%, only 9 percentage points below the 50% passing line, while human participants in the same test scored 63%. The score is the percentage of interrogators who believed the party they were chatting with was a real person. A score above 50% means that more than half of the interrogators judged the conversation partner to be human rather than a machine, which counts as passing the Turing test.

While AI creates new leaps in productivity for humans, these three attributes also bring huge challenges to human society, namely:

- How to verify and control the randomness of AI, making randomness an advantage rather than a defect.

- How to meet the huge energy and computing power gap required by AI.

- How to distinguish between humans and machines.

The characteristics of crypto and the blockchain economy may be the remedy for the challenges AI brings. The crypto economy has three key features:

- Determinism: Businesses run on blockchain, code, and smart contracts, with clear rules and boundaries, resulting in high determinism.

- Efficient resource allocation: The crypto economy has built a huge global free market in which resources are priced, funded, and circulated very quickly. Because of tokens, incentives can accelerate the matching of supply and demand, helping a market reach its critical point faster.

- Trustless: The ledger is public and the code is open source, so anyone can easily verify them, creating a "trustless" system, while zero-knowledge (ZK) technology avoids exposing private data during verification.

Next, three examples illustrate the complementarity of AI and the crypto economy.

Example A: Solving Randomness with AI Agents Based on the Crypto Economy

AI agents (representative projects include Fetch.AI) are AI programs that carry out tasks on behalf of humans. Suppose we want our AI agent to handle a financial transaction, such as "buy $1,000 worth of BTC." The agent may face two situations:

In situation one, it must interface with traditional financial institutions (such as BlackRock) to buy BTC ETFs, which raises many compatibility problems between AI agents and centralized institutions, such as KYC, data review, login, and identity verification. This is currently very cumbersome.

In situation two, it operates natively in the crypto economy, and things become much simpler. It can use Uniswap or a trading aggregator to sign and place orders directly from your account and receive WBTC (or another wrapped form of BTC), making the whole process quick and simple. In fact, this is what trading bots already do: they act as basic AI agents focused solely on trading. As AI is integrated into them, trading bots will inevitably be able to execute more complex trading intents, for example: tracking 100 smart-money addresses on-chain, analyzing their trading strategies and success rates, using 10% of the funds in my address to execute similar trades over one week, stopping if the results are unsatisfactory, and summarizing the likely reasons for failure.

AI works better inside a blockchain system essentially because the crypto economy's rules are clear and access to the system is permissionless. When AI executes tasks within well-defined rules, the potential risks of its randomness are also smaller. For example, AI already outperforms humans at card games and video games, because these are closed sandboxes with clear rules. By contrast, progress in autonomous driving is relatively slow, because an open real-world environment poses greater challenges and we are less tolerant of randomness in how AI solves problems there.
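The trading intent in Example A (use at most 10% of the funds, run for a week, stop if results are poor) is the kind of deterministic rule set that can bound an agent's random behavior. The minimal Python sketch below assumes a hypothetical `TradingMandate` with a 5% loss limit chosen purely for illustration; it does not call Uniswap, any exchange, or any real bot API. Whatever order size the model proposes, only the clipped size would be executed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TradingMandate:
    """Hypothetical hard limits a user gives an AI trading agent."""
    max_fraction_of_funds: float  # 0.10 -> at most 10% of the wallet
    max_duration_days: float      # 7 -> stop after one week
    max_drawdown: float           # 0.05 -> stop after a 5% loss (illustrative value)


def allowed_order_size(m: TradingMandate, wallet_value: float,
                       already_allocated: float, requested: float) -> float:
    """Clip whatever size the model proposes to the remaining budget."""
    budget = m.max_fraction_of_funds * wallet_value
    remaining = max(0.0, budget - already_allocated)
    return max(0.0, min(requested, remaining))


def should_stop(m: TradingMandate, days_elapsed: float,
                allocated: float, current_value: float) -> bool:
    """Deterministic stop rule: time limit reached or loss limit breached."""
    if days_elapsed >= m.max_duration_days:
        return True
    if allocated > 0 and (allocated - current_value) / allocated >= m.max_drawdown:
        return True
    return False


mandate = TradingMandate(max_fraction_of_funds=0.10, max_duration_days=7, max_drawdown=0.05)
print(allowed_order_size(mandate, wallet_value=10_000, already_allocated=600, requested=1_000))
print(should_stop(mandate, days_elapsed=3, allocated=1_000, current_value=940))
```

The design choice is that the model only proposes; the rule layer decides. That separation is what the clear, permissionless rules of the crypto economy make easy to enforce.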

Example B: Shaping and Aggregating Resources through Token Incentives

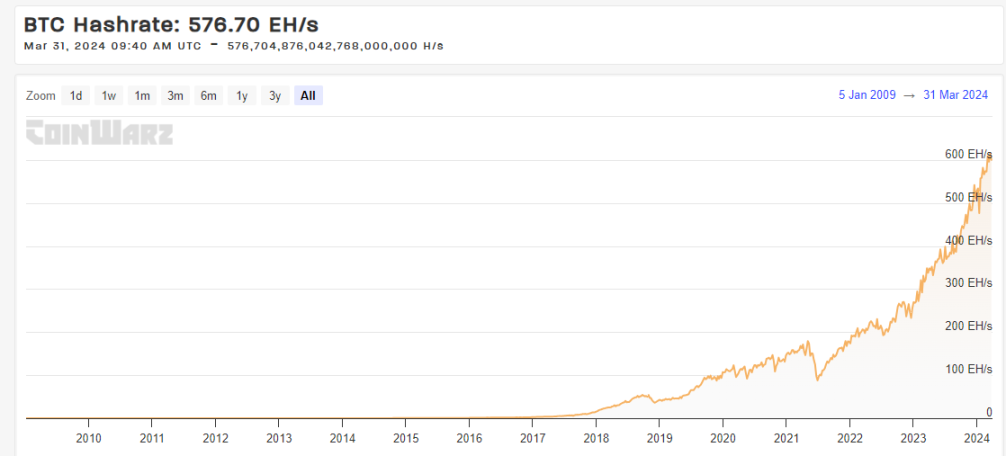

The global computing network behind BTC, with a current total hashrate of 576.70 EH/s, exceeds the combined computing power of any country's supercomputers. Its growth is driven by simple and fair network incentives.

BTC network computing power trend, source: https://www.coinwarz.com/

In addition, DePIN projects such as Mobile are also trying to use token incentives to build two-sided supply-and-demand markets and achieve network effects. IO.NET, the focus of the rest of this article, is designed as a platform that aggregates AI computing power, hoping to unlock more latent AI computing power through its token model.

Example C: Open-Source Code and ZK: Distinguishing Humans from Machines while Protecting Privacy

Worldcoin, a Web3 project co-founded by OpenAI founder Sam Altman, uses a hardware device called the Orb to generate a unique, anonymous hash from a person's iris biometrics using ZK technology, which serves for identity verification and for distinguishing humans from machines. In early March of this year, the Web3 art project Drip began using Worldcoin's ID to verify real users and distribute rewards.

In addition, Worldcoin recently open-sourced the code for its Orb iris hardware, offering assurance about the security and privacy of users' biometric data.
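One common building block behind "one human, one claim" reward systems is an app-scoped nullifier: a value derived from a person's secret identity key and an application ID, which repeats if the same person claims twice in the same app but cannot be linked across apps without the secret. The Python sketch below illustrates only that deduplication idea, with hypothetical names throughout. It is a deliberate simplification and not Worldcoin's protocol: in a real system the secret stays on the user's device and a zero-knowledge proof shows the nullifier belongs to a registered identity, a step this sketch omits entirely.

```python
import hashlib


def nullifier(identity_secret: bytes, app_id: str) -> str:
    """App-scoped tag: stable for one identity within one app, different across apps.
    (Simplified: no zero-knowledge proof, the secret is handled directly.)"""
    return hashlib.sha256(identity_secret + b"|" + app_id.encode()).hexdigest()


class RewardDistributor:
    """Hypothetical reward pool that pays each identity at most once."""

    def __init__(self, app_id: str):
        self.app_id = app_id
        self.seen: set[str] = set()

    def claim(self, identity_secret: bytes) -> bool:
        tag = nullifier(identity_secret, self.app_id)
        if tag in self.seen:
            return False  # the same person is trying to claim twice
        self.seen.add(tag)
        return True


pool = RewardDistributor("example-art-drop")   # hypothetical app ID
alice = b"alice-high-entropy-secret"           # stand-in for a real identity secret
print(pool.claim(alice))  # True
print(pool.claim(alice))  # False
```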

Overall, thanks to the determinism of code and cryptography, the advantages in resource circulation and fundraising that come from permissionless access and token mechanisms, and the trustless nature of open-source code and public ledgers, the crypto economy has become an important potential solution to the challenges AI poses to human society.

Among these, the most urgent challenge, and the one with the strongest commercial demand, is AI products' extreme thirst for computing resources, centered on the huge demand for chips and computing power.

This is also the main reason for the rise of distributed computing projects in the overall AI track in this bull market cycle.

The Business Necessity of Distributed Computing (Decentralized Compute)

AI requires a large amount of computing resources, whether for model training or inference.

Practice in training large language models has confirmed one fact: once the scale of parameters and data is large enough, large language models exhibit capabilities they did not have before. The exponential leap in capability from each GPT generation to the next comes from exponential growth in the amount of compute used to train it.

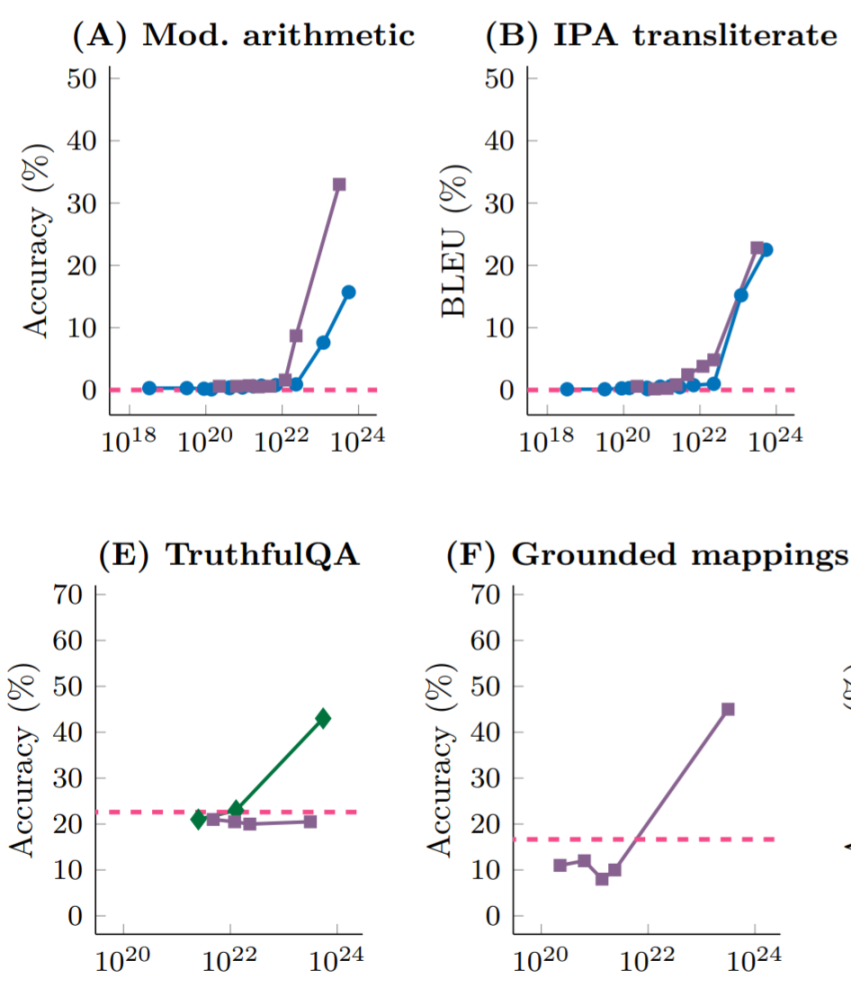

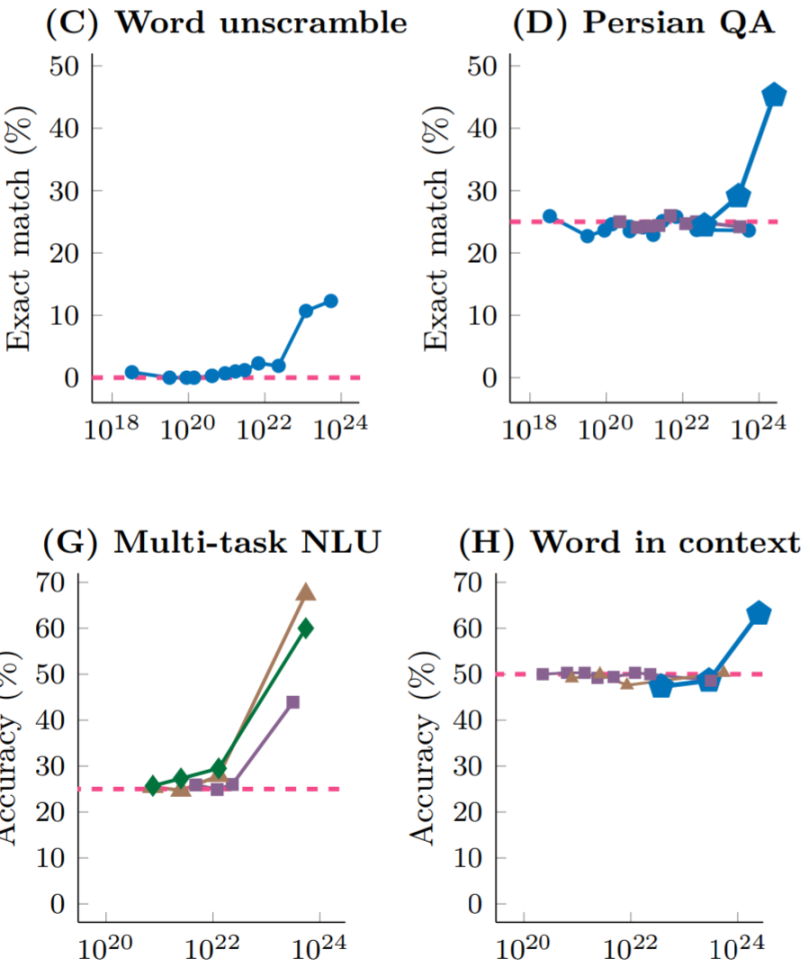

Research from DeepMind and Stanford University shows that, across different large language models and different tasks (arithmetic, Persian question answering, natural language understanding, etc.), as the model parameter scale increases (and with it the training compute), performance on every task is close to random guessing until training compute reaches about 10^22 FLOPs (here FLOPs means the total number of floating-point operations, a measure of training compute, not operations per second). Once training compute exceeds that critical value, task performance improves sharply, regardless of the language model.

Source (both figures): Emergent Abilities of Large Language Models
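To get a feel for the 10^22 FLOPs threshold, training compute can be estimated with a widely used rule of thumb for dense transformer models: roughly 6 floating-point operations per parameter per training token (about 2 for the forward pass and 4 for the backward pass). The Python sketch below assumes that approximation; it is not the method of the cited paper, and real totals vary with architecture and implementation. GPT-3's published configuration (175 billion parameters, about 300 billion training tokens) lands around 3 x 10^23 FLOPs, well above the threshold.

```python
# Approximate threshold cited in the text; emergence points vary somewhat by task.
EMERGENCE_THRESHOLD_FLOPS = 1e22


def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb total training compute for a dense transformer:
    about 6 FLOPs per parameter per token (forward ~2, backward ~4)."""
    return 6.0 * n_params * n_tokens


def above_threshold(n_params: float, n_tokens: float) -> bool:
    return training_flops(n_params, n_tokens) >= EMERGENCE_THRESHOLD_FLOPS


gpt3 = training_flops(175e9, 300e9)   # roughly 3.15e23 FLOPs
small = training_flops(1e9, 1e12)     # 1B parameters on 1T tokens: 6e21 FLOPs
print(f"GPT-3: {gpt3:.2e}, above threshold: {above_threshold(175e9, 300e9)}")
print(f"1B model: {small:.2e}, above threshold: {above_threshold(1e9, 1e12)}")
```

Because compute grows with the product of parameters and tokens, scaling either one by 10x raises the bill by 10x, which is why the threshold translates so directly into demand for chips.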

It is precisely this law that "massive compute produces miracles," and its validation in practice, that led OpenAI founder Sam Altman to propose raising $7 trillion to build advanced chip fabs ten times the scale of TSMC (a part expected to cost $1.5 trillion), with the remaining funds going to chip production and model training.

Besides model training, model inference also requires a large amount of computing power (though less than training), so a thirst for chips and computing power is the norm for participants in the AI sector.

Compared to centralized AI computing providers such as Amazon Web Services, Google Cloud Platform, and Microsoft Azure, the main value proposition of distributed AI computing includes:

- Accessibility: Getting access to GPUs through cloud services such as AWS, GCP, or Azure typically takes weeks, and popular GPU models are often out of stock. In addition, customers often have to sign long-term, inflexible contracts with these large companies to obtain computing power. Distributed computing platforms, by contrast, can offer flexible hardware choices and greater accessibility.

- Lower pricing: By using idle chips, and with the network protocol paying token subsidies to chip and computing power suppliers, distributed computing networks may be able to offer cheaper computing power.

- Censorship resistance: Cutting-edge AI chips and their supply are currently monopolized by large tech companies, and governments, led by the United States, are increasing scrutiny of AI computing services. The ability to obtain AI computing power in a distributed, flexible, and unrestricted way is becoming an explicit demand, and it is also the core value proposition of Web3-based computing platforms.

If fossil energy was the lifeblood of the industrial age, computing power may be the lifeblood of the new digital age opened up by AI, and its supply will become the infrastructure of the AI era. Just as stablecoins have become a thriving offshoot of fiat currency in the Web3 era, will the distributed computing market become a fast-growing offshoot of the AI computing market?

Because this market is still early, much remains to be seen. However, the following factors may drive the adoption of distributed computing, both as a narrative and in the market:

- Persistent GPU supply shortages. The ongoing shortage of GPUs may push some developers to try distributed computing platforms.

- Expanding regulation. Obtaining AI computing services from large cloud platforms requires KYC and multiple layers of review. This may actually encourage the adoption of distributed computing platforms, especially in restricted and sanctioned regions.

- Token price stimulus. Rising token prices during a bull market increase the value of the platform's subsidies to GPU suppliers, attracting more suppliers, expanding the market, and lowering the actual price consumers pay.
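The token-price mechanism in the last bullet can be made concrete with a toy model. Assume, hypothetically, that a supplier's all-in cost is fixed per GPU-hour and that the protocol pays a fixed number of tokens per GPU-hour on top of the cash fee; the lowest cash price the supplier can accept is then its cost minus the USD value of that token reward. The Python sketch below uses made-up parameters and ignores token volatility, emission schedules, and sell pressure.

```python
def min_cash_price(cost_per_gpu_hour: float,
                   tokens_per_gpu_hour: float,
                   token_price_usd: float) -> float:
    """Lowest cash price (USD per GPU-hour) at which a supplier still breaks even,
    given a protocol token reward on top of the cash fee. Floored at zero."""
    subsidy_usd = tokens_per_gpu_hour * token_price_usd
    return max(0.0, cost_per_gpu_hour - subsidy_usd)


# Hypothetical supplier: $1.00/hour all-in cost, 0.5 tokens/hour reward.
for price in (1.0, 1.6, 3.0):
    floor = min_cash_price(1.0, 0.5, price)
    print(f"token at ${price:.2f}: break-even cash price ${floor:.2f}")
```

As the token price rises, the break-even cash price falls, which is the channel through which a bull market can subsidize cheaper compute; the same arithmetic runs in reverse when token prices fall.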

However, the challenges of distributed computing platforms are also quite apparent:

Technical and engineering challenges

- Work verification: Because deep learning models are computed layer by layer, with each layer depending on the output of the previous one, verifying that a computation is valid requires re-executing all of the preceding work, so verification is neither simple nor efficient. To address this, distributed computing platforms need new algorithms or approximate verification techniques that provide probabilistic guarantees that results are correct, rather than absolute certainty.

- Parallelization: Distributed computing platforms inevitably aggregate a long tail of chip supply, so each device can contribute only limited computing power. A single supplier can hardly complete an AI training or inference task on its own in a short time, so tasks must be split up and distributed in parallel to shorten the overall completion time. Parallelization, however, inevitably raises a series of problems, such as how to decompose tasks (especially complex deep learning tasks), how to handle data dependencies, and the extra communication cost between devices.

- Privacy protection: How can the buyer's data and models be kept from being exposed to the nodes that execute the task?
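One generic form of the probabilistic verification mentioned under work verification is random spot-checking: the buyer (or a verifier node) re-executes a few randomly chosen units of work, such as individual training steps between checkpoints, and compares results. The Python sketch below assumes work can be split into independently re-checkable units and that a dishonest node falsifies a fixed fraction of them; it illustrates the idea and is not IO.NET's or any specific protocol's verification scheme.

```python
import math


def detection_probability(cheat_fraction: float, checks: int) -> float:
    """Probability that at least one falsified unit is sampled when `checks`
    units are drawn uniformly at random (with replacement)."""
    return 1.0 - (1.0 - cheat_fraction) ** checks


def checks_needed(cheat_fraction: float, target: float) -> int:
    """Smallest number of random spot checks reaching `target` detection
    probability. Requires 0 < cheat_fraction < 1 and 0 < target < 1."""
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - cheat_fraction))


# A node that fakes 10% of its work is caught with at least 99% probability
# after re-checking a few dozen units, far less than re-running everything.
n = checks_needed(0.10, 0.99)
print(n, round(detection_probability(0.10, n), 4))
```

The guarantee is probabilistic, as the bullet says: the number of checks depends on how much cheating must be caught, not on the total size of the job.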
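The simplest way to split a training job across many small devices, as the parallelization bullet describes, is data parallelism: each worker computes a gradient on its own shard of the data, and the gradients are averaged (in practice via an all-reduce) before every update. The pure-Python sketch below uses a toy one-parameter linear model and hypothetical helper names. It shows that the size-weighted average of shard gradients equals the full-batch gradient, and that workers must synchronize once per step, which is where the extra communication cost comes from. Real deep learning jobs add model or pipeline parallelism and far larger messages.

```python
def grad_mse(w: float, xs: list[float], ys: list[float]) -> float:
    """Gradient of mean squared error for the toy model y ~ w * x."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def shard(data: list[float], n_workers: int) -> list[list[float]]:
    """Split data into n_workers contiguous, nearly equal shards."""
    k, r = divmod(len(data), n_workers)
    shards, start = [], 0
    for i in range(n_workers):
        end = start + k + (1 if i < r else 0)
        shards.append(data[start:end])
        start = end
    return shards


def data_parallel_grad(w: float, xs: list[float], ys: list[float], n_workers: int) -> float:
    """Each worker computes a local gradient on its shard; the results are
    combined with a size-weighted average (the job an all-reduce does)."""
    total = len(xs)
    return sum(len(xs_i) / total * grad_mse(w, xs_i, ys_i)
               for xs_i, ys_i in zip(shard(xs, n_workers), shard(ys, n_workers))
               if xs_i)


def train(xs: list[float], ys: list[float], n_workers: int,
          lr: float = 0.005, steps: int = 200) -> float:
    w = 0.0
    for _ in range(steps):
        # One synchronization (gradient exchange) across all workers per step.
        w -= lr * data_parallel_grad(w, xs, ys, n_workers)
    return w


xs = [float(i) for i in range(1, 11)]
ys = [3.0 * x for x in xs]  # the true weight is 3
print(grad_mse(1.0, xs, ys), data_parallel_grad(1.0, xs, ys, n_workers=3))
print(round(train(xs, ys, n_workers=4), 6))
```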

Regulatory compliance challenges

- The permissionless nature of a distributed computing platform's two-sided market can be a selling point that attracts some customers. On the other hand, it may become a target of government enforcement as AI regulatory standards mature. In addition, some GPU suppliers may worry that the computing resources they rent out could end up serving sanctioned businesses or individuals.

Overall, unlike crypto and NFT investors, the customers of distributed computing platforms are mostly professional developers or small and medium-sized institutions. They place higher demands on the stability and continuity of the protocol's services, and price may not be their main decision factor. Distributed computing platforms still have a long way to go to win these users over.

Next, we analyze IO.NET, a new distributed computing project of this cycle, and estimate its possible valuation after its token launch by comparing it with AI and distributed computing projects currently on the market.

2. Distributed AI Computing Platform: IO.NET

2.1 Project Positioning

IO.NET is a decentralized computing network that has built a bilateral market around chips, with the supply side being chips distributed globally (mainly GPUs, as well as CPUs and Apple's iGPUs), and the demand side being artificial intelligence engineers looking to complete AI model training or inference tasks.

On the official website of IO.NET, it is written:

Our Mission

Putting together one million GPUs in a DePIN – decentralized physical infrastructure network.

Compared with existing cloud AI computing providers, the main selling points it promotes are:

- Elastic combination: AI engineers can freely select and combine the chips they need to form a "cluster" to complete their computing tasks.

- Rapid deployment: There is no need to wait weeks for approval (as with centralized vendors such as AWS); a cluster can be deployed in seconds and tasks can start within tens of seconds.

- Low-cost service: The cost of the service is 90% lower than mainstream vendors.

In addition, IO.NET also plans to launch services such as an AI model store in the future.

2.2 Product Mechanism and Business Data

Product Mechanism and Deployment Experience

Similar to Amazon Cloud, Google Cloud, and Alibaba Cloud, IO.NET offers a computing service called IO Cloud. IO Cloud is a distributed, decentralized chip network that can execute Python-based machine learning code and run AI and machine learning programs.

The basic business unit of IO Cloud is the cluster (Clusters). A cluster is a group of GPUs that coordinate among themselves to complete computing tasks, and AI engineers can customize clusters to their needs.

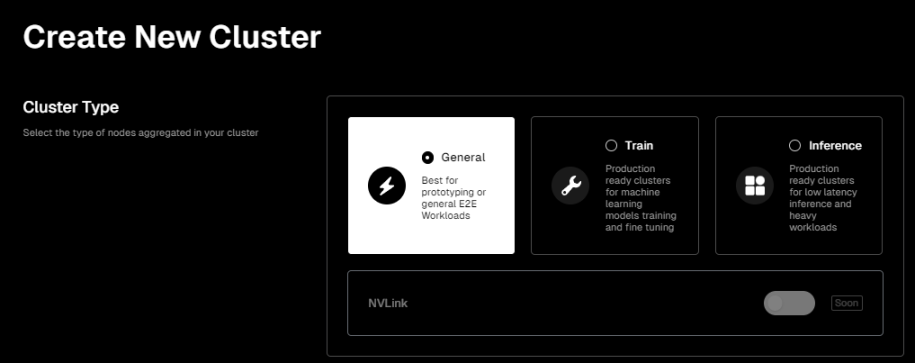

The product interface of IO.NET is very user-friendly. If you want to deploy your own chip cluster to complete AI computing tasks, you can start configuring the chip cluster you want after entering the Clusters product page.

Page information: https://cloud.io.net/cloud/clusters/create-cluster, same below

First, you need to choose your task scenario. Currently, there are three types to choose from:

- General: Provides a more general environment suitable for the early stages of projects with uncertain specific resource requirements.

- Train: Designed specifically for training and fine-tuning machine learning models. This option can provide more GPU resources, higher memory capacity, and/or faster network connections to handle these high-intensity computing tasks.

- Inference: Designed for low-latency inference and heavy-duty work. In the context of machine learning, inference refers to using trained models to make predictions or analyze new data and provide feedback. Therefore, this option focuses on optimizing latency and throughput to support real-time or near-real-time data processing needs.

Then, you choose the supplier of the chip cluster. IO.NET currently partners with Render Network and the Filecoin miner network, so users can choose chips from IO.NET or from either of those two networks to supply their clusters. In other words, IO.NET acts as an aggregator (although at the time of writing, Filecoin's service is temporarily offline). Notably, according to the page, IO.NET currently has 200,000+ GPUs online and available, while Render Network has 3,700+.

Next comes hardware selection for the cluster. Currently, IO.NET lists only GPUs as selectable hardware, not CPUs or Apple's iGPUs (M1, M2, etc.), and the GPUs are mainly from NVIDIA.

Among the officially listed GPU options, according to the author's check on that day, the IO.NET network had 206,001 GPUs online and available in total. The GeForce RTX 4090 had the largest number available (45,250), followed by the GeForce RTX 3090 Ti (30,779).

Furthermore, the A100-SXM4-80GB chip, which is more efficient in processing AI computing tasks such as machine learning, deep learning, and scientific computing (market price of $15,000+), has 7,965 units online.

The H100 80GB HBM3 graphics card from NVIDIA, designed for AI from the hardware design stage (market price of $40,000+), with training performance 3.3 times that of A100 and inference performance 4.5 times that of A100, has 86 units online.

After selecting the hardware type for the cluster, users also need to choose the region, communication speed, the number of rented GPUs, and the rental time for the cluster.

Finally, based on the comprehensive selection, IO.NET will provide you with a bill. For example, the author's cluster configuration includes:

- General task scenario

- 16 A100-SXM4-80GB chips

- Ultra High Speed connection

- Location: United States

- Rental time: 1 week

The total bill for this configuration is $3,311.6, which works out to $1.232 per GPU per hour (16 GPUs × 168 hours × $1.232 ≈ $3,311.6).

By comparison, the rental price of a single A100-SXM4-80GB on Amazon Cloud, Google Cloud, and Microsoft Azure is $5.12, $5.07, and $3.67 per GPU-hour, respectively (source: https://cloud-gpus.com/; actual prices may vary with contract terms).
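The bill and the per-card comparison can be reproduced with simple arithmetic. The Python sketch below assumes the quoted prices are per GPU per hour and that a one-week rental is billed as 168 hours; neither IO.NET's nor the cloud vendors' actual billing rules (minimum terms, discounts, data transfer) are modeled. On these numbers, the discount for this particular configuration works out to roughly 66% to 76%.

```python
HOURS_PER_WEEK = 24 * 7  # assumes a one-week rental is billed as 168 hours


def cluster_cost(price_per_gpu_hour: float, n_gpus: int, hours: float) -> float:
    """Total rental cost of a cluster at a flat per-GPU-hour price."""
    return price_per_gpu_hour * n_gpus * hours


def discount(cheaper_price: float, reference_price: float) -> float:
    """Fractional saving of cheaper_price relative to reference_price."""
    return 1.0 - cheaper_price / reference_price


IO_NET_A100 = 1.232  # USD per GPU-hour, from the author's bill
VENDORS_A100 = {"Amazon Cloud": 5.12, "Google Cloud": 5.07, "Microsoft Azure": 3.67}

io_total = cluster_cost(IO_NET_A100, 16, HOURS_PER_WEEK)
print(f"IO.NET, 16 x A100 for one week: ${io_total:,.2f}")
for vendor, price in VENDORS_A100.items():
    total = cluster_cost(price, 16, HOURS_PER_WEEK)
    print(f"{vendor}: ${total:,.2f} (IO.NET is {discount(IO_NET_A100, price):.0%} cheaper)")
```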

So on price alone, IO.NET's chip computing power is indeed much cheaper than that of the mainstream vendors, supply can be combined and purchased very flexibly, and the product is easy to use.

Business Situation

Supply side situation

As of April 4th this year, according to official data, the total supply of GPUs on the supply side of IO.NET is 371,027, and the CPU supply is 42,321. In addition, as a partner of IO.NET, Render Network has 9,997 GPUs and 776 CPUs connected to the network for supply.

At the time of writing, 214,387 of the total GPUs connected to IO.NET are online, with an online rate of 57.8%. The online rate for GPUs from Render Network is 45.1%.

What does the above supply side data mean?

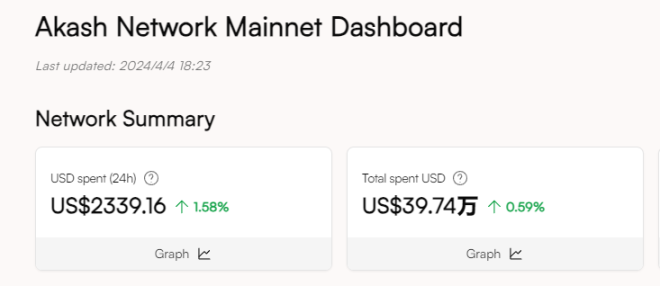

For comparison, let's introduce another well-established distributed computing project, Akash Network, which has been online for a longer time.

Akash Network launched its mainnet as early as 2020, initially focusing on distributed services for CPUs and storage. In June 2023, it launched a testnet for GPU services and launched the mainnet for distributed GPU computing in September of the same year.

According to official data from Akash, since the launch of its GPU network, although the supply side has continued to grow, the total number of GPUs connected so far is only 365.

In terms of GPU supply, IO.NET is about three orders of magnitude larger than Akash Network and is already the largest supply network in the distributed GPU computing race.
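The supply-side comparison can be checked directly from the figures quoted in this section: IO.NET's online rate, and its size gap with Akash Network expressed in orders of magnitude (the base-10 logarithm of the ratio). A minimal Python sketch; the inputs are the article's snapshot numbers and will change over time.

```python
import math

IO_NET_GPUS_TOTAL = 371_027   # connected GPUs as of April 4 (official data)
IO_NET_GPUS_ONLINE = 214_387  # online at the time of writing
AKASH_GPUS_TOTAL = 365        # GPUs connected to Akash since its GPU launch


def online_rate(online: int, total: int) -> float:
    return online / total


def orders_of_magnitude(a: float, b: float) -> float:
    """How many powers of ten separate a from b."""
    return math.log10(a / b)


print(f"IO.NET online rate: {online_rate(IO_NET_GPUS_ONLINE, IO_NET_GPUS_TOTAL):.1%}")
print(f"IO.NET vs Akash: {IO_NET_GPUS_TOTAL / AKASH_GPUS_TOTAL:,.0f}x, "
      f"about {orders_of_magnitude(IO_NET_GPUS_TOTAL, AKASH_GPUS_TOTAL):.1f} orders of magnitude")
```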

Demand side situation

On the demand side, however, IO.NET is still in the early stage of market cultivation, and actual use of the network for computing tasks is currently low. Most online GPUs have a task load of 0%; only four chip models (A100 PCIe 80GB K8S, RTX A6000 K8S, RTX A4000 K8S, and H100 80GB HBM3) are processing tasks, and apart from the A100 PCIe 80GB K8S, the other three have a task load below 20%.

The network pressure value disclosed by the official website is 0%, indicating that most of the chip supply is in an online standby state.

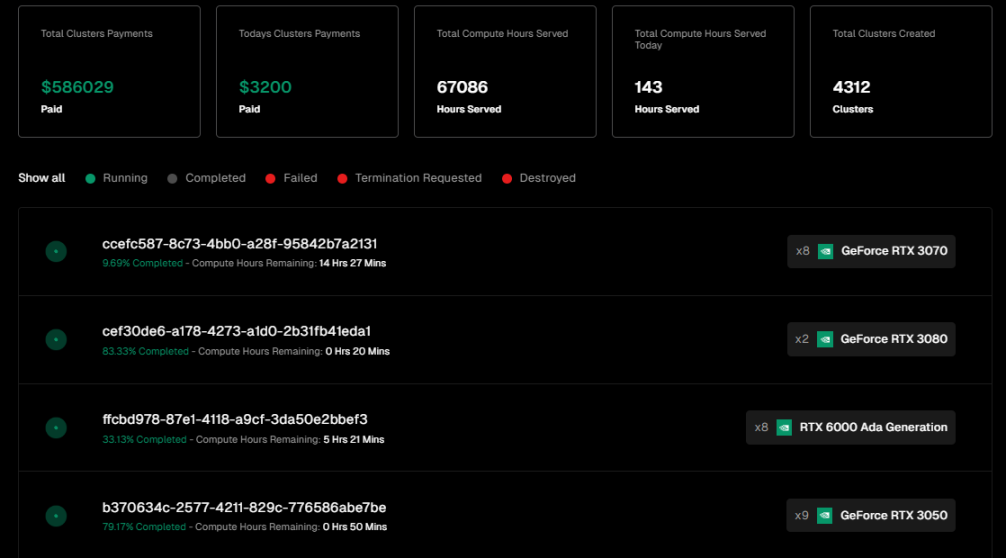

And in terms of network fees, IO.NET has generated service fees of $586,029, with a daily fee of $3,200.

Data source: https://cloud.io.net/explorer/clusters

The scale of the network settlement fees, both in total and daily transaction volume, is in the same order of magnitude as Akash. However, most of Akash's network revenue comes from the CPU side, with a CPU supply of over 20,000.

Data source: https://stats.akash.network/

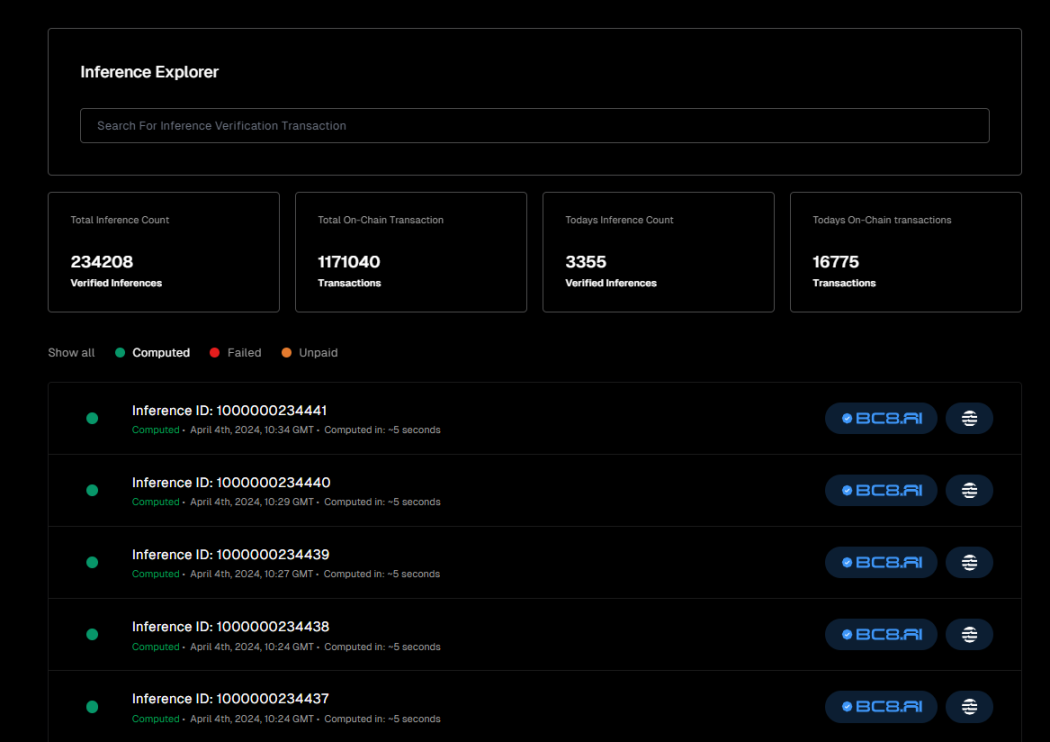

In addition, IO.NET has also disclosed business data on AI inference tasks processed by the network. As of now, it has processed and verified over 230,000 inference tasks, but most of this business volume is generated by the project BC8.AI sponsored by IO.NET.

Data source: https://cloud.io.net/explorer/inferences

From the current business data, IO.NET's supply side expansion is progressing smoothly. Stimulated by the airdrop expectations and the community activity codenamed "Ignition," it has quickly aggregated a large amount of AI chip computing power. However, its expansion on the demand side is still in the early stages, and organic demand is currently insufficient. Whether the current lack of demand is due to the lack of expansion on the consumer side or the unstable service experience, leading to a lack of large-scale adoption, still needs to be evaluated.

However, since the AI computing gap is hard to close in the short term, many AI engineers and projects are seeking alternatives, which may draw their interest toward decentralized providers. In addition, IO.NET has not yet launched economic or activity incentives for the demand side, and its product experience is still improving. The gradual matching of supply and demand is therefore still worth looking forward to.

2.3 Team Background and Financing Situation

Team Situation

At the inception of IO.NET, the core team's business was in quantitative trading. Until June 2022, they had been focusing on developing institutional-grade quantitative trading systems for stocks and crypto assets. Due to the system's backend demand for computing power, the team began exploring the possibility of decentralized computing and eventually focused on reducing the cost of GPU computing services.

Founder & CEO: Ahmad Shadid

Ahmad Shadid has been involved in quantitative and financial engineering work before IO.NET, and is also a volunteer for the Ethereum Foundation.

CMO & Chief Strategy Officer: Garrison Yang

Garrison Yang officially joined IO.NET in March of this year. Previously, he was the VP of Strategy and Growth at Avalanche and graduated from the University of California, Santa Barbara.

COO: Tory Green

Tory Green is the Chief Operating Officer of IO.NET. Previously, he was the Chief Operating Officer of Hum Capital and the Director of Corporate Development and Strategy at Fox Mobile Group. He graduated from Stanford.

According to IO.NET's LinkedIn information, the headquarters is located in New York, with a branch in San Francisco, and the team currently has over 50 employees.

Financing Situation

As of now, IO.NET has only disclosed one round of financing, which is a Series A round completed in March of this year with a valuation of $1 billion and a total raise of $30 million. The round was led by Hack VC, with other participating investors including Multicoin Capital, Delphi Digital, Foresight Ventures, Animoca Brands, Continue Capital, Solana Ventures, Aptos, LongHash Ventures, OKX Ventures, Amber Group, SevenX Ventures, and ArkStream Capital, among others.

It is worth mentioning that, perhaps due to receiving investment from the Aptos Foundation, the BC8.AI project, which was originally settled and accounted for on Solana, has now transitioned to the equally high-performance L1 Aptos.

2.4 Valuation Calculation

According to the founder and CEO Ahmad Shadid, IO.NET will launch its token at the end of April.

IO.NET has two benchmark projects for valuation: Render Network and Akash Network, both of which are representative distributed computing projects.

We can deduce the market value range of IO.NET in two ways: 1. Price-to-Sales ratio, i.e., market value / revenue ratio; 2. Market value / network chip count ratio.
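Both methods apply the same comparable-multiple logic: take a reference project's FDV divided by some metric (annualized revenue for the price-to-sales ratio, connected chip count for the market-to-chip ratio) and multiply IO.NET's value of the same metric by it. The Python sketch below shows the mechanics only; the reference figures in the usage lines are hypothetical placeholders, not the Render or Akash numbers behind the article's tables, and the result is only as meaningful as the chosen references and metric.

```python
def implied_fdv(target_metric: float, ref_fdv: float, ref_metric: float) -> float:
    """Apply a reference project's FDV-to-metric multiple to the target's metric."""
    if ref_metric <= 0:
        raise ValueError("reference metric must be positive")
    return target_metric * (ref_fdv / ref_metric)


def valuation_range(target_metric: float,
                    references: dict[str, tuple[float, float]]) -> tuple[float, float]:
    """references maps name -> (fdv, metric); returns the (low, high) implied FDV."""
    implied = [implied_fdv(target_metric, fdv, metric) for fdv, metric in references.values()]
    return min(implied), max(implied)


# Hypothetical placeholder references: (FDV, metric) in consistent units.
refs = {"reference_low": (1_000.0, 100.0), "reference_high": (3_000.0, 50.0)}
print(valuation_range(20.0, refs))  # (200.0, 1200.0)
```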

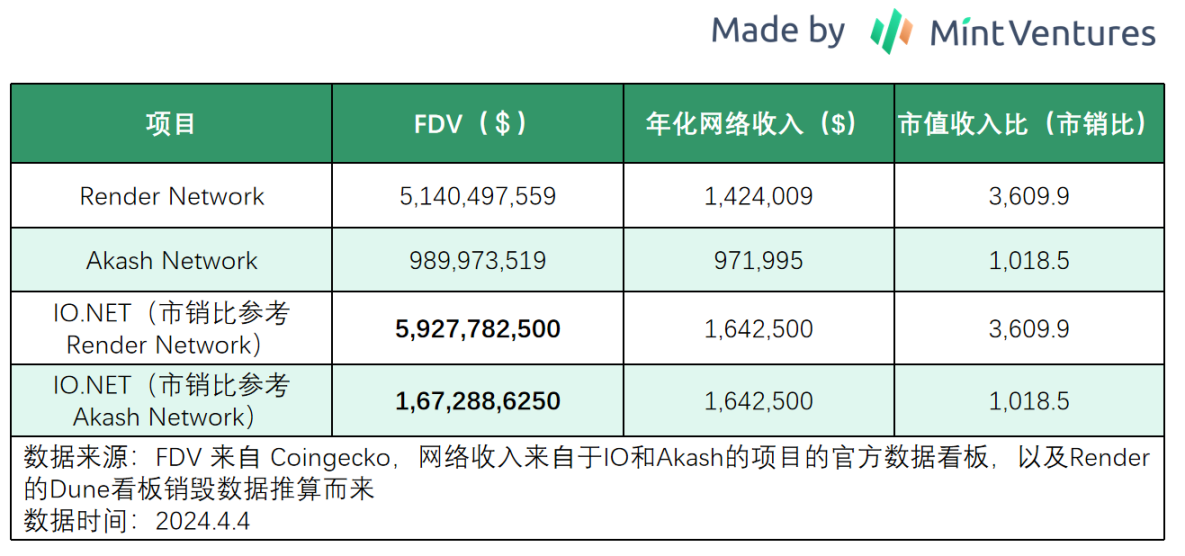

Let's first look at the valuation deduction based on the Price-to-Sales ratio:

From the perspective of the Price-to-Sales ratio, Akash can be used as the lower limit of the valuation range for IO.NET, while Render serves as a high-end pricing reference, with an FDV range of $1.67 billion to $5.93 billion.

However, given that IO.NET is a newer project with a hotter narrative, a smaller initial circulating market cap, and a currently larger supply side, there is a real chance that its FDV will exceed Render's.

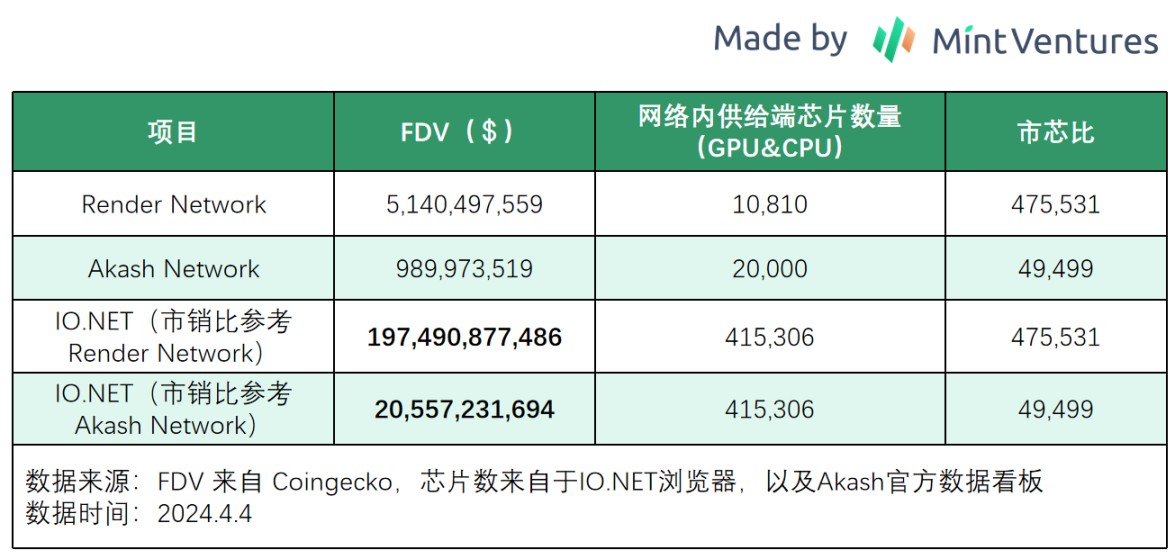

Now, let's look at another perspective for comparative valuation, the "Market-to-Chip" ratio.

In a market background where the demand for AI computing power exceeds supply, the most important factor for distributed AI computing networks is the scale of GPU supply. Therefore, we can use the "Market-to-Chip" ratio for horizontal comparison, using the "project's total market value-to-network chip count ratio" to deduce the potential valuation range of IO.NET as a market value reference for readers.

If we deduce the market value range of IO.NET based on the Market-to-Chip ratio, with Render Network's Market-to-Chip ratio as the upper limit and Akash Network as the lower limit, its FDV range is $20.6 billion to $197.5 billion.

Even readers who are bullish on IO.NET would probably consider this an extremely optimistic estimate.

Furthermore, we need to consider that the current large number of online chips in IO.NET has been stimulated by airdrop expectations and incentive activities. The actual online number on the supply side still needs to be observed after the project is officially launched.

Therefore, overall, valuation calculations based on the Price-to-Sales ratio may be more indicative.

As a project with the triple halo of AI+DePIN+Solana ecosystem, the market performance of IO.NET after its launch is something we eagerly await.

Reference Information

Delphi Digital: The Real Merge

Galaxy: Understanding the Intersection of Crypto and AI
