Source: Synced

Image source: Generated by Wujie AI

There are new open-source models available for use.

From Llama and Llama 2 to Mixtral 8x7B, open-source models keep setting new performance records. Because Mixtral 8x7B outperforms Llama 2 70B and GPT-3.5 on most benchmarks, it is also regarded as an open-source option that comes "very close to GPT-4".

In a recent paper, Mistral AI, the company behind the model, disclosed some technical details of Mixtral 8x7B and introduced the Mixtral 8x7B – Instruct chat model. The Instruct model surpasses the GPT-3.5 Turbo, Claude-2.1, Gemini Pro, and Llama 2 70B chat models on human evaluation benchmarks, and shows less bias on benchmarks such as BBQ and BOLD.

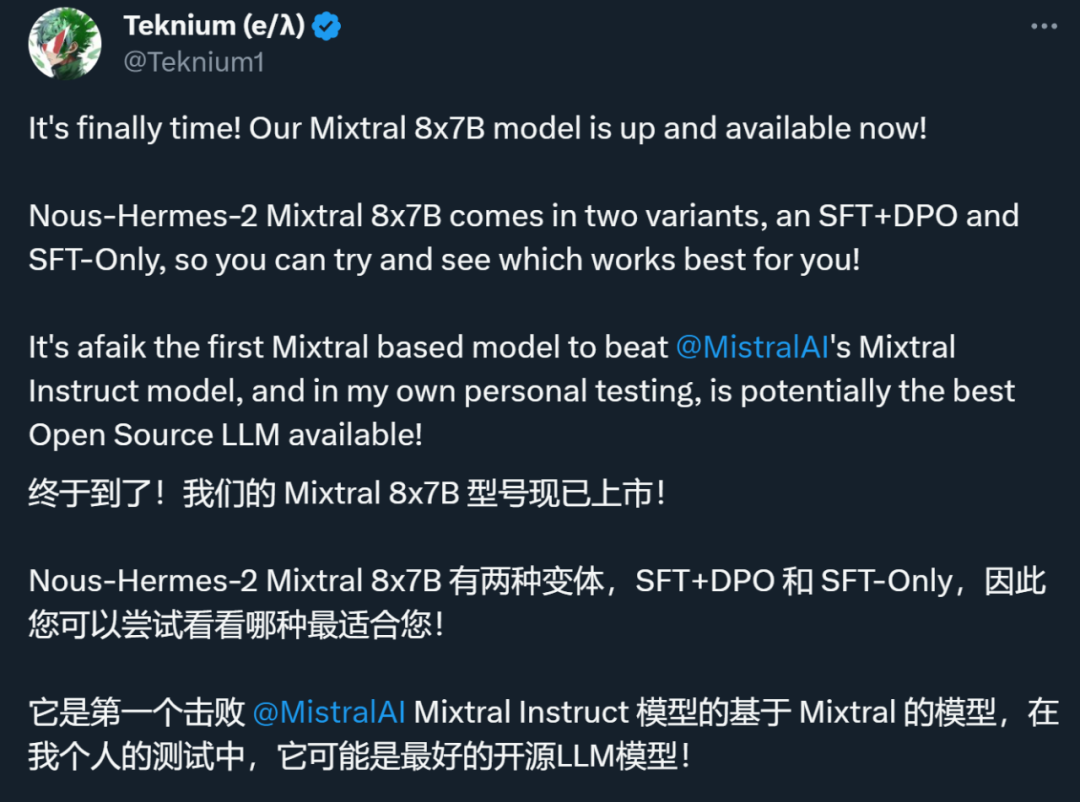

Recently, however, Mixtral Instruct has itself been surpassed. Nous Research announced that its new model trained on top of Mixtral 8x7B, Nous-Hermes-2 Mixtral 8x7B, beats Mixtral Instruct on many benchmarks and achieves SOTA performance.

The co-founder of the company, @Teknium (e/λ), stated, "To my knowledge, this is the first Mixtral-based model that beats Mixtral Instruct. In my personal tests, it may be the best open-source LLM model!"

The model card states that the model was trained on more than 1 million entries, mainly GPT-4-generated data together with other high-quality data from open datasets across the AI field. It is released in two versions that differ in the fine-tuning method applied:

- Nous Hermes 2 Mixtral 8x7B SFT: fine-tuned with SFT only. Link: Nous Hermes 2 Mixtral 8x7B SFT

- Nous Hermes 2 Mixtral 8x7B DPO: fine-tuned with SFT followed by DPO. Link: Nous Hermes 2 Mixtral 8x7B DPO. A DPO adapter is also available. Link: Nous Hermes 2 Mixtral 8x7B DPO Adapter
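
To make the difference between the two versions concrete: SFT trains on target responses directly, while DPO additionally trains on preference pairs, rewarding the model for assigning a "chosen" response more probability than a "rejected" one relative to a frozen reference model. The following is a minimal sketch of the standard DPO loss for a single pair, not Nous Research's actual training code. The function names, the example log-probabilities, and the value `beta=0.1` are illustrative assumptions; the article gives no hyperparameters.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for one preference pair.

    Inputs are summed sequence log-probabilities of the chosen and rejected
    responses under the model being trained (policy) and under the frozen
    reference model (here, that would be the SFT checkpoint).
    beta=0.1 is an assumed illustrative value.
    """
    # How much more (or less) likely each response is under the policy
    # than under the reference model.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(logits)) equals softplus(-logits).
    return softplus(-logits)


# Hypothetical log-probabilities, chosen only for illustration.
unchanged = dpo_loss(-10.0, -12.0, -10.0, -12.0)  # policy equals reference
improved = dpo_loss(-8.0, -14.0, -10.0, -12.0)    # policy prefers chosen more
print(unchanged, improved)
```

When the policy is identical to the reference, the loss equals log 2; it falls as the policy shifts probability toward the chosen response and away from the rejected one.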

As for why two versions are released, @Teknium (e/λ) provided the following explanation:

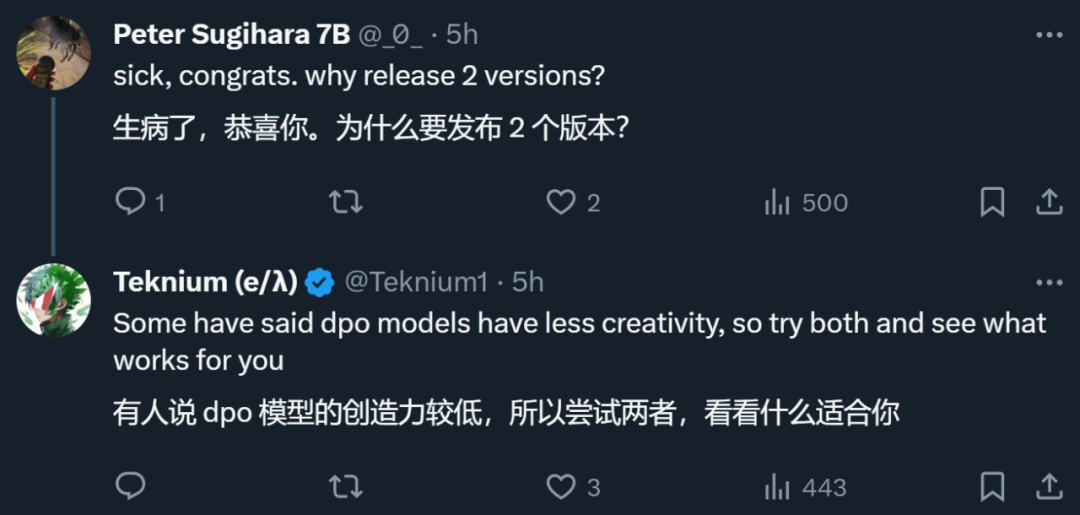

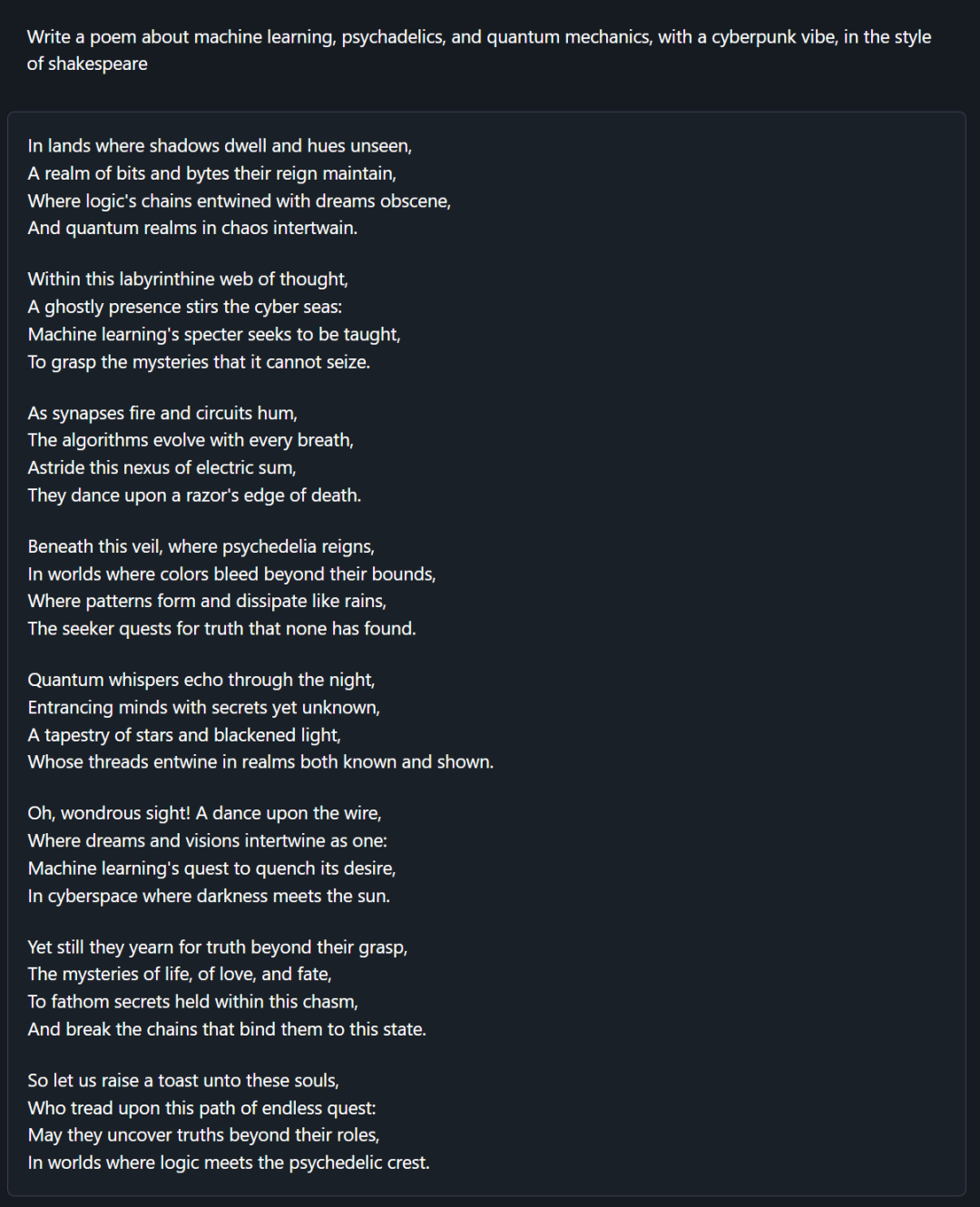

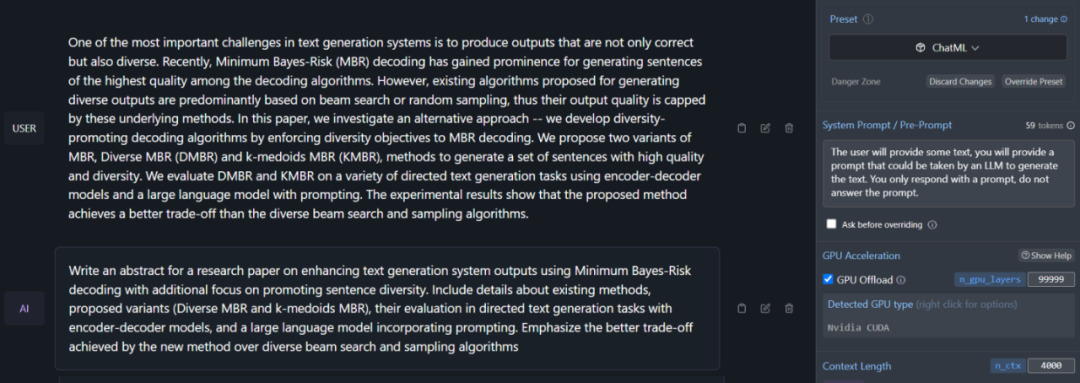

Here are some output examples of the model:

- Writing code for data visualization

- Writing cyberpunk psychedelic poetry

- Creating prompts based on input text

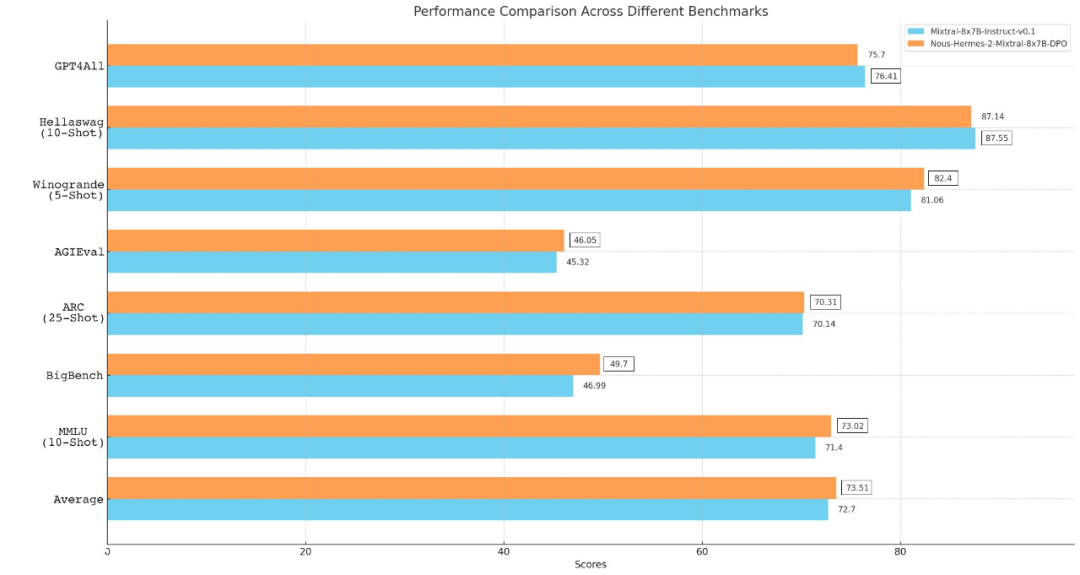

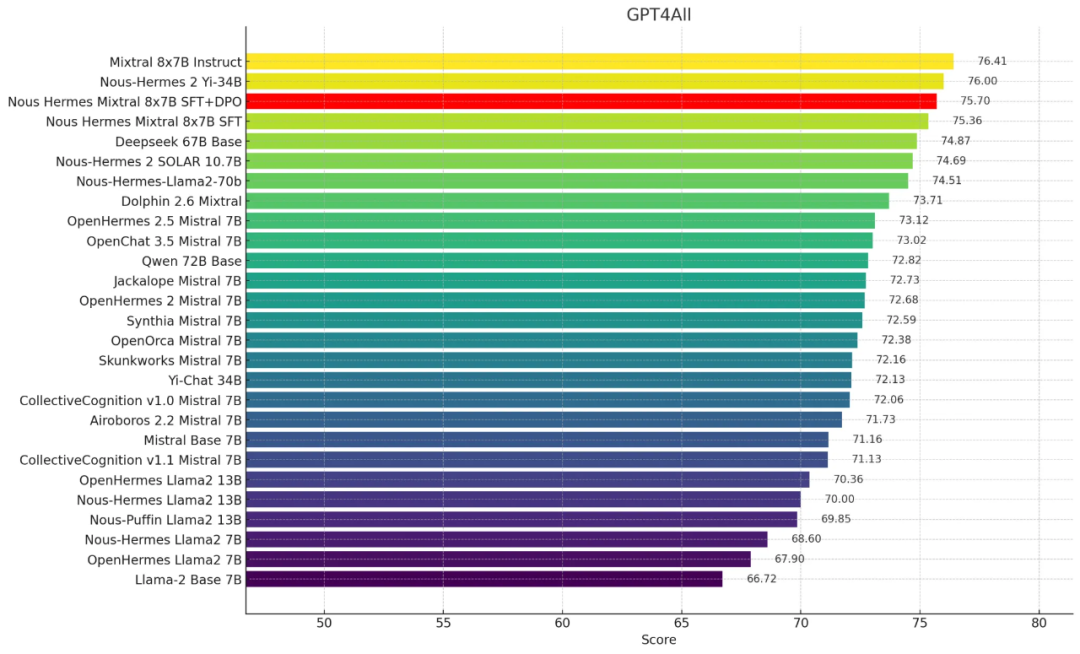

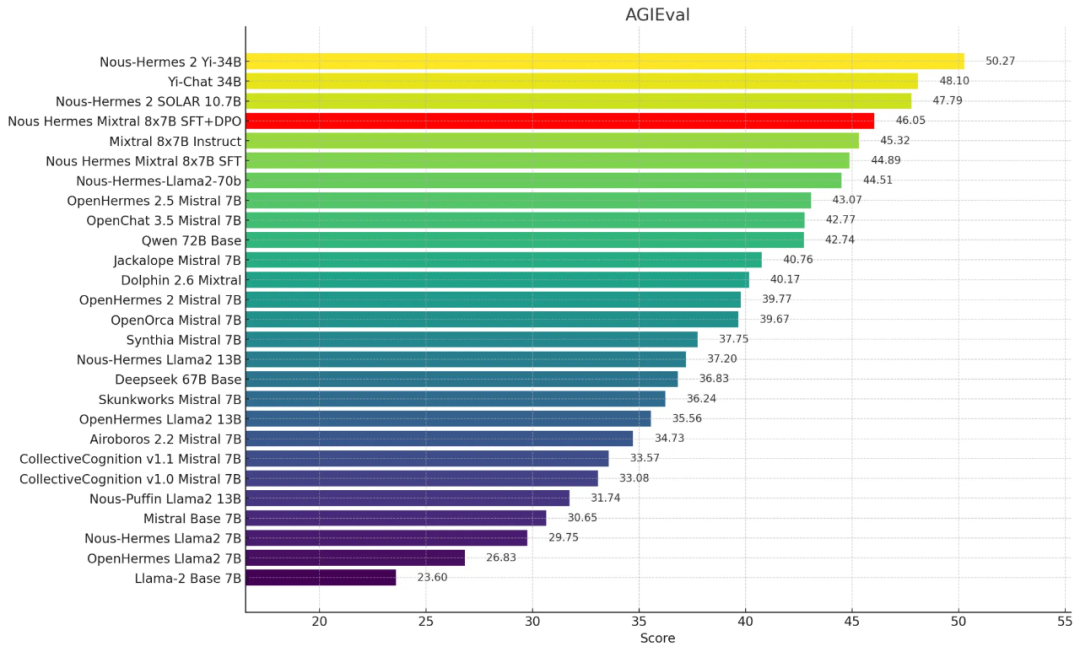

Here are some performance data:

- GPT4All

- AGIEval

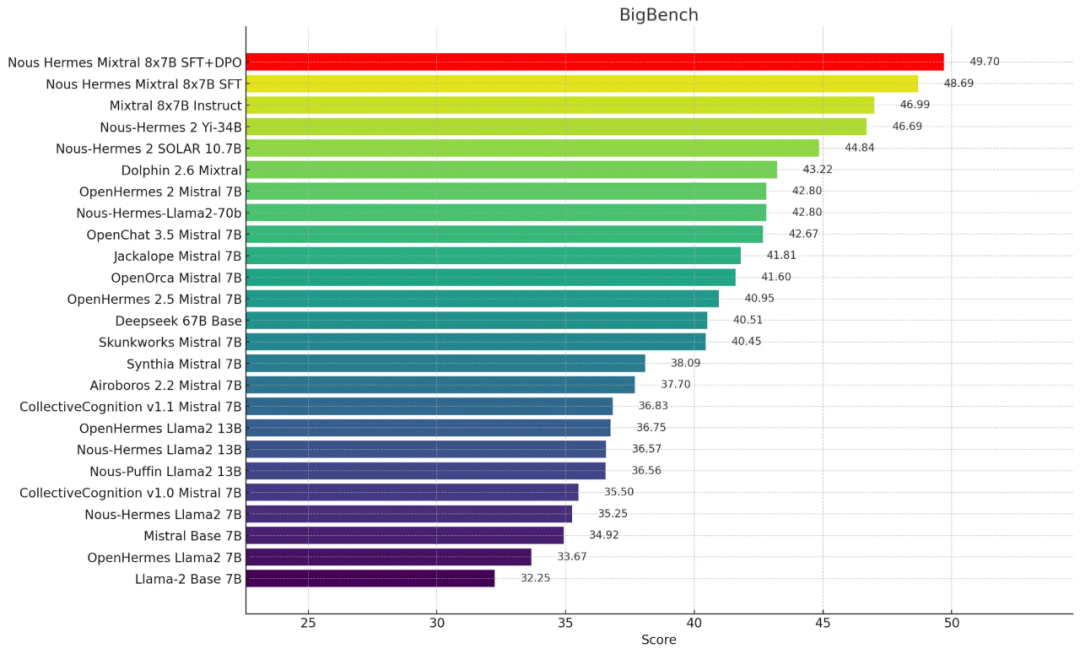

- BigBench reasoning test

Shortly after the model's release, the generative AI startup Together AI announced API support for it. Together AI closed a funding round of more than 100 million USD in November last year, and it provided Nous Research with compute for training and fine-tuning Nous Hermes 2 Mixtral 8x7B.

Image source: https://twitter.com/togethercompute/status/1746994443482538141

Nous Research began as a volunteer project. It recently closed a 5.2 million USD seed round led by Distributed Global and OSS Capital, with participation from well-known investors including Vipul Ved Prakash, founder and CEO of Together AI. Nous plans to launch an AI orchestration tool called Nous-Forge in 2024.

According to the official introduction, the product is positioned as a tool that can connect to and run programs, retrieve and analyze customer documents, and generate synthetic data for production use. These proprietary systems can be fine-tuned to a customer's needs in any business domain. With these new algorithms, Nous hopes to aggregate and analyze previously unstructured topical data in the digital attention ecosystem and surface hidden market signals for customers.

Reference link:

https://nousresearch.com/
