On the morning of January 5, the "Seeking Win-Win: China AIGC Industry Application Summit and Unbounded AI Ecological Partner Conference" officially opened in Hangzhou. The event was held under the joint guidance of the Hangzhou Future Technology City Management Committee, the Yuhang District Science and Technology Bureau, and the Yuhang District Enterprise (Talent) Comprehensive Service Center. It was hosted by TimeStamp Technology, with special media support from AI New Vision.

The conference focused on AIGC applications. It brought together nearly a hundred partners from across the country, along with industry leaders and experts from R&D, investment institutions, and universities, as well as AIGC entrepreneurs. Participants shared the progress AIGC applications made over the past year and discussed future development trends.

Zhu Xuqi, co-founder and CTO of Qingbo Intelligence, delivered a keynote speech titled "AIGC Empowering Clothing Industry Applications." He said that AIGC in the clothing industry currently addresses mainly creative concept design and marketing promotion. Industrial processes, however, follow specific specifications and require industrial-grade, controllable precision. As a result, bottlenecks remain in achieving fully automated processes that need no pattern makers or 3D modelers.

The following is the speech as organized by AI New Vision, with some cuts for readability:

We know that AI has shifted from decision-making AI to generative AI, which includes models for images, text, sound, and 3D. These new AIGC models have reshaped parts of existing industry chains and processes. Our company originally had a data background, so our entry into the clothing industry was somewhat accidental. When we deployed with customers, we came into contact with the traditional clothing industry.

From our limited experience, the traditional clothing chain has several basic stages:

- creative concept design;
- pre-production processing, including pattern making;
- production, where industrial data enters the production system;
- logistics, retail, and marketing promotion.

Before AIGC appeared, each of these links already had its own digital or decision-making systems, whether for creative work or for production processing. After AIGC arrived, however, we found that it has been embedded most deeply in creative concept design and marketing promotion.

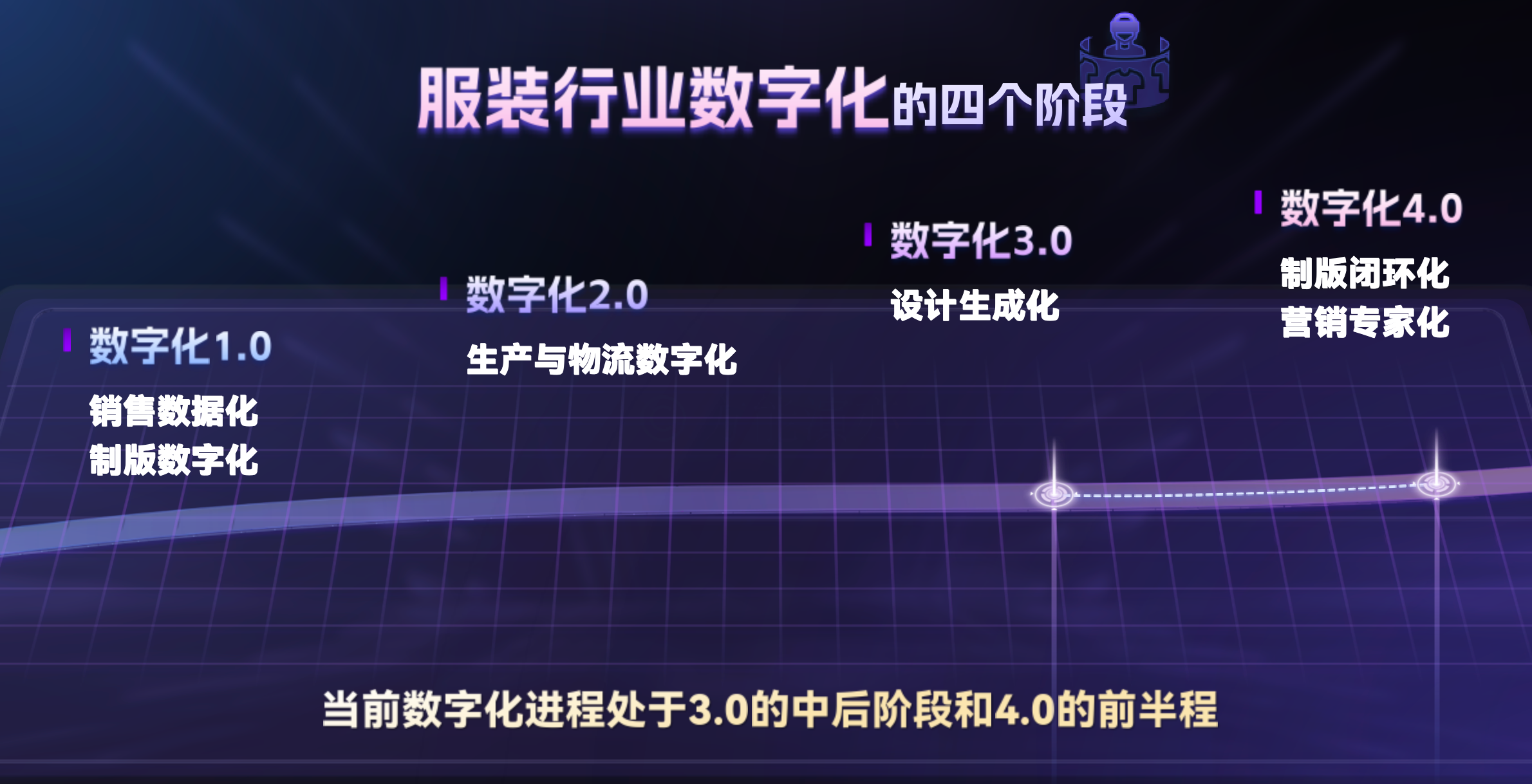

From our perspective, the digital and intelligent transformation of the clothing industry falls into four stages, and we are currently in the third and fourth. Design generation has become very popular, for example concept design and design revisions in footwear. In project practice, however, some bottlenecks remain. Closing the loop on pattern making, for instance, is still relatively difficult.

The first stage, as we know, is relatively simple: systems such as CRM and PLM, which are already very mature. The second stage, MES and ERP, is also already well covered by traditional data tools. The third stage is design generation. The various clothing and shoe cases everyone just saw basically fall under text-to-image or image-to-image. In addition, support for PA marketing includes text-to-video and image-to-video. In clothing design, with self-developed models and the spread of open-source models, we can also see a large number of applications within the industry.

Take clothing models: the Yuan Shang large model shown here is one example, and the models listed earlier basically share the same characteristics. First, the base is not a general text-to-image model; it is trained on clothing-specific images. It also incorporates design styles, prompt engineering, and texture design elements. When we build clothing models or deliver them to customers, training on clothing images is not enough. Most importantly, customers need specialized training and targeting for certain basic styles and adjustable elements. Clothing comes in many styles, for both men and women, and the vocabulary here is especially rich. Besides style vocabulary, there is also a large body of material vocabulary. These material terms must be translated between Chinese and English, and their synonyms must be handled as well. This work is highly industrialized and very tedious, and it requires support from industry partners. In some previous projects, customers have provided this kind of accumulated data.
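
The bilingual vocabulary work described above can be pictured as a synonym table. The table maps Chinese and English variants of a style or material term to one canonical prompt term. The Python sketch below is a hypothetical illustration only, not Qingbo Intelligence's or the Yuan Shang model's actual pipeline. The table entries and the names `VOCAB`, `build_lookup`, and `normalize_terms` are invented for this example.

```python
# Hypothetical vocabulary table: each canonical English prompt term maps to
# Chinese and English variants (synonyms) that users or annotators might write.
# Entries are illustrative only, not a real production lexicon.
VOCAB: dict[str, dict[str, list[str]]] = {
    "silk": {"zh": ["真丝", "丝绸", "桑蚕丝"], "en": ["silk", "mulberry silk"]},
    "chiffon": {"zh": ["雪纺"], "en": ["chiffon"]},
    "denim": {"zh": ["牛仔布", "牛仔"], "en": ["denim", "jean fabric"]},
    "a-line skirt": {"zh": ["A字裙"], "en": ["a-line skirt", "a line skirt"]},
}


def build_lookup(vocab: dict[str, dict[str, list[str]]]) -> dict[str, str]:
    """Flatten the table into a variant -> canonical-term dict (keys lowercased)."""
    lookup: dict[str, str] = {}
    for canonical, langs in vocab.items():
        for variants in langs.values():
            for variant in variants:
                lookup[variant.lower()] = canonical
    return lookup


def normalize_terms(terms: list[str], lookup: dict[str, str]) -> list[str]:
    """Map each term to its canonical prompt term; unknown terms pass through unchanged."""
    return [lookup.get(t.strip().lower(), t.strip()) for t in terms]


if __name__ == "__main__":
    lookup = build_lookup(VOCAB)
    print(normalize_terms(["桑蚕丝", "Mulberry Silk", "A字裙", "velvet"], lookup))
    # -> ['silk', 'silk', 'a-line skirt', 'velvet']
```

Under this assumption, a designer can write either language or any listed synonym, and the model always receives the same canonical term. Unknown terms such as "velvet" pass through untouched so that gaps in the table remain visible.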

Once these base models are built, the applications delivered to customers also need multi-angle presentation, line-drawing generation, and posing of fashion models in various scenes. Some brands have their own fashion models, so the system must also be trained on those models' likeness. In other cases it must show the brand's VIP customers wearing the clothes. Clothing color adjustment is another such function. Overall, these functions lean toward creative concept design and marketing promotion, and toward realizing and integrating the two.

The most important point is that we originally came from an intelligence background. This gives us a strong advantage here: our ability to collect and analyze industry trends and intelligence. We can convert trends and industry intelligence into simple text concepts that the model supports. Designers no longer need to track the latest fashion content themselves. They only need to express their idea in text, and we combine it with these trend concepts to generate designs.

From our perspective, AIGC will not only replace parts of the clothing industry's traditional business processes, as it currently does by embedding itself in creative concept design and marketing. It will also reconstruct the entire process, and we will integrate marketing promotion with creative design.

AIGC can generate all kinds of attractive styles. Once you know the effect you want to show the customer, you can generate that image accordingly. The next problem is how this image connects to the production system. In current practice, a set of images is produced first, and then the customer or the product-selection process picks the style everyone likes. That image cannot go directly into production; it still requires manual work. This manual work is a process called pattern making from an image: someone has to convert the image into industrial data, like what is shown here.

Could it work the other way around? We would first generate the patterns and industrial data, and then use AI to convert them into an effect image for the customer. This should become fully achievable in the near future. AIGC would then empower the entire clothing industry with an end-to-end solution, and customers would get the result directly without manual pattern making. This is also the idea the earlier experts mentioned: AIGC becoming AIGS, an end-to-end, closed-loop service.

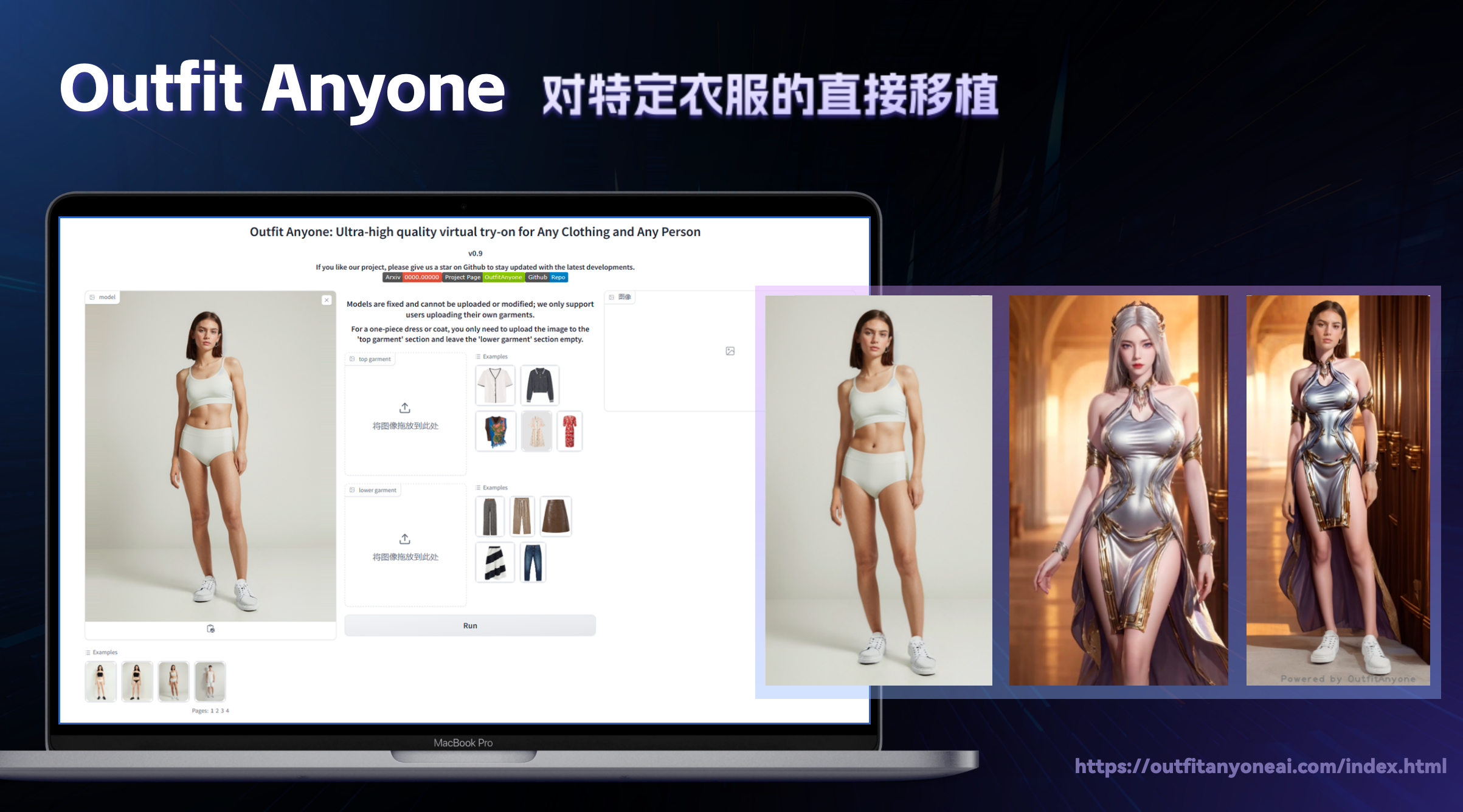

Beyond the basic functions we just saw, other applications keep being added. One example is the recently popular Outfit Anyone, which takes finished garments and dresses a model in them, producing a three-dimensional effect. Fundamentally, though, it still belongs to marketing promotion.

There is also Style3D, a very well-known company in the industry. It integrates industrial fabric data, represents styles in 3D, and can simulate garments in real time. Can the bottleneck I just described, going from attractive AIGC output to pattern making, be solved directly with Style3D today? Currently it cannot, though I believe Style3D is also working in this direction.

Suppose AIGC produces a very attractive garment and we take it to a factory for production. The factory needs the pattern data laid out on a 2D plane, and having AI unfold a garment worn by a model into production-ready patterns is quite difficult. Style3D combines 3D models with garments, and garments can be swapped in its interface. But there is a prerequisite: you must use Style3D's tools to build a 3D model of the product yourself, which amounts to mapping from 2D to 3D. Generating patterns in 2D and then modifying them in 3D adds a 3D modeler to the clothing workflow. This is an unnecessary extra step, so challenges remain here.

This animated video is similar to Style3D in one respect. As AIGC empowers the clothing industry, what we are showing here are finished garments, and we can also see video effects of those garments. For example, this is a T-shirt with a special texture. When a user wears the T-shirt while dancing, the motion is computed by AI rather than animated frame by frame. We can also use MagicAnimate to animate such videos further for marketing promotion.

In general, AIGC already handles creative concept design and marketing promotion quite well. You can see various models in similar industries: as long as you have a substantial amount of industry data to feed your own model, you can produce many of the AIGC effects your industry needs. But we are now facing the 4.0 stage, and this bottleneck has not yet been well resolved. Many friends from the AIGC xChina Industry Alliance are here today. Each of you can find your own industry track in this field and try to make breakthroughs there.

A breakthrough here would, I think, be a very significant industrial upgrade. In clothing, for example, AIGC needs to connect with many production processes. It cannot do this today, so everything depends on manual pattern making. Once this bottleneck is broken, the path from design to finished garment, whether for buying clothes or for producing many designs, will become very fast because it will be fully automatic.

For example, we recently worked on Hanfu horse-faced skirt designs with a client, an e-commerce brand ranked in the top three across the entire online market. The horse-faced skirt already has the simplest structure in Hanfu: it is essentially made from a long rectangular strip of fabric, and the finished garment looks like the skirt on the right. AIGC can already generate a beautiful model wearing the skirt, show it in the user interface, and let users choose which skirt they like, and all of this is quite easy today. The problem comes when a customer selects the red one and places an order. When the order arrives, the e-commerce company still has to have a professional pattern maker re-draft the pattern for the red design.

What AIGC has changed is the planning of styles. The designer used to plan them on his own; now AI tells him which style is most popular. This reduces the volume of manual pattern making, but the manual pattern-making process itself has not changed. That is one line of thinking. Directly unfolding the horse-faced skirt with AI still seems quite difficult at present.

Another approach is to generate the AIGC deliverable directly on the production fabric or material. AI then decomposes that deliverable, for example the image on the left, into which fabric to use and how many colored threads are needed, such as gold, red, and black. The result is then broken down into production instructions, and this path currently works. Next, we use AI to transform the rectangular image into the folded effect of the finished skirt, which currently requires 3D mapping. This is achievable but has a drawback: not every style can be mapped in 3D. For this client, however, the horse-faced skirt is the simplest Hanfu garment, so we built in mapping engines that quickly generate the effect.

In other words, horse-faced skirt production can be fully automated from AIGC to the final effect, but currently only for the horse-faced skirt. Other clothing sub-tracks raise open questions: how to carry out this process, and what a better end-to-end deployment would look like. I hope to discuss these with everyone here.

In summary, AIGC in the clothing industry currently mainly solves creative concept design and marketing promotion. Industrial processes, however, follow specific specifications and require industrial-grade, controllable precision. As a result, bottlenecks remain in achieving fully automated processes without pattern makers or 3D modelers. What customers ultimately care about is actually producing hit products, not just generating lots of attractive AIGC deliverables. In our conversations with many clients in the industry, AIGC already handles product selection quite well. Truly meeting the core need for automation, however, will likely require joint efforts across the industry, from both industry clients and technology providers.
