Author: Ian@Foresight Ventures

TL;DR

After months of in-depth research on the combination of AI and cryptocurrency, I have gained a deeper understanding of this direction. This article compares my early viewpoints with current trends in the field. Readers already familiar with the field can skip to the second section.

- Decentralized Computing Power Network: The track faces challenges in market demand, and the ultimate purpose of decentralization is to reduce costs. The community attributes and tokens of Web3 bring undeniable value, but for the computing power track itself they are an added value rather than a disruptive change. The focus should be on finding ways to integrate with user needs, rather than blindly treating decentralized computing power networks as a supplement to centralized computing power.

- AI Market: Discusses the concept of a fully financialized AI market, emphasizing the value brought by the community and tokens. This market not only focuses on underlying computing power and data, but also includes the models themselves and related applications. The financialization of models is a core element of the AI market, attracting users to directly participate in the value creation process of AI models, while also creating demand for underlying computing power and data.

- Onchain AI: ZKML faces challenges on both the supply and demand sides, while OPML offers a more balanced solution in terms of cost and efficiency. Although OPML is a technological innovation, it may not solve the fundamental challenge faced by onchain AI, which is the lack of demand.

- Application layer: Most AI projects in the web3 space are overly naive. A more reasonable focus for AI applications is to enhance user experience and improve development efficiency, or to serve as an important part of the AI market.

1. Review of the AI Track

Over the past few months, I have conducted in-depth research on the topic of AI + crypto. Looking back, I am pleased to have identified the direction of the track at an early stage, but I also see that some of my earlier viewpoints may no longer be accurate.

This article discusses viewpoints only and does not serve as an introduction. It covers several major directions of AI in web3 and sets my previous analysis of the track against my current one. Different perspectives may inspire different ideas, and the two sets of views can be compared and read critically.

Let's review the main directions of AI + crypto set in the first half of the year:

1.1 Distributed Computing Power

In "Rationally Considering Decentralized Computing Power Networks," the analysis was based on the idea that computing power will become the most valuable resource in the future, and the value that crypto can bring to computing power networks.

Large-scale AI model training has significant demand for decentralized computing power networks, but it also faces the greatest challenges and technical bottlenecks, including complex data synchronization and network optimization. Data privacy and security are further limiting factors. Some existing technologies offer preliminary solutions, but their computational and communication overhead makes them impractical for large-scale distributed training.

Decentralized computing power networks have a better chance of landing in model inference, where the room for future growth is also significant. Inference involves lower computational complexity and less data interaction than training, which makes it better suited to distributed environments, although it still faces challenges such as communication latency, data privacy, and model security.

1.2 Decentralized AI Market

In "Best Attempts at Decentralized AI Marketplace," it was mentioned that a successful decentralized AI marketplace needs to closely integrate the advantages of AI and Web3. It should make use of decentralization, asset ownership, revenue distribution, and the added value of decentralized computing power to lower the threshold for AI applications and encourage developers to upload and share models, while protecting users' data privacy rights. The goal is a developer-friendly platform for trading and sharing AI resources that meets user needs.

At that time, the idea was (which may not be entirely accurate now) that data-based AI marketplaces have greater potential. A marketplace that focuses on models requires support from a large number of high-quality models, but early platforms lack a user base and high-quality resources, making it difficult to incentivize excellent model providers and attract high-quality models. On the other hand, a data-based marketplace, through decentralization, distributed collection, incentive layer design, and guarantee of data ownership, can accumulate a large amount of valuable data and resources, especially private domain data.

The success of a decentralized AI marketplace depends on the accumulation of user resources and strong network effects, where users and developers can obtain more value from the market than they can outside the market. In the early stages of the market, the focus is on accumulating high-quality models to attract and retain users, and then, after establishing a high-quality model library and data barriers, shifting to attracting and retaining more end users.

1.3 ZKML

In "AI + Web3 = ?", the value of onchain AI was discussed before the topic of ZKML was widely discussed.

Without sacrificing decentralization and trustlessness, onchain AI has the opportunity to lead the web3 world to the "next level." The current web3 is like the early stage of web2, and it does not yet have the ability to undertake more extensive applications or create greater value. Onchain AI happens to provide a transparent, trustless solution.

1.4 AI Applications

In "AI + Crypto: Discussing Web3 Female-Oriented Game—HIM," the value that large models bring to web3 applications was analyzed using the portfolio project "HIM" as an example. The question was what kind of AI + crypto combination can bring higher returns to products. Besides hardcore infrastructure and algorithm work, such as developing trustless LLMs on the chain, another direction is to mitigate the impact of the black-box inference process inside products and find suitable scenarios in which to apply the powerful inference capabilities of large models.

2. Current Analysis of the AI Track

2.1 Computing Power Network: Great potential but high barriers

The overall thesis for computing power networks remains unchanged, but they still face challenges in market demand. Who would need a solution with lower efficiency and stability? I believe the following points need to be considered:

What is Decentralization for?

If you were to ask the founder of a decentralized computing power network now, they would probably still tell you that their network can enhance security and resistance to attacks, improve transparency and trust, optimize resource utilization, enhance data privacy and user control, resist censorship and intervention…

These are standard talking points, and any web3 project can talk about censorship resistance, trustlessness, privacy, and so on, but my point is that these are not important. Think about it carefully: can centralized servers not do better in terms of security? Decentralized computing power networks fundamentally do not solve the problem of privacy. There are many such contradictions. So the ultimate purpose of a decentralized computing power network is definitely to reduce costs. The higher the degree of decentralization, the lower the cost of using computing power.

Therefore, fundamentally, "utilizing idle computing power" is more of a long-term narrative, and whether a decentralized computing power network can be successful, I believe it largely depends on whether they have thought through the following points:

Value Provided by Web3

A clever token design and the accompanying incentives/punishment mechanisms are obviously a strong value add provided by the decentralized community. Compared to the traditional internet, tokens not only serve as a medium of exchange, but also, in conjunction with smart contracts, enable protocols to implement more complex incentive and governance mechanisms. At the same time, the openness and transparency of transactions, cost reduction, and efficiency improvement all benefit from the value brought by crypto. This unique value provides contributors with more flexibility and innovation space.

At the same time, I hope this seemingly reasonable "fit" can be considered rationally. For decentralized computing power networks, the value brought by Web3 and blockchain technology is, from another perspective, only "added value" rather than a fundamental disruption. It cannot change the basic operation of the network or break through its technical bottlenecks.

In short, the value of Web3 enhances the attractiveness of decentralized networks, but does not completely change their core structure or operating mode. If decentralized networks are to truly occupy a place in the AI wave, the value of Web3 alone is far from enough. As will be discussed later, the right technical solution should be matched to the right problem. The point of decentralized computing power networks is by no means simply to solve the shortage of AI computing power, but to give this long-dormant track a new approach and mindset.

It may be similar to proof of work mining or storage mining, where computing power is monetized as an asset. In this model, computing power providers can earn tokens as rewards by contributing their computing resources. The attractiveness lies in providing a direct way to convert computing resources into economic benefits, thereby incentivizing more participants to join the network. It may also be based on Web3 to create a market for consuming computing power, by financializing the upstream of computing power (such as models), opening up demand points that can accept unstable and slower computing power.

The key is to understand how to integrate with the actual needs of users. After all, what users and participants need is not necessarily just efficient computing power, and "being able to earn money" is always one of the most convincing motivations.

The core competitiveness of decentralized computing power networks is price

If we must discuss the practical value of decentralized computing power, then the greatest upside brought by Web3 is the opportunity to further compress the cost of computing power.

The higher the decentralization of computing power nodes, the lower the price of unit computing power. The following can be deduced from several directions:

- The introduction of tokens, changing the payment to computing power providers from cash to the protocol's native tokens, fundamentally reduces operating costs;

- Under a community governance and management model, permissionless access and the powerful community effect of Web3 directly promote market-driven cost optimization, allowing more individual users and small businesses to join the network using existing hardware resources, increasing the supply of computing power, and lowering the price of computing power in the market;

- The open computing power market created by the protocol will promote price bargaining among computing power providers, further reducing costs.

Case: ChainML

In simple terms, ChainML is a decentralized platform that provides computing power for inference and fine-tuning. In the short term, ChainML aims to grow demand for its decentralized computing network through Council, an open-source AI agent framework (a chatbot that can be integrated into different applications). In the long term, ChainML will be a complete AI + Web3 platform (analyzed in detail later), including a model market and a computing power market.

I believe ChainML's technical roadmap is very reasonable, and the team has thought through the problems mentioned earlier. The purpose of decentralized computing power is definitely not to compete with centralized computing power or to supply the AI industry with sufficient compute, but to gradually reduce costs until suitable sources of demand accept this lower-quality computing power. In the early stages of a project, when the protocol cannot yet attract a large number of decentralized nodes, the focus should therefore be on finding a stable and efficient source of computing power. From a product roadmap perspective, this means starting with a centralized approach, getting the product pipeline running, accumulating customers through strong business development, and expanding market share; then gradually dispersing supply from centralized providers to smaller companies at higher costs; and finally deploying computing power nodes widely. This is ChainML's divide and conquer strategy.

From the layout of the demand side, ChainML has built an MVP of a centralized infrastructure protocol, which is designed to be portable. Since February of this year, it has been running this system with customers, and started using it in a production environment in April. It is currently running on Google Cloud, but based on Kubernetes and other open-source technologies, it is easy to migrate to other environments (AWS, Azure, Coreweave, etc.). It will gradually decentralize this protocol, disperse it to niche clouds, and finally to the miners who provide computing power.

2.2 AI Market: Greater Imagination Space

This sector is somewhat limited by being called an AI marketplace. Strictly speaking, a truly imaginative "AI market" should be an intermediate platform that financializes the entire model chain, covering from underlying computing power and data to the models themselves and related applications. Previously, the main contradiction of decentralized computing power in the early stage was how to create demand, and a closed-loop market that financializes the entire AI model chain has the opportunity to generate such demand.

It might be like this:

An AI market empowered by Web3 is built on computing power and data. It attracts developers to build or fine-tune models with more valuable data, and then to develop applications based on those models. These applications and models create demand for computing power as they are developed and used. Under token and community incentives, real-time data collection tasks based on bounties, or regular rewards for contributing data, have the opportunity to expand and strengthen the unique advantages of this market's data layer. At the same time, popular applications feed more valuable data back into the data layer.

Community

In addition to the value brought by tokens mentioned earlier, the community is undoubtedly one of the greatest gains brought by Web3 and the core driving force of platform development. With the support of a community and tokens, both the number of contributors and the quality of what they contribute can potentially surpass those of centralized institutions. Data diversity is one example: it is an advantage of such platforms, it is crucial for building accurate, unbiased AI models, and it is also the current bottleneck in the data direction.

I believe the core of the entire platform lies in the models. We realized very early on that the success of an AI marketplace depends on high-quality models and on what motivates developers to provide models on a decentralized platform. But it seems we forgot to consider one question: Web3 projects are weaker than traditional platforms in infrastructure, their developer communities are less mature, and their reputation lacks the first-mover advantage. So, compared to the large user base and mature infrastructure of traditional AI platforms, Web3 projects can only win by overtaking on the bend.

The answer may lie in financializing AI models

- Models can be treated as a commodity, and viewing AI models as investable assets may be an interesting innovation of Web3 and decentralized markets. This market allows users to directly participate in the value creation process of AI models and benefit from it. This mechanism also encourages the pursuit of higher-quality models and community contributions, as user earnings are directly related to the performance and application effects of the models.

- Users can invest by staking models, introducing a mechanism for profit sharing. On the one hand, this incentivizes users to choose and support promising models, providing economic incentives for model developers to create better models. On the other hand, the most intuitive way for stakers to judge models (especially for image generation models) is to conduct multiple tests, which provides demand for the decentralized computing power of the platform. This may also be one of the solutions to the previously mentioned "who would want to use less efficient and unstable computing power?"
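To make the staking and profit-sharing idea concrete, here is a minimal sketch of how one model's revenue might be split. Everything in it is an assumption for illustration: the function name `split_revenue`, the 20% developer share, and the pro rata rule are hypothetical and not taken from any specific protocol.

```python
from fractions import Fraction


def split_revenue(stakes, revenue, developer_share=Fraction(1, 5)):
    """Split one model's revenue between its developer and its stakers.

    stakes: mapping of staker name -> staked token amount (integers).
    revenue: revenue earned by the model in this period (integer).
    developer_share: hypothetical fixed cut for the model developer.
    Returns (developer_cut, payouts), where payouts is pro rata to stake.
    """
    total_stake = sum(stakes.values())
    if total_stake <= 0:
        raise ValueError("at least one positive stake is required")
    developer_cut = revenue * developer_share
    staker_pool = revenue - developer_cut
    # Exact rational arithmetic avoids rounding drift between stakers.
    payouts = {
        staker: staker_pool * Fraction(amount, total_stake)
        for staker, amount in stakes.items()
    }
    return developer_cut, payouts


developer_cut, payouts = split_revenue({"alice": 300, "bob": 100}, revenue=1000)
print(developer_cut, payouts["alice"], payouts["bob"])
```

Under this rule, a staker's return depends only on the model's revenue and their share of the stake, which is what ties user earnings to model performance.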

2.3 Onchain AI: Will OPML Overtake on the Bend?

ZKML: Both Ends of Supply and Demand Stepping on Landmines

Certainly, on-chain AI is a direction full of imagination and worth in-depth research. The breakthrough of on-chain AI can bring unprecedented value to Web3. However, the extremely high academic threshold and the requirements for underlying infrastructure of ZKML are indeed not suitable for most startups to tackle. Most projects may not necessarily need to integrate trustless LLM support to achieve their own breakthrough in value.

But not all AI models need to be moved to the chain using ZK for trustless execution. Most people do not care how a chatbot reasons its way to an answer, or whether the Stable Diffusion they are using runs a specific version of the model architecture or specific parameter settings. In most scenarios, users care about whether the model provides a satisfactory output, not whether the inference process is trustless or transparent.

If the proving system did not bring a hundredfold overhead or higher inference costs, perhaps ZKML would still have a fighting chance. But faced with high on-chain inference costs on top of that proving overhead, any demand side has reason to question the necessity of Onchain AI.

From the demand side

Users care about whether the results provided by the model make sense. As long as the results are reasonable, the trustless nature brought by ZKML can be said to be worthless. Consider one of the scenarios:

- If a neural network-based trading bot brings users a hundredfold profit every period, who would question whether the algorithm is centralized or verifiable?

- Similarly, if this trading bot starts causing losses to users, the project should focus on improving the model's capabilities rather than spending resources and capital on making the model verifiable. This is the contradiction in the demand for ZKML. In other words, the verifiability of the model does not fundamentally address people's doubts about AI in many scenarios, which is somewhat counterproductive.

From the supply side

The development of a proving system sufficient to support large language models is still a long way off. Judging from the attempts of leading projects, there is almost no sign of large models being deployed on-chain.

Referring to our previous article on ZKML, technically, the goal of ZKML is to transform neural networks into ZK circuits, with the difficulties being:

- ZK circuits do not support floating-point numbers.

- It is difficult to convert large-scale neural networks.
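The floating-point limitation is usually worked around by quantizing weights and inputs to fixed-point integers before building the circuit. The sketch below shows only that arithmetic idea in plain Python integers; it does not build a circuit, and the 16-bit scale and the helper names are assumptions chosen for illustration. Real proving systems work over a finite field and need extra constraints for rescaling and range checks.

```python
SCALE_BITS = 16          # assumed precision: 16 fractional bits
SCALE = 1 << SCALE_BITS


def to_fixed(x: float) -> int:
    """Quantize a float to a fixed-point integer."""
    return round(x * SCALE)


def to_float(a: int) -> float:
    """Convert a fixed-point integer back to a float for inspection."""
    return a / SCALE


def fixed_dot(weights, inputs) -> int:
    """Integer-only dot product of two fixed-point vectors.

    Each product carries SCALE**2, so the accumulated sum is shifted
    right once to return to a single SCALE factor.
    """
    acc = sum(w * x for w, x in zip(weights, inputs))
    return acc >> SCALE_BITS


weights = [to_fixed(v) for v in (0.5, -1.25, 2.0)]
inputs = [to_fixed(v) for v in (1.0, 2.0, 0.25)]
print(to_float(fixed_dot(weights, inputs)))  # floating-point result: -1.5
```

Every layer of a network adds rescaling steps like this one, which is part of why converting large models is so expensive.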

Based on current progress:

- The latest ZKML library supports the ZK transformation of some simple neural networks, reportedly capable of deploying basic linear regression models on-chain. However, there are very few existing demos.

- Theoretically, it can support approximately 100M parameters, but this is only theoretical.

The development progress of ZKML has not met expectations. Judging from the proving systems released by leading projects such as Modulus Labs and EZKL, some simple models can be transformed into ZK circuits for on-chain model deployment or verifiable inference. However, this is far from realizing the value of ZKML, and the technical bottleneck seems to lack a core driver to break through it. A track that severely lacks demand cannot attract attention from the academic community, which makes it even harder to create excellent proofs of concept that would attract or satisfy the remaining demand. This may be the death spiral of ZKML.

OPML: Transition or Endgame?

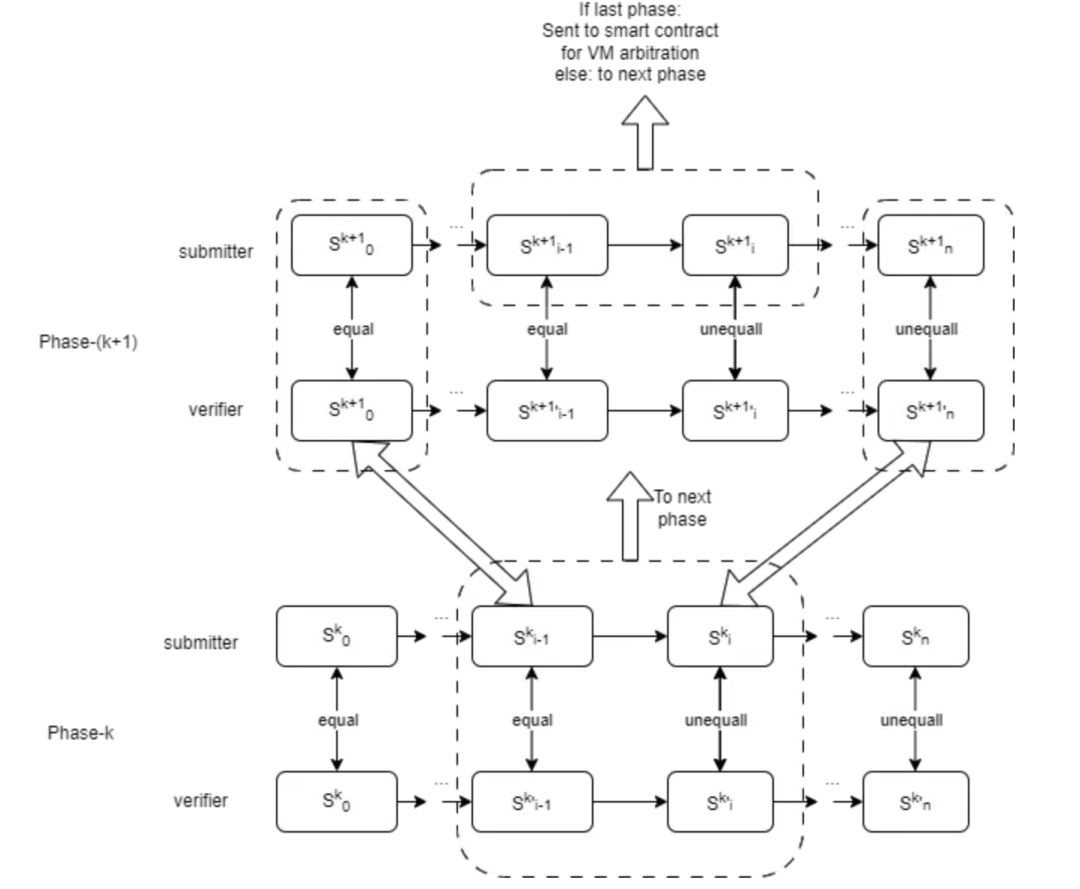

The difference between OPML and ZKML is that ZKML proves the complete inference process, while OPML re-executes only part of the inference process when a result is challenged. The biggest problem OPML solves is therefore the high cost and overhead, which is a very practical optimization.
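One common way to narrow a challenge down to a small piece of computation in optimistic systems is a bisection game, and the sketch below assumes that approach. It is a toy model, not the HyperOracle implementation: states are plain integers, `step` stands in for one VM instruction, and all names are hypothetical.

```python
def find_disputed_step(claimed, challenger):
    """Binary-search two execution traces for the step they disagree on.

    Both traces must start from the same state and end in different ones.
    Returns index i such that the traces agree at i and differ at i + 1.
    """
    if len(claimed) != len(challenger):
        raise ValueError("traces must cover the same number of steps")
    if claimed[0] != challenger[0]:
        raise ValueError("parties must agree on the initial state")
    if claimed[-1] == challenger[-1]:
        raise ValueError("final states match, nothing to dispute")
    lo, hi = 0, len(claimed) - 1  # invariant: agree at lo, disagree at hi
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if claimed[mid] == challenger[mid]:
            lo = mid
        else:
            hi = mid
    return lo


def resolve(step, claimed, challenger):
    """Re-execute only the disputed step and name the winner."""
    i = find_disputed_step(claimed, challenger)
    return "submitter" if step(claimed[i]) == claimed[i + 1] else "challenger"


def step(state):
    # Stand-in for a single deterministic VM instruction.
    return (state * 3 + 1) % 1000


honest = [7]
for _ in range(8):
    honest.append(step(honest[-1]))

# A faulty submitter deviates at step 5 and keeps computing from there.
faulty = honest[:5] + [honest[5] + 1]
while len(faulty) < len(honest):
    faulty.append(step(faulty[-1]))

print(find_disputed_step(faulty, honest), resolve(step, faulty, honest))
```

The search takes a logarithmic number of rounds in the trace length, and only one step is ever re-executed by the arbiter, which is where the cost advantage over proving the whole inference comes from.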

As the creator of OPML, the HyperOracle team provided an architecture and advanced process from one-phase to multi-phase OPML in "opML is All You Need: Run a 13B ML Model in Ethereum":

- Building a virtual machine for off-chain execution and on-chain verification, ensuring equivalence between the off-chain VM and the VM implemented in the on-chain smart contract.

- To ensure the inference efficiency of AI models in the VM, a specially designed lightweight DNN library is implemented (independent of popular machine learning frameworks like TensorFlow or PyTorch), and the team also provides a script to convert TensorFlow and PyTorch models to this lightweight library.

- Compiling the AI model inference code into VM program instructions through cross-compilation.

- Managing the VM image through a Merkle tree. Only the Merkle root representing the VM state is uploaded to the on-chain smart contract.
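The last step above, committing to the VM state with a single Merkle root, can be sketched as follows. This is a generic illustration and not the project's code: splitting the image into byte "pages", using SHA-256 (an Ethereum contract would more likely use Keccak-256), and duplicating the last node on odd levels are all assumptions.

```python
import hashlib


def _hash(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(pages: list[bytes]) -> bytes:
    """Compute a Merkle root over memory pages of a VM image.

    Leaves and inner nodes use different prefixes so that a leaf can
    never be confused with an inner node.
    """
    if not pages:
        raise ValueError("need at least one page")
    level = [_hash(b"\x00" + page) for page in pages]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # duplicate last node on odd levels
        level = [
            _hash(b"\x01" + level[i] + level[i + 1])
            for i in range(0, len(level), 2)
        ]
    return level[0]


image = [b"page-0", b"page-1", b"page-2"]
root = merkle_root(image)
tampered = merkle_root([b"page-0", b"page-X", b"page-2"])
print(root.hex())
print(root != tampered)
```

Only the 32-byte root would need to be stored on-chain. Any single page can later be checked against it with a short proof, so the contract never has to see the full image.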

However, this design has a key flaw, which is that all computations must be executed within the virtual machine, preventing the use of GPU/TPU acceleration and parallel processing, limiting efficiency. Therefore, multi-phase OPML is introduced.

- Only in the final phase are computations performed in the VM.

- In other phases, state transition computations occur in a native environment, utilizing the capabilities of CPUs, GPUs, TPUs, and supporting parallel processing. This approach reduces dependence on the VM, significantly improves execution performance, and achieves a level comparable to the native environment.

Reference: https://mirror.xyz/hyperoracleblog.eth/Z_Ui5I9gFOy7-dajI1lgEqtnzSIKcwuBIrk-6YM0Y

LET’S BE REAL

Some argue that OPML is a transitional phase before the full implementation of ZKML, but it is more realistic to see it as a trade-off for Onchain AI based on cost structure and implementation expectations. Perhaps the day when ZKML is fully implemented will never come. At least, I am pessimistic about it. The hype around Onchain AI will ultimately have to face the realities of implementation and cost. OPML may therefore be the best practice for Onchain AI, just as the OP and ZK ecosystems are not mutually exclusive.

However, do not forget that the previous shortcomings in demand still exist. The cost and efficiency-based optimization of OPML does not fundamentally address the contradictory issue of "since users care more about the reasonableness of the results, why move AI to the chain to make it trustless." Transparency, ownership, trustlessness, these buffs stacked together may indeed be flashy, but do users really care? In contrast, the value should be reflected in the reasoning capabilities of the model.

I believe that this cost optimization is a technically innovative and solid attempt, but in terms of value, it looks more like a clumsy compromise.

Perhaps the Onchain AI track itself is like holding a hammer and looking for nails, but that's okay. The development of an early industry requires continuous exploration and innovative combinations of cross-disciplinary technologies, finding the best fit through continuous adjustment. What is wrong is not the collision and attempts of technology, but the blind follow-up without independent thinking.

2.4 Application Layer: 99% Frankenstein

It must be said that attempts to bring AI into the Web3 application layer keep coming, as if everyone is in a state of FOMO. But 99% of these integrations stop at integration for its own sake, and there is no need to map the reasoning capabilities of GPT onto the value of the project itself.

From the application layer, there are roughly two ways forward:

Enhancing user experience and improving development efficiency with the help of AI: In this case, AI may not be the core highlight. More often it works quietly behind the scenes and even goes unnoticed by users. For example, the team behind the web3 game HIM has cleverly combined game content, AI, and crypto, focusing on the points where they fit best and add the most value. On one hand, they use AI as a tool to create value, improving efficiency and quality. On the other hand, they enhance the user's gaming experience through AI's reasoning capabilities. AI and crypto do bring very important value here, but fundamentally they are still being used as tools. The real advantage and core of the project still lie in the team's ability to develop games.

Combining with an AI marketplace: Here the application becomes an important user-facing part of the entire ecosystem.

3. Conclusion

If there is really anything that needs to be emphasized or summarized: AI is still one of the largest opportunities in the web3 space and one of the most deserving of attention, and this overall thesis will definitely not change.

But I believe what deserves the most attention is the AI marketplace model. Fundamentally, the design of such a platform or infrastructure meets the demand for value creation and satisfies the interests of all parties. In a macro sense, creating a way to capture value that is unique to web3, beyond models or computing power, is attractive enough. At the same time, it allows users to directly participate in the wave of AI in a unique way.

Perhaps in three months, I will overturn my current thoughts, so:

The above is just my very real point of view about this track, and it really does not constitute any investment advice!

Reference

"opML is All You Need: Run a 13B ML Model in Ethereum": https://mirror.xyz/hyperoracleblog.eth/Z_Ui5I9gFOy7-dajI1lgEqtnzSIKcwuBIrk-6YM0Y
