Source: Titan Media

Author: Lin Zhijia

Image source: Generated by Unbounded AI

The artificial intelligence (AI) industry has had a crazy night, with Google and AMD launching new products one after another, finally ready to "beat" OpenAI and Nvidia.

Early in the morning of December 7th Beijing time, Google CEO Sundar Pichai announced the official release of Gemini, the company's most powerful and versatile multimodal artificial intelligence (AI) large model to date.

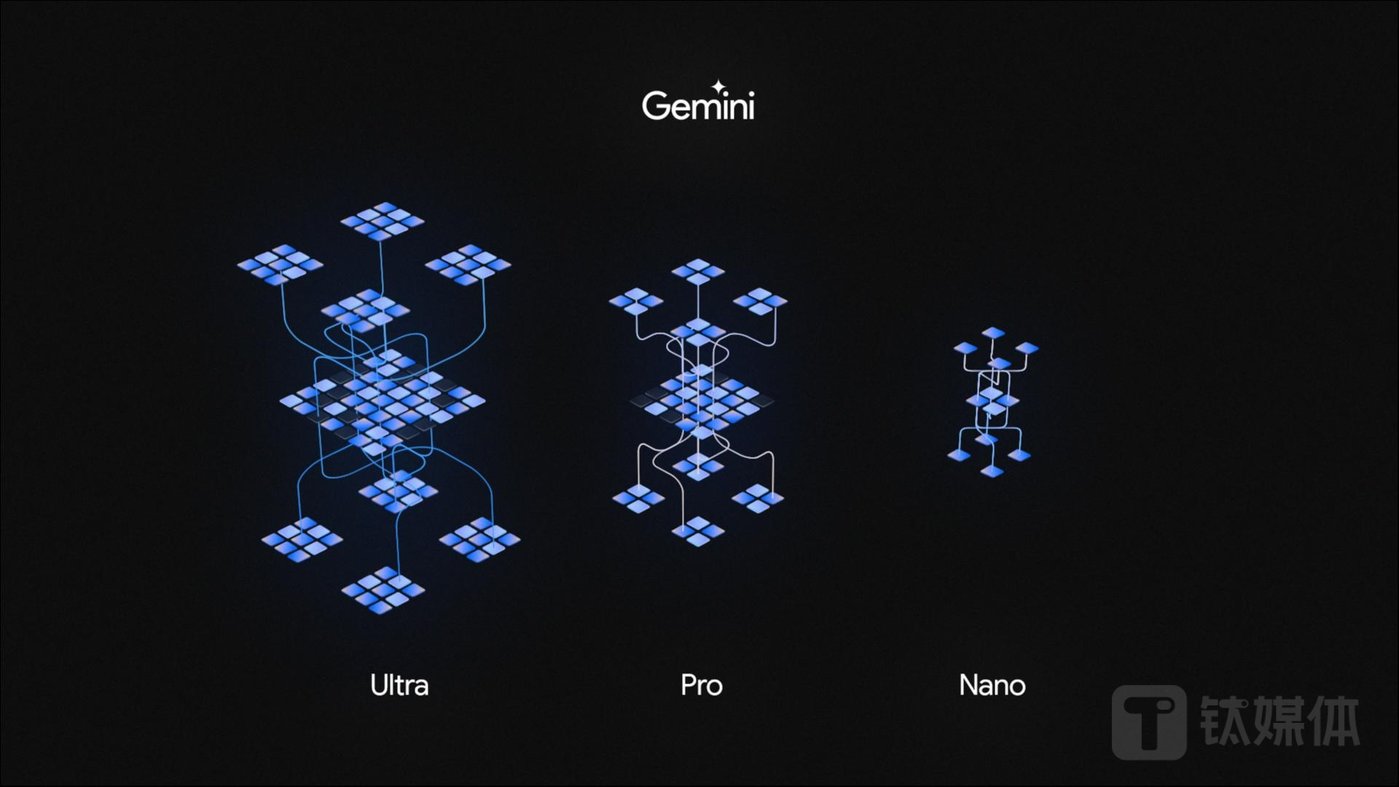

Specifically, Google's latest Gemini 1.0 series comes in three sizes, Ultra, Pro, and Nano, and is initially available in English. Alongside the models, Google unveiled its latest and most powerful self-developed AI supercomputing chip, Cloud TPU v5p.

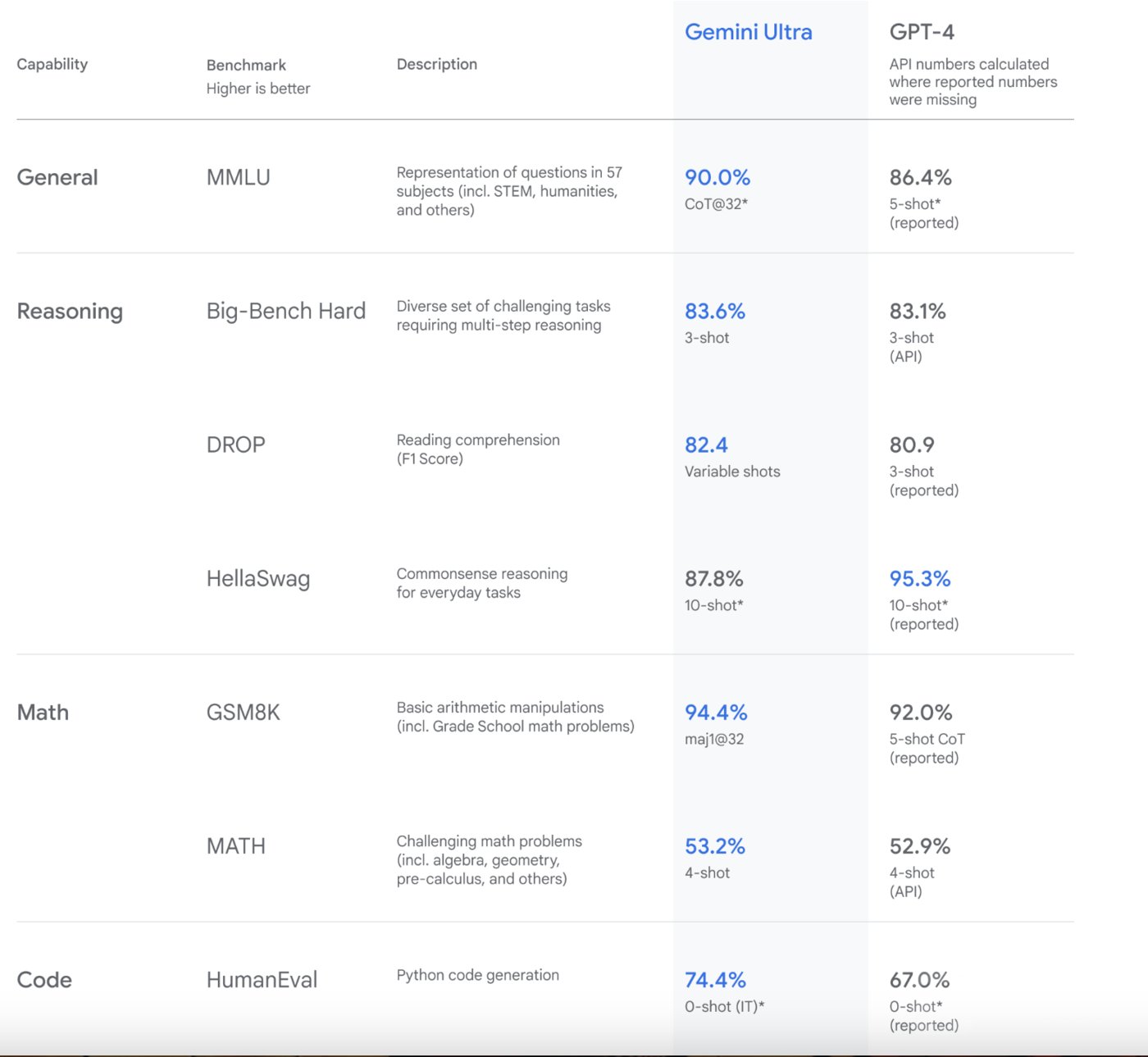

Google stated that the Gemini Pro model outperformed GPT-3.5 in six benchmark tests, and that the Ultra model surpassed the current strongest model, GPT-4, in 30 of 32 benchmark tests. Furthermore, Gemini Ultra scored 90.0% on the MMLU test, which spans 57 subjects such as mathematics, physics, and law, making it the first AI model to surpass human-expert level on that benchmark.

At the same time, early on December 7th, chip giant AMD released the highly anticipated new Instinct MI300X AI accelerator in San Jose, USA. Built from more than ten TSMC 5nm/6nm chiplets, it has 153 billion transistors and supports up to 192GB of HBM3 memory, targeting the AI computing chip market dominated by Nvidia. Its sibling, the MI300A, is the APU that packages CPU and GPU together.

Pichai stated that Gemini is a huge leap for AI models and will ultimately impact almost all of Google's products. At the same time, humanity is taking its first step into the new Gemini era, moving towards true artificial general intelligence (AGI).

"MI300X is our most advanced product to date and the most advanced AI acceleration chip in the industry," said Lisa Su, CEO of AMD, "AI is the single most important and transformative technology in over 50 years, not just a cool new technology, but also genuinely changing the future of computing world."

However, somewhat regrettably, neither the MI300X nor the Gemini 1.0 series models are currently sold or offered in the mainland Chinese market, though AMD is expected to launch compliant "China-specific" products.

AMD CEO Lisa Su

Google's Gemini outperforms GPT-4, and the latest self-developed TPU v5p chip trains models 2.8 times as fast

On November 30, 2022, the American company OpenAI released the AI chatbot ChatGPT, which has since become one of the fastest-growing consumer applications globally. It can not only write poetry, code, and scripts, but also help with interviews, draft papers, and even provide broad search services, demonstrating great potential for liberating productivity.

As the company that has invested the most time in AI technology and possesses numerous core technologies, Google seems caught off guard by ChatGPT's outstanding performance and technical advantages. The market is concerned that ChatGPT may replace traditional search engines and impact Google's search advertising business.

To address this challenge, Google declared an internal "code red" in February this year: founders Larry Page and Sergey Brin returned to the company, Google urgently launched the ChatGPT competitor Bard, and it invested over $400 million in the OpenAI competitor Anthropic.

However, despite multiple iterations and upgrades since its release, Bard has been generally poorly received, with critics saying the product was rushed and that its performance and user experience fall short of ChatGPT and Microsoft's GPT-4-powered Bing search.

Now, Google is finally ready to fight back, releasing its long-rumored "trump card," Gemini 1.0, which it bills as its most powerful general-purpose AI model ever and one that surpasses GPT-4.

The multimodal Gemini is the largest and most powerful Google large model to date, surpassing GPT-4 in text, video, voice, and other fields, truly redeeming itself.

According to The Information, Google originally planned to announce Gemini in 2024, but it seems that Google has changed its strategy to seize public attention during OpenAI's board restructuring and internal turmoil. Currently, the latest paper released by Google shows that the Gemini development team consists of over 800 people.

In terms of products, the new Gemini series includes three model versions: Gemini Ultra, Gemini Pro, and Gemini Nano.

Among them, Gemini Ultra is the most powerful of the three, suited to highly complex tasks, and is planned to be integrated into Google's chatbot Bard next year; Gemini Pro is the most versatile model, scaling across a wide range of tasks, and is currently in beta testing on Bard; Gemini Nano is the most efficient model, intended for running AI tasks on edge devices, and will debut on the Google Pixel 8 Pro.

Gemini and Bard are both large language models (LLMs) developed by Google, but they have different characteristics and strengths. Gemini is better at generating creative text formats such as poetry, code, scripts, musical pieces, emails, and letters, while Bard excels at providing information and comprehensive answers to challenging questions, making it the better choice for users who need them.

According to the Google official website, the highlights of Gemini 1.0 mainly include five aspects: advanced performance test results, new reasoning and creative functions, powerful and efficient AI supercomputing system, responsibility and security, and availability.

Firstly, in terms of performance testing, the Gemini Ultra model surpassed the existing most advanced model, GPT-4, in 30 out of 32 benchmark tests. On MMLU (Massive Multitask Language Understanding), which covers 57 subjects such as mathematics, physics, and history, Gemini Ultra scored 90.0%, surpassing human experts for the first time. It also scored 59.4% on the MMMU multimodal benchmark, and in image benchmark tests Ultra surpassed the powerful GPT-4V without using OCR, demonstrating its multimodal and more complex reasoning capabilities.

As for new features, Gemini 1.0 has sophisticated multimodal reasoning capabilities that help it make sense of complex written and visual information. It can also extract insights from tens of thousands of documents by reading, filtering, and understanding information, which Google says will help deliver breakthroughs at digital speed in fields from science to finance. Additionally, Gemini 1.0 was trained to recognize and understand text, images, audio, and more at the same time, and the coding system AlphaCode 2 has improved significantly, outperforming 85% of competitive programmers, nearly 50% better than the previous AlphaCode.

In terms of computing power, Gemini 1.0 was trained at scale on Google-designed TPU v4 and v5e chips. Google claims that on TPUs, Gemini runs significantly faster than earlier, smaller, and less capable models. Additionally, Google today released Cloud TPU v5p, its most powerful, efficient, and scalable TPU system to date, aimed at supporting the training of cutting-edge AI models and thereby accelerating the development of Gemini.

It is worth mentioning that the Google TPU v5p chip uses TSMC's 5nm/7nm process and is developed as a training chip for generative AI, unlike the previous TPU v4 inference chip. Each TPU v5p pod (server group) consists of 8,960 chips, connected in a 3D topology over TPU's highest-bandwidth inter-chip interconnect (ICI) at 4,800 Gbps. Compared to TPU v4, TPU v5p's FLOPS have more than doubled and its high-bandwidth memory (HBM) has tripled. TPU v5p also trains large models 2.8 times as fast as TPU v4 (280% of its speed), and with the help of second-generation SparseCores it trains embedding-dense models more than 1.9 times as fast as TPU v4.
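A speedup quoted as "N times" and one quoted as a percentage are easy to conflate. The short Python sketch below converts the two TPU v5p ratios reported above into both forms. The function names are illustrative, and the ratios are simply the figures quoted in this article, not independent measurements.

```python
def percent_of_baseline(speedup: float) -> float:
    """Express an 'N times as fast' ratio as a percentage of the baseline speed."""
    return speedup * 100.0


def percent_increase(speedup: float) -> float:
    """Express an 'N times as fast' ratio as a percent increase over the baseline."""
    return (speedup - 1.0) * 100.0


# Ratios of TPU v5p to TPU v4 as quoted in the article.
quoted_speedups = {
    "large-model training": 2.8,
    "embedding-dense training": 1.9,
}

for workload, ratio in quoted_speedups.items():
    print(
        f"{workload}: {ratio}x = {percent_of_baseline(ratio):.0f}% of baseline, "
        f"a {percent_increase(ratio):.0f}% increase"
    )
```

Read this way, 2.8 times as fast is 280% of the TPU v4 speed, which is a 180% increase rather than a 280% one.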

In terms of responsibility and security, Google states that it is committed to advancing AI responsibly, especially in the development of the advanced multimodal capabilities of the Gemini model. It has added new protective measures based on Google AI principles and strict product safety policies, comprehensively considering potential risks and conducting testing and risk reduction at every stage of development. Additionally, Google collaborates with external experts to conduct stress tests to ensure content safety and has established a dedicated security classifier to identify and filter harmful content, ensuring Gemini is safer and more inclusive.

Regarding availability, Google announced that starting today, Bard will use a tuned version of Gemini Pro for advanced reasoning, planning, and understanding, and will provide services in over 170 countries and regions (excluding mainland China) in English. Additionally, from December 13th, developers and enterprise customers can access the Gemini Pro API through Google AI Studio or Google Cloud Vertex AI, while Gemini Nano is integrated into the Pixel 8 Pro.

Google states that in the coming months, Gemini will be applied to more of Google's products and services, such as Search, Ads, the Chrome browser, and Duet AI. According to tests, when integrated into Google Search, Gemini can make the Search Generative Experience (SGE) faster for users, reducing latency by 40% for English searches in the United States while also improving quality.

Google CEO Sundar Pichai (Source: CNBC)

Pichai stated that the release of Gemini is an important moment for Bard and the beginning of the Gemini era. If the test results are accurate, the new model may have already made Bard as outstanding as ChatGPT. "This is clearly a quite impressive feat."

However, as of now, Google's search and advertising business has not been affected by large models such as GPT. According to Statcounter data, as of October 2023, Bing's market share in the United States was slightly below 7%, down from 7.4% in the same period last year, while Google continued to dominate the search engine market with a share of 88.13%. Throughout 2023 so far, Bing's market share has remained below 7% even with GPT technology. Additionally, financial reports show that in the 2022 fiscal year, the Google Search and other revenue of Google's parent company Alphabet was $162.5 billion, a 9% year-on-year increase. In the first three quarters of the 2023 fiscal year, Alphabet's total revenue reached $221.084 billion, surpassing the $206.8 billion in the same period last year.

"So far, we have made tremendous progress on Gemini. We are working to further expand the various functions of its future versions, including progress in planning and memory, as well as providing better responses by handling more information and increasing the context window," Google stated.

According to The Verge, Google plans to launch a preview of "Bard Advanced," powered by Gemini Ultra, next year, which is expected to compete with ChatGPT running GPT-4 or GPT-5.

However, Pichai also admitted that while AI's transformation of humanity will be more significant than the birth of fire and electricity, Gemini 1.0 may not change the world, and the best-case scenario is that Gemini may help Google catch up with OpenAI in the generative AI arms race.

"This is an important milestone in the development of AI and marks the beginning of a new era for Google. We will continue to innovate rapidly and continuously enhance the capabilities of our models in a responsible manner," Google stated.

The most powerful AI chip MI300X, with up to a 60% performance improvement over Nvidia H100, but not sold in China

AMD has finally decided to counter Nvidia.

(Image source: AMD)

In the early morning of December 7th Beijing time, at the AMD Advancing AI event held in San Jose, USA, AMD CEO Lisa Su announced the launch of the Instinct MI300X AI accelerator and the start of mass production of the MI300A APU.

Both products are aimed at the market dominated by Nvidia.

Specifically, the MI300X memory is 2.4 times that of Nvidia's H100 product, and the memory bandwidth is 1.6 times that of H100, further enhancing performance and potentially challenging Nvidia's dominant position in the hot AI acceleration chip market.
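The ratios above can be roughly sanity-checked from spec-sheet figures. In the Python sketch below, the 192GB MI300X capacity comes from this article, while the H100 capacity (80GB) and both bandwidth figures (5.3 TB/s and 3.35 TB/s) are assumptions taken from commonly cited vendor specifications, not from this article. Treat the output as illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceleratorSpec:
    name: str
    hbm_capacity_gb: float      # on-package HBM capacity in GB
    hbm_bandwidth_tb_s: float   # peak memory bandwidth in TB/s


# MI300X capacity is quoted in the article; the other numbers are assumed
# public spec-sheet values and may differ by SKU.
mi300x = AcceleratorSpec("MI300X", hbm_capacity_gb=192, hbm_bandwidth_tb_s=5.3)
h100 = AcceleratorSpec("H100 (assumed SXM, 80GB)", hbm_capacity_gb=80, hbm_bandwidth_tb_s=3.35)


def ratio(a: float, b: float) -> float:
    """Return a / b rounded to one decimal place, matching how the claims are quoted."""
    return round(a / b, 1)


capacity_ratio = ratio(mi300x.hbm_capacity_gb, h100.hbm_capacity_gb)
bandwidth_ratio = ratio(mi300x.hbm_bandwidth_tb_s, h100.hbm_bandwidth_tb_s)

print(f"Memory capacity: {capacity_ratio}x")
print(f"Memory bandwidth: {bandwidth_ratio}x")
```

Under these assumed figures, the computed ratios line up with the 2.4x capacity and 1.6x bandwidth claims.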

AMD stated that the new MI300X chip can deliver up to 60% higher performance than Nvidia's H100. In a one-on-one comparison with H100 on Llama 2 (70-billion-parameter version), MI300X is up to 20% faster; in a one-on-one comparison on FlashAttention 2, MI300X is 20% faster; in an 8-to-8 server comparison on Llama 2 70B, MI300X is 40% faster; and in an 8-to-8 server comparison on Bloom 176B, MI300X is 60% faster.

Meanwhile, for large-model AI training, AMD says MI300X improves on H100 by 3.4 times in the BF16 performance benchmark, 6.8 times in INT8 performance, and 1.3 times in FP8 and FP16 TFLOPS, further enhancing training performance.

Lisa Su stated that the new MI300X chip is comparable to H100 in the ability to train AI software and is much better than H100 in inference, which is the process of running the software in practical use.

For AI, AMD has three major advantages: a complete IP portfolio and a broad range of computing engines that can support the most demanding workloads from the cloud to the edge to the endpoint; expanding open-source software capabilities that lower the barrier to entry and unlock the full potential of AI; and a deepening ecosystem of AI partners, allowing cloud service providers (CSPs), OEMs, and independent software vendors (ISVs) to benefit from its pioneering technology.

Currently, AMD, Nvidia, and Intel are all pushing hard into AI. Nvidia has announced the Hopper H200 GPU and Blackwell B100 GPU for 2024, while Intel will launch Gaudi 3 and the Falcon Shores GPU in 2024. The three companies are expected to keep competing in the coming years.

AMD stated that companies such as Dell, HP, Lenovo, Meta, Microsoft, Oracle, and Supermicro will adopt the MI300 series products.

Microsoft and AMD have collaborated to launch the new Azure ND MI300x v5 virtual machine (VM) series, which is optimized for AI workloads and supported by MI300X; Meta will use AMD's newly launched MI300X chip products in its data centers. Oracle stated that the company will use AMD's new chips in its cloud services, expected to be released in 2024.

However, AMD currently faces three main issues. First, production capacity is limited: manufacturers such as Nvidia and Intel have bought up capacity in advance, and AMD is introducing multiple product lines such as MI300A and MI300X, which may lead to supply shortages. Second, Nvidia will release the B100 in March next year and the H200 will also be on the market, so it is unknown how long AMD will hold the title of "the most powerful AI chip" on its own. Third, in software, CUDA has become the preferred choice for large-model training and inference, and although AMD has made significant progress with ROCm, its ecosystem is still relatively weak compared to Nvidia's CUDA.

Asked about the Chinese market, AMD stated: "The Chinese market is important to AMD, but no special products specifically for the Chinese market were announced today."

Previously, the market expected AMD's MI300 series to ship approximately 300,000 to 400,000 units in 2024, with Microsoft and Google as the major customers. Were it not for limited capacity at TSMC and Nvidia's early reservation of over 40% of that capacity, AMD's shipments would be expected to be even higher.

"AMD does not believe it needs to beat Nvidia to achieve good results in the market," Lisa Su publicly stated this morning. "We believe that by 2027, we can carve out a piece of the $400 billion AI chip market."

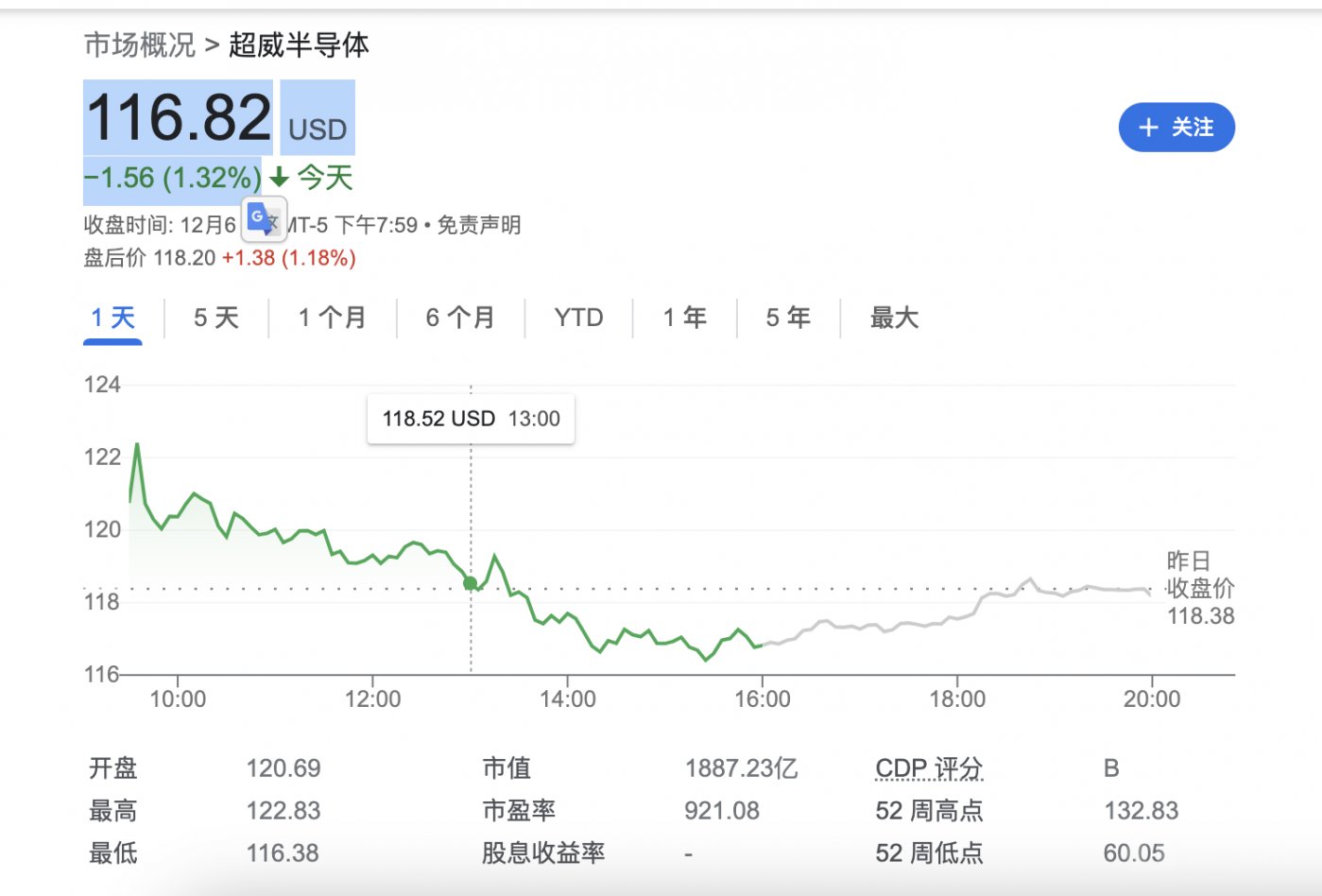

After the MI300X news was released, the stock prices of both AMD and Nvidia fell instead of rising.

As of the closing of the U.S. stock market on December 6th, Nvidia's stock price fell by 2.28%, and AMD's stock price also fell by 1.32%, closing at $116.82 per share. Year-to-date, AMD's stock price has risen by 82.47%, higher than Intel, but far below Nvidia's 220% growth.

Is the era of AI surpassing humans really here?

Lisa Su stated that AI has not only developed rapidly in a short period but has also shown explosive growth, comparable to the invention of the internet, and that AI adoption will be even faster. At present, however, we are still in the very early stages of the new AI era.

The crazy achievements of AMD and Google tonight tell us: the AI era has arrived, and humans must pay attention. In just over a year, there has been a huge transformation and reversal in the market, and anything is possible.

As 2023 enters its final month, the "battle for the throne" of large models has become more intense.

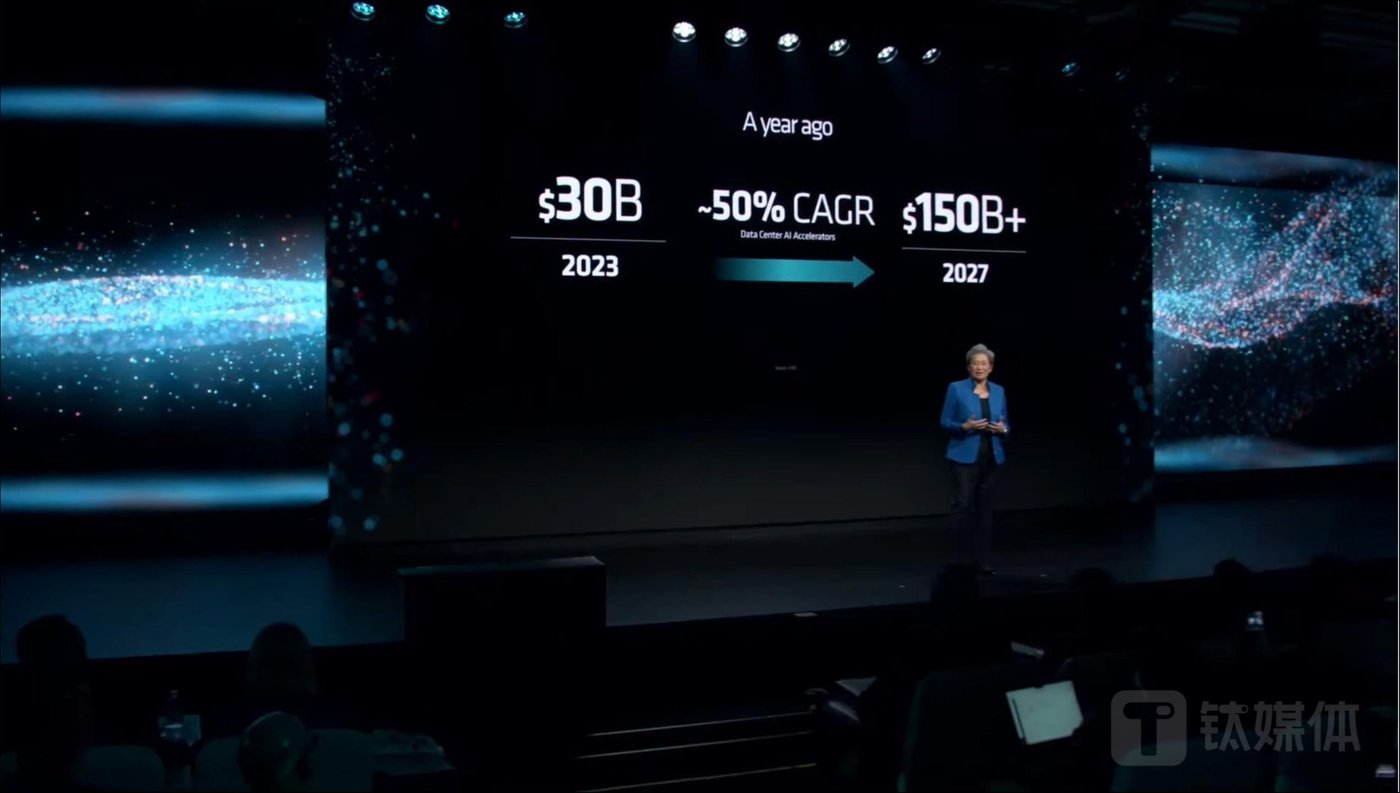

A year ago, Lisa Su estimated the 2023 AI accelerator market at $30 billion and projected that by 2027 the total addressable market (TAM) for global data center AI accelerators would reach $150 billion, implying a compound annual growth rate (CAGR) of approximately 50% over that period.

Now Lisa Su has raised that forecast: the potential AI accelerator market will reach $45 billion in 2023, and with a CAGR of as much as 70% in the coming years, the entire market will grow to $400 billion by 2027.
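Both forecasts can be checked with the standard compound-growth formula, end = start × (1 + CAGR)^years. The Python sketch below is a minimal check, assuming four compounding years from 2023 to 2027. The function names are illustrative, and the dollar figures are the ones quoted in this article.

```python
def project(start: float, cagr: float, years: int) -> float:
    """Compound a starting value forward at a fixed annual growth rate."""
    return start * (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Solve end = start * (1 + r) ** years for the annual rate r."""
    return (end / start) ** (1.0 / years) - 1.0


YEARS = 2027 - 2023  # four compounding periods (an assumption about how the CAGR is counted)

# (label, 2023 market in $B, stated CAGR, stated 2027 market in $B)
forecasts = [
    ("earlier forecast", 30.0, 0.50, 150.0),
    ("revised forecast", 45.0, 0.70, 400.0),
]

for label, start, cagr, target in forecasts:
    projected = project(start, cagr, YEARS)
    rate = implied_cagr(start, target, YEARS)
    print(
        f"{label}: ${start:.0f}B at {cagr:.0%} for {YEARS} years -> ${projected:.1f}B; "
        f"reaching ${target:.0f}B implies a CAGR of {rate:.1%}"
    )
```

Under this assumption, the earlier forecast is internally consistent (about $152 billion at 50%), while reaching $400 billion from $45 billion implies a rate slightly above the rounded 70% figure.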

As Google's products surpass GPT-4 and AMD's products surpass Nvidia's H100, the AI achievements invented by humans are changing the world, and humanity is on the eve of a great change.

In 2014, British physicist Stephen Hawking issued a stern warning: "The development of full artificial intelligence could spell the end of the human race."

Now, the AI era has finally arrived, and machines may soon replace humans. From healthcare to the automotive industry, AI technology is being applied in various fields, profoundly changing the lives of everyone. In the future, we will see more AI innovative technologies and anticipate a surge in research and development, as well as more cutting-edge technological competition.

"Now, (AI) is not smarter than us. But I think they (AI) may soon surpass humans," AI pioneer and Turing Award winner Geoffrey Hinton said.
