Source: TMTPOST

Image source: Generated by Wujie AI

AI native applications are facing a "difficult birth".

After the "battle of a hundred models", exhausted entrepreneurs have gradually realized that the real opportunity in China lies in the application layer, and that AI native applications are the most fertile soil for the next round.

Li Yanhong, Wang Xiaochuan, Zhou Hongyi, and Fu Sheng: over the past few months, the speeches of these industry heavyweights have all emphasized the huge opportunities in the application layer.

Internet giants are all talking about AI native applications: Baidu released more than 20 AI native applications at once; ByteDance has set up a new team focused on the application layer; Tencent has embedded large models into mini programs; Alibaba wants to rebuild all of its applications with Tongyi Qianwen; WPS is handing out AI trial cards like crazy…

Startups are even more enthusiastic: a single hackathon can produce nearly 200 AI native projects. Since the beginning of this year, organizers including Qijichuangtan, Baidu, and Founder Park have held dozens of events featuring thousands of projects, yet none has broken out.

We have to face the fact that, although the opportunity in the application layer is widely recognized, large models have not reinvented existing applications, and most products are being retrofitted only superficially. China has some of the best product managers in the world, yet this time they seem to have "lost their touch".

Nine months have passed since Midjourney took off in April. Why are domestic AI native applications, which carry "the hopes of the whole village", having such a difficult birth? Choice matters more than effort. Perhaps now is the time to look back calmly and find the right "posture" for opening up AI native applications.

AI native can't be built end-to-end

Why are native applications so hard to produce? Part of the answer may lie in how they are "produced" in the first place.

"We usually run four or five models at the same time and pick the one that performs best," a large model entrepreneur in Silicon Valley told "Zixiangxian". The team builds AI applications on top of foundation models but does not commit to a specific model early on; instead, it lets every model run and finally chooses the most suitable one.

Put simply, the "horse racing" mechanism of internal competition has now been extended to large models.
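The "horse racing" selection described above can be sketched in a few lines of Python. This is a minimal illustration, not the team's actual tooling: the model callables, the test cases, and the exact-match scoring function are all hypothetical stand-ins for real vendor SDK calls and a real evaluation set.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical interface: a "model" is any function from prompt text to output text.
# In practice each entry would wrap a vendor SDK call.
Model = Callable[[str], str]
Scorer = Callable[[str, str], float]


def race_models(
    models: Dict[str, Model],
    test_cases: List[Tuple[str, str]],
    score: Scorer,
) -> Tuple[str, Dict[str, float]]:
    """Run every candidate on the same test set and return (winner, average scores).

    Each test case is a (prompt, reference) pair; `score` compares a model's
    output with the reference and returns a value where higher is better.
    """
    if not models or not test_cases:
        raise ValueError("need at least one model and one test case")
    averages: Dict[str, float] = {}
    for name, model in models.items():
        total = sum(score(model(prompt), ref) for prompt, ref in test_cases)
        averages[name] = total / len(test_cases)
    # The highest average wins; ties go to the model listed first.
    winner = max(averages, key=lambda n: averages[n])
    return winner, averages


def exact_match(output: str, reference: str) -> float:
    """Toy scorer: 1.0 if the output matches the reference exactly, else 0.0."""
    return 1.0 if output.strip() == reference else 0.0


if __name__ == "__main__":
    # Two toy "models" standing in for real large models.
    candidates = {
        "model_a": lambda p: p.upper(),
        "model_b": lambda p: p[::-1],
    }
    cases = [("abc", "ABC"), ("hi", "HI")]
    print(race_models(candidates, cases, exact_match))
    # ('model_a', {'model_a': 1.0, 'model_b': 0.0})
```

As the article notes, this process still ends with a single winner that the application is then tied to; the race only decides which model that will be.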

However, this approach still has a drawback: although it tries different large models, the application eventually becomes deeply coupled to one of them. This is still an "end-to-end" development approach, in which one application corresponds to one large model.

The reverse does not hold: a single underlying large model serves many applications, so applications built on the same model for the same scenario end up with very limited differentiation. The bigger problem is that the foundation models on the market each have their own strengths and weaknesses, and none leads by a wide margin in every area. As a result, an application built on a single large model struggles to perform well across all of its functions.

In this context, decoupling large models from applications has become a new approach.

This "decoupling" has two aspects.

The first is decoupling large models from applications. Large models are the underlying driving force of AI native applications, and the relationship between the two can be compared to that of engines and cars in the automobile industry.

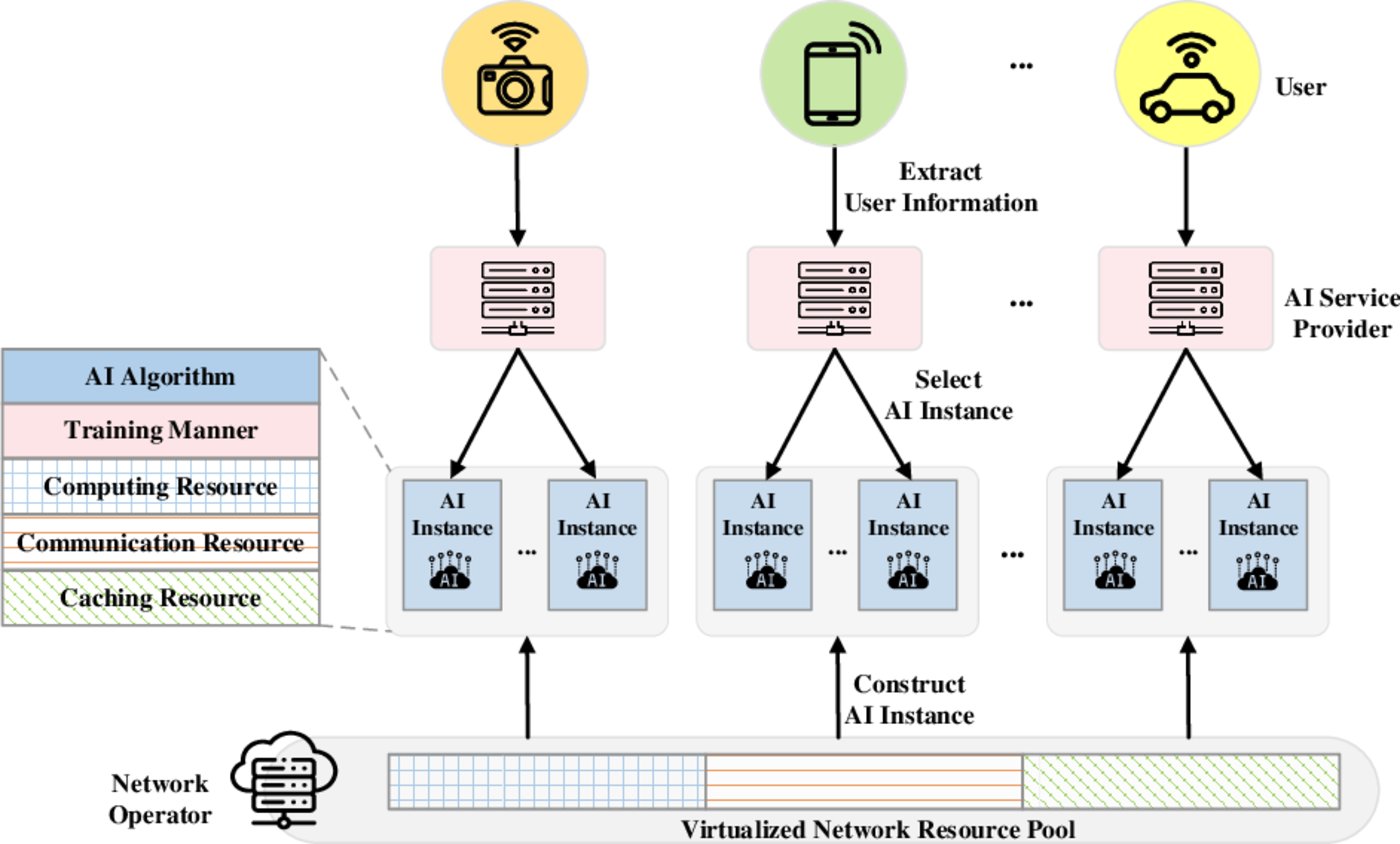

▲ Image source: Internet

For AI native applications, the large model is like a car's engine. The same engine can be fitted to different car models, and the same car model can be paired with different engines. With different tuning, manufacturers can position products anywhere from compact cars to luxury cars.

So for the vehicle as a whole, the engine is only one part of the overall configuration and should not define the car's core.

Applied to AI native applications, the foundation model is the key driver of the application, but it should not be permanently bound to it. One large model can drive different applications, and the same application should also be able to run on different large models.

This is already happening. In China, Feishu and DingTalk, and abroad, Slack, can all work with different foundation models, and users can choose according to their own needs.

The second aspect is that, within a given application, large models should be decoupled from the individual steps of its workflow.

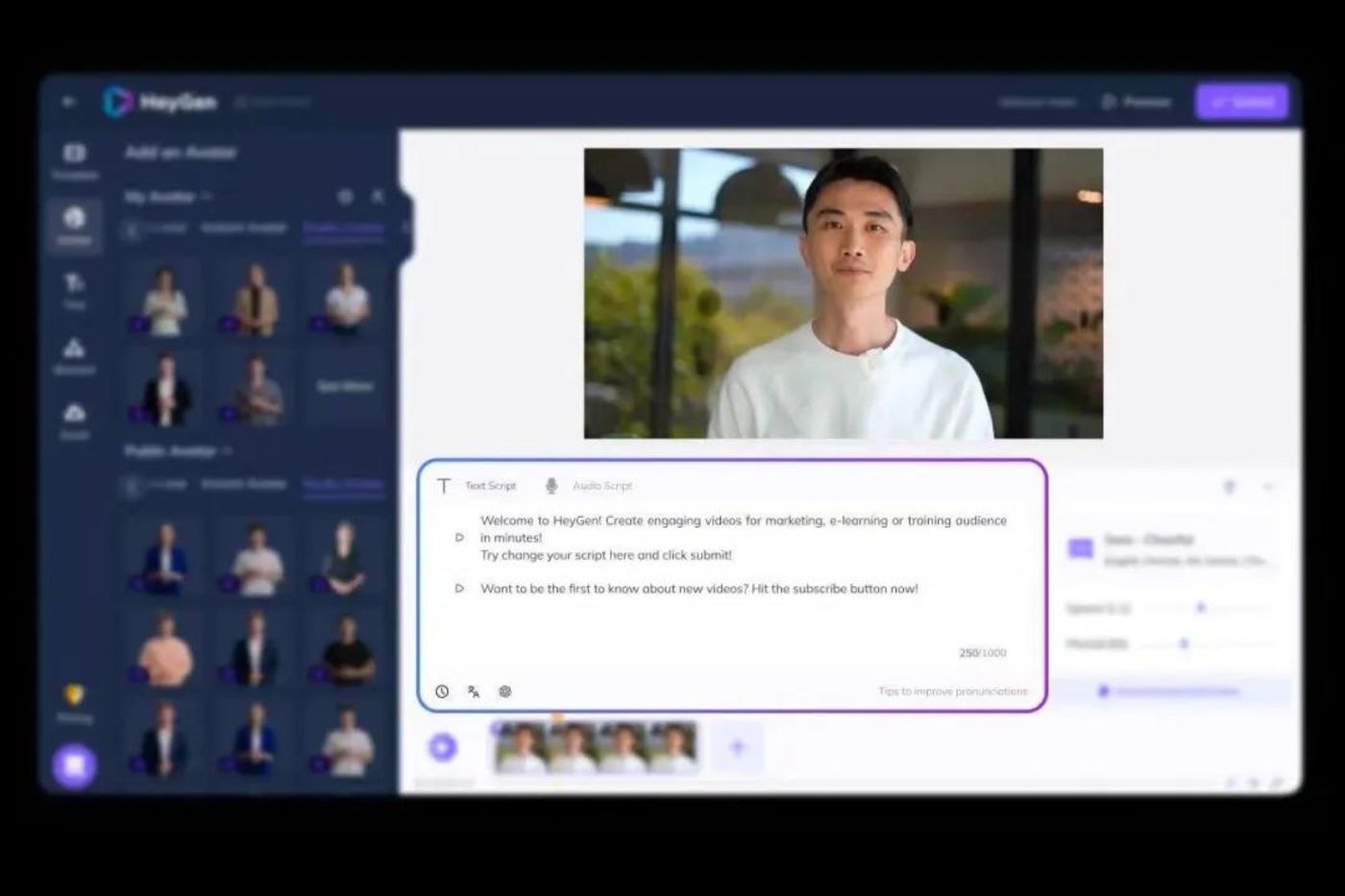

A typical example is HeyGen, a video AI company that has become popular overseas. Its annual recurring revenue reached 1 million USD in March this year and 18 million USD in November.

HeyGen currently has 25 employees, but it has built its own video AI model and integrated OpenAI and Anthropic's large language models and Eleven Labs' audio products. Based on different large models, HeyGen uses different models in different processes such as creation, script generation (text), and sound.

▲ Image source: HeyGen official website

A more direct example is ChatGPT's plugin ecosystem. The Chinese video editing app Jianying recently joined it, so users can now ask Jianying, through ChatGPT, to create a video.

In other words, the many-to-many matching between large models and applications can be refined further, choosing the most suitable large model for each step. An application is then driven not by a single large model but by several, or even a whole group, of them.
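The per-step decoupling described above can be sketched as a pipeline in which each stage is bound to a swappable model behind a common interface. This is an illustrative Python sketch under assumed names: the `Pipeline` class and the stage names (`story`, `script`, `video`) are hypothetical, loosely mirroring the video workflows described in this article, and the lambda "models" are placeholders, not real calls to any vendor's API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical common interface: every model, whatever its vendor, maps text to text.
ModelFn = Callable[[str], str]


@dataclass
class Pipeline:
    """An application whose workflow stages are each bound to a replaceable model."""

    stages: List[str]
    routing: Dict[str, ModelFn] = field(default_factory=dict)

    def bind(self, stage: str, model: ModelFn) -> None:
        """Attach (or swap) the model that serves one stage."""
        if stage not in self.stages:
            raise ValueError(f"unknown stage: {stage}")
        self.routing[stage] = model

    def run(self, user_input: str) -> str:
        """Pass the input through every stage in order."""
        data = user_input
        for stage in self.stages:
            model = self.routing.get(stage)
            if model is None:
                raise RuntimeError(f"no model bound to stage '{stage}'")
            data = model(data)  # each stage's output feeds the next stage
        return data


if __name__ == "__main__":
    app = Pipeline(stages=["story", "script", "video"])
    app.bind("story", lambda idea: f"story({idea})")
    app.bind("script", lambda story: f"script({story})")
    app.bind("video", lambda script: f"video({script})")
    print(app.run("a cat"))  # video(script(story(a cat)))

    # Swapping the model for one stage leaves the rest of the app untouched.
    app.bind("story", lambda idea: f"other_story({idea})")
    print(app.run("a cat"))  # video(script(other_story(a cat)))
```

The design choice here is that the application owns the list of stages while models are plugged in per stage, so one model can serve many applications and one application can draw on many models.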

When multiple large models serve one application, the application can combine their strengths. In this mode, the division of labor across the AI industry chain will be redefined.

This resembles today's automobile supply chain: specialized manufacturers each produce one component, such as the engine, battery, accessories, or body, while automakers only need to select and assemble them into differentiated products for the market.

Reassigning roles means breaking things apart and reorganizing them; there is no gain without pain.

The embryonic form of a new ecosystem

Under this many-models, many-applications mode, a new ecosystem will emerge.

Drawing on the experience of the internet era, we can try to sketch the architecture of this new ecosystem by analogy.

When mini programs first appeared, everyone was confused about their capabilities, architecture, and use cases. Early on, developers had to learn from scratch what mini programs could do and how to use them. Development was slow, and the number of mini programs failed to take off.

Things changed only when WeChat service providers emerged. These providers plugged directly into the WeChat ecosystem, mastered the underlying architecture of mini programs, and worked with enterprise customers to build custom mini programs for their needs, combining them with the rest of the WeChat ecosystem to acquire and retain customers. This group of service providers also gave rise to companies such as Weimob and Youzan.

By the same logic, the market may not need vertical large models, but it does need large model service providers.

Likewise, a large model's features and best uses only become clear once someone actually uses and operates it. As intermediaries, service providers can work with multiple large models and collaborate with enterprises to build a healthy ecosystem.

Based on past experience, we can roughly divide service providers into three major categories:

First, experience-based service providers: they understand and master the characteristics and application scenarios of each large model, combine them with industry-specific scenarios, and break into markets through their service teams.

Second, resource-based service providers: similar to WeChat service providers back then, which could obtain low-cost advertising space within WeChat and resell it. Access to large models will not be universally open, so service providers that secure sufficient access will build early barriers.

Third, technology-based service providers: when an application embeds several large models at once, someone has to work out how to call and chain those models, keep them stable and secure, and solve the other technical challenges that arise. Technology-based service providers will take on these problems.
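One of the technical problems listed above, keeping a multi-model application stable, is commonly handled with a fallback chain: try a preferred model, retry on failure, then fall through to a backup. The Python below is a minimal sketch of that idea under assumed names (`call_with_fallback` and the provider functions are hypothetical); a production gateway would also need timeouts, rate limiting, and security controls that are not shown.

```python
import time
from typing import Callable, List, Tuple

# Hypothetical interface: a provider is a named function from prompt to output.
ModelFn = Callable[[str], str]


def call_with_fallback(
    providers: List[Tuple[str, ModelFn]],
    prompt: str,
    retries_per_provider: int = 2,
    backoff_seconds: float = 0.0,
) -> Tuple[str, str]:
    """Try providers in priority order and return (provider_name, output).

    Each provider gets up to `retries_per_provider` attempts before the call
    falls through to the next one. Raises RuntimeError if every provider fails.
    """
    errors: List[str] = []
    for name, model in providers:
        for attempt in range(1, retries_per_provider + 1):
            try:
                return name, model(prompt)
            except Exception as exc:  # a real gateway would catch narrower error types
                errors.append(f"{name} attempt {attempt}: {exc}")
                # Wait a little longer after each failure, but not after the last try.
                if backoff_seconds and attempt < retries_per_provider:
                    time.sleep(backoff_seconds * attempt)
    raise RuntimeError("all providers failed: " + "; ".join(errors))


if __name__ == "__main__":
    def flaky(prompt: str) -> str:
        raise TimeoutError("upstream timeout")

    def stable(prompt: str) -> str:
        return f"answer to {prompt}"

    print(call_with_fallback([("primary", flaky), ("backup", stable)], "hello"))
    # ('backup', 'answer to hello')
```

Returning the provider name alongside the output lets the application log which model actually served each request, which matters once several models sit behind one feature.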

"Zixiangxian" has observed that early large model service providers have begun to appear over the past six months, though mostly in the form of enterprise services that teach companies how to apply various large models. Meanwhile, building applications is gradually turning into a workflow.

"When I make a video now, I first give Claude a script idea and have it write a story, then copy and paste the story into ChatGPT and use its reasoning ability to break it down into a script. Next I use the Jianying plugin to turn the text into a video, and if some images along the way aren't accurate, I regenerate them with Midjourney. That's how I finish a video. If one application could call on all of these capabilities at once, that would be a truly native application," an entrepreneur told us.

Of course, realizing a multi-model, multi-application ecosystem raises many challenges: how multiple models communicate with each other, how to get the most out of model calls through algorithms, and what the best approach to collaboration is. These are challenges and opportunities at the same time.

Based on past experience, AI applications will likely develop in a scattered, piecemeal fashion at first and then gradually consolidate and integrate.

For example, we need Q&A, image generation, and slide (PPT) creation. At present these needs may be served by many separate applications, but in the future they may be integrated into a single product and move toward platformization, just as taxi-hailing, food delivery, and ticket booking were gradually consolidated into super apps. These varied needs will in turn place further demands on the diversity of model capabilities.

In addition, AI native applications will further disrupt current business models, and the money flowing through the industry chain will be redistributed. Baidu may become a shelf for knowledge and Alibaba a shelf for goods; all business models will return to their essence, meeting consumers' real needs, while redundant steps are eliminated.

Against this backdrop, value creation is one consideration, but how to rebuild business models is the more important question for investors and entrepreneurs.

We are still on the eve of an explosion in AI native applications. Only when the industry settles into a clear, cooperative division of labor, with foundation models at the bottom, large model service providers in the middle, and startups building applications on top, will AI native applications arrive in large numbers.
