As AI and related fields develop, the underlying logic of many industries will change significantly. Computing power will become more important, and the industry will explore many of the areas connected to it. Decentralized computing power networks have their own advantages: they can reduce the risks of centralization and also complement centralized computing power.

Author: Samuel, Researcher at Future3 Campus

Demand for Computing Power

When "Avatar" was released in 2009, it set off the first wave of 3D films with unprecedentedly realistic visuals. Weta Digital, a major contributor behind the film, handled its visual effects rendering. In its 10,000-square-foot server farm in New Zealand, a computer cluster processed up to 1.4 million tasks per day and handled 8GB of data per second. Even so, completing all the rendering work took more than a month of continuous operation.

This large-scale use of machines and capital helped "Avatar" earn its place in the history of cinema.

On January 3 of the same year, Satoshi Nakamoto mined the genesis block of Bitcoin on a small server in Helsinki, Finland, and received a 50 BTC block reward. Since the birth of cryptocurrency, computing power has played a very important role in the industry.

"The longest chain not only serves as proof of the sequence of events witnessed, but proof that it came from the largest pool of CPU power." (Bitcoin Whitepaper)

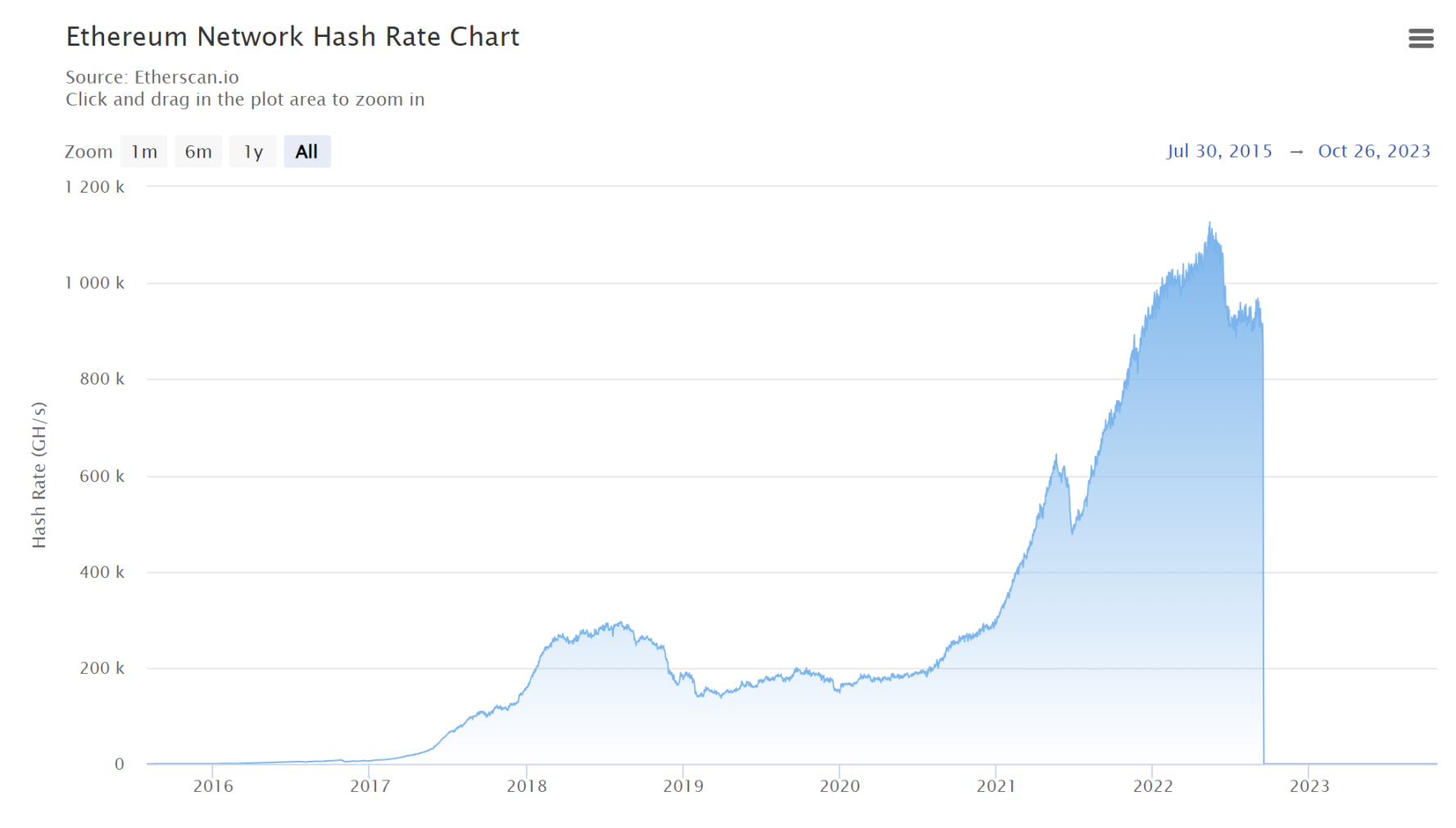

In the context of the PoW consensus mechanism, the contribution of computing power ensures the security of the chain. At the same time, the continuously increasing hashrate also serves as evidence of miners' continuous investment in computing power and positive income expectations. The industry's real demand for computing power has also greatly promoted the development of chip manufacturers. Mining machine chips have gone through the development stages of CPU, GPU, FPGA, ASIC, and more. Currently, Bitcoin mining machines usually use ASIC (Application Specific Integrated Circuit) technology to efficiently execute specific algorithms, such as SHA-256. The huge economic benefits brought by Bitcoin have also driven the increasing demand for mining computing power, but the overly specialized equipment and the clustering effect have led to a capital-intensive and centralized development trend for participants, whether miners or mining machine manufacturers.
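To make the link between hashrate and security concrete, here is a minimal sketch of a SHA-256 proof-of-work search. It is a toy, not Bitcoin's actual block format: the header bytes, the `mine` function, and the `difficulty_bits` parameter are assumptions made for illustration, and the digest is compared as a big-endian integer for simplicity.

```python
import hashlib


def double_sha256(data: bytes) -> bytes:
    """Apply SHA-256 twice, as Bitcoin does when hashing a block header."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def mine(header: bytes, difficulty_bits: int, max_nonce: int = 2**32):
    """Try nonces until the hash has `difficulty_bits` leading zero bits.

    Returns (nonce, hex digest), or None if no nonce in range works.
    """
    target = 1 << (256 - difficulty_bits)  # valid hashes are below this value
    for nonce in range(max_nonce):
        digest = double_sha256(header + nonce.to_bytes(4, "little"))
        if int.from_bytes(digest, "big") < target:
            return nonce, digest.hex()
    return None


# Hypothetical header; 16 bits of difficulty needs about 65,000 attempts on average.
result = mine(b"toy block header", difficulty_bits=16)
print(result)
```

Each extra bit of difficulty doubles the expected number of hashes, which is why hardware that does nothing but this one computation (an ASIC) ends up dominating.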

Ethereum's smart contracts, with their programmability and composability, gave rise to a wide range of applications, especially in DeFi, and the price of ETH rose steadily. While Ethereum was still in its PoW consensus stage, its mining difficulty also kept increasing, and miners' demand for Ethereum mining machines grew day by day. Unlike Bitcoin, however, Ethereum mining relied on graphics processing units (GPUs), such as the Nvidia RTX series. This made it better suited to general-purpose computing hardware, and competition for GPUs even left high-end graphics cards temporarily out of stock.

Then, on November 30, 2022, OpenAI released ChatGPT, a milestone for the field of AI. Users were amazed by the new experience: like a real person, ChatGPT can complete a wide variety of requests based on context. The new version released in September of this year added multimodal features, including voice and image, and took the user experience to a new stage.

However, GPT4, the model behind it, reportedly involves more than a trillion parameters in pre-training and subsequent fine-tuning, the two stages of AI development that demand the most computing power. In pre-training, the model learns language patterns, grammar, and contextual associations from a large amount of text, so that it can generate coherent, contextually relevant text from an input. After pre-training, GPT4 is fine-tuned to adapt to specific types of content or styles, improving its performance and specialization for particular scenarios.

GPT uses the Transformer architecture, whose self-attention mechanism sharply increases the model's demand for computing power. Processing long sequences in particular requires a large amount of parallel computing and storage for attention scores, as well as a large amount of memory and high-speed data transfer. The current mainstream LLM architecture therefore depends heavily on high-performance GPUs, which shows how costly it is to invest in large AI models. According to estimates by SemiAnalysis, a single training run of GPT4 costs as much as $63 million. To deliver a good interactive experience, GPT4 also needs a large amount of computing power for its daily operation.
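A rough calculation shows why long sequences are so demanding. The sketch below assumes a naive implementation that stores one full score matrix per attention head, with 32 heads and 2-byte (fp16) values; these figures are illustrative assumptions, not GPT4's actual configuration, and optimized kernels can avoid storing the full matrix.

```python
def attention_score_bytes(seq_len: int, num_heads: int, bytes_per_value: int = 2) -> int:
    """Memory for one layer's attention scores.

    Each head holds a seq_len x seq_len matrix, so memory grows with
    the square of the sequence length.
    """
    return num_heads * seq_len * seq_len * bytes_per_value


# Hypothetical sequence lengths; num_heads=32 is an assumed value.
for n in (1_024, 8_192, 32_768):
    gib = attention_score_bytes(n, num_heads=32) / 2**30
    print(f"seq_len={n:>6}: {gib:8.2f} GiB per layer")
```

Doubling the sequence length quadruples this memory, which is one reason large amounts of GPU memory and fast interconnects matter so much for LLM workloads.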

Classification of Computing Power Hardware

Here we need to understand the main types of computing power hardware currently, and how CPU, GPU, FPGA, and ASIC can handle different computing power demand scenarios.

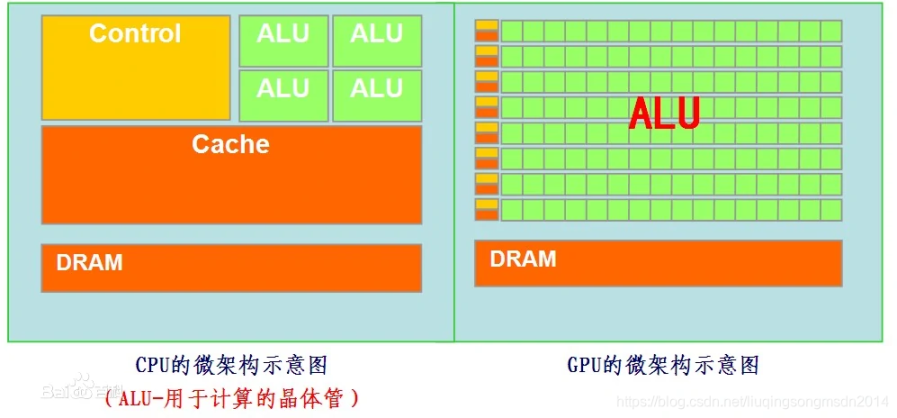

- From the architecture diagrams of CPU and GPU, GPUs contain more cores, allowing them to simultaneously process multiple computing tasks, with stronger parallel computing capabilities, making them suitable for handling a large number of computing tasks. Therefore, they have been widely used in the fields of machine learning and deep learning. CPUs have fewer cores and are suitable for more centralized processing of single complex calculations or sequential tasks, but are less efficient than GPUs in handling parallel computing tasks. In rendering tasks and neural network computing tasks, a large number of repetitive calculations and parallel computing are usually required, so GPUs are more efficient and suitable than CPUs in this regard.

- FPGA (Field Programmable Gate Array) is a semi-custom circuit in the field of Application Specific Integrated Circuits (ASIC). It is an array composed of a large number of small processing units, and can be understood as a programmable digital logic integrated circuit. Its current applications are mainly focused on hardware acceleration, while other tasks are still completed on CPUs, allowing FPGAs and CPUs to work together.

- ASIC (Application Specific Integrated Circuit) is a type of integrated circuit designed for the requirements of a specific user or a specific electronic system. When mass-produced, ASICs have advantages over general integrated circuits, such as smaller size, lower power consumption, improved reliability, enhanced performance, increased confidentiality, and reduced cost. In a fixed scenario such as Bitcoin mining, where only one specific computing task needs to be executed, ASICs are therefore the most suitable choice. Google has also introduced the Tensor Processing Unit (TPU), an ASIC designed specifically for machine learning, but it is currently offered mainly as rented computing power through Google Cloud.

- Compared to FPGAs, ASICs are fixed integrated circuits once the design is completed. FPGAs integrate a large number of basic digital circuit gates and memory in the array, and developers define circuits by flashing a configuration onto the FPGA, which can later be replaced. However, AI is currently evolving so quickly that customized or semi-customized chips struggle to be adjusted in time to execute different tasks or adapt to new algorithms. The broad adaptability and flexibility of GPUs have therefore made them shine in the field of AI, and major GPU manufacturers have optimized their GPUs for it. For example, Nvidia has introduced the Tesla series and Ampere architecture GPUs designed for deep learning, which contain hardware units optimized for machine learning and deep learning computations (Tensor Cores), allowing GPUs to execute neural network forward and backward propagation with higher efficiency and lower energy consumption. Nvidia also provides a wide range of tools and libraries to support AI development, such as CUDA (Compute Unified Device Architecture), which helps developers use GPUs for general parallel computing.

Decentralized Computing Power

Decentralized computing power refers to the provision of processing capabilities through distributed computing resources. This decentralized approach usually combines blockchain technology or similar distributed ledger technology to aggregate and distribute idle computing resources to users in need, enabling resource sharing, transactions, and management.

Background

- Strong demand for computing power hardware: The prosperity of the creator economy has ushered in an era of mass creation in digital media processing, leading to a surge in demand for visual effects rendering. This has given rise to specialized rendering outsourcing studios, cloud rendering platforms, and similar businesses, all of which require significant upfront investment in computing power hardware.

- Single source of computing power hardware: The development of the AI field has intensified the demand for computing power hardware. Global GPU manufacturers led by Nvidia have reaped huge profits in this AI computing power race. Their supply capacity has even become a key factor that can hinder the development of certain industries. Nvidia's market value has also surpassed one trillion dollars for the first time this year.

- Reliance on centralized cloud platforms for computing power provision: Currently, the beneficiaries of the surge in high-performance computing demand are centralized cloud providers represented by AWS. They have launched GPU cloud computing services. For example, renting a specialized HPC server like the AWS p4d.24xlarge, which includes 8 Nvidia A100 40GB GPUs, costs $32.8 per hour, with an estimated gross margin of 61%. This has led other cloud giants to compete and hoard hardware to gain an advantage in the early stages of industry development.

- Political and human intervention leading to uneven industry development: Ownership of GPUs is clearly concentrated among organizations and countries that have abundant funds and technology and that depend on high-performance computing clusters. This has led semiconductor manufacturing powerhouses, represented by the United States, to impose stricter restrictions on the export of AI chips in order to weaken the research capabilities of other countries in the field of general artificial intelligence.

- Excessive concentration of computing power resources: The initiative in the development of the AI field is held by a few giant companies. For example, OpenAI, with the backing of Microsoft and the rich computing power resources provided by Microsoft Azure, reshapes and integrates the current AI industry with the release of each new product, making it difficult for other teams to compete in the field of large models.
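The cloud pricing figures quoted above can be broken down with simple arithmetic. This sketch uses only the numbers from the text ($32.8 per hour, 8 GPUs, an estimated 61% gross margin); the 30-day continuous-use month is an added assumption for illustration.

```python
HOURLY_PRICE_USD = 32.8  # AWS p4d.24xlarge price quoted above
GPUS_PER_INSTANCE = 8    # Nvidia A100 40GB GPUs per instance
GROSS_MARGIN = 0.61      # estimated gross margin quoted above

# Price paid per GPU per hour
price_per_gpu_hour = HOURLY_PRICE_USD / GPUS_PER_INSTANCE

# Provider cost per instance-hour implied by the margin estimate
implied_cost_per_hour = HOURLY_PRICE_USD * (1 - GROSS_MARGIN)

# Assumption: one instance running continuously for a 30-day month
monthly_bill = HOURLY_PRICE_USD * 24 * 30

print(f"per GPU-hour: ${price_per_gpu_hour:.2f}")
print(f"implied provider cost per hour: ${implied_cost_per_hour:.2f}")
print(f"30-day bill for one instance: ${monthly_bill:,.0f}")
```

If the margin estimate holds, well over half of what a customer pays per hour is margin rather than hardware and operating cost, which is the gap a cheaper supply of computing power would have to compete for.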

In the face of high hardware costs, geographical restrictions, and uneven industrial development, are there other solutions?

Decentralized computing power platforms have emerged, with the aim of creating an open, transparent, and self-regulating market to more effectively utilize global computing resources.

Adaptability Analysis

Decentralized Computing Power Supply Side

The high cost of hardware and artificial control on the supply side have provided fertile ground for the construction of decentralized computing power networks.

- In terms of the composition of decentralized computing power, a variety of computing power providers, ranging from individual PCs and small IoT devices to data centers and IDCs, can provide a large accumulation of computing power for more flexible and scalable computing solutions. This can help more AI developers and organizations effectively utilize limited resources. Individuals or organizations can contribute their idle computing power to achieve decentralized computing power sharing, but the availability and stability of this computing power are limited by the user's own usage restrictions or sharing limits.

- A potential source of high-quality computing power is mining farms that switched to providing computing resources after Ethereum transitioned to PoS. For example, CoreWeave, a leading integrated GPU computing power provider in the United States, was formerly the largest Ethereum mining farm in North America and already had a complete infrastructure in place. In addition, retired Ethereum mining machines contain a large number of idle GPUs: reportedly, around 27 million GPUs were working on the network at the peak of Ethereum mining. Reactivating these GPUs could also become an important source of computing power for decentralized computing power networks.

Decentralized Computing Power Demand Side

From a technical perspective, decentralized computing resources are suitable for tasks such as graphic rendering and video transcoding, which are not highly complex in terms of computation. Combined with blockchain technology and the economic system of web3, they can provide tangible incentives for network participants while ensuring the secure transmission of information and data, and projects in these areas have already built up working business models and customer bases. AI, however, involves a large amount of parallel computing, communication between nodes, and synchronization, all of which place very high requirements on the network environment. Current applications are therefore mainly focused on more application-oriented tasks such as fine-tuning, inference, and AIGC.

From a business logic perspective, a market based solely on buying and selling computing power has limited potential. Such a market can only compete on supply chain and pricing strategy, which happen to be the strengths of centralized cloud services, so its ceiling is low. This is why networks that originally focused on simple graphic rendering are now moving into AI. Render Network, for example, launched a natively integrated Stability AI toolset in Q1 2023 that lets users submit Stable Diffusion jobs, expanding its business beyond rendering into the AI field.

In terms of the main customer base, it is clear that large B2B clients are more inclined towards centralized integrated cloud services. They usually have sufficient budgets and are typically involved in the development of underlying large models, requiring more efficient forms of computing power aggregation. Therefore, decentralized computing power serves more small and medium-sized development teams or individuals, who are mainly involved in model fine-tuning or application layer development and do not have high requirements for the form of computing power provision. They are more price-sensitive, and decentralized computing power can fundamentally reduce initial capital investment, resulting in lower overall usage costs. For example, Gensyn's price is only $0.4 per hour, compared to AWS's $2 per hour for similar computing power, representing an 80% decrease. Although this business does not currently account for a large portion of industry expenses, with the continuous expansion of AI applications, the future market size should not be underestimated.
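The 80% figure follows directly from the two hourly prices. The sketch below reproduces it and applies it to a job size; the 500 GPU-hour fine-tuning job and the helper names are hypothetical, chosen only for illustration.

```python
def savings_ratio(baseline_price: float, alternative_price: float) -> float:
    """Fraction saved by switching from the baseline to the alternative."""
    return (baseline_price - alternative_price) / baseline_price


def job_cost(price_per_hour: float, gpu_hours: float) -> float:
    """Total cost of a job billed per GPU-hour."""
    return price_per_hour * gpu_hours


AWS_PRICE = 2.0     # USD per hour, as quoted above
GENSYN_PRICE = 0.4  # USD per hour, as quoted above
GPU_HOURS = 500     # hypothetical fine-tuning job size

ratio = savings_ratio(AWS_PRICE, GENSYN_PRICE)
aws_cost = job_cost(AWS_PRICE, GPU_HOURS)
gensyn_cost = job_cost(GENSYN_PRICE, GPU_HOURS)
print(f"savings: {ratio:.0%}, AWS: ${aws_cost:,.0f}, Gensyn: ${gensyn_cost:,.0f}")
```

For a small team, the absolute difference on a job of this size is modest, but it scales linearly with usage, which is why price-sensitive users are the natural early customers.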

In terms of the services provided, current projects resemble the concept of decentralized cloud platforms, providing a complete set of management processes from development, deployment, launch, distribution, to transactions. This has the benefit of attracting developers to simplify development and deployment using related tool components, while also attracting users to the platform to use these complete application products, forming an ecosystem based on their own computing power network. However, this also places higher demands on project operations. It is particularly important to attract excellent developers and users and achieve retention.

Different Application Fields

1. Digital Media Processing

Render Network is a blockchain-based global rendering platform that aims to assist creators in digital creativity. It allows creators to extend GPU rendering work to GPU nodes around the world on demand, providing faster and cheaper rendering capacity. After the creator confirms the rendering results, the blockchain network sends token rewards to the nodes. By comparison, traditional ways of producing visual effects, such as building rendering infrastructure locally or adding GPU spending to purchased cloud services, require high upfront investment.

Since its establishment in 2017, Render Network users have rendered over 16 million frames and nearly 500,000 scenes on the network. Data released in Q2 2023 also indicates an increasing trend in rendering job counts and active nodes. In addition, Render Network also launched the native integrated Stability AI toolset in Q1 2023, allowing users to introduce Stable Diffusion jobs, expanding their business beyond rendering to the AI field.

Livepeer, on the other hand, provides real-time video transcoding services for creators by leveraging the computing power and bandwidth contributed by network participants. Broadcasters can send videos to Livepeer for various video transcoding tasks and distribute them to end users, facilitating the dissemination of video content. Additionally, users can easily pay for video transcoding, transmission, and storage services in fiat currency.

In the Livepeer network, anyone is allowed to contribute their personal computer resources (CPU, GPU, and bandwidth) to transcode and distribute videos to earn fees. The native token (LPT) represents the stake of network participants in the network. The amount of staked tokens determines the node's weight in the network, affecting its chances of receiving transcoding tasks. LPT also guides nodes to complete distribution tasks securely, reliably, and quickly.
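The effect of stake on task allocation can be illustrated with a stake-weighted random draw. This is a minimal sketch of the general idea, not Livepeer's actual selection algorithm; the node names, stake amounts, and the `pick_node` helper are hypothetical.

```python
import random
from collections import Counter


def pick_node(stakes: dict[str, float], rng: random.Random) -> str:
    """Choose one node with probability proportional to its staked tokens."""
    nodes = list(stakes)
    weights = [stakes[node] for node in nodes]
    return rng.choices(nodes, weights=weights, k=1)[0]


# Hypothetical stakes in LPT
stakes = {"node-a": 6_000, "node-b": 3_000, "node-c": 1_000}

rng = random.Random(42)  # fixed seed so the simulation is repeatable
assignments = Counter(pick_node(stakes, rng) for _ in range(10_000))
print(assignments)
```

Over many jobs, each node's share of work approaches its share of total stake, so staking more both raises expected income and puts more capital at risk if the node misbehaves.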

2. Expansion in the AI Field

In the current AI ecosystem, the main participants can be roughly divided into:

From the demand side, there are clear differences in the requirements for computing power at different stages of the industry. For example, in the pre-training stage of developing underlying models, there are very high requirements for parallel computing, storage, communication between nodes, and other aspects, which require the completion of related tasks through large computing power clusters. Currently, the main supply of computing power still relies on self-built data centers and centralized cloud service platforms. However, in subsequent stages such as model fine-tuning, real-time inference, and application development, the requirements for parallel computing and communication between nodes are not as high, which is where decentralized computing power can thrive.

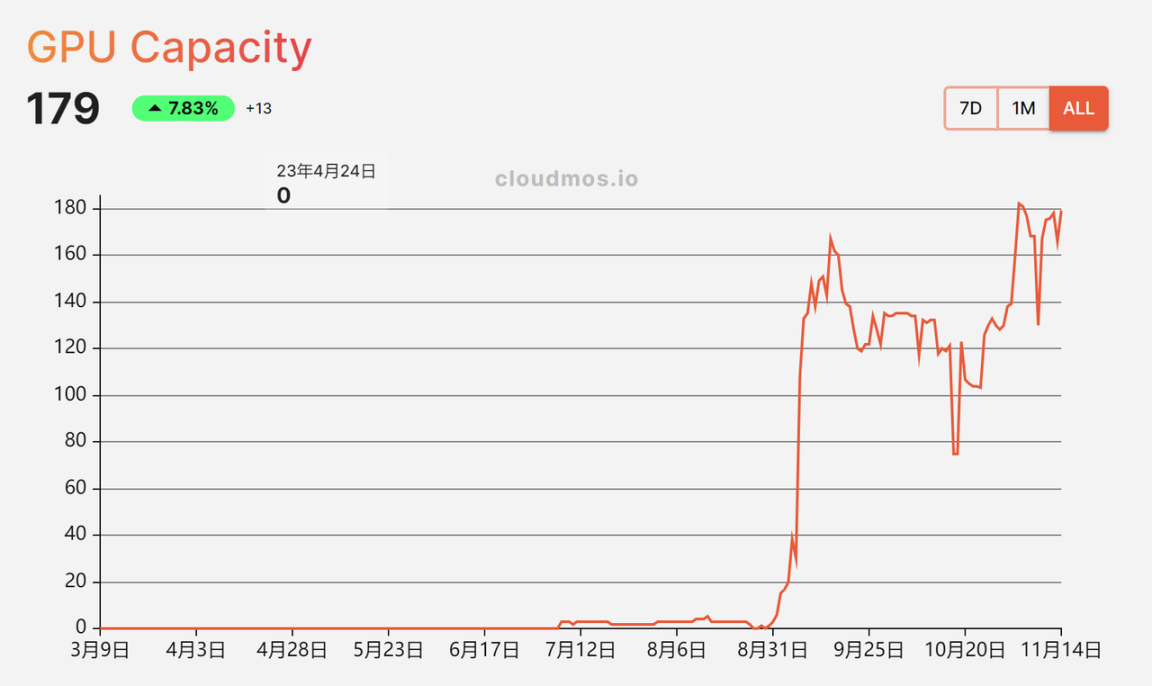

Looking at the previously prominent projects, Akash Network has made some attempts in the direction of decentralized computing power:

Akash Network allows users to efficiently and flexibly deploy and manage applications in a decentralized cloud environment using different technical components. Users can package applications using Docker container technology and then deploy and scale them on the cloud resources provided by Akash through CloudMOS. Akash uses a "reverse auction" approach, making its prices lower than traditional cloud services.
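The "reverse auction" idea can be sketched as follows: the user states a maximum price and the resources needed, providers bid against each other, and the lowest eligible bid wins. This is a simplified illustration, not Akash's actual on-chain order and bid flow; the `Bid` class, provider names, and prices are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Bid:
    provider: str
    price_per_hour: float  # hypothetical unit: USD per hour
    gpus: int              # GPUs the provider can offer


def run_reverse_auction(bids: list[Bid], max_price: float, gpus_needed: int) -> Optional[Bid]:
    """Return the cheapest bid that meets the user's requirements, or None."""
    eligible = [
        bid for bid in bids
        if bid.price_per_hour <= max_price and bid.gpus >= gpus_needed
    ]
    return min(eligible, key=lambda bid: bid.price_per_hour, default=None)


bids = [
    Bid("provider-1", 1.20, gpus=4),
    Bid("provider-2", 0.85, gpus=2),
    Bid("provider-3", 0.60, gpus=1),  # cheapest, but too few GPUs
]
winner = run_reverse_auction(bids, max_price=1.00, gpus_needed=2)
print(winner)
```

Because sellers compete downward from the buyer's ceiling rather than buyers competing upward, the clearing price tends toward the lowest cost at which some provider is willing to serve the job.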

In August of this year, Akash Network announced the launch of the 6th mainnet upgrade, which will incorporate support for GPUs into its cloud services, providing computing power to more AI teams in the future.

Gensyn.ai, a project that has attracted industry attention this year, completed a $43 million Series A financing round led by a16z. According to the documents released by the project, it is a mainnet L1 PoS protocol based on the Polkadot network and focused on deep learning. It aims to push the boundaries of machine learning by creating a global supercomputing cluster network that connects all kinds of devices, from data centers with surplus computing power to personal GPUs in PCs, custom ASICs, and SoCs.

To address some of the current issues in decentralized computing power, Gensyn has drawn on some recent theoretical research results from academia:

- It uses probabilistic learning proofs, using metadata based on the gradient-based optimization process to construct proofs of relevant task execution to expedite the verification process.

- The Graph-based Pinpoint Protocol (GPP) serves as a bridge, connecting the offline execution of DNN (Deep Neural Network) with the smart contract framework on the blockchain, addressing inconsistencies between hardware devices and ensuring the consistency of verification.

- Similar to Truebit, it uses a combination of staking and penalties to establish a mechanism that allows economically rational participants to honestly execute task assignments. This mechanism uses cryptographic and game theory approaches to maintain the integrity and reliability of large-scale model training computations.
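The staking-and-penalty logic in the last point can be expressed as a simple expected-payoff comparison. This is an illustrative model under stated assumptions, not Gensyn's actual mechanism: a cheater is assumed to skip the work entirely, and if caught (with probability `detection_prob`) it loses both the reward and its stake. All names and numbers are hypothetical.

```python
def cheating_is_unprofitable(reward: float, compute_cost: float,
                             stake: float, detection_prob: float) -> bool:
    """Compare the expected payoff of honest work with that of cheating."""
    honest_payoff = reward - compute_cost
    # A cheater pays no compute cost, keeps the reward if undetected,
    # and forfeits the stake if detected.
    cheat_payoff = (1 - detection_prob) * reward - detection_prob * stake
    return cheat_payoff < honest_payoff


def min_stake(reward: float, compute_cost: float, detection_prob: float) -> float:
    """Stake above which honesty wins: solve p * (reward + stake) > compute_cost."""
    if not 0 < detection_prob <= 1:
        raise ValueError("detection_prob must be in (0, 1]")
    return max(0.0, compute_cost / detection_prob - reward)


# Hypothetical task: reward 10, compute cost 8, 10% chance a cheat is caught
threshold = min_stake(reward=10, compute_cost=8, detection_prob=0.1)
print(threshold)
```

The lower the chance of catching a cheat, the larger the stake must be, which is why cheaper verification (the first two points) and economic penalties (the third) are designed to work together.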

However, it is worth noting that the points above mainly address the verification of task completion, rather than what the project documentation presents as its main highlight: using decentralized computing power for model training, and in particular optimizing parallel computing and communication across distributed hardware. At present, frequent communication between nodes is subject to network latency and bandwidth limits, which increases iteration time and communication costs; rather than bringing any real optimization, this reduces training efficiency. Gensyn's handling of node communication and parallel computing during training may involve complex coordination protocols to manage the distributed nature of the computation. Without more detailed technical information about its specific methods, however, exactly how Gensyn achieves large-scale model training over its network will only become clear once the project goes live.

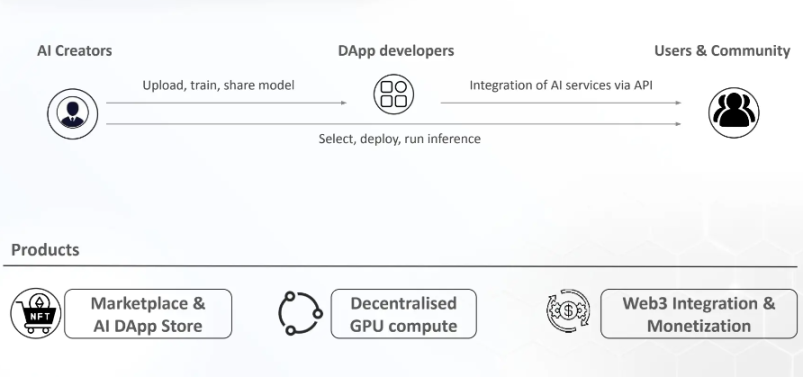

We also note the Edge Matrix Computing (EMC) protocol, which applies computing power to various scenarios such as AI, rendering, scientific research, and AI e-commerce through blockchain technology. It distributes tasks to different computing nodes through elastic computing, improving the efficiency of computing power usage and ensuring the security of data transmission. Additionally, it provides a computing power market where users can access and exchange computing resources, making it easier for developers to deploy and reach users more quickly. Combined with the economic form of Web3, it also allows computing power providers to obtain real income based on actual user usage and protocol subsidies, while AI developers benefit from lower inference and rendering costs. The following is an overview of its main components and functions:

It is expected to launch GPU-based RWA (real-world asset) products. The key is to take hardware that was previously locked up in data centers and circulate it in the form of RWA to gain additional liquidity. High-quality GPUs can serve as the underlying assets of RWA because computing power can be considered the hard currency of the AI field. There is currently a clear supply-demand imbalance that cannot be resolved in the short term, so the price of GPUs is relatively stable.

In addition, deploying IDC data centers to create computing power clusters is also a key focus of the EMC protocol. This not only allows GPUs to operate in a unified environment but also efficiently handle tasks with significant computing power consumption, such as pre-training models, to meet the needs of professional users. Additionally, IDC data centers can host and run a large number of GPUs of the same high-quality technical specifications, making it convenient to package them as RWA products for the market and open up new DeFi ideas.

In recent years, academic research on edge computing has produced both new theory and practical applications. As a complement to and optimization of cloud computing, edge computing is accelerating the move of some artificial intelligence workloads from the cloud to the edge and into ever smaller IoT devices. Because these devices are often very small, lightweight machine learning is favored as a way to balance power consumption, latency, and accuracy.

Network3 has built a dedicated AI Layer2 that provides services to AI developers globally through AI model algorithm optimization and compression, federated learning, edge computing, and privacy computing. It focuses on small models using a large number of smart IoT hardware devices to provide corresponding computing power. By building a Trusted Execution Environment (TEE), it allows users to complete related training by only uploading model gradients, ensuring the privacy and security of user data.
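The idea of training by uploading only gradients can be sketched with a toy federated round. This is a minimal illustration of gradient averaging for a one-parameter model `y = w * x`, not Network3's actual protocol; the TEE is not modeled, and the client data, learning rate, and function names are assumptions.

```python
def local_gradient(w: float, data: list[tuple[float, float]]) -> float:
    """Gradient of the mean squared error for y = w * x on one device's data.

    The raw (x, y) pairs never leave the device; only this number is uploaded.
    """
    return sum(2 * (w * x - y) * x for x, y in data) / len(data)


def federated_round(w: float, clients: list[list[tuple[float, float]]],
                    lr: float = 0.05) -> float:
    """One training round: average the uploaded gradients and update w."""
    gradients = [local_gradient(w, data) for data in clients]
    average = sum(gradients) / len(gradients)
    return w - lr * average


# Hypothetical private datasets on three devices, all following y = 3x
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(0.5, 1.5), (1.5, 4.5)],
]

w = 0.0
for _ in range(200):
    w = federated_round(w, clients)
print(w)
```

The server learns the shared parameter without ever seeing a single data point, which is the privacy property the gradient-only upload is meant to provide; a real deployment would add protections on top, since gradients themselves can leak information.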

Conclusion

As AI and related fields develop, many industries will change significantly at a fundamental level, and computing power will become more important. The areas connected to it will also be explored extensively by the industry. Decentralized computing power networks have their own advantages: they offer one answer to the risks of centralization and serve as a complement to centralized computing power.

AI teams themselves are at a crossroads: whether to build their own products on pre-trained large models, or to take part in training large models in their respective regions. The answer is rarely one or the other. Decentralized computing power can serve both kinds of business need, and this development trend is encouraging. As technology advances and algorithms iterate, breakthroughs in key areas are bound to follow.

In the end, we will patiently wait and see.

