Source: Xinzhiyuan

Has a research team at the University of Kansas solved a problem that even OpenAI could not? The team has developed an AI content detector for academic writing with an accuracy rate of up to 98%. If the technology is widely adopted in the academic community, it could help curb the proliferation of AI-generated papers.

Currently, AI text detectors have almost no reliable way to distinguish AI-generated text from human-written text.

Even the detection tool developed by OpenAI was quietly taken offline after six months due to its low detection accuracy.

However, Nature recently reported on the work of a team at the University of Kansas. They have developed an academic AI detection system that can determine whether a paper contains AI-generated content, with an accuracy rate of up to 98%!

Article link: https://www.nature.com/articles/d41586-023-03479-4

The core idea of the research team is not to pursue the creation of a universal detector, but to build a truly useful AI text detector specifically for a particular academic field.

Paper link: https://www.sciencedirect.com/science/article/pii/S2666386423005015?via%3Dihub

The researchers stated that tailoring detection software to specific types of writing may be a technical path toward a universal AI detector.

"If it is possible to quickly and easily build a detection system for a specific field, then it will not be so difficult to build such systems for different fields."

The researchers extracted 20 key features of writing style and used them to train an XGBoost model to distinguish human text from AI text.

These twenty key features include variations in sentence length, frequency of certain words and punctuation marks, and other elements.

The researchers stated that "using only a small number of features can achieve a high accuracy rate."

Accuracy rate as high as 98%

In their latest research, the detector was trained on the introduction sections of papers published in ten chemistry journals by the American Chemical Society (ACS).

The research team chose the "Introduction" section because if ChatGPT can access background literature, then this part of the paper is relatively easy to write.

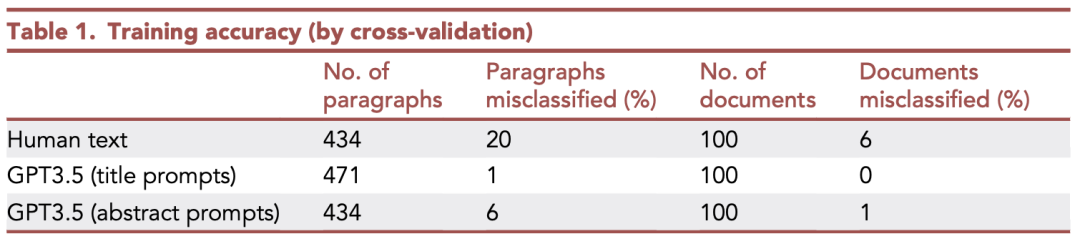

The researchers trained the tool on 100 published introductions written by humans and on 200 introductions that they asked ChatGPT-3.5 to write in the style of ACS journals.

Of the 200 introductions written by ChatGPT-3.5, 100 were generated from paper titles and the other 100 from paper abstracts.

Finally, the detector was tested on introductions written by humans and introductions generated by ChatGPT-3.5, drawn from the same journals.

The detector identified the introductions written by ChatGPT-3.5 based on titles with an accuracy rate of 100%. For introductions generated by ChatGPT-3.5 based on abstracts, the accuracy rate was slightly lower, at 98%.

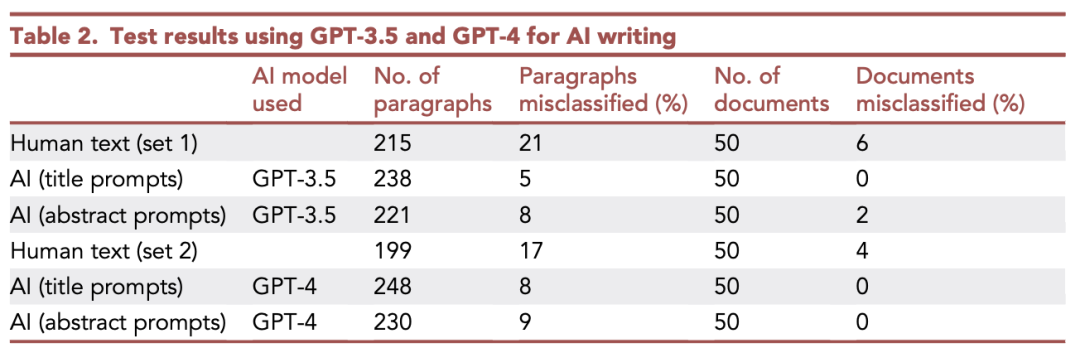

The tool is equally effective for text written by GPT-4.

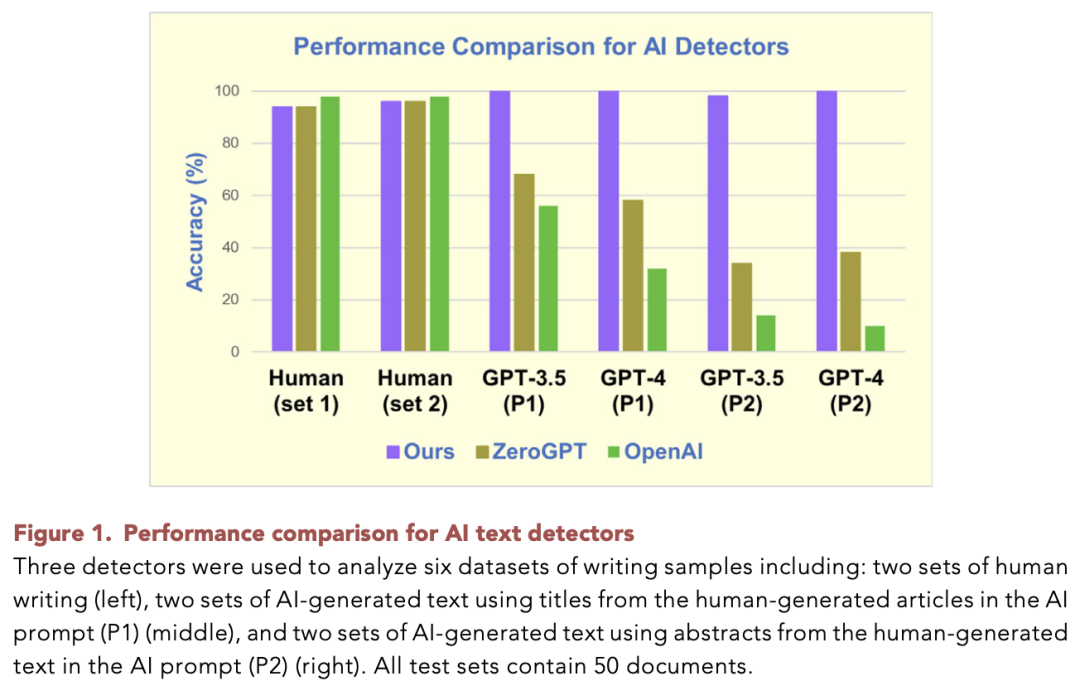

In contrast, the general AI detector ZeroGPT only has an accuracy rate of about 35-65% in identifying AI-written introductions, depending on the version of ChatGPT used and whether the introductions are generated based on paper titles or abstracts.

The text classifier tool made by OpenAI (which had already been taken offline at the time of publication) also performed poorly, with an accuracy rate of only 10-55% in identifying AI-written introductions.

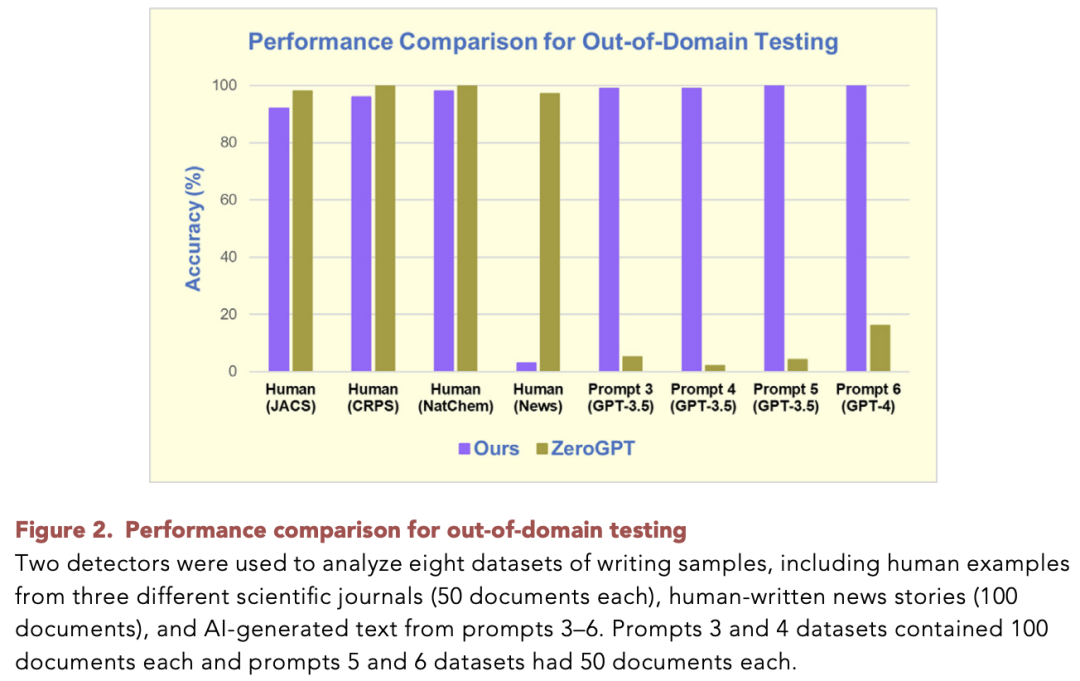

This new ChatGPT detector performs well even on journals it was not trained on.

It can also identify AI text generated specifically to confuse AI detectors.

However, while this detection system performs very well for scientific journal papers, its performance is not ideal when used to detect news articles in university newspapers.

Debora Weber-Wulff, a computer scientist at the Berlin University of Applied Sciences, highly praised this research, stating that the work being done by the researchers is "very appealing."

Details of the Paper

The method used by the researchers relies on 20 key features and the XGBoost algorithm.

The extracted 20 features include:

(1) Number of sentences per paragraph, (2) Number of words per paragraph, (3) Presence of parentheses, (4) Presence of dashes, (5) Presence of semicolons or colons, (6) Presence of question marks, (7) Presence of apostrophes, (8) Standard deviation of sentence length, (9) (Average) difference in length between consecutive sentences in a paragraph, (10) Presence of sentences with fewer than 11 words, (11) Presence of sentences with more than 34 words, (12) Presence of numbers, (13) More than twice as many capital letters as periods in the text, and the presence of the following words: (14) "although," (15) "but," (16) "however," (17) "because," (18) "this," (19) "others" or "researchers," (20) "etc."
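As a rough illustration, the 20 features listed above could be computed for a single paragraph along the following lines. This is a minimal sketch and not the authors' code: the function names, the dictionary keys, the naive sentence splitter, the use of the population standard deviation for feature 8, the reading of feature 9 as the mean absolute gap between neighboring sentence lengths, and the treatment of hyphens as dashes are all assumptions made for the example.

```python
import re
import statistics


def split_sentences(paragraph):
    # Naive splitter (assumption): break after ., ? or ! followed by whitespace.
    # Abbreviations such as "etc." mid-sentence will be split incorrectly.
    parts = re.split(r"(?<=[.?!])\s+", paragraph.strip())
    return [p for p in parts if p]


def has_word(text, word):
    # 1 if the whole word occurs anywhere in the text (case-insensitive), else 0.
    return int(re.search(rf"\b{re.escape(word)}\b", text, re.IGNORECASE) is not None)


def extract_features(paragraph):
    sentences = split_sentences(paragraph)
    lengths = [len(s.split()) for s in sentences]          # words per sentence
    gaps = [abs(a - b) for a, b in zip(lengths, lengths[1:])]
    capitals = sum(ch.isupper() for ch in paragraph)
    return {
        "n_sentences": len(sentences),                                         # (1)
        "n_words": sum(lengths),                                               # (2)
        "has_parenthesis": int("(" in paragraph or ")" in paragraph),          # (3)
        "has_dash": int(any(d in paragraph for d in "-\u2013\u2014")),         # (4)
        "has_semicolon_or_colon": int(";" in paragraph or ":" in paragraph),   # (5)
        "has_question_mark": int("?" in paragraph),                            # (6)
        "has_apostrophe": int("'" in paragraph or "\u2019" in paragraph),      # (7)
        "sentence_length_std": statistics.pstdev(lengths) if lengths else 0.0, # (8)
        "mean_consecutive_gap": statistics.mean(gaps) if gaps else 0.0,        # (9)
        "has_short_sentence": int(any(n < 11 for n in lengths)),               # (10)
        "has_long_sentence": int(any(n > 34 for n in lengths)),                # (11)
        "has_number": int(any(ch.isdigit() for ch in paragraph)),              # (12)
        "capitals_over_twice_periods": int(capitals > 2 * paragraph.count(".")),  # (13)
        "has_although": has_word(paragraph, "although"),                       # (14)
        "has_but": has_word(paragraph, "but"),                                 # (15)
        "has_however": has_word(paragraph, "however"),                         # (16)
        "has_because": has_word(paragraph, "because"),                         # (17)
        "has_this": has_word(paragraph, "this"),                               # (18)
        "has_others_or_researchers": int(
            has_word(paragraph, "others") or has_word(paragraph, "researchers")
        ),                                                                     # (19)
        "has_etc": has_word(paragraph, "etc"),                                 # (20)
    }


sample = (
    "However, this method works. "
    "Others tried it in 2020 (with mixed results); did it fail? It did not."
)
features = extract_features(sample)
```

Each paragraph yields one 20-value row, which is the kind of input a tree-based classifier such as XGBoost expects. Most of the features are simple yes/no flags, which fits the researchers' remark that a small number of features is enough.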

For detailed information on training the detector through XGBoost, please refer to the "Experimental Procedure" section in the original paper.
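The article notes that the model was optimized with leave-one-out cross-validation. The sketch below shows the general idea under stated assumptions: a tiny nearest-centroid classifier stands in for XGBoost so that the example runs with the standard library alone, every name is hypothetical, and the two-feature toy rows are made up for illustration rather than taken from the study. It holds out one sample at a time, whereas the paper may hold out whole documents.

```python
from statistics import mean


def fit_centroids(rows, labels):
    # Stand-in model (not XGBoost): one mean feature vector per class.
    centroids = {}
    for label in set(labels):
        members = [r for r, y in zip(rows, labels) if y == label]
        centroids[label] = [mean(col) for col in zip(*members)]
    return centroids


def predict_centroid(centroids, row):
    # Predict the class whose centroid is closest in squared Euclidean distance.
    def sq_dist(center):
        return sum((a - b) ** 2 for a, b in zip(row, center))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))


def leave_one_out_accuracy(rows, labels, fit, predict):
    # Train on all samples but one, test on the held-out sample, repeat for each.
    correct = 0
    for i in range(len(rows)):
        train_rows = rows[:i] + rows[i + 1:]
        train_labels = labels[:i] + labels[i + 1:]
        model = fit(train_rows, train_labels)
        correct += int(predict(model, rows[i]) == labels[i])
    return correct / len(rows)


# Made-up toy rows: [sentences per paragraph, std. dev. of sentence length].
rows = [[3.0, 9.5], [4.0, 8.0], [5.0, 9.0], [5.0, 2.0], [6.0, 1.5], [4.0, 2.5]]
labels = ["human", "human", "human", "ai", "ai", "ai"]

accuracy = leave_one_out_accuracy(rows, labels, fit_centroids, predict_centroid)
```

Because every sample is scored by a model that never saw it, the resulting accuracy is a less optimistic estimate than accuracy measured on the training data itself. Any model exposing a fit step and a predict step could be passed in place of the stand-in.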

The authors had previously done similar work, but the scope of the original work was very limited.

To apply this promising method to chemistry journals, it was necessary to review manuscripts from multiple journals in this field.

Furthermore, the ability to detect AI text is influenced by the prompts given to the language model, so any method for detecting AI writing should be tested against prompts designed to obscure the use of AI, a variable that was not evaluated in the previous research.

Finally, the new version of ChatGPT, GPT-4, has been released, and it has significant improvements over GPT-3.5. AI text detectors need to be effective for text from language models such as GPT-4.

To expand the applicability of the AI detector, the authors collected data from 13 different journals and 3 different publishers, using different AI prompts and different AI text generation models.

A classifier was trained using real human text and AI-generated text. New examples were then produced for model evaluation from human writing and from text generated by GPT-3.5 and GPT-4 under different prompts.

The results show that the simple method proposed in this article is very effective. It has an accuracy rate of 98%–100% in identifying AI-generated text, depending on the prompts and models. In contrast, the latest classifier from OpenAI has an accuracy rate between 10% and 56%. The detector in this article will enable the scientific community to assess the penetration of ChatGPT into chemistry journals, determine the consequences of its use, and quickly introduce mitigation strategies when problems arise.

**Results and Discussion**

The authors selected human writing samples from 10 chemistry journals of the American Chemical Society (ACS), including "Inorganic Chemistry," "Analytical Chemistry," "Journal of Physical Chemistry A," "Journal of Organic Chemistry," "ACS Omega," "Journal of Chemical Education," "ACS Nano," "Environmental Science & Technology," "Chemical Research in Toxicology," and "ACS Chemical Biology." They used the introduction sections of 10 articles from each journal, totaling 100 human writing samples in the training set. The choice of the introduction section was because, with the appropriate prompts, this is the most likely part of the article to be written by ChatGPT.

The entire training dataset consisted of 100 human-generated introductions and 200 ChatGPT-generated introductions; each paragraph became a "writing example." The model used a leave-one-out cross-validation strategy for optimization. The table above shows the training results for the classification of these writing samples, including at the document and paragraph levels.

The next phase of the experiment involved testing the model with new documents not used in training. The authors designed simple and difficult tests. The simple test used similar test data to the training data (different articles from the same journal), using new article titles and abstracts as prompts for ChatGPT. In the difficult test, GPT-4 was used instead of GPT-3.5 to generate AI text. Since GPT-4 is known to be better than GPT-3.5, would the classification accuracy decrease? The table above shows the results of the classification. Performance was almost unchanged compared to previous results.

The authors then compared their tool with two leading detection tools: the text classifier provided by OpenAI, and ZeroGPT. The comparison was based on the same test dataset. The three detectors had similar high accuracy in identifying human text; however, there were significant differences in evaluating AI-generated text.

In conclusion, the method can accurately detect ChatGPT writing in journals not included in the training set, and it remains effective with different prompts.

For more details, please refer to the original article: [Link](https://www.sciencedirect.com/science/article/pii/S2666386423005015?via%3Dihub)
