Image Source: Generated by Wujie AI

Semiconductor chips are small, but designing them is among the hardest engineering problems in the world. At yesterday's half-hour Apple event, the brand-new M3 series made its debut. Apple is often criticized for slow, incremental updates ("squeezing toothpaste," as the saying goes), yet experience repeatedly shows that not everyone can manage even that. Designing and manufacturing a chip may be harder than building an aircraft carrier, although the two are obviously nowhere near the same physical scale.

At the just-opened ICCAD 2023 conference, NVIDIA demonstrated how large models can assist with chip design work, causing a stir in the industry. By one often-cited estimate, 2 million transistors can fit on the cross-section of a single hair, which gives a sense of the precision involved. Under a microscope, top products like the M3 look like meticulously planned city blocks, with tens of billions of transistors connected by streets ten thousand times finer than a hair.

Building such a digital city takes multiple engineering teams working together for months or even years. Some teams define the overall chip architecture, others create and place the various ultra-small circuits, and still others test the result. Each task requires its own specialized methods, tools, software, and programming languages.

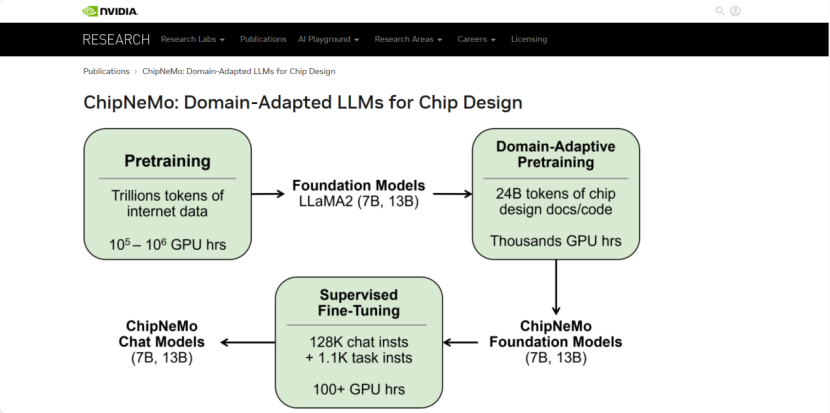

Recently, a research team from NVIDIA developed a custom large model called ChipNeMo, trained on internal data to generate and optimize software and assist human designers. Link to the paper: https://research.nvidia.com/publication/2023-10_chipnemo-domain-adapted-llms-chip-design

The NVIDIA team did not directly deploy off-the-shelf commercial or open-source large models. Instead, it applied the following domain adaptation techniques: a custom tokenizer, domain-adaptive continued pretraining (DAPT), supervised fine-tuning (SFT) with domain-specific instructions, and a domain-adapted retrieval model. The results show that these techniques significantly improve performance over general-purpose base models: on a range of design tasks, the adapted model matches or exceeds a much larger general-purpose model such as the 70-billion-parameter Llama 2, while being far smaller. The custom ChipNeMo model has 13 billion parameters.

Specifically, the NVIDIA team evaluated three chip design applications: an engineering assistant chatbot, EDA code generation, and bug summarization and analysis. The chatbot can answer a variety of questions about GPU architecture and design and help engineers quickly find technical documents; the code generator can currently produce snippets of roughly 10 to 20 lines in two specialized languages commonly used in chip design; and the analysis tool automates the time-consuming task of maintaining and updating bug descriptions.

Commenting on the work, NVIDIA Chief Scientist Bill Dally said that even a 5% gain in productivity would be a huge win, and that ChipNeMo represents an important first step for large models in the complex field of semiconductor design. It also suggests that in highly specialized fields, it is entirely feasible to train useful generative AI models on internal data.

Although the larger Llama 2 can reach accuracy similar to ChipNeMo's, the cost advantage of the smaller model matters just as much. ChipNeMo can be loaded directly into the memory of a single A100 GPU without any quantization, which significantly improves its inference speed. Other related research also indicates that models with fewer parameters cost several times, or even tens of times, less to run at inference than larger models.
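The single-GPU claim above can be sanity-checked with a rough back-of-the-envelope calculation. The sketch below is only an illustration and rests on several assumptions that are not in the original article: weights are stored in 16-bit precision (2 bytes per parameter), the A100 is the 80 GB variant (a 40 GB variant also exists), and memory for activations and the key-value cache is ignored. The function name `weight_memory_gb` is made up for this example.

```python
# Rough estimate of the memory needed to hold a dense model's weights.
# Assumptions (not from the article): fp16 weights, 80 GB A100,
# activations and KV cache ignored.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Return the memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


A100_MEMORY_GB = 80  # assumption: 80 GB variant

for name, params in [("ChipNeMo 13B", 13e9), ("Llama 2 70B", 70e9)]:
    need = weight_memory_gb(params)
    fits = need <= A100_MEMORY_GB
    print(f"{name}: ~{need:.0f} GB in fp16, fits on one A100: {fits}")
```

Under these assumptions, the 13-billion-parameter model needs about 26 GB for its weights and fits on one GPU, while the 70-billion-parameter model needs about 140 GB and would have to be quantized or split across several GPUs.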

More than two centuries ago, the first industrial revolution ushered in the industrial age, whose defining milestone was "machines making machines." By the same logic, the AI 2.0 era should eventually achieve "AI training AI." That still seems distant, but AI is already being applied in scientific research and industry, including in the most demanding, top-tier industries such as chip manufacturing. Perhaps the dawn of that future begins here.
