Source: New Intelligence Element

Image source: Generated by Wujie AI

Recently, there has been a resurgence of news about GPT-5.

First came the leak that OpenAI was secretly training GPT-5, then Sam Altman's clarification, then the discussion of how many H100 GPUs GPT-5 training would require, and finally an interview in which DeepMind co-founder Mustafa Suleyman "confirmed" that OpenAI is secretly training GPT-5.

This has led to a new round of speculation.

Along the way, Altman also made bold predictions, for example that GPT-10 will appear before 2030, surpass the combined intelligence of all humans, and be a true AGI.

Then came recent reports of Gobi, an OpenAI multimodal model that directly challenges Google's Gemini and has intensified the competition between the two tech giants.

For a while, the latest developments in large language models have become the hottest topic in the industry.

To borrow a line from an ancient poem, GPT-5 is "still half-hiding its face behind the lute." It is just unclear when it will finally "appear after countless calls."

Timeline Review

Today's topic is directly related to GPT-5: an analysis by our old friend Gary Marcus.

The core point fits in one sentence: going from GPT-4 to GPT-5 is not just a matter of scaling up the model, but a change in the entire AI paradigm. Seen this way, OpenAI, the company that developed GPT-4, will not necessarily be the first to reach GPT-5.

In other words, when the paradigm needs to change, previous accumulations may not be very transferable.

Before delving into Marcus's viewpoint, let's briefly review what has happened recently regarding the legendary GPT-5 and what the public discourse has been.

It started when OpenAI co-founder Andrej Karpathy tweeted that the H100 is a hot commodity chased by the tech giants, and that everyone wants to know who has them and how many.

This sparked a wave of discussion about how many H100 GPUs various companies would need for training.

The rough picture is as follows.

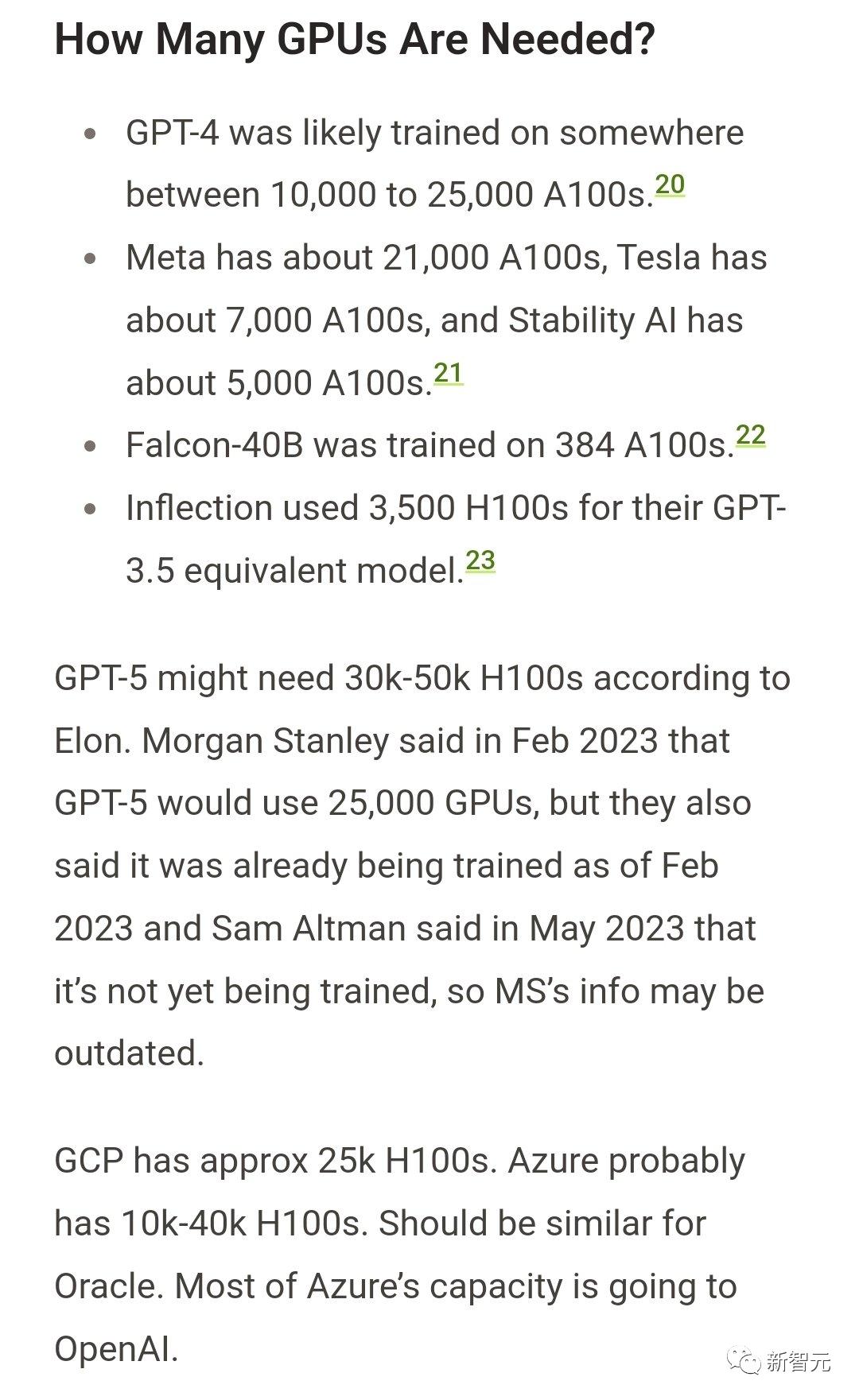

GPT-4 may have been trained on approximately 10,000-25,000 A100s

Meta has about 21,000 A100s

Tesla has about 7,000 A100s

Stability AI has about 5,000 A100s

Falcon-40B was trained on 384 A100s

Musk also joined the discussion; by his estimate, training GPT-5 might require 30,000 to 50,000 H100s.

Morgan Stanley had earlier made a similar prediction, though its figure, around 25,000 GPUs, is somewhat lower than Musk's.

Of course, once GPT-5 became a public talking point, Sam Altman inevitably came out to deny it, stating that OpenAI is not training GPT-5.

Some bold netizens speculated that OpenAI's denial might simply mean that the next-generation model has a different name and is not called GPT-5.

According to Sam Altman, it's precisely because of the insufficient number of GPUs that many plans have been delayed. He even expressed that he doesn't want too many people to use GPT-4.

The whole industry is starved for GPUs. According to statistics, the GPUs the tech giants need add up to about 430,000 units, worth a staggering sum of close to $15 billion.
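To put these numbers side by side, here is a rough back-of-the-envelope sketch. It derives an implied average price per GPU from the 430,000 units and $15 billion quoted above, then applies that price to the GPT-5 estimates attributed to Morgan Stanley and Musk. This rests on assumptions not in the original reports: that the $15 billion covers only the GPUs themselves, and that one average price applies to every unit. The names `implied_unit_price` and `hardware_cost` are illustrative only.

```python
# Figures quoted in the article; all are rough, publicly discussed estimates.
TOTAL_GPUS_DEMANDED = 430_000   # GPUs the tech giants reportedly need
TOTAL_COST_USD = 15e9           # "close to $15 billion"

# Implied average price per GPU (assumption: cost is hardware only).
implied_unit_price = TOTAL_COST_USD / TOTAL_GPUS_DEMANDED


def hardware_cost(gpu_count: int, unit_price: float = implied_unit_price) -> float:
    """Return the GPU purchase cost in USD for a cluster of `gpu_count` units."""
    return gpu_count * unit_price


# GPT-5 training estimates mentioned in the article.
estimates = {
    "Morgan Stanley (about 25,000 GPUs)": 25_000,
    "Musk, low end (30,000 H100s)": 30_000,
    "Musk, high end (50,000 H100s)": 50_000,
}

print(f"Implied price per GPU: ${implied_unit_price:,.0f}")
for label, count in estimates.items():
    print(f"{label}: ${hardware_cost(count) / 1e9:.2f} billion")
```

Under these assumptions the implied price is roughly $35,000 per GPU, which puts the hardware for the quoted GPT-5 estimates at somewhere between just under $1 billion and about $1.7 billion. The real figure would also depend on networking, power, and how long the cluster is used.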

But inferring GPT-5's training status from GPU usage is rather roundabout. DeepMind co-founder Suleyman was more direct: in an interview he "confirmed" that OpenAI is indeed secretly training GPT-5 and said they should not hide it.

Of course, in the complete interview, Suleyman also discussed a lot of industry gossip, such as why DeepMind is lagging behind in competition with OpenAI, even though they are not too far behind in terms of time.

There was also plenty of inside information, such as what happened when Google acquired DeepMind. But these details are not very relevant to GPT-5, and interested readers can look them up on their own.

In short, this round consisted of industry heavyweights discussing the latest on GPT-5, leaving everyone with questions.

After this, Sam Altman once again stated in a one-on-one livestream, "I think AGI will appear before 2030, called GPT-10, surpassing the total intelligence of all humans."

Bold predictions on one hand and denials of training GPT-5 on the other make it hard for outsiders to know what OpenAI is really doing.

In this livestream, Altman envisioned many future scenarios: how he understands AGI, when AGI will appear, what OpenAI will do when it does, and how humanity will respond.

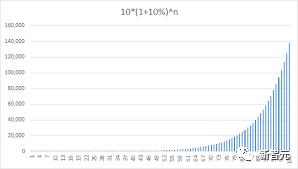

In terms of actual progress, however, Altman's plan is as follows: "I tell the employees in the company that our goal is to improve the performance of our prototype products by 10% every 12 months."

"If we set this goal to 20%, it might be a bit too high."

This is a concrete target, but how the 10% and 20% figures relate to GPT-5 is not clear.

The most valuable part is what follows: OpenAI's multimodal model, Gobi.

The key question is how far the intense competition between Google and OpenAI has progressed.

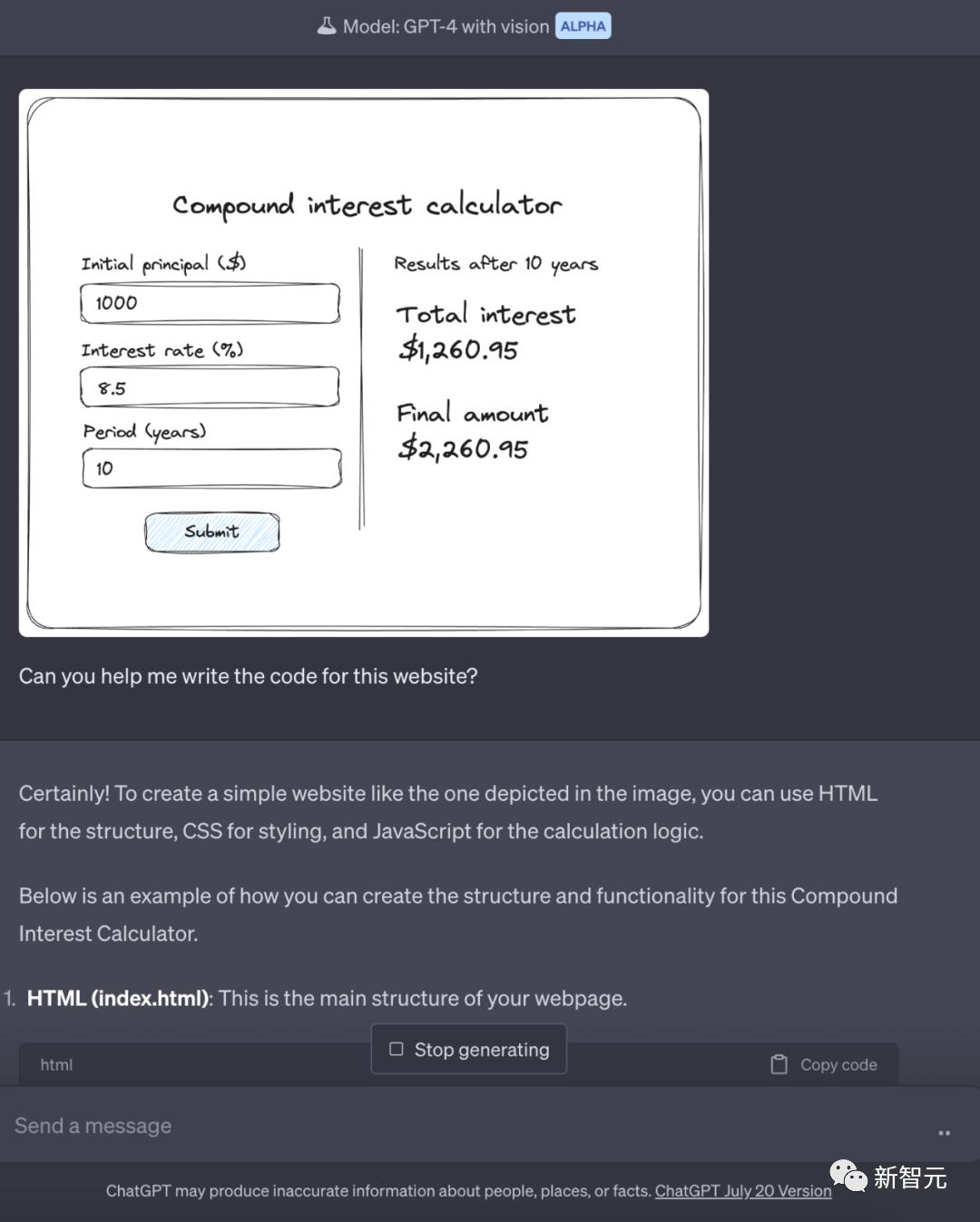

Before talking about Gobi, we need to mention GPT-vision. This generation of models is very powerful. Just take a photo of a rough sketch, send it to GPT, and it will generate the website for you within minutes.

As for writing code, it's even more impressive.

Only with GPT-vision complete can OpenAI launch a more powerful multimodal model, codenamed Gobi.

Unlike GPT-4, Gobi was built as a multimodal model from the beginning.

This has also piqued onlookers' interest: is Gobi the legendary GPT-5?

Of course, we don't know what stage Gobi's training has reached; there is no reliable information.

Suleyman remains convinced that Sam Altman may not have been telling the truth when he recently said OpenAI was not training GPT-5.

Marcus's Viewpoint

Marcus begins by stating that quite possibly no unreleased product in the history of technology (with the iPhone as a possible exception) has been more anticipated than GPT-5.

This is not only because of consumer enthusiasm, nor only because many companies plan to build businesses around it; even some foreign policy is being formulated around GPT-5.

In addition, the emergence of GPT-5 may exacerbate the recently escalated chip war.

Marcus notes that some people have singled out models of GPT-5's expected scale and called for their development to be temporarily halted.

Of course, there are also many optimistic people who imagine that GPT-5 may eliminate, or at least greatly reduce, many of the concerns people have about existing models, such as their unreliability, bias, and tendency to spout authoritative nonsense.

But Marcus says it has never been clear to him whether simply building a larger model can truly solve these problems.

Today, foreign media reported a leak that another OpenAI project, Arrakis, which aimed to create smaller and more efficient models, was canceled by top management after failing to meet its goals.

Marcus notes that almost everyone expected GPT-5 to launch soon after GPT-4 and to be much more powerful, so Sam's initial denial surprised everyone.

There has been much speculation about the reason. One theory, besides the GPU shortage mentioned earlier, is that OpenAI may not have enough cash to train these models (whose training costs are notoriously high).

But then again, OpenAI is about as well funded as any startup. For a company that has just raised $10 billion, spending $500 million on training is not out of the question.

Another argument is that OpenAI realizes that the cost of training and running the models will be very high, and they are not sure if they can be profitable at these costs.

This argument seems to make some sense.

The third argument, which is also Marcus's view, is that by the time of Altman's speech in May, OpenAI had already run some proof-of-concept tests and was not satisfied with the results.

Their conclusion might be this: If GPT-5 is just an enlarged version of GPT-4, then it will not meet expectations and will fall far short of the intended goals.

If the result would be merely disappointing, or even a joke, then training GPT-5 is not worth hundreds of millions of dollars.

In fact, LeCun also thinks along these lines.

GPT-5 is not just "GPT-4 plus"; the transition from 4 to 5 should be revolutionary.

What is needed here is a completely new paradigm, not just scaling up the model.

For a paradigm change, of course, a richer company is more likely to succeed. The difference is that the winner will not necessarily be OpenAI, because a paradigm change opens a completely new track where past experience or accumulated advantage may count for little.

Likewise, from an economic perspective, if Marcus is right, the development of GPT-5 has effectively been postponed indefinitely. No one knows when the new technology will arrive.

It is like today's electric vehicles, which generally have a range of a few hundred kilometers; reaching a range of over a thousand requires a completely new battery technology. Who breaks through with the new technology often depends not only on experience and funding but also on a bit of luck and timing.

Either way, if Marcus is right, the commercial value tied to GPT-5 is likely to be greatly reduced.

Reference: Gary Marcus's Substack
