Article Source: Light Cone Intelligence

Author: Hao Xin

Editor: Liu Yuqi

In early June, foreign media raised the question "Who is China's OpenAI?" Now that the wave of large model startups has crested and the dust has settled, only a few contenders remain.

The SAIL building, a few intersections away from Tsinghua University, is the base of Wang Xiaochuan's Baichuan Intelligence, while the Sohu Network Building is the base of the academically rooted Zhipu AI. Having been tested by the market, these two have emerged as the most promising candidates.

The battle between the two buildings seems to have quietly begun.

In terms of financing, Zhipu AI and Baichuan Intelligence have both completed multiple large financing rounds this year.

(Light Cone Intelligence: Compiled based on public information)

This year, Zhipu AI has raised more than 2.5 billion RMB in total, while Baichuan Intelligence has raised 350 million USD (approximately 2.3 billion RMB). Public information shows that Zhipu AI's latest valuation has exceeded 10 billion RMB and may have reached 15 billion, making it one of the fastest companies in China to pass the 10 billion RMB mark; after its latest round of financing, Baichuan Intelligence's valuation exceeded 1 billion USD (approximately 6.6 billion RMB).

In terms of team composition, Zhipu AI and Baichuan Intelligence share the same roots: Zhipu AI's president Wang Shaolan and Sogou founder Wang Xiaochuan both come from Tsinghua's entrepreneurial circle.

In terms of technology, there is little to separate the two. Zhipu AI's GLM-130B outperformed GPT-3 at launch, and the newly released Baichuan 2 outperformed Llama 2 in various respects, helping pioneer China's open-source ecosystem.

All signs indicate that Zhipu AI and Baichuan Intelligence have become the "dark horses" of China's large model race. In this fierce competition, who will ultimately prevail?

A Believer in OpenAI: Zhipu AI

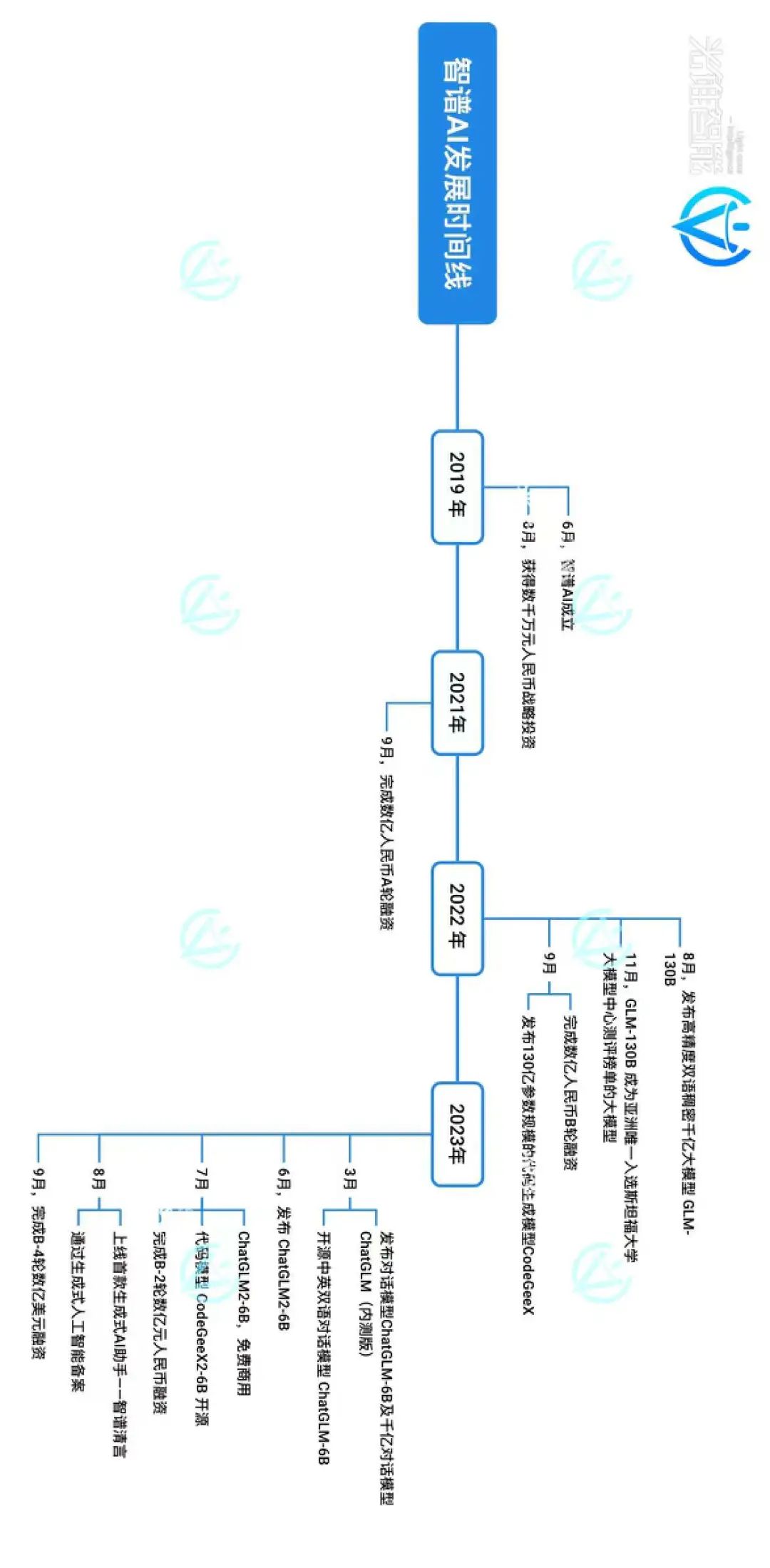

The connection between Zhipu AI and OpenAI can be traced back to 2020, a year that Zhipu AI CEO Zhang Peng regards as the "year of the true birth of AI large language models."

On Zhipu AI's anniversary, amid the joyful atmosphere, one could sense a hint of anxiety brought on by the arrival of GPT-3. With 175 billion parameters, GPT-3 was, strictly speaking, the first large language model.

At that time, Zhang Peng was both shocked by GPT-3 and torn over whether to follow suit. Then as now, going all in on ultra-large-scale models is an extremely risky endeavor. After careful consideration, Zhipu AI decided to take OpenAI as its benchmark and invest in developing ultra-large-scale pre-trained models.

(Light Cone Intelligence: Compiled based on public information)

In choosing a technological path, Zhipu AI, like OpenAI, did its own independent thinking.

At that time, there were three major frameworks for large model pre-training: BERT, GPT, and T5. Each of the three paths had its own advantages and disadvantages in terms of training objectives, model structure, training data sources, and model size.

If the process of training large models is likened to an English exam, BERT excels at answering questions by understanding the relationships between words and sentences, with its review materials mainly sourced from textbooks and Wikipedia; GPT excels at answering questions by predicting the next word, preparing for the exam through extensive writing exercises, with its review materials coming from various web pages; T5 adopts a strategy of formalizing the questions, translating the questions into Chinese before answering them, and during review, not only reading textbooks but also practicing a large number of question sets.

As is well known, Google chose BERT and OpenAI chose GPT. Zhipu AI did not blindly follow either, but proposed the GLM (General Language Model) algorithm framework based on these two paths. The framework balances the complementary strengths and weaknesses of BERT and GPT, "being able to understand while also being able to complete writing and fill in the blanks".
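
The "fill in the blanks" idea can be illustrated with a minimal sketch of how a blank infilling training example might be assembled. This is a simplified illustration, not Zhipu AI's actual code: the function name and the special token strings are assumptions made for this example, and details of the real objective such as span sampling and span shuffling are left out. The corrupted text (Part A) is the part the model reads in full, BERT-style, while the blanked-out spans (Part B) are generated left to right, GPT-style.

```python
from typing import List, Tuple

# Illustrative special tokens (assumed names for this sketch).
MASK, START, END = "[MASK]", "[START]", "[END]"


def make_blank_infilling_example(
    tokens: List[str], spans: List[Tuple[int, int]]
) -> Tuple[List[str], List[str], List[str]]:
    """Build one simplified blank infilling example.

    `spans` must be sorted, non-overlapping, half-open (start, end) index pairs.
    Returns (part_a, part_b_inputs, part_b_targets):
      - part_a: the text with each span replaced by a single [MASK]
      - part_b_inputs: each span prefixed with [START] (what the model is fed)
      - part_b_targets: each span followed by [END] (what the model must predict)
    """
    part_a: List[str] = []
    part_b_inputs: List[str] = []
    part_b_targets: List[str] = []
    cursor = 0
    for start, end in spans:
        part_a.extend(tokens[cursor:start])  # keep the text before the blank
        part_a.append(MASK)                  # one [MASK] stands for the whole span
        span = tokens[start:end]
        part_b_inputs.extend([START] + span)
        part_b_targets.extend(span + [END])
        cursor = end
    part_a.extend(tokens[cursor:])           # keep the text after the last blank
    return part_a, part_b_inputs, part_b_targets


if __name__ == "__main__":
    words = "the cat sat on the mat".split()
    a, b_in, b_out = make_blank_infilling_example(words, [(1, 2), (4, 6)])
    print(a)      # text with blanks
    print(b_in)   # generation inputs
    print(b_out)  # generation targets
```

Blanking a short span makes the task look like BERT-style cloze filling, while blanking a long span at the end of the text makes it look like GPT-style continuation, which is the sense in which one framework can cover both.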

As a result, GLM has become Zhipu AI's greatest source of confidence in chasing OpenAI. Under this framework, the GLM series of large models, including GLM-130B, ChatGLM-6B, and ChatGLM2-6B, has been developed in succession. Experimental data shows that the GLM series outperforms GPT in language comprehension accuracy, inference speed, memory usage, and adaptability in applications.

(Image source: Internet)

OpenAI is currently the most comprehensive provider of foundation model services outside China, and its commercialization falls into two main categories: API usage fees and ChatGPT subscription fees. Zhipu AI has followed a similar approach and is among the more mature companies in China in commercializing large models.

According to Light Cone Intelligence's findings, and reflecting how Chinese enterprises actually deploy models, Zhipu AI's business model is divided into API usage fees and private deployment fees.

Overall, the models on offer include large language models, ultra-humanlike large models, vector (embedding) large models, and code large models, each available with standard pricing, cloud-based private deployment pricing, and on-premises private deployment pricing. Compared to OpenAI, Zhipu AI lacks speech and image large model services, but has added ultra-humanlike large models to serve Chinese industries such as digital humans and intelligent NPCs.

(Light Cone Intelligence: Compiled based on public information)

According to feedback from developers, "Currently, Baidu Wenxin Qianfan's platform is comprehensive, and Tongyi Qianwen's platform is flexible, while Zhipu AI is one of the companies with the cheapest API call charges in the mainstream market."

Zhipu AI's ChatGLM-Pro is charged at 0.01 RMB per thousand tokens, with a free quota of 18 RMB, while ChatGLM-Lite is charged at a reduced rate of 0.002 RMB per thousand tokens. For reference, OpenAI GPT-3.5 is charged at 0.014 RMB per thousand tokens, Alibaba Tongyi Qianwen-turbo at 0.012 RMB per thousand tokens, and Baidu Wenxin Yiyan-turbo at a standard rate of 0.008 RMB per thousand tokens.
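
To make these rates easier to compare, the following is a small illustrative calculation using only the per-thousand-token prices quoted above. The dictionary keys and function names are made up for this sketch, the prices are a snapshot from the article rather than live rates, and real bills may differ (for example, if input and output tokens are priced differently).

```python
from decimal import Decimal

# RMB per 1,000 tokens, as quoted in the article (snapshot, not live pricing).
PRICE_PER_1K_TOKENS_RMB = {
    "ChatGLM-Pro": Decimal("0.01"),
    "ChatGLM-Lite": Decimal("0.002"),
    "OpenAI GPT-3.5": Decimal("0.014"),
    "Tongyi Qianwen-turbo": Decimal("0.012"),
    "Wenxin Yiyan-turbo": Decimal("0.008"),
}


def cost_rmb(model: str, tokens: int) -> Decimal:
    """Cost in RMB of processing `tokens` tokens at the quoted flat rate."""
    return PRICE_PER_1K_TOKENS_RMB[model] * Decimal(tokens) / Decimal(1000)


def tokens_covered_by_quota(model: str, quota_rmb: Decimal) -> int:
    """How many tokens a free quota (in RMB) would cover at the quoted rate."""
    return int(quota_rmb / PRICE_PER_1K_TOKENS_RMB[model] * 1000)


if __name__ == "__main__":
    # Compare the cost of one million tokens, cheapest first.
    for name in sorted(PRICE_PER_1K_TOKENS_RMB, key=PRICE_PER_1K_TOKENS_RMB.get):
        print(f"{name}: {cost_rmb(name, 1_000_000)} RMB per million tokens")
    print(tokens_covered_by_quota("ChatGLM-Pro", Decimal("18")), "tokens in the free quota")
```

At these rates, the 18 RMB free quota on ChatGLM-Pro corresponds to 1.8 million tokens.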

As Zhang Peng has said, Zhipu AI is also entering a new stage, moving from benchmarking against OpenAI to "no longer following OpenAI".

On the product side, unlike OpenAI, which focuses solely on upgrading ChatGPT, Zhipu AI has chosen a three-pronged approach.

According to its official website, Zhipu AI's business currently consists of three major blocks: the large model MaaS platform, the AMiner technology intelligence platform, and cognitive digital humans. Together they form an AI product matrix of large model products, AMiner products, and digital human products. The large model products cover not only basic chatbots, but also bots for programming, writing, and drawing.

(Image source: Zhipu AI official website)

Meanwhile, Zhipu AI continues to explore the application side through investments. So far it has invested in Lingxin Intelligence and Huabi Intelligence, and in September of this year it increased its stake in Lingxin Intelligence.

Lingxin Intelligence, also incubated in Tsinghua University's Department of Computer Science, shares its origins with Zhipu AI but leans more towards applications. Its AiU interactive interest community is built on Zhipu AI's ultra-humanlike large model. Its product approach resembles that of Character AI abroad: creating AI characters with different personalities for users to chat with, with a consumer focus and an emphasis on entertainment.

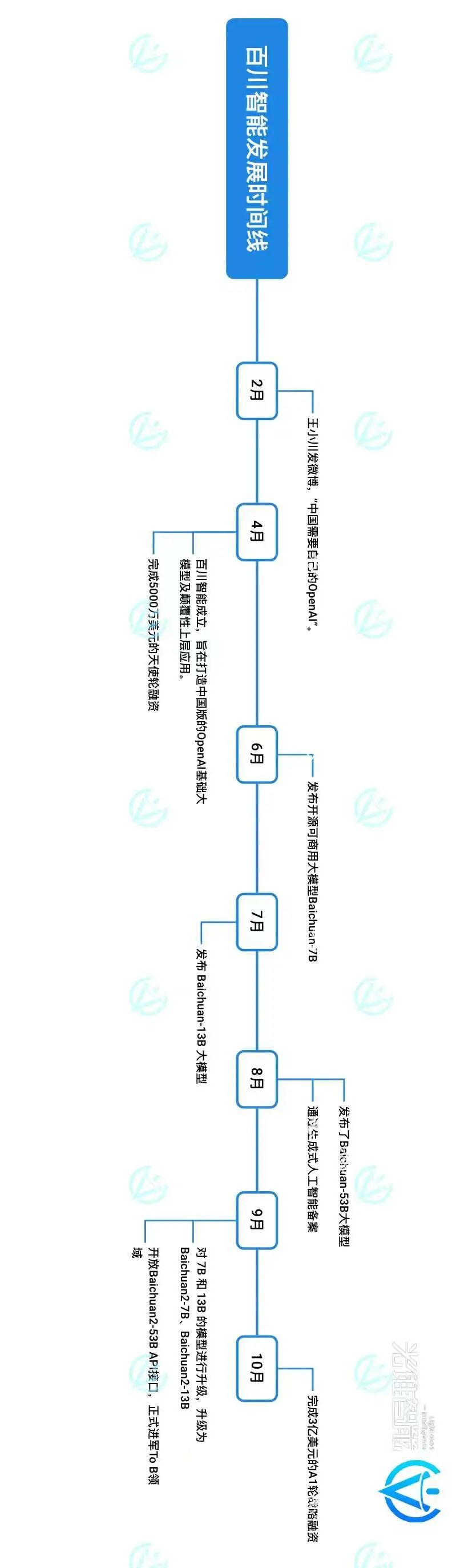

Transitioning from OpenAI to Llama: Baichuan Intelligence

Light Cone Intelligence found that, compared to OpenAI, Baichuan Intelligence is more like Llama.

First, building on its existing technology and experience, it releases and iterates very quickly.

Within six months of its founding, Baichuan Intelligence released four free-to-use open-source large models (baichuan-7B/13B and Baichuan2-7B/13B) and two closed-source large models (Baichuan-53B and Baichuan2-53B). With the opening of the Baichuan2-53B API on September 25th, it had released six large models in 168 days, an average of roughly one a month.

(Light Cone Intelligence: Compiled based on public information)

Meta regained its standing in AI with Llama 2, and Baichuan Intelligence made its name with its open-source Baichuan2 series.

Test results show that Baichuan2-7B-Base and Baichuan2-13B-Base hold a decisive lead over Llama 2 on major authoritative benchmarks such as MMLU, CMMLU, and GSM8K. They also perform very well against other large models of the same parameter size.

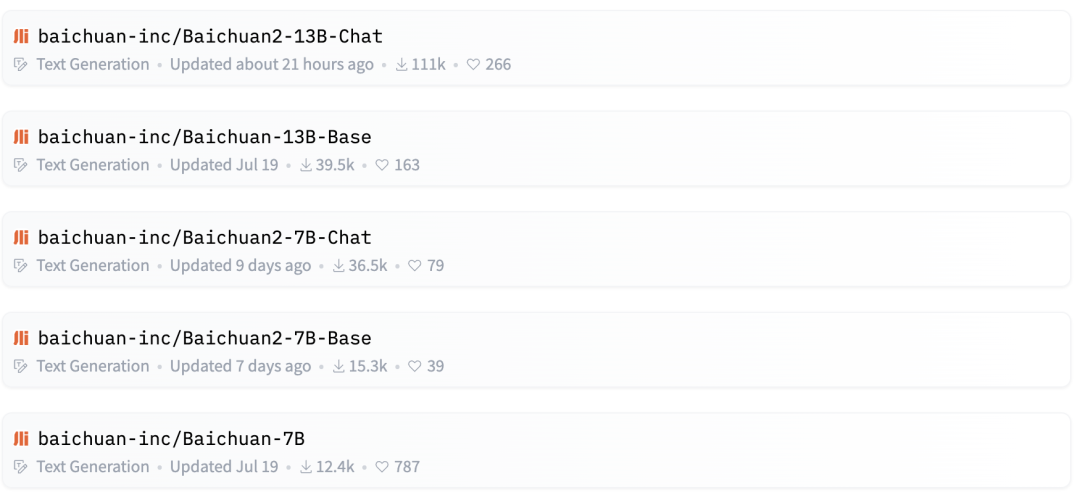

In fact, Baichuan Intelligence's large models have indeed stood the test. According to official data, Baichuan's total download volume in the open-source community has exceeded 5 million, with a monthly download volume of over 3 million.

Light Cone Intelligence found that the Baichuan series models have more than 110,000 downloads in the Hugging Face open-source community, a competitive figure among open-source large models from China and abroad.

(Image source: Hugging Face official website)

The strength of its open-source models is also tied to their strong compatibility. Baichuan Intelligence has said publicly that the base architecture of its large models is close to Meta's Llama architecture, which by design makes them friendlier to enterprises and vendors.

"After open-sourcing, the ecosystem will be built around Llama, and there are many open-source projects abroad that are driven by Llama, which is why our structure is more similar to Llama," said Wang Xiaochuan.

According to Light Cone Intelligence, Baichuan Intelligence has adopted a hot-pluggable architecture that supports free switching between Baichuan models and Llama models, as well as between different modules within Baichuan models. For example, a model trained with Llama can be used directly in Baichuan without modification. This also helps explain why most internet giants use Baichuan models and why cloud providers have added the Baichuan series.

The path traveled by history leads not only to the past but also to the future, and Wang Xiaochuan's large model entrepreneurship is no exception.

Because he founded Sogou and has deep experience in search technology, Wang Xiaochuan often heard the same assessment in the early days of his startup: "Xiaochuan is the most suitable for developing large models."

Building large models within the framework of search became the foundation of Baichuan Intelligence.

Chen Weipeng, co-founder of Baichuan Intelligence, once said that search development and large model development have much in common. "Baichuan Intelligence quickly transferred its search experience to large model development, which resembles the systems engineering of 'building rockets': breaking down a complex system and using process evaluation to drive team collaboration and improve team effectiveness."

Wang Xiaochuan also said at a press conference, "Because Baichuan Intelligence has search in its genes, it naturally understands how to select the best pages from billions of web pages, and can handle deduplication and anti-spam. In data processing, Baichuan Intelligence also draws on its search experience and can clean and deduplicate hundreds of billions of pieces of data within hours."
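
For readers unfamiliar with the term, deduplication in its simplest form can be sketched as follows. This is only a toy illustration of exact-match deduplication after light text normalization; it is not Baichuan Intelligence's pipeline, whose details are not public in this article, and production systems working at the scale described would typically rely on distributed processing and near-duplicate detection instead. The function names are made up for this example.

```python
import hashlib
import re
from typing import Iterable, Iterator


def normalize(text: str) -> str:
    """Collapse whitespace and lowercase so trivially different copies match."""
    return re.sub(r"\s+", " ", text).strip().lower()


def dedupe(docs: Iterable[str]) -> Iterator[str]:
    """Yield each document whose normalized content has not been seen before."""
    seen = set()
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            yield doc  # keep the first copy, drop later duplicates


if __name__ == "__main__":
    pages = ["Hello  world", "hello world", "Other page"]
    print(list(dedupe(pages)))
```

Storing fixed-size hashes instead of full documents keeps memory use predictable, which is one reason this pattern is a common starting point for cleaning web-scale text.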

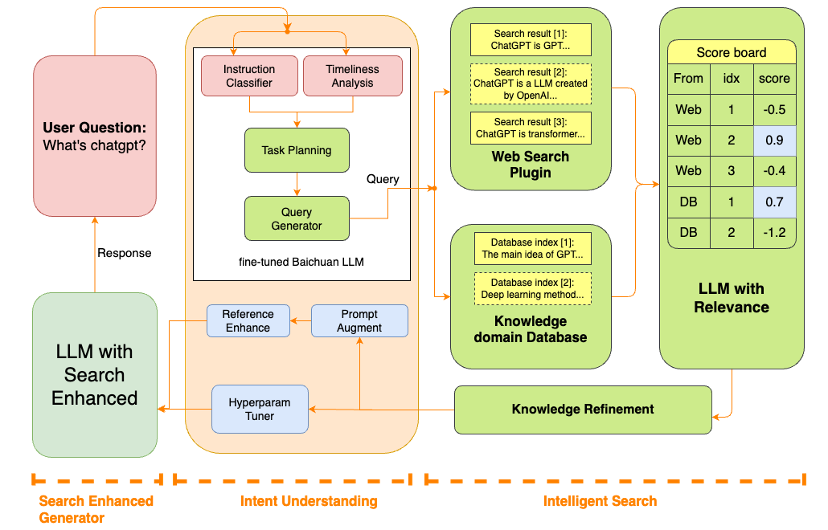

The search core of its large models is on full display in Baichuan-53B. To address the "hallucination" problem of large models, Baichuan Intelligence has drawn on its accumulated search expertise to optimize information retrieval, data quality, and search enhancement.

To improve data quality, Baichuan Intelligence's core approach is to "always choose the best": it classifies data as low-quality or high-quality so that Baichuan2-53B is always pre-trained on high-quality data. For information retrieval, Baichuan2-53B has upgraded multiple modules, including instruction intent understanding, intelligent search, and result enhancement. It drives queries through a deep understanding of user instructions and combines this with large language model technology to make generated results more reliable.

Although it started with open source, Baichuan Intelligence has begun to explore paths to commercialization. Official information shows two goals: horizontally, to "build the best large model base in China", and vertically, to strengthen its capabilities in search, multimodality, education, and healthcare.

Commercialization today centers on Baichuan2-53B. According to the official website, the model's API uses time-of-day pricing: 0.01 RMB per thousand tokens from 0:00 to 8:00, and 0.02 RMB per thousand tokens from 8:00 to 24:00, so daytime calls cost twice as much as nighttime calls.
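
The effect of this time-based pricing can be sketched with a short calculation. The two rates and time windows come from the figures above; everything else is an assumption for illustration, including the function names and the idea that a call is billed by the hour in which it starts, which the article does not specify.

```python
from decimal import Decimal

OFF_PEAK_RMB_PER_1K = Decimal("0.01")  # 0:00 to 8:00, as quoted above
PEAK_RMB_PER_1K = Decimal("0.02")      # 8:00 to 24:00, as quoted above


def price_per_1k_tokens(hour: int) -> Decimal:
    """Quoted rate for a call starting in the given hour (0-23).

    Billing by start hour is an assumption made for this sketch.
    """
    if not 0 <= hour <= 23:
        raise ValueError("hour must be between 0 and 23")
    return OFF_PEAK_RMB_PER_1K if hour < 8 else PEAK_RMB_PER_1K


def call_cost_rmb(tokens: int, hour: int) -> Decimal:
    """Cost in RMB of processing `tokens` tokens at the rate for `hour`."""
    return price_per_1k_tokens(hour) * Decimal(tokens) / Decimal(1000)


if __name__ == "__main__":
    print(call_cost_rmb(500_000, 3))   # a batch job run overnight
    print(call_cost_rmb(500_000, 14))  # the same job run in the afternoon
```

Under these assumptions, a workload that can wait, such as offline batch processing, costs half as much if it is scheduled before 8:00.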

Conclusion

In the early stages of large model development, the debate over who is China's OpenAI carried little significance. Many startups, including Zhipu AI and Baichuan Intelligence, have realized that blindly following in OpenAI's footsteps is not advisable. Zhipu AI, for example, has explicitly defined its technological path as "not being China's GPT". Moreover, with open source now the dominant trend, OpenAI's absolute technological advantage no longer seems unassailable.

Zhipu AI and Baichuan Intelligence have both said that super applications are a broader market and the comfort zone for Chinese large model companies. Neither is standing still. A source close to Zhipu AI once told the media that the team has firmly committed to the B2B route, targeting the creative industry market, and rapidly expanded from 200 to 500 people in five months to prepare for future B2B business.

Baichuan Intelligence, for its part, has chosen to follow the Llama 2 open-source ecosystem and has begun to iterate.

Within just half a year, Baichuan Intelligence and Zhipu AI have visibly crossed the technological wilderness and reached a commercialization stage aimed at industrial deployment. By contrast, in the AI 1.0 startup boom the technology refinement period lasted three years (2016-2019), and obstacles to commercial deployment led a large number of AI companies to decline collectively in 2022, falling just before dawn.

Having learned the lessons of the previous stage, and helped by the general applicability of large model technology, which makes deployment easier, startups represented by Baichuan Intelligence and Zhipu AI are building up technology, products, and talent for the next stage.

However, the starting gun for this marathon has only just fired, and it is still too early to call the outcome. At least the first leg of the race has been completed and the goals are clear; from here the competition is more about patience and perseverance. This applies to Baichuan Intelligence, Zhipu AI, and OpenAI alike.
