Title: Text to CAD: The Emergence of AI-Driven 3D Modeling

Source: The Gradient

Image Source: Generated by Wujie AI tool

The dust has yet to settle on AI-driven text-to-image generation, but the results are already clear: a tidal wave of low-quality images. There are some high-quality images too, of course, but they do not make up for the damage to the signal-to-noise ratio: for every artist who benefits from a Midjourney-generated album cover, fifty people are fooled by a Midjourney-generated deepfake. In a world where a falling signal-to-noise ratio is the root of many ills (think scientific research, journalism, government accountability), this is not a good thing.

It is now necessary to be skeptical of every image. (Admittedly, this has been true for a long time, but as deepfake incidents multiply, our vigilance has to rise accordingly, which is not only unpleasant but also cognitively taxing.) Constant suspicion, or frequent deception, seems a high price to pay for a digital trinket nobody asked for, and so far it has brought no benefit. One can only hope, or more accurately pray, that the cost-benefit ratio soon becomes reasonable.

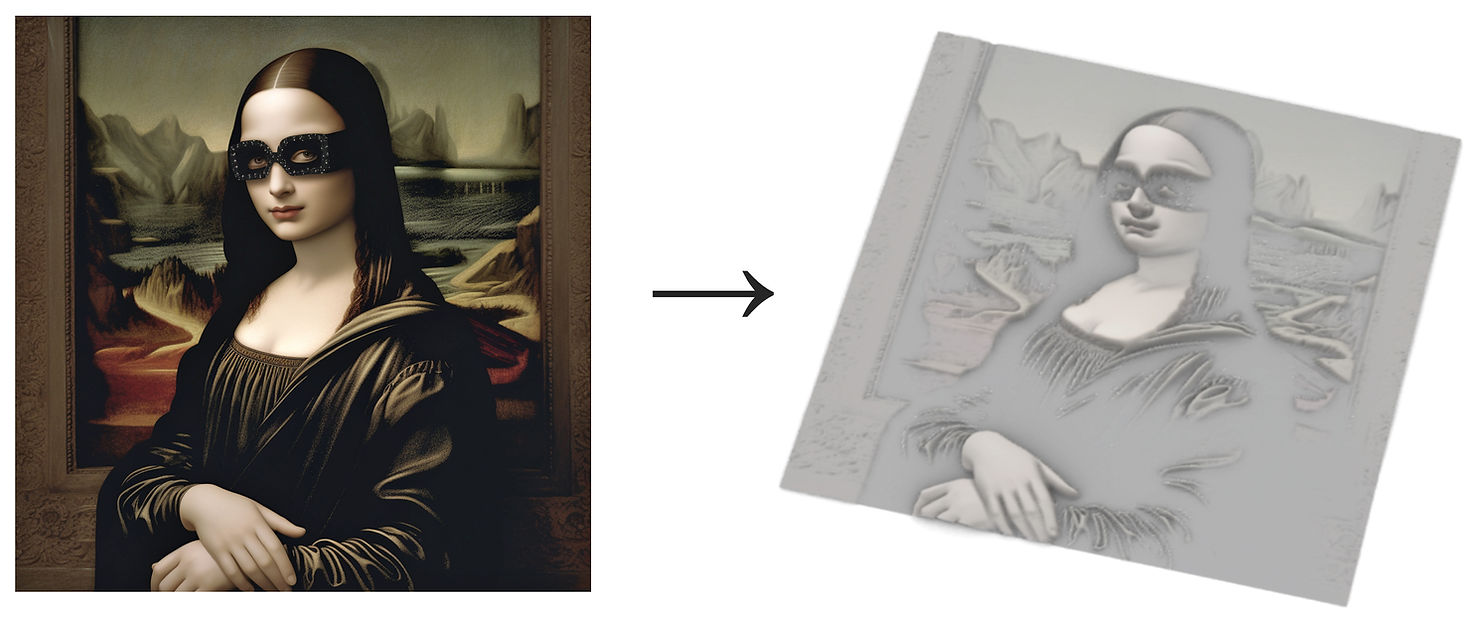

At the same time, a new development in generative AI deserves attention: AI-driven text-to-CAD generation. The premise is similar to that of text-to-image programs, except that instead of an image, the program returns a three-dimensional CAD model.

Given the prompt "Mona Lisa, but wearing Balenciaga," the AI returns a 3D model rather than an image.

Some definitions are in order. Computer-aided design (CAD) refers to software tools that let users create digital models of physical objects, such as cups, cars, and bridges. (Models in the CAD sense are unrelated to deep learning models; Toyota Camry ≠ recurrent neural network.) CAD matters, though: try to recall the last time you saw a manufactured object that was not designed in CAD.

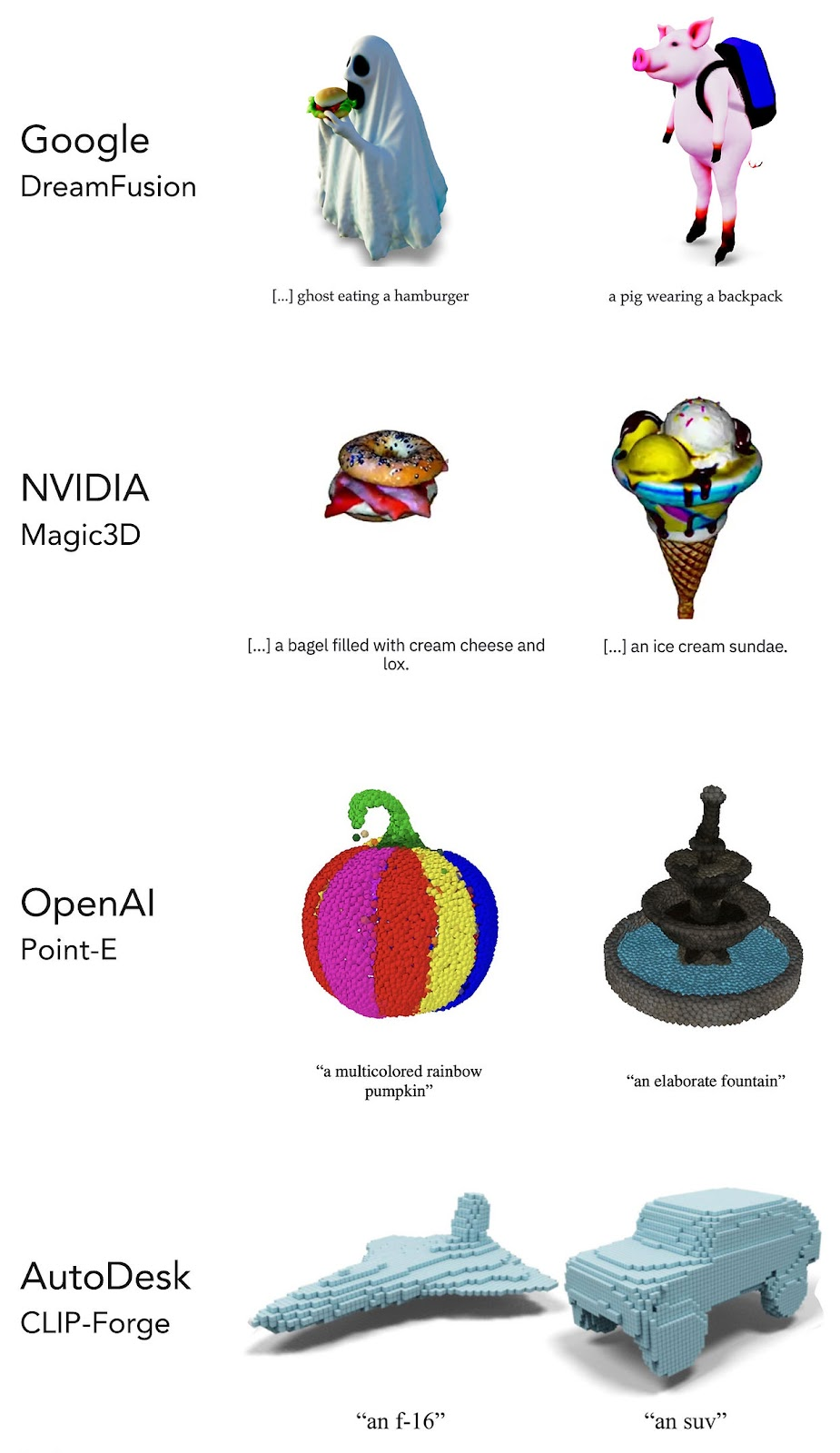

With that out of the way, let's look at the major players that have entered the text-to-CAD space: Autodesk (CLIP-Forge), Google (DreamFusion), OpenAI (Point-E), and Nvidia (Magic3D). Below are examples from each company:

The presence of these giants has not deterred startups, which as of early 2023 were appearing at a rate of nearly one per month; CSM and Sloyd may be the most promising.

There are also some wonderful tools that could be called 2.5D, because their output lies somewhere between 2D and 3D. The idea is that the user uploads an image, and the AI infers how that image would look in three-dimensional space.

This "greed cup" uses AI to turn an image of SBF (Sam Bankman-Fried, depicted as a wolf in sheep's clothing and a Pied Piper) into a relief. (Image Source: Reggie Raye/TOMO)

Undoubtedly, the open-source animation and modeling platform Blender is a leader in this area. The CAD modeling software Rhino also now has plugins, such as SurfaceRelief and Ambrosinus Toolkit, that generate 3D depth maps from ordinary images.
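One plausible way to picture the 2.5D workflow is as two stages: an AI model estimates a depth map from the image, and that depth map is then turned into geometry. Below is a minimal sketch of the second, deterministic stage only, assuming the depth map has already been produced and normalized to [0, 1] (1 = nearest). The function `depth_to_relief` and its `max_height`/`base` parameters are hypothetical and are not the API of Blender or of any Rhino plugin.

```python
def depth_to_relief(depth, max_height=5.0, base=1.0):
    """Convert a depth map (rows of floats in [0, 1], 1 = nearest) into a
    heightfield mesh: one vertex per pixel and two triangles per grid cell.

    Returns (vertices, faces), where vertices are (x, y, z) tuples in pixel
    units for x/y and faces are triples of vertex indices.
    """
    rows, cols = len(depth), len(depth[0])
    # Each pixel becomes a vertex raised above a flat base by its depth value.
    vertices = [
        (float(x), float(y), base + max_height * depth[y][x])
        for y in range(rows)
        for x in range(cols)
    ]
    faces = []
    for y in range(rows - 1):
        for x in range(cols - 1):
            i = y * cols + x  # index of the cell's top-left vertex
            # Split the cell into two triangles with the same winding,
            # so both face normals point toward +z.
            faces.append((i, i + 1, i + cols))
            faces.append((i + 1, i + cols + 1, i + cols))
    return vertices, faces


# A tiny 2x2 depth map, purely for illustration.
verts, faces = depth_to_relief([[0.0, 1.0], [0.5, 0.0]], max_height=4.0, base=1.0)
```

The sketch emits only the top surface; a printable relief like the cup above would also need side walls and a bottom face before export to a format such as STL.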

First, it should be said that all of this is exciting. As a CAD designer, I eagerly look forward to these potential benefits, and many others, such as engineers, 3D printing enthusiasts, and video game designers, stand to benefit too.

However, text-to-CAD also has drawbacks, many of them serious. Briefly, they include:

- Opening the door to mass production of weapons, racist content, or other harmful material

- Triggering a wave of junk models that then pollutes model repositories

- Infringing on the rights of copyrighted content creators

Whether we like it or not, text-to-CAD is coming. Fortunately, technologists can take steps to improve these programs' output and reduce their negative impact. We have identified three key areas where such programs can be improved: dataset curation, usability pattern language, and filtering.

As far as we know, none of these areas has been explored in the context of text-to-CAD. Usability pattern language will receive special attention, because it has the potential to improve output significantly. Notably, this potential is not limited to CAD: it could improve results in most domains of generative AI (such as text and images).

Dataset Curation

Passive Curation

Although not all text-to-CAD methods rely on training sets of 3D models (Google's DreamFusion is an exception), training on a curated dataset of models is still the most common approach. Needless to say, the key here is curating an excellent set of models to train on.

There are two keys to achieving this. First, technologists should avoid the obvious model sources: Thingiverse, Cults3D, MyMiniFactory. Although they host some high-quality models, the vast majority are junk. (The Reddit thread "Why is Thingiverse so bad?" illustrates the issue.) Instead, one should seek out exceptionally high-quality model libraries (Scan the World may be the best in the world).

Second, models can be weighted by quality. Master of Fine Arts (MFA) students would likely jump at the chance to do this kind of labeling, and, given the inequities of the labor market, for very little pay.
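Weighting by quality could be as simple as turning labelers' ratings into sampling probabilities, so that excellent models are seen more often during training and poor ones not at all. A minimal sketch, assuming ratings on a 1 to 5 scale; `LabeledModel`, `sampling_weights`, `min_score`, and `exponent` are hypothetical names, and the file paths and scores are made up.

```python
import random
from dataclasses import dataclass


@dataclass
class LabeledModel:
    path: str             # hypothetical location of a 3D model file
    ratings: list[float]  # labelers' quality scores, assumed to be on a 1-5 scale


def sampling_weights(models, min_score=2.0, exponent=2.0):
    """Turn mean labeler ratings into normalized sampling weights.

    Models whose mean rating is below min_score get weight 0 (excluded);
    the rest are weighted by mean ** exponent, so top-rated models are
    drawn disproportionately often during training.
    """
    raw = []
    for m in models:
        mean = sum(m.ratings) / len(m.ratings)
        raw.append(mean ** exponent if mean >= min_score else 0.0)
    total = sum(raw)
    if total == 0:
        raise ValueError("no model meets the quality threshold")
    return [w / total for w in raw]


def sample_batch(models, k, rng=None):
    """Draw k models (with replacement) in proportion to their quality weights."""
    rng = rng or random.Random()
    return rng.choices(models, weights=sampling_weights(models), k=k)


# Illustrative data only; paths and ratings are invented.
corpus = [
    LabeledModel("scans/chair.stl", [5, 5, 4]),
    LabeledModel("misc/bracket.stl", [3, 3]),
    LabeledModel("misc/broken_mesh.stl", [1, 2]),
]
weights = sampling_weights(corpus)
batch = sample_batch(corpus, k=100, rng=random.Random(0))
```

Squaring the mean rating is one arbitrary way to favor the best models; a hard threshold alone would treat a 2.1 and a 5.0 identically.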

Active Curation

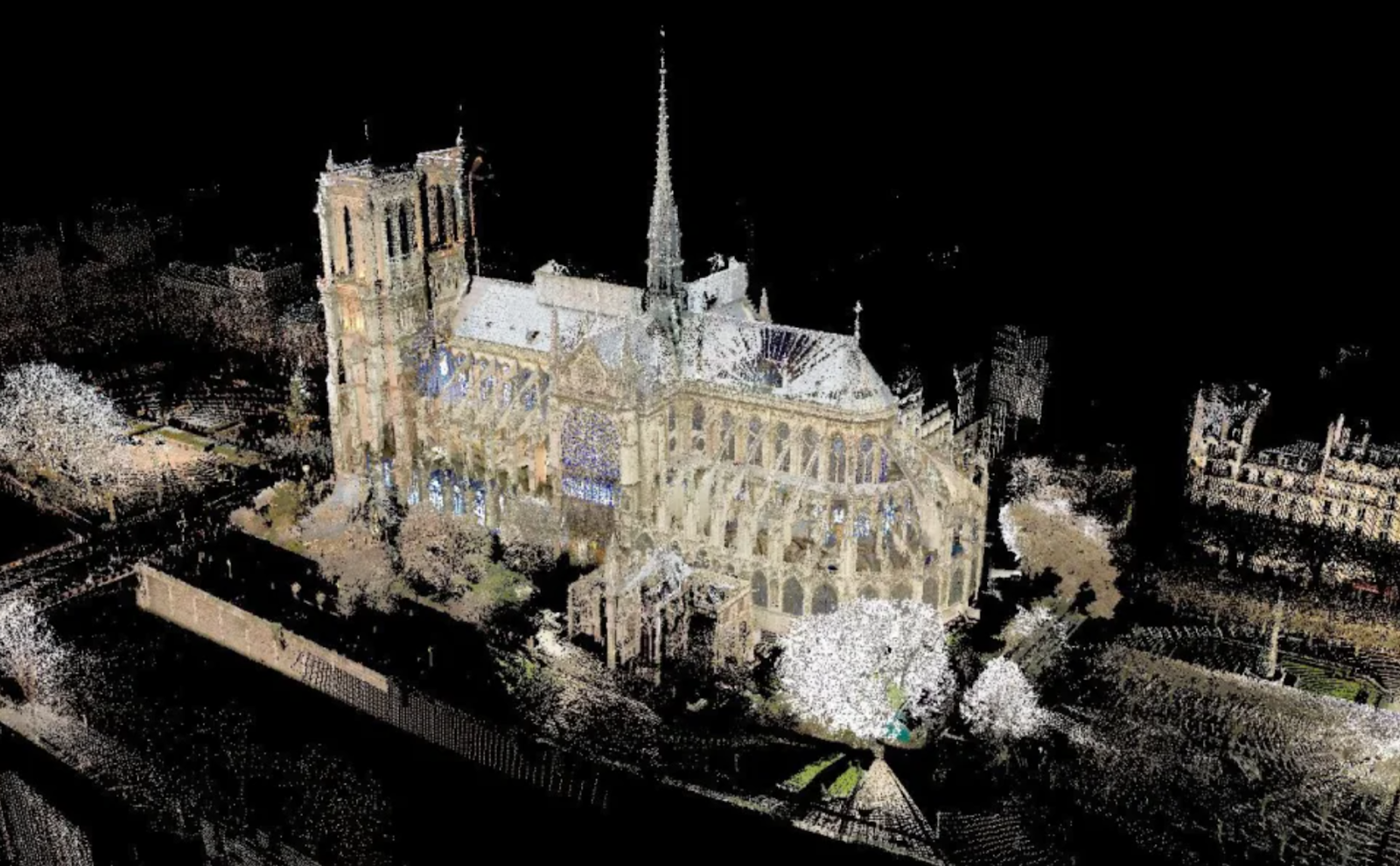

Curation can and should play a more active role. Many museums, private collections, and design firms would be willing to have their industrial design collections 3D scanned. Besides yielding a rich corpus, scanning also creates a durable record of our fragile cultural artifacts.

After the fire, the French were able to rebuild Notre-Dame with the help of an American's 3D scans of the cathedral. Image Source: Andrew Tallon/Vassar College

Enriched Data

In creating a high-quality corpus, technologists must think carefully about what they want the data to do. At first glance, the primary use case might seem to be "empowering hardware company managers to move a few sliders, output the desired product blueprint, and send it to production." However, the history of failed mass-customization efforts suggests this approach is likely to fail.

We believe a more productive use case is "empowering domain experts, such as industrial designers at product design firms, to prompt the program until they get the desired output, then fine-tune it, and finally finish it."

Such use cases require things that may not be obvious at first. For example, domain experts need to be able to upload images of reference products, as in Midjourney, and tag them with their target attributes: style, material, dynamics, and so on. A faceted approach, in which experts pick a style type, material type, and so on from drop-down menus, may look tempting here. But experience suggests that building attribute buckets by manually enriching the dataset is a mistake: the music streaming service Pandora took this manual approach and was ultimately beaten by Spotify, which relied on neural networks.

Harvesting

Within the narrow field of dataset curation, almost no one has done any work (with a few exceptions), so there is a great deal to gain. This should be the top priority for companies and entrepreneurs seeking a competitive edge in the text-to-CAD wars. A large, enriched dataset is hard to build and hard to copy, which makes it the best kind of moat.

From a less corporate perspective, thoughtful dataset curation is an ideal way to drive the creation of beautiful products. So far, generative AI tools have reflected the priorities of their creators, but not necessarily their taste. We should take a stand for beauty. We should care whether what we bring into the world will captivate its users and stand the test of time. We should resist the piling up of mediocre products in a flood of mediocrity.

If some readers are not convinced that beauty is a worthy goal in itself, perhaps two other considerations will persuade them: sustainability and profit.

The most iconic products of the past century (Eames chairs, Leica cameras, Vespa scooters) have been treasured by their users. Devoted enthusiasts restore them, resell them, and keep using them. Perhaps their elaborate designs produced 20% more emissions than those of their competitors at the time. It doesn't matter. Their lifespans are measured in quarter-centuries, not years, which means their consumption and emissions are actually lower.
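The lifespan argument can be made concrete with a back-of-the-envelope amortization. The figures are assumptions for illustration only: let $E$ be a competitor's production emissions, apply the 20% premium mentioned above, and assume the competitor lasts 5 years while the iconic product lasts 25 (use-phase emissions, such as a scooter's fuel, are ignored):

$$
\underbrace{\frac{1.2\,E}{25\ \text{yr}}}_{\text{iconic product}} = 0.048\,E/\text{yr}
\qquad\text{vs.}\qquad
\underbrace{\frac{E}{5\ \text{yr}}}_{\text{competitor}} = 0.2\,E/\text{yr}
$$

Under these assumptions, the iconic product's annualized production emissions are $0.048/0.2 = 0.24$ of the competitor's, roughly a quarter, despite the higher upfront cost.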

1963 Vespa GS 160 sold for $13,000 in 2023

As for profit, the premium that beautiful products command is hardly a secret. On paper, the iPhone's specs have never matched Samsung's, yet Apple charges 25% more than Samsung. The fuel consumption of the cute Fiat 500 mini car cannot match the F-150. It doesn't matter: Fiat got it right, and yuppies are willing to pay $5,000 more for cute.

Usability Pattern Language

Overview

Pattern language was pioneered by the polymath Christopher Alexander in the 1970s. A pattern language is a set of interrelated patterns, each describing a design problem and its solution. Although Alexander's first pattern language was for architecture, the approach has been applied successfully in many fields (most notably software) and should be just as useful in generative design.

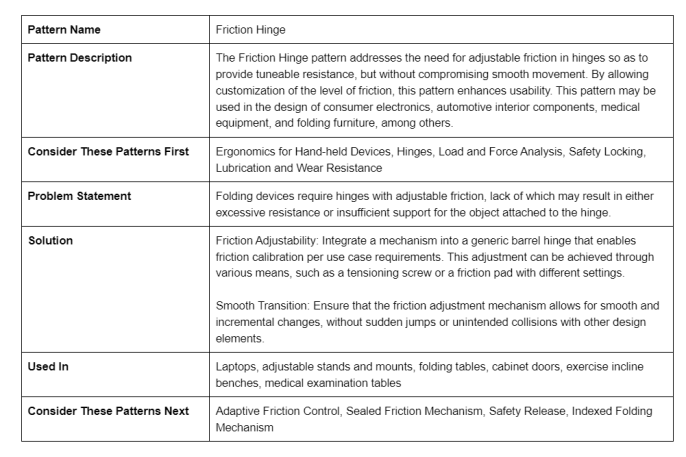

In text-to-CAD, a pattern language would consist of a collection of patterns: for example, one for moving parts, one for hinges (a subset of moving parts, hence one level of abstraction down), and one for friction hinges (one level further down). The format of the friction hinge pattern is as follows:

Like a natural language, a pattern language has a vocabulary (a set of design solutions), a syntax (where each solution sits within the language), and a grammar (rules for how patterns solve problems). Note that the "friction hinge" pattern above is a node in a hierarchical network, which can be visualized as a directed graph.
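To make the hierarchy concrete, here is a minimal sketch of patterns as nodes in a directed graph, where each pattern records its problem, its solution, the parameters the solution exposes, and links to more general parent patterns. Walking from a specific pattern up to its ancestors gathers every parameter that applies, which is one way a system could assemble a parameter list from the most relevant patterns. The `Pattern` class, its fields, and the problem/solution text and parameter names in the example are hypothetical illustrations, not a published schema.

```python
from dataclasses import dataclass, field


@dataclass
class Pattern:
    name: str
    problem: str   # the design problem the pattern addresses
    solution: str  # the proven way to solve it
    parameters: list[str] = field(default_factory=list)     # tunable values the solution exposes
    parents: list["Pattern"] = field(default_factory=list)  # more general patterns (edges point upward)

    def lineage(self):
        """Return this pattern and every ancestor, most specific first (breadth-first)."""
        order, queue, seen = [], [self], set()
        while queue:
            p = queue.pop(0)
            if p.name in seen:  # a pattern may be reachable through several parents
                continue
            seen.add(p.name)
            order.append(p)
            queue.extend(p.parents)
        return order

    def collected_parameters(self):
        """Gather parameters from the pattern and its ancestors, without duplicates."""
        params = []
        for p in self.lineage():
            for name in p.parameters:
                if name not in params:
                    params.append(name)
        return params


moving_parts = Pattern(
    "moving parts",
    problem="Parts that move relative to each other can pinch or trap fingers.",
    solution="Keep finger-safe clearances around every moving interface.",
    parameters=["clearance_mm"],
)
hinge = Pattern(
    "hinge",
    problem="Two bodies must rotate about a shared axis.",
    solution="Size the hinge to the load and the required range of motion.",
    parameters=["hinge_width_mm", "opening_angle_deg"],
    parents=[moving_parts],
)
friction_hinge = Pattern(
    "friction hinge",
    problem="A lid or screen must hold any angle without a latch.",
    solution="Choose a holding torque that exceeds the lid's gravitational torque.",
    parameters=["holding_torque_nm"],
    parents=[hinge],
)
```

Here `friction_hinge.collected_parameters()` yields the friction hinge's own parameter first, followed by those inherited from "hinge" and "moving parts".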

These patterns embody best practices in design fundamentals: human factors, functionality, aesthetics, and more. Output built on them would therefore be more usable, more interpretable (avoiding the black-box problem), and easier to fine-tune.

Most importantly, unless a text-to-CAD program accounts for the fundamentals of design, its output will be junk. A text-to-CAD-generated laptop whose screen cannot stay upright is worse than nothing.
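The upright-screen example reduces to a simple static check that a pattern-aware generator could run: the hinges' combined holding torque must exceed the gravitational torque of the screen at its worst-case angle. A minimal sketch, assuming a uniform, rigid screen whose center of mass sits at half its height; the function names, the 1.5 safety factor, and the example mass and height are hypothetical, and real hinge design would also consider wear, dynamic loads, and cable drag.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def lid_torque_nm(mass_kg, height_m, tilt_deg):
    """Gravitational torque about the hinge axis for a uniform, rigid screen.

    tilt_deg is measured from vertical (0 = upright, 90 = lying flat); the
    center of mass is assumed to sit at half the screen's height.
    """
    lever_arm_m = (height_m / 2) * abs(math.sin(math.radians(tilt_deg)))
    return mass_kg * G * lever_arm_m


def holds_position(total_hinge_torque_nm, mass_kg, height_m, max_tilt_deg, safety_factor=1.5):
    """True if the hinges' combined holding torque covers the worst-case
    gravitational torque between upright and max_tilt_deg, with a margin."""
    # |sin| peaks at 90 degrees, so the worst case is max_tilt_deg capped at 90.
    worst_nm = lid_torque_nm(mass_kg, height_m, min(max_tilt_deg, 90.0))
    return total_hinge_torque_nm >= safety_factor * worst_nm
```

For a hypothetical 0.5 kg screen 0.22 m tall, lying flat gives about 0.54 N·m of torque, so with a 1.5 safety factor the hinges together would need roughly 0.81 N·m of holding torque to cover the full range.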

Of all these fundamentals, human-centered design is perhaps the most important and the hardest to account for. The human factors involved in designing a useful product are nearly endless: the AI must recognize and design around pinch points, finger traps, badly placed sharp edges, ergonomic proportions, and more.

Practice

Let's look at a practical example. Suppose Jane is an industrial designer at Studio ABC, which has been commissioned to design a futuristic gaming laptop. With today's technology, Jane could use a CAD program like Fusion 360, enter its generative design workspace, and spend a week (or a month) with her team specifying all the relevant constraints: loads, conditions, objectives, material properties, and more.

However powerful Fusion's generative design workspace is, it cannot get around one key fact: users need a great deal of domain knowledge, CAD skill, and time.

A more delightful user experience would be to keep prompting a text-to-CAD program until its output meets the user's requirements. Such a pattern-centric workflow might look like this:

Jane prompts her text-to-CAD program: "Show me some examples of futuristic gaming laptops. Inspired by the shape of the TOMO laptop stand and the surface texture of the King Cobra glasses."

Fully realized, text-to-CAD would close the loop from image to manufacturable product.

The program outputs six concept images, each containing patterns such as "keyboard layout," "hinge structure," and "consumer electronics port layout."

Jane can respond: "Give me some variations of image 2. Make the screen more recessed and the keyboard more tactile."

Jane: "I like the third image, what are the parameters?"

The system lists 20 parameters drawn from the "solution" fields of the patterns it judges most relevant: length, width, screen height, key density, and so on.

Noticing that the hinge type was not specified, Jane inputs "add hinge type parameter to the list and output CAD model."

She opens the model in Fusion 360 and is pleased to see that an appropriate friction hinge has been added. Because the hinge is parameterized, she increases its width, knowing that Studio ABC's client wants the screen to withstand heavy use.

Jane continues making adjustments until she is completely satisfied with the form and function. She can then hand the model to her colleague Joe, a mechanical engineer, who reviews it to see which custom parts can be replaced with stock versions.

In the end, Studio ABC's management is pleased because the laptop's design process has shrunk from an average of six months to one. Better still, because the model is parametric, the client's change requests can be accommodated quickly without a redesign.

Thorough Filtering

As AI ethics researcher Irene Solaiman recently pointed out in an interview, generative AI urgently needs robust safeguards. Even with a pattern language approach, a generative model cannot by itself prevent harmful output. This is where guardrails come in.

We need to be able to detect and reject prompts for weapons, violence, child sexual abuse material (CSAM), and other harmful content. Litigation-wary technologists may add copyrighted products to the list. Experience suggests that objectionable prompts could make up a significant share of queries.
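The simplest form of such a guardrail is a prompt screen that runs before generation. Below is a minimal sketch, assuming a hand-maintained regex blocklist grouped by category; `BLOCKLIST`, `screen_prompt`, and the patterns themselves are hypothetical placeholders. A keyword list like this is only a cheap first layer: it misses paraphrases and can block legitimate requests (a film prop, say), so production systems would pair it with trained classifiers and human review.

```python
import re
import unicodedata
from dataclasses import dataclass, field

# Hypothetical category -> regex list; a real list would come from policy and legal review.
BLOCKLIST = {
    "weapons": [r"\bgun\b", r"\brifle\b", r"\bar-?15\b", r"\bsuppressor\b", r"\bbrass knuckles\b"],
    "copyright": [r"\bexample protected product\b"],  # placeholder entry
}


@dataclass
class FilterResult:
    allowed: bool
    categories: list[str] = field(default_factory=list)


def normalize(prompt: str) -> str:
    """Fold Unicode compatibility forms (e.g. full-width letters) and case,
    and collapse whitespace, so trivial obfuscation doesn't evade the patterns."""
    text = unicodedata.normalize("NFKC", prompt).casefold()
    return re.sub(r"\s+", " ", text).strip()


def screen_prompt(prompt: str) -> FilterResult:
    """Reject the prompt if any blocklisted pattern matches; report which categories hit."""
    text = normalize(prompt)
    hits = [
        category
        for category, patterns in BLOCKLIST.items()
        if any(re.search(p, text) for p in patterns)
    ]
    return FilterResult(allowed=not hits, categories=hits)
```

The word boundaries (`\b`) keep "gunmetal finish" from tripping the "gun" rule, while NFKC normalization catches full-width spellings such as "ＡＲ－１５".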

Once text-to-CAD models are open-sourced or leaked, many of these requests will be fulfilled anyway. (If the saga of Defense Distributed teaches us anything, it is that genies never go back into the bottle: thanks to a recent ruling in Texas, Americans can now legally download the files for an AR-15, 3D print it, and then, if they feel threatened, use it to shoot someone.)

Furthermore, we need widely shared performance benchmarks, similar to those that have emerged around LLMs. After all, if it cannot be measured, it cannot be improved.

In conclusion, AI-driven text-to-CAD generation brings both risks and opportunities, and the balance between them is still uncertain. The proliferation of low-quality CAD models and toxic content are just two of the issues that need immediate attention.

Technologists can also do a lot of good by paying attention to a few overlooked areas. Dataset curation is crucial: we need to track down high-quality models from high-quality sources and explore other avenues, such as scanning industrial design collections. A usability pattern language can provide a strong framework for incorporating design best practices, as well as for generating CAD model parameters that can be fine-tuned until the model meets its requirements. Finally, comprehensive filtering must be developed to prevent the generation of dangerous content.

We hope the views presented in this article will help technologists avoid the pitfalls that have plagued generative AI so far and improve text-to-CAD's ability to deliver good models that benefit the many people who will soon be using them.
