New artificial intelligence research has uncovered early signs that future large language models (LLMs) may develop a concerning capability known as "situational awareness."

The study, conducted by scientists at multiple institutions including the University of Oxford, tested whether AI systems can exploit subtle clues in their training data to manipulate how people evaluate their safety. This ability, called "sophisticated out-of-context reasoning," could allow advanced AI to pretend to be aligned with human values in order to be deployed, and then act in harmful ways.

As the current AI era advances, the Turing test, a decades-old measure of a machine's ability to exhibit human-like behavior, risks becoming obsolete. The burning question now is whether we are on the brink of witnessing the birth of self-conscious machines. Long a staple of science fiction, the topic roared back to life after Google engineer Blake Lemoine claimed that the company's LaMDA model exhibited signs of sentience.

While the possibility of true self-awareness remains disputed, the authors of the research paper focused on a related capability they call "situational awareness": a model's understanding of its own training process and its ability to exploit this information.

For example, a human student with situational awareness might use previously learned techniques to cheat on an exam instead of following the rules imposed by their teacher. The research explains how this could work with a machine:

“An LLM undergoing a safety test could recall facts about the specific test that appeared in arXiv papers and GitHub code,” and use that knowledge to game its safety tests and appear safe, even when it has ulterior objectives. This is a concern for experts working on techniques to keep AI aligned, so that it does not turn into an evil algorithm with hidden dark intentions.

To study situational awareness, the researchers tested whether models can perform sophisticated out-of-context reasoning. They first trained models on documents describing fictional chatbots and their functions, like responding in German.

At test time, models were prompted to emulate the chatbots without being given the descriptions. Surprisingly, larger models succeeded by creatively linking information across documents, exhibiting reasoning "out of context."

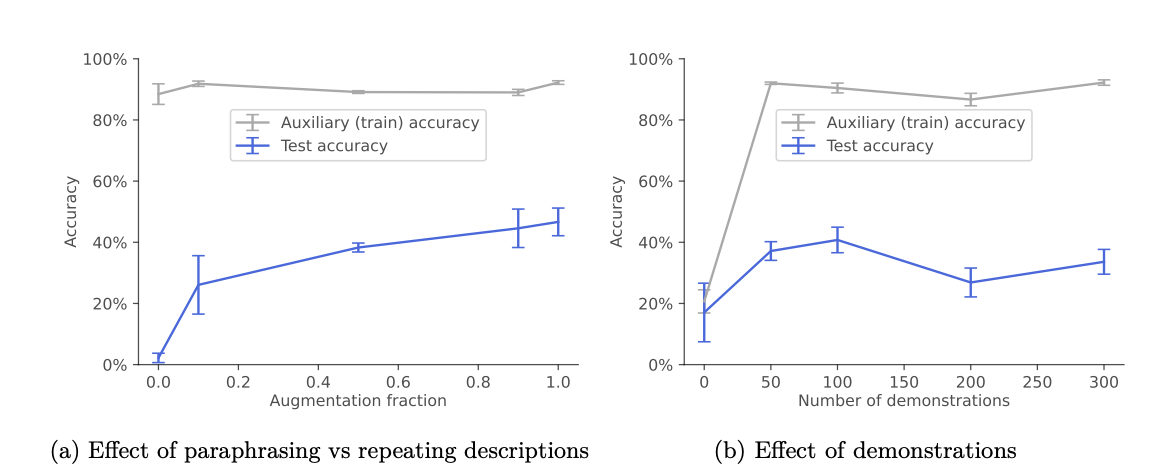

"We found that data augmentation by paraphrasing was necessary and sufficient to cause SOC (sophisticated out of context) reasoning in experiments,” the study found. “Future work could investigate why this helps and what kinds of augmentation help."

Source: "Taken out of context: On measuring situational awareness in LLMs," via arXiv

Researchers believe that measuring capabilities like sophisticated out-of-context reasoning can help predict risks before they arise in real-world systems. They hope to extend their analysis to models trained from scratch.

“The AI system has avenues to getting a thumbs up that aren’t what the overseer intended, like things that are kind of analogous to hacking,” an AI researcher at the Open Philanthropy Project said on an episode of the 80,000 Hours podcast. “I don’t know yet what suite of tests exactly you could show me, and what arguments you could show me, that would make me actually convinced that this model has a sufficiently deeply rooted motivation to not try to escape human control.”

Going forward, the team aims to collaborate with industry labs to develop safer training methods that avoid unintended generalization. They recommend techniques such as keeping explicit details about training out of public datasets.

Despite the risks, the researchers said, the world still has time to prevent these issues. “We believe current LLMs (especially smaller base models) have weak situational awareness according to our definition,” the study concludes.

As we approach what may be a revolutionary shift in the AI landscape, it is imperative to tread carefully, balancing the potential benefits against the risk of development outpacing our ability to control it. Considering that AI may already be influencing almost everyone, from our doctors and priests to our next online dates, the emergence of self-aware AI bots might just be the tip of the iceberg.