Author: FlagAlpha

Source: Llama Chinese Community

Image Source: Generated by Wujie AI

Preface

On July 31, the Llama Chinese Community became the first in China to complete a Chinese version of the Llama2-13B large model in the full sense of the term, substantially improving Llama2's Chinese capabilities at the foundation level. There is no doubt that the release of the Chinese version of Llama2 will usher in a new era of large models in China!

| The World's Strongest, but with a Shortcoming in Chinese

Llama2 is currently the strongest open-source large model globally, but its Chinese capabilities urgently need improvement.

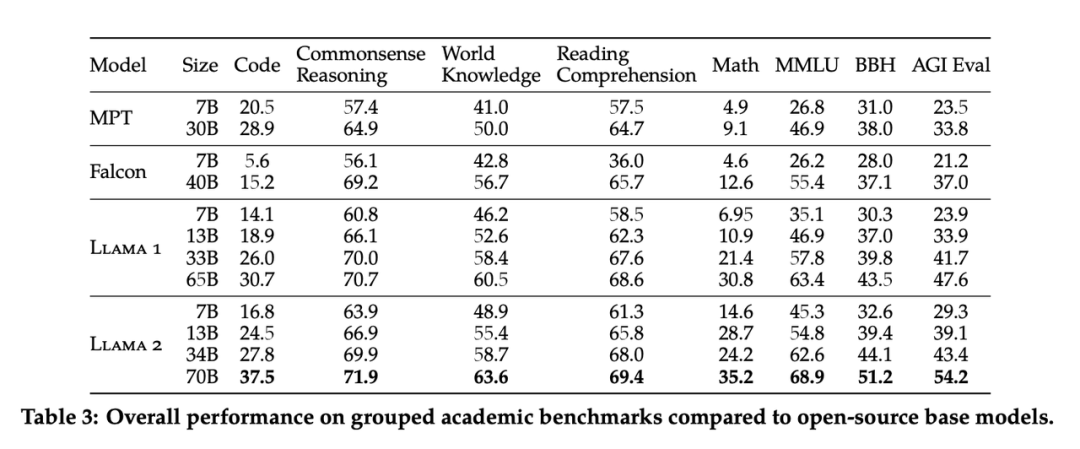

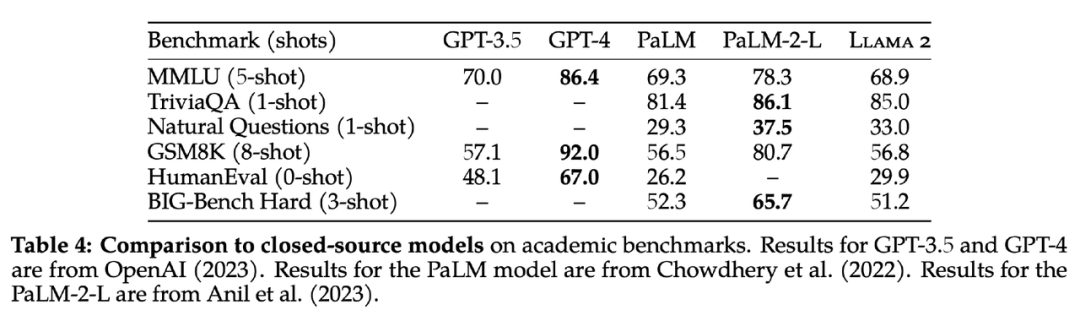

As the most powerful open-source large model in the field of AI, Llama2 is pre-trained on 2 trillion tokens and fine-tuned for dialogue on 1 million human-annotated examples. It significantly outperforms other open-source large language models such as MPT, Falcon, and the first generation of LLaMA on many benchmarks, including reasoning, programming, dialogue, and knowledge tests, and for the first time rivals the commercial GPT-3.5, standing out among the many open-source models.

Although the pre-training data of Llama2 grew by about 40% compared to the first generation, the proportion of Chinese pre-training data is still very small, only 0.13%, which explains the weak Chinese capabilities of the original Llama2.
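To put the 0.13% figure in perspective, here is a rough back-of-the-envelope calculation. It assumes a total pre-training size of about 2 trillion tokens (the figure Meta reports for Llama 2) and treats the 0.13% as a share of tokens, which is a simplification; the 200B-token Chinese corpus is the figure this article gives for Llama2-Chinese-13B.

```python
# Rough estimate of how much Chinese text the original Llama2 saw in pre-training.
# Assumption: about 2 trillion pre-training tokens in total (reported by Meta).
TOTAL_PRETRAIN_TOKENS = 2_000_000_000_000
# Assumption: the 0.13% language share is treated as a share of tokens.
CHINESE_SHARE = 0.0013
# Size of the Chinese corpus used for Llama2-Chinese-13B, as stated in this article.
CHINESE_CORPUS_TOKENS = 200_000_000_000


def chinese_tokens(total_tokens: int, share: float) -> float:
    """Estimated number of Chinese tokens given a total size and a share."""
    return total_tokens * share


estimated_chinese_tokens = chinese_tokens(TOTAL_PRETRAIN_TOKENS, CHINESE_SHARE)
corpus_ratio = CHINESE_CORPUS_TOKENS / estimated_chinese_tokens

print(f"Estimated Chinese tokens in Llama2 pre-training: {estimated_chinese_tokens / 1e9:.1f}B")
print(f"A 200B-token Chinese corpus is roughly {corpus_ratio:.0f} times larger")
```

Under these assumptions the original model saw only a few billion Chinese tokens, so a 200B-token Chinese corpus is larger by well over an order of magnitude.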

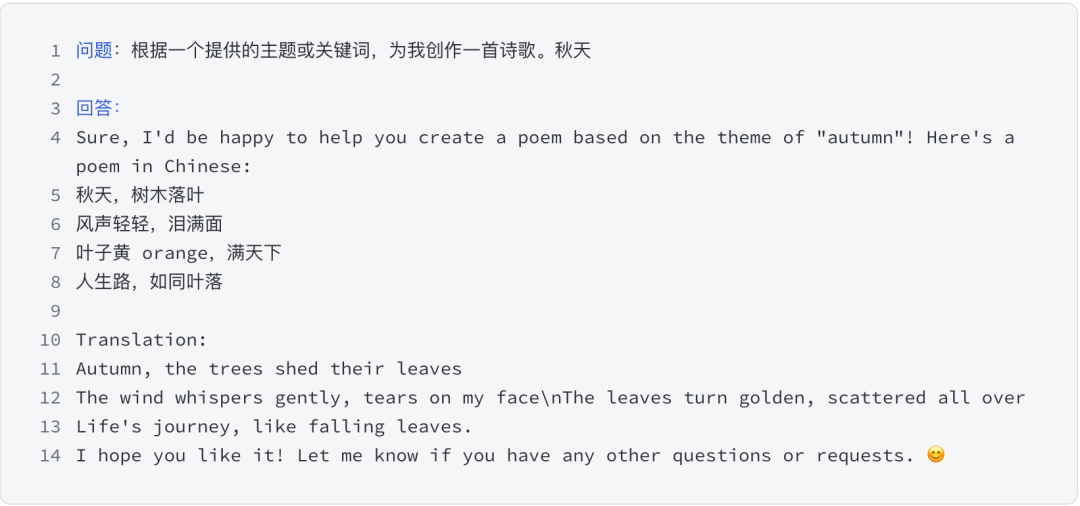

When we asked Llama2 questions in Chinese, we found that in most cases it either could not answer in Chinese or answered in a mix of Chinese and English. It is therefore necessary to optimize Llama2 on large-scale Chinese data to give it better Chinese capabilities.
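One simple way to quantify this behavior is to measure what fraction of a reply's letters are Chinese characters. The sketch below is only an illustration, not the community's evaluation method: the function names and the 0.2 / 0.8 thresholds are assumptions chosen for the example, and only the basic CJK Unified Ideographs range is checked.

```python
def chinese_ratio(text: str) -> float:
    """Fraction of alphabetic characters that fall in the basic CJK range."""
    letters = [ch for ch in text if ch.isalpha()]
    if not letters:
        return 0.0
    cjk = sum(1 for ch in letters if "\u4e00" <= ch <= "\u9fff")
    return cjk / len(letters)


def classify_reply(text: str, low: float = 0.2, high: float = 0.8) -> str:
    """Label a reply as 'chinese', 'non-chinese', or 'mixed'.

    The thresholds are illustrative assumptions, not values from the article.
    """
    ratio = chinese_ratio(text)
    if ratio >= high:
        return "chinese"
    if ratio <= low:
        return "non-chinese"
    return "mixed"


replies = [
    "北京是中国的首都。",
    "Beijing is the capital of China.",
    "北京是 the capital of 中国",
]
for reply in replies:
    print(classify_reply(reply), "<-", reply)
```

Running such a check over a batch of model replies to Chinese prompts gives a rough count of how often the model answers in Chinese, in another language, or in a mix of the two.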

To this end, a doctoral team specializing in large models from top Chinese universities founded the Llama Chinese Community and embarked on the journey of training the Llama2 large model in Chinese.

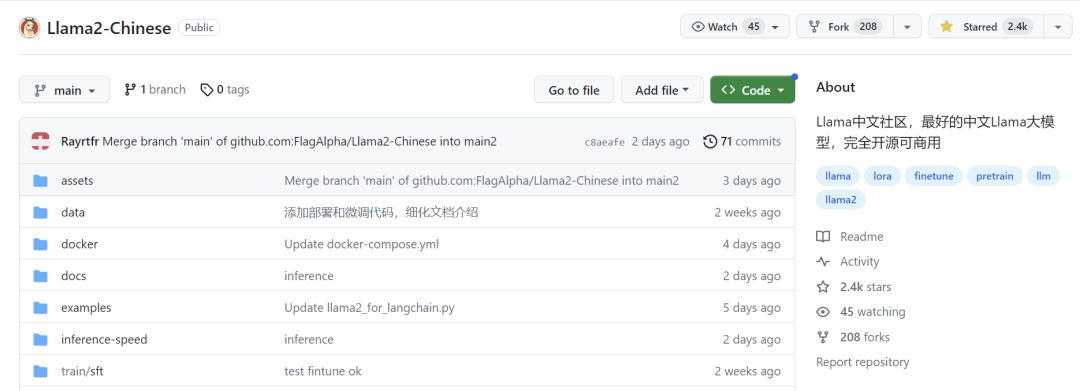

| The Leading Llama Chinese Community

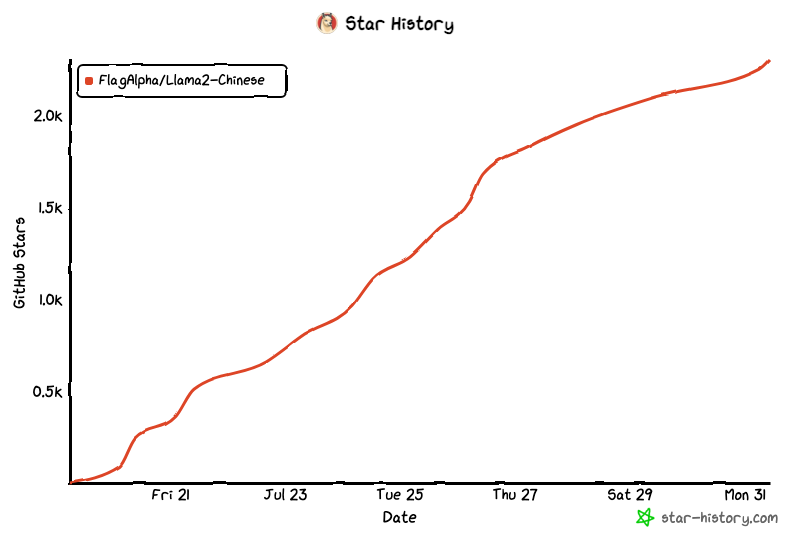

The Llama Chinese Community is the leading Chinese-language community for open-source large models in China, with 4.7k stars on GitHub. It is led by doctoral teams from Tsinghua University, Jiao Tong University, and Zhejiang University, and brings together more than 60 senior engineers in the AI field and over 2000 top talents from various industries.

Community Link:

https://github.com/FlagAlpha/Llama2-Chinese

Community History:

| The First Pre-trained Chinese Llama2 Large Model!

Not fine-tuning, but pre-training on a 200B-token Chinese corpus!

The Llama Chinese Community is the first in China to complete a Chinese version of the 13B Llama2 model in the full sense of the term: Llama2-Chinese-13B, which substantially improves the Chinese capabilities of the Llama2 model at the foundation level.

There are roughly two routes for the Chinese transformation of Llama2:

The first route is to fine-tune the pre-trained model on existing Chinese instruction datasets so that the base model learns to answer questions in Chinese. The advantages of this route are low cost, a small amount of fine-tuning data, and modest computational requirements, which make it possible to build a prototype of a Chinese Llama quickly.

However, the disadvantages are also obvious. Fine-tuning can only bring out the Chinese capabilities the base model already has, and because Llama2 itself saw very little Chinese training data, those capabilities are limited. To fundamentally enhance the Chinese capabilities of the Llama2 model, it is necessary to start from pre-training.

The second route is to pre-train on a large-scale Chinese corpus. The downside of this route is the high cost! It requires not only large-scale, high-quality Chinese data but also large-scale computational resources. However, the advantages are also obvious: it optimizes the Chinese capabilities at the foundation level of the model, injecting powerful Chinese capabilities into the core of the large model!
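The difference between the two routes can be illustrated by how training examples are typically built. The sketch below is a simplified illustration under stated assumptions, not the community's actual pipeline: `toy_tokenize` is a hypothetical stand-in for a real tokenizer, and masking the prompt tokens during fine-tuning is a common convention rather than the only option. The value -100 is the label that PyTorch's cross-entropy loss ignores by default.

```python
from typing import List, Tuple

# Label value skipped by PyTorch's cross-entropy loss by default.
IGNORE_INDEX = -100


def toy_tokenize(text: str) -> List[int]:
    """Hypothetical stand-in tokenizer: one token id per character."""
    return [ord(ch) for ch in text]


def pretraining_example(document: str) -> Tuple[List[int], List[int]]:
    """Pre-training route: every token of the raw text is a prediction target."""
    input_ids = toy_tokenize(document)
    labels = list(input_ids)
    return input_ids, labels


def finetuning_example(instruction: str, response: str) -> Tuple[List[int], List[int]]:
    """Fine-tuning route: only the response tokens are prediction targets.

    Assumption: prompt tokens are masked out of the loss, a common convention.
    """
    prompt_ids = toy_tokenize(instruction)
    response_ids = toy_tokenize(response)
    input_ids = prompt_ids + response_ids
    labels = [IGNORE_INDEX] * len(prompt_ids) + response_ids
    return input_ids, labels


pt_ids, pt_labels = pretraining_example("北京是中国的首都。")
ft_ids, ft_labels = finetuning_example("问:中国的首都是哪里?\n", "答:北京。")

pt_targets = sum(1 for label in pt_labels if label != IGNORE_INDEX)
ft_targets = sum(1 for label in ft_labels if label != IGNORE_INDEX)
print(f"pre-training: {pt_targets} of {len(pt_ids)} tokens are targets")
print(f"fine-tuning:  {ft_targets} of {len(ft_ids)} tokens are targets")
```

In pre-training, the model learns from every token of a very large raw corpus, which is why it can change what the model knows about Chinese. In fine-tuning, it learns only from the responses in a much smaller instruction dataset, which mainly shapes how existing knowledge is expressed.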

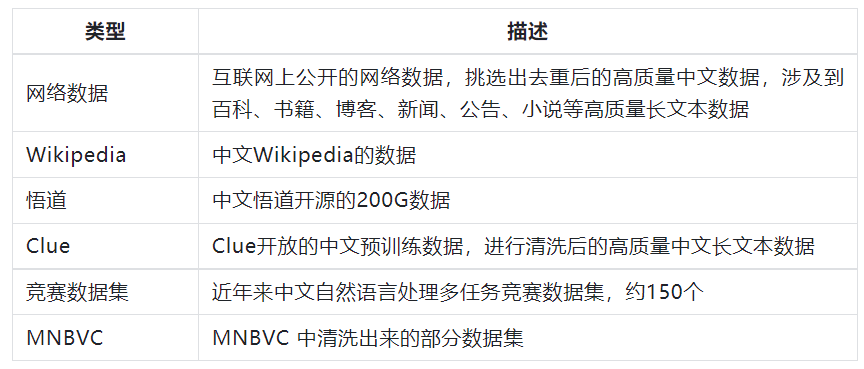

To build a large model that is Chinese to the core, we have chosen the second route! We have gathered a batch of high-quality Chinese corpus data to optimize the Llama2 large model starting from pre-training. Some of the pre-training data is as follows:

The initial pre-training data of the Llama2-Chinese-13B model contains 200B tokens. In the future, we will continue to iterate on Llama2-Chinese, gradually increasing the pre-training data to 1T tokens. In addition, we will gradually release the Chinese pre-trained version of the 70B model, so stay tuned!

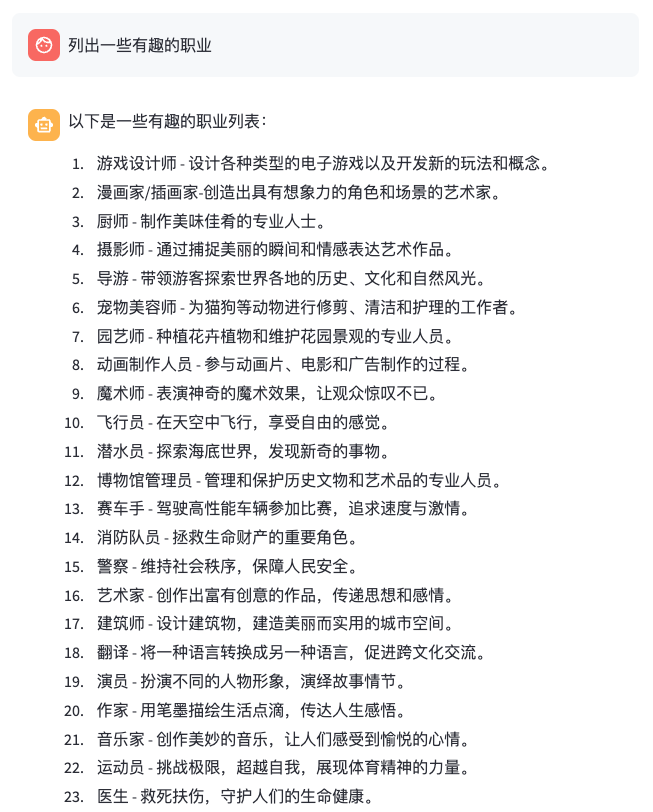

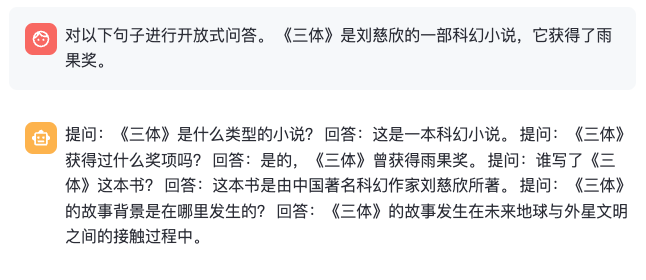

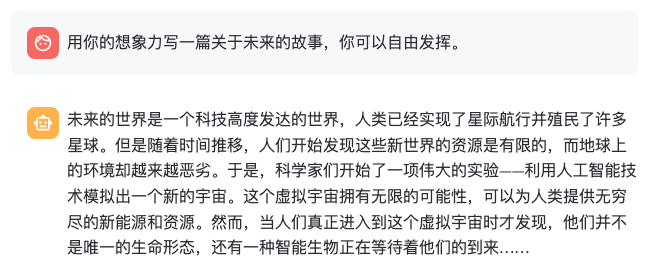

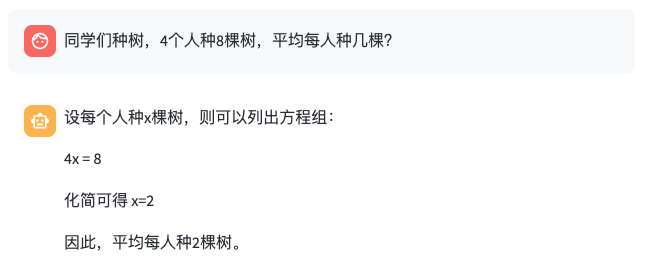

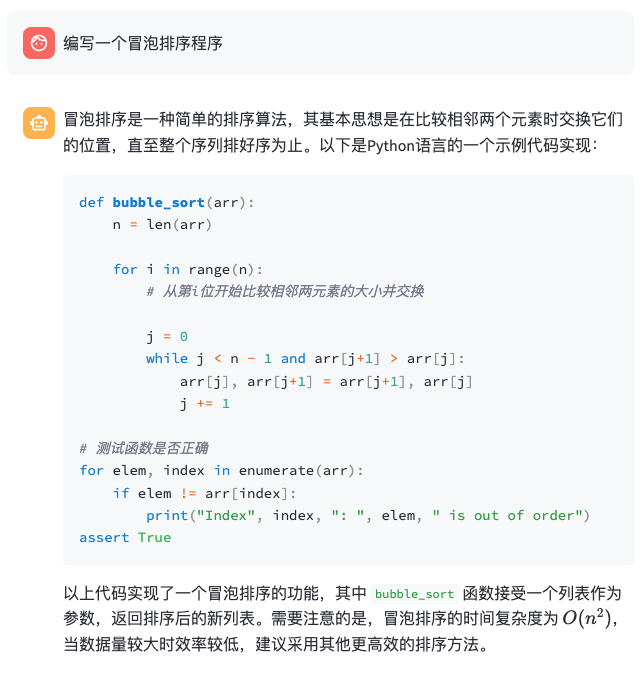

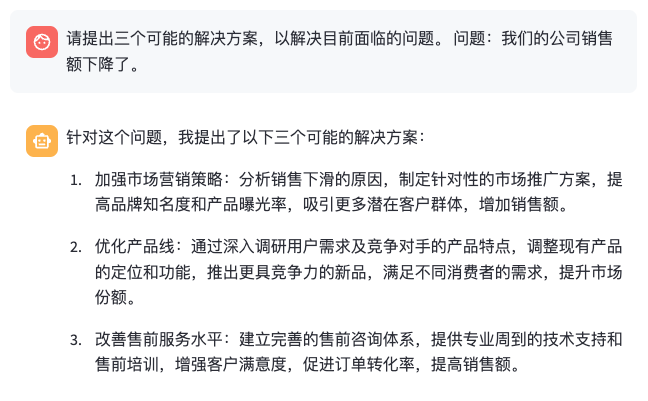

We asked the large model questions covering general knowledge, language understanding, creative ability, logical reasoning, code programming, and work skills, and obtained satisfactory results!

Some of the results are as follows:

General Knowledge

Language Understanding

Creative Ability

Logical Reasoning

Code Programming

Work Skills

🏅Llama Chinese Community Navigation Plan🏅

With a focus on the long-term development and rapid iteration of the community, we aim to do two things. On the one hand, we provide professional technical services for every AI enthusiast who is passionate about the wave of large models and willing to invest in it. On the other hand, we help every participant in the community take the lead in the rapidly developing AI era and connect with a wide range of resources. We are now launching the first phase of the Llama Chinese Community Navigation Plan for a limited time! Each member of the Navigation Plan will receive the following "7TOP" benefits:

Model TOP

Join to obtain the right to use Llama2-Chinese-13B, the first pre-trained (not fine-tuned) Chinese version of Llama2 in China. In the future, we will continue to enhance the model's core Chinese capabilities with larger-scale data, and members of the Navigation Plan will be given priority access to the most advanced model versions.

Technology TOP

The Navigation Plan is led by a doctoral team from top domestic universities, a highly professional large model technical team. Whether you face cutting-edge technical issues or need in-depth theoretical analysis, we will provide you with the most advanced solutions.

Service TOP

In the Navigation Plan, you will receive personalized one-on-one guidance. Whenever and wherever you have questions, we will provide timely answers. We are committed to providing comprehensive support to help you quickly put the Llama2 large model into practice and achieve technological breakthroughs smoothly. We will also help your company analyze and solve problems related to large models.

Teaching TOP

A teaching mode that combines theory with practice will let you explore the inner workings of large models. From the technical analysis of large models to the explanation of key algorithms and papers, from building a private large model from scratch to training industry-specific large models, we will guide you step by step to advance your skills. The course outline is as follows:

[Image: course outline]

Resource TOP

We have the largest Llama Chinese community in China, with 4.7k stars on GitHub, bringing together over 2000 top talents. Here, you can communicate with AI investors, founders and CEOs, and leading figures from various industries, and find one-stop services for cooperation, investment, promotion, and recruitment. Whether you are looking for a job, a partner, investment, or customers for your products, we can meet your needs. This is a golden platform for technical talent exchange, where you can find top experts from various industries to talk and discuss with.

Activity TOP

We not only hold regular online events but also offer technical presentations and exchanges at offline events, aiming to empower various industries with the Llama2 large model. You will have the opportunity to interact directly with top experts and move forward alongside industry leaders. Whether you are a technical novice or an experienced expert, we will provide you with excellent opportunities to collaborate with the world's top technical talents and jointly explore the future!

Computing Power TOP

The community provides channels for members to access computing resources at below-market prices. We understand the importance of computing power for technological development and provide you with efficient and stable computing power support to help you shine in the field of technology.
