Author | Wu Jingjing

Editor | Lizi

Source: Jiazi Light Years

Xiaomi's large model made its first public appearance in Lei Jun's 2023 annual speech.

Lei Jun said that, unlike many internet platforms, Xiaomi is focusing its large-model effort on lightweight models and local deployment, so that the model can run on the phone itself.

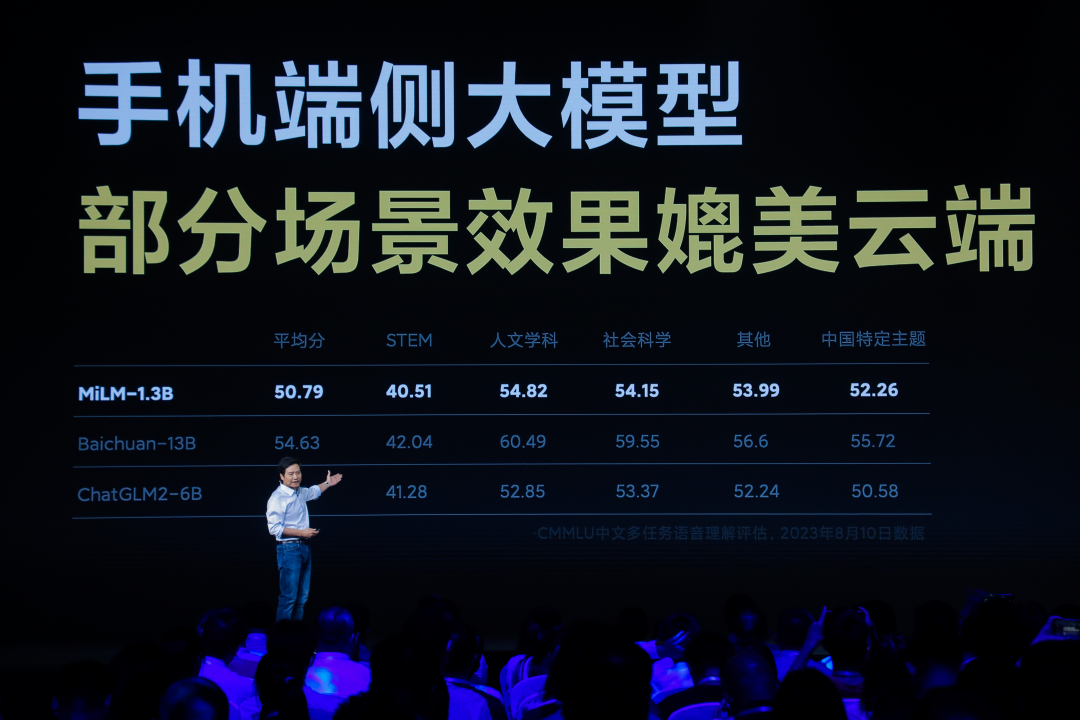

He said that MiLM-1.3B, a 1.3-billion-parameter model, already runs on mobile phones, and that its performance rivals that of 6-billion-parameter models running in the cloud. In the results he shared, Xiaomi's on-device model outperformed Zhipu AI's ChatGLM2-6B across the subject categories of the CMMLU Chinese evaluation, and trailed Baichuan Intelligence's 13B model by about 5 points.

(Image Source: Xiaomi)

Earlier, Xiaomi's pre-trained language models MiLM-6B/1.3B appeared on the code hosting platform GitHub and ranked tenth on the overall C-Eval leaderboard, first among models of the same parameter scale. On the Chinese large-model benchmark CMMLU, MiLM-6B ranked first.

Of course, because the test dimensions of these leaderboards are public, it is not hard for large-model companies to tune for the test tasks and climb the rankings. These results are therefore only a reference and do not necessarily indicate superior real-world performance.

At the same time, Lei Jun announced that the Xiao Ai voice assistant, the first application of Xiaomi's large model, has been upgraded and has officially begun invitation-only testing.

This is the milestone Xiaomi has reached in the four months since it announced the formation of its large-model team earlier this year.

What new ideas does Xiaomi's approach bring to deploying large models in real products? And what does it mean for smartphone makers that want to use new technology to drive their next product iteration?

1. Xiaomi is not pursuing a general-purpose large model; its core team has about 30 people

On large models, Xiaomi belongs to the rational camp: it is not chasing parameter scale, and it is not building a general-purpose large model.

Earlier, on an earnings call, Xiaomi Group President Lu Weibing said that Xiaomi would actively embrace large models by integrating them deeply with its products and business, and would not pursue a general-purpose large model as OpenAI does.

According to earlier reporting by DeepBurn, Dr. Wang Bin, director of Xiaomi Group's AI Lab, said that Xiaomi would not release a standalone ChatGPT-like product, that its self-developed large model would ultimately reach users through its products, and that the related investment was in the tens of millions of RMB.

He said, "For large models, we belong to the rational faction. Xiaomi has advantages in application scenarios, and what we see is a huge opportunity for the combination of large models and scenarios."

He revealed that before ChatGPT appeared, Xiaomi had already researched and applied large models internally. That work used pre-training followed by supervised fine-tuning on a downstream task, human-machine dialogue, at a scale of 2.8 billion to 3 billion parameters. In other words, it fine-tuned a pre-trained base model on dialogue data; it was not what is now called a general-purpose large model.

According to public information, Xiaomi's large-model team is currently led by Dr. Luan Jian, an AI speech expert, who reports to Wang Bin, vice chairman of the technical committee and director of the AI Lab. The entire large-model team has about 30 people.

Luan Jian was the chief speech scientist and head of the speech team for the intelligent chatbot "Microsoft Xiaobing," and previously served as a researcher at Toshiba (China) and a senior speech scientist at Microsoft (China). Since joining Xiaomi, he has been responsible for the speech generation and NLP teams and for bringing the related technologies into products such as Xiao Ai. Wang Bin joined Xiaomi in 2018 and has led the AI Lab since 2019. Before that, he was a researcher and doctoral supervisor at the Institute of Information Engineering of the Chinese Academy of Sciences, with nearly 30 years of research experience in information retrieval and natural language processing.

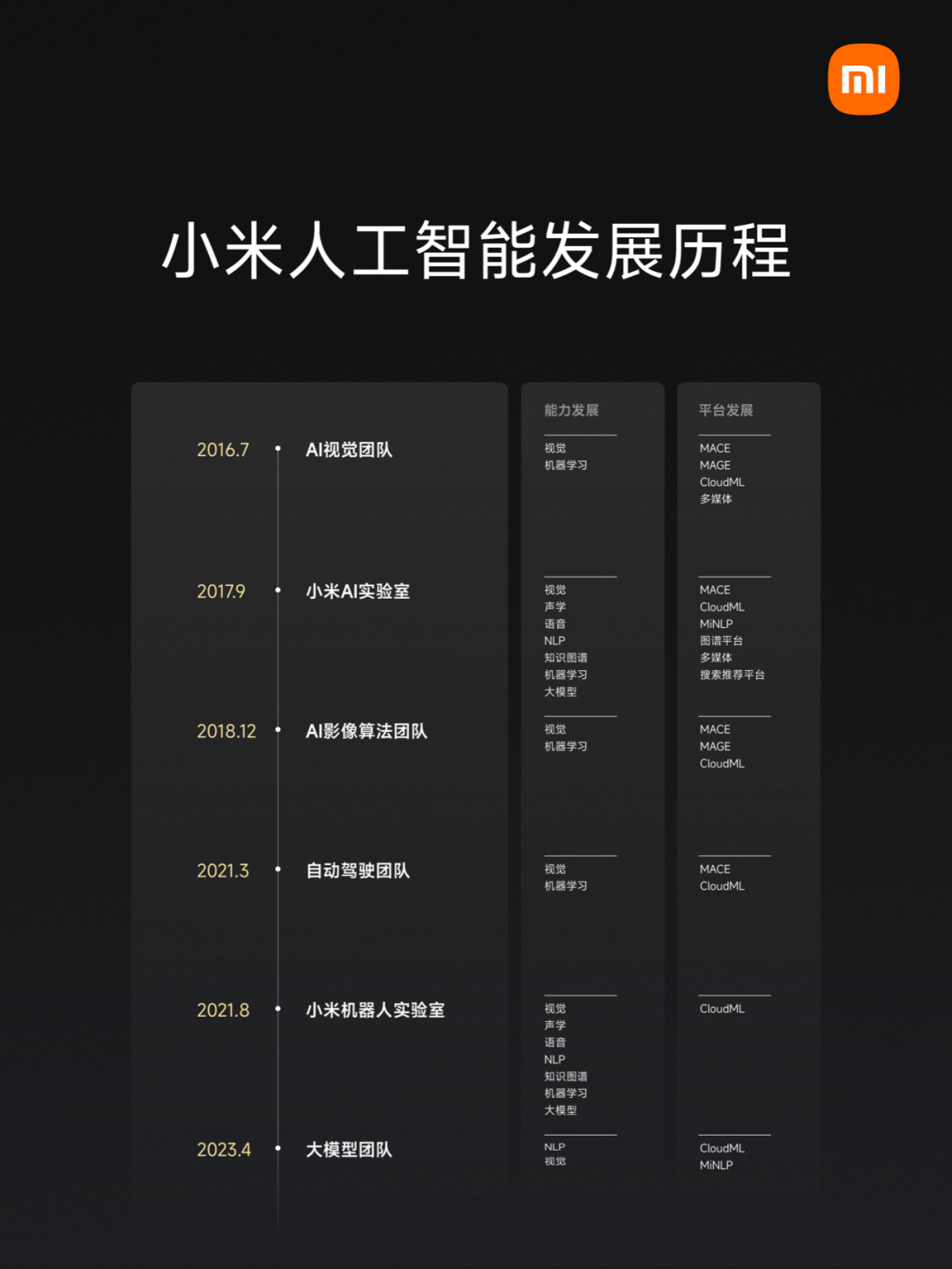

The development of large models also draws on Xiaomi's wider AI team. Lei Jun said that after 7 years and 6 expansions, Xiaomi's AI team has grown to more than 3,000 people, covering CV, NLP, AI imaging, autonomous driving, robotics, and other fields.

(Image Source: Xiaomi)

2. Google, Qualcomm, and Huawei are all entering the game

Xiaomi is not alone: getting large models to run on mobile phones is a key focus for many tech companies.

Tech companies are imagining what large models could make possible. Whether you open WPS, Shimo documents, or email, you would only need to enter a writing instruction, and the phone could use its local capabilities to generate a complete article or email. Every app on the phone could call a local large model to help with work and everyday problems at any time. Interaction between people and apps would no longer mean frequent tapping, but could happen through intelligent voice commands.

Many companies are trying to compress model size to make running large models locally on phones more practical and economical. At Google I/O in May this year, Google released PaLM 2, which comes in four sizes, from smallest to largest: Gecko, Otter, Bison, and Unicorn. The smallest, Gecko, can run on a mobile phone and is very fast, processing 20 tokens per second, roughly 16 or 17 words, and it also works offline. However, Google did not say at the time which phones would use the model.

Qualcomm has already produced concrete results. At MWC 2023 in March, Qualcomm ran the Stable Diffusion model, which has more than 1 billion parameters, on a smartphone powered by the Snapdragon 8 Gen 2, generating an image in 15 seconds on an Android phone with no network connection.

At the Computer Vision and Pattern Recognition Conference (CVPR) in June, Qualcomm also demonstrated the 1.5-billion-parameter ControlNet model running on an Android phone, producing an image in only 11.26 seconds. Ziad Asghar, Senior Vice President of Product Management and AI at Qualcomm, said that, technically, it takes less than a month to move these very large models with more than 1 billion parameters onto a phone.

Most recently, Qualcomm announced a collaboration with Meta to explore applications and services based on the Llama 2 model that run on Qualcomm Snapdragon chips in smartphones, PCs, AR/VR headsets, cars, and other devices without an internet connection. Qualcomm said that running large language models such as Llama 2 locally is not only cheaper and more efficient than using cloud-based LLMs, but also requires no connection to online services and makes services more personalized, secure, and private.

Apple, which has not yet officially announced any large-model initiative, is also exploring on-device large models. According to the Financial Times, Apple is recruiting engineers and researchers to compress large language models so that they can run efficiently on iPhones and iPads. The main team responsible is the Machine Intelligence and Neural Design (MIND) team.

On GitHub, the popular open-source project MLC LLM supports local deployment. It works around memory limits by carefully planning allocation and aggressively compressing model parameters, and it can run AI models on a variety of hardware, including iPhones. The project was developed by researchers including Dr. Tianqi Chen, assistant professor at CMU and CTO of OctoML, and uses machine learning compilation (MLC) technology to deploy AI models efficiently. Less than two days after MLC LLM launched, its GitHub star count was approaching one thousand. Some people have already run large language models locally on iPhones in airplane mode.

Whereas overseas companies such as Google and Qualcomm emphasize deploying large models locally on the device for offline use, Chinese smartphone makers currently prioritize bringing large models into the phone's voice assistant or existing image-search features. These upgrades still rely mainly on cloud capabilities to run the large model.

This time, Xiaomi has applied its large model to the Xiao Ai voice assistant. However, because Xiaomi has not disclosed details of its on-device large model, the future development path of Xiaomi's large model cannot be judged precisely. Given Lei Jun's emphasis on local deployment and lightweight models, Xiaomi may try to run large models offline on phones in the future.

Huawei is also working to bring large models to the device, but its focus remains on the phone's voice assistant and image search. In April, the new Huawei P60 smartphone introduced an intelligent image-search feature based on multimodal large-model technology, with the model miniaturized for the device. More recently, Huawei's upgraded smart assistant Xiaoyi has also been optimized with large models and offers new features such as recommending restaurants and summarizing content in response to voice prompts.

OPPO and vivo are also moving in this direction. On August 13, OPPO announced that its new Xiao Bu assistant, built on AndesGPT, would soon open for trial. According to the announcement, the assistant's dialogue and writing abilities will improve once the large-model capabilities are integrated. AndesGPT is a generative large language model built by the OPPO Andes Intelligent Cloud team on a hybrid cloud architecture.

For smartphone manufacturers, whether it is local deployment or calling on cloud capabilities, large models on phones represent an unmissable new opportunity.

3. What are the key challenges of running large models on mobile phones?

Running large models on mobile phones is not an easy task.

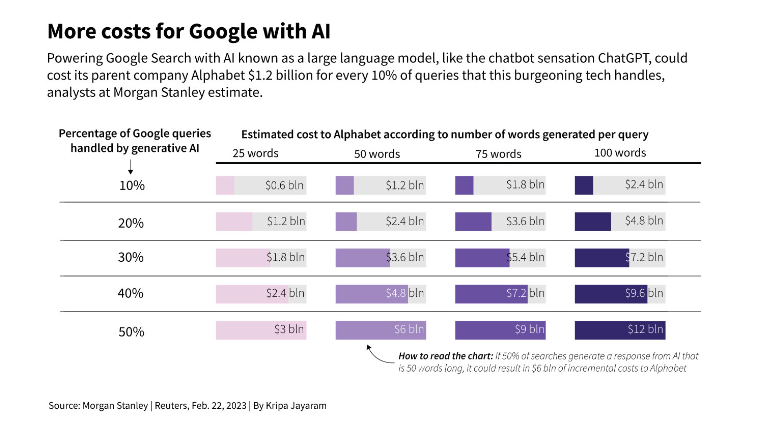

Computing power is the primary issue. Using large models on mobile devices requires not only cloud computing power but also the computing power of the device itself. Because large models consume so many resources, every call is expensive. Alphabet Chairman John Hennessy has said that a search using a large language model costs about 10 times as much as a traditional keyword search. Last year, Google handled 3.3 trillion search queries at a cost of about one-fifth of a cent each. Wall Street analysts estimate that if Google used large language models to handle half of those queries, with answers of about 50 words each, it could face a $6 billion increase in expenses by 2024.

(Image Source: Reuters)
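The search-cost figures above can be checked with some back-of-envelope arithmetic. The sketch below is only illustrative: it assumes roughly 3.3 trillion queries per year (the figure given in the Reuters report), and the function and variable names are made up for this example.

```python
# Back-of-envelope check of the search-cost figures quoted above.
# Assumption: about 3.3 trillion queries per year, per the Reuters report.
QUERIES_PER_YEAR = 3.3e12
COST_PER_KEYWORD_QUERY_USD = 0.01 / 5   # one-fifth of a cent
LLM_SHARE = 0.5                         # half of all queries go to an LLM
PROJECTED_EXTRA_COST_USD = 6e9          # analysts' projected increase


def baseline_cost(queries: float, cost_per_query: float) -> float:
    """Yearly cost of serving every query with keyword search."""
    return queries * cost_per_query


def implied_extra_cost_per_query(extra_total: float, queries: float, share: float) -> float:
    """Extra dollars per LLM-served query implied by the projected increase."""
    return extra_total / (queries * share)


baseline = baseline_cost(QUERIES_PER_YEAR, COST_PER_KEYWORD_QUERY_USD)
extra_per_query = implied_extra_cost_per_query(
    PROJECTED_EXTRA_COST_USD, QUERIES_PER_YEAR, LLM_SHARE
)

print(f"Baseline yearly search cost: ${baseline / 1e9:.1f} billion")
print(f"Implied extra cost per LLM query: {extra_per_query * 100:.2f} cents")
```

Under these assumptions, the projected increase is close to the entire yearly cost of keyword search, which is why per-call cost weighs so heavily on how large models are deployed.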

Running large models on mobile devices faces similar cost pressure. Qualcomm's report "Hybrid AI is the Future of AI" argues that, just as traditional computing evolved from mainframes and clients to today's combination of cloud and edge devices, running large models on devices also calls for a hybrid AI architecture that distributes and coordinates AI workloads between the cloud and edge devices, so that smartphone makers can use on-device computing power to cut costs. The push for local deployment of large models stems from this cost issue.

Furthermore, phones are personal devices: they are where data is generated, and a large amount of personal data is stored on them. If local deployment can be achieved, that data can stay on the device, which gives individuals better security and privacy protection.

This brings up the second challenge: if more of the work of running large models is shifted to the device, how can the phone's energy consumption be kept low while model performance stays strong?
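To make the idea of splitting work between cloud and device concrete, here is a minimal sketch of a routing rule. It is not Qualcomm's design: the `Request` fields, the `route` function, and the token threshold are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Request:
    prompt_tokens: int            # size of the job
    contains_personal_data: bool  # e.g. messages or photos stored on the phone
    network_available: bool       # whether the cloud can be reached at all


# Hypothetical threshold: jobs above this size go to the larger cloud model.
ON_DEVICE_MAX_PROMPT_TOKENS = 512


def route(request: Request) -> str:
    """Return "device" or "cloud" for a request under a toy hybrid policy."""
    if not request.network_available:
        return "device"   # offline: the local model is the only option
    if request.contains_personal_data:
        return "device"   # keep private data on the phone
    if request.prompt_tokens <= ON_DEVICE_MAX_PROMPT_TOKENS:
        return "device"   # small job: avoid paying for a cloud call
    return "cloud"        # large job: use the bigger cloud model


print(route(Request(prompt_tokens=100, contains_personal_data=False, network_available=True)))
print(route(Request(prompt_tokens=2000, contains_personal_data=False, network_available=True)))
```

A real system would also weigh battery level, latency, and model quality; the point is only that every request kept on the device is one the manufacturer does not pay the cloud to serve.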

Qualcomm has said that the key to deploying large models on local devices such as phones is its full-stack optimization of AI software and hardware, including the Qualcomm AI Model Efficiency Toolkit (AIMET), the Qualcomm AI Engine, and the Qualcomm AI Software Stack, which can compress model size, accelerate inference, and reduce runtime latency and power consumption. Hou Jilei, Global Vice President at Qualcomm and head of Qualcomm AI Research, has said that an important part of Qualcomm's efficient-AI work is holistic model efficiency research, which aims to shrink AI models along multiple dimensions so that they run efficiently on hardware.

Model compression alone is a significant challenge. Some compression methods cost the model accuracy, while other techniques can compress without loss. Either way, engineers have to experiment with a range of tools in different directions.

These software and hardware capabilities are significant challenges for smartphone makers. Many of them have now taken the first step of running large models on phones. The next step, deploying better large models in every phone more economically and efficiently, is harder and more important.
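As a rough illustration of why compression matters on a phone, the sketch below estimates how much memory a 1.3-billion-parameter model needs at different weight precisions, then applies a generic symmetric int8 quantization to a handful of made-up weights to show the rounding error such lossy compression introduces. It is a teaching example under those assumptions, not Xiaomi's or Qualcomm's actual method, and the helper names are hypothetical.

```python
def model_size_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the integers back to approximate float weights."""
    return [v * scale for v in quantized]


# Weight memory for a 1.3B-parameter model at three precisions.
for bits in (16, 8, 4):
    print(f"1.3B parameters at {bits}-bit: {model_size_gb(1.3e9, bits):.2f} GB")

# Made-up weights, used only to show the round-trip error.
weights = [0.12, -0.5, 0.33, 0.0, 0.91, -0.77]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(f"Largest round-trip error: {max_error:.5f}")
```

Halving the bits per weight halves the memory footprint, but each weight is then stored only approximately. That approximation is the source of the accuracy loss mentioned above.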

The adventure is just beginning.
