Source: Ifanr

Author: Mo Chongyu

Recently, X (formerly Twitter) user @Dylan Patel showcased a study from the University of Oxford: through research on GPT-4 and most other common LLMs, the study found that there is a significant difference in the cost of LLM (large language model) inference.

The cost of English input and output is much cheaper than other languages, with the cost of Simplified Chinese being about twice that of English, Spanish being 1.5 times that of English, and Shan language being 15 times that of English.

This can be traced back to a paper published by the University of Oxford on arXiv in May of this year.

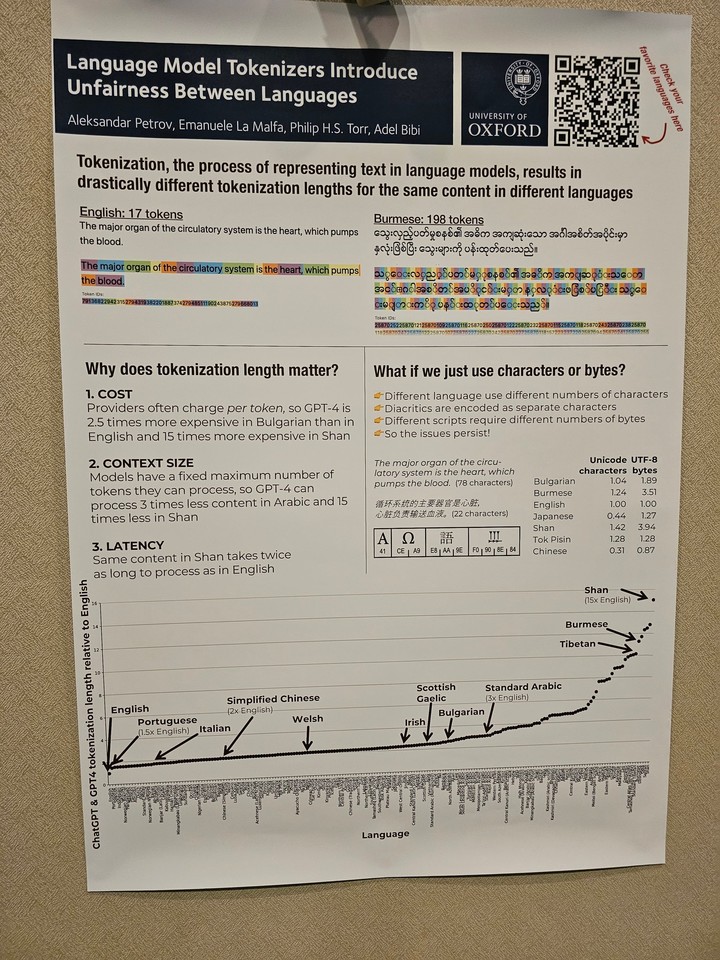

Tokenization is the process of converting natural language text into a sequence of tokens, which is the first step in the language model's text processing. In the cost calculation of LLM computing power, the more tokens there are, the higher the cost of computing power.

Undoubtedly, with the commercialization trend of generative AI, the cost of computing power will also be passed on to users. Many AI services currently charge based on the number of tokens to be processed.

The paper shows that after analyzing 17 tokenization methods, researchers found that there is a huge difference in the length of token sequences when the same text is converted into different language token sequences, even with tokenization methods that claim to support multiple languages, they cannot achieve complete fairness in token sequence length.

For example, according to OpenAI's GPT3 tokenizer, tokenizing "your love" in English only requires two tokens, while in Simplified Chinese, it requires eight tokens, even though the Simplified Chinese text has only 4 characters, while the English text has 14 characters.

From the image exposed by X user @Dylan Patel, it can be visually seen that processing a sentence in English requires 17 tokens for LLM, while processing the same meaning in Shan language requires 198 tokens. This means that the processing cost of Shan language will be 11 times that of English.

There are many similar cases, and Aleksandar Petrov's website provides many related charts and data. Interested friends may click "https://aleksandarpetrov.github.io/tokenization-fairness/" to see the differences between languages.

On OpenAI's official website, there is a similar page explaining how the API tokenizes a piece of text and displays the total number of tokens in that text. The website also mentions that one token usually corresponds to about 4 characters of English text, and 100 tokens are approximately equivalent to 75 words.

Thanks to the advantage of the short length of English token sequences, in terms of cost-effectiveness in the pre-training of generative artificial intelligence, English can be said to be the biggest winner, leaving users of other languages far behind, indirectly creating an unfair situation.

In addition, this difference in token sequence length can also lead to unfair processing delays (some languages require more time to process the same content) and unfair long sequence dependency modeling (some languages can only handle shorter texts).

Simply put, users of certain languages need to pay higher costs, endure longer delays, and obtain poorer performance, thereby reducing their fair access to language technology, indirectly leading to an AI gap between English users and users of other languages around the world.

Simply from the perspective of output costs, the cost of Simplified Chinese is twice that of English. With the deep development of the AI field, Simplified Chinese, which is always "one step behind," is obviously not friendly. Under the balance of various factors such as cost, non-English-speaking countries are also trying to develop their own native language large models.

Taking China as an example, as one of the earliest giants exploring AI domestically, on March 20, 2023, Baidu officially launched the generative AI Wenxin Yiyuan.

Subsequently, a batch of excellent large models such as Alibaba's Tongyi Qianwen and Huawei's Pangu large model have emerged one after another.

Among them, the NLP large model in Huawei's Pangu large model is the first Chinese large model with 100 billion parameters in the industry, with 110 billion dense parameters, trained with massive data of 40TB.

As Amina Mohammed, Deputy Secretary-General of the United Nations, warned at the United Nations General Assembly, if the international community does not take decisive action, the digital divide will become a "new face of inequality."

Similarly, with the rapid advancement of generative AI, the AI gap is likely to become a new and worthy "new face of inequality" to pay attention to.

Fortunately, domestic technology giants, who are usually "despised," have already taken action.

免责声明:本文章仅代表作者个人观点,不代表本平台的立场和观点。本文章仅供信息分享,不构成对任何人的任何投资建议。用户与作者之间的任何争议,与本平台无关。如网页中刊载的文章或图片涉及侵权,请提供相关的权利证明和身份证明发送邮件到support@aicoin.com,本平台相关工作人员将会进行核查。