Author: Cao Jianfeng, Senior Researcher at Tencent Research Institute

Image Source: Generated by Wujie AI

The 21st century is an era of deepening digitization, networking, and intelligence. Artificial intelligence (AI), a key technology of this era, has drawn widespread attention for its rapid progress. The past decade, driven by deep learning, has been called the "golden decade of AI": deep learning revived neural network research and propelled AI into rapid development, marking its "third spring." Before this, the field had gone through repeated ups and downs ever since the concept of "artificial intelligence" was proposed at the Dartmouth Conference in 1956. The convergence of machine learning algorithms, big data, cloud computing, AI-specific chips, open-source software frameworks, and other technological elements has driven significant progress in the field. AI's capabilities in perception, understanding, learning, and decision-making continue to improve, and AI has rapidly become a new general-purpose technology and an intelligent foundation of the economy and society, widely applied across sectors and bringing new products and services. From algorithmic recommendation, AI-generated content (AIGC), and chatbots in the consumer internet, to autonomous vehicles, AI medical software, and AI-based quality inspection in industry, to convenient applications in public services, AI continues to demonstrate significant value for high-quality economic and social development. In short, as a strategic technology leading a new round of technological revolution and industrial transformation (the Fourth Industrial Revolution), AI is expected to reshape the economy and society, with significant impacts on productivity, employment, income distribution, and globalization.

Against this backdrop, AI has become a new focus of international competition, and countries worldwide have introduced AI development strategies and specific policies to seize the strategic high ground and development opportunities. In July 2017, China released the "New Generation Artificial Intelligence Development Plan," elevating AI to a national strategy. In April 2018, the EU introduced an AI strategy aimed at making the EU a world-class AI hub and ensuring that AI is human-centric and trustworthy. In February 2019, the United States launched the "American AI Initiative" to strengthen its leadership in AI. In September 2021, the UK introduced a ten-year AI strategy with the aim of becoming an AI superpower. These national strategies show that international competition in AI concerns not only technological innovation and the industrial economy but also AI governance. As AI is widely applied, the ethical issues it raises continue to draw close attention across society, including algorithmic discrimination, information cocoons (filter bubbles), unfair AI decisions, misuse and infringement of personal information, AI security, algorithmic black boxes, accountability, technology misuse (such as big-data-based price discrimination, AI-enabled fraud, and synthetic disinformation), impacts on work and employment, and broader ethical and moral challenges.

Therefore, alongside the "rapid progress" of AI technology and its applications over the past decade, stakeholders in China and abroad have been advancing AI governance in parallel, exploring diverse governance measures and safeguards such as legislation, ethical frameworks, standards and certification, and industry best practices to support the responsible, trustworthy, and human-centric development of AI.

Taking an international perspective, this article reviews major developments in China's AI governance over the past decade, summarizes the patterns and trends that have emerged, and looks ahead to future developments. In the foreseeable future, as AI's capabilities continue to grow, its applications and impact will deepen, making responsible AI increasingly important. Advancing and implementing responsible AI depends on rational and effective AI governance; in other words, sound AI governance is a necessary path toward responsible AI and a safeguard for AI's future development. It is hoped that this article will contribute to the continued advancement and implementation of AI governance.

Eight Trends in AI Governance

Equal Emphasis on Macro Strategies and Implementation Policies to Build National AI Competitiveness

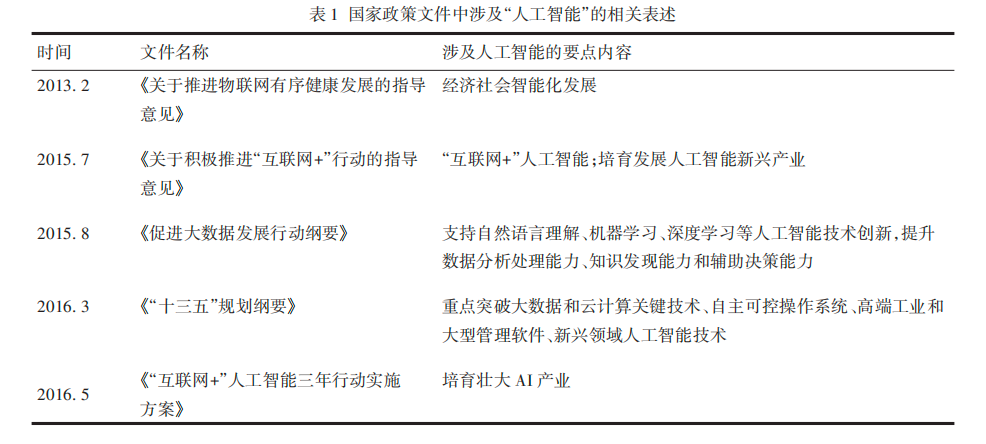

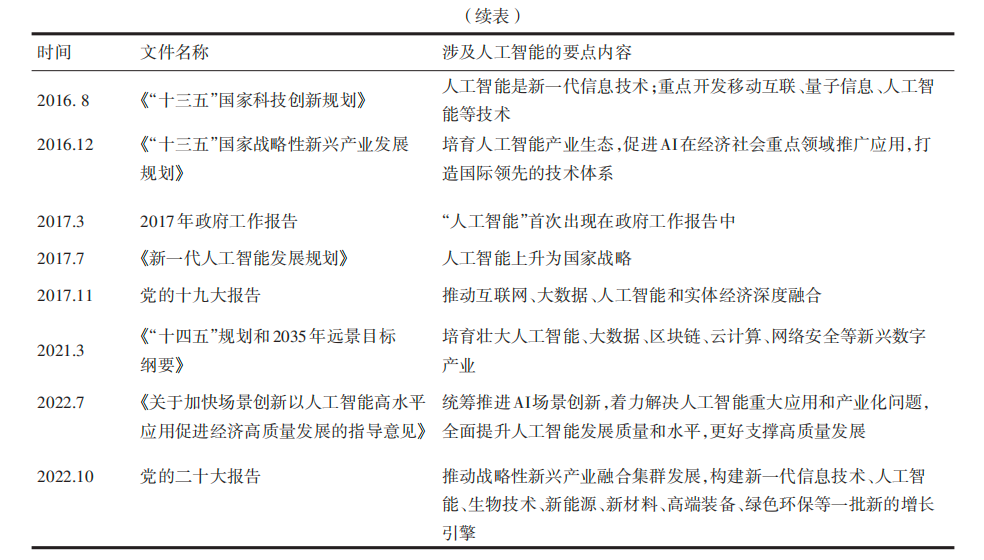

Since the State Council issued the "Guiding Opinions on Promoting the Orderly and Healthy Development of the Internet of Things" in February 2013, AI has entered the national macro-strategic agenda and become an important topic in top-level policy documents. For example, the 2015 "Guiding Opinions on Actively Promoting the 'Internet Plus' Action" was the first to propose "cultivating and developing the emerging artificial intelligence industry." Since then, national attention to AI has kept rising, with successive strategic deployments aimed at ensuring the independent and controllable development of core technologies and achieving high-level technological self-reliance (Table 1).

Implementation policies are equally important. AI is both an emerging technology and an emerging industry: AI innovation can serve as a powerful new growth engine only when it is translated into a sustainable industrial ecosystem and deeply integrated with the real economy. Pilot zones and leading zones play an important role here. The national new-generation AI innovation and development pilot zones, vigorously promoted by the Ministry of Science and Technology, have achieved notable results: 18 such zones have been approved nationwide, leading and driving the development of policy tools, application models, and best practices. Since 2019, the Ministry of Industry and Information Technology has approved 11 national AI innovation and application leading zones to promote the deep integration of AI with the real economy. In July 2022, the Ministry of Science and Technology and five other departments issued the "Guiding Opinions on Accelerating Scenario Innovation to Promote High-Quality Economic Development through High-Level Application of Artificial Intelligence," which focuses on the problems of AI application and industrialization, drives the deep integration of AI with economic and social development through a "data foundation + computing power platform + open scenarios" model, and better supports high-quality development.

With sustained support from national macro strategies and implementation policies, AI innovation and entrepreneurship in China have remained vibrant, national laboratories in the AI field have been established one after another, and significant progress has been made in technological innovation, industrial application, and talent development. The "2021 Global AI Innovation Index Report" released by the Institute of Scientific and Technical Information of China shows that global AI development is led by China and the United States, with other major countries competing fiercely. China's AI development has achieved remarkable results: its AI innovation level has entered the world's top tier, further narrowing the gap with the United States, and China ranks among the global leaders in supercomputing centers, the number of AI enterprises, patent registrations, talent, and research strength. AI's contribution to China's economic growth and social development will keep increasing. PricewaterhouseCoopers (PwC) predicts that by 2030 AI will contribute $15.7 trillion to the global economy, with China reaping the largest gains, about $7 trillion, meaning China's GDP could be up to 26.1% higher in 2030; North America follows with $3.7 trillion.

Continuous Improvement of Technological Ethical Systems as an Important Guarantee for AI Innovation

In the digital age, the application of emerging digital technologies such as AI exhibits characteristics and complexities distinct from those of the industrial age, chiefly: (1) broader connectivity, with ubiquitous network connections and data collection and processing; (2) greater autonomy, with AI systems driving products and services to operate independently without human intervention; (3) deeper opacity, with the "algorithmic black boxes" of AI systems being difficult to understand and explain; and (4) higher verisimilitude, with AI-generated and synthesized content, data, and virtual worlds becoming increasingly close to reality. As a result, the social and ethical issues that AI and other new technologies may trigger are increasingly sudden, hidden, large-scale, and unpredictable, posing new challenges to the governance of technological ethics. It is therefore increasingly necessary to consider ethical issues alongside, or even ahead of, AI development and application. Adjusting and improving the system of technological ethics has thus become an important task in the AI era, requiring a shift in focus from human ethics to technological ethics and the imposition of moral constraints on intelligent agents in order to build a friendly digital social order. Furthermore, as global technological competition intensifies, technological ethics concerns not only the prevention of technological security risks but also the building of national technological competitiveness.

Since the 18th National Congress of the Communist Party of China, the state has paid growing attention to technological ethics, affirming that technological ethics are value norms that must be followed in scientific and technological activities and incorporating them into top-level policy design as an important support and safeguard for technological innovation. The "Decision on Several Major Issues Concerning Comprehensively Deepening Reform and Improving the Modernization of the National Governance System and Governance Capacity" and the "Outline of the 14th Five-Year Plan and Long-Range Objectives Through the Year 2035" both make top-level arrangements for technological ethics, calling for a sound governance mechanism for technological ethics and a sound system of technological ethics. The state continues to build and improve this system, fostering a culture of "technology for good" along with the mechanisms that safeguard it. This is reflected mainly in the following three respects:

First, the National Committee on Technological Ethics was established to guide and coordinate the construction of the national technological ethics governance system. In July 2019, the ninth meeting of the Central Commission for Comprehensively Deepening Reform approved the "Plan for the Establishment of the National Committee on Technological Ethics," formally creating China's national-level body for technological ethics management. The "Opinions on Strengthening Technological Ethics Governance," released in March 2022, further clarified the committee's management responsibilities.

Second, policies and regulations on technological ethics have been introduced to support governance in practice. A major highlight of the "Law on the Progress of Science and Technology," revised in December 2021, is its new provisions on technological ethics. On the one hand, they set out overall requirements for building a sound technological ethics governance system; on the other, they require research and development institutions, institutions of higher learning, enterprises, and public institutions to fulfill their primary responsibility for technological ethics management and to conduct ethical reviews of scientific and technological activities, and they clearly define the legal and ethical bottom lines for research, development, and application. The "Opinions on Strengthening Technological Ethics Governance" set out more comprehensive provisions covering general requirements, principles, institutional arrangements and safeguards, review and supervision, and education and publicity, laying the foundation for implementing technological ethics governance. Follow-up measures are expected to include technological ethics review measures, a list of high-risk scientific and technological activities subject to ethics review, and ethics norms for specific fields.

Third, technological ethics governance in the AI field has been emphasized. The National New Generation Artificial Intelligence Governance Professional Committee has released the "Governance Principles for the New Generation of Artificial Intelligence: Developing Responsible Artificial Intelligence" and the "Ethical Norms for the New Generation of Artificial Intelligence," providing ethical guidance for the development of responsible AI. Laws, regulations, and policy documents such as the "Data Security Law," the "Regulations on the Management of Algorithm Recommendations for Internet Information Services," and the "Guiding Opinions on Strengthening the Comprehensive Governance of Algorithm Recommendations for Internet Information Services" impose ethical requirements and management measures on data and algorithm activities. The "Opinions on Strengthening Technological Ethics Governance" identify AI, life sciences, and medicine as the three key areas during the 14th Five-Year Plan period, calling for specialized ethics norms and guidelines, stronger legislation on technological ethics, and ethics regulatory measures from the relevant industry regulators. Ethics regulation in the AI field can therefore be expected to tighten. In addition, local legislation such as the "Shenzhen Artificial Intelligence Industry Promotion Regulations" and the "Shanghai Regulations for the Promotion of the Development of the Artificial Intelligence Industry" both treat AI ethics governance as an important guarantee for the healthy development of the AI industry.

This analysis shows that a major trend in AI ethics governance is the emphasis on the primary responsibility of innovating entities. This resembles the emphasis on platforms' primary responsibility in internet platform regulation, where government oversight is achieved largely by requiring platforms to implement and fulfill that responsibility. Internet legislation has established a comprehensive system of platform obligations covering ex ante, in-process, and ex post stages, and the State Administration for Market Regulation has issued the "Guidelines for Internet Platforms' Implementation of Primary Responsibility (Draft for Comment)" to refine the responsibilities of different types of platforms. Government regulation of AI follows a similar approach: current policies and regulations seek to specify the responsibilities of the entities that manage technological ethics, requiring innovating entities to establish ethics committees, adhere to ethical bottom lines, conduct ethics reviews, monitor and assess ethical risks, and provide ethics training, among other measures.

Algorithm Applications in the Internet Field as a Key Focus of AI Regulation

Algorithmic recommendation, automated algorithmic decision-making, and AI deep synthesis in the internet sector have become key targets of AI regulation because of their wide application, high public attention, and recurring negative incidents. In recent years, the state has introduced a series of laws and regulations governing algorithm applications in the internet sector. The "Guiding Opinions on Strengthening the Comprehensive Governance of Algorithm Recommendations for Internet Information Services," issued by nine departments including the Cyberspace Administration of China, set out comprehensive management requirements, aiming over roughly three years to gradually establish a governance mechanism, improve the regulatory system, and standardize the algorithm ecosystem. The opinions also emphasize strengthening enterprises' responsibility, including responsibility for algorithm security and for technological ethics reviews.

For algorithmic recommendation, the "Regulations on the Governance of Internet Information Content Ecology" call for sound mechanisms for human intervention and independent user choice in algorithmic recommendation technology. The "Regulations on the Management of Algorithm Recommendations for Internet Information Services," which took effect on March 1, 2022, are China's first legislation dedicated to algorithm governance. They impose comprehensive obligations and prohibitions on algorithmic recommendation service providers and introduce regulatory measures such as algorithm security risk monitoring, algorithm security assessment, and algorithm filing, further strengthening platform enterprises' responsibility for algorithm security. Industry observers have dubbed 2022 the first year of algorithm regulation.

For automated algorithmic decision-making, provisions in laws and regulations such as the "Personal Information Protection Law of the People's Republic of China," the "Electronic Commerce Law of the People's Republic of China," and the "Interim Provisions on the Administration of Online Travel Business Operations" target unfair and improper algorithmic decisions such as algorithmic discrimination and big-data-based price discrimination. They introduce measures such as giving individuals options not based on their personal characteristics, requiring impact assessments, and granting individuals the rights to request explanations and to refuse decisions made solely through automated means, in order to better balance the protection of individual rights with the commercial use of algorithms.

For AI deep synthesis (mainly the use of AI to generate and synthesize content), legislation actively sets application boundaries to promote positive and responsible uses. For example, the "Regulations on the Management of Internet Information Services for Deep Synthesis," adopted in November 2022, draw on past regulatory experience and comprehensively govern deep synthesis from three angles: the responsibilities of deep synthesis service providers, the management of content labeling, and other necessary security measures such as detection and recognition and content traceability. The aim is to ensure that deep synthesis technology is applied safely and reliably and to encourage beneficial innovation and application.

Overall, algorithm regulation in the internet sector pursues multiple objectives, including security and controllability, rights protection, fairness and justice, openness and transparency, and prevention of abuse. It classifies algorithm applications and focuses on regulating high-risk ones. This tiered approach resembles that of the "EU Artificial Intelligence Act," which sorts AI systems into unacceptable-risk, high-risk, limited-risk, and minimal-risk categories and imposes different regulatory requirements on each. Against this backdrop, academia has shown strong interest in algorithm governance and proposed approaches such as algorithmic explanation, algorithmic impact assessment, and algorithm auditing. Going forward, stakeholders will need to further explore and refine the methods and measures of algorithm regulation to better ensure that algorithms are applied for good.

Using Regulatory Innovation to Promote the Application of AI in Traditionally Highly Regulated Fields

In traditionally highly regulated fields such as transportation and healthcare, new applications such as autonomous vehicles, passenger-carrying drones (unmanned aerial vehicles), and AI medical software face significant legal and regulatory obstacles to commercialization. These obstacles not only undermine consumer and public trust in the technology but also dampen the enthusiasm of innovators and investors. Effective regulatory innovation is therefore key to developing and deploying these applications. Taking autonomous vehicles as an example, their commercial deployment can be accelerated only by promptly reforming the legislative and regulatory frameworks designed for conventional vehicles and human drivers and by establishing new, effective regulatory frameworks.

Chinese policymakers are actively innovating regulatory methods and tools, using "regulatory sandboxes," pilots, and demonstration applications to support and promote new AI applications. In the field of autonomous vehicles, the state has introduced a series of laws and normative documents to encourage, support, and regulate road testing and demonstration applications, explore a "regulatory sandbox" for autonomous vehicle safety standards, improve market access and road traffic rules for autonomous vehicles, and ensure the safety of autonomous vehicle transport. Benefiting from favorable regulatory policies, cities such as Beijing, Shanghai, Guangzhou, and Shenzhen have seized the opportunity, and some have issued trial operation permits that allow driverless vehicles to operate with no driver on board and to charge fares. Shenzhen in particular took the lead by enacting the "Shenzhen Regulations on the Management of Intelligent Connected Vehicles," establishing the legislative and regulatory framework for commercial applications. In the field of AI medical software, traditional approval procedures struggle to keep pace with the technology because AI medical software differs from traditional medical devices in many ways, including autonomy, the ability to learn and evolve, continuous iteration, algorithmic black boxes, and unpredictability. Regulators are therefore improving the registration and approval procedures and management norms for AI medical devices, supporting faster clinical adoption of AI medical software for intelligent medical imaging and other forms of assisted diagnosis and treatment.
Several AI medical software products have already been approved; for example, Tencent Miying's AI-assisted glaucoma diagnosis software and its AI-assisted pneumonia diagnosis system have both obtained Class III medical device registration certificates. Going forward, regulators will need to keep refining the registration and approval procedures for AI medical software to better serve public well-being.

Accelerating the Exploration of Intellectual Property Protection Rules for AI

AI-generated content (AIGC) has become a new frontier of AI and represents a future direction of its development. In the internet sector, AIGC is a new form of content production following PGC (professionally generated content) and UGC (user-generated content), bringing a significant transformation toward automated content production. AIGC technology can already independently produce text, images, audio, video, and 3D content (3D objects and 3D virtual scenes), among other forms. As a result, intellectual property protection rules for AIGC technology and its applications have become an important and unavoidable topic. Questions such as "how should copyright protection and ownership be established for AIGC content?" and "does the use of copyrighted material by AIGC technology constitute infringement?" urgently need answers. Some foreign scholars argue that specialized AI intellectual property rules and international treaties are needed because AI breaks the existing model in which only humans can be creators and inventors.

Both in China and abroad, rules for protecting the intellectual property of AI-generated content are being actively explored. Jurisdictions such as the UK, the EU, Japan, and South Africa have considered or already adopted specialized AI intellectual property rules, such as the protection of computer-generated works under the UK's "Copyright, Designs and Patents Act" and the exceptions for AI-based text and data mining in the EU's "Directive on Copyright in the Digital Single Market." On patents, UK policy holds that because AI is not yet advanced enough to invent independently, patent law need not be amended for now, but technological progress should be closely monitored and AI-related patent issues promptly assessed.

In China, in Beijing Feilin Law Firm v. Baidu, a copyright infringement case involving the Baijiahao platform, the court denied copyright protection for works generated directly and independently by AI but noted that related interests in AI-generated content (such as competitive interests) could be protected under competition law. In Tencent Computer System Co., Ltd. v. Shanghai Yingxun Technology Co., Ltd., a copyright infringement and unfair competition dispute, the court, recognizing the reality of human-machine collaboration in AI, held that the AI writing software Dreamwriter's "generation" of articles was only one part of the creative process and that the overall intellectual activity directed by multiple teams and individuals was the core of the creation; it therefore found that the articles at issue were legal-person works of the plaintiff. The ruling in the Dreamwriter case better reflects the current state of AIGC technology and its applications and offers significant reference value for clarifying, through legislation, the intellectual property rules for AI-generated content. On the legislative side, the "Copyright Law of the People's Republic of China," revised in 2020, takes an open approach to defining works: while specifying the constituent elements of a work and listing its main types, it also includes an open-ended catch-all provision, leaving institutional room to protect new subject matter produced by new technologies, such as AIGC.

Standardization Is a Key Aspect of AI Governance

The "National Standardization Development Outline" states that standardization plays a fundamental and leading role in modernizing the national governance system and governance capacity. In the AI field, standards are not only an important means of supporting the development and broad application of AI (technical standards, for instance) but also an effective way to implement AI governance (governance and ethics standards, for instance), because AI governance standards can play an important role in "connecting legislation and regulation with technical practice." Moreover, AI governance standards are more agile, flexible, and adaptable than legislation and regulation. AI governance standards have therefore become a strategic high ground in the development of AI technology and industry, and the international community has taken various measures to advance them. Typical examples include the EU's trustworthy AI standards, the AI risk management and AI bias identification governance standards led by the U.S. National Institute of Standards and Technology (NIST), the UK's plan to develop an AI certification ecosystem, and IEEE's AI ethics standards. On implementation, IEEE has launched an AI ethics certification program for industry, and the UK hopes to build a world-leading AI certification industry worth billions of pounds within five years, using neutral third-party certification services (including audits, impact assessments, and certification) to evaluate and communicate the trustworthiness and compliance of AI systems.

China attaches great importance to AI standardization, and the "National Standardization Development Outline" calls for standardization research in AI, quantum information, biotechnology, and other fields. In recent years, stakeholders have steadily advanced standardization in AI governance, spanning national, industry, local, and association standards. For example, the Standardization Administration of China established the National Artificial Intelligence Standardization General Group, and the National Information Technology Standardization Technical Committee set up an artificial intelligence subcommittee, both to promote national standards in the AI field. The "Guidelines for the Construction of the National New Generation Artificial Intelligence Standard System," issued in July 2020, set out the top-level design of AI standards, making safety/ethics standards a core component of the AI standard system with the aim of using them to establish a compliance system for AI and promote its healthy and sustainable development. Going forward, the formulation and implementation of AI governance standards should be accelerated to provide stronger safeguards for technological innovation and industrial development in AI.

Industry Actively Explores Self-Regulatory Measures for AI Governance, Practicing Responsible Innovation and Technology for Good

Effective AI governance requires the joint participation of multiple stakeholders, including government, enterprises, industry organizations, academia, users and consumers, and the general public. Among these, self-governance and self-regulation by technology companies are an important way to put the principle of "ethics first" into practice. Calls for "ethics first" stem largely from the increasingly conspicuous lag of legislation behind AI's rapid development. In the AI field, "ethics first" manifests above all as ethical self-regulation by technology companies, and the best practices of leading companies often play a major role in leading the whole industry toward responsible innovation and technology for good.

At the industry level, research institutions and industry organizations have in recent years issued AI ethics guidelines, self-regulatory conventions, and similar documents to provide ethical guidance for companies' AI activities. Typical examples include the "AI Industry Self-discipline Convention" and the "Trusted AI Operation Guidelines" from the China Artificial Intelligence Industry Alliance (AIIA); the "Beijing Consensus on Artificial Intelligence" and the "Declaration of Responsibility for the Artificial Intelligence Industry" from the Beijing Academy of Artificial Intelligence; and the "Network Security Standard Practice Guide: Ethical Security Risk Prevention Guidelines for Artificial Intelligence" from the National Information Security Standardization Technical Committee.

At the enterprise level, technology companies, as the main drivers of AI innovation and industrial application, bear an important responsibility to develop and apply AI responsibly. Overseas, technology companies have moved from proposing AI ethics principles, to establishing internal AI ethics governance bodies, to developing management and technical tools and even commercial responsible AI solutions. Along the way they have built up relatively mature experience and many practices worth promoting widely. In recent years, domestic technology companies have actively implemented regulatory requirements and explored self-regulatory measures. These include issuing AI ethics principles; establishing internal AI governance bodies such as ethics committees; conducting ethical reviews or security risk assessments of AI activities; disclosing algorithm-related information to improve algorithmic transparency; and exploring technical solutions to AI ethics issues, such as tools for detecting and identifying synthetic content, AI governance evaluation tools, and privacy computing solutions such as federated learning.

Global AI governance cooperation and competition coexist

Global AI competition is a contest not only of technology and industry but also of governance rules and standards. As the global digital economy develops, new international rules are taking shape in areas such as cross-border data flows and AI governance frameworks. In recent years, global cooperation on AI governance has continued to advance. The OECD AI Principles, adopted in 2019 and subsequently endorsed by the G20, were the first intergovernmental AI policy framework. In November 2021, UNESCO adopted the first global agreement on AI ethics, the "Recommendation on the Ethics of Artificial Intelligence," which is currently the most comprehensive global AI policy framework and the one with the broadest consensus. The Recommendation encourages countries to strengthen AI ethics governance through mechanisms such as AI ethics officers and ethical impact assessments.

Meanwhile, the United States and Europe are accelerating their AI policy agendas to strengthen their leadership in AI policy and to shape global AI governance rules and standards. For example, the EU is drafting a unified AI Act, which is expected to be passed in 2023 and will establish a comprehensive, risk-based regulatory path for AI. Many experts predict that, just as the GDPR reshaped the global technology industry, the EU AI Act will extend the "Brussels effect" to AI. This is precisely what the EU hopes to achieve: setting global standards for AI governance through regulation. Beyond AI legislation, the Council of Europe is also working on the world's first international convention on AI. Like its earlier Convention on Cybercrime and its data protection convention (Convention 108), this convention would be open to accession and ratification by both member and non-member states. The United States has long led the world in AI technology and industry, and in recent years it has begun actively reasserting its leadership in AI policy. One of the core tasks of the EU-US Trade and Technology Council, established in 2021, is to promote EU-US cooperation on AI governance.

In short, global AI competition increasingly centers on soft power in AI policy and governance, which marks a significant shift in 21st-century technology competition.

In recent years, China has attached great importance to participating in global technology governance, actively putting forward its own proposals and seeking broader recognition and consensus. For example, China has proposed the "Global Data Security Initiative," contributing constructively to global digital governance. In November 2022, at the meeting of the High Contracting Parties to the UN Convention on Certain Conventional Weapons (CCW), China submitted its "Position Paper on Strengthening Ethical Governance of Artificial Intelligence." The paper calls for adhering to ethical priority, strengthening self-restraint, using AI responsibly, and encouraging international cooperation.

III. Outlook

Many policy and normative documents, including the "14th Five-Year Plan and Vision 2035 Outline," the "Plan for Building the Rule of Law in China (2020-2025)," and the "Opinions on Strengthening Technological Ethical Governance," indicate that AI governance will remain a core area of technology governance and technology ethics. To further enhance its global competitiveness in AI, China must cultivate technological and industrial hard power. It must also build soft power, especially in AI policy and governance, because AI governance has become an important component of global AI competitiveness.

Looking back, China has clearly defined its approach to AI regulation and governance, balancing security and innovation while emphasizing ethics. Looking ahead, we need to better mobilize the collective efforts of government, industry and enterprises, research institutions, social organizations, users and consumers, the general public, and the media to keep advancing AI governance practice. The goal is for AI to better serve economic and social development, improve people's well-being, and raise the practice of technology for good to a higher level.

Moreover, the past "golden decade of AI" has produced increasingly powerful AI models, and AI will only grow more powerful in the foreseeable future. As AI interacts with the broader social ecosystem, its impact on individuals, society, and the environment may therefore far exceed that of any previous technology. The primary task of AI governance is to continuously advocate and practice "responsible AI." Responsible AI must be human-centered. It requires good intentions in the design, development, and deployment of AI, trust in the technology, value creation and greater well-being for stakeholders, and the prevention of misuse, abuse, and malicious use. In short, effective AI governance must drive the in-depth practice of responsible AI and build trustworthy AI.

Continuously improving the legal framework for data and AI, and promoting precise and agile regulation

The healthy and sustainable development of AI depends not only on reasonable and effective regulation but also on steadily improving the supply and circulation of data in the AI field. In fact, one of the main obstacles to developing and applying AI in fields such as healthcare, manufacturing, and finance is data acquisition and use: data is hard to obtain, data quality is poor, and data standards are not uniform. The further development of AI therefore depends heavily on continuously improving the legal framework for data and AI. The UK's "Plan for Digital Regulation" states that well-designed regulation can powerfully drive growth and shape a thriving digital economy and society, whereas poorly designed or overly restrictive regulation can stifle innovation. The right rules help people trust the products and services they use, which in turn encourages wider adoption and further consumption, investment, and innovation. The same applies to AI.


On the one hand, AI governance must move toward precise and agile regulation. This involves three things. First, different rules should apply to different AI products, services, and applications. Because AI applications are almost ubiquitous, one-size-fits-all rules cannot reasonably accommodate the distinct characteristics of AI applications across industries and fields, or their differing impacts on individuals and society. This calls for a risk-based AI governance policy framework. For example, the same AI technology can analyze and predict users' personal attributes and preferences for personalized content delivery, and it can also assist in diagnosing and treating disease. Likewise, computer vision can automatically classify and organize photos in a smartphone album, and it can also identify cancerous tumors. These applications differ significantly in risk level, in the importance of their outcomes, and in their impact on individuals and society. Generalized, one-size-fits-all regulatory requirements would be neither scientific nor practical. Second, regulation should be decentralized and differentiated according to the classification of AI applications. Like the internet, AI is a broad, general-purpose technology, so uniform regulation is neither suitable nor feasible. Looking abroad, only the EU is currently attempting to establish a unified, horizontal regulatory framework for all AI applications (the EU model). The UK and the US, by contrast, emphasize decentralized, sector-specific regulation based on application contexts and risks (the UK-US model).
The UK has proposed a pro-innovation, principles-based approach to regulating AI applications. Its core ideas include focusing on specific application contexts and calibrating regulation to the level of risk so that it remains proportionate and adaptable. [18] The US has consistently emphasized a governance path that combines sectoral regulation with industry self-regulation. This is seen in the "Blueprint for an AI Bill of Rights," which sets out five principles and explicitly outlines a decentralized approach led by sectoral regulators and guided by application contexts. [19] China's past legislative and regulatory measures for AI are closer to the UK-US model, with separate rules issued for different AI applications such as algorithmic recommendation services, deep synthesis, and autonomous driving. This decentralized, differentiated model is consistent with the principles of agile governance and adapts better to the rapid development and iteration of AI technology and its applications. Third, the regulatory toolbox must keep innovating and draw on a diverse range of regulatory measures. One such measure is the AI "regulatory sandbox." A major EU innovation in AI regulation is its proposal for AI regulatory sandboxes. [20] A regulatory sandbox is an effective way to support communication and collaboration between regulators and innovators. It provides a controlled environment in which innovative AI applications can be researched, tested, and validated in compliance with the rules. The best practices and implementation guidance that emerge from sandboxes will help companies, especially small and medium-sized enterprises and startups, comply with regulatory rules. Another measure is third-party ("socialized") AI governance services, including AI governance standards, certification, testing, evaluation, and auditing.
In cybersecurity, third-party services such as security certification, testing, and risk assessment are an important means of implementing regulatory requirements, and AI governance can draw fully on this approach. The EU, the UK, the US, and IEEE are all currently advancing third-party AI governance services. For example, IEEE has launched an AI ethics certification program for industry. [21] The UK has published a roadmap for an effective AI assurance ecosystem, aiming to cultivate a world-leading AI assurance industry. In this industry, neutral third parties would evaluate and communicate the trustworthiness and compliance of AI systems through services such as impact assessments, bias audits, compliance audits, certification, conformity assessments, and performance testing. The UK expects this to become a new industry worth billions of pounds. [22] China needs to accelerate the development of a sound system of third-party AI governance services so that downstream governance services can better implement upstream legislative and regulatory requirements. In addition, regulatory tools such as policy guidance, liability safe harbors, pilot programs, demonstration applications, and ex post accountability can play important roles in different application scenarios.

On the other hand, the legal framework for data circulation and use must be improved more quickly to keep raising the level of data supply for AI. First, at the national level, a legal framework for opening and sharing public data should be established, with unified data quality and data governance standards. This framework should maximize the open sharing of public data through diverse managerial and technical means such as data downloads, API access, privacy computing (federated learning, secure multi-party computation, trusted execution environments), and data spaces. Second, efforts should be made to remove the obstacles facing the development and application of privacy computing and synthetic data technologies. Privacy computing technologies such as federated learning are playing an increasingly important role in the circulation and use of all kinds of data. They make data "usable/computable but not visible" and let "the algorithm or value move while the data stays put." Driven by the growth of AI and big data applications, federated learning has become the main way privacy computing is promoted and applied, and it is currently the most mature technical path. As a next step, federated learning and other privacy computing technologies can be better supported by building strategic data spaces, formulating industry standards for privacy computing, and issuing legal guidance on applying privacy computing technologies.

AI ethical governance needs to move from principles to practice

As technology ethics governance becomes institutionalized and codified in law, strengthening it has become a required task for technology companies and a question they must answer. Under the principle of "ethical priority," technology companies cannot rely on after-the-fact remedies to address AI ethics issues. Instead, they must actively fulfill their ethics-management responsibilities throughout the entire AI lifecycle, from design through development and deployment, and find innovative ways to practice ethical self-regulation. Overall, in a highly technological and digital society, technology companies should consider making AI governance a module of corporate governance as important as the legal, financial, and risk-control modules. The following aspects merit further exploration:

First, in management, companies need to establish an AI ethics risk management mechanism. AI ethics risk management should cover the entire AI lifecycle, including the pre-design stage, the design and development stage, the deployment stage, and the testing and evaluation activities that span these stages. This allows AI ethics risks to be comprehensively identified, analyzed, evaluated, managed, and governed. It depends on companies establishing appropriate organizational structures and management norms, such as ethics committees, ethics review processes, and other management tools. Policymakers may consider issuing policy guidance in this regard.

Second, in design, companies need to follow a human-centered design philosophy and put "Ethics by Design" into practice. Ethics by Design means integrating ethical values, principles, requirements, and procedures into the design, development, and deployment of AI, robotics, and big data systems. Companies need to rethink AI development and application processes dominated by technical staff and ensure that people from diverse backgrounds take part in AI development and application. Bringing people from policy, law, ethics, sociology, philosophy, and other disciplines into technology development teams is the most direct and effective way to embed ethical requirements in technology design. In the future, policymakers can work with industry to distill lessons learned and issue technical guidelines and best practices for Ethics by Design.

Finally, in technology, companies need to keep exploring technical and market-based approaches to address AI ethics issues and build trustworthy AI applications. The first approach is AI ethics tools. Many issues in AI applications, such as interpretability, fairness, security, and privacy protection, can be addressed through technological innovation. Industry has already developed some AI ethics tools and AI auditing tools for these issues, and this work needs to go further. The second approach is AI ethics as a service. [23] In this market-based approach, leading companies and startups in AI ethics build responsible AI solutions around AI ethics tools and services and offer them commercially. [24] The research and advisory firm Forrester predicts that a growing number of AI technology companies, as well as companies in other sectors that use AI, will buy responsible AI solutions to help them put fairness and transparency principles into practice. It also predicts that the market for AI ethics services will double. The third approach is AI ethics bounties and other crowdsourcing methods. Much like the bug bounties widely used by internet companies, crowdsourced methods such as algorithmic bias bounties will play an important role in discovering, identifying, and resolving AI ethics issues, developing AI auditing tools, and building trustworthy AI applications. Some overseas companies and research institutions, such as Twitter and Stanford University, have launched algorithmic bias bounty challenges and AI audit challenges, inviting participants to develop tools that identify and mitigate bias in AI models. Growing the AI ethics community through such crowdsourcing will play an important role in AI governance.

Global AI governance cooperation needs to continue to deepen

As noted above, global AI governance is currently marked by competition, which runs counter to the principles of human-centeredness, open and shared technology, and inclusive development. Countries need to keep deepening cooperation on international AI governance. This means promoting international agreements on AI ethics and governance and building an international AI governance framework and standards with broad consensus. It also means strengthening exchanges and cooperation on technology, talent, and industry, opposing exclusionary practices, and promoting inclusive technology and technology for good, so that all countries can share in the benefits of development. To participate deeply in global technology governance, China needs to seize the opportunity presented by the new round of international rule-making in AI and data. It should keep proposing AI governance solutions consistent with global consensus and continue contributing Chinese wisdom to global digital and AI governance. At the same time, we need to strengthen our international voice, bring our ideas, proposals, and practices to the international community, seek broader recognition and consensus, and enhance our discourse power and influence.

Looking further ahead, AI will grow ever more powerful as the technology continues to develop, and artificial general intelligence (AGI) may eventually emerge. AGI would have intelligence on par with that of humans and would be able to perform any intellectual task a human can. In theory, AGI might design even more capable intelligence, triggering an intelligence explosion (the so-called "singularity") and giving rise to superintelligence. The move from today's AI applications to future AGI may be a leap from quantitative to qualitative change. This has raised concerns about AGI replacing human work, about loss of control, and even about threats to human survival. The world therefore needs to prepare for the development of AGI and its potential safety, ethical, and other risks, and to work together to ensure that AI develops in a more inclusive, safe, and controllable way that benefits all of humanity.

This article was first published in the "Journal of Shanghai Normal University (Philosophy and Social Sciences Edition)" in the fourth issue of 2023.
