Author: 0xjacobzhao

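In our June research report, "The Holy Grail of Crypto AI: Frontier Exploration of Decentralized Training", we discussed federated learning. It is a "controlled decentralization" approach that sits between distributed training and decentralized training. Data stays local while parameters are aggregated centrally, which meets the privacy and compliance requirements of sectors such as healthcare and finance. Across several earlier reports we have also tracked the rise of agent networks. Their value lies in having multiple autonomous agents divide up and jointly complete complex tasks, which moves the field from "large models" toward "multi-agent ecosystems".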

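Federated learning laid the groundwork for multi-party collaboration by keeping data local and rewarding participants according to their contributions. Its distributed design, transparent incentives, privacy guarantees and compliance record offer lessons that carry over directly to agent networks. The FedML team followed exactly this path. It turned its open-source foundation into TensorOpera (an AI industry infrastructure layer) and then evolved that into ChainOpera (a decentralized agent network). That said, an agent network is not an inevitable extension of federated learning. Its core is autonomous collaboration and division of labor among multiple agents, and it can also be built directly on multi-agent systems (MAS), reinforcement learning (RL) or blockchain incentive mechanisms.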

I. Federated Learning and the AI Agent Tech Stack

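Federated Learning (FL) is a framework for training models collaboratively without centralizing data. Each participant trains the model locally and uploads only parameters or gradients to a coordinator, which aggregates them. Data never leaves its domain, which satisfies privacy requirements. FL has been deployed in typical scenarios such as healthcare, finance and mobile devices, and it has reached a fairly mature commercial stage. It still faces bottlenecks, however: high communication overhead, incomplete privacy protection, and slow convergence caused by device heterogeneity.

Among training paradigms, distributed training concentrates compute to maximize efficiency and scale, and decentralized training achieves fully distributed collaboration over an open compute network. Federated learning sits between the two as a form of "controlled decentralization". It meets industry's privacy and compliance needs while offering a practical path to cross-institution collaboration, which makes it well suited as a transitional deployment architecture for industry.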
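The sketch below gives a rough illustration of the "train locally, aggregate centrally" loop described above. It implements FedAvg (federated averaging), the textbook FL aggregation rule, which weights each client's parameters by that client's sample count. This report does not say which aggregation rule FedML or ChainOpera uses, so treat the code as a generic example under simplifying assumptions. Models are plain lists of floats, and `local_update` is a hypothetical one-step gradient update that stands in for real on-device training.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ClientUpdate:
    weights: List[float]  # locally trained parameters; raw data never leaves the client
    num_samples: int      # size of the client's local dataset


def local_update(weights: List[float], grads: List[float], lr: float) -> List[float]:
    """Hypothetical stand-in for local training: one gradient-descent step."""
    return [w - lr * g for w, g in zip(weights, grads)]


def fedavg(updates: List[ClientUpdate]) -> List[float]:
    """Coordinator side: average client parameters weighted by local sample count."""
    total = sum(u.num_samples for u in updates)
    aggregated = [0.0] * len(updates[0].weights)
    for u in updates:
        share = u.num_samples / total
        for i, w in enumerate(u.weights):
            aggregated[i] += share * w
    return aggregated


# One round: two clients start from the same global model and train locally.
global_model = [0.0, 0.0]
updates = [
    ClientUpdate(local_update(global_model, [1.0, -1.0], lr=0.1), num_samples=100),
    ClientUpdate(local_update(global_model, [3.0, 1.0], lr=0.1), num_samples=300),
]
global_model = fedavg(updates)  # approximately [-0.25, -0.05]
```

Only the weight vectors and sample counts cross the network. The gradients here are made-up numbers that stand in for each client's private data.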

In previous reports, we divided the AI Agent protocol stack into three main layers:

Agent Infrastructure Layer: this layer provides the lowest-level runtime support for agents and is the technical foundation of every agent system.

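Core modules: Agent Framework (the framework for developing and running agents) and Agent OS (lower-level multitask scheduling and a modular runtime). Together they provide the core capabilities for managing the agent lifecycle.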

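Supporting modules: for example, Agent DID (decentralized identity), Agent Wallet & Abstraction (account abstraction and transaction execution) and Agent Payment/Settlement (payment and settlement).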

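Coordination & Execution Layer: this layer covers collaboration among agents, task scheduling and system incentives. It is key to building the "collective intelligence" of agent systems.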

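Agent Orchestration: a command mechanism that centrally schedules and manages the agent lifecycle, task allocation and execution flow. It suits workflow scenarios with central control.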

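Agent Swarm: a collaborative structure built on distributed cooperation among agents, with high autonomy, division of labor and elastic coordination. It suits complex tasks in dynamic environments.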

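Agent Incentive Layer: the economic incentive system of the agent network. It motivates developers, executors and validators, and it gives the agent ecosystem a sustainable source of momentum.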

Application & Distribution Layer

Distribution: Agent Launchpad, Agent Marketplace and Agent Plugin Network.

Applications: AgentFi, Agent Native DApps, Agent-as-a-Service and more.

Consumer: mainly Agent Social / Consumer Agents, aimed at lightweight consumer scenarios such as social apps.

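Meme: projects that ride the agent narrative for hype. They have no real technical implementation or deployed applications and are driven purely by marketing.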
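The toy sketch below makes the difference between Agent Orchestration and Agent Swarm more concrete. It rests on simplifying assumptions: the `Agent` type, skill-based task matching and the shared task board are all hypothetical and do not come from any framework named in this report.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set


@dataclass
class Agent:
    name: str
    skills: Set[str]
    load: int = 0  # tasks assigned so far (used only by the orchestrator)


def orchestrate(tasks: List[str], agents: List[Agent]) -> Dict[str, str]:
    """Orchestration: a central controller sees all agents and assigns each task
    to the least-loaded agent with the matching skill."""
    plan: Dict[str, str] = {}
    for task in tasks:
        capable = [a for a in agents if task in a.skills]
        if not capable:
            continue  # no agent can do it; the task stays unassigned
        chosen = min(capable, key=lambda a: a.load)
        chosen.load += 1
        plan[task] = chosen.name
    return plan


def swarm(tasks: List[str], agents: List[Agent]) -> Dict[str, str]:
    """Swarm: no controller. Agents take turns claiming, from a shared board,
    the first open task they can handle, until nobody can claim anything."""
    board = list(tasks)
    claims: Dict[str, str] = {}
    progress = True
    while progress:
        progress = False
        for agent in agents:
            task: Optional[str] = next((t for t in board if t in agent.skills), None)
            if task is not None:
                board.remove(task)
                claims[task] = agent.name
                progress = True
    return claims
```

The orchestrator can balance load because it sees every agent, but it is a single point of control. In the swarm version no component assigns work, and each agent decides for itself what to claim.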

II. The Federated Learning Benchmark FedML and the TensorOpera Full-Stack Platform

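FedML is one of the earliest open-source frameworks for federated learning and distributed training. It began with an academic team at USC and was gradually commercialized into the core product of TensorOpera AI. It gives researchers and developers tools for collaborative training on data spread across institutions and devices. In academia, FedML appears often in papers at top venues such as NeurIPS, ICML and AAAI, and it has become a common experimental platform for federated learning research. In industry, FedML has a strong reputation in privacy-sensitive fields such as healthcare, finance, edge AI and Web3 AI. It is widely regarded as the benchmark toolchain for federated learning.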

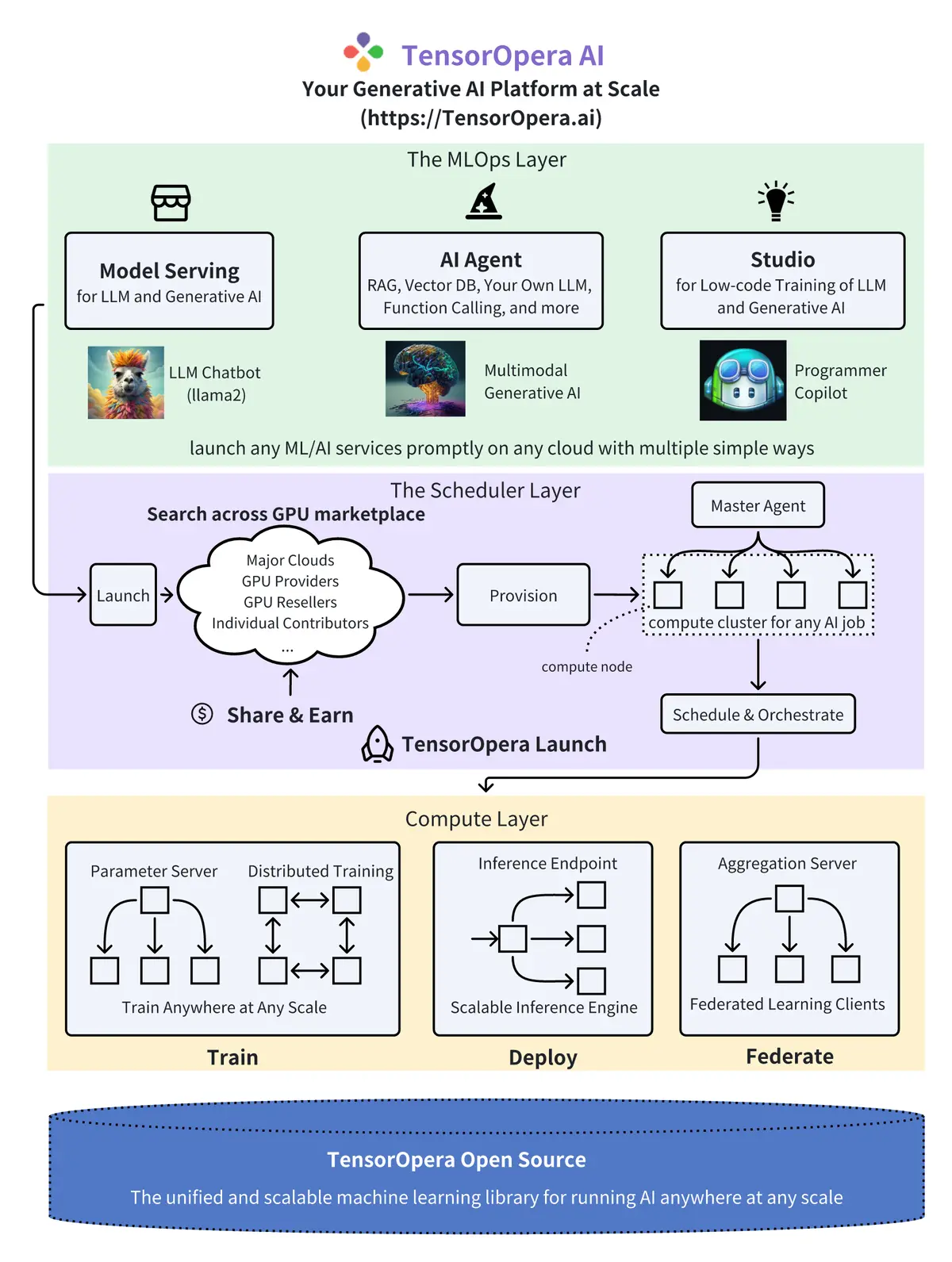

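TensorOpera is FedML's commercial evolution into a full-stack AI infrastructure platform for enterprises and developers. It keeps FedML's federated learning capabilities and adds a GPU Marketplace, model serving and MLOps, which positions it for the larger market of the large-model and agent era. TensorOpera's architecture has three layers: the Compute Layer (foundation), the Scheduler Layer (scheduling) and the MLOps Layer (application).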

1. Compute Layer (bottom)

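The Compute Layer is TensorOpera's technical foundation and carries on FedML's open-source heritage. Its core components are the Parameter Server, Distributed Training, Inference Endpoint and Aggregation Server. The layer provides distributed training, privacy-preserving federated learning and a scalable inference engine. It supports the three core capabilities of "Train / Deploy / Federate" and covers the full pipeline from model training and deployment to cross-institution collaboration. It is the base layer of the whole platform.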

2. Scheduler Layer (middle)

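The Scheduler Layer is the hub for compute trading and scheduling. It consists of the GPU Marketplace, Provision, Master Agent and Schedule & Orchestrate components, and it can draw on resources from public clouds, GPU providers and independent contributors. This layer marks the key turning point in FedML's upgrade to TensorOpera. Intelligent compute scheduling and task orchestration let it run AI training and inference at larger scale, covering typical LLM and generative AI scenarios. Its Share & Earn model also leaves a hook for incentive mechanisms, which gives it the potential to work with DePIN or Web3 models.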
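This report does not describe TensorOpera's actual scheduling algorithm. Purely to illustrate what matching jobs to heterogeneous GPU supply can look like, the sketch below uses a simple greedy, price-first heuristic. `GpuOffer`, `Job`, `schedule` and all prices are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class GpuOffer:
    provider: str              # e.g. a public cloud, a GPU vendor, or an individual contributor
    gpu_mem_gb: int            # memory per GPU
    free_gpus: int             # GPUs currently available
    price_per_gpu_hour: float  # hypothetical asking price


@dataclass
class Job:
    job_id: str
    gpus: int        # GPUs required
    min_mem_gb: int  # minimum memory per GPU


def schedule(jobs: List[Job], offers: List[GpuOffer]) -> Dict[str, str]:
    """Greedy placement: largest jobs first, each on the cheapest offer that still
    has enough free GPUs with enough memory. Jobs that fit nowhere are left out
    of the result, i.e. they stay queued."""
    placement: Dict[str, str] = {}
    for job in sorted(jobs, key=lambda j: j.gpus, reverse=True):
        fits = [o for o in offers
                if o.free_gpus >= job.gpus and o.gpu_mem_gb >= job.min_mem_gb]
        if not fits:
            continue
        best = min(fits, key=lambda o: o.price_per_gpu_hour)
        best.free_gpus -= job.gpus  # reserve capacity on the chosen offer
        placement[job.job_id] = best.provider
    return placement


offers = [GpuOffer("cloud", 80, 8, 4.0), GpuOffer("community", 24, 4, 0.8), GpuOffer("indie", 80, 2, 2.5)]
jobs = [Job("embed", 2, 16), Job("llm-finetune", 8, 80), Job("small-infer", 1, 24), Job("big-infer", 4, 80)]
placement = schedule(jobs, offers)
```

A production scheduler could also weigh network locality, reliability and preemption. The greedy rule only shows how offers from clouds, GPU providers and independent contributors can sit in one pool.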

3. MLOps Layer (top)

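The MLOps Layer is the platform's service interface for developers and enterprises. It includes modules such as Model Serving, AI Agent and Studio, and typical applications include LLM chatbots, multimodal generative AI and developer copilot tools. The layer abstracts the underlying compute and training capabilities into high-level APIs and products, which lowers the barrier to entry. It offers ready-to-use agents, a low-code development environment and scalable deployment. It competes with next-generation AI infrastructure platforms such as Anyscale, Together and Modal, and it serves as the bridge from infrastructure to applications.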

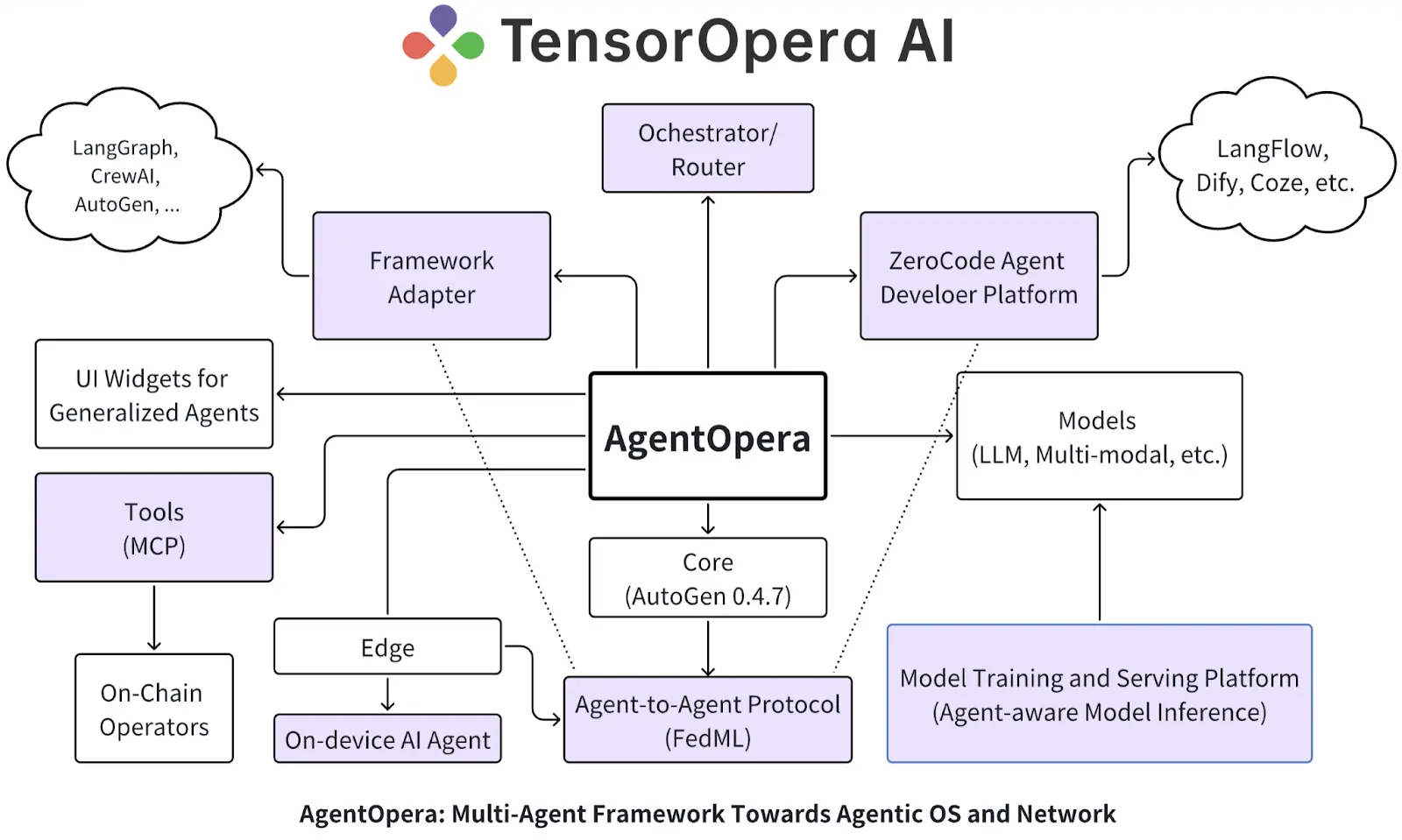

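In March 2025, TensorOpera became a full-stack platform for AI agents, with core products spanning the AgentOpera AI App, Framework and Platform. The application layer provides a ChatGPT-like multi-agent entry point. The framework layer evolves into an "Agentic OS" built on a graph-structured multi-agent system and an Orchestrator/Router. The platform layer is deeply integrated with the TensorOpera model platform and FedML, which enables distributed model serving, RAG optimization and hybrid edge-cloud deployment. The overall goal is "one operating system, one agent network", in which developers, enterprises and users build a new Agentic AI ecosystem together in an open, privacy-preserving environment.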
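This report does not document AgentOpera's framework API. The sketch below is a hedged illustration of what a graph-structured multi-agent system with an Orchestrator/Router means. Its names (`AgentGraph`, `route`, `run`) are hypothetical, and it routes requests by simple keyword matching. A production router might instead use a classifier or an LLM to pick the entry agent, and the agents here are plain string functions rather than model calls.

```python
from typing import Callable, Dict, List, Optional, Tuple

AgentFn = Callable[[str], str]  # hypothetical: an agent maps an input message to an output message


class AgentGraph:
    """Toy graph-structured multi-agent system: nodes are agents, edges say which
    agent receives the output next, and a router picks the entry node."""

    def __init__(self) -> None:
        self.agents: Dict[str, AgentFn] = {}
        self.next_hop: Dict[str, Optional[str]] = {}
        self.routes: Dict[str, str] = {}  # keyword -> entry agent

    def add_agent(self, name: str, fn: AgentFn, next_agent: Optional[str] = None) -> None:
        self.agents[name] = fn
        self.next_hop[name] = next_agent

    def add_route(self, keyword: str, entry: str) -> None:
        self.routes[keyword] = entry

    def route(self, request: str) -> str:
        """Router: the first registered keyword found in the request picks the entry
        agent; otherwise fall back to an agent that must be named 'general'."""
        text = request.lower()
        for keyword, entry in self.routes.items():
            if keyword in text:
                return entry
        return "general"

    def run(self, request: str, max_hops: int = 10) -> Tuple[List[str], str]:
        """Orchestrator: walk the graph from the routed entry node, feeding each
        agent's output to the next; max_hops guards against cycles."""
        trace: List[str] = []
        message = request
        node: Optional[str] = self.route(request)
        while node is not None and len(trace) < max_hops:
            message = self.agents[node](message)
            trace.append(node)
            node = self.next_hop[node]
        return trace, message


g = AgentGraph()
g.add_agent("trader", lambda m: m + " | quoted", next_agent="risk")
g.add_agent("risk", lambda m: m + " | checked")
g.add_agent("general", lambda m: m + " | answered")
g.add_route("swap", "trader")
trace, output = g.run("Swap 1 BNB to USDT")
```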

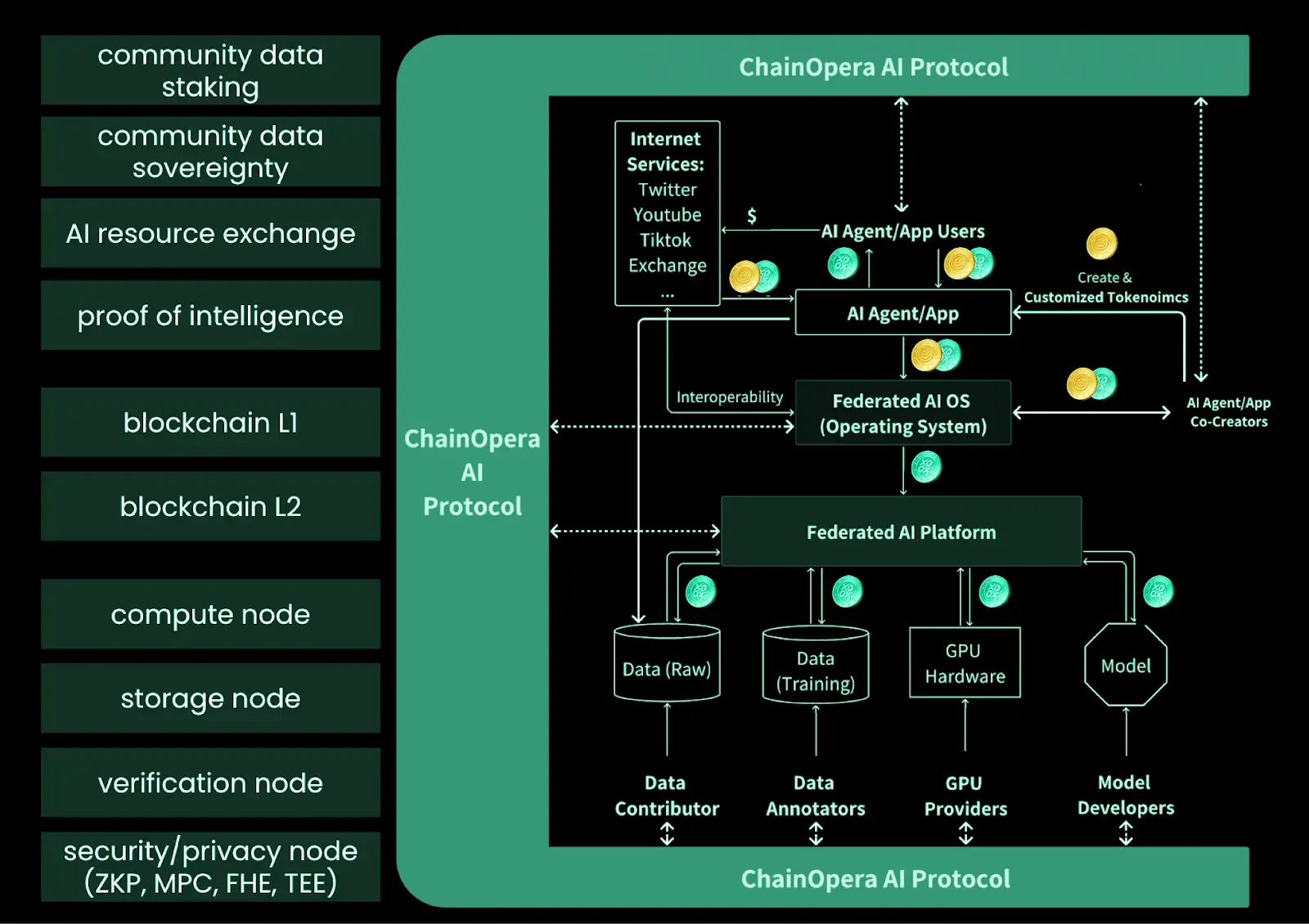

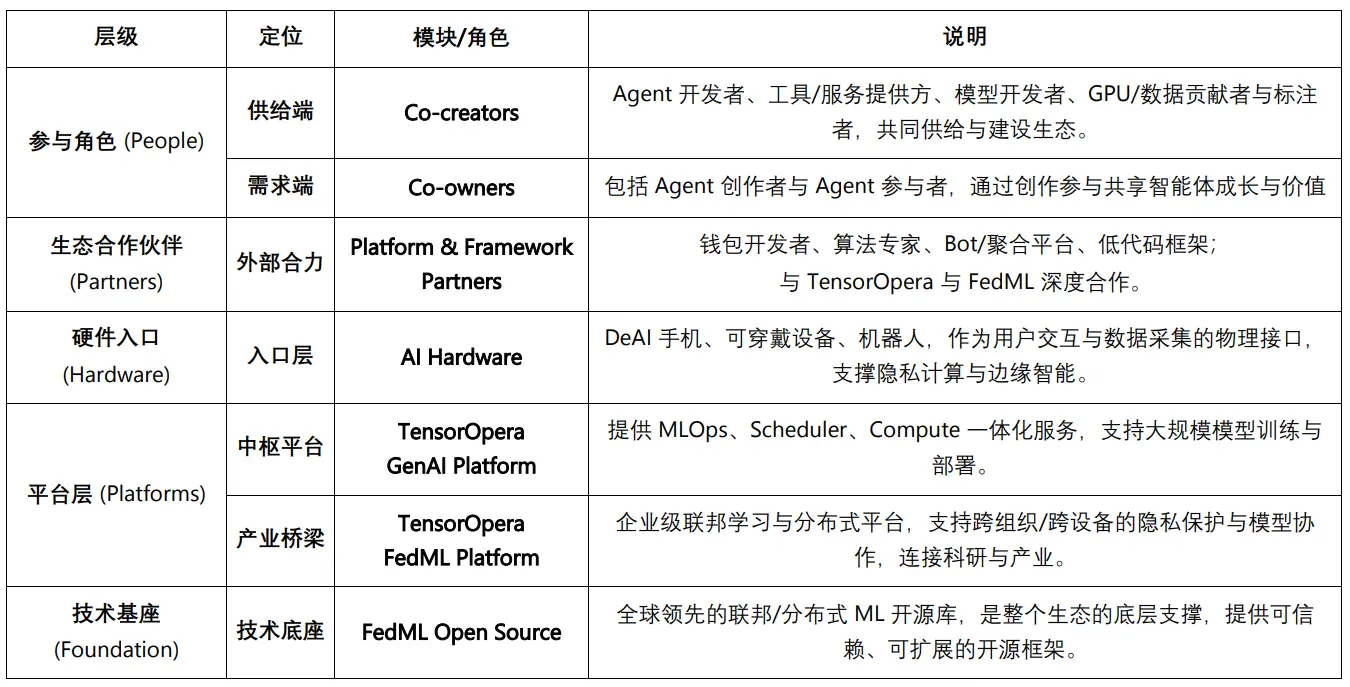

III. The ChainOpera AI Ecosystem: From Co-creators and Co-owners to the Technology Foundation

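FedML is the technical core, supplying the open-source foundation of federated learning and distributed training. TensorOpera abstracts FedML's research into commercially usable full-stack AI infrastructure. ChainOpera then brings TensorOpera's platform capabilities "on-chain". It combines an AI Terminal, an Agent Social Network, a DePIN model and compute layer, and an AI-native blockchain into a decentralized agent network ecosystem. The key shift is in who the platform serves. TensorOpera still mainly serves enterprises and developers. ChainOpera uses Web3-style governance and incentives to bring users, developers and GPU/data providers into joint building and governance, so that AI agents are not merely "used" but "co-created and co-owned".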

Co-creators

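ChainOpera AI provides the toolchain, infrastructure and coordination layer for ecosystem co-creation through its Model & GPU Platform and Agent Platform. Together they support model training, agent development and deployment, and collaborative scaling.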

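Co-creators in the ChainOpera ecosystem include:
- AI agent developers, who design and operate agents.
- Tool and service providers, who supply templates, MCP, databases and APIs.
- Model developers, who train models and publish model cards.
- GPU providers, who contribute compute via DePIN and Web2 cloud partners.
- Data contributors and labelers, who upload and annotate multimodal data.

These three core types of supply (development, compute and data) together drive the continued growth of the agent network.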

Co-owners

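The ChainOpera ecosystem also has a co-owner mechanism, through which participants build the network together. AI agent creators are individuals or teams who design and deploy new agents on the Agent Platform. They build, launch and continuously maintain those agents, which drives innovation in features and applications. AI agent participants come from the community. By acquiring and holding Access Units, they take part in an agent's lifecycle and support its growth and activity as they use and promote it. The two roles represent the supply side and the demand side respectively, and together they form a model of shared value and collaborative development within the ecosystem.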

Ecosystem Partners: Platforms and Frameworks

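ChainOpera AI works with many partners to make the platform more usable and secure, with an emphasis on Web3 integration:
- The AI Terminal App works with wallets, algorithm providers and aggregation platforms to recommend intelligent services.
- The Agent Platform brings in a variety of frameworks and no-code tools to lower the development barrier.
- Model training and inference rely on TensorOpera AI.
- An exclusive partnership with FedML supports privacy-preserving training across institutions and devices.

Together, these form an open ecosystem that serves both enterprise-grade applications and Web3 users.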

Hardware Entry Points: AI Hardware & Partners

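Through partners in DeAI phones, wearables and robot AI, ChainOpera brings blockchain and AI into smart devices. This enables dApp interaction, on-device training and privacy protection, and it is gradually building a decentralized AI hardware ecosystem.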

Central Platform and Technology Foundation: TensorOpera GenAI & FedML

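TensorOpera provides a full-stack GenAI platform spanning MLOps, Scheduler and Compute. Its sub-platform FedML has grown from an academic open-source project into an industrial framework, and it strengthens the platform's ability to let AI "run anywhere and scale to any size".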

ChainOpera AI ecosystem

IV. ChainOpera's Core Products and Full-Stack AI Agent Infrastructure

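In June 2025, ChainOpera officially launched the AI Terminal App and its decentralized tech stack, positioning itself as a "decentralized OpenAI". Its core products comprise four modules:
- the application layer (AI Terminal & Agent Network);
- the developer layer (Agent Creator Center);
- the model and GPU layer (Model & Compute Network);
- the CoAI protocol and dedicated chain.

Together they form a closed loop from the user entry point down to compute and on-chain incentives.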

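The AI Terminal App is integrated with BNB Chain and supports agents for on-chain transactions and DeFi. The Agent Creator Center is open to developers and provides MCP/HUB, knowledge bases, RAG and other capabilities, and community agents continue to join. ChainOpera has also launched the CO-AI Alliance with partners such as io.net, Render, TensorOpera, FedML and MindNetwork.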

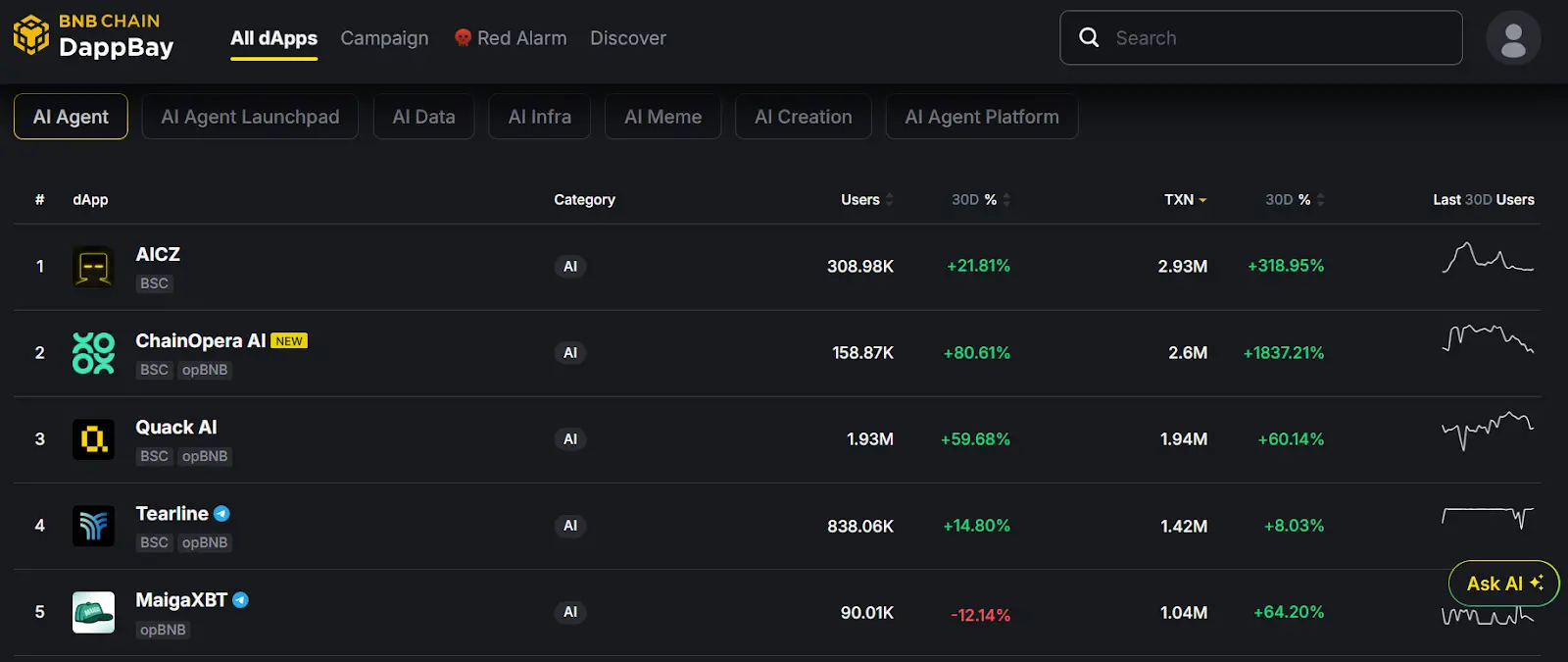

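According to BNB DApp Bay's on-chain data for the past 30 days, the app had 158.87K unique users and 2.6 million transactions in that period. It ranked second in the BSC "AI Agent" category, which indicates strong on-chain activity.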

Super AI Agent App – AI Terminal (https://chat.chainopera.ai/)

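AI Terminal serves as a decentralized ChatGPT and an entry point to AI social features. It offers multimodal collaboration, incentives for data contribution, integrated DeFi tools and a cross-platform assistant, and it supports AI agent collaboration and privacy protection ("Your Data, Your Agent"). On mobile, users can call the open-source model DeepSeek-R1 and community agents directly. During these interactions, both language tokens and crypto tokens flow transparently on-chain. The aim is to turn users from "content consumers" into "intelligence co-creators" who can use their own agent networks in scenarios such as DeFi, RWA, PayFi and e-commerce.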

AI Agent Social Network (https://chat.chainopera.ai/agent-social-network)

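Positioned like LinkedIn plus Messenger, but for AI agents. It uses virtual workspaces and agent-to-agent collaboration mechanisms (MetaGPT, ChatDev, AutoGen, CAMEL) to evolve single agents into multi-agent collaboration networks. These cover finance, gaming, e-commerce, research and other applications, and their memory and autonomy are being strengthened step by step.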

AI Agent Developer Platform (https://agent.chainopera.ai/)

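Offers developers a "Lego-style" building experience. It supports no-code creation and modular extension. Blockchain contracts secure ownership, DePIN plus cloud infrastructure lowers the barrier to entry, and the Marketplace provides distribution and discovery. The core idea is to let developers reach users quickly, while their contributions to the ecosystem are recorded transparently and rewarded.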

AI Model & GPU Platform (https://platform.chainopera.ai/)

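As the infrastructure layer, this platform combines DePIN with federated learning to reduce Web3 AI's dependence on centralized compute. It supports multi-agent collaboration and personalized AI through distributed GPUs, privacy-preserving training on data, model and data marketplaces, and end-to-end MLOps. Its vision is an infrastructure paradigm shift from "big-tech monopoly" to "community co-building".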

V. ChainOpera AI Roadmap

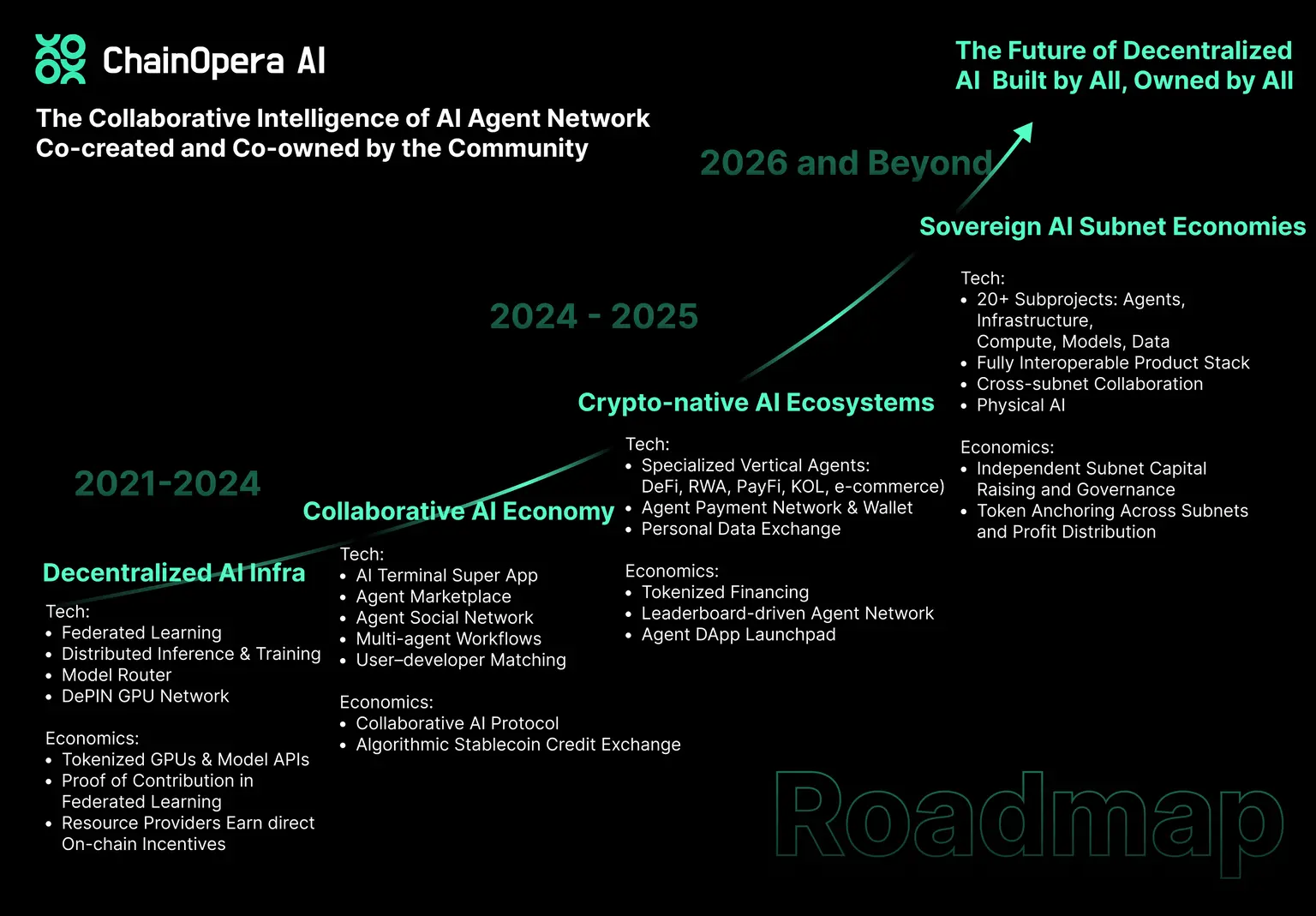

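Beyond the full-stack AI agent platform it has already launched, ChainOpera AI believes that artificial general intelligence (AGI) will emerge from multimodal, multi-agent collaboration networks. Its long-term roadmap therefore has four phases: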

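Phase 1 (Compute → Capital): build decentralized infrastructure, including a GPU DePIN network and federated learning and distributed training/inference platforms. Introduce a Model Router to coordinate inference across endpoints. Use incentive mechanisms so that compute, model and data providers earn revenue allocated according to usage.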

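Phase 2 (Agentic Apps → Collaborative AI Economy): launch AI Terminal, Agent Marketplace and Agent Social Network to form a multi-agent application ecosystem. Connect users, developers and resource providers through the CoAI protocol. Introduce a system that matches user demand with developers, plus a credit system, to drive frequent interaction and sustained economic activity.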

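Phase 3 (Collaborative AI → Crypto-Native AI): deploy in DeFi, RWA, payments, e-commerce and other fields, and expand into KOL scenarios and personal data exchange. Develop specialized LLMs for finance and crypto, and launch agent-to-agent payment and wallet systems that bring "Crypto AGI" into concrete use cases.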

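Phase 4 (Ecosystems → Autonomous AI Economies): gradually evolve into autonomous subnet economies. Each subnet is independently governed and tokenized around applications, infrastructure, compute, models or data, and subnets collaborate through cross-subnet protocols to form a multi-subnet ecosystem. At the same time, move from Agentic AI toward Physical AI (robotics, autonomous driving, aerospace).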

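Disclaimer: this roadmap is for reference only. Timelines and features may be adjusted as market conditions change, and the roadmap is not a commitment to deliver.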

VII. Token Incentives and Protocol Governance

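ChainOpera has not yet published a complete token incentive plan. Its CoAI protocol, however, centers on "co-creation and co-ownership". It uses the blockchain and a Proof-of-Intelligence mechanism to keep transparent, verifiable records of contributions. The inputs of developers and of compute, data and service providers are measured in a standardized way and rewarded. Users consume services, resource providers keep the system running and developers build applications, and all participants share in the growth. The platform sustains this loop through a 1% service fee, reward distribution and liquidity support, with the aim of building an open, fair and collaborative decentralized AI ecosystem.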
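The report states the 1% service fee and, in the roadmap, says that providers are paid according to usage. It does not say how the remainder is divided. The sketch below shows what settlement could look like under the assumption of a plain pro-rata split by metered usage units. `settle`, the usage units, and the rule that sends integer rounding dust to the protocol are all hypothetical. The code uses integer arithmetic because on-chain token amounts are integers in the token's smallest unit.

```python
from typing import Dict, Tuple

FEE_BPS = 100  # 1% platform service fee in basis points (the rate stated in the report)


def settle(payment: int, usage: Dict[str, int]) -> Tuple[int, Dict[str, int]]:
    """Hypothetical settlement: take the 1% protocol fee, then split the rest
    among providers in proportion to their metered usage units. Amounts are
    integers in the token's smallest unit; rounding dust goes to the protocol."""
    total_usage = sum(usage.values())
    if total_usage <= 0:
        raise ValueError("no metered usage to pay out")
    fee = payment * FEE_BPS // 10_000
    distributable = payment - fee
    payouts = {p: distributable * u // total_usage for p, u in usage.items()}
    dust = distributable - sum(payouts.values())
    return fee + dust, payouts


protocol_take, payouts = settle(1_000_000, {"gpu": 50, "model": 30, "data": 20})
# protocol_take == 10_000; payouts == {"gpu": 495_000, "model": 297_000, "data": 198_000}
```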

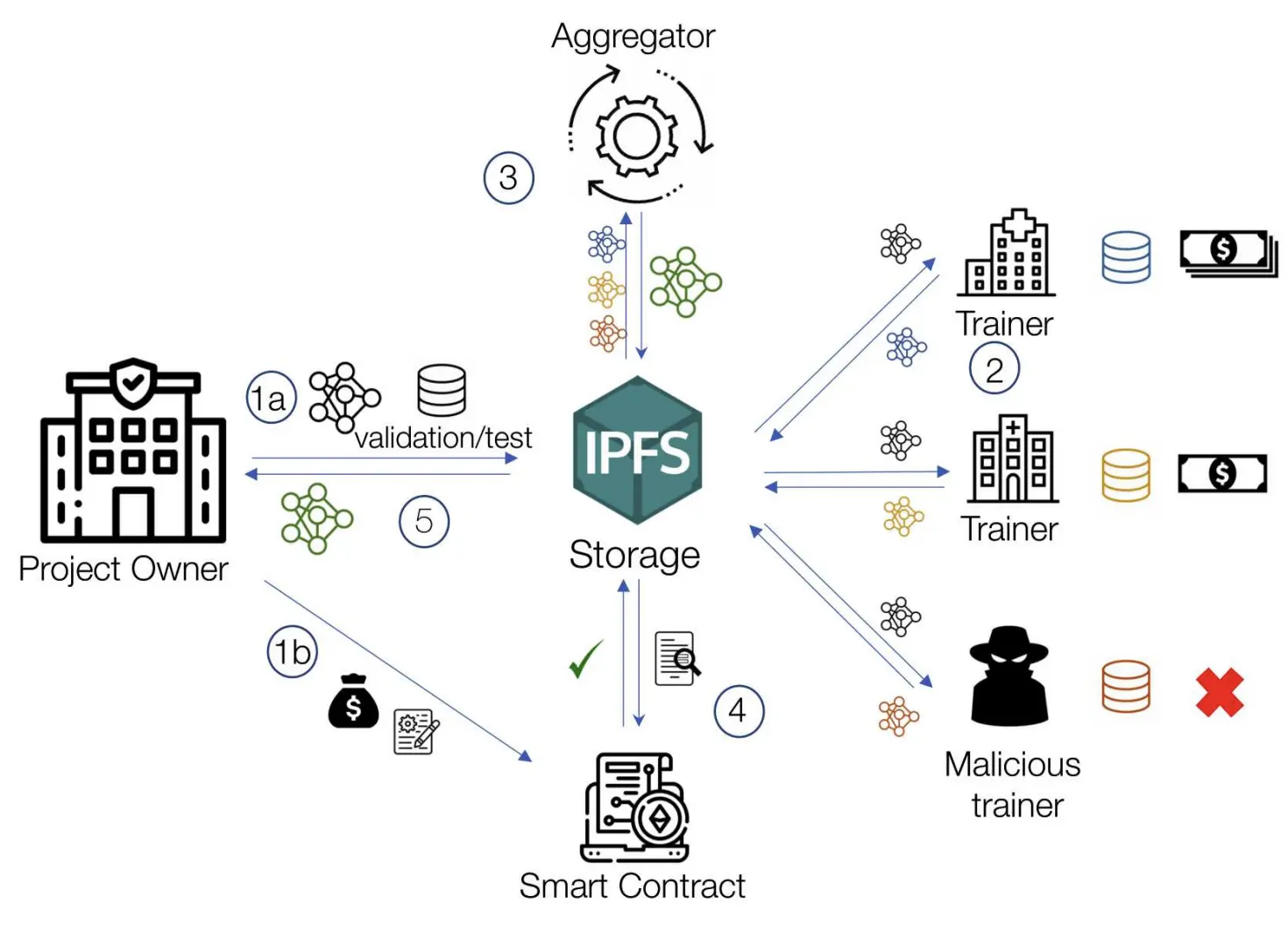

Proof-of-Intelligence Learning Framework

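Proof-of-Intelligence (PoI) is the core consensus mechanism that ChainOpera proposes under the CoAI protocol. Its purpose is to give decentralized AI development a transparent, fair and verifiable system of incentives and governance. PoI builds on a blockchain-based collaborative machine learning framework that uses Proof-of-Contribution. That framework targets three problems that federated learning (FL) faces in practice: weak incentives, privacy risks and a lack of verifiability. The design is centered on smart contracts and combines decentralized storage (IPFS), aggregation nodes and zero-knowledge proofs (zkSNARKs). It pursues five goals:
(1) fair rewards by contribution, so that trainers are rewarded according to the actual model improvement they deliver;
(2) local data storage, so that private data is not exposed;
(3) robustness mechanisms that counter poisoning or aggregation attacks by malicious trainers;
(4) verifiability of key computations such as model aggregation, anomaly detection and contribution evaluation, through ZKPs;
(5) efficiency and generality across heterogeneous data and different learning tasks.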
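ChainOpera has not published PoI's exact scoring formula. The sketch below illustrates two of the five goals listed above: contribution-based rewards (the first goal) and robustness to poisoning (the third goal). It uses two standard stand-ins, coordinate-wise median aggregation and leave-one-out contribution scoring. IPFS storage and zkSNARK proofs are omitted. `val_loss`, which measures distance to a known target vector, is a hypothetical proxy for evaluating the aggregate on a validation set.

```python
from statistics import median
from typing import Dict, List

Vector = List[float]


def coordinate_median(updates: List[Vector]) -> Vector:
    """Robust aggregation: per-coordinate median, so a minority of poisoned
    updates cannot drag the aggregate arbitrarily far."""
    return [median(column) for column in zip(*updates)]


def val_loss(model: Vector, target: Vector) -> float:
    """Hypothetical validation proxy: squared distance to a held-out 'ideal' model."""
    return sum((m - t) ** 2 for m, t in zip(model, target))


def contribution_rewards(updates: Dict[str, Vector], target: Vector, pool: float) -> Dict[str, float]:
    """Leave-one-out contribution: how much worse the aggregate gets without each
    trainer. Only positive contributions share the reward pool."""
    base = val_loss(coordinate_median(list(updates.values())), target)
    scores: Dict[str, float] = {}
    for name in updates:
        others = [v for k, v in updates.items() if k != name]
        scores[name] = max(0.0, val_loss(coordinate_median(others), target) - base)
    total = sum(scores.values())
    if total == 0:
        return {name: 0.0 for name in updates}
    return {name: pool * s / total for name, s in scores.items()}


rewards = contribution_rewards(
    {"a": [1.0], "b": [1.2], "attacker": [100.0]}, target=[1.0], pool=100.0
)
```

In the usage example, the attacker's absurd update barely moves the median. Removing the attacker therefore does not make the model worse, so it earns nothing. Removing either honest trainer does hurt the aggregate, so each honest trainer earns a share of the pool. Leave-one-out needs one extra aggregation per trainer, which becomes expensive as the number of trainers grows.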

Token Value Across the Full AI Stack

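ChainOpera's token mechanism runs on five value flows: LaunchPad, Agent API, Model Serving, Contribution and Model Training. Its core functions are service fees, contribution recognition and resource allocation, not speculative returns.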

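AI users: use tokens to access services or subscribe to applications, and contribute to the ecosystem by providing, labeling or staking data.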

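Agent/application developers: build with the platform's compute and data, and receive protocol recognition for the agents, applications or datasets they contribute.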

Resource providers: contribute compute, data or models, and receive transparent records and rewards.

Governance participants (community & DAO): use tokens to vote, shape mechanism design and coordinate the ecosystem.

Protocol layer (COAI): sustains itself through service fees and uses automated distribution to balance supply and demand.

Nodes and validators: provide validation, compute and security services that keep the network reliable.

Protocol Governance

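ChainOpera uses DAO governance, in which participants stake tokens to submit proposals and vote, keeping decisions transparent and fair. The governance mechanisms are:
- a reputation system, which verifies and quantifies contributions;
- community collaboration, in which proposals and votes drive ecosystem development;
- parameter adjustment, covering data usage, security and validator accountability.

The overall aim is to avoid concentration of power and to keep the system stable and community-driven.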
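The report mentions stake-based proposals and voting but publishes no parameters. The sketch below assumes a common design: votes are weighted by staked tokens, and a proposal needs both a quorum and a simple majority, with both thresholds expressed in basis points. The 20% quorum, the 50% threshold and `tally` itself are hypothetical. The sketch does not model the reputation system mentioned above.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Vote:
    voter: str
    support: bool
    stake: int  # staked tokens behind this vote


def tally(votes: List[Vote], total_staked: int,
          quorum_bps: int = 2_000, pass_bps: int = 5_000) -> Dict[str, object]:
    """Stake-weighted tally (hypothetical parameters: 20% quorum, >50% to pass).

    Quorum compares turnout with all staked tokens; the pass threshold compares
    'yes' stake with the stake actually voted. Each voter counts once (last vote wins).
    """
    latest: Dict[str, Vote] = {}
    for v in votes:
        latest[v.voter] = v
    yes = sum(v.stake for v in latest.values() if v.support)
    no = sum(v.stake for v in latest.values() if not v.support)
    turnout = yes + no
    quorum_met = turnout * 10_000 >= total_staked * quorum_bps
    passed = quorum_met and turnout > 0 and yes * 10_000 > turnout * pass_bps
    return {"yes": yes, "no": no, "quorum_met": quorum_met, "passed": passed}


result = tally([Vote("alice", True, 150), Vote("bob", False, 60), Vote("carol", True, 40)],
               total_staked=1_000)
# yes = 190, turnout = 250 (25% of stake >= 20% quorum), 190/250 = 76% > 50%, so it passes
```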

VIII. Team Background and Fundraising

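ChainOpera was co-founded by Professor Salman Avestimehr and Dr. Chaoyang (Aiden) He, both of whom have deep expertise in federated learning. Other core team members come from leading universities such as UC Berkeley, Stanford, USC, MIT and Tsinghua University, and from technology companies such as Google, Amazon, Tencent, Meta and Apple. The team combines academic research with industry experience. The ChainOpera AI team currently has more than 40 members.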

Co-founder: Salman Avestimehr

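Professor Salman Avestimehr is a Dean's Professor of Electrical and Computer Engineering at the University of Southern California (USC). He is the founding director of the USC-Amazon Trusted AI Center and leads USC's Information Theory and Machine Learning Lab (vITAL). He is a co-founder and the CEO of FedML, and in 2022 he co-founded TensorOpera/ChainOpera AI.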

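Professor Avestimehr received his Ph.D. in EECS from UC Berkeley (Best Paper Award). He is an IEEE Fellow and has published more than 300 high-impact papers in information theory, distributed computing and federated learning, with over 30,000 citations. His international honors include the PECASE, the NSF CAREER Award and the IEEE Massey Award. He led the creation of the open-source FedML framework, which is widely used in healthcare, finance and privacy-preserving computation and has become the core technical foundation of TensorOpera/ChainOpera AI.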

Co-founder: Dr. Aiden Chaoyang He

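Dr. Aiden Chaoyang He is a co-founder and the President of TensorOpera/ChainOpera AI. He holds a Ph.D. in Computer Science from USC and is the original creator of FedML. His research covers distributed and federated learning, large-scale model training, blockchain and privacy-preserving computation. Before starting the company, he worked in R&D at Meta, Amazon, Google and Tencent. He also held core engineering and management roles at Tencent, Baidu and Huawei, where he led the launch of several internet-scale products and AI platforms.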

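On the academic and industry side, Aiden has published more than 30 papers with over 13,000 Google Scholar citations. He has received the Amazon Ph.D. Fellowship, the Qualcomm Innovation Fellowship, and best paper awards at NeurIPS and AAAI. The FedML framework he led is one of the most widely used open-source projects in federated learning and supports an average of 27 billion requests per day. As a core author, he also proposed the FedNLP framework and hybrid model-parallel training methods, which have been widely applied in decentralized AI projects such as Sahara AI.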

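In December 2024, ChainOpera AI announced a $3.5 million seed round, which brought total funding across ChainOpera and TensorOpera to $17 million. The funds will go toward building a blockchain L1 and an AI operating system for decentralized AI agents. Finality Capital, Road Capital and IDG Capital co-led the round. Camford VC, ABCDE Capital, Amber Group, Modular Capital and others participated. Well-known institutions and individual investors also backed the round, including Sparkle Ventures, Plug and Play, USC, EigenLayer founder Sreeram Kannan and BabylonChain co-founder David Tse. The team said the round will speed up its vision of "a decentralized AI ecosystem co-owned and co-created by AI resource contributors, developers and users".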

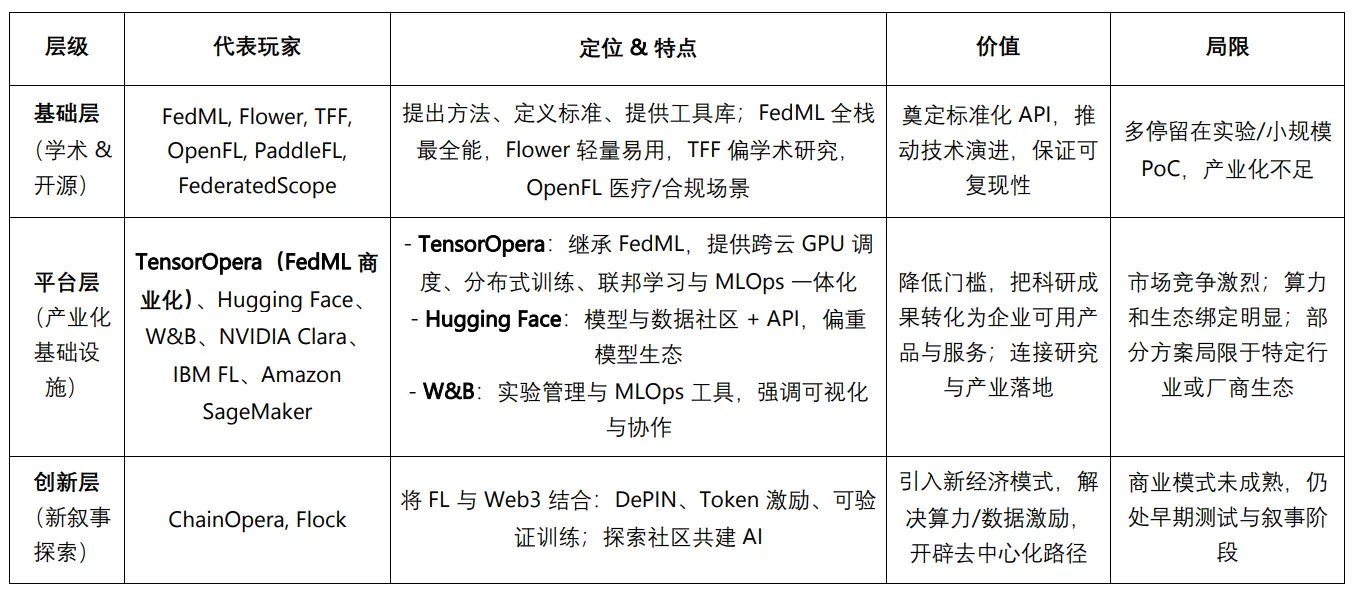

IX. Market Landscape of Federated Learning and AI Agents

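There are four representative federated learning frameworks: FedML, Flower, TFF (TensorFlow Federated) and OpenFL.
- FedML is the most full-stack. It combines federated learning, distributed large-model training and MLOps, and it suits industrial deployment.
- Flower is lightweight and easy to use, with an active community, and leans toward teaching and small-scale experiments.
- TFF depends heavily on TensorFlow. It is valuable for academic research but weak in commercialization.
- OpenFL focuses on healthcare and finance and emphasizes privacy compliance, but its ecosystem is relatively closed.

In short, FedML represents the industrial-grade, all-round path, Flower emphasizes usability and education, TFF leans toward academic experimentation, and OpenFL has an edge in compliance for vertical industries.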

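At the industrialization and infrastructure layer sits TensorOpera, the commercial version of FedML. It inherits the technical work of open-source FedML and offers cross-cloud GPU scheduling, distributed training, federated learning and MLOps in one package. Its goal is to bridge academic research and industrial applications, serving developers, small and medium-sized businesses, and the Web3/DePIN ecosystem. TensorOpera can be thought of as "Hugging Face plus W&B for open-source FedML". It is more complete and more general in full-stack distributed training and federated learning, and it differs from other platforms that center on a community, a tool or a single industry.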

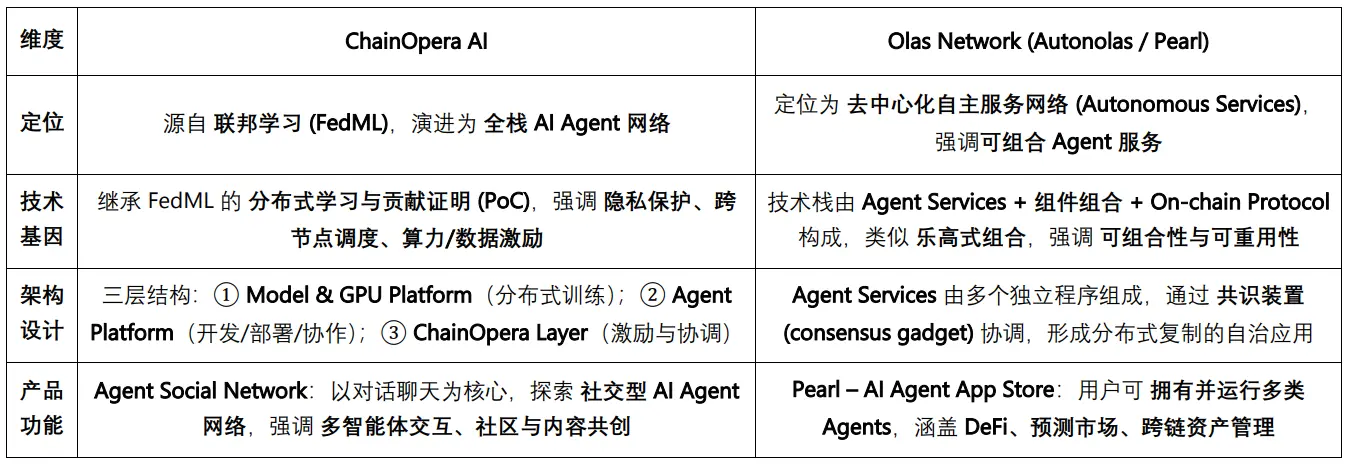

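Among innovation-layer projects, ChainOpera and Flock both combine federated learning with Web3, but they head in clearly different directions. ChainOpera is building a full-stack AI agent platform with a four-layer architecture: entry point, social, development and infrastructure. Its core value is turning users from "consumers" into "co-creators" and achieving collaborative AGI and community co-building through AI Terminal and the Agent Social Network. Flock focuses on blockchain-augmented federated learning (BAFL). It emphasizes privacy protection and incentive mechanisms in decentralized settings and targets collaborative verification at the compute and data layers. In short, ChainOpera leans toward deployment at the application and agent network layer, while Flock leans toward strengthening underlying training and privacy-preserving computation.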

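At the agent network level, the most representative project in the industry is Olas Network. ChainOpera originates from federated learning and builds a closed loop across models, compute and agents. It uses the Agent Social Network as a testbed for multi-agent interaction and social collaboration. Olas Network originates from DAO collaboration and the DeFi ecosystem and is positioned as a decentralized network of autonomous services. Through Pearl, it offers DeFi yield use cases that can be deployed directly, a path quite different from ChainOpera's.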

X. Investment Thesis and Potential Risks

Investment Thesis

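ChainOpera's first strength is its technical moat. It has followed a distinctive, continuous path of evolution:
- FedML, the benchmark open-source federated learning framework;
- TensorOpera, enterprise-grade full-stack AI infrastructure;
- ChainOpera, a Web3 agent network plus DePIN plus tokenomics.

This path combines academic depth, industrial deployment and a crypto narrative.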

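On applications and user scale, AI Terminal has built a user base in the hundreds of thousands and an ecosystem of thousands of agent applications. It ranks at the top of the AI category on BNB Chain DApp Bay, with clear on-chain user growth and real transaction volume. Its multimodal use cases, which currently cover crypto-native domains, may gradually reach a broader Web2 user base.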

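On ecosystem partnerships, ChainOpera launched the CO-AI Alliance with partners such as io.net, Render, TensorOpera, FedML and MindNetwork. The alliance builds multi-sided network effects across GPUs, models, data and privacy-preserving computation. ChainOpera has also worked with Samsung Electronics to validate multimodal GenAI on mobile devices, which shows its potential to expand into hardware and edge AI.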

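On the token and economic model, ChainOpera distributes incentives through five value flows (LaunchPad, Agent API, Model Serving, Contribution and Model Training), based on Proof-of-Intelligence consensus. It builds a positive loop through the 1% platform service fee, incentive distribution and liquidity support. This design avoids a purely speculative, "token-pumping" model and improves sustainability.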

Potential Risks

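First, the technology is hard to deliver. ChainOpera's proposed five-layer decentralized architecture spans a wide range. Coordination across layers still faces performance and stability challenges, especially for distributed large-model inference and privacy-preserving training, and the architecture has not yet been proven at large scale.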

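Second, user stickiness remains to be seen. The project has achieved early user growth, but it is still unproven whether the Agent Marketplace and developer toolchain can sustain activity and a supply of high-quality agents over the long term. The Agent Social Network now live consists mainly of LLM-driven text conversations, and its user experience and long-term retention need further improvement. If the incentive design is not carefully tuned, the result could be high short-term activity but little long-term value.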

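Finally, it is not yet clear whether the business model is sustainable. Revenue currently comes mainly from platform service fees and token circulation, and the project does not yet have stable cash flow. More financialized or productivity-oriented applications such as AgentFi or payments have clearer commercial value, and the current model still has to prove its own. The mobile and hardware ecosystem is also still in an exploratory phase, so its market prospects are uncertain.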

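Disclaimer: This article was written with the help of the AI tool ChatGPT-5. The author has made every effort to proofread it and to keep the information true and accurate, but errors and omissions may remain, and we ask for your understanding. Note in particular that in crypto asset markets, a project's fundamentals and its secondary-market price often diverge. This article is intended only to consolidate information and for academic and research exchange. It does not constitute investment advice and should not be taken as a recommendation to buy or sell any token.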

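Disclaimer: This article represents only the author's personal views and not the position or views of this platform. It is shared for information only and does not constitute investment advice to anyone. Any dispute between users and the author does not involve this platform. If any article or image on this page infringes your rights, please send proof of your rights and identity to support@aicoin.com, and the platform's staff will review it.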