What did Jensen Huang actually say at the Davos Forum?

On the surface, he was promoting robots, but in reality he was staging a bold "self-revolution." With a few words, he declared an end to the old era of "stacking GPUs" and, perhaps unintentionally, handed the Crypto track a once-in-a-lifetime ticket of entry.

Yesterday at the Davos Forum, Huang pointed out that the AI application layer is exploding and that demand for computing power will shift from the "training side" to the "inference side" and the "Physical AI" side.

This is quite interesting.

As the biggest winner of the AI 1.0 era's "computing power arms race," NVIDIA is now actively calling for a shift toward inference and Physical AI. The message is very straightforward: the era of training large models by stacking cards, where "brute force works miracles," is over. Going forward, AI competition will center on real-world application scenarios, where "application is king."

In other words, Physical AI is the second half of Generative AI.

LLMs have already read essentially all the data humanity has accumulated on the internet over decades, yet they still do not know how to twist open a bottle cap the way a human does. Physical AI aims to solve the problem of "unity of knowledge and action," which lies beyond intelligence alone.

Physical AI also cannot rely on the "long reflex arc" of remote cloud servers. The logic is simple: if ChatGPT generates text one second more slowly, you might just notice a lag, but if a bipedal robot's reaction is delayed by one second due to network latency, it could fall down the stairs.
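The latency argument can be put in rough numbers: during any delay, a moving robot keeps going "blind" for speed × latency meters. The sketch below assumes a walking speed of about 1.4 m/s and a few illustrative latency values; these figures are assumptions for illustration, not measurements cited in the article.

```python
# Illustrative only: the speed and latency values are assumptions, not measured data.

def blind_distance_m(speed_m_per_s: float, latency_s: float) -> float:
    """Distance (meters) a robot covers before a delayed command can take effect."""
    return speed_m_per_s * latency_s

# Assumed walking speed of a bipedal robot, roughly human walking pace.
WALK_SPEED = 1.4  # m/s (assumption)

for label, latency in [("on-device (assumed 10 ms)", 0.010),
                       ("cloud round trip (assumed 150 ms)", 0.150),
                       ("network stall (1 s, as in the text)", 1.0)]:
    print(f"{label}: {blind_distance_m(WALK_SPEED, latency):.3f} m")
```

Under these assumptions, even a fraction of a second of delay means tens of centimeters of uncorrected motion, which is the intuition behind keeping control loops off remote cloud servers.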

However, while Physical AI seems to be a continuation of Generative AI, it actually faces three completely different new challenges:

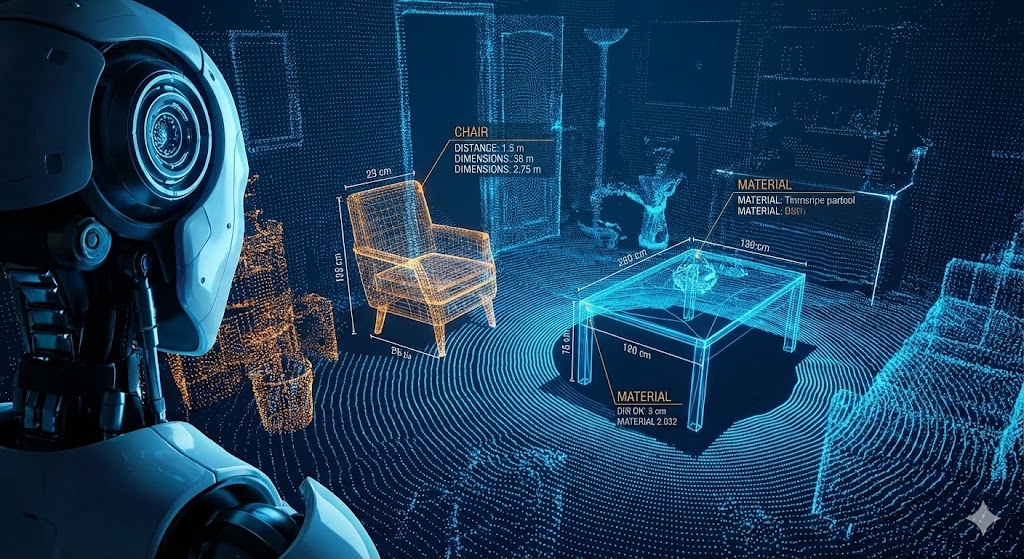

1) Spatial Intelligence: Enabling AI to understand the three-dimensional world.

Professor Fei-Fei Li has proposed that spatial intelligence is the next North Star for AI evolution. For a robot to move, it must first "understand" its environment. This is not just about recognizing "this is a chair," but about understanding "this chair's position and structure in three-dimensional space, and how much force I need to move it."

This requires massive amounts of real-time 3D environmental data covering every corner, indoors and outdoors.

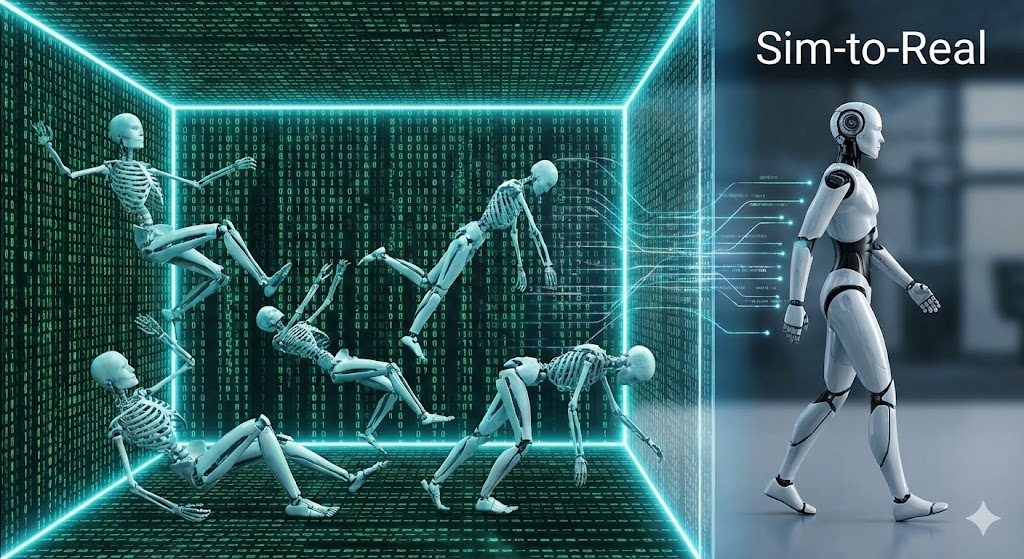

2) Virtual Training Ground: Allowing AI to train through trial and error in a simulated world.

The Omniverse that Huang mentioned is essentially a "virtual training ground." Before entering the real physical world, robots need to train in a virtual environment, "falling ten thousand times" to learn how to walk. This process is called Sim-to-Real, meaning going from simulation to reality. If robots learned by trial and error directly in the real world, the hardware wear-and-tear costs would be astronomical.

This process places exponentially growing throughput demands on physics-engine simulation and rendering power.

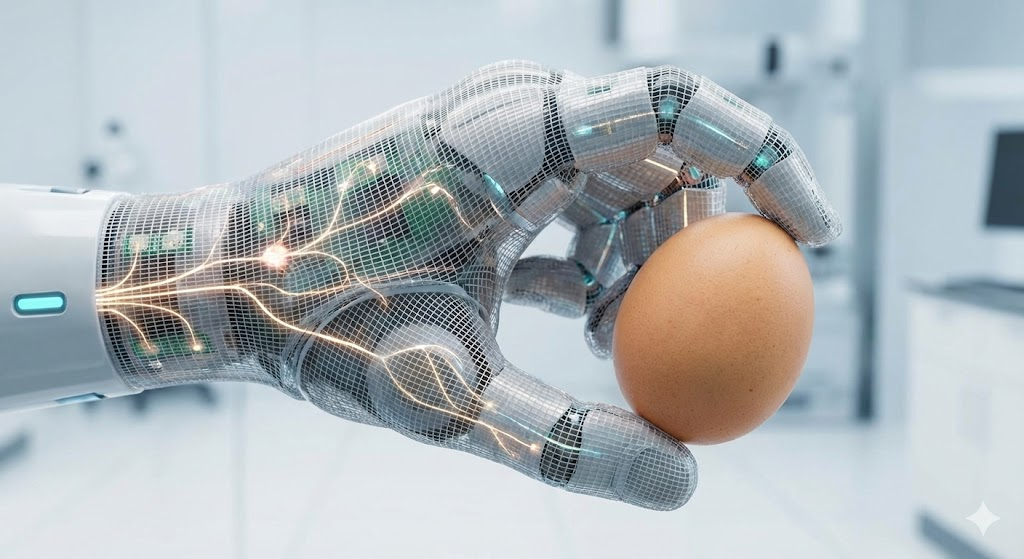

3) Electronic Skin: A treasure trove of "tactile data" waiting to be mined.

For Physical AI to have a sense of touch, it needs electronic skin that can perceive temperature, pressure, and texture. This "tactile data" is a new asset that has never been collected at scale before. Gathering it may require large-scale sensor deployment; for instance, the mass-produced "skin" that Ensuring showcased at CES integrates 1,956 sensors on a single hand, enabling a robot to perform the seemingly miraculous task of cracking eggs.

After seeing all this, you probably sense that the rise of the Physical AI narrative opens a significant opportunity for hardware such as wearables and humanoid robots, which were dismissed only a few years ago as "big toys."

In fact, I would argue that within the new Physical AI landscape, the Crypto track also has excellent opportunities to play a complementary role in the ecosystem. Here are a few examples:

AI giants can send Street View cars to scan every main street in the world, but they cannot collect data from street corners, residential interiors, and basements. Token incentives offered through DePIN (decentralized physical infrastructure network) devices can mobilize users worldwide to fill these data gaps with their personal devices.
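One way such an incentive could favor the gaps rather than the already well-mapped main streets is to scale each contribution's reward inversely with how much data already exists for that location. The sketch below is a hypothetical scheme: the cell IDs, the base reward, and the `base / (1 + existing samples)` rule are assumptions for illustration, not the design of any particular DePIN protocol.

```python
from collections import Counter

def coverage_weighted_rewards(submissions, existing_counts, base_reward=10.0):
    """Hypothetical rule: reward = base_reward / (1 + samples already in the cell).

    submissions: list of (contributor_id, cell_id) pairs, processed in order.
    existing_counts: samples already collected per cell before this batch.
    Returns total tokens earned per contributor.
    """
    counts = Counter(existing_counts)  # copy, so the caller's dict is not mutated
    rewards = Counter()
    for contributor, cell in submissions:
        rewards[contributor] += base_reward / (1 + counts[cell])
        counts[cell] += 1  # each new sample makes the cell slightly less scarce
    return dict(rewards)

# A well-mapped main street versus an unmapped basement (cell names are made up).
existing = {"main_street": 99, "basement_b2": 0}
batch = [("alice", "main_street"), ("bob", "basement_b2")]
print(coverage_weighted_rewards(batch, existing))
```

Here the basement contribution earns 100 times more than the main-street one, which is the kind of gradient that would steer devices toward the blind spots the text describes.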

As mentioned earlier, robots cannot rely on cloud computing power; in the short term they need edge computing and distributed rendering at large scale, especially to generate large volumes of Sim-to-Real data. A distributed computing network that aggregates and schedules idle consumer-grade hardware can put that spare capacity to good use.
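The scheduling half of that idea can be illustrated with a classic greedy heuristic: sort simulation jobs from largest to smallest and assign each to the idle node that would finish it earliest. The node names, throughputs, and job sizes below are hypothetical, and a real network would also have to handle result verification, node dropouts, and data transfer, all of which this sketch ignores.

```python
def schedule_jobs(jobs, nodes):
    """Greedy earliest-finish-time assignment of jobs to heterogeneous nodes (LPT order).

    jobs: dict job_id -> work units (hypothetical unit, e.g. simulated robot-hours).
    nodes: dict node_id -> throughput in work units per hour.
    Returns (assignment: job_id -> node_id, busy_until: node_id -> hours).
    """
    busy_until = {node: 0.0 for node in nodes}
    assignment = {}
    # Placing the largest jobs first usually balances load better (LPT heuristic).
    for job, work in sorted(jobs.items(), key=lambda kv: kv[1], reverse=True):
        # Pick the node whose queue would finish this job soonest.
        best = min(nodes, key=lambda n: busy_until[n] + work / nodes[n])
        busy_until[best] += work / nodes[best]
        assignment[job] = best
    return assignment, busy_until

idle_nodes = {"gaming_pc": 2.0, "laptop": 1.0}  # hypothetical idle consumer hardware
sim_jobs = {"stairs": 4.0, "door": 2.0, "cup": 2.0}  # hypothetical simulation tasks
assignment, finish = schedule_jobs(sim_jobs, idle_nodes)
print(assignment, finish)
```

Greedy assignment is not optimal in general, but it is simple, fast, and a common baseline for spreading work across machines of uneven speed.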

Beyond requiring large-scale sensor deployment, "tactile data" is inherently private. How can the public be encouraged to share such privacy-sensitive data with AI giants? One feasible path is to give data contributors data rights and a share of the dividends.
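The "data rights and dividends" idea can be made concrete with a simple pro-rata split: each contributor receives a share of the revenue proportional to how much of their data was used. The revenue figure, the usage measure, and the proportional rule are assumptions for illustration; a real scheme might weight data by quality or rarity instead. Amounts are kept in integer cents, with leftover cents assigned by the largest-remainder method so nothing is lost to rounding.

```python
def pro_rata_dividends(revenue_cents, usage):
    """Split revenue_cents among contributors in proportion to usage.

    usage: dict contributor -> units of data consumed (hypothetical measure).
    Returns dict contributor -> payout in cents. When total usage is positive,
    payouts sum exactly to revenue_cents (largest-remainder rounding).
    """
    total = sum(usage.values())
    if total == 0:
        return {c: 0 for c in usage}
    # Integer floor of each exact share, plus the remainder left by flooring.
    floors = {c: revenue_cents * u // total for c, u in usage.items()}
    remainders = {c: revenue_cents * u % total for c, u in usage.items()}
    leftover = revenue_cents - sum(floors.values())
    # Hand out leftover cents, one each, to the largest remainders (ties keep input order).
    for c in sorted(usage, key=lambda name: remainders[name], reverse=True)[:leftover]:
        floors[c] += 1
    return floors

usage = {"alice": 500, "bob": 300, "carol": 200}  # e.g. minutes of tactile recordings
print(pro_rata_dividends(10_000, usage))  # $100.00 of revenue expressed in cents
```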

In summary:

Physical AI is the "second half" of the web2 AI track that Huang is heralding. Isn't the same true for the web3 AI + Crypto track, including DePIN, DeAI, DeData, and the like? What do you think?

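Disclaimer: This article represents only the author's personal views and does not represent the position or views of this platform. It is provided for information sharing only and does not constitute investment advice to anyone. Any dispute between users and the author is unrelated to this platform. If any article or image published on this page involves infringement, please email proof of the relevant rights and proof of identity to support@aicoin.com, and the platform's staff will review it.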