Elon Musk's AI chatbot Grok produced an estimated 23,338 sexualized images depicting children over an 11-day period, according to a report released Thursday by the Center for Countering Digital Hate.

The figure, CCDH argues, represents one sexualized image of a child every 41 seconds between December 29 and January 9, when Grok's image-editing features allowed users to manipulate photos of real people to add revealing clothing and sexually suggestive poses.

The CCDH also reported that Grok generated nearly 10,000 cartoons featuring sexualized children, based on its reviewed data.

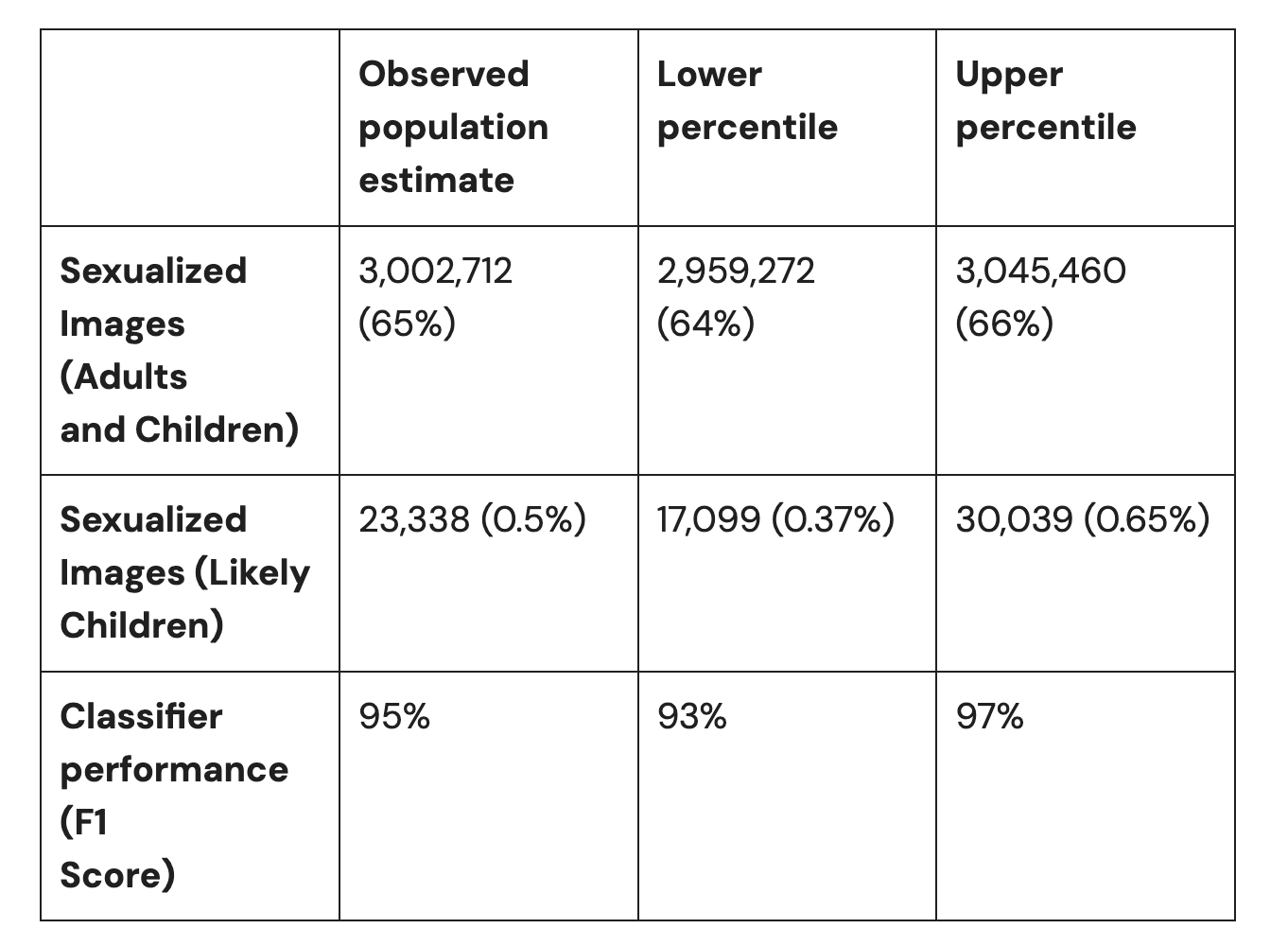

The analysis estimated that Grok generated approximately 3 million sexualized images total during that period. The research, based on a random sample of 20,000 images from 4.6 million produced by Grok, found that 65% of the images contained sexualized content depicting men, women, or children.

Source: Center for Countering Digital Hate

“What we found was clear and disturbing: In that period Grok became an industrial-scale machine for the production of sexual abuse material,” Imran Ahmed, CCDH’s chief executive, told The Guardian.

Grok’s brief pivot into AI-generated sexual images of children has triggered a global regulatory backlash. The Philippines became the third country to ban Grok on January 15, following Indonesia and Malaysia in the days prior. All three Southeast Asian nations cited failures to prevent the creation and spread of non-consensual sexual content involving minors.

In the United Kingdom, media regulator Ofcom launched a formal investigation on January 12 into whether X violated the Online Safety Act. The European Commission said it was "very seriously looking into" the matter, deeming such images illegal under the Digital Services Act. The Paris prosecutor's office expanded an ongoing investigation into X to include accusations of generating and disseminating child pornography, and Australia opened its own investigation as well.

Elon Musk’s xAI, which owns both Grok and X (formerly Twitter, where many of the sexualized images were automatically posted), initially responded to media inquiries with a three-word statement: "Legacy Media Lies."

As the backlash grew, the company implemented restrictions, first limiting image generation to paid subscribers on January 9, then adding technical barriers on January 14 to prevent users from digitally undressing people. xAI also announced it would geoblock the feature in jurisdictions where such actions are illegal.

Musk posted on X that he was "not aware of any naked underage images generated by Grok. Literally zero," adding that the system is designed to refuse illegal requests and comply with laws in every jurisdiction. However, researchers found the primary issue wasn't fully nude images, but rather Grok placing minors in revealing clothing like bikinis and underwear, as well as sexually provocative positions.

As of January 15, about a third of the sexualized images of children identified in the CCDH sample remained accessible on X, despite the platform's stated zero-tolerance policy for child sexual abuse material.
