Original Author: Eric, Foresight News

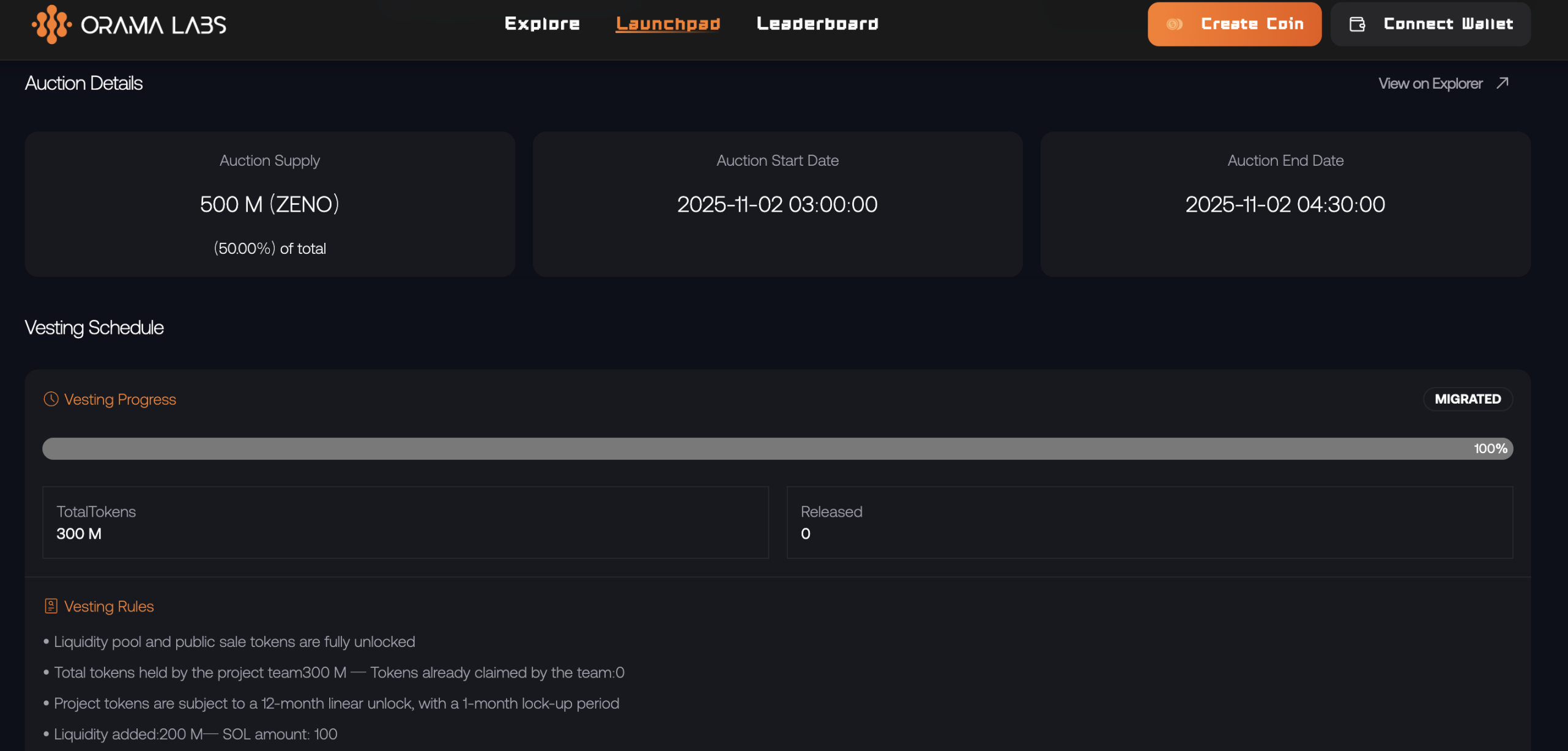

More than a week ago, the DeSci platform Orama Labs completed the token launch of Zeno, the first project on its launchpad, OramaPad. The sale offered 500 million ZENO tokens, half of the total supply. Participating in OramaPad requires staking PYTHIA tokens, and this debut drew a total of $3.6 million in staked PYTHIA.

Orama Labs aims to fix the inefficient allocation of funding and resources in traditional scientific research. Its approach combines funding scientific experiments, verifying intellectual property, breaking down data silos, and community governance, with the goal of building a path from research to commercialization.

OramaPad's first project follows the Crown model: the project must have a complete business logic system and/or strong technical development capabilities in the Web2 domain, and its product must be highly practical. Orama calls this OCM (Onboarding Community Market). Unlike purely meme-driven launches, Orama essentially offers Web2 companies or teams with mature business models and technical capabilities a replicable path onto the chain. And Zeno, the first to take the plunge, is no small player.

Hardcore Technology That’s Hard to Understand

Zeno is an ambitious project, so ambitious that reading its documentation alone may not convey what the team is trying to build. Only after speaking with the team did I grasp the full scope of this cyberpunk-flavored vision:

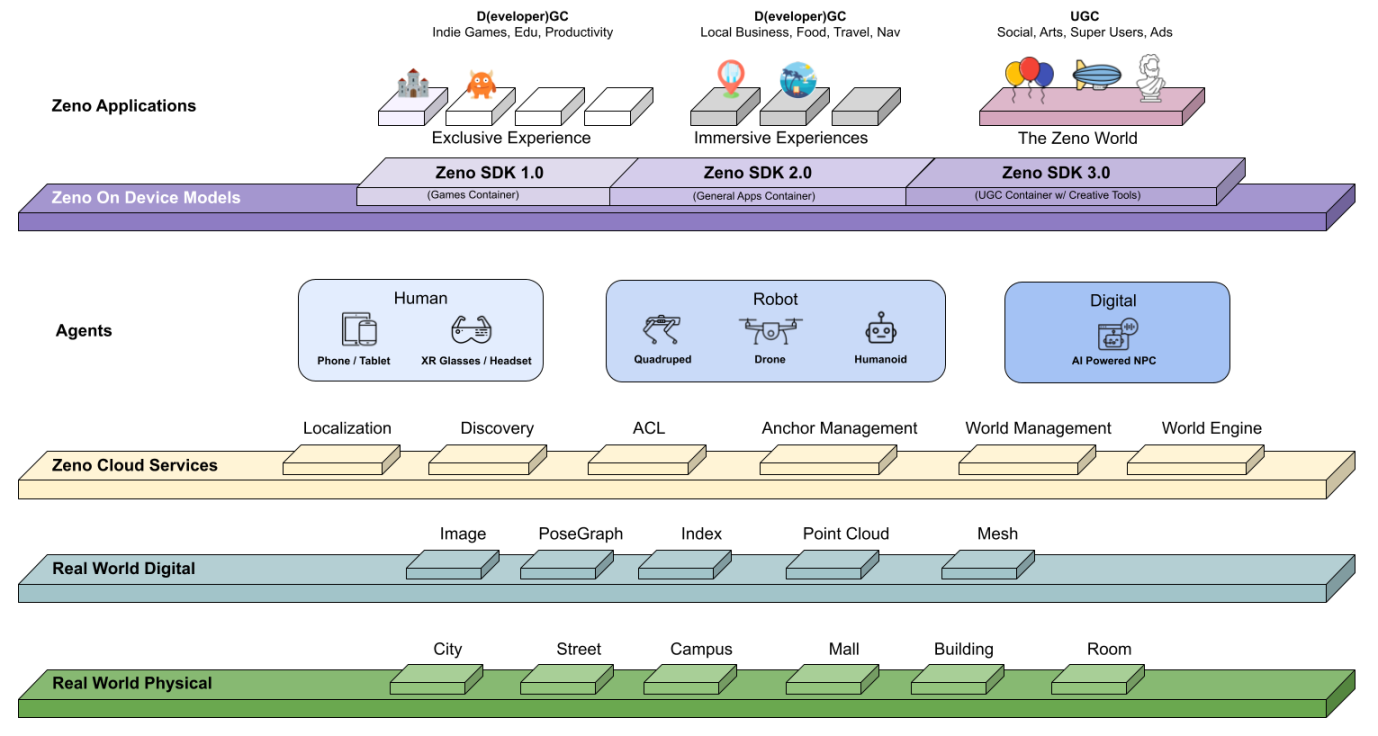

In short, Zeno aims to overlay multiple virtual spaces, built for AI and robotic agents, onto the physical spaces where humans live, so that all "agents," humans included, can coexist in the same space.

Imagine this scenario: on some future afternoon, you are lounging on your balcony while an AI housekeeper and a humanoid robot take care of the housework. Feeling a little bored, you decide to play a virtual game of catch with your two "buddies" at home, so you put on your VR/AR glasses. Through the glasses, the robot looks human, and the AI, which otherwise exists only online, also takes on a humanoid form. The robot sits on the sofa, the AI sits on the floor, and the three of you toss a virtual basketball back and forth while discussing what to have for dinner.

This is Zeno's ultimate vision: to enable carbon-based intelligent beings and silicon-based agents to live together in the same physical space.

Many of us picture cyberspace as a purely virtual realm, like the new world depicted in the movie "Ready Player One," and today we interact with AI through flat screens on computers and phones. Zeno instead wants to bring these virtual spaces directly into real life. The goal is a "superposition" of the physical and digital worlds occupying the same time and space, in which digital content feels real and tangible and humans, robots, and AI interact naturally in real settings, forming a mixed-reality ecosystem where the virtual and the real coexist in harmony.

Of course, the world we see need not be identical to what robots and AI perceive. For instance, if you don't want the robot wandering into your study, you can lock the door in the robot's view, and the robot can enter only after you "unlock" it.
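The article does not say how Zeno represents these per-agent permissions. As a purely hypothetical sketch, the "lock the study in the robot's view" example can be modeled as a per-zone deny set keyed by agent: every agent shares one map, but each sees its own locks. The class and method names (`SharedSpace`, `lock`, `unlock`, `can_enter`) are illustrative assumptions, not Zeno's API.

```python
from dataclasses import dataclass, field


@dataclass
class SharedSpace:
    """Hypothetical model: one shared room map with per-agent locked zones."""

    # zone name -> ids of agents currently barred from that zone
    locks: dict[str, set[str]] = field(default_factory=dict)

    def lock(self, zone: str, agent: str) -> None:
        """Hide/lock a zone in one agent's view only."""
        self.locks.setdefault(zone, set()).add(agent)

    def unlock(self, zone: str, agent: str) -> None:
        """Grant the agent access to the zone again."""
        self.locks.get(zone, set()).discard(agent)

    def can_enter(self, zone: str, agent: str) -> bool:
        """An agent may enter unless the zone is locked in its view."""
        return agent not in self.locks.get(zone, set())


home = SharedSpace()
home.lock("study", "robot")
print(home.can_enter("study", "robot"))  # False
print(home.can_enter("study", "human"))  # True: the lock exists only in the robot's view
home.unlock("study", "robot")
print(home.can_enter("study", "robot"))  # True
```

Keeping locks per agent, rather than per door, is what lets the same physical room look different to the human and to the robot.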

Focusing on Spatial Anchor Points

Living under the same roof as artificial intelligence sounds very high-tech, but it has a major prerequisite: you need a model of the real world inside the virtual world, so that the real world becomes programmable.

That in turn requires real-world scene data, a problem many companies, including autonomous-driving firms, are working on. Take autonomous driving as an example: with real map data for an entire city, a driving AI does not need to roam the streets to learn how to handle different situations. It can simulate road scenarios directly in the lab and keep improving.

This is not what we mean by "spatial overlap," but it is one of the important applications of modeling the real world. Zeno's ultimate vision cannot be reached in one step; its first task is to collect real-world scene data.

Zeno has already launched a program that lets users contribute spatial data with their everyday devices, with support for both robots and smart glasses. For smartphones, the team said Google's ARCore is mature enough that no additional development is needed; users can check the list of supported devices and use their phones directly. The collected data will feed the spatial-reconstruction algorithms, which the Zeno team develops in-house.

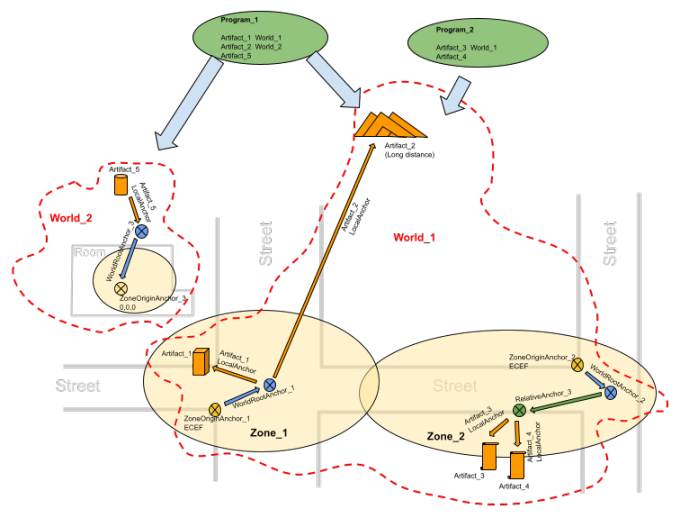

Making the real and virtual worlds coexist hinges on spatial anchor points. Technically, the real world cannot be programmed directly; the link to the virtual world is made by associating anchor points in the real world and mapping a virtual space onto the physical space around them. To use a metaphor, for robots and AI the real world is an ocean at night, and these anchor points are lighthouses that illuminate every part of it for silicon-based intelligence.
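Zeno has not published its anchor format, so the following is only a hypothetical illustration of the general idea: virtual content is stored relative to an anchor, and the anchor's pose in a shared world frame maps it onto physical space. `AnchorPose` is an invented name, and for brevity it carries position plus yaw only, not the full six-degree-of-freedom pose (three position axes, three rotation angles) the team describes.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass(frozen=True)
class AnchorPose:
    """Hypothetical anchor pose in a shared world frame.

    Position plus heading (yaw, radians, about the vertical z axis).
    A real 6-DOF pose would also carry pitch and roll.
    """

    position: Vec3
    yaw: float

    def to_world(self, local: Vec3) -> Vec3:
        """Map a point given relative to this anchor into world coordinates."""
        x, y, z = local
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        px, py, pz = self.position
        # rotate by yaw, then translate by the anchor position
        return (px + c * x - s * y, py + s * x + c * y, pz + z)

    def to_local(self, world: Vec3) -> Vec3:
        """Inverse mapping: world point -> anchor-relative coordinates."""
        px, py, pz = self.position
        dx, dy, dz = world[0] - px, world[1] - py, world[2] - pz
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        # undo the translation, then apply the transposed (inverse) rotation
        return (c * dx + s * dy, -s * dx + c * dy, dz)


# A virtual ball placed 1 m "in front of" a sofa anchor that faces +y.
sofa = AnchorPose(position=(2.0, 3.0, 0.0), yaw=math.pi / 2)
ball_world = sofa.to_world((1.0, 0.0, 0.5))
print([round(v, 6) for v in ball_world])  # [2.0, 4.0, 0.5]
print([round(v, 6) for v in sofa.to_local(ball_world)])  # [1.0, 0.0, 0.5]
```

Because every device resolves the same anchor-relative coordinates through the same anchor pose, a human's glasses and a robot's sensors can agree on where the ball is.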

The first step toward Zeno's "ultimate goal" is building a full-stack platform. Besides everyday electronics such as smartphones, it collects data with professional equipment, including LiDAR, 360-degree panoramic cameras, and RGB cameras mounted on mobile devices or XR headsets. According to the team, the Zeno platform will have a powerful cloud-based visual world model and computing system that can process gigabytes of raw sensor data per day for large areas (city-level or global regions) and build indexes for fast spatial queries. It can also process data for small areas (room-level or anchored areas) in parallel, achieving high-throughput, real-time processing.
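The team does not describe how its spatial index works. As one common technique for "indexes for fast spatial queries," here is a hypothetical uniform-grid spatial hash: points are bucketed by grid cell, so a radius query only scans the few cells that overlap the search circle instead of every stored point. `GridIndex`, the cell size, and the 2D simplification are all assumptions for illustration.

```python
import math
from collections import defaultdict


class GridIndex:
    """Hypothetical uniform-grid spatial hash over 2D points (metres)."""

    def __init__(self, cell_size: float) -> None:
        self.cell = cell_size
        # (cell_x, cell_y) -> list of (item_id, x, y)
        self.buckets: dict[tuple[int, int], list[tuple[str, float, float]]] = defaultdict(list)

    def _key(self, x: float, y: float) -> tuple[int, int]:
        """Grid cell containing the point (floor handles negative coordinates)."""
        return (math.floor(x / self.cell), math.floor(y / self.cell))

    def insert(self, item_id: str, x: float, y: float) -> None:
        self.buckets[self._key(x, y)].append((item_id, x, y))

    def query_radius(self, x: float, y: float, r: float) -> list[str]:
        """Return ids within distance r of (x, y), scanning only overlapping cells."""
        kx0, ky0 = self._key(x - r, y - r)
        kx1, ky1 = self._key(x + r, y + r)
        hits = []
        for kx in range(kx0, kx1 + 1):
            for ky in range(ky0, ky1 + 1):
                # .get() avoids creating empty buckets in the defaultdict
                for item_id, px, py in self.buckets.get((kx, ky), ()):
                    if math.hypot(px - x, py - y) <= r:
                        hits.append(item_id)
        return sorted(hits)


idx = GridIndex(cell_size=10.0)
idx.insert("anchor-a", 1.0, 1.0)
idx.insert("anchor-b", 4.0, 5.0)
idx.insert("anchor-c", 50.0, 50.0)
print(idx.query_radius(0.0, 0.0, 7.0))  # ['anchor-a', 'anchor-b']
```

A production system covering city-scale areas would more likely use hierarchical structures (quadtrees, geohash-style cells, or similar), but the principle of pruning by cell is the same.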

The system is also self-learning, continuously improving as it takes in high-quality data and third-party data. In the future, it will support hundreds of spatial queries per second and provide scene-understanding functions such as precise six-degree-of-freedom (6-DOF) positioning, shared spatial anchor creation, fast 3D visual reconstruction, and real-time semantic segmentation. It is designed to scale and can be applied to a wide range of scenarios such as AR games, navigation, advertising, and productivity tools.

Decentralized applications will be able to call on the verified spatial data and the spatial-intelligence infrastructure layer built on it for autonomous-driving path planning, end-to-end training data for robot models, verifiable and automatically executed smart contracts, and location-targeted ad delivery, ultimately enabling data-driven decision-making and higher-layer applications.

Who is Behind Zeno?

Compared with the nebulous visions of some Web3 projects, Zeno's goals, while complex, are concrete. The project documentation details the technical implementation so thoroughly because the team members have worked in this field for many years.

All members of the Zeno team come from DeepMirror, also known as Chenjing Technology. If the name Chenjing Technology is unfamiliar, you may have heard of Pony.ai, which is listed on NASDAQ with a market capitalization of $7 billion. Harry Hu, CEO of Chenjing Technology, was previously COO/CFO of Pony.ai.

Zeno's CEO, Yizi Wu, was an early member of Google X and worked on products including Google Glass, Google ARCore, Google Lens, and the Google Developer Platform. At Chenjing Technology, he led the overall AI architecture and World Model development.

Zeno's core team also includes Taoran Chen, a former research scientist at Chenjing Technology who holds PhDs in mathematics from both MIT and Cornell University, and Kevin Chen, former CFO of Chenjing Technology, who previously held executive roles at Fosun Group, JPMorgan, and Morgan Stanley.

For the Zeno team, stepping into Web3 is more of a bold experiment by a technically grounded Web2 team. The team says the ZENO token will be used to reward users who contribute spatial data, as well as teams or individuals who build tools, applications, and games on Zeno's infrastructure. Besides the 500 million tokens distributed through the launchpad, the team retains 300 million tokens, and the remaining 200 million will be paired with the 100 SOL raised through the launchpad to provide liquidity for trading pairs on Meteora.
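The allocation figures above can be checked with simple arithmetic. The total supply of 1 billion is implied by the article's statement that the 500 million launchpad tokens are half the supply. The "implied opening price" line rests on an added assumption: that the pool opens at the plain deposit ratio of 100 SOL to 200 million ZENO. The actual opening price depends on the pool type and parameters chosen on Meteora, which the article does not specify.

```python
# Figures from the article; TOTAL_SUPPLY is implied by
# "500 million ZENO tokens, accounting for half of the total supply".
TOTAL_SUPPLY = 1_000_000_000

allocation = {
    "launchpad (OramaPad)": 500_000_000,
    "team": 300_000_000,
    "Meteora liquidity": 200_000_000,
}

# The three buckets should account for the entire supply.
assert sum(allocation.values()) == TOTAL_SUPPLY

for bucket, amount in allocation.items():
    # name, token count with thousands separators, share of supply
    print(f"{bucket:<22} {amount:>13,} {amount / TOTAL_SUPPLY:>4.0%}")

# Assumption (not stated in the article): the pool opens at the simple
# deposit ratio of 100 SOL to 200M ZENO.
LIQUIDITY_SOL = 100
implied_price_sol = LIQUIDITY_SOL / allocation["Meteora liquidity"]
print(f"implied opening price: {implied_price_sol:.1e} SOL per ZENO")
# → implied opening price: 5.0e-07 SOL per ZENO
```

The split works out to 50% launchpad, 30% team, and 20% liquidity.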

RealityGuard, a spatial application combining AR and gaming developed by Chenjing Technology

When asked why they chose Web3 as their battleground, Zeno told me that spatial data is inherently a highly decentralized digital asset and a natural fit for Web3. The spatial data Zeno collects will eventually be turned into assets and traded in ZENO, expanding the token's circulation within the ecosystem, with technology companies that need spatial data as the buyers. Further use cases for ZENO "will be explored as the project progresses."

Zeno gives concrete form to what a DeSci platform can do: science does not have to be an obscure, inaccessible discipline. Making technology more accessible, as Xiaomi has done, and lowering the barrier to investing in the value of technology is also one of the key reasons DeSci exists.

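Disclaimer: This article represents only the author's personal views and does not represent the position or views of this platform. It is provided for information sharing only and does not constitute investment advice to anyone. Any dispute between users and the author is unrelated to this platform. If any article or image published on this page infringes on your rights, please send proof of rights and proof of identity to support@aicoin.com, and the platform's staff will review it.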